Documente Academic

Documente Profesional

Documente Cultură

Knapsack Problem

Încărcat de

Gurpreet Singh100%(6)100% au considerat acest document util (6 voturi)

6K vizualizări6 pagini0/1 knapsack and fractional knapsack problem

Drepturi de autor

© © All Rights Reserved

Formate disponibile

DOCX, PDF, TXT sau citiți online pe Scribd

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest document0/1 knapsack and fractional knapsack problem

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca DOCX, PDF, TXT sau citiți online pe Scribd

100%(6)100% au considerat acest document util (6 voturi)

6K vizualizări6 paginiKnapsack Problem

Încărcat de

Gurpreet Singh0/1 knapsack and fractional knapsack problem

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca DOCX, PDF, TXT sau citiți online pe Scribd

Sunteți pe pagina 1din 6

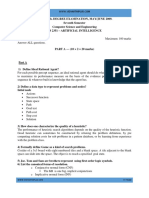

Fractional & 0/1 Knapsack Problem

Students: Daniel Intskirveli and Gurpreet Singh

CS220, The City College of New York

May 30th, 2014

Introduction

The problems studied in this paper belong to the family of knapsack problems: the fractional knapsack problem and the 0/1 knapsack problem. In the fractional knapsack problem we are given a knapsack with capacity M and a set N of n objects. Each object i has a given weight $w_i$ and a profit $p_i$. The goal is to pack these objects into the knapsack so that the total profit of the objects in the knapsack is maximized while their total weight does not exceed the capacity M. In the fractional knapsack problem you may choose a fraction $x_i$, $0 \le x_i \le 1$, of object i and place it into the knapsack, earning a profit of $p_i x_i$. If we restrict each $x_i$ to be either 0 or 1 (take it or leave it), the result is the 0/1 knapsack problem.
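Stated as an optimization problem, the two variants share the same objective and constraint. They differ only in the values $x_i$ may take. The block below is a standard restatement of the definitions above, using the same symbols:

```latex
\text{maximize } \sum_{i=1}^{n} p_i x_i
\quad \text{subject to} \quad \sum_{i=1}^{n} w_i x_i \le M,
\qquad
\begin{cases}
0 \le x_i \le 1 & \text{(fractional knapsack)} \\
x_i \in \{0, 1\} & \text{(0/1 knapsack)}
\end{cases}
```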

Fractional Knapsack: Greedy Method

The fractional knapsack problem can be solved optimally with the greedy method. A greedy algorithm works in stages and considers one input at a time. At each stage, it decides whether that input should be included in the solution being built. If including the input would make the solution infeasible, the input is discarded. Otherwise, it is added to the partial solution.

We first compute the ratio $r_i = p_i / w_i$ of profit to weight for each object. We then sort the objects so that $p_i / w_i \ge p_{i+1} / w_{i+1}$ for $1 \le i \le n - 1$. Next, we greedily take objects in this order and add each one to the knapsack as long as doing so does not exceed the capacity. When the weight of the next object exceeds the remaining capacity, we take the fraction of that object that exactly fills the knapsack.
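The greedy procedure above can be sketched in Python as follows. This is a minimal illustration, not the authors' code. The function name `fractional_knapsack` is hypothetical, and objects are indexed from 0. The sketch uses `fractions.Fraction` so that the fraction of the last object comes out exactly. The usage lines run it on the n = 7, M = 15 instance worked through below.

```python
from fractions import Fraction


def fractional_knapsack(profits, weights, capacity):
    """Greedy fractional knapsack: returns (total_profit, fractions).

    fractions[i] is the fraction x_i of object i (0-based) placed in the
    knapsack. Fraction keeps results exact (2/3 rather than 0.666...).
    Assumes all weights are positive.
    """
    n = len(profits)
    # Object indices sorted by profit/weight ratio, non-increasing.
    order = sorted(range(n), key=lambda i: Fraction(profits[i], weights[i]), reverse=True)
    fractions = [Fraction(0)] * n
    remaining = capacity
    total = Fraction(0)
    for i in order:
        if remaining == 0:
            break  # knapsack is full
        if weights[i] <= remaining:
            # The whole object fits: take all of it.
            fractions[i] = Fraction(1)
            remaining -= weights[i]
        else:
            # Only part of it fits: take the fraction that fills the knapsack.
            fractions[i] = Fraction(remaining, weights[i])
            remaining = 0
        total += fractions[i] * profits[i]
    return total, fractions


profits = [10, 5, 15, 7, 6, 18, 3]
weights = [2, 3, 5, 7, 1, 4, 1]
total, x = fractional_knapsack(profits, weights, 15)
print(total, round(float(total), 2))  # 166/3 55.33
```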

The following example applies this greedy algorithm:

Find an optimal solution to the knapsack instance $n = 7$, $M = 15$, $(p_1, p_2, \ldots, p_7) = (10, 5, 15, 7, 6, 18, 3)$ and $(w_1, w_2, \ldots, w_7) = (2, 3, 5, 7, 1, 4, 1)$.

Step 1. Compute ri = (pi/wi)

Object 1 2 3 4 5 6 7

(pi/wi) 5.0 1.67 3.0 1.0 6.0 4.5 3.0

Step 2. Sort the ratios in non-increasing order

Object 5 1 6 3 7 2 4

pi 6 10 18 15 3 5 7

wi 1 2 4 5 1 3 7

(pi/wi) 6.0 5.0 4.5 3.0 3.0 1.67 1.0

Step 3. Greedily add objects to the knapsack while the total weight does not exceed the capacity.

Objects 5, 1, 6, 3 and 7 are included in the knapsack, for a total weight of 13. The next object we consider is object 2, which has a weight of 3. If we included object 2 entirely, the total weight would be 1 + 2 + 4 + 5 + 1 + 3 = 16. That exceeds the knapsack's capacity of 15. Only 2 units of capacity remain, so the fraction 2/3 of object 2 is included to fill the knapsack, and object 4 is left out. The profit obtained is 6 + 10 + 18 + 15 + 3 + 5(2/3) = 166/3 ≈ 55.33.

0/1 Knapsack

The 0/1 knapsack problem is NP-hard, and its decision version is NP-complete. This means there is no known algorithm that solves it in time polynomial in the input size, and it is widely believed (but not proven) that none can exist. We are therefore left with algorithms that are not polynomial in the input size. The naïve solution to this problem is a brute-force approach that tests every combination of objects chosen or not chosen. It selects the combination with the maximum total profit whose total weight does not exceed the capacity of the knapsack. The problem with this algorithm, of course, is that it runs in exponential time: for n objects there are $2^n$ ways to choose them, which quickly becomes impractical as n grows. We studied and implemented two algorithms that improve on the naïve approach using two well-known problem-solving patterns: dynamic programming and backtracking.
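For comparison with the improved algorithms, the naïve approach can be sketched as follows. It is a minimal illustration that assumes integer weights and profits. `knapsack_brute_force` is a hypothetical name. The call `itertools.product((0, 1), repeat=n)` enumerates all $2^n$ take/leave vectors, and each vector is checked against the capacity, so the loop body runs $2^n$ times.

```python
from itertools import product


def knapsack_brute_force(profits, weights, capacity):
    """Naive 0/1 knapsack: try all 2^n take/leave choices.

    Returns (best_profit, best_choice), where best_choice[i] is 1 if
    object i (0-based) is taken. Exponential in n; only for small inputs.
    """
    n = len(profits)
    best_profit, best_choice = 0, (0,) * n
    # product((0, 1), repeat=n) yields every 0/1 vector of length n.
    for choice in product((0, 1), repeat=n):
        weight = sum(w for w, c in zip(weights, choice) if c)
        if weight > capacity:
            continue  # infeasible: exceeds the knapsack capacity
        profit = sum(p for p, c in zip(profits, choice) if c)
        if profit > best_profit:
            best_profit, best_choice = profit, choice
    return best_profit, best_choice


profits = [10, 5, 15, 7, 6, 18, 3]
weights = [2, 3, 5, 7, 1, 4, 1]
print(knapsack_brute_force(profits, weights, 15))  # (54, (1, 1, 1, 0, 1, 1, 0))
```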

Dynamic Programming

To solve this problem we start with a two-dimensional array X(i, w). The row index i represents the number of objects considered (objects 1 through i), and the column index w represents the capacity of the knapsack. i ranges from 0 to n and w ranges from 0 to M. Each entry X(i, w) holds the maximum profit that can be obtained using only the first i objects with a knapsack of capacity w. If we have no objects to choose from, then X(0, w) = 0. If the capacity of the knapsack is 0, then X(i, 0) = 0.

To populate the 2D array, we use the following recurrence relation. If the capacity w is less than the weight $w_i$ of the i-th object, that object cannot be included in the knapsack. In that case $X(i, w) = X(i-1, w)$, the best profit achievable without it. If the capacity w is at least the weight of the i-th object, then X(i, w) is the maximum of two quantities:

1. If the i-th object does not help increase the total profit, we reject it and keep the best profit obtainable from the other objects: $X(i-1, w)$.

2. If the i-th object does increase the total profit, we add its profit to the best profit obtainable from the other objects with the remaining capacity: $p_i + X(i-1, w - w_i)$.
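Written out in symbols, together with the base cases from the previous paragraph, the rule above is the standard 0/1 knapsack recurrence. The two arguments of the max correspond to items 1 and 2 of the list:

```latex
\begin{aligned}
X(0, w) &= 0, \qquad X(i, 0) = 0, \\
X(i, w) &=
\begin{cases}
X(i-1, w) & \text{if } w_i > w, \\
\max\bigl(X(i-1, w),\; p_i + X(i-1, w - w_i)\bigr) & \text{if } w_i \le w,
\end{cases}
\qquad 1 \le i \le n,\ 1 \le w \le M.
\end{aligned}
```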

The following example illustrates the dynamic programming approach on the same instance:

Find an optimal solution to the knapsack instance $n = 7$, $M = 15$, $(p_1, p_2, \ldots, p_7) = (10, 5, 15, 7, 6, 18, 3)$ and $(w_1, w_2, \ldots, w_7) = (2, 3, 5, 7, 1, 4, 1)$.

The 2D array is shown below. When there are no objects to put in the knapsack, or the capacity of the knapsack is zero, X(0, w) and X(i, 0) are 0.

Objects i \ capacity w: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

1 0 0 10 10 10 10 10 10 10 10 10 10 10 10 10 10

2 0 0 10 10 10 15 15 15 15 15 15 15 15 15 15 15

3 0 0 10 10 10 15 15 25 25 25 30 30 30 30 30 30

4 0 0 10 10 10 15 15 25 25 25 30 30 30 30 32 32

5 0 6 10 16 16 16 21 25 31 31 31 36 36 36 36 38

6 0 6 10 16 18 24 28 34 34 34 39 43 49 49 49 54

7 0 6 10 16 19 24 28 34 37 37 39 43 49 52 52 54

X(1, 1): Wi = 2 > w = 1, so X(1, 1) = X(0, 1) = 0

X(1, 2): Wi = 2 <= w = 2, so X(1, 2) = Max( X(0, 2), Pi + X(0, 0) ) = Max(0, 10) = 10

X(1, 3): Wi = 2 <= w = 3, so X(1, 3) = Max( X(0, 3), Pi + X(0, 1) ) = Max(0, 10) = 10

(same for the entire row).

X(2, 1): Wi = 3 > w = 1, so X(2, 1) = X(1, 1) = 0

X(2, 2): Wi = 3 > w = 2, so X(2, 2) = X(1, 2) = 10

X(2, 3): Wi = 3 <= w = 3, so X(2, 3) = Max( X(1, 3), Pi + X(1, 0) ) = Max(10, 5) = 10

X(2, 4): Wi = 3 <= w = 4, so X(2, 4) = Max( X(1, 4), Pi + X(1, 1) ) = Max(10, 5) = 10

X(2, 5): Wi = 3 <= w = 5, so X(2, 5) = Max( X(1, 5), Pi + X(1, 2) ) = Max(10, 15) = 15

(same for the entire row).

This process continues for the rest of the table, which is tedious to do by hand. We used a Python program to generate the results and also verified them on paper. The final result is stored in the last row and last column: X(7, 15) = 54. To find out which objects are included in the optimal solution, we can keep a second 2D array of the same size, call it Y (the keep array), that holds binary values. Y(i, w) = 1 indicates that object i is included in the best solution for capacity w using the first i objects, and 0 indicates that it is not. For an object to be included, it must fit in the remaining capacity, and taking it must give a strictly higher profit than leaving it out, that is, $p_i + X(i-1, w - w_i) > X(i-1, w)$. The keep array for the previous example is shown below:

Objects i \ capacity w: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1

2 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1

3 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1

4 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1

5 0 1 0 1 1 1 1 0 1 1 1 1 1 1 1 1

6 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1

7 0 0 0 0 1 0 0 0 1 1 0 0 0 1 1 0

Once the keep array is generated, we trace back from its lower-right corner, Y(7, 15). If the entry at position (i, w) is 0, object i was not taken, so we move up to row i - 1 with the same w. If the entry is 1, object i was taken, so we move up to row i - 1 and reduce the remaining capacity from w to $w - w_i$. The objects included are: object 6 with profit 18, object 5 with profit 6, object 3 with profit 15, object 2 with profit 5, and object 1 with profit 10, for a total profit of 54.
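The table fill, keep array, and traceback described above can be sketched in Python. This is a minimal sketch, not the authors' program. `knapsack_dp` is a hypothetical name, and objects are numbered from 1 as in the text. An object is kept only when taking it gives a strictly higher profit. That tie-breaking rule reproduces the keep array shown above: for example, Y(3, 5) = 0 because taking object 3 there only ties the profit of 15.

```python
def knapsack_dp(profits, weights, capacity):
    """Bottom-up 0/1 knapsack with a keep array for reconstruction.

    X[i][w] = best profit using objects 1..i with capacity w.
    Y[i][w] = 1 if object i is taken in that best solution, else 0.
    Returns (best_profit, taken), where taken lists 1-based object numbers.
    """
    n = len(profits)
    X = [[0] * (capacity + 1) for _ in range(n + 1)]
    Y = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        p, wt = profits[i - 1], weights[i - 1]
        for w in range(capacity + 1):
            X[i][w] = X[i - 1][w]  # default: reject object i
            # Take object i only if it fits and strictly improves the profit.
            if wt <= w and p + X[i - 1][w - wt] > X[i][w]:
                X[i][w] = p + X[i - 1][w - wt]
                Y[i][w] = 1
    # Trace back from the lower-right corner Y[n][capacity].
    taken, w = [], capacity
    for i in range(n, 0, -1):
        if Y[i][w] == 1:
            taken.append(i)
            w -= weights[i - 1]
    return X[n][capacity], taken


profits = [10, 5, 15, 7, 6, 18, 3]
weights = [2, 3, 5, 7, 1, 4, 1]
print(knapsack_dp(profits, weights, 15))  # (54, [6, 5, 3, 2, 1])
```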

Dynamic programming proved to be a useful technique for this kind of problem. For the 0/1 knapsack problem, the dynamic programming algorithm runs in $O(nM)$ time, compared with $O(2^n)$ for the naïve algorithm. The improvement is from exponential in n to pseudo-polynomial: $O(nM)$ is polynomial in n and in the numeric value of M, but not in the number of bits needed to write M down.

Backtracking

Backtracking improves on the naïve approach, in which all combinations are tested, by skipping combinations that can be determined at some point to be infeasible. For example, suppose a programmer wants to solve the problem with a knapsack of capacity 10, and one of the candidate items has a weight of 15. That item clearly cannot appear in the solution, and neither can any combination of items that includes it. We can therefore ignore those combinations to improve the running time of the algorithm. Backtracking achieves this optimization by performing a depth-first search on an implicit state-space tree. As the algorithm searches, some logic decides whether the current node can lead to a feasible solution. If the node cannot (for example, if the total weight of the objects chosen exceeds the capacity), it is ignored, that is, pruned from the state-space tree. The leaves of the state-space tree represent candidate solutions, and the best solution is the feasible leaf with the largest value.

Two factors determine whether a node in the state-space tree is promising. The first is trivial: if the total weight of the objects chosen so far exceeds the capacity of the knapsack, the partial solution cannot be extended to a feasible one, and the tree is pruned at that node. The second is more abstract: we calculate an upper bound on the total profit achievable by the solution so far together with any completion in the subtree below the current node. This bound is the current total profit plus the potential profit of the subtree rooted at the current node. If the bound is less than the maximum profit observed so far, there is no point in continuing the search below that node, and the tree is pruned there. The main challenge, then, is how to calculate that upper bound efficiently. For the upper bound we used the maximum profit calculated by our greedy algorithm for the fractional knapsack problem on the remaining objects and capacity. This quickly yields a profit that no 0/1 solution in that subtree can exceed, because every 0/1 choice is also a valid fractional choice. It therefore serves as a good upper bound.
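A compact sketch of backtracking with this fractional upper bound follows. It is an illustration under stated assumptions, not the authors' implementation. `knapsack_backtracking` is a hypothetical name, and weights are assumed positive. The sketch prunes when the bound does not exceed the best profit found so far. That is a slightly stronger test than "less than", but it is still safe, because such a subtree cannot strictly improve on the best. The objects are sorted by ratio first because the greedy bound relies on that order.

```python
def knapsack_backtracking(profits, weights, capacity):
    """0/1 knapsack by depth-first backtracking with a fractional upper bound.

    Returns the maximum profit. Objects are sorted by profit/weight ratio
    first, because the greedy bound below assumes that order.
    """
    items = sorted(zip(profits, weights), key=lambda pw: pw[0] / pw[1], reverse=True)
    n = len(items)
    best = 0

    def bound(i, profit, weight):
        """Greedy fractional-knapsack profit reachable from this node."""
        remaining = capacity - weight
        total = profit
        for p, w in items[i:]:
            if w <= remaining:
                total += p
                remaining -= w
            else:
                total += p * remaining / w  # take a fraction of this object
                break
        return total

    def search(i, profit, weight):
        nonlocal best
        if weight > capacity:
            return  # infeasible: prune
        best = max(best, profit)  # every feasible node is a valid solution
        if i == n or bound(i, profit, weight) <= best:
            return  # leaf, or the subtree cannot beat the best so far: prune
        p, w = items[i]
        search(i + 1, profit + p, weight + w)  # include object i
        search(i + 1, profit, weight)          # exclude object i

    search(0, 0, 0)
    return best


profits = [10, 5, 15, 7, 6, 18, 3]
weights = [2, 3, 5, 7, 1, 4, 1]
print(knapsack_backtracking(profits, weights, 15))  # 54
```

The search recurses once per object along each path. For large n (the tests described later go up to 2000 objects), Python's default recursion limit, adjustable with `sys.setrecursionlimit`, may need to be raised, or the search rewritten with an explicit stack.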

Conclusion

The greedy algorithm that solves the fractional knapsack problem is relatively simple, and it is intuitive. If we are allowed to take fractions of objects, we can fill 100% of the knapsack as long as the objects' total weight is at least its capacity. So we fill it with the objects that have the highest profit-to-weight ratio until the next whole object no longer fits. At that point we put in the largest possible fraction of the highest-ratio object not yet used. Unfortunately, greedy approaches to the 0/1 problem are not guaranteed to produce an optimal solution. In an application where runtime is more important than accuracy, it may be acceptable to use the greedy approach as an approximation. We have also seen that the solution to the fractional knapsack problem can serve as an upper bound when testing whether a node is promising in the backtracking algorithm. The runtime of the greedy solution to the fractional knapsack problem is O(n log n), dominated by sorting the ratios; the greedy pass itself takes O(n).
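The sketch below shows why the greedy approach is only an approximation for the 0/1 problem. It takes whole objects in ratio order and skips any that do not fit. On the example instance it collects objects 5, 1, 6, 3 and 7 for a profit of 52, whereas the dynamic programming solution reaches 54. The function name `greedy_01` is hypothetical. Continuing past an object that does not fit, rather than stopping, is one common variant of this heuristic.

```python
def greedy_01(profits, weights, capacity):
    """Greedy heuristic for 0/1 knapsack: take whole objects in ratio order.

    Fast (O(n log n) for the sort) but not guaranteed to be optimal.
    """
    order = sorted(range(len(profits)), key=lambda i: profits[i] / weights[i], reverse=True)
    total, remaining = 0, capacity
    for i in order:
        if weights[i] <= remaining:  # take the object only if it fits whole
            total += profits[i]
            remaining -= weights[i]
    return total


profits = [10, 5, 15, 7, 6, 18, 3]
weights = [2, 3, 5, 7, 1, 4, 1]
print(greedy_01(profits, weights, 15))  # 52, while the optimum is 54
```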

The dynamic programming algorithm has a complexity of $O(nM)$, where M is the capacity of the knapsack. This is pseudo-polynomial time. Because a pseudo-polynomial time algorithm exists, the knapsack problem is considered weakly NP-complete. The dynamic programming algorithm is well suited to instances of the knapsack problem where the capacity is small. Its running time is exponential in the length of M, that is, in the number of bits needed to represent the capacity. The dynamic programming algorithm can be thought of as the naïve recursive top-down approach converted into an iterative bottom-up approach. In the bottom-up version, the result of each sub-problem is stored in a table instead of being recomputed.
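The relationship between the two approaches can be made concrete. The sketch below is the top-down counterpart: the same recurrence written recursively, with the standard-library decorator `functools.lru_cache` caching each (i, w) result (memoization). Without the cache, the recursion can branch into up to $2^n$ paths. With it, each of the at most (n + 1)(M + 1) sub-problems is evaluated once, matching the bottom-up table. `knapsack_top_down` is a hypothetical name, and Python's recursion limit bounds the n it can handle.

```python
from functools import lru_cache


def knapsack_top_down(profits, weights, capacity):
    """Top-down 0/1 knapsack: the naive recursion plus memoization."""

    @lru_cache(maxsize=None)
    def best(i, w):
        # Best profit using objects 1..i with remaining capacity w.
        if i == 0 or w == 0:
            return 0
        p, wt = profits[i - 1], weights[i - 1]
        skip = best(i - 1, w)
        if wt > w:
            return skip  # object i does not fit
        return max(skip, p + best(i - 1, w - wt))

    return best(len(profits), capacity)


print(knapsack_top_down([10, 5, 15, 7, 6, 18, 3], [2, 3, 5, 7, 1, 4, 1], 15))  # 54
```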

It is difficult to predict the runtime of the backtracking algorithm accurately, because it depends on the input: non-promising nodes are not explored. Assume the profits and weights are already sorted, since the greedy algorithm we use to determine whether a node is promising relies on sorted input. Under that assumption, the worst case is $O(2^n)$, because the entire state-space tree has $2^n$ leaves. However, because the algorithm prunes nodes that cannot lead to a better solution, the runtime is typically much lower in practice. To remove the requirement of sorted input, our algorithm sorts the input itself, which adds to the running time. We used Python's built-in sort, an implementation of Timsort that runs in O(n log n) time. This does not change the worst-case prediction, because $O(2^n)$ dominates O(n log n).

In this exercise we compared the running times of the dynamic programming and backtracking algorithms by measuring execution time with the system clock. This approach gives us a rough idea of how the algorithms perform relative to each other. Of course, it tells us nothing about why one algorithm performs better than another. Is one algorithm more efficient, or does one of them contain a bug that unreasonably inflates its run time? Assuming our code has no anomalies that make runtimes abnormally slow, this is an insightful comparison, because both algorithms have advantages and disadvantages. The dynamic programming algorithm requires no sorting and makes no recursive function calls. On the other hand, its running time depends heavily on the capacity of the knapsack. The backtracking algorithm requires sorting and makes recursive calls, but pruning of the state-space tree makes the worst case rare.

To make a fair comparison, we ran both algorithms on several problem sizes (10, 50, 100, 200, 500, 1000, 2000) and plotted the running times. One dimension of the table computed by the dynamic programming algorithm is bounded by the capacity of the knapsack, so that algorithm's running time depends on the capacity in each problem. For this reason, we also tested the algorithms with different capacities for each problem size (0.25n, 0.5n, 1n, 2n, 4n, where n is the problem size). The profits and weights for each problem were generated randomly: all profits are random numbers between 10 and 100, and all weights are random numbers between 2 and 10.
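A harness in the spirit of this experiment might look like the sketch below. Everything in it is an assumption for illustration. The names `random_instance`, `knapsack_dp_value` and `time_solver` are hypothetical. Profits and weights are taken to be integers, the capacity is rounded down with `int(...)`, and timing uses `time.perf_counter`. The solver is a space-saving one-row variant of the dynamic programming recurrence, swapped in to keep the example short. It iterates capacities downward so that each object is used at most once. The backtracking solver could be passed to `time_solver` the same way. The loop uses only the smaller problem sizes so that it finishes quickly.

```python
import random
import time


def random_instance(n, capacity_factor, seed=None):
    """Random instance: integer profits in [10, 100], weights in [2, 10].

    The capacity is int(capacity_factor * n); integer values are an assumption.
    """
    rng = random.Random(seed)
    profits = [rng.randint(10, 100) for _ in range(n)]
    weights = [rng.randint(2, 10) for _ in range(n)]
    return profits, weights, int(capacity_factor * n)


def knapsack_dp_value(profits, weights, capacity):
    """Compact bottom-up 0/1 knapsack (one row, iterating capacity downward)."""
    row = [0] * (capacity + 1)
    for p, wt in zip(profits, weights):
        for w in range(capacity, wt - 1, -1):
            row[w] = max(row[w], p + row[w - wt])
    return row[capacity]


def time_solver(solver, profits, weights, capacity):
    """Wall-clock seconds for one call, measured with time.perf_counter."""
    start = time.perf_counter()
    solver(profits, weights, capacity)
    return time.perf_counter() - start


for n in (10, 50, 100, 200):
    for factor in (0.25, 0.5, 1, 2, 4):
        instance = random_instance(n, factor, seed=n)
        elapsed = time_solver(knapsack_dp_value, *instance)
        print(f"n={n:5d} capacity={instance[2]:5d} dp={elapsed:.6f}s")
```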

At first glance, our tests show that the backtracking algorithm performs better (the generated plot is attached on the last page of this report), and in most cases it does. However, in theory dynamic programming should be faster for knapsacks with small capacities. This is difficult to see on our plot. Still, skimming the program output shows several cases where the dynamic programming algorithm completes faster when the capacity is small. In the future, it would be worth tweaking the test sizes and capacities to obtain a more definitive picture of how the two algorithms perform relative to each other.
