
JOURNAL OF COMPUTER SCIENCE AND ENGINEERING, VOLUME 14, ISSUE 1, JULY 2012

A New Decision Tree Learning Approach for Novel Class Detection in Concept Drifting Data Stream Classification

Amit Biswas, Dewan Md. Farid and Chowdhury Mofizur Rahman

Abstract: Novel class detection in concept drifting data stream classification deals with learning problems in which the data distribution changes over time, as in weather conditions, economic changes, astronomy, and intrusion detection. In a concept-drifting data stream, a novel class arrives when new data introduce new concept classes or remove old ones. Existing data mining classifiers cannot detect and classify novel class instances until they have been trained with labeled instances of the novel class. In this paper, we propose a new approach for detecting novel classes in concept drifting data stream classification using a decision tree classifier that can determine whether a new data instance belongs to a novel class. The proposed approach builds a decision tree from the training data points and continuously updates it with recent data points, so that the tree represents the most recent concept in the data stream. Experimental analysis on benchmark datasets from the UCI machine learning repository shows that the proposed approach can detect novel classes in concept drifting data stream classification problems.

Index Terms: Concept Drifting, Data Stream Classification, Decision Tree, Novel Class.

1 INTRODUCTION

DATA stream classification is the process of extracting knowledge and information from continuous data instances. A data stream is an ordered sequence of data points, each consisting of attribute values and a class value. The goal of a data mining classifier is to predict the class value of a new or unseen instance whose attribute values are known but whose class value is unknown. Existing data mining classifiers (or classification models) are trained on datasets with a fixed set of class values, but in real-world data stream classification problems an instance with a new class value may appear, and the classification model then misclassifies it. Most existing classifiers cannot detect and classify novel class instances until they have been trained with labeled instances of the novel class. In real-life data stream mining problems, such as weather prediction, astronomy, and intrusion detection, the data distribution changes over time. This makes novel class detection difficult, because classification models become less accurate as time passes. Concept drift means that the statistical properties of the target class, which the classifier is trying to predict, change over time in unforeseen ways. Novel class detection in concept drifting data stream classification therefore deals with changes in the data stream when the underlying concept of the data changes over time.

Research on novel class detection in concept drifting data stream classification has recently received much attention from computational intelligence researchers [1], [2], [3]. A data stream classifier should be updated continuously so that it reflects the most recent concept in the data stream. Data stream classifiers are divided into two categories: single model and ensemble model. Single model approaches incrementally update a single classifier and respond effectively to concept drift [9], [13]. Ensemble model approaches, on the other hand, combine a series of classifiers with the aim of creating an improved composite model, and also handle concept drift efficiently [1], [5], [10], [12].

In this paper, we provide a solution to the novel class detection problem using a decision tree. Our approach builds a decision tree from the data stream and continuously updates it with new data points, so that the latest tree represents the most recent concept in the data stream. We calculate a threshold value for each leaf node of the tree from the ratio of the data points reaching that leaf to the data points in the training dataset, and we also cluster the training data points based on the similarity of their attribute values. If the number of data points classified by a leaf node of the tree rises above the previously calculated threshold value, a novel class may have arrived. We then compare the new data point with the existing data points based on the similarity of their attribute values. If the attribute values of the new data point differ from those of the existing data points and the new data point does not belong to any cluster, the arrival of a novel class is confirmed. We then add the new data point to the training dataset and rebuild the decision tree.

We organize this paper as follows. Section 2 discusses related work. Section 3 provides an overview of learning algorithms. Our approach is introduced in Section 4. Section 5 discusses the datasets and experimental analysis. Finally, conclusions and future work are drawn in Section 6.

Amit Biswas, Dewan Md. Farid, and Chowdhury Mofizur Rahman are with the Department of Computer Science and Engineering, United International University, Dhaka, Bangladesh.

2012 JCSE, www.Journalcse.co.uk

2 RELATED WORK

Novelty detection and data stream classification, where data distributions inherently change over time, have received much attention from computational intelligence researchers in many practical real-world applications, such as spam detection, climate change, and intrusion detection. In 2011, Masud et al. proposed a novelty detection and data stream classification technique that integrates a novel class detection mechanism into traditional classifiers, enabling automatic detection of novel classes before the true labels of the novel class instances arrive [1]. To determine whether an instance belongs to a novel class, their classification model sometimes needs to wait for more test instances in order to discover similarities among those instances. In the same year, R. Elwell and R. Polikar introduced an ensemble-of-classifiers approach named Learn++.NSE for incremental learning of concept drift in nonstationary environments [2]. Learn++.NSE trains one new classifier for each batch of data it receives and combines these classifiers using dynamically weighted majority voting. The novelty of the approach lies in determining the voting weights, which are based on each classifier's time-adjusted accuracy on current and past environments. In 2007, Kolter and Maloof proposed dynamic weighted majority (DWM), an ensemble approach for concept drifting data stream classification that dynamically creates and removes weighted experts in response to changes in performance [5]. It trains the online learners of the ensemble and adds or removes experts based on the global performance of the ensemble. In 2006, Gaber and Yu [8] proposed an approach termed STREAM-DETECT to identify changes in data streams, which detects changes by measuring the deviation of online clustering results over time. In 2005, Yang et al. [9] proposed an approach that combines proactive and reactive predictions.

In proactive mode, it anticipates what the new concept will be if a future concept change takes place and prepares prediction strategies in advance. If the anticipation turns out to be correct, a proper prediction model can be launched instantly upon the concept change. If not, it promptly resorts to reactive mode, adapting a prediction model to the new data. Widmer and Kubat presented a single classifier named FLORA, which uses a sliding window to choose a block of new instances on which to train a new classifier [14]. FLORA has a built-in forgetting mechanism, with the implicit assumption that instances falling outside the window are no longer relevant and the information they carry can be forgotten.

3 LEARNING ALGORITHMS

Data mining is the process of finding hidden information and patterns in a huge database. Data mining algorithms have two major functions: classification and clustering. Classification maps data into predefined groups or classes. It is often referred to as supervised learning because the classes are determined before the data are examined. Classification builds a function from training data. Clustering, on the other hand, is similar to classification except that the groups are not predefined but are defined by the data alone. It is therefore also referred to as unsupervised learning.

3.1 Decision Tree Learning

Decision tree (DT) learning is a very popular data mining tool for classification and prediction. It is easy to implement and requires little prior knowledge, and a DT can be built from a large dataset with many attributes. In a DT, the set of training instances is successively divided until every subset consists of instances of a single class. A DT has three main components: nodes, leaves, and edges. Each node is labeled with the attribute by which the data are partitioned, and it has a number of edges labeled with the possible values of that attribute. An edge connects either two nodes or a node and a leaf. Leaves are labeled with a decision value for categorizing the data. To make a decision with a DT, start at the root node and follow the branches down the tree until a leaf node representing the class is reached. Each DT represents a rule set that categorizes data according to the attributes of the dataset. The ID3 (Iterative Dichotomiser 3) technique builds a DT using information theory [16]. The basic strategy of ID3 is to choose, as the splitting attribute, the attribute with the highest information gain. The amount of information associated with an attribute value is related to its probability of occurrence. The concept used to quantify information is called entropy, which measures the amount of randomness in a dataset. When all data in a set belong to a single class, there is no uncertainty, and the entropy is zero. The objective of decision tree classification is to iteratively partition the given dataset into subsets in which all elements of each final subset belong to the same class. The entropy is calculated as shown in equation 1. Given probabilities $p_1, p_2, \ldots, p_s$, where $\sum_{i=1}^{s} p_i = 1$,

$$H(p_1, p_2, \ldots, p_s) = \sum_{i=1}^{s} p_i \log\left(\frac{1}{p_i}\right) \tag{1}$$

Given a dataset $D$, $H(D)$ measures the amount of disorder (uncertainty) in $D$. When $D$ is split into $s$ new subsets $S = \{D_1, D_2, \ldots, D_s\}$, we can again look at the entropy of those subsets. A subset of the dataset is completely ordered if all examples in it belong to the same class. ID3 chooses the splitting attribute with the highest gain, which it calculates using equation 2.

$$\mathrm{Gain}(D, S) = H(D) - \sum_{i=1}^{s} p(D_i)\, H(D_i) \tag{2}$$
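A minimal Python sketch of equations 1 and 2 and of ID3's splitting rule follows. It assumes base-2 logarithms (the text does not fix the base), reads $p(D_i)$ as $|D_i|/|D|$, and uses a hypothetical toy dataset; it is an illustration, not the Weka-based code used in the experiments.

```python
import math
from collections import Counter

def entropy(probs):
    """Equation 1: H(p_1, ..., p_s) = sum_i p_i * log(1 / p_i), in bits."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def class_entropy(labels):
    """H(D) for a list of class labels, using their relative frequencies."""
    n = len(labels)
    return entropy([c / n for c in Counter(labels).values()])

def information_gain(rows, labels, attr):
    """Equation 2: Gain(D, S) = H(D) - sum_i p(D_i) H(D_i), p(D_i) = |D_i| / |D|."""
    subsets = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[attr], []).append(label)  # D_i for each value of attr
    n = len(labels)
    remainder = sum(len(d) / n * class_entropy(d) for d in subsets.values())
    return class_entropy(labels) - remainder

def best_attribute(rows, labels, attrs):
    """ID3 splitting rule: the attribute with the highest information gain."""
    return max(attrs, key=lambda a: information_gain(rows, labels, a))

# Hypothetical nominal data: "outlook" separates the classes, "windy" does not.
rows = [{"outlook": "sunny", "windy": "no"},
        {"outlook": "sunny", "windy": "yes"},
        {"outlook": "rain", "windy": "no"},
        {"outlook": "rain", "windy": "yes"}]
labels = ["play", "play", "stay", "stay"]

print(entropy([1.0]), entropy([0.5, 0.5]))          # 0.0 1.0
print(information_gain(rows, labels, "outlook"))    # 1.0
print(information_gain(rows, labels, "windy"))      # 0.0
print(best_attribute(rows, labels, ["outlook", "windy"]))  # outlook
```

A pure subset has zero entropy, so an attribute that produces only pure subsets attains the maximal gain $H(D)$.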

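C4.5 [15] is a successor of ID3 that selects splits by GainRatio rather than by plain information gain. For splitting, C4.5 chooses the attribute with the largest GainRatio among the attributes whose information gain is at least average. GainRatio is defined in equation 3.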

$$\mathrm{GainRatio}(D, S) = \frac{\mathrm{Gain}(D, S)}{H\left(\frac{|D_1|}{|D|}, \ldots, \frac{|D_s|}{|D|}\right)} \tag{3}$$
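The sketch below computes equation 3 for a single nominal attribute under the same assumptions (base-2 logarithms, $p(D_i) = |D_i|/|D|$). The toy labels are hypothetical; they show how the denominator penalizes an attribute with many distinct values even when its information gain is maximal. C4.5's additional "at least average gain" filter is not included.

```python
import math
from collections import Counter

def entropy(probs):
    """Equation 1 in bits (the base-2 logarithm is an assumption)."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def gain_ratio(values, labels):
    """Equation 3 for one attribute.

    values[k] is the attribute value of instance k and labels[k] its class.
    """
    n = len(labels)
    subsets = {}
    for v, y in zip(values, labels):
        subsets.setdefault(v, []).append(y)  # D_i for each attribute value

    def class_entropy(ys):
        return entropy([c / len(ys) for c in Counter(ys).values()])

    gain = class_entropy(labels) - sum(len(d) / n * class_entropy(d) for d in subsets.values())
    split_info = entropy([len(d) / n for d in subsets.values()])  # H(|D_1|/|D|, ..., |D_s|/|D|)
    return gain / split_info if split_info > 0 else 0.0  # one-valued attribute: no split

labels = ["a", "a", "b", "b"]
print(gain_ratio(["x", "x", "y", "y"], labels))  # 1.0
print(gain_ratio(["1", "2", "3", "4"], labels))  # 0.5: same gain, larger split information
```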

The C5.0 algorithm improves the performance of tree building by using boosting, an approach that combines several classifiers. However, boosting does not always help when the training data contain a lot of noise. When C5.0 performs a classification, each classifier is assigned a vote, voting is performed, and the example is assigned to the class with the most votes. CART (Classification and Regression Trees) generates a binary tree for decision making [17]. CART handles missing data and contains a pruning strategy. The SPRINT (Scalable Parallelizable Induction of Decision Trees) algorithm uses an impurity function called the gini index to find the best split [18]. Equation 4 defines the gini index for a dataset $D$:

$$\mathrm{gini}(D) = 1 - \sum_{j} p_j^2 \tag{4}$$

where $p_j$ is the frequency of class $C_j$ in $D$. The goodness of a split of $D$ into subsets $D_1$ and $D_2$ is defined by

$$\mathrm{gini}_{split}(D) = \frac{n_1}{n}\,\mathrm{gini}(D_1) + \frac{n_2}{n}\,\mathrm{gini}(D_2) \tag{5}$$

where $n$, $n_1$, and $n_2$ are the numbers of data points in $D$, $D_1$, and $D_2$, respectively. The split with the best (lowest) gini value is chosen. A number of research projects on optimal feature selection and classification have adopted a hybrid strategy involving evolutionary algorithms and inductive decision tree learning [19], [20], [21], [22], [23].

3.2 Clustering

Clustering can be considered the most important unsupervised learning problem, and it has been used in many real-world application domains, including biology, medicine, anthropology, and marketing. It is the process of organizing objects into groups whose members are similar in some way: a data point within one cluster is more similar to the other data points in that cluster than to data points outside it. A cluster is therefore a collection of objects that are similar to one another and dissimilar to the objects belonging to other clusters, and the goal of clustering is to determine the intrinsic grouping in a set of unlabeled data. Given a dataset $D = \{t_1, t_2, \ldots, t_n\}$ of data points, a similarity measure $sim(t_i, t_l)$ defined between any two data points $t_i, t_l \in D$, and an integer value $k$, the clustering problem is to define a mapping $f: D \to \{1, \ldots, k\}$ where each $t_i$ is assigned to one cluster $K_j$, $1 \le j \le k$. Clustering algorithms can be categorized by their cluster model, for example k-means clustering, distribution-based clustering, and density-based clustering.

4 PROPOSED APPROACH

The data stream is a continuous sequence of data points $\{x_1, x_2, \ldots, x_{now}\}$, where $x_1$ is the very first data point in the stream and $x_{now}$ is the latest data point, which has just arrived in the data stream. Each data point $x_i$ is an n-dimensional feature vector over the attributes $A = \{A_1, A_2, \ldots, A_n\}$ with a class label from $C = \{C_1, C_2, \ldots, C_m\}$. Each attribute $A_i$ takes values from $\{A_{i1}, A_{i2}, \ldots, A_{ip}\}$. Algorithm 1 gives an overview of our approach. We build a decision tree from the training data points, calculate a threshold value for each leaf node from the ratio of the data points reaching that leaf to the training data points, and cluster the training data points based on the similarity of their attribute values. When classifying the continuous data stream in real time, if the number of data points classified by a leaf node of the tree rises above the previously calculated threshold value, a novel class may have arrived. We then compare the new data point with the existing data points based on the similarity of their attribute values. If the attribute values of the new data point differ from those of the existing data points and the new data point does not belong to any cluster, the arrival of a novel class is confirmed. We then add the new data point to the training dataset and rebuild the decision tree. In this way the decision tree classifier is continuously updated so that it represents the most recent concept in the data stream.

Algorithm 1: Novel Class Detection using Decision Tree
1. Find the splitting attribute with the highest information gain in the training dataset.
2. Create a node and label it with the splitting attribute. [The first node is the root node, T, of the decision tree.]
3. For each branch of the node, partition the data points and grow sub training datasets $D_i$ by applying the splitting predicate to the training dataset $D$.
4. For each sub training dataset $D_i$, if the data points in $D_i$ all have the same class value $C_i$, create a leaf node labeled with $C_i$. Otherwise, repeat steps 1 to 4 until each final subset belongs to a single class value or a leaf node is created.
5. When the decision tree construction is complete, calculate the threshold value for each leaf node from the ratio of the number of data points reaching that leaf node to the number of data points in the training dataset.
6. Cluster the training data points based on the similarity of their attribute values.
7. When classifying the continuous data stream in real time, if the number of data points classified by a leaf node of the decision tree increases beyond its previously calculated threshold value, a novel class may have arrived.
8. If the attribute values of the new data point differ from those of the existing data points of that leaf node, and the new data point does not belong to any existing cluster, the arrival of a novel class is confirmed.
9. If a novel class is detected, add the new data point to the existing training data points to generate a new training dataset, $D_{new}$.
10. Rebuild the decision tree using the updated training dataset, $D_{new}$.
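Steps 5 to 10 of Algorithm 1 can be sketched in Python as below. This is an illustration under explicit assumptions, not the authors' Java/Weka implementation. The tree is passed in already built (steps 1 to 4, for example with ID3) using a hypothetical tuple/dict encoding. Similarity is simple matching over nominal attributes. Step 6 uses single-pass leader clustering, because the paper does not name a clustering algorithm. The sliding `window`, the `tolerance` factor, and the `min_sim` cut-off are hypothetical parameters, as are the names `NovelClassDetector`, `leaf_of`, `similarity`, and `leader_clusters`. A leaf's load is measured as its share of the last `window` stream points and compared with `tolerance` times its training share.

```python
from collections import Counter, deque

def leaf_of(tree, x):
    """Follow the branches from the root until a leaf is reached.

    Hypothetical encoding: an internal node is (attribute, {value: subtree}),
    a leaf is a class-label string. The returned path identifies the leaf.
    """
    path = []
    while isinstance(tree, tuple):
        attr, branches = tree
        path.append((attr, x[attr]))
        tree = branches.get(x[attr], "?")  # unseen attribute value -> unknown leaf
    return tuple(path) + (tree,)

def similarity(a, b):
    """Simple-matching similarity: share of attributes with equal values (assumption)."""
    return sum(a[k] == b[k] for k in a) / len(a)

def leader_clusters(points, min_sim):
    """Single-pass leader clustering (assumed; the paper names no algorithm)."""
    leaders = []
    for p in points:
        if all(similarity(p, lead) < min_sim for lead in leaders):
            leaders.append(p)  # p starts a new cluster
    return leaders

class NovelClassDetector:
    def __init__(self, tree, train, window=20, tolerance=1.2, min_sim=0.75):
        self.tree, self.train = tree, list(train)
        self.window, self.tolerance, self.min_sim = window, tolerance, min_sim
        self._fit()

    def _fit(self):
        # Step 5: threshold of each leaf = share of training points reaching it.
        counts = Counter(leaf_of(self.tree, x) for x in self.train)
        self.threshold = {leaf: c / len(self.train) for leaf, c in counts.items()}
        # Step 6: cluster the training points by attribute similarity.
        self.leaders = leader_clusters(self.train, self.min_sim)
        self.recent = deque(maxlen=self.window)  # leaves of the latest stream points

    def observe(self, x):
        """Steps 7-8: return True if x is confirmed as a novel-class instance."""
        leaf = leaf_of(self.tree, x)
        self.recent.append(leaf)
        share = self.recent.count(leaf) / len(self.recent)
        if share <= self.tolerance * self.threshold.get(leaf, 0.0):
            return False  # step 7: leaf load is within its threshold
        at_leaf = [p for p in self.train if leaf_of(self.tree, p) == leaf]
        unlike_leaf = all(similarity(x, p) < self.min_sim for p in at_leaf)
        outside = all(similarity(x, lead) < self.min_sim for lead in self.leaders)
        return unlike_leaf and outside  # step 8

    def add_and_rebuild(self, new_points, rebuild):
        """Steps 9-10: D_new = D plus the new points; `rebuild` is any tree learner."""
        self.train.extend(new_points)
        self.tree = rebuild(self.train)
        self._fit()

# Toy nominal data (hypothetical attribute names and values).
blight = {"seed": "norm", "stem": "norm", "roots": "norm", "fruit": "norm"}
rot = {"seed": "abnorm", "stem": "abnorm", "roots": "rotted", "fruit": "norm"}
novel = {"seed": "norm", "stem": "abnorm", "roots": "galls", "fruit": "spots"}

tree = ("seed", {"norm": "blight", "abnorm": "rot"})
det = NovelClassDetector(tree, [blight] * 3 + [rot] * 3, window=4)
print([det.observe(x) for x in [blight, rot, novel, novel]])  # [False, False, True, True]

new_tree = ("roots", {"norm": "blight", "rotted": "rot", "galls": "cyst"})
det.add_and_rebuild([novel] * 3, lambda pts: new_tree)  # stand-in for a real learner
print(det.observe(novel))  # False: the new class is now part of the concept
```

Starting a fresh window after each rebuild is a design choice of this sketch. The first few stream points can exceed a leaf's threshold by chance (the known `blight` point above does), which is why the dissimilarity and cluster checks of step 8 are applied before a novel class is confirmed.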

5 EXPERIMENTAL ANALYSIS

In this section, we describe the datasets and the experimental results.

5.1 Datasets

Data stream mining is the process of analyzing online data to discover patterns; it uses sophisticated mathematical algorithms to segment the continuous data and evaluate the probability of future events. A set of data items, called a dataset, is the most basic concept of data mining and machine learning research. A dataset is roughly equivalent to a two-dimensional spreadsheet or database table. Table 1 describes the datasets from the UCI machine learning repository that are used in the experimental analysis [26].

TABLE 1
Data Set Descriptions

| Dataset | No. of Attributes | Attribute Types | No. of Instances | No. of Class Values |
|---|---|---|---|---|
| Iris Plants Database | 4 | Real | 150 | 3 |
| Image Segmentation Data | 19 | Real | 1500 | 7 |
| Large Soybean Database | 35 | Nominal | 683 | 19 |
| Fitting Contact Lenses Database | 4 | Nominal | 24 | 3 |
| NSL-KDD Dataset | 41 | Real & Nominal | 25192 | 23 |

1. Iris Plants Database: This is one of the best-known datasets in the pattern recognition literature. It contains 3 class values (Iris Setosa, Iris Versicolor, and Iris Virginica), where each class refers to a type of iris plant. There are 150 instances (50 in each of the three classes) and 4 attributes. One class is linearly separable from the other 2 classes.
2. Image Segmentation Data: The goal of this dataset is to provide an empirical basis for research on image segmentation and boundary detection. It has 1500 data instances with 19 attributes, all real-valued, and 7 class values: brickface, sky, foliage, cement, window, path, and grass.
3. Large Soybean Database: This dataset has 35 attributes, all nominal, and 683 data instances with 19 class values.
4. Fitting Contact Lenses Database: This is a very small dataset with only 24 data instances, 4 attributes, and 3 class values (soft, hard, and none). All attribute values are nominal. The instances are complete and noise free, and 9 rules cover the training set.
5. NSL-KDD Dataset: The Knowledge Discovery and Data Mining 1999 (KDD99) competition data contain simulated intrusions in a military network environment and are often used as a benchmark for evaluating the handling of concept drift. The NSL-KDD dataset is a new version of the KDD99 dataset that solves some of the inherent problems of KDD99 [25], although it still suffers from some of the problems discussed by McHugh [24]. The main advantage of NSL-KDD is that the numbers of training and testing data points are reasonable, so it is affordable to run experiments on the complete training and testing sets without randomly selecting a small portion of the data. Each record in NSL-KDD consists of 41 attributes and 1 class attribute, and the training set contains no redundant or duplicate records.

5.2 Results

We implemented our algorithm in Java. The decision tree code was adapted from the open-source Weka machine learning workbench (http://www.cs.waikato.ac.nz/ml/weka), which contains tools for data pre-processing, classification, regression, clustering, association rules, and visualization. The experiments were run on an Intel Core 2 Duo 2.0 GHz processor (2 MB cache, 800 MHz FSB) with 1 GB of RAM. There are various ways to determine the performance of data stream classifiers; the simplest is the proportion of correctly classified instances in an unseen test dataset. Table 2 summarizes the symbols and terms used in equations 6 to 8.

TABLE 2
Used Symbols and Terms

| Symbol | Term |
|---|---|
| $N$ | Total instances in the data stream |
| $N_c$ | Total novel class instances in the data stream |
| $F_p$ | Total existing class instances misclassified as novel class |
| $F_n$ | Total novel class instances misclassified as existing class |
| $F_e$ | Total existing class instances misclassified |
| $M_{new}$ | % of novel class instances misclassified as existing class |
| $F_{new}$ | % of existing class instances falsely identified as novel class |
| $ERR$ | Total misclassification error (%) |

$$M_{new} = \frac{F_n \times 100}{N_c} \tag{6}$$

$$F_{new} = \frac{F_p \times 100}{N - N_c} \tag{7}$$

$$ERR = \frac{(F_p + F_n + F_e) \times 100}{N} \tag{8}$$
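Equations 6 to 8 translate directly into code. In this sketch we read $F_e$ as existing class instances misclassified as another existing class (that is, excluding $F_p$), so that $ERR$ counts no error twice; that reading is our assumption. The function name `stream_metrics` and the counts in the example are hypothetical.

```python
def stream_metrics(n, n_c, f_p, f_n, f_e):
    """Return (M_new, F_new, ERR) in percent from the counts of Table 2.

    Requires 0 < n_c < n so that equations 6 and 7 are defined.
    """
    if not 0 < n_c < n:
        raise ValueError("need 0 < N_c < N")
    m_new = f_n * 100 / n_c             # equation 6
    f_new = f_p * 100 / (n - n_c)       # equation 7
    err = (f_p + f_n + f_e) * 100 / n   # equation 8
    return m_new, f_new, err

# Hypothetical stream: 1000 instances, 100 of them from a novel class.
print(stream_metrics(n=1000, n_c=100, f_p=18, f_n=5, f_e=27))  # (5.0, 2.0, 5.0)
```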

The equations 6, 7, and 8 are used to evaluate our approach. Table 3 and table 4 tabulate the results of performance com-

leafspot-size

lt-1/8

seed

dna leaf-malf

fruit-pods

diseased

gt-1/8

few -pr ese n

rm

no

seed

cystnematode

ab s

pre sen

rm

en t

t

2-4-dinjury

no

abn orm

purple-seedstain

Bacterial -blight

int-discolor

frog-eyeleaf-spot

rm

bro

n ab

ne

no

wn

m or

no

precip

brown-stemrot

leaf-mild

plant-growth

ab s

abn

m no r gtplant-growth

no r m

seed-discolor

en t

orm

orm

absent

phyllostictaleaf-spot

lt-norm

up u r-s pe rf

no rm

abs ent

abn

abo

e -nd sec ve -

stem-cankers abo

brown-stemrot

ves oi l

powderymildew

cystnematode

purple-seedstain

t en es pr

no rm

frog-eyeleaf-spot

alternarialeaf -spot

plant-stand

alternarialeaf -spot

diaporthestem-canker

brown-stemrot

no

lt-n orm al

phyllostictaleaf-spot

area-damaged

rm al

sc at te re d

low

frog-eyeleaf-spot

eas -ar

upp

80-89

frog-eyeleaf-spot

germination

10 0 90 -

wh ole ld -fie

frog-eyeleaf-spot

erare as

frog-eyeleaf-spot

8 lt0

alternarialeaf -spot

alternarialeaf -spot

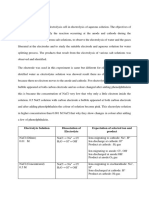

Fig. 1. Decision Tree DTA using Sub Dataset A.

parison between our approach and traditional decision tree classifier. TABLE 3 Performance of Proposed Approach

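| Dataset | ERR (%) | $M_{new}$ (%) | $F_{new}$ (%) |
|---|---|---|---|
| Iris Plants Database | 4 | 4 | 3 |
| Image Segmentation Data | 2.9 | 1.3 | 2.9 |
| Large Soybean Database | 9.2 | 2.8 | 1.9 |
| Fitting Contact Lenses Database | 16.6 | 0 | 5.2 |
| NSL-KDD Dataset | 4.0 | 8.4 | 1.2 |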

TABLE 4
Performance of Traditional Decision Tree

| Dataset | ERR (%) | $M_{new}$ (%) | $F_{new}$ (%) |
|---|---|---|---|
| Iris Plants Database | 5.3 | 4 | 5 |
| Image Segmentation Data | 5.2 | 3.7 | 5.2 |
| Large Soybean Database | 10.8 | 6.5 | 2.8 |
| Fitting Contact Lenses Database | 50 | 100 | 5.2 |
| NSL-KDD Dataset | 5.3 | 10.0 | 1.5 |

6 CONCLUSION

In this paper, we introduced decision tree based novel class detection in concept drifting data stream classification, which builds a decision tree from the data stream. The decision tree is continuously updated with new data points, so that the most recent tree represents the most recent concept in the data stream. The main purpose of this paper is to improve the performance of the decision tree classifier in concept drifting data stream classification problems. The decision tree classifier is a very popular supervised learning algorithm with several advantages: it is easy to implement and requires little prior knowledge. We tested the performance of the proposed approach on several benchmark datasets, and the results show that it efficiently detects novel classes and improves classification accuracy. Future work will focus on addressing this problem under dynamic attribute sets.

APPENDIX: AN ILLUSTRATIVE EXAMPLE

The large soybean database from the UCI machine learning repository [26] has 35 attributes, all of which are nominal. Its 683 data points are categorized into 19 class values. We split the dataset into 3 sub-datasets: sub-dataset A contains 356 instances with 10 class values, sub-dataset B contains 107 instances with 5 class values, and sub-dataset C contains 220 instances with 4 class values. We built a decision tree, $DT_A$, from sub-dataset A, which is shown in Fig. 1.

Fig. 2. Decision Tree $DT_X$ using Sub Dataset $X_{A+B}$.

We then classified the 356 instances of sub-dataset A with the decision tree $DT_A$, which correctly classified 323 instances and misclassified 33. Next, we classified the 107 instances of sub-dataset B (which contains 5 novel classes) with $DT_A$, and the approach detected the arrival of novel classes. For example, the leaf node "leafspot-size = lt-1/8 and seed = norm: bacterial-blight" covered 20 instances from sub-dataset A and 10 instances from sub-dataset B. The other attribute values of the 10 instances from sub-dataset B were quite dissimilar from those of the 20 instances from sub-dataset A, which confirmed that a novel class had arrived. We then merged sub-datasets A and B to generate a new dataset, $X_{A+B}$, and rebuilt the decision tree as $DT_X$, which is shown in Fig. 2. Similarly, we merged $X_{A+B}$ with sub-dataset C (220 instances with 4 novel classes) to generate a new dataset, $X_{A+B+C}$. Finally, we rebuilt the decision tree as $DT_Y$, which correctly classified 91.5081% of the 683 instances of $X_{A+B+C}$ and 98.6364% of the 220 instances of sub-dataset C. Decision tree $DT_Y$ is shown in Fig. 3.
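The arithmetic behind this example can be checked in a few lines. The sketch assumes, as in step 5 of Algorithm 1, that a leaf's threshold is its share of the training data points, and it compares that share directly with the leaf's share of the newly arriving block; the paper does not state the exact comparison. The last lines only convert the reported percentages back into instance counts.

```python
# Leaf "leafspot-size = lt-1/8 and seed = norm -> bacterial-blight" of DT_A.
train_total, train_at_leaf = 356, 20    # sub-dataset A
block_total, block_at_leaf = 107, 10    # sub-dataset B

threshold = train_at_leaf / train_total  # leaf's share of the training data
observed = block_at_leaf / block_total   # leaf's share of the new block

print(f"threshold = {threshold:.2%}, observed = {observed:.2%}")  # 5.62%, 9.35%
print("leaf exceeds its threshold:", observed > threshold)      # True

# Reported accuracies expressed as instance counts.
print(f"DT_A on A: {323 / train_total:.2%}")                   # 90.73%
print("DT_Y on X_A+B+C:", round(0.915081 * 683), "of 683")     # 625 of 683
print("DT_Y on C:", round(0.986364 * 220), "of 220")           # 217 of 220
```

The load check alone only flags a candidate; the confirmation in the text still rests on the dissimilarity of the 10 new instances' other attribute values.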

Fig. 3. Decision Tree $DT_Y$ using Sub Dataset $X_{A+B+C}$.

ACKNOWLEDGMENT

This research work was supported by the Department of Computer Science and Engineering, United International University, Dhaka, Bangladesh.

REFERENCES

[1] M. M. Masud, J. Gao, L. Khan, J. Han, and B. Thuraisingham, "Classification and Novel Class Detection in Concept Drifting Data Streams under Time Constraints," IEEE Transactions on Knowledge and Data Engineering, Vol. 23, No. 6, pp. 859-874, June 2011.
[2] R. Elwell and R. Polikar, "Incremental Learning of Concept Drift in Nonstationary Environments," IEEE Transactions on Neural Networks, Vol. 22, No. 10, pp. 1517-1531, October 2011.
[3] A. Zhou, F. Cao, W. Qian, and C. Jin, "Tracking Clusters in Evolving Data Streams over Sliding Window," Knowledge and Information Systems, Vol. 15, No. 2, pp. 181-214, May 2008.
[4] E. J. Spinosa, A. P. de Leon, F. de Carvalho, and J. Gama, "Cluster-Based Novel Concept Detection in Data Streams Applied to Intrusion Detection in Computer Networks," Proc. 2008 ACM Symp. Applied Computing, pp. 976-980, 2008.
[5] J. Z. Kolter and M. A. Maloof, "Dynamic Weighted Majority: An Ensemble Method for Drifting Concepts," Journal of Machine Learning Research, Vol. 8, pp. 2755-2790, 2007.
[6] B. R. Dai, J. W. Huang, M. Y. Yeh, and M. S. Chen, "Adaptive Clustering for Multiple Evolving Streams," IEEE Transactions on Knowledge and Data Engineering, Vol. 18, No. 9, pp. 1166-1180, September 2006.
[7] C. C. Aggarwal, J. Han, J. Wang, and P. S. Yu, "A Framework for On-Demand Classification of Evolving Data Streams," IEEE Transactions on Knowledge and Data Engineering, Vol. 18, No. 5, pp. 577-589, May 2006.
[8] M. M. Gaber and P. S. Yu, "Detection and Classification of Changes in Evolving Data Streams," Int'l Journal of Information Technology & Decision Making, Vol. 5, No. 4, pp. 659-670, 2006.
[9] Y. Yang, X. Wu, and X. Zhu, "Combining Proactive and Reactive Predictions for Data Streams," Proc. ACM SIGKDD, pp. 710-715, 2005.
[10] W. Fan, "Mining Concept Drifting Data Streams using Ensemble Classifiers," Proc. 10th ACM SIGKDD Int'l Conference on Knowledge Discovery and Data Mining, pp. 128-137, 2004.
[11] M. Markou and S. Singh, "Novelty Detection: A Review, Part 2: Neural Network Based Approaches," Signal Processing, Vol. 83, Issue 12, pp. 2499-2521, December 2003.
[12] H. Wang, W. Fan, P. S. Yu, and J. Han, "Mining Concept Drifting Data Streams using Ensemble Classifiers," IBM T. J. Watson Research, Hawthorne, NY 10532, Association for Computing Machinery, Aug. 24, 2003.
[13] G. Hulten, L. Spencer, and P. Domingos, "Mining Time-Changing Data Streams," Proc. 7th ACM SIGKDD Int'l Conference on Knowledge Discovery and Data Mining, ACM, New York, NY, USA, pp. 97-106, 2001.
[14] G. Widmer and M. Kubat, "Learning in the Presence of Concept Drift and Hidden Contexts," Machine Learning, Vol. 23, No. 1, pp. 69-101, April 1996.
[15] J. R. Quinlan, C4.5: Programs for Machine Learning, Morgan Kaufmann Publishers, San Mateo, CA, 1993.
[16] J. R. Quinlan, "Induction of Decision Trees," Machine Learning, Vol. 1, pp. 81-106, 1986.
[17] L. Breiman, J. H. Friedman, R. A. Olshen, and C. J. Stone, Classification and Regression Trees, Statistics/Probability Series, Wadsworth, Belmont, 1984.
[18] J. Shafer, R. Agrawal, and M. Mehta, "SPRINT: A Scalable Parallel Classifier for Data Mining," Morgan Kaufmann, pp. 544-555, 1996.
[19] D. Turney, "Cost-Sensitive Classification: Empirical Evaluation of a Hybrid Genetic Decision Tree Induction Algorithm," Journal of Artificial Intelligence Research, pp. 369-409, 1995.
[20] J. Bala, J. Huang, H. Vafaie, K. DeJong, and H. Wechsler, "Hybrid Learning using Genetic Algorithms and Decision Trees for Pattern Classification," Proc. 14th Int'l Conf. on Artificial Intelligence, Montreal, pp. 1-6, 19-25 August 1995.
[21] C. G. Salcedo, S. Chen, D. Whitley, and S. Smith, "Fast and Accurate Feature Selection using Hybrid Genetic Strategies," Proc. Genetic and Evolutionary Computation Conference, pp. 1-8, 1999.
[22] S. R. Safavian and D. Landgrebe, "A Survey of Decision Tree Classifier Methodology," IEEE Transactions on Systems, Man, and Cybernetics, Vol. 21, No. 3, pp. 660-674, 1991.
[23] W. Y. Loh and X. Shih, "Split Selection Methods for Classification Trees," Statistica Sinica, Vol. 7, pp. 815-840, 1997.
[24] J. McHugh, "Testing Intrusion Detection Systems: A Critique of the 1998 and 1999 DARPA Intrusion Detection Evaluations as Performed by Lincoln Laboratory," ACM Transactions on Information and System Security, Vol. 3, No. 4, pp. 262-294, 2000.
[25] The KDD Archive, KDD99 Cup Dataset, http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html, 1999.
[26] A. Frank and A. Asuncion, UCI Machine Learning Repository, University of California, Irvine, School of Information and Computer Sciences, 2010, http://archive.ics.uci.edu/ml

Amit Biswas is currently completing a Master of Science in Computer Science and Engineering at United International University, Bangladesh. He obtained a Bachelor of Computer Application (BCA) from Bangalore University, India, in 2004. He is an IT professional working as Team Leader of the Software Department at BASE Limited, a reputed IT company. He has also worked for the Access to Information Programme (A2I) at the Prime Minister's Office, supported by UNDP Bangladesh. He has extensive experience in software development and databases. Software he has developed is in successful use at PLAN Bangladesh, CARE Bangladesh, Bangladesh Small and Cottage Industries Corporation (BSCIC), Habib Bank Limited, Dutch Bangla Bank, Rahimafrooz, and others.

Dr. Dewan Md. Farid received a B.Sc. in Computer Science and Engineering from Asian University of Bangladesh in 2003, an M.Sc. in Computer Science and Engineering from United International University, Bangladesh, in 2004, and a Ph.D. in Computer Science and Engineering from Jahangirnagar University, Bangladesh, in 2012. He is a part-time faculty member in the Department of Computer Science and Engineering at United International University, Bangladesh, and at Daffodil International University, Bangladesh. He has published one book chapter, 8 journal papers, and 10 conference papers in machine learning, data mining, and intrusion detection. He has presented his papers at international conferences in Malaysia, Portugal, Italy, and France. Dr. Farid is a member of the IEEE and the IEEE Computer Society. He worked as a visiting researcher at the ERIC Laboratory, Université Lumière Lyon 2, France, from 01-09-2009 to 30-06-2010. He received Senior Fellowship I & II, awarded by National Science & Information and Communication Technology (NSICT), Ministry of Science & Information and Communication Technology, Government of Bangladesh, in 2008 and 2011 respectively.

Professor Dr. Chowdhury Mofizur Rahman received his B.Sc. (EEE) and M.Sc. (CSE) from Bangladesh University of Engineering and Technology (BUET) in 1989 and 1992 respectively. He earned his Ph.D. from Tokyo Institute of Technology in 1996 under a Japanese Government scholarship. Prof. Chowdhury is presently the Pro Vice Chancellor and acting treasurer of United International University (UIU), Dhaka, Bangladesh, and is one of the founding trustees of UIU. Before joining UIU he was head of the Computer Science & Engineering department at Bangladesh University of Engineering & Technology, the leading public technical university in Bangladesh. His research covers data mining, machine learning, AI, and pattern recognition. He is active in research and has published around 100 technical papers in international journals and conferences. He was the Editor of the IEB journal and worked as a moderator of NCC accredited centers in Bangladesh. He has served as organizing chair and program committee member for a number of international conferences held in Bangladesh and abroad. At present he is the Bangladesh coordinator for the EU-sponsored eLINK project. Prof. Chowdhury has served as an external expert member for the Computer Science departments of a number of renowned public and private universities in Bangladesh. He is actively contributing towards the national goal of building a Digital Bangladesh.
