L13

Uploaded by Marco Deezy Di Sano

02/02/2011

CODE OPTIMIZATIONS

"Optimization for scalar machines is a problem that was solved ten years ago." (David Kuck, 1990, Rice University)

Machines have changed since 1980. Changes in architecture lead to changes in compilers:

- new features present new problems
- changing costs lead to different concerns
- well-known solutions must be re-engineered

Compiler optimizations can significantly improve performance


Why Optimizations?

Compiler optimization is essential for modern computer architectures.

Without optimization, most applications would perform very poorly on modern architectures

Even with optimization, most applications do not get a high fraction of peak performance

Optimization techniques are also the basis for exploiting SIMD components, vector units, hyperthreading, and other forms of multithreading.

What is it that compilers can exploit?

What does the compiler (writer) need to know about architectures?

Why Study Optimizations?

A better understanding of optimization techniques, and especially their limitations, can help us become better programmers.

    do I = n, m, p
       k = 2*i + q*q
       x(i + f(n)) = x(k + q)

    Program OhNoNotPtrsAndFuncs
    ptr p, q ;
    var x, y, z, w[20] ;
    y = DoSomething ( x, y, z, w ) ;
    .....
    x = SomeFunc ( p, q, y, z ) ;
    w[x + *p] = w[ *q] ;

What can compilers exploit? What defeats optimization?


The Word Optimization

A clear misnomer! Even for simple programs, we cannot prove that a given version is optimal on a specific machine, let alone create a compiler to generate that optimal version.

Optimizers:

- produce improved code, not optimal code
- can sometimes produce worse code
- give speedups that might range from 1.01 to 4 (or more)

Definition

An optimization is a transformation that is expected to:

1. improve the running time of a program, or
2. decrease its space requirements

Classical optimizations

- reduce number of instructions
- reduce cost of instructions
- change ordering of instructions (latency)
- execute instructions in parallel
- modify data placement (registers, cache)
- reduce power consumption


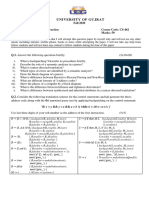

Traditional Two-pass Compiler

[Diagram: Source code -> Front End -> IR -> Back End -> Target code; both ends report error messages.]

- Front end maps legal source code into IR
- Back end maps IR into target machine code
- Admits multiple front ends and multiple passes
- Typically, the front end is O(n) or O(n log n), while the back end is NP-complete

Where Optimization is Performed

Very little scope for optimization in front end

- Computation of arithmetic expressions, simplification of logical expressions
- Any work performed here is dependent on the parsing process: it is syntax-directed
- Semantic analysis begins to gather information that may help in optimization

Important optimizations take place in back end

Adapt code to use actual registers provided, or to better exploit functional units


Complexity of Modern Compilers

A commercial compiler is usually a very large and sophisticated piece of software developed over years or even decades. A complete industrial compiler may have some millions of lines of code, e.g.:

- Sun's Fortran compiler has ca. 4 MLOC (million lines of code)
- Open64 Fortran/C/C++ has ca. 7 MLOC

No one person understands the complete system.

Pro64/Open64 Framework

[Diagram: Fortran 95, C, and C++ front ends feed a common multilevel IR, which feeds the MIPS and IA-64 code generators.]


Sun Compiler Components

[Diagram: F77, F90/f95, and CC sources -> Intermediate Representation -> IR Opt -> CG -> object.]

How can optimizations improve code quality?

Machine independent transformations

1. replace a redundant computation with a reference
2. move evaluation to a less frequently executed place
3. specialize some general purpose code
4. find useless code and remove it
5. expose an opportunity for another optimization

Machine dependent transformations

1. replace a costly operation with a cheaper one
2. replace a sequence of instructions with a more powerful one
3. hide latency
4. improve locality
5. lower power consumption


Middle End

[Diagram: Source code -> Front End -> IR -> Middle End -> IR -> Back End -> Target code; every stage reports error messages.]

Middle end is dedicated to code improvement

- Analyzes IR and rewrites (or transforms) IR
- Primary goal is assumed to be reducing the running time of the compiled code
- May also improve space, power consumption, ...
- Improvements must provably preserve the meaning of the code

Some people distinguish middle end from back end, some don't.

The goal: produce fast code

- What is optimality?
- Many problems are hard, intractable, or NP-complete
- Which optimizations should we use?
- Lots of optimizations overlap and/or interact


A few examples:

- common subexpression elimination
- constant folding
- dead code elimination
- instruction scheduling
- register allocation
- loop transformations

Analysis versus Optimization: knowledge doesn't make code run faster; changing the code can sometimes make it run faster. We use analysis to decide how to transform code.

The Optimizer (or Middle End)

[Diagram: IR -> Opt 1 -> IR -> Opt 2 -> IR -> Opt 3 -> IR ... Opt n -> IR; each pass may report errors.]

Modern optimizers are structured as a series of passes. There are a lot of common transformations:

- Discover and propagate some constant value
- Move a computation to a less frequently executed place
- Specialize some computation based on context
- Discover and remove a redundant computation
- Remove useless or unreachable code
- Encode an idiom in some particularly efficient form


Structure of an Optimizing Compiler

For the average user, a compiler is a black box.

[Diagram: Source code -> Compiler -> Target code.]

For the informed user (or computer scientist), the compiler is structured as a front end and a back end.

[Diagram: Source code -> Front End -> Back End -> Target code.]

A real compiler has a more complex internal structure: it consists of a series of phases that analyze and transform a program.

[Diagram: Source code -> phase1 -> phase2 -> phase3 -> phase4 -> Target code (analysis and transformation).]


What does an Optimizing Compiler do?

Performs a good deal of program analysis:

- Gathers facts about the program and its data
- Analyzes individual procedures
- Studies use of data between procedures
- Examines references to arrays
- Compares symbolic expressions

Applies transformations:

- Provably correct program modifications with a specific purpose
- Rewrites regions of IR if appropriate and legal
- Analysis must prove that application of a transformation is legal

We want to improve the code somehow. Improvements should be objective:

- easy to quantify
- concrete, i.e., measurements seem easy to take

Code either gets faster or slower! But:

- linear-time heuristics for hard problems
- unforeseen consequences
- multiple ways to achieve the same end

Experimental science takes a lot of work.


Scope of Optimization

Local (or single block)

- confined to straight-line code
- simplest to analyze
- time frame: sixties, seventies, maybe now?

Intraprocedural (or global)

- consider the whole procedure
- What do we need to optimize an entire procedure?
- classical data-flow analysis, dependence analysis
- time frame: seventies through to now

Interprocedural (or whole program)

- analyze whole programs
- What do we need to optimize an entire program?
- analysis and representation still not clear
- time frame: late seventies through to now

Desirable properties for an optimizing compiler

- code as good as or better than that of an assembly programmer
- stable, robust performance (predictability)
- architectural strengths fully exploited
- architectural weaknesses fully hidden
- broad, efficient support for language features
- instantaneous compiles

Unfortunately, modern compilers often drop the ball.


Good compilers are crafted, not assembled

- consistent philosophy
- careful selection of transformations
- thorough application
- coordination between transformations and data structures
- attention to the results (code, time, space)

Compilers are engineered objects:

- minimize running time of compiled code
- minimize compile time
- use reasonable compile-time space (serious problem)

Transformation (rewrite rules)

Optimization improves a program by making a number of modifications to it.

- Such modifications are formalized as program transformations
- They are applied to an intermediate representation
- Some optimizations are local and may affect only a few operations
- Others may affect entire regions of a program


Analysis (conditions governing rewrite)

Transformations must be provably correct

It must be guaranteed that they do not change the semantics of the program.

In general, an analysis of the program is needed to prove that application of a given transformation is permitted in a specific context (i.e. to prove its correctness). The quality of the analysis results may have a profound impact on the ability of the compiler to optimize by applying transformations.

Implementing Analysis

There are many trade-offs to be considered in practice

- The ability to apply a transformation depends on whether the compiler can prove it is correct
- So the quality of optimization depends on the results of analysis
- Can the information be derived from the IR? That depends upon how the program was written and what the IR represents
- Execution of some analyses is very time-consuming
- The cost of analysis in terms of programming effort and execution time is a major issue in compiler design


Machine independent optimization

Redundant Expression Elimination (Common Subexpression Elimination)

Use an address or value that has been previously computed. Consider control and data dependencies.

    x := a + b          x := a + b
    y := a + b    =>    y := x

Can also eliminate redundant memory references and branch tests.

Common Subexpression Elimination

Some transformations are well known and widely applicable.

Goal: eliminate redundant (multiple) computations. Two expressions are equivalent only if they produce the same result. This is true if they are identical and none of the operands are redefined in the intervening code.

    t3 = a * t2
    t4 = t3 * t1
    t5 = t4 + b
    t6 = t3 * t1
    t7 = t6 + b
    c = t5 * t7

Here, we can eliminate the computation of t6.
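The elimination above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tuple encoding `(dest, op, left, right)` and the name `eliminate_common_subexpressions` are invented here, every name is assumed to be assigned at most once in the block, and the inputs a, b, t1, t2 are assumed not to be redefined.

```python
def eliminate_common_subexpressions(code):
    """Local CSE over a list of (dest, op, left, right) tuples.

    Assumes each destination is assigned only once in the block, so an
    expression stays available once it has been computed.
    """
    available = {}   # (op, left, right) -> name that already holds the value
    alias = {}       # eliminated name -> the name that replaces it
    result = []
    for dest, op, left, right in code:
        # Rewrite operands that refer to an eliminated temporary.
        left, right = alias.get(left, left), alias.get(right, right)
        key = (op, left, right)
        if key in available:
            alias[dest] = available[key]   # redundant: reuse the earlier value
        else:
            available[key] = dest
            result.append((dest, op, left, right))
    return result


block = [
    ("t3", "*", "a", "t2"),
    ("t4", "*", "t3", "t1"),
    ("t5", "+", "t4", "b"),
    ("t6", "*", "t3", "t1"),
    ("t7", "+", "t6", "b"),
    ("c", "*", "t5", "t7"),
]
optimized = eliminate_common_subexpressions(block)
```

On this block the sketch drops t6 and, because t7 then reads t4 + b, it drops t7 as well, so c becomes t5 * t5.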


Machine independent optimization

Partially Redundant Expression (PRE) Elimination

A variant of Redundant Expression Elimination. If a value or address is redundant along some execution paths, add computations to other paths to create a fully redundant expression (which is then removed).

Like CSE, but the earlier expression is available only along some path:

    if ... then               if ... then
       x := a+b                  t := a+b; x := t
    end                =>     else
    ...                          t := a+b
    y := a+b                  end
                              ...
                              y := t

Machine independent optimization

Constant Propagation

If a variable is known to contain a particular constant value at a particular point in the program, replace references to the variable at that point with that constant value.

    x := 5              x := 5              x := 5
    y := x + 2    =>    y := 5 + 2    =>    y := 7
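A minimal Python sketch of this idea for straight-line code follows. The statement form `(dest, operands)`, where the operands are summed, is a deliberately tiny encoding assumed for illustration, as is the name `propagate_constants`; the sketch also folds the result when every operand turns out to be constant.

```python
def propagate_constants(code):
    """Constant propagation plus folding over straight-line code.

    Each statement is (dest, operands); operands is a list of ints and
    variable names whose sum is assigned to dest.
    """
    known = {}      # variable -> constant value it currently holds
    result = []
    for dest, operands in code:
        # Replace variables whose value is a known constant.
        operands = [known.get(x, x) for x in operands]
        if all(isinstance(x, int) for x in operands):
            value = sum(operands)          # fold: every operand is constant
            known[dest] = value
            result.append((dest, [value]))
        else:
            known.pop(dest, None)          # dest no longer holds a constant
            result.append((dest, operands))
    return result


program = [("x", [5]), ("y", ["x", 2]), ("z", ["y", "n"])]
optimized = propagate_constants(program)
```

Here y becomes the constant 7, and the use of y in the last statement is replaced by 7 even though n remains unknown.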


Machine independent optimization

Copy Propagation

After the assignment of one variable to another, a reference to one variable may be replaced with the value of the other variable (until one or the other of the variables is reassigned). (This may also set up dead code elimination. Why?)

    x := y              x := y
    w := w + x    =>    w := w + y
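The "until one or the other is reassigned" condition is the part that is easy to get wrong, so a small Python sketch may help. The `(dest, operands)` encoding and the name `propagate_copies` are assumptions for this illustration; a statement with a single variable operand is treated as a copy.

```python
def propagate_copies(code):
    """Copy propagation over straight-line code.

    Statements are (dest, operands); a statement with a single variable
    operand, e.g. ("x", ["y"]), is the copy x := y.
    """
    copies = {}     # x -> y while the copy x := y is still valid
    result = []
    for dest, operands in code:
        operands = [copies.get(v, v) for v in operands]
        # Assigning dest invalidates every copy that reads or writes it.
        copies = {x: y for x, y in copies.items() if dest not in (x, y)}
        if len(operands) == 1 and operands[0] != dest:
            copies[dest] = operands[0]
        result.append((dest, operands))
    return result


program = [("x", ["y"]), ("w", ["w", "x"]), ("y", ["z"]), ("v", ["x"])]
optimized = propagate_copies(program)
```

The use of x in the second statement is replaced by y, but the use in the last statement is left alone because y has been reassigned in between. If every use of x could be replaced, the copy x := y itself would have no remaining readers.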

Machine independent optimization

Constant Folding

An expression involving constant (literal) values may be evaluated and simplified to a constant result value. Particularly useful when constant propagation is performed.

    x := 3 + 4    =>    x := 7
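Folding is naturally expressed as a bottom-up walk over an expression tree. The sketch below assumes a hypothetical tree encoding (an int, a variable name, or a tuple `(op, left, right)`) and supports only three integer operators.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fold(expr):
    """Fold constant subtrees of an expression.

    An expression is an int, a variable name (str), or a tuple
    (op, left, right) with op in OPS.
    """
    if not isinstance(expr, tuple):
        return expr                       # leaf: constant or variable
    op, left, right = expr
    left, right = fold(left), fold(right)
    if isinstance(left, int) and isinstance(right, int):
        return OPS[op](left, right)       # both sides constant: evaluate now
    return (op, left, right)
```

For example, `fold(("+", 3, 4))` evaluates the slide's expression at "compile time", while `fold(("*", ("+", 3, 4), "n"))` folds only the constant subtree and keeps the multiplication by n.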


Machine independent optimization

Dead Code Elimination

Expressions or statements whose values or effects are unused may be eliminated.

    if (false) statement
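Besides unreachable statements such as the one above, assignments whose results are never read are also dead. A minimal Python sketch for straight-line code, assuming side-effect-free right-hand sides and a hypothetical `(dest, operands)` encoding, walks the block backwards while tracking which variables are still needed:

```python
def eliminate_dead_code(code, live_out):
    """Remove assignments whose results are never used.

    code is a list of (dest, operands) with side-effect-free right-hand
    sides; live_out is the set of variables needed after the block.
    """
    live = set(live_out)
    kept = []
    for dest, operands in reversed(code):
        if dest in live:
            live.discard(dest)            # this statement defines dest
            live.update(v for v in operands if isinstance(v, str))
            kept.append((dest, operands))
        # otherwise dest is never read later, so the statement is dropped
    kept.reverse()
    return kept


program = [
    ("t", ["a", "b"]),    # used by x
    ("u", ["a", 1]),      # never used: dead
    ("x", ["t", 2]),
    ("t", ["x", "x"]),    # t is not needed after the block: dead
]
optimized = eliminate_dead_code(program, live_out={"x"})
```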

Machine independent optimization

Strength Reduction

Replace an expensive instruction with an equivalent but cheaper alternative. For example, a division may be replaced by multiplication by a reciprocal, or a list append may be replaced by cons operations.
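The sketch below shows a different but common instance of the same idea than the two named above: a multiplication by the loop index is replaced by a running addition. The function names and the address-computation scenario are assumptions chosen for illustration.

```python
def addresses_multiply(base, stride, n):
    """Original form: one multiplication per iteration."""
    return [base + i * stride for i in range(n)]


def addresses_reduced(base, stride, n):
    """Strength-reduced form: the multiplication becomes a running addition."""
    result = []
    addr = base                 # value of base + i * stride for i = 0
    for _ in range(n):
        result.append(addr)
        addr += stride          # advance to the next i with an add
    return result
```

With integers the two forms always agree. The reciprocal example from the text is more delicate, since `x * (1 / c)` can differ from `x / c` in floating point.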


Machine independent optimization

Loop Invariant Code Motion

An expression that is invariant in a loop may be moved to the loop's header, evaluated once, and reused within the loop. Safety and profitability issues may be involved.

    for j := 1 to 10
       for i := 1 to 10
          a[i] := a[i] + b[j]

    =>

    for j := 1 to 10 {
       t := b[j]
       for i := 1 to 10
          a[i] := a[i] + t
    }
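The same transformation written out in Python, with hypothetical function names. The hoisted version is only equivalent when `a` and `b` are distinct arrays: if they were the same list, the inner loop could overwrite `b[j]`, which is one of the safety issues mentioned above.

```python
def add_rows_naive(a, b):
    """a[i] += b[j] for every j and i; b[j] is re-read in the inner loop."""
    for j in range(len(b)):
        for i in range(len(a)):
            a[i] = a[i] + b[j]
    return a


def add_rows_hoisted(a, b):
    """Same computation with the invariant load of b[j] hoisted."""
    for j in range(len(b)):
        t = b[j]                  # invariant in the inner loop: load once
        for i in range(len(a)):
            a[i] = a[i] + t
    return a
```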

Machine independent optimization

Scalarization (Scalar Replacement)

A field of a structure or an element of an array that is repeatedly read or written may be copied to a local variable, accessed using the local, and later (if necessary) copied back. This optimization allows the local variable (and in effect the field or array component) to be allocated to a register.
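As a sketch, consider a matrix-vector product in which `out[i]` is read and written on every inner iteration. The function names are hypothetical, and the rewrite assumes `out` does not alias `matrix` or `vector`.

```python
def dot_rows_naive(matrix, vector, out):
    for i in range(len(matrix)):
        out[i] = 0
        for j in range(len(vector)):
            # out[i] is read and written on every iteration.
            out[i] = out[i] + matrix[i][j] * vector[j]
    return out


def dot_rows_scalarized(matrix, vector, out):
    for i in range(len(matrix)):
        acc = 0                       # local stands in for out[i]
        for j in range(len(vector)):
            acc = acc + matrix[i][j] * vector[j]
        out[i] = acc                  # copy back once
    return out
```

In a compiled language the local `acc` can live in a register for the whole inner loop, which is the point of the transformation.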


Machine independent optimization: loop unrolling

Replace a loop body executed N times with an expanded loop body consisting of M copies of the loop body. This expanded loop body is executed N/M times, reducing loop overhead and increasing optimization possibilities within the expanded loop body.

    for i := 1 to N
       a[i] := a[i] + 1

    =>

    for i := 1 to N by 4
       a[i]   := a[i]   + 1
       a[i+1] := a[i+1] + 1
       a[i+2] := a[i+2] + 1
       a[i+3] := a[i+3] + 1

Creates more optimization opportunities in the loop body.
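The unrolled loop above is only correct when N is a multiple of 4. A general version needs a remainder loop, as in this Python sketch (function names are hypothetical, and indices are zero-based here):

```python
def increment_all(a):
    for i in range(len(a)):
        a[i] = a[i] + 1
    return a


def increment_all_unrolled(a):
    n = len(a)
    main_end = n - n % 4              # largest multiple of 4 not exceeding n
    for i in range(0, main_end, 4):   # unrolled body: four copies per trip
        a[i] = a[i] + 1
        a[i + 1] = a[i + 1] + 1
        a[i + 2] = a[i + 2] + 1
        a[i + 3] = a[i + 3] + 1
    for i in range(main_end, n):      # remainder loop: last n % 4 elements
        a[i] = a[i] + 1
    return a
```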

DO Loop Normalization

    DO I = N, M, 2
       A(I) = ..
       .. = .. I + 1
    END DO

This transformation makes it easier to specify and apply other transformations.

    DO I = 1, (M-N+2)/2
       A(N + (I-1)*2) = ..
       .. = .. N + (I-1)*2 + 1
    END DO

Iteration space is standardized, but subscript expressions are more complex. This may temporarily worsen performance of the code.
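A quick way to convince oneself that the normalized loop visits the same index values is to enumerate both iteration spaces. The Python sketch below does this with hypothetical function names; Python's floor division stands in for Fortran's integer division, which gives the same (empty) result whenever the trip count is not positive.

```python
def original_iterations(n, m):
    """Values of I in: DO I = N, M, 2."""
    return list(range(n, m + 1, 2))


def normalized_iterations(n, m):
    """Values of N + (I-1)*2 in: DO I = 1, (M-N+2)/2."""
    trip_count = (m - n + 2) // 2
    return [n + (i - 1) * 2 for i in range(1, trip_count + 1)]
```

For example, with N = 3 and M = 10 both functions yield 3, 5, 7, 9.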


Hoisting Code out of Loop is Guaranteed to Improve Speed

    DO I = 1, 10
       J = 2
       A(I) = A(I) + J
    END DO
    .. J

    =>

    J = 2
    DO I = 1, 10
       A(I) = A(I) + J
    END DO
    .. J

Call Inlining

At the site of a call, insert the body of a subprogram, with actual parameters initializing formal parameters.

Inlining:

    l := w := 4               l := w := 4            l := w := 4
    a := area(l, w)    =>     a := l * w      =>     a := l << 2

- Many simple optimizations become important after inlining
- Interprocedural constant propagation


Subroutine Inlining Increases Program Size

    DO I = 1, N
       J = A(I)
       CALL SUB(A(I),J,N)
       CALL SUB(B(I),J,N)
       CALL SUB(C(I),J,N)
       CALL SUB(D(I),J,N)
    ..
    SUBROUTINE SUB(X,J,M)
       TEMP = X + 2 * J
       TEMP = TEMP / M
       X = TEMP * TEMP
    END

After inlining, with the formal parameter M replaced by the actual argument N:

    DO I = 1, N
       J = A(I)
       TEMP = A(I) + 2 * J
       TEMP = TEMP / N
       A(I) = TEMP * TEMP
       TEMP = B(I) + 2 * J
       TEMP = TEMP / N
       B(I) = TEMP * TEMP
       TEMP = C(I) + 2 * J
       TEMP = TEMP / N
       C(I) = TEMP * TEMP
       TEMP = D(I) + 2 * J
       TEMP = TEMP / N
       D(I) = TEMP * TEMP
    ..

Code Hoisting and Sinking

If the same code sequence appears in two or more alternative execution paths, the code may be hoisted to a common ancestor or sunk to a common successor. (This reduces code size, but does not reduce instruction count.)


Call Optimizations

Static binding of dynamic calls

- Calls through function pointers in imperative languages
- Call of computed function in functional language
- OO-dispatch in OO languages (e.g., COOL)
- If receiver class can be deduced, can replace with direct call
- Other optimizations possible even when multiple targets (e.g., using PICs = polymorphic inline caches)

Procedure specialization

- Partial evaluation

Machine-dependent Optimizations

1. Register allocation
2. Instruction selection: important for CISCs
3. Instruction scheduling: particularly important with long-delay instructions and on wide-issue machines (superscalar + VLIW)


Machine dependent optimization

Local Register Allocation

Within a basic block (a straight-line sequence of code), track register contents and reuse variables and constants from registers.

Global Register Allocation

Within a subprogram, frequently accessed variables and constants are allocated to registers. Usually there are many more register candidates than available registers.

Interprocedural Register Allocation

Variables and constants accessed by more than one subprogram are allocated to registers. This can greatly reduce call/return overhead.

Register Allocation

- Performed when the object code is almost ready
- Goal is to minimize CPU delay waiting for data (typically 60% of total execution time is actually spent waiting for data)
- Symbolic registers may be used initially
- But careful choice of data to put in registers is necessary
- Algorithms are heuristic, since the problem is NP-complete
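One widely used family of heuristics treats allocation as coloring an interference graph, where two variables interfere if they are live at the same time. The Python sketch below is a simple greedy variant, not the algorithm of any particular compiler; the graph encoding, the ordering heuristic, and the name `color_registers` are assumptions for illustration.

```python
def color_registers(interference, num_registers):
    """Greedy coloring of an interference graph.

    interference maps each variable to the set of variables that are
    live at the same time. Returns (assignment, spilled).
    """
    assignment = {}
    spilled = []
    # Heuristic: handle the most constrained variables first.
    order = sorted(interference, key=lambda v: (-len(interference[v]), v))
    for var in order:
        taken = {assignment[n] for n in interference[var] if n in assignment}
        free = [r for r in range(num_registers) if r not in taken]
        if free:
            assignment[var] = free[0]
        else:
            spilled.append(var)      # no register left: keep var in memory
    return assignment, spilled


graph = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}
assignment, spilled = color_registers(graph, num_registers=3)
```

With three registers every variable gets one; with only two, this heuristic has to spill one of the three mutually interfering variables a, b, c.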


Machine dependent optimization

Register Targeting

Compute values directly into the intended target register.

Interprocedural Code Motion

Move instructions across subprogram boundaries.

Software Pipelining

A value needed in iteration i of a loop is computed during iteration i-1 (or i-2, ...). This allows long-latency operations (floating point divides and square roots, low hit-ratio loads) to execute in parallel with other operations. Software pipelining is sometimes called symbolic loop unrolling.

Machine dependent optimization

Data Cache Optimizations

Locality Optimizations

Cluster accesses of data values both spatially (within a cache line) and temporally (for repeated use). Loop interchange and loop tiling improve temporal locality.

Conflict Optimizations

Adjust data locations so that data used consecutively and repeatedly don't share the same cache location.
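Loop tiling can be illustrated with a matrix transpose, where the untiled loop walks one of the two arrays with a large stride. The Python sketch below only shows the shape of the transformation: the function names and the tile size of 4 are arbitrary assumptions, and Python lists will not exhibit the cache benefit that the same loop structure can give in a compiled language.

```python
def transpose_naive(src, n):
    dst = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            dst[j][i] = src[i][j]
    return dst


def transpose_tiled(src, n, tile=4):
    dst = [[0] * n for _ in range(n)]
    for ii in range(0, n, tile):                      # walk over tile origins
        for jj in range(0, n, tile):
            for i in range(ii, min(ii + tile, n)):    # stay inside one tile
                for j in range(jj, min(jj + tile, n)):
                    dst[j][i] = src[i][j]
    return dst
```

The `min(...)` bounds handle matrices whose size is not a multiple of the tile size.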


Machine dependent optimization

Instruction Cache Optimizations

Instructions that are repeatedly re-executed should be accessed from the instruction cache rather than the secondary cache or memory. Loops and hot instruction sequences should fit within the cache. Temporally close instruction sequences should not map to conflicting cache locations.

The Phase Ordering Problem

In what order should optimizations be performed?

- Some optimizations create opportunities for others (order according to this dependence)
- Can make some optimizations simple: a later optimization will clean up
- What about adverse interactions?
  - Common subexpression elimination vs. register allocation
  - Register allocation vs. instruction scheduling
- What about cyclic dependences?
  - Constant folding vs. constant propagation


Kinds of Analysis

Classification of analysis according to the kind of information derived:

- Control flow analysis
- Scalar data flow analysis
- (Data) dependence analysis

Classification of analysis according to the scope of results:

1. Basic block analysis
2. Intraprocedural analysis
3. Interprocedural analysis

Analysis determines the applicability of transformations.

Transformation

- There are many potentially useful transformations
- When a compiler is written, the developers must consider the trade-off between the cost of implementing and executing a transformation and its likely usefulness
- Question without a proper answer: what is the input program like?
- Transformations are usually combined into a general strategy for optimizing: an individual transformation may be applied more than once to a program as part of a strategy


Kinds of Transformation

Many transformations have been proposed for a variety of purposes. They may be:

- specific to a source language; the best developed set of these is for Fortran (especially Fortran 77)
- source language and target machine neutral (more or less)
- target machine specific

Optimization Challenges

Problem with Analysis

- We want as much information as possible
- But we don't want the compiler to be too slow or to take up too much space

Problem with Transformations

- We don't know what the optimal program is like
- We don't know too much about an input program
- So what are the useful changes?


Another Challenge

Some transformations are guaranteed to improve program performance when applied, but:

- Most are not
- They may occasionally degrade performance
- They may negatively affect the size of the program

Optimization Strategy

So the compiler writer makes some assumptions about the form of the input program and bases the selection of analyses and optimizations upon them.

- Optimizations may work well on some input programs and not on others
- The compiler usually provides choices of strategy
- A variety of optimization flags can be used by a smart application developer to get a custom strategy

Optimization strategies are strongly heuristic


Role of IR

Analyses gather information on the program represented by the IR

- Information not available in the IR cannot be exploited
- E.g. some IRs represent arrays and loops, others do not

Transformations modify IR, possibly changing what it represents

Optimizing compilers use several different IRs

Using IR

Source:

    int a, b, c, d ;
    c = a + b ;
    d = c + 1 ;

Straightforward translation:

    ldw a,r1
    ldw b,r2
    add r1,r2,r3
    stw r3,c
    ldw c,r3
    add r3,1,r4
    stw r4,d

After optimization:

    add r1,r2,r3
    add r3,1,r4
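One small step of this improvement, dropping the reload of c right after it was stored, can be sketched as a pass over the low-level IR. The tuple encoding of the instructions and the name `remove_redundant_loads` are assumptions for illustration, and the sketch assumes the named variables do not alias each other. It does not attempt the further step of keeping all variables in registers.

```python
def remove_redundant_loads(code):
    """Drop a load that re-reads a value the register already holds.

    Instructions are tuples: ("ldw", var, reg), ("stw", reg, var) or
    ("add", src1, src2, dest_reg), mirroring the listing above.
    Assumes no aliasing between the named variables.
    """
    holds = {}      # register -> variable whose current value it contains
    result = []
    for ins in code:
        op = ins[0]
        if op == "ldw":
            _, var, reg = ins
            if holds.get(reg) == var:
                continue                    # reg already contains var
            holds[reg] = var
        elif op == "stw":
            _, reg, var = ins
            # Other registers that cached var are now stale.
            holds = {r: v for r, v in holds.items() if v != var}
            holds[reg] = var                # reg and var now agree
        else:
            holds.pop(ins[-1], None)        # dest register gets a new value
        result.append(ins)
    return result


program = [
    ("ldw", "a", "r1"),
    ("ldw", "b", "r2"),
    ("add", "r1", "r2", "r3"),
    ("stw", "r3", "c"),
    ("ldw", "c", "r3"),
    ("add", "r3", 1, "r4"),
    ("stw", "r4", "d"),
]
optimized = remove_redundant_loads(program)
```

The pass can only do this because the IR exposes loads, stores, and registers explicitly, which is the point about IRs made above.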


Role of User In Optimization

Most compilers allow the user to decide what optimizations are carried out.

- This usually includes some optimization levels: O0, O1, O2, O3, ...
- It may also include flags or options that specify the application of specific optimizations
- Flags are compiler-specific; there are no standards

Example: Sun Compiler

The compiler supports the -xOn option to specify the level of optimization you wish to apply. Each level includes the lower optimization levels. Choices for n are:

- n=1: Basic block optimization
- n=2: Global optimization
- n=3: Loop unrolling and modulo scheduling
- n=4: Intra-file inlining and pointer tracking
- n=5: Aggressive optimizations


Example, ctd: Minimal Effort Flags

One gets good performance out of SUN compilers by just using 3 options on the compile and link line. There may be other macros.

For UltraSparc-II:

    -fast -xtarget=ultra2 -xarch=v8plusa
    -fast -xtarget=ultra2 -xarch=v9a

For UltraSparc-III:

    -fast -xtarget=ultra3 -xarch=v8plusb
    -fast -xtarget=ultra3 -xarch=v9b

Example, ctd.: The -fast macro

It tries to give optimal performance with one single option. It expands to:

- Both languages: -xtarget=native, -xO5, -xlibmopt, -fns, -fsimple=2, -dalign, -xlibmil
- Fortran: -xdepend, -xvector=yes, -xprefetch=yes, -ftrap=common, -pad=local, -f (align common)
- C: -fsingle, -ftrap=%none, -xbuiltin=%all, -xalias_level=basic


Example, ctd.: Use of Flags

- The SUN compilers use a "rightmost wins" rule: in case of conflicting options, the option furthest to the right on the compile line wins (this usually happens if compiler macros are used)
- When in doubt, use the -v option to see the macro expansions
- You can also use the -dryrun option to see the macro expansion

Role of User In Optimization

If a program does not perform well on a given machine, it can be hard to understand why. Factors:

- The way the program was written
- Features of the compiler
- The compiler flags used
- The hardware

Each compiler is different!


Goals of Optimization

Memory hierarchy inefficiencies lead to a very high stall rate in current machines: the CPU spends ca. 60% of total execution time waiting for data.

- Early on in compilation, reorganize execution of loops to get a better cache hit rate
- Later, examine simplified code and try to reduce the number of variables and the work of computing array addresses
- At the end of compilation, put heavily used variables into registers

Applying Transformations

Order of application of transformations may influence outcome. Some transformations may be applied with the purpose of enabling other transformations.

We may deliberately degrade performance in an enabling transformation if we expect it will subsequently lead to a dramatic improvement.


Selecting Transformations

Compiler developer must decide whether to implement a known transformation:

- Is it essential for the optimization goals?
- If not, how frequently is it likely to be used to modify code?
- Does it simplify the implementation of some essential part of the optimization process?
- How hard is it to implement?
- How time-consuming is its execution?
- How often will this lead to a program improvement?
- How great is that improvement likely to be?

Summary: Optimizations

Optimization is not a single process or procedure.

Rather it is a collection of strategies for program improvement that may be applied at various stages during compilation. One compiler may implement a number of distinct strategies that may be used according to the needs of the user and the kind of application.

Compilers are rather more complex than introductory courses suggest.


Putting the Pieces Together

Optimizations in the back end or in the middle end of a modern compiler are usually performed in a series of phases. Some of them are executed multiple times. In a real-world compiler, this can be pretty complex. We look at some examples.

Modern Restructuring Compiler

[Diagram: Source Code -> Front End -> HL AST -> Restructure -> HL AST -> IR Gen -> IR -> Opt + Back End -> Machine code; errors are reported along the way.]

Modern restructuring transformations:

- Full and partial inlining of routines
- Blocking for memory hierarchy and register reuse
- Vectorization
- Parallelization

All based on dependence analysis.


Classic Compilers

1957: The FORTRAN Automatic Coding System

[Diagram: Front End -> Index Optimiz'n -> Code Merge (bookkeeping) -> Flow Analysis -> Register Allocation -> Final Assembly, grouped into Front End, Middle End, and Back End.]

- Six passes in a fixed order
- Generated good code:
  - Assumed unlimited index registers
  - Moved code out of loops, with ifs and gotos
  - Did flow analysis and register allocation

Classic Compilers

1969: IBM's FORTRAN H Compiler

[Diagram: Scan & Parse -> Build CFG & DOM -> Find Busy Vars -> CSE -> Loop Inv Code Mot'n -> Copy Elim. -> OSR -> Re-assoc (consts) -> Reg. Alloc. -> Final Assy., grouped into Front End, Middle End, and Back End.]

- Used low-level IR (quads), identified loops with dominators
- Focused on optimizing loops (inside out order)
- Fairly modern set of passes
- Simple front end, simple back end for IBM 370


Classic Compilers

1980: IBM's PL.8 Compiler

[Diagram: Front End -> Middle End -> Back End.]

Dead code elimination, code motion, constant folding, strength reduction, value numbering, dead store elimination, code straightening, algebraic re-association

Classic Compilers

1986: HP's PA-RISC Compiler

[Diagram: Front End -> Middle End -> Back End.]

- Several front ends, an optimizer, a back end
- Four fixed-order choices for optimization (9 passes)
- Coloring allocator, instruction scheduler, peephole optimizer


Modern Compilers

The SGI Pro64 Compiler (now Open64)

[Diagram: Fortran, C, and C++ front ends -> Interpr. Anal. & Optim'n -> Loop Nest Optim'n -> Global Optim'n -> Code Gen., grouped into Front End, Middle End, and Back End.]

- Open source optimizing compiler for IA-64
- Multiple front ends, 1 back end
- Five levels of IR
- Gradual lowering of abstraction level

Interprocedural analysis:

- Inlining (user and library code)
- Cloning (for constants and locality)
- Dead function, dead variable elimination


Loop nest optimizations:

- Dependence analysis
- Loop transformations: interchange, fission, fusion, peeling, tiling, unroll and jam
- Parallelization

Global analysis:

- Intraprocedural data flow analysis (using SSA form)
- Constant propagation


Code generation:

- If conversion and predication
- Code motion
- Instruction scheduling, register allocation
- Peephole optimizations
