
Digital Communication - Lecture 2 - One-Dimensional Detection

Uploaded by Ron


I. Summary of last lecture

A communication system can be modeled by a block diagram

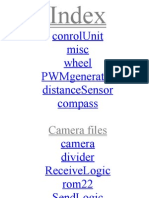

Figure 1: Block diagram of communication system

We discussed three problems:

The Communication Problem:

What is the message?

The Radar Problem:

Is there a target, yes or no?

The Estimation Problem:

What is the value of an unknown parameter?

The Communication Problem

A digital communication system transmits information by sending ones and zeros, where

"one" is sent as $H_1$

"zero" is sent as $H_0$

The a priori probabilities of transmission are known:

$$P(\text{one was transmitted}) = P(H_1) = p_1$$

$$P(\text{zero was transmitted}) = P(H_0) = p_0 = 1 - p_1$$

Given $P(H_1)$, $P(H_0)$, and $P(\text{received signal} \mid H_i)$, $i = 0, 1$:

Design an optimal receiver in the sense of minimum probability of error.

The Communication Problem

Cost function:

$$P_e = P(H_1)\,P(\text{error} \mid H_1) + P(H_0)\,P(\text{error} \mid H_0)$$

$P_e$ is the probability of error.

The communication problem:

Given $P(H_1)$, $P(H_0)$, and $P(\text{received signal} \mid H_i)$, $i = 0, 1$:

Design an optimal receiver in the sense of minimum probability of error.

A simple model of a Communication System

$$r(t) = x(t) * h(t) + n(t), \qquad x(t) = \sum_i s_i(t - iT)$$

$$\mathbf{s} = (s_1, s_2, \ldots, s_i, \ldots)$$

Simple Case:

The Binary Channel, the decision rule

The input takes values in the set $A = \{0, 1\}$, with $P(a=0) = q$ and $P(a=1) = 1-q$.

The output takes values in the set $B = \{x, y\}$.

The transition probabilities of the channel are $1-p$ and $p$.

What is the decision rule that minimizes the probability of error?

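The Binary Channel, the decision rule

$D(x)$ is the decision made when $x$ was received.

If $D(x) = 0$, an error occurs when 1 was sent: $P(e, x) = (1-q)\,p$

If $D(x) = 1$, an error occurs when 0 was sent: $P(e, x) = q\,(1-p)$

The slide writes these quantities as $P(e \mid x)$. They are joint probabilities, which differ from the conditional ones only by the common factor $1/P(x)$, so the comparison between the two decisions is unaffected.

What is the decision rule that minimizes the probability of error?

[Figure: binary channel diagram. Input 0 (probability $q$) goes to $x$ with probability $1-p$ and to $y$ with probability $p$; input 1 (probability $1-q$) goes to $y$ with probability $1-p$ and to $x$ with probability $p$. The decision $D(x) = 0$ is marked.]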

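How to make the decision

Let us minimize the probability of error when $x$ was received:

$$(1-q)\,p \;\underset{D(x)=0}{\overset{D(x)=1}{\gtrless}}\; q\,(1-p)$$

or

$$\frac{P(r = x \mid 1)}{P(r = x \mid 0)} = \frac{p}{1-p} \;\underset{D(x)=0}{\overset{D(x)=1}{\gtrless}}\; \frac{q}{1-q}$$

The right-hand side, $q/(1-q)$, is the ratio of the a priori probabilities.

[Figure: binary channel diagram with a priori probabilities $q$ and $1-q$; the decision $D(x) = 0$ is marked.]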

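The decision rule for y

The same type of decision rule applies when $y$ was received:

$$(1-q)(1-p) \;\underset{D(y)=0}{\overset{D(y)=1}{\gtrless}}\; q\,p$$

or

$$\frac{P(r = y \mid 1)}{P(r = y \mid 0)} = \frac{1-p}{p} \;\underset{D(y)=0}{\overset{D(y)=1}{\gtrless}}\; \frac{q}{1-q}$$

[Figure: binary channel diagram with a priori probabilities $q$ and $1-q$; the decision $D(y) = 1$ is marked.]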

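The Probability of Error

The error probability depends on the decision rule. Note:

$$P_e = \sum_{k=0}^{1} \big[\, p(x, k)\, W(D(x), k) + p(y, k)\, W(D(y), k) \,\big]$$

$$= p_0 \big[\, p(x \mid 0)\, W(D(x), 0) + p(y \mid 0)\, W(D(y), 0) \,\big] + p_1 \big[\, p(x \mid 1)\, W(D(x), 1) + p(y \mid 1)\, W(D(y), 1) \,\big]$$

$$= \big[\, p_0\, p(x \mid 0)\, W(D(x), 0) + p_1\, p(x \mid 1)\, W(D(x), 1) \,\big] + \big[\, p_0\, p(y \mid 0)\, W(D(y), 0) + p_1\, p(y \mid 1)\, W(D(y), 1) \,\big]$$

where $p_k = P(a = k)$, so that $p_0 = q$ and $p_1 = 1-q$, and

$$W(a, b) = \begin{cases} 0, & a = b \\ 1, & a \neq b \end{cases}$$

The last line groups the terms by received value, so $D(x)$ and $D(y)$ can be chosen independently of each other.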
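The sum above can be checked numerically by enumerating all four decision rules. The following is a minimal Python sketch, not part of the lecture; the function names `error_probability` and `best_rule` are hypothetical, and the channel is assumed to be the symmetric one described earlier (0 goes to x and 1 goes to y with probability 1-p).

```python
from itertools import product


def error_probability(q, p, d_x, d_y):
    """P_e of the binary channel with P(a=0)=q and crossover probability p.

    d_x and d_y are the decisions (0 or 1) taken when x or y is received.
    """
    prior = {0: q, 1: 1.0 - q}
    # Assumed transition probabilities p(output | input).
    trans = {("x", 0): 1.0 - p, ("y", 0): p,
             ("x", 1): p, ("y", 1): 1.0 - p}
    decision = {"x": d_x, "y": d_y}
    pe = 0.0
    for (out, k), p_out_given_k in trans.items():
        w = 0 if decision[out] == k else 1  # W(a, b): 0 if a == b, else 1
        pe += prior[k] * p_out_given_k * w
    return pe


def best_rule(q, p):
    """Return (P_e, D(x), D(y)) for the rule with the smallest P_e."""
    return min((error_probability(q, p, dx, dy), dx, dy)
               for dx, dy in product((0, 1), repeat=2))


if __name__ == "__main__":
    for q in (0.5, 0.95):
        print(q, best_rule(q, 0.1))
```

With a strongly biased source (q close to 1) the best rule can ignore the channel output and always decide 0, which is what the ratio test against q/(1-q) predicts.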

1-D Detection Problem / Binary Case

Given a communication system with the following two hypotheses:

$$H_0: \; r = s_0 + n$$

$$H_1: \; r = s_1 + n$$

$s_i$, $i = 0, 1$, are known constants which are transmitted with a priori probabilities $p_i$, $i = 0, 1$:

$$P(\text{one was transmitted}) = P(H_1) = p_1$$

$$P(\text{zero was transmitted}) = P(H_0) = p_0 = 1 - p_1$$

$n$ is a discrete or continuous random variable with a known probability density function:

$$P(x < n < x + dx) = f_n(x)\, dx$$

[Figure: additive channel; the noise $n$ is added to the symbol $s_i$ to produce the received value $r$.]

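MATLAB code

% Parameters.
Delay = 3; DataL = 20; R = .5; Fs = 8; Fd = 1; PropD = 0;

% Generate random data: antipodal symbols of amplitude 0.75.
x = .75*sign(randsrc(DataL, 1, [], 1245));

% Symbol times 0, 1/Fd, 2/Fd, ...
tx = [PropD: PropD + DataL - 1] ./ Fd;

% Plot data.
figure
stem(tx, x, 'kx');

% Set axes and labels after plotting, so that stem does not reset the limits.
axis([0 30 -1.6 1.6]); xlabel('Time'); ylabel('Amplitude');

% Noise samples. nx is not defined on the original slide; uniform samples on
% [0, 1] are assumed here because the next line subtracts 1/2 to center them.
nx = rand(DataL, 1);

% Received signal: data plus zero-mean uniform noise.
y = x + (nx - 1/2);

% Plot the noise samples.
figure
stem(tx, nx, 'kx');
axis([0 30 -1.6 1.6]); xlabel('Time'); ylabel('Amplitude');

% Plot the received signal.
figure
stem(tx, y, 'kx');
axis([0 30 -1.6 1.6]); xlabel('Time'); ylabel('Amplitude');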

Example

[Figure: example plot; vertical axis: Amplitude.]

Objective of the Decision Problem

Cost function:

$$P_e = P(H_1)\,P(\text{error} \mid H_1) + P(H_0)\,P(\text{error} \mid H_0)$$

$P_e$ is the probability of error.

Given $P(H_1)$, $P(H_0)$, and $P(\text{received signal} \mid H_i)$, $i = 0, 1$:

Design an optimal receiver in the sense of minimum probability of error.

Bayes Decision Rule

The channel maps the message set $A = \{s_0, s_1\}$ to a received signal $r$.

For each value of $r$, the receiver has to decide either $H_0$ or $H_1$.

Let us denote by $D(r) = H_0$ the event that the receiver decides $H_0$ when $r$ was received, and define the decision regions

$$A_0(r) = \{\, r : D(r) = H_0,\; r \in \mathbb{R} \,\}$$

$$A_1(r) = \{\, r : D(r) = H_1,\; r \in \mathbb{R} \,\}$$

[Figure: the $r$ axis partitioned into the regions $A_0(r)$ and $A_1(r)$.]

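The Subsets

The subsets $A_1(r)$ and $A_0(r)$ satisfy

1. $A_1(r) \cap A_0(r) = \emptyset$

We must make a decision, either 0 or 1, never both.

2. $A_1(r) \cup A_0(r) = \mathbb{R}$ (the whole observation space)

For each value of $r$ we have to make a decision.

[Figure: the $r$ axis partitioned into the regions $A_0(r)$ and $A_1(r)$.]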

How to make the decision?

Find the optimal receiver (in the sense of minimum probability of error), which makes a decision based on the observation of $r$ such that $P_e$ is minimal:

$$P_e = P(H_0)\,P(H_1 \text{ was decided} \mid H_0 \text{ sent}) + P(H_1)\,P(H_0 \text{ was decided} \mid H_1 \text{ sent})$$

$$P_e = p_0\,P(r \in A_1(r) \mid H_0 \text{ sent}) + p_1\,P(r \in A_0(r) \mid H_1 \text{ sent})$$

and

$$P_c = 1 - P_e$$

[Figure: the $r$ axis partitioned into the regions $A_0(r)$ and $A_1(r)$.]

The General Decision Problem

We have the following relations, where $P(H_i \mid H_j)$ is the probability of deciding $H_i$ when $H_j$ was sent:

$$P(H_0 \mid H_0) = \int_{A_0(r)} f(r \mid H_0)\, dr = P(r \in A_0(r) \mid H_0)$$

$$P(H_1 \mid H_0) = \int_{A_1(r)} f(r \mid H_0)\, dr = 1 - P(H_0 \mid H_0) = P(r \in A_1(r) \mid H_0)$$

$$P(H_1 \mid H_1) = \int_{A_1(r)} f(r \mid H_1)\, dr = P(r \in A_1(r) \mid H_1)$$

$$P(H_0 \mid H_1) = \int_{A_0(r)} f(r \mid H_1)\, dr = 1 - P(H_1 \mid H_1) = P(r \in A_0(r) \mid H_1)$$

[Figure: the $r$ axis partitioned into the regions $A_0(r)$ and $A_1(r)$.]

The probability of correct decision

$$P_c = P(H_0)\,P(H_0 \mid H_0) + P(H_1)\,P(H_1 \mid H_1)$$

$$P_c = P(H_0) \int_{A_0(r)} f(r \mid H_0)\, dr + P(H_1) \int_{A_1(r)} f(r \mid H_1)\, dr$$

We need to maximize it!

[Figure: the $r$ axis partitioned into the regions $A_0(r)$ and $A_1(r)$.]

The Optimal Decision

Now we must choose the decision regions for $H_0$ and $H_1$ in such a manner that the probability of a correct decision is maximized.

Let us define the function

$$G(r) = \begin{cases} 1, & r \in A_0(r) \\ 0, & r \in A_1(r) \end{cases}$$

Thus

$$P_c = P(H_0)\,P(H_0 \mid H_0) + P(H_1)\,P(H_1 \mid H_1)$$

$$P_c = P(H_0) \int_{\mathbb{R}} G(r)\, f(r \mid H_0)\, dr + P(H_1) \int_{\mathbb{R}} \big(1 - G(r)\big)\, f(r \mid H_1)\, dr$$

[Figure: the $r$ axis with $G(r) = 1$ on $A_0(r)$ and $G(r) = 0$ on $A_1(r)$.]

The Result

Now we can write everything under the same integral:

$$P_c = P(H_0) \int_{\mathbb{R}} G(r)\, f(r \mid H_0)\, dr + P(H_1) \int_{\mathbb{R}} \big(1 - G(r)\big)\, f(r \mid H_1)\, dr$$

$$P_c = \int_{\mathbb{R}} \Big[ P(H_0)\, G(r)\, f(r \mid H_0) + P(H_1)\, \big(1 - G(r)\big)\, f(r \mid H_1) \Big]\, dr$$

The probability of a correct decision is maximized if the integrand is maximized for every $r$. Therefore, the decision rule is:

$$A_0(r) = \{\, r : P(H_0)\, f(r \mid H_0) > P(H_1)\, f(r \mid H_1) \,\}$$

$$A_1(r) = \{\, r : P(H_0)\, f(r \mid H_0) \le P(H_1)\, f(r \mid H_1) \,\}$$

[Figure: the $r$ axis with $G(r) = 1$ on $A_0(r)$ and $G(r) = 0$ on $A_1(r)$.]

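Likelihood Ratio Test

Alternatively, we may write the decision rule as

$$\frac{f(r \mid H_1)}{f(r \mid H_0)} \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \frac{P(H_0)}{P(H_1)}$$

This is called a Bayes test.

The quantity on the left is called the likelihood ratio and is denoted by

$$\Lambda(r) = \frac{f(r \mid H_1)}{f(r \mid H_0)}$$

The quantity on the right is the threshold

$$\eta = \frac{P(H_0)}{P(H_1)}$$

An equivalent test is (why?)

$$\tilde{\Lambda}(r) = \log_e \Lambda(r) \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \log_e \eta$$

It is equivalent because the logarithm is a strictly increasing function, so it preserves the direction of the inequality.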
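As an illustration of why the two forms of the test agree, here is a minimal Python sketch that compares the direct comparison of $P(H_i) f(r \mid H_i)$ with the log-likelihood-ratio test. It is not from the lecture: the function names and the Gaussian densities used in the demo are assumptions chosen only for illustration, and ties are assigned to $H_1$.

```python
import math


def gaussian_pdf(mean, sigma):
    """Return the density function of N(mean, sigma^2) (illustrative choice)."""
    def pdf(r):
        norm = math.sqrt(2.0 * math.pi * sigma ** 2)
        return math.exp(-(r - mean) ** 2 / (2.0 * sigma ** 2)) / norm
    return pdf


def map_decision(r, f0, f1, p0, p1):
    """Decide H1 (return 1) when P(H1) f(r|H1) >= P(H0) f(r|H0), else H0."""
    return 1 if p1 * f1(r) >= p0 * f0(r) else 0


def lrt_decision(r, f0, f1, p0, p1):
    """Same decision from the log-likelihood ratio and log(P(H0)/P(H1))."""
    log_lr = math.log(f1(r)) - math.log(f0(r))
    return 1 if log_lr >= math.log(p0 / p1) else 0


if __name__ == "__main__":
    f0, f1 = gaussian_pdf(-1.0, 1.0), gaussian_pdf(1.0, 1.0)
    for r in (-1.0, 0.0, 0.5, 1.0):
        print(r, map_decision(r, f0, f1, 0.7, 0.3),
              lrt_decision(r, f0, f1, 0.7, 0.3))
```

Both functions take the densities as callables, so the same check works for any pair of conditional densities that are strictly positive at the tested points.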

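The optimal receiver

The optimal receiver can be implemented as follows:

$$\tilde{\Lambda}(r) = \log_e \Lambda(r) \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \log_e \frac{P(H_0)}{P(H_1)}$$

and the probability of error is given by

$$P_e = P(H_0) \int_{\{r:\, \tilde{\Lambda}(r) > \log_e \frac{P(H_0)}{P(H_1)}\}} f(r \mid H_0)\, dr + P(H_1) \int_{\{r:\, \tilde{\Lambda}(r) < \log_e \frac{P(H_0)}{P(H_1)}\}} f(r \mid H_1)\, dr$$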

Comments

The optimal decision rule is a function of the a priori probabilities of the source and of

the conditional probabilities, for discrete outputs

the conditional density functions, when the output is a continuous random variable

We did not use the assumption that the channel is additive; we actually gave the solution for the more general case

$$r = g(s_i, n), \quad i = 0, 1$$

Example 1: Additive Gaussian random noise

Assumptions:

Source:

$$H_1: \; s_1 = s, \quad P(H_1) = 1 - p$$

$$H_0: \; s_0 = -s, \quad P(H_0) = p$$

Channel:

$$H_1: \; r = s + n$$

$$H_0: \; r = -s + n$$

Noise: $n$ is a Gaussian random variable independent of the source, i.e., $n \sim N(0, \sigma^2)$:

$$f_n(n) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{n^2}{2\sigma^2}\right)$$

Note that

$$f(r \mid H_1) = f(r = s + n \mid H_1) = f_n(n = r - s) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(r-s)^2}{2\sigma^2}}$$

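The optimal receiver

$$\tilde{\Lambda}(r) = \log_e \Lambda(r) = \log_e \frac{\exp\!\left(-\frac{(r-s)^2}{2\sigma^2}\right)}{\exp\!\left(-\frac{(r+s)^2}{2\sigma^2}\right)}$$

$$\tilde{\Lambda}(r) = -\frac{(r-s)^2}{2\sigma^2} + \frac{(r+s)^2}{2\sigma^2} = \frac{2s}{\sigma^2}\, r$$

$$\tilde{\Lambda}(r) = \log_e \Lambda(r) \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \log_e \eta, \qquad \eta = \frac{P(H_0)}{P(H_1)} = \frac{p}{1-p}$$

For $s > 0$, the decision rule can also be written as

$$r \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \frac{\sigma^2}{2s} \log_e \frac{p}{1-p}$$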
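For the Gaussian example with symbols $-s$ (under $H_0$, probability $p$) and $+s$ (under $H_1$), the receiver reduces to comparing $r$ with the threshold $T = \frac{\sigma^2}{2s}\log_e\frac{p}{1-p}$. The Python sketch below is an illustrative assumption, not the lecture's code; `gaussian_threshold` and `decide` are hypothetical names, and $s > 0$ and $0 < p < 1$ are assumed.

```python
import math


def gaussian_threshold(s, sigma, p):
    """T = sigma^2 / (2 s) * ln(p / (1 - p)), where p = P(H0) and s > 0."""
    return sigma ** 2 / (2.0 * s) * math.log(p / (1.0 - p))


def decide(r, s, sigma, p):
    """Return 1 (decide H1, symbol +s) if r exceeds the threshold, else 0."""
    return 1 if r > gaussian_threshold(s, sigma, p) else 0


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        print(p, gaussian_threshold(1.0, 1.0, p))
```

The threshold is zero for equal priors and moves toward the less likely symbol as the priors become unequal, so a larger share of the $r$ axis is assigned to the more probable hypothesis.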

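The decision region for p=1/2

[Figure: the conditional densities $f_{r \mid H_0}(r)$ and $f_{r \mid H_1}(r)$, centered at $-s$ and $s$, with the decision threshold at 0; the tails on the wrong side of the threshold are $\Pr\{\text{error} \mid H_1\}$ and $\Pr\{\text{error} \mid H_0\}$.]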

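Error Probability $P[e \mid H_0]$

Let us first compute $P[e \mid H_0]$ for the decision rule

$$r \;\underset{H_0}{\overset{H_1}{\gtrless}}\; T = \frac{\sigma^2}{2s} \log_e \frac{p}{1-p}$$

$$P[e \mid H_0] = \int_T^{\infty} f(r \mid H_0)\, dr = \int_T^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{(r+s)^2}{2\sigma^2}\right) dr$$

Change variables: $z = \dfrac{r+s}{\sigma}$, $dz = \dfrac{dr}{\sigma}$

The lower bound is replaced by

$$\frac{T+s}{\sigma} = \frac{\sigma}{2s} \log_e \frac{p}{1-p} + \frac{s}{\sigma}$$

$$P[e \mid H_0] = \int_{\frac{T+s}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} \exp\!\left(-\frac{z^2}{2}\right) dz = Q\!\left(\frac{T+s}{\sigma}\right)$$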

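Error Probability $P[e \mid H_1]$

Next, let us compute $P[e \mid H_1]$ for the same decision rule

$$r \;\underset{H_0}{\overset{H_1}{\gtrless}}\; T = \frac{\sigma^2}{2s} \log_e \frac{p}{1-p}$$

$$P[e \mid H_1] = \int_{-\infty}^{T} f(r \mid H_1)\, dr = \int_{-\infty}^{T} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{(r-s)^2}{2\sigma^2}\right) dr$$

Change variables: $z = -\dfrac{r-s}{\sigma}$, $dz = -\dfrac{dr}{\sigma}$

The upper bound is replaced by

$$-\frac{T-s}{\sigma} = -\frac{\sigma}{2s} \log_e \frac{p}{1-p} + \frac{s}{\sigma}$$

which becomes the lower limit of the integral over $z$:

$$P[e \mid H_1] = \int_{-\frac{T-s}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} \exp\!\left(-\frac{z^2}{2}\right) dz = Q\!\left(-\frac{T-s}{\sigma}\right)$$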

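The Total Probability of Error

$$P_e = P(H_0)\,P(e \mid H_0) + P(H_1)\,P(e \mid H_1)$$

$$P_e = P[H_0]\, Q\!\left(\frac{T+s}{\sigma}\right) + P[H_1]\, Q\!\left(-\frac{T-s}{\sigma}\right)$$

where the decision rule is

$$r \;\underset{H_0}{\overset{H_1}{\gtrless}}\; T = \frac{\sigma^2}{2s} \log_e \frac{p}{1-p}$$

Substituting $T$:

$$P_e = P[H_0]\, Q\!\left(\frac{s}{\sigma} + \frac{\sigma}{2s} \log_e \frac{p}{1-p}\right) + P[H_1]\, Q\!\left(\frac{s}{\sigma} - \frac{\sigma}{2s} \log_e \frac{p}{1-p}\right)$$

Let us denote by $d = 2s$ the Euclidean distance between the two symbols. Then

$$P_e = p\, Q\!\left(\frac{d}{2\sigma} + \frac{\sigma}{d} \log_e \frac{p}{1-p}\right) + (1-p)\, Q\!\left(\frac{d}{2\sigma} - \frac{\sigma}{d} \log_e \frac{p}{1-p}\right)$$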
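The total error probability $P_e = p\,Q\!\left(\frac{d}{2\sigma} + \frac{\sigma}{d}\log_e\frac{p}{1-p}\right) + (1-p)\,Q\!\left(\frac{d}{2\sigma} - \frac{\sigma}{d}\log_e\frac{p}{1-p}\right)$ can be evaluated directly. The following Python sketch is an illustration under the assumption that $Q(x) = \frac{1}{2}\,\mathrm{erfc}(x/\sqrt{2})$ is computed with the standard-library `math.erfc`; the function names are hypothetical.

```python
import math


def q_func(x):
    """Gaussian tail probability Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def error_probability(d, sigma, p):
    """Total P_e for antipodal symbols at distance d, with p = P(H0)."""
    shift = (sigma / d) * math.log(p / (1.0 - p))
    half = d / (2.0 * sigma)
    return p * q_func(half + shift) + (1.0 - p) * q_func(half - shift)


if __name__ == "__main__":
    for p in (0.5, 0.7, 0.9):
        print(p, error_probability(2.0, 1.0, p))
```

For equal priors the logarithm vanishes and the expression collapses to $Q(d/(2\sigma))$; for unequal priors the result never exceeds $\min(p, 1-p)$, the error of a receiver that ignores $r$ and always decides the more likely hypothesis.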

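Conclusions (I)

For $p = 1/2$:

$$P_e = Q\!\left(\frac{d}{2\sigma}\right)$$

When $p > 1/2$ the decision line moves to the right; when $p < 1/2$ it moves to the left.

[Figure: the conditional densities $f_{r \mid H_0}(r)$ and $f_{r \mid H_1}(r)$ with the decision threshold at 0 for $p = 1/2$; the threshold positions for $p > 1/2$ and $p < 1/2$ are marked.]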

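Conclusions (II)

The error probability depends on the distance between the two symbols and not on the actual values of the symbols.

$$H_0: \; r = s_0 + n \quad \Longleftrightarrow \quad H_0: \; r = -A + n$$

$$H_1: \; r = s_1 + n \quad \Longleftrightarrow \quad H_1: \; r = A + n$$

for

$$A = \frac{s_1 - s_0}{2}$$

The two problems are equivalent for $d = 2A$: subtracting the known midpoint $(s_0 + s_1)/2$ from $r$ turns the first problem into the second without changing the noise.

[Figure: the conditional densities $f_{r \mid H_0}(r)$ and $f_{r \mid H_1}(r)$.]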

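On the Q(x) function

$Q(x)$ is called the Q function or Gaussian integral function:

$$\Pr[n > x] = \int_x^{\infty} \frac{1}{\sqrt{2\pi}} \exp\!\left(-\frac{z^2}{2}\right) dz = Q(x), \qquad n \sim N(0, 1)$$

$Q(x)$ is related to the complementary error function $\mathrm{Erfc}(x)$ as follows:

$$\mathrm{Erfc}(x) = \frac{2}{\sqrt{\pi}} \int_x^{\infty} \exp(-z^2)\, dz$$

$$Q(x) = \frac{1}{2}\, \mathrm{Erfc}\!\left(\frac{x}{\sqrt{2}}\right)$$

There are several well-known bounds (*) on $Q(x)$, valid for $x > 0$:

$$Q(x) \le \min\!\left(\frac{1}{2},\; \frac{1}{x\sqrt{2\pi}}\right) \exp\!\left(-\frac{x^2}{2}\right)$$

$$Q(x) \ge \frac{1}{x\sqrt{2\pi}} \left(1 - \frac{1}{x^2}\right) \exp\!\left(-\frac{x^2}{2}\right)$$

(*) P. O. Borjesson and Carl-Erik W. Sundberg, "Simple Approximations of the Error Function Q(x) for Communications Applications," IEEE Trans. Commun., vol. COM-27, no. 3, pp. 639-642, March 1979.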
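The relation $Q(x) = \frac{1}{2}\,\mathrm{Erfc}(x/\sqrt{2})$ and the bounds $\min\!\left(\frac{1}{2}, \frac{1}{x\sqrt{2\pi}}\right)e^{-x^2/2}$ (upper) and $\frac{1}{x\sqrt{2\pi}}\left(1 - \frac{1}{x^2}\right)e^{-x^2/2}$ (lower) are easy to check numerically. This is a minimal Python sketch using the standard-library `math.erfc`; the function names are hypothetical and $x > 0$ is assumed for the bounds.

```python
import math


def q_func(x):
    """Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def q_upper(x):
    """Upper bound min(1/2, 1/(x sqrt(2 pi))) * exp(-x^2 / 2), for x > 0."""
    return min(0.5, 1.0 / (x * math.sqrt(2.0 * math.pi))) * math.exp(-x * x / 2.0)


def q_lower(x):
    """Lower bound (1 - 1/x^2) / (x sqrt(2 pi)) * exp(-x^2 / 2), for x > 0."""
    scale = 1.0 / (x * math.sqrt(2.0 * math.pi))
    return scale * (1.0 - 1.0 / (x * x)) * math.exp(-x * x / 2.0)


if __name__ == "__main__":
    for x in (0.5, 1.0, 2.0, 3.0, 5.0):
        print(x, q_lower(x), q_func(x), q_upper(x))
```

The lower bound is negative (and therefore uninformative) for $x < 1$, while both bounds become tight as $x$ grows.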

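The error probability function Q(x)

[Figure: plot of $Q(x) = \frac{1}{\sqrt{2\pi}} \int_x^{\infty} e^{-z^2/2}\, dz$ together with the bounds $\frac{1}{x\sqrt{2\pi}}\, e^{-x^2/2}$ and $\frac{1}{2}\, e^{-x^2/2}$.]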

Example 2: The Poisson Channel

The Poisson distribution of events is frequently used as a model of shot noise and other diverse phenomena.

We have to design a light detector using a photon counter with the following probability law:

$$\Pr[n \text{ pulses}] = \frac{\lambda^n}{n!}\, e^{-\lambda}; \qquad E(n) = \lambda$$

$$H_0: \; f(n \mid H_0) = \frac{\lambda_0^n}{n!}\, e^{-\lambda_0}$$

$$H_1: \; f(n \mid H_1) = \frac{\lambda_1^n}{n!}\, e^{-\lambda_1}$$

We observe only this number, which ranges from 0 to $\infty$ and obeys a Poisson distribution under both hypotheses. The a priori probabilities are known:

$$\lambda_1 > \lambda_0, \qquad P(H_0) = P(H_1) = 1/2$$

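Poisson Case: The Decision rule

Find the likelihood ratio:

$$\Lambda(n) = \frac{f(n \mid H_1)}{f(n \mid H_0)} = \frac{\frac{\lambda_1^n}{n!}\, e^{-\lambda_1}}{\frac{\lambda_0^n}{n!}\, e^{-\lambda_0}} = \left(\frac{\lambda_1}{\lambda_0}\right)^{n} e^{\lambda_0 - \lambda_1}$$

The decision rule:

$$\log_e \Lambda(n) = n \log\!\left(\frac{\lambda_1}{\lambda_0}\right) + \lambda_0 - \lambda_1 \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \log \frac{P(H_0)}{P(H_1)} = 0$$

or

$$n \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \frac{\lambda_1 - \lambda_0}{\log \lambda_1 - \log \lambda_0}$$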

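Poisson Case: The probability of Error

The probability of error is given by

$$P_E = P(H_0) \sum_{n \ge N^*} \frac{\lambda_0^n}{n!}\, e^{-\lambda_0} + P(H_1) \sum_{n < N^*} \frac{\lambda_1^n}{n!}\, e^{-\lambda_1}$$

where $N^*$ is a solution to the equation

$$N^* = \min\!\left( n : \; n > \frac{\lambda_1 - \lambda_0}{\log \lambda_1 - \log \lambda_0} \right)$$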
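The Poisson detector can be sketched in a few lines: compute the threshold $\frac{\lambda_1 - \lambda_0}{\log\lambda_1 - \log\lambda_0}$, take $N^*$ as the smallest integer above it, and sum the two Poisson tails. The Python code below is an illustration, not the lecture's code; it assumes $\lambda_1 > \lambda_0 > 0$ and equal priors, and all function names are hypothetical.

```python
import math


def poisson_pmf(n, lam):
    """Probability of n pulses for a Poisson law with mean lam."""
    return math.exp(-lam) * lam ** n / math.factorial(n)


def poisson_threshold(lam0, lam1):
    """(lam1 - lam0) / (ln lam1 - ln lam0), for lam1 > lam0 > 0."""
    return (lam1 - lam0) / (math.log(lam1) - math.log(lam0))


def n_star(lam0, lam1):
    """Smallest integer strictly greater than the threshold."""
    return math.floor(poisson_threshold(lam0, lam1)) + 1


def error_probability(lam0, lam1):
    """P_E with P(H0) = P(H1) = 1/2: decide H1 when n >= N*."""
    ns = n_star(lam0, lam1)
    miss = sum(poisson_pmf(n, lam1) for n in range(ns))               # H1 sent, n < N*
    false_alarm = 1.0 - sum(poisson_pmf(n, lam0) for n in range(ns))  # H0 sent, n >= N*
    return 0.5 * false_alarm + 0.5 * miss


if __name__ == "__main__":
    print(n_star(2.0, 8.0), error_probability(2.0, 8.0))
```

The infinite sum over $n \ge N^*$ is computed as one minus the finite sum over $n < N^*$, which avoids truncating the tail.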

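Example 3: Random Source

Assumptions:

Channel:

$$H_0: \; r = s_0 + n$$

$$H_1: \; r = s_1 + n$$

Source:

$$H_0: \; s_0 = \begin{cases} a & \text{with probability } 1/2 \\ -a & \text{with probability } 1/2 \end{cases} \qquad P(H_0) = 1/2$$

$$H_1: \; s_1 = \begin{cases} 3a & \text{with probability } 1/2 \\ -3a & \text{with probability } 1/2 \end{cases} \qquad P(H_1) = 1/2$$

Noise: $n$ is a Gaussian random variable independent of the source, i.e., $n \sim N(0, \sigma^2)$:

$$f_n(n) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{n^2}{2\sigma^2}\right)$$

[Figure: the conditional densities of $r$ under the two hypotheses, with peaks at $-3a$, $-a$, $a$ and $3a$.]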

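The optimal receiver

$$\Lambda(r) = \frac{\exp\!\left(-\frac{(r-3a)^2}{2\sigma^2}\right) + \exp\!\left(-\frac{(r+3a)^2}{2\sigma^2}\right)}{\exp\!\left(-\frac{(r-a)^2}{2\sigma^2}\right) + \exp\!\left(-\frac{(r+a)^2}{2\sigma^2}\right)} \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \frac{P(H_0)}{P(H_1)} = 1$$

Expanding the squares and cancelling the common factor $\exp(-r^2 / 2\sigma^2)$:

$$\Lambda(r) = \frac{\left[\exp\!\left(\frac{3ra}{\sigma^2}\right) + \exp\!\left(-\frac{3ra}{\sigma^2}\right)\right] \exp\!\left(-\frac{9a^2}{2\sigma^2}\right)}{\left[\exp\!\left(\frac{ra}{\sigma^2}\right) + \exp\!\left(-\frac{ra}{\sigma^2}\right)\right] \exp\!\left(-\frac{a^2}{2\sigma^2}\right)} \;\underset{H_0}{\overset{H_1}{\gtrless}}\; 1$$

The decision rule can also be written as

$$\frac{\cosh\!\left(\frac{3ra}{\sigma^2}\right)}{\cosh\!\left(\frac{ra}{\sigma^2}\right)} \;\underset{H_0}{\overset{H_1}{\gtrless}}\; e^{\frac{4a^2}{\sigma^2}}$$

For $a \gg \sigma$:

$$|r| \;\underset{H_0}{\overset{H_1}{\gtrless}}\; 2a$$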
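The test $\cosh(3ra/\sigma^2)/\cosh(ra/\sigma^2) \gtrless e^{4a^2/\sigma^2}$ has no closed-form threshold, but the boundary can be located numerically and compared with the large-$a/\sigma$ approximation $|r| \gtrless 2a$. The Python sketch below is an illustrative assumption: it works with $\log\cosh$ to avoid overflow and uses plain bisection; none of the function names come from the lecture.

```python
import math


def log_cosh(x):
    """Numerically stable log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2)."""
    ax = abs(x)
    return ax + math.log1p(math.exp(-2.0 * ax)) - math.log(2.0)


def statistic(r, a, sigma):
    """log of cosh(3ra/sigma^2)/cosh(ra/sigma^2) minus the threshold 4a^2/sigma^2."""
    x = r * a / sigma ** 2
    return log_cosh(3.0 * x) - log_cosh(x) - 4.0 * a ** 2 / sigma ** 2


def decide(r, a, sigma):
    """Return 1 (H1, symbols +-3a) if the statistic is positive, else 0 (H0)."""
    return 1 if statistic(r, a, sigma) > 0.0 else 0


def exact_threshold(a, sigma, iters=200):
    """Positive r at which the statistic changes sign, found by bisection."""
    lo, hi = 0.0, 4.0 * a
    while statistic(hi, a, sigma) <= 0.0:  # widen the bracket if needed
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if statistic(mid, a, sigma) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for a in (0.5, 1.0, 3.0):
        print(a, exact_threshold(a, 1.0), 2.0 * a)
```

Because the statistic is even and increasing in $|r|$, a single positive root describes both boundaries, at $\pm r^*$; the root approaches $2a$ from above as $a/\sigma$ grows.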

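The decision region

[Figure: the conditional densities of $r$ with peaks at $-3a$, $-a$, $a$ and $3a$, showing the decision regions and the areas corresponding to $\Pr\{\text{error} \mid H_1\}$ and $\Pr\{\text{error} \mid H_0\}$.]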

Summary

A solution for the binary decision case:

$$H_0: \; r = g(s_0, n)$$

$$H_1: \; r = g(s_1, n)$$

The optimal receiver:

$$\tilde{\Lambda}(r) \;\underset{H_0}{\overset{H_1}{\gtrless}}\; \log_e \frac{P(H_0)}{P(H_1)}$$

The quantity on the left is called the log-likelihood ratio and is denoted by

$$\tilde{\Lambda}(r) = \log \Lambda(r) = \log \frac{f(r \mid H_1)}{f(r \mid H_0)}$$
