A Closure Looks To Iris Recognition System: Sukhwinder Singh, Ajay Jatav

IOSR Journal of Engineering (IOSRJEN), e-ISSN: 2250-3021, p-ISSN: 2278-8719, Vol. 3, Issue 3 (Mar. 2013), ||V1|| PP 43-49

A closure looks to Iris Recognition system

Sukhwinder Singh, Ajay Jatav

Assistant Professor, PEC Chandigarh; ME student, PEC Chandigarh

Abstract: Iris recognition has become a popular research area in recent years. Because of its reliability and nearly perfect recognition rates, it is used in high-security areas. Applications of iris recognition systems include border control at airports and harbours, access control in laboratories and factories, identification for automatic teller machines (ATMs), and restricted access to police evidence rooms. This paper reviews the major research on iris recognition. An iris recognition system has four main stages: segmentation, normalization, feature extraction and matching. We present a literature review of the most prominent algorithms implemented and, lastly, a comparison of some further iris recognition algorithms.

Keywords: Iris recognition, segmentation, normalization, feature extraction, matching.

I. INTRODUCTION

Biometric authentication has received extensive attention over the past decade, driven by increasing demand for automated personal identification. The most popular biometric features are based on an individual's signature, retina, face, iris, fingerprints, hand and voice. Among these biometric techniques, iris recognition is one of the most promising because of its high reliability for personal identification. The iris is a thin circular diaphragm that lies between the cornea and the lens of the human eye. A front-on view of the iris is shown in Figure 1. The iris is perforated close to its centre by a circular aperture known as the pupil. The function of the iris is to control the amount of light entering through the pupil; this is done by the sphincter and dilator muscles, which adjust the size of the pupil. The average diameter of the iris is 12 mm, and the pupil size can vary from 10% to 80% of the iris diameter.

Figure 1. Front view of the human eye

The iris has unique features and is complex enough to be used as a biometric signature. This means that the probability of finding two people with identical iris patterns is almost zero. Image processing techniques can be employed to extract the unique iris pattern from a digitized image of the eye and encode it into a biometric template, which can be stored in a database. This biometric template contains an objective mathematical representation of the unique information stored in the iris, and allows comparisons to be made between templates. When a subject wishes to be identified by an iris recognition system, their eye is first photographed, and a template is then created for their iris region. This template is compared with the other templates stored in a database until either a matching template is found and the subject is identified, or no match is found and the subject remains unidentified.

John Daugman was the first to implement a working automated iris recognition system [1]. The Daugman system is patented [2] and the rights are now owned by the company Iridian Technologies. Even though the Daugman system is the most successful and best known, many other systems have been developed. The most notable include the systems of Wildes et al. [3][4], Boles and Boashash [5], Lim et al. [6], and Noh et al. [7]. The algorithms by Lim et al. are used in the iris recognition system developed by the Evermedia and Senex companies. The Noh et al. algorithm is used in the IRIS2000 system, sold by IriTech.

www.iosrjen.org


II. DAUGMAN'S WORK

In Daugman's work [1], the visible texture of a person's iris in a real-time video image is encoded into a compact sequence of multi-scale quadrature 2-D Gabor wavelet coefficients, whose most significant bits comprise a 256-byte iris code. The first outcome of this work was a mathematical proof that there are sufficient degrees of freedom, or forms of variation, in the iris among individuals to impart to it the same singularity as a conventional fingerprint. Also uncertain was whether efficient algorithms could be developed to extract a detailed iris description reliably from a live video image, generate a compact code for the iris (of minuscule length compared with the image data size), and render a decision about individual identity with high statistical confidence. The final problem was whether the algorithms involved could be executed in real time on a general-purpose microprocessor. In the course of his work all of these questions were resolved and a working system was described. Daugman's work comprises four main parts: (1) segmentation, (2) normalization, (3) feature extraction and (4) matching.

1.1. Segmentation

Daugman uses an integro-differential operator to locate the circular iris and pupil regions, and also the arcs of the upper and lower eyelids. The integro-differential operator is defined as

$$\max_{(r,\,x_0,\,y_0)} \left| G_\sigma(r) * \frac{\partial}{\partial r} \oint_{r,\,x_0,\,y_0} \frac{I(x,y)}{2\pi r}\, ds \right|$$

where $I(x,y)$ is the eye image, $r$ is the radius to search for, $G_\sigma(r)$ is a Gaussian smoothing function, and $s$ is the contour of the circle given by $(r, x_0, y_0)$. The operator searches for the circular path along which the change in pixel values is greatest, by varying the radius $r$ and the centre position $(x_0, y_0)$ of the circular contour. The operator is applied iteratively, with the amount of smoothing progressively reduced, in order to attain precise localization. Eyelids are localized in a similar manner, with the path of contour integration changed from a circle to an arc.

1.2. Normalization

For normalization, Daugman [1] devised the rubber sheet model, which remaps each point within the iris region to a pair of polar coordinates $(r, \theta)$, where $r$ lies on the interval $[0, 1]$ and $\theta$ is an angle in $[0, 2\pi]$.
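For the segmentation operator of Section 1.1, a minimal pure-Python sketch may help make the search concrete. It is not Daugman's implementation: the function names, the nearest-pixel sampling of the circle, the finite-difference radial derivative and the fixed five-tap Gaussian are all assumptions made for illustration, and the search is a plain exhaustive loop over candidate centres and radii rather than the iterative coarse-to-fine scheme described in the text.

```python
import math

def circle_mean(img, x0, y0, r, samples=64):
    """Approximate the normalised contour integral: mean intensity on a circle."""
    height, width = len(img), len(img[0])
    total, count = 0.0, 0
    for k in range(samples):
        angle = 2.0 * math.pi * k / samples
        x = int(round(x0 + r * math.cos(angle)))
        y = int(round(y0 + r * math.sin(angle)))
        if 0 <= x < width and 0 <= y < height:  # ignore samples off the image
            total += img[y][x]
            count += 1
    return total / count if count else 0.0

def gaussian_kernel(sigma, half_width=2):
    """Normalised 1-D Gaussian weights (hypothetical fixed-width kernel)."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-half_width, half_width + 1)]
    norm = sum(weights)
    return [w / norm for w in weights]

def integro_differential(img, centres, radii, sigma=1.0):
    """Return (x0, y0, r) maximising |G_sigma(r) * d/dr (contour mean)|."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    best, best_score = None, -1.0
    for x0, y0 in centres:
        means = [circle_mean(img, x0, y0, r) for r in radii]
        # Finite-difference approximation of the radial derivative.
        deriv = [means[i + 1] - means[i] for i in range(len(means) - 1)]
        for i in range(len(deriv)):
            smoothed = 0.0
            for k, weight in enumerate(kernel):
                j = min(max(i + k - half, 0), len(deriv) - 1)  # clamp at the ends
                smoothed += weight * deriv[j]
            if abs(smoothed) > best_score:
                best_score = abs(smoothed)
                best = (x0, y0, 0.5 * (radii[i] + radii[i + 1]))
    return best
```

On a synthetic image containing a dark disc on a bright background, the sketch should pick the candidate centre that coincides with the disc and a radius close to the disc boundary, because that is where the circular mean changes most abruptly.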

Figure 2. Daugman's rubber sheet model

The remapping of the iris region from Cartesian coordinates $(x, y)$ to the normalised non-concentric polar representation is modelled as

$$I(x(r,\theta),\, y(r,\theta)) \rightarrow I(r,\theta)$$

with

$$x(r,\theta) = (1 - r)\,x_p(\theta) + r\,x_l(\theta)$$
$$y(r,\theta) = (1 - r)\,y_p(\theta) + r\,y_l(\theta)$$

where $I(x,y)$ is the iris region image, $(x,y)$ are the original Cartesian coordinates, $(r,\theta)$ are the corresponding normalized polar coordinates, and $(x_p, y_p)$ and $(x_l, y_l)$ are the coordinates of the pupil and iris boundaries along the $\theta$ direction. The rubber sheet model takes into account pupil dilation and size inconsistencies in order to produce a normalized representation with constant dimensions. In this way the iris region is modelled as a flexible rubber sheet anchored at the iris boundary, with the pupil centre as the reference point.
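The rubber sheet remapping can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `rubber_sheet`, the circle parameters, the grid resolutions and the nearest-neighbour sampling are assumptions, and each boundary point is taken at angle theta about its own circle centre, which is one simple reading of "along the theta direction".

```python
import math

def rubber_sheet(img, pupil, iris, radial_res=8, angular_res=16):
    """Unwrap the iris annulus into a radial_res x angular_res grid.

    pupil and iris are (centre_x, centre_y, radius) circles; they do not
    have to be concentric. radial_res must be at least 2.
    """
    px, py, pr = pupil
    ix, iy, ir = iris
    height, width = len(img), len(img[0])
    out = []
    for i in range(radial_res):
        r = i / (radial_res - 1)          # r runs from 0 (pupil) to 1 (iris)
        row = []
        for j in range(angular_res):
            theta = 2.0 * math.pi * j / angular_res
            # Boundary points on the pupil and iris circles at angle theta.
            xp, yp = px + pr * math.cos(theta), py + pr * math.sin(theta)
            xl, yl = ix + ir * math.cos(theta), iy + ir * math.sin(theta)
            # Linear interpolation between the two boundaries.
            x = (1.0 - r) * xp + r * xl
            y = (1.0 - r) * yp + r * yl
            col = min(max(int(round(x)), 0), width - 1)   # nearest pixel, clamped
            line = min(max(int(round(y)), 0), height - 1)
            row.append(img[line][col])
        out.append(row)
    return out
```

Whatever the pupil radius, the output grid has the same dimensions, which is the property the normalization stage relies on: a dilated pupil simply stretches the sampled annulus.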


III. FEATURE ENCODING

Daugman uses a 2D version of Gabor filters [1] to encode iris pattern data. A 2D Gabor filter over the image domain $(x, y)$ is represented as

$$G(x,y) = e^{-\pi\left[(x - x_0)^2/\alpha^2 + (y - y_0)^2/\beta^2\right]}\; e^{-2\pi i\left[u_0 (x - x_0) + v_0 (y - y_0)\right]}$$

where $(x_0, y_0)$ specify the position in the image, $(\alpha, \beta)$ specify the effective width and length, and $(u_0, v_0)$ specify the modulation, which has spatial frequency $\omega_0 = \sqrt{u_0^2 + v_0^2}$. The odd-symmetric and even-symmetric 2D Gabor filters are shown in Figure 3.
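As a small illustration of the 2D Gabor filter, the sketch below evaluates a Gaussian envelope multiplied by a complex exponential carrier at a single point. The function names and all default parameter values are hypothetical, chosen only so that the example runs; they are not taken from Daugman's system.

```python
import cmath
import math

def gabor_2d(x, y, x0=0.0, y0=0.0, alpha=4.0, beta=4.0, u0=0.1, v0=0.0):
    """Complex value of a 2D Gabor filter at (x, y)."""
    # Gaussian envelope with effective width alpha and length beta.
    envelope = math.exp(-math.pi * ((x - x0) ** 2 / alpha ** 2
                                    + (y - y0) ** 2 / beta ** 2))
    # Complex sinusoidal carrier with modulation (u0, v0).
    carrier = cmath.exp(-2j * math.pi * (u0 * (x - x0) + v0 * (y - y0)))
    return envelope * carrier

def spatial_frequency(u0, v0):
    """Spatial frequency of the modulation, sqrt(u0^2 + v0^2)."""
    return math.hypot(u0, v0)
```

The real part of the returned value is the even-symmetric (cosine) component and the imaginary part is the odd-symmetric (sine) component, so evaluating the function at a point and at its mirror image about the filter centre gives equal real parts and opposite imaginary parts.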

Figure 3. A quadrature pair of 2D Gabor filters: (left) real component, or even-symmetric filter, characterized by a cosine modulated by a Gaussian; (right) imaginary component, or odd-symmetric filter, characterized by a sine modulated by a Gaussian.

Daugman demodulates the output of the Gabor filters in order to compress the data. This is done by quantizing the phase information into four levels, one for each possible quadrant in the complex plane. Taking only the phase allows the discriminating information in the iris to be encoded while discarding redundant information such as illumination, which is represented by the amplitude component. These four levels are represented using two bits of data, so each pixel in the normalized iris pattern corresponds to two bits of data in the iris template. A total of 2,048 bits are calculated for the template, and an equal number of masking bits are generated in order to mask out corrupted regions within the iris. This creates a compact 256-byte template, which allows for efficient storage and comparison of irises. The Daugman system uses polar coordinates for normalization, so in polar form the filters are given as

$$H(r,\theta) = e^{-i\omega(\theta - \theta_0)}\; e^{-(r - r_0)^2/\alpha^2}\; e^{-(\theta - \theta_0)^2/\beta^2}$$

where $(\alpha, \beta)$ are the same as described earlier, and $(r_0, \theta_0)$ specify the centre frequency of the filter.

1.3. Matching

Daugman uses the Hamming distance as the matching metric, and the Hamming distance is calculated using only the bits generated from the actual iris region. The Hamming distance gives a measure of how many bits differ between two bit patterns. Using the Hamming distance of two bit patterns, a decision can be made as to whether the two patterns were generated from different irises or from the same one. In comparing the bit patterns $X$ and $Y$, the Hamming distance, $HD$, is defined as the sum of disagreeing bits (the sum of the exclusive-OR between $X$ and $Y$) over $N$, the total number of bits in the bit pattern:

$$HD = \frac{1}{N} \sum_{j=1}^{N} X_j \oplus Y_j$$

Since an individual iris region contains features with high degrees of freedom, each iris region will produce a bit pattern that is independent of that produced by another iris. On the other hand, two iris codes produced from the same iris will be highly correlated. If two bit patterns are completely independent, such as iris templates generated from different irises, the Hamming distance between the two patterns should equal 0.5. This occurs because independence implies the two bit patterns will be totally random, so there is a 0.5 chance of setting any bit to 1, and vice versa. Therefore, half of the bits will agree and half will disagree between the two patterns. If two patterns are derived from the same iris, the Hamming distance between them will be close to 0.0, since they are highly correlated and the bits should agree between the two iris codes.
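The two-bit phase quantization and the Hamming distance restricted to valid iris bits can be sketched as follows. The function names and the convention that a mask bit of 1 marks a usable position are assumptions made for this example; the particular mapping of quadrants to bit pairs is likewise only one possible choice.

```python
def phase_bits(z):
    """Two-bit quadrant code for a complex filter response z.

    Only the signs of the real and imaginary parts are kept, so the
    amplitude (and with it the illumination level) is discarded.
    """
    return (1 if z.real >= 0 else 0, 1 if z.imag >= 0 else 0)

def hamming_distance(x, y, mask_x=None, mask_y=None):
    """Fraction of disagreeing bits among positions valid in both masks."""
    n = len(x)
    if len(y) != n:
        raise ValueError("bit patterns must have the same length")
    mask_x = mask_x if mask_x is not None else [1] * n
    mask_y = mask_y if mask_y is not None else [1] * n
    valid = [i for i in range(n) if mask_x[i] and mask_y[i]]
    if not valid:
        raise ValueError("no valid bits to compare")
    disagreeing = sum(x[i] ^ y[i] for i in valid)   # exclusive-OR per bit
    return disagreeing / len(valid)
```

For example, `hamming_distance([1, 0, 1, 1], [1, 1, 1, 0])` compares four bits of which two disagree, giving 0.5, the value expected on average for independent patterns; identical patterns give 0.0.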

IV. BOLES'S WORK

Boles proposed an iris recognition system designed to handle noisy conditions as well as possible variations in illumination and camera-to-face distance. His work is based on the zero crossings of the wavelet transform. Apart from iris localization, a normalization algorithm brings the irises to the same diameter and the same number of data points. From the grey levels of the sample images, one-dimensional signals are obtained and referred to as the iris signature. A zero-crossing representation is then calculated based on the wavelet transform. These representations are stored as templates and are used by the matching algorithm. The author claims that in this way the influence of noise is eliminated, and that using zero crossings instead of all data points speeds up the computations. An interesting aspect of this system is the ability of the wavelet transform to eliminate glare due to reflection of the light source on the surface of the iris. Boles's work comprises four main parts: (1) segmentation, (2) normalization, (3) feature extraction and (4) matching.

1.4. Segmentation

The process of information extraction starts by locating the pupil of the eye, which can be done using any edge detection technique. Knowing that it has a circular shape, the edges defining it are connected to form a closed contour. The grey-level values on the contours of virtual concentric circles, which are centred at the centroid of the pupil, are recorded and stored in circular buffers. In what follows, for simplicity, one such data set will be used to explain the process and will be referred to as the iris signature. Using the edge-detected image, the maximum diameter of the iris in any image is calculated. In comparing two images, one is considered to be the reference image. The ratio of the maximum diameter of the iris in this image to that in the other image is also calculated. This ratio is then used to make the virtual circles, which extract the iris features, have the same diameter. In other words, the irises in the images are scaled to have the same constant diameter regardless of their original size in the images.

1.5. Normalization

In the Boles [5] system, iris images are first scaled to have a constant diameter so that, when comparing two images, one is considered as the reference image. This works differently from the other techniques, since normalization is not performed until two iris regions are being matched, rather than being performed once with the result saved for later comparisons. Once the two irises have the same dimensions, features are extracted from the iris region by storing the intensity values along virtual concentric circles with origin at the centre of the pupil. A normalization resolution is selected so that the number of data points extracted from each iris is the same. This is essentially the same as Daugman's rubber sheet model; however, scaling is done at match time and is relative to the iris region being compared, rather than to some constant dimensions. Boles also does not mention how rotational invariance is obtained.

1.6. Feature extraction

Boles and Boashash [5] use 1D wavelets to encode iris pattern data. The mother wavelet is defined as the second derivative of a smoothing function $\theta(x)$:

$$\psi(x) = \frac{d^2\theta(x)}{dx^2}$$

The zero crossings of dyadic scales of these filters are then used to encode features. The wavelet transform of a signal $f(x)$ at scale $s$ and position $x$ is given by

$$W_s f(x) = f * \left(s^2 \frac{d^2\theta_s}{dx^2}\right)(x) = s^2 \frac{d^2}{dx^2}\left(f * \theta_s\right)(x)$$

where

$$\theta_s(x) = \frac{1}{s}\,\theta\!\left(\frac{x}{s}\right)$$

$W_s f(x)$ is proportional to the second derivative of $f(x)$ smoothed by $\theta_s(x)$, and the zero crossings of the transform correspond to points of inflection in $f * \theta_s(x)$. The motivation for this technique is that zero crossings correspond to significant features within the iris region.
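The zero-crossing idea can be illustrated on a one-dimensional circular iris signature. The sketch below is only an approximation of the transform described above: the smoothing function is assumed to be a truncated, sampled Gaussian, the second derivative is replaced by a discrete second difference, and all function names are hypothetical.

```python
import math

def gaussian(s, half_width):
    """Normalised samples of a Gaussian smoothing function at scale s."""
    weights = [math.exp(-(k * k) / (2.0 * s * s))
               for k in range(-half_width, half_width + 1)]
    norm = sum(weights)
    return [w / norm for w in weights]

def smooth_circular(signal, s):
    """Circular convolution of the signature with the smoothing function."""
    n = len(signal)
    half = 3 * s                      # truncate the Gaussian at three scales
    kernel = gaussian(s, half)
    return [sum(kernel[k + half] * signal[(i + k) % n]
                for k in range(-half, half + 1))
            for i in range(n)]

def wavelet_transform(signal, s):
    """s^2 times the discrete second difference of the smoothed signature."""
    g = smooth_circular(signal, s)
    n = len(g)
    return [s * s * (g[(i + 1) % n] - 2.0 * g[i] + g[(i - 1) % n])
            for i in range(n)]

def zero_crossings(values):
    """Indices i where the sign changes between sample i and sample i + 1."""
    n = len(values)
    return [i for i in range(n) if values[i] * values[(i + 1) % n] < 0]
```

Calling `zero_crossings(wavelet_transform(signature, s))` for dyadic scales s = 2, 4, 8, ... gives one set of zero-crossing positions per resolution level; coarser scales smooth away fine detail and therefore tend to yield fewer crossings.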


1.7. Matching

Boles and Boashash proposed a model-based algorithm in which the original signatures of the different irises to be recognized are represented by their zero-crossing representations. These representations are stored in the database of the system and are referred to as models. The main task is to match an iris in an image, referred to as an unknown, with one of the models whose representations are stored in the database. In order to classify unknown signatures, they consider two alternative dissimilarity functions that compare the unknown signature $g$ and the candidate model $f$ at a particular resolution level $j$:

$$d_j^{(1)}(f, g) = \min_{m} \sum_{n=1}^{N} \left| Z_j f(n) - \Gamma\, Z_j g(n + m) \right|^2, \quad m \in [0, N-1]$$

$$d_j^{(2)}(f, g) = \min_{m} \sum_{i=1}^{R_j} \left\{ [\mu_j(i)]_f\, [\rho_j(i)]_f - \Gamma\, [\mu_j(i + m)]_g\, [\rho_j(i + m)]_g \right\}^2, \quad m \in [0, R_j - 1]$$

where $Z_j f$ is the zero-crossing representation of $f$ at level $j$, $N$ is the number of data points, $R_j$ is the number of zero crossings at level $j$, $[\mu_j(i)]$ and $[\rho_j(i)]$ describe the $i$-th rectangular pulse of the representation, and $\Gamma$ is the scale factor, equal to the ratio between the radius of the virtual circle of the candidate model and that of the unknown signature. Note that the first dissimilarity function is computed using every point of the representation, whereas the second uses only the zero-crossing points. The overall dissimilarity value over the resolution interval $[K, L]$ is the average of the dissimilarity functions calculated at each resolution level in this interval, i.e.,

$$D_p = \frac{1}{L - K + 1} \sum_{j=K}^{L} d_j^{(p)}(f, g)$$

where $p = 1, 2$ refers to one of the dissimilarity functions defined above. The first dissimilarity function, $d_j^{(1)}$, makes a global measurement of the difference in energy between two zero-crossing representations over the entire spatial domain at a particular level $j$. In order to grasp the most essential information selectively and effectively, and to simplify the computation, they introduce the other dissimilarity function, $d_j^{(2)}$, which compares two representations based on the dimensions of the rectangular pulses of the zero-crossing representations. With this function, the amount of computation is significantly reduced, since the number of zero crossings is much smaller than the total number of data points. However, the main problem with this function is that it requires the compared representations to have the same number of zero crossings at each resolution level. To overcome this problem, one can do either of the following.

a) Check the number of zero crossings of the representations of the unknown signature and the candidate model. If the above condition is satisfied for at least two adjacent resolution levels, then use $d_j^{(2)}$. The overall dissimilarity value $D_2$ is calculated in the interval where the number of zero crossings is the same.

b) Alternatively, use a false zero-crossing elimination algorithm to remove unimportant (or false) zero crossings, so that the unknown and model representations have the same number of zero crossings before the dissimilarity function is calculated.
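The first dissimilarity function and its average over resolution levels can be sketched as below. This is an illustrative reading of the description above, not the authors' code: the representations are assumed to be equal-length lists of real numbers, the minimum is taken over all circular shifts of the unknown, and the names `dissimilarity_1`, `overall_dissimilarity` and the parameter `gamma` (the scale factor) are hypothetical.

```python
def dissimilarity_1(zf, zg, gamma=1.0):
    """Minimum over circular shifts m of sum_n (zf[n] - gamma * zg[n + m])^2.

    zf is the zero-crossing representation of the candidate model and zg
    that of the unknown signature, both at the same resolution level.
    """
    n = len(zf)
    if len(zg) != n:
        raise ValueError("representations must have the same length")
    return min(
        sum((zf[i] - gamma * zg[(i + m) % n]) ** 2 for i in range(n))
        for m in range(n)
    )

def overall_dissimilarity(model_levels, unknown_levels, gamma=1.0):
    """Average of the per-level dissimilarities over the chosen levels."""
    values = [dissimilarity_1(f, g, gamma)
              for f, g in zip(model_levels, unknown_levels)]
    return sum(values) / len(values)
```

Because the minimum is taken over every shift, a signature that is merely a rotated copy of a model yields a dissimilarity of zero, while unrelated signatures yield a positive value; the unknown would be assigned to the model with the smallest overall dissimilarity.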

V. COMPARISON

The first system, Daugman's, was awarded US patents. During the development of Daugman's system [1], [2], perfect results were reported in tests on 592 irises. However, Daugman's system has a few shortcomings. During segmentation, the integro-differential algorithm can fail where there is noise in the eye image, such as reflections, since it works only on a local scale. Even though the homogeneous rubber sheet model accounts for pupil dilation, imaging distance and non-concentric pupil displacement, it does not compensate for rotational inconsistencies. In the Daugman system, rotation is accounted for during matching by shifting the iris templates in the $\theta$ direction until the two iris templates are aligned.

Boles [5] tried to implement a system extending the capabilities of the existing methods. He essentially tries to create a system that does not require the iris to be in the same location within the image, free of glare, and under fixed illumination. This problem was already solved in Daugman's project by sequentially processing frames until the edge detector for iris location reported that an iris was present. Nevertheless, the wavelet transform provides the ability to match patterns under local distortion, in the hope of compensating for glare resulting from reflection of light on the surface of the iris and for translation and size variations. He also tests the tolerance of his system at different noise levels. Even though the results were good, the system was tested with a very small number of images, which limits the conclusions that can be drawn. Something that differentiates his work is the analysis of the information at the various resolution levels. In his attempt to decrease the computational requirements, he discovered that the intermediate levels contain most of the energy.

VI. CONCLUSION

Iris identification is considered one of the most robust ways to identify humans. It provides enough degrees of freedom for accurate and safe recognition. The iris is considered the most unique, data-rich physical structure on the human body. Recognition works even when people wear sunglasses or contact lenses. The properties of the iris that enhance its suitability for automatic identification include its natural protection from the external environment, the impossibility of surgically modifying it without risk to vision, a physiological response to light that is unique, permanent features, and the ease of registering its image at some distance. Iris recognition systems acquire a picture of a person's iris, digitally encode it, and compare it with one already on file. Iris identification is convenient for users and is widely accepted. Dr. John Daugman developed the theoretical research and algorithms. Theoretically, the problem involves edge detection operators (to locate the iris), a representation and correspondence function (so that the same parts of different irises are compared), and a matching algorithm. The information contained in the iris structure lies throughout its frequency spectrum. Small and large structures and small and large edges are all distinguishable features (freckles, contractile furrows). In order to exploit this, a 2-D decomposition (Gabor phasor coefficients, wavelets) is applied. The frequency components of the various bands are used to extract feature vectors suitable for robust recognition. The main problems that had to be solved, apart from common computer vision difficulties (scaling, illumination and rotation), were the reflections of the light source on the eye. The main disadvantage of iris recognition is the cost of designing and manufacturing such a system.


REFERENCES

[1] J. Daugman. How iris recognition works. Proceedings of 2002 International Conference on Image Processing, Vol. 1, 2002.
[2] J. Daugman. Biometric personal identification system based on iris analysis. United States Patent, Patent Number: 5,291,560, 1994.
[3] R. Wildes, J. Asmuth, G. Green, S. Hsu, R. Kolczynski, J. Matey, S. McBride. A system for automated iris recognition. Proceedings IEEE Workshop on Applications of Computer Vision, Sarasota, FL, pp. 121-128, 1994.
[4] R. Wildes. Iris recognition: an emerging biometric technology. Proceedings of the IEEE, Vol. 85, No. 9, 1997.
[5] W. Boles, B. Boashash. A human identification technique using images of the iris and wavelet transform. IEEE Transactions on Signal Processing, Vol. 46, No. 4, 1998.
[6] S. Lim, K. Lee, O. Byeon, T. Kim. Efficient iris recognition through improvement of feature vector and classifier. ETRI Journal, Vol. 23, No. 2, Korea, 2001.
[7] S. Noh, K. Pae, C. Lee, J. Kim. Multiresolution independent component analysis for iris identification. The 2002 International Technical Conference on Circuits/Systems, Computers and Communications, Phuket, Thailand, 2002.
[8] L. Ma, T. Tan, Y. Wang, D. Zhang. Personal identification based on iris texture analysis. IEEE Trans. Pattern Anal. Mach. Intell., Vol. 25, No. 12, pp. 1519-1533, Dec. 2003.
[9] C. Sanchez-Avila, R. Sanchez-Reillo. Two different approaches for iris recognition using Gabor filters and multiscale zero-crossing representation. Pattern Recognit., Vol. 38, No. 2, pp. 231-240, Feb. 2005.
[10] M. Vatsa, R. Singh, A. Noore. Reducing the false rejection rate of iris recognition using textural and topological features. Int. J. Signal Process., Vol. 2, No. 1, pp. 66-72, 2005.
[11] D. M. Monro, S. Rakshit, D. Zhang. DCT-based iris recognition. IEEE Trans. Pattern Anal. Mach. Intell., Vol. 29, No. 4, pp. 586-596, Apr. 2007.
[12] A. Poursabery, B. N. Araabi. Iris recognition for partially occluded images: Methodology and sensitivity analysis. EURASIP J. Adv. Signal Process., Vol. 2007, No. 1, p. 20, Jan. 2007. Article ID 36751.
[13] J. Daugman. New methods in iris recognition. IEEE Trans. Syst., Man, Cybern. B, Cybern., Vol. 37, No. 5, pp. 1168-1176, Oct. 2007.

www.iosrjen.org

49 | P a g e

S-ar putea să vă placă și

- Biometric Identification Using Matching Algorithm MethodDocument5 paginiBiometric Identification Using Matching Algorithm MethodInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- Implementation of Reliable Open SourceDocument6 paginiImplementation of Reliable Open Sourcenilesh_092Încă nu există evaluări

- Feature Extraction of An Iris For PatternRecognitionDocument7 paginiFeature Extraction of An Iris For PatternRecognitionJournal of Computer Science and EngineeringÎncă nu există evaluări

- Iris Recognition Using Modified Fuzzy Hyperline Segment Neural NetworkDocument6 paginiIris Recognition Using Modified Fuzzy Hyperline Segment Neural NetworkJournal of ComputingÎncă nu există evaluări

- A Novel Approach For Iris Recognition: Manisha M. Khaladkar, Sanjay R. GanorkarDocument4 paginiA Novel Approach For Iris Recognition: Manisha M. Khaladkar, Sanjay R. GanorkarIjarcet JournalÎncă nu există evaluări

- Fcrar2004 IrisDocument7 paginiFcrar2004 IrisPrashant PhanseÎncă nu există evaluări

- Fast Identification of Individuals Based On Iris Characteristics For Biometric SystemsDocument4 paginiFast Identification of Individuals Based On Iris Characteristics For Biometric SystemsJonathan RogeriÎncă nu există evaluări

- Fusion of IrisDocument6 paginiFusion of IrisGaurav DiyewarÎncă nu există evaluări

- 13 - PradeepKumar - Finalpaper - IISTE Research PaperDocument13 pagini13 - PradeepKumar - Finalpaper - IISTE Research PaperiisteÎncă nu există evaluări

- A Human Iris Recognition Techniques To Enhance E-Security Environment Using Wavelet TrasformDocument8 paginiA Human Iris Recognition Techniques To Enhance E-Security Environment Using Wavelet Trasformkeerthana_ic14Încă nu există evaluări

- Iris Recognition For Personal Identification Using Lamstar Neural NetworkDocument8 paginiIris Recognition For Personal Identification Using Lamstar Neural NetworkAnonymous Gl4IRRjzNÎncă nu există evaluări

- Iris Pattern Recognition Using Wavelet Method: Mr.V.Mohan M.E S.HariprasathDocument5 paginiIris Pattern Recognition Using Wavelet Method: Mr.V.Mohan M.E S.HariprasathViji VasanÎncă nu există evaluări

- Iris Based Authentication System: R.Shanthi, B.DineshDocument6 paginiIris Based Authentication System: R.Shanthi, B.DineshIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalÎncă nu există evaluări

- Biometric Course:: RashaDocument43 paginiBiometric Course:: Rashabhavani gongaliÎncă nu există evaluări

- Robust and Fast Iris Localization Using Contrast Stretching and Leading Edge DetectionDocument7 paginiRobust and Fast Iris Localization Using Contrast Stretching and Leading Edge DetectionInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- Generation of Cryptographic Key and Fuzzy VaultDocument8 paginiGeneration of Cryptographic Key and Fuzzy Vaultpriyakapoor77Încă nu există evaluări

- Soc Par 2009Document5 paginiSoc Par 2009Shrinidhi SanthanamÎncă nu există evaluări

- Iris Recognition System Using Support Vector MachinesDocument16 paginiIris Recognition System Using Support Vector MachinesEmy BadescuÎncă nu există evaluări

- An Atm With EyeDocument24 paginiAn Atm With EyeunweltedÎncă nu există evaluări

- Biometric Atm Iris Recognition 11193 YWYOufvDocument41 paginiBiometric Atm Iris Recognition 11193 YWYOufvRushikesh PalavÎncă nu există evaluări

- Biometric Course:: Rasha Tarawneh Omamah ThunibatDocument43 paginiBiometric Course:: Rasha Tarawneh Omamah ThunibatS.kalaivaniÎncă nu există evaluări

- Palmprint Identification Using Feature-Level Fusion: Adams Kong, David Zhang, Mohamed KamelDocument10 paginiPalmprint Identification Using Feature-Level Fusion: Adams Kong, David Zhang, Mohamed KamelSally SamehÎncă nu există evaluări

- Implementing Security Using Iris Recognition Using MatlabDocument10 paginiImplementing Security Using Iris Recognition Using MatlabDeepak Kumar100% (1)

- A Hybrid Method For Iris SegmentationDocument5 paginiA Hybrid Method For Iris SegmentationJournal of ComputingÎncă nu există evaluări

- A Novel and Efficient Feature Extraction Method For Iris RecognitionDocument3 paginiA Novel and Efficient Feature Extraction Method For Iris RecognitionkaleysureshÎncă nu există evaluări

- Multispectral Palmprint Recognition Using Wavelet-Based Image FusionDocument8 paginiMultispectral Palmprint Recognition Using Wavelet-Based Image Fusionsaran52_eceÎncă nu există evaluări

- 3Document14 pagini3amk2009Încă nu există evaluări

- An Effective Segmentation Technique For Noisy Iris ImagesDocument8 paginiAn Effective Segmentation Technique For Noisy Iris ImagesInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- A Literature Review On Iris Segmentation Techniques For Iris Recognition SystemsDocument5 paginiA Literature Review On Iris Segmentation Techniques For Iris Recognition SystemsInternational Organization of Scientific Research (IOSR)Încă nu există evaluări

- Iris Based Authentication ReportDocument5 paginiIris Based Authentication ReportDragan PetkanovÎncă nu există evaluări

- Iris Recognition: Existing Methods and Open Issues: NtroductionDocument6 paginiIris Recognition: Existing Methods and Open Issues: NtroductionDhimas Arief DharmawanÎncă nu există evaluări

- International Journals Call For Paper HTTP://WWW - Iiste.org/journalsDocument14 paginiInternational Journals Call For Paper HTTP://WWW - Iiste.org/journalsAlexander DeckerÎncă nu există evaluări

- 1.1. Motivation For A Currency Identification SystemDocument25 pagini1.1. Motivation For A Currency Identification SystemPravat SatpathyÎncă nu există evaluări

- IrisDocument36 paginiIrisMUDIT_10Încă nu există evaluări

- Formella 2003 AutomaticDocument8 paginiFormella 2003 AutomaticAdnan RahicÎncă nu există evaluări

- Biometric Identification Using Fast Localization and Recognition of Iris ImagesDocument7 paginiBiometric Identification Using Fast Localization and Recognition of Iris ImageslukhachvodanhÎncă nu există evaluări

- The Importance Ofbeing Random: Statistical Principles Ofiris RecognitionDocument13 paginiThe Importance Ofbeing Random: Statistical Principles Ofiris RecognitionnehaAnkÎncă nu există evaluări

- Automated System For Criminal Identification Using Fingerprint Clue Found at Crime SceneDocument10 paginiAutomated System For Criminal Identification Using Fingerprint Clue Found at Crime SceneInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- Removal Processes and Machine Tools: Indian Institute of Technology DelhiDocument28 paginiRemoval Processes and Machine Tools: Indian Institute of Technology DelhiEthan HuntÎncă nu există evaluări

- Current Office Phone Number Vijayawada, Andhra Pradesh (A.p.)Document11 paginiCurrent Office Phone Number Vijayawada, Andhra Pradesh (A.p.)Manoj Digi Loans100% (1)

- Reports On TECHNICAL ASSISTANCE PROVIDED by The Teachers To The Learners / Learning FacilitatorsDocument2 paginiReports On TECHNICAL ASSISTANCE PROVIDED by The Teachers To The Learners / Learning FacilitatorsJerv AlferezÎncă nu există evaluări

- Multidisciplinary Research: EPRA International Journal ofDocument5 paginiMultidisciplinary Research: EPRA International Journal ofMathavan VÎncă nu există evaluări

- The Big Table of Quantum AIDocument7 paginiThe Big Table of Quantum AIAbu Mohammad Omar Shehab Uddin AyubÎncă nu există evaluări

- (Type The Documen T Title) : (Year)Document18 pagini(Type The Documen T Title) : (Year)goodluck788Încă nu există evaluări

- Mazada Consortium LimitedDocument3 paginiMazada Consortium Limitedjowila5377Încă nu există evaluări

- Supg NS 2DDocument15 paginiSupg NS 2DruÎncă nu există evaluări

- Mathematics 10 Performance Task #1 Write The Activities in A Short Bond Paper Activities Activity 1: Go Investigate!Document2 paginiMathematics 10 Performance Task #1 Write The Activities in A Short Bond Paper Activities Activity 1: Go Investigate!Angel Grace Diego Corpuz100% (2)

- Refrigeration and Air ConditioningDocument41 paginiRefrigeration and Air Conditioningrejeesh_rajendranÎncă nu există evaluări

- The Ultimate Guide To The Gemba WalkDocument9 paginiThe Ultimate Guide To The Gemba WalkĐan VũÎncă nu există evaluări

- Ug Vol1Document433 paginiUg Vol1Justin JohnsonÎncă nu există evaluări

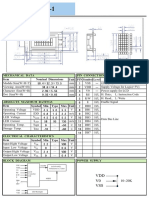

- V0 VSS VDD: Unit PIN Symbol Level Nominal Dimensions Pin Connections Function Mechanical Data ItemDocument1 paginăV0 VSS VDD: Unit PIN Symbol Level Nominal Dimensions Pin Connections Function Mechanical Data ItemBasir Ahmad NooriÎncă nu există evaluări

- B737 SRM 51 - 40 - 08 Rep - Fiberglass OverlaysDocument6 paginiB737 SRM 51 - 40 - 08 Rep - Fiberglass OverlaysAlex CanizalezÎncă nu există evaluări

- EER Model: Enhance Entity Relationship ModelDocument12 paginiEER Model: Enhance Entity Relationship ModelHaroon KhalidÎncă nu există evaluări

- Unit 2-Translation Practice MarkDocument3 paginiUnit 2-Translation Practice MarkHương ThảoÎncă nu există evaluări

- GCE A - AS Level Biology A Topic Test - Biodiversity, Evolution and DiseaseDocument25 paginiGCE A - AS Level Biology A Topic Test - Biodiversity, Evolution and Diseasearfaat shahÎncă nu există evaluări

- Soft-Starter: Programming ManualDocument162 paginiSoft-Starter: Programming ManualThaylo PiresÎncă nu există evaluări

- Module 1-Grade 9Document19 paginiModule 1-Grade 9Charity NavarroÎncă nu există evaluări

- Prompt by NikistDocument4 paginiPrompt by NikistMãnoj MaheshwariÎncă nu există evaluări

- Implications of PropTechDocument107 paginiImplications of PropTechAnsar FarooqiÎncă nu există evaluări

- Report On Mango Cultivation ProjectDocument40 paginiReport On Mango Cultivation Projectkmilind007100% (1)

- B1 UNIT 1 Life Skills Video Teacher's NotesDocument1 paginăB1 UNIT 1 Life Skills Video Teacher's NotesXime OlariagaÎncă nu există evaluări

- TOC - Question AnswerDocument41 paginiTOC - Question AnsweretgegrgrgesÎncă nu există evaluări

- Haggling As A Socio-Pragmatic Strategy (A Case Study of Idumota Market)Document15 paginiHaggling As A Socio-Pragmatic Strategy (A Case Study of Idumota Market)Oshoja Tolulope OlalekanÎncă nu există evaluări

- Hatton National Bank PLC: Instance Type and TransmissionDocument2 paginiHatton National Bank PLC: Instance Type and TransmissiontaraÎncă nu există evaluări

- What's New: Contemporary Quiz#5Document2 paginiWhat's New: Contemporary Quiz#5Christian Castañeda100% (1)

- Art & Science: Meeting The Needs of Patients' Families in Intensive Care UnitsDocument8 paginiArt & Science: Meeting The Needs of Patients' Families in Intensive Care UnitsRiaÎncă nu există evaluări

- Planmeca Promax 3D Max CBVT Product PresentationDocument36 paginiPlanmeca Promax 3D Max CBVT Product PresentationAD TwentyOne DentalÎncă nu există evaluări

- Accenture United Nations Global Compact IndexDocument4 paginiAccenture United Nations Global Compact IndexBlasÎncă nu există evaluări