
Data Hiding in Motion Vectors Of Compressed Video

FINAL YEAR PROJECT REPORT

Submitted to the faculty of engineering of

NORTH MAHARASHTRA UNIVERSITY, JALGAON 425001

in partial fulfillment of the requirement for the award of the bachelor's degree of Engineering

in

Electronics & Communication

By

HARSHIT ASHOKKUMAR PATADIA

PRIYANKA VISHWANATH PATIL

SONALI UDDHAVRAO MALI

Under guidance of

Prof. Mrs. D. R. BHAMARE

DEPARTMENT OF ELECTRONICS ENGINEERING

S.S.V.P.S.'s B.S. DEORE COLLEGE OF ENGINEERING

DHULE-424005 (M.S.)

Year 2012 - 13

S.S.V.P.S.'s B.S. DEORE COLLEGE OF ENGINEERING,

DHULE-424 005.

DEPARTMENT OF ELECTRONICS & COMMUNICATION

ENGINEERING

CERTIFICATE

Date: / /2013

This is to certify that HARSHIT ASHOKKUMAR PATADIA, SONALI UDDHAVRAO MALI, and PRIYANKA VISHWANATH PATIL have completed the project "Data Hiding in Motion Vectors of Compressed Video" in partial fulfillment of the requirement for the award of the BACHELOR OF ENGINEERING (Electronics & Communication Engineering) of North Maharashtra University, Jalgaon.

The matter embodied in this report is a record of their own independent work, carried out by them under my supervision and guidance. The matter embodied in this report has not been submitted for any other degree or diploma.

Guide H.O.D (E&C)

Prof. Mrs. D.R. BHAMARE Prof. P.S. Patil

Principal

Prof. Dr. H.D. Patil

ABSTRACT

In this highly digitalized world, the Internet plays an important role in data transmission and sharing. However, since it is a worldwide and public medium, confidential data might be stolen, copied, modified, or destroyed by an unintended observer. Security therefore becomes an essential issue. Although encryption provides a certain level of security, it makes the secret messages unreadable, unnatural, or meaningless, and such unnatural messages usually attract an unintended observer's attention. This is the reason a security approach called steganography has arisen.

Steganography is the art of hiding the existence of data in another transmission medium to achieve secret communication. It does not replace cryptography but rather boosts security through obscurity. Steganography is the method used in this report: secret data is embedded within a frame of a video, which provides a secure means of data hiding. MATLAB is used for the video-to-frame conversion. The secret data is hidden in any one of the frames of the video, and a satisfactory PSNR (Peak Signal-to-Noise Ratio) is obtained.

CONTENTS

List of Figures
List of Tables
List of Abbreviations

1. INTRODUCTION
1.1 Cryptography vs. Steganography
1.2 Block Diagram
1.3 Introduction to Digital Video
1.4 Advantages
1.5 Ease of Manipulation
1.6 Preservation of Data

2. LITERATURE SURVEY
2.1 Frame Separation
2.1.1 Coding for Frame Separation
2.2 Fundamentals of Digital Image
2.2.1 Image Compression
2.2.2 Image Compression Model
2.2.3 Image Compression Types

3. SYSTEM DEVELOPMENT
3.1 Introduction to Wavelet
3.2 Wavelet Transform
3.3 Discrete Wavelet Transform
3.4 Wavelets and Perfect Reconstruction Filter Banks
3.5 Wavelet Image Processing
3.6 Wavelet Decomposition of Images
3.7 1-D Continuous Wavelet Transform
3.8 1-D Discrete Wavelet Transform
3.9 2-D Wavelet Transform
3.10 Lifting Using HAAR
3.11 Introduction of LSB
3.12 Data Hiding by Simple LSB Substitution
3.13 Optimal Pixel Adjustment Process

4. RESULT AND DISCUSSION
4.1 Applications
4.2 Advantages
4.3 Result

5. CONCLUSION

REFERENCE


LIST OF FIGURES

Figure No.   Title of Figure
1.1   Block Diagram of Data Hiding in Video
2.1   Frame Separation
2.2   Block Diagram of Image Compression and Decompression
2.3   PSNR of lossy compression in relation to compression ratio
2.4   Decoding time in relation to compression ratio
2.5   Lossless compression results of the Kodak test set
3.1   Discrete Wavelet Transform
3.2   A two-level Wavelet Decomposition
3.3   A two-level Wavelet Reconstruction
3.4   Pyramid Packet
3.5   Wavelet Packet Decomposition
3.6   Original Image One-Level 2-D Decomposition
3.7   Three Popular Wavelet Decomposition Structures on Image
3.8   Block Diagram of DWT
3.9   DWT for Lena Image
4.1   Result of Data Hiding in Video


LIST OF TABLES

Table No.   Title of Table
2.1   Trade-off between quality and speed for the Kodak test set
2.2   Lossless compression ratios of the PGF test set
2.3   Runtime of lossless compression of the PGF test set
3.1   Daubechies Wavelet Coefficient


LIST OF ABBREVIATIONS

MPEG    Moving Picture Experts Group
AVI     Audio Video Interleave
HSV     Hue Saturation Value
RGB     Red Green Blue
HSB     Hue Saturation Brightness
CT      Computed Tomography
MRI     Magnetic Resonance Imaging
BMP     Bitmap Image File
JPEG    Joint Photographic Experts Group
PSNR    Peak Signal-to-Noise Ratio
PGF     Progressive Graphics File
PNG     Portable Network Graphics
Enc     Encoding
Dec     Decoding
ISO     International Organization for Standardization
CCITT   International Telegraph and Telephone Consultative Committee
DWT     Discrete Wavelet Transform
WT      Wavelet Transform
FT      Fourier Transform
STFT    Short Time Fourier Transform
MRA     Multiresolution Analysis
LPd     Low Pass Decomposition Filter
HPd     High Pass Decomposition Filter
LPr     Low Pass Reconstruction Filter
HPr     High Pass Reconstruction Filter
LPF     Low Pass Filter
DCT     Discrete Cosine Transform
IMGLIB  Image Library


ACKNOWLEDGEMENT

We express our deep gratitude to the Almighty, the supreme guide, for bestowing his blessings upon us in our entire endeavor.

We would like to express our sincere thanks to Prof. H. D. Patil, Head of the Department of Electronics and Communication Engineering, for all his assistance.

We wish to express our deep sense of gratitude to Prof. Mrs. D. R. Bhamare, Department of Electronics & Communication Engineering, who guided us throughout the seminar. Her overall direction and guidance have been responsible for the successful completion of the seminar.

We would like to thank all the faculty members of the Department of Electronics and Communication Engineering and our friends for their constant support and encouragement.

HARSHIT A. PATADIA

PRIYANKA V. PATIL

SONALI U. MALI

CHAPTER 1

INTRODUCTION

Steganography is the art of concealing the presence of information within an innocuous container. Steganography has been used throughout history to protect important information from being discovered by enemies. A very early example of steganography comes from the story of Demaratus of Greece. He wished to inform Sparta that Xerxes, the King of Persia, was planning to invade. In ancient Greece, wax-covered wooden tablets were used to record written text. To avoid detection by the Persians, Demaratus scraped the wax from a tablet, etched the message into the underlying wood, then re-covered the tablet with wax. This concealed the underlying message from the sentries who inspected the tablets as they left Persia by courier for Greece.

Another historical example of steganography is the use of invisible inks. A common experiment conducted by children everywhere is to use a toothpick dipped in vinegar to write a message on a piece of paper. Once the vinegar dries, the presence of the message is not obvious to a casual inspector (aside from the smell). Upon slight heating of the paper, a chemical reaction occurs which darkens the vinegar and makes the message readable. Other, less smelly, invisible inks were used throughout history in a similar way, even up until World War II.

A more recently developed steganography technique, the microdot, was invented by the Germans in World War II. Microdots were very small photographs, the size of a printed period, which contained very clear text when magnified. These microdots contained important information about German war plans and were placed in completely unrelated letters as periods.

Although Steganography is related to Cryptography, the two are fundamentally

different.

1.1 Cryptography vs. Steganography

Cryptography is the practice of scrambling messages so that, even if detected, they are very difficult to decipher. The purpose of steganography is to conceal the message such that the very existence of the hidden message is camouflaged. However, the two techniques are not mutually exclusive.

Steganography and Cryptography are in fact complementary techniques. No matter

how strong the algorithm, if an encrypted message is discovered, it will be subject to cryptanalysis.

Likewise, no matter how well concealed a message is, it is always possible that it will be

discovered. By combining Steganography with Cryptography we can conceal the existence of

an encrypted message. In doing this, we make it far less likely that an encrypted message will

be found. Also, if a message concealed through Steganography is discovered, the discoverer

is still faced with the formidable task of deciphering it.
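The combination described above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not the method developed later in this report: the function names, the XOR "cipher" (which is not secure), and the synthetic cover samples are all hypothetical. The message is scrambled first and then hidden in the least significant bits of the cover samples.

```python
from itertools import cycle


def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Toy XOR scrambling (NOT secure); applying it twice restores the data."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def embed_lsb(cover: list[int], payload: bytes) -> list[int]:
    """Hide the payload bits (MSB first) in the lowest bit of each cover sample."""
    bits = [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]
    if len(bits) > len(cover):
        raise ValueError("cover too small for payload")
    stego = list(cover)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & ~1) | bit  # clear the lowest bit, then set it
    return stego


def extract_lsb(stego: list[int], n_bytes: int) -> bytes:
    """Read n_bytes back from the lowest bits of the first 8 * n_bytes samples."""
    out = bytearray()
    for i in range(n_bytes):
        byte = 0
        for sample in stego[8 * i: 8 * i + 8]:
            byte = (byte << 1) | (sample & 1)
        out.append(byte)
    return bytes(out)


cover = [(37 * i) % 256 for i in range(64)]  # stand-in for 64 grayscale pixels
key = b"k3y"                                 # hypothetical shared key
secret = b"hi"

stego = embed_lsb(cover, xor_cipher(secret, key))
recovered = xor_cipher(extract_lsb(stego, len(secret)), key)
```

Each cover sample changes by at most one grey level, and someone who extracts the hidden bits without the key sees only the scrambled bytes.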

1.2 Block Diagram

Fig 1.1 Block diagram of data hiding in video

1.3 Introduction to Digital Video

Digital video refers to the capturing, manipulation, and storage of moving images that

can be displayed on computer screens. This requires that the moving images be digitally

handled by the computer. The word digital refers to a system based on discontinuous

events, as opposed to analog, a continuous event. Computers are digital systems; they do

not process images the way the human eye does. So how does it work?


Before the digital era, to display analog video images on a computer monitor, the video signal first had to be converted from analog to digital form. A special video digitizing overlay board, or hardware on the motherboard, had to be installed in the computer to take the video signal and convert it to digital information. This, however, required a very powerful computer able to read and digitize every frame repetitively. So the next step in the evolution of digital video was to eliminate the analog videotape. Thus the entire procedure, including the capturing of video, is in digital form.

First, a camera and a microphone capture the picture and sound of a video session and

send analog signals to a video-capture adapter board. The board only captures half of the

number of frames per second that movies use in order to reduce the amount of data to be

processed. Second, there is an analog-to-digital converter chip on the video-capture

adapter card, and it converts the analog signals to digital patterns (0s and 1s).

Third, a compression/decompression chip or software reduces the data to the minimum necessary for recreating the video signals. In this procedure no analog videotape is involved, which makes the process more efficient.

1.4 Advantages

What is it about digital video that makes it so attractive? Isn't videotape good enough?

Here are three of many reasons that explain why digital videos are becoming more popular than

ever.

1.5 Ease of Manipulation

The difference between analog and digital is like comparing a typewriter with a word

processor. Just like the cut and paste function is much easier and faster with a word processor,

editing is easier and faster with a digital video. Also, many effects that were exclusive for

specialized post production houses are now easily achieved by bringing in files from

Photoshop, Flash, and Sound Edit as components in a video mix. In addition, the ability to

separate sound from image enables editing one without affecting the other.


1.6 Preservation of Data

It is not true that DV is better simply because it is digital. Big screen films are not digital

and are still highly esteemed as quality images. However, it is easier to maintain the quality of

a digital video. Traditional tapes are subject to wear and tear more so than DVD or hard drive

disks. Also, once done, a digital video can be copied over and over without losing its original

information. Analog signals can be easily distorted and will lose much of the original data after

a few transfers.

a) MPEG

MPEG (pronounced "em-peg") stands for Moving Picture Experts Group. The term is generally

used to name the set of digital video compression standards and file formats developed by this

group. MPEG uses lossy compression, and achieves a high compression rate by storing only

the changes from one frame to another (the delta), and not the entire frame. There are two major

standards. MPEG-1 provides a 352 x 240 resolution at 30 frames per second. The product

quality is a little below that of VCR videos. MPEG-2 provides 720 x 480 and 1280 x 720

resolutions at 60 frames per second, with full CD-quality audio. It can compress a two-hour

video into a few gigabytes.

MPEG-2 is sufficient for all major TV standards and DVD-ROM (Fisher & Schroeder).

MPEG-4 is in development (there is no MPEG-3), and will provide multiple views and multiple

soundtracks of a scene, as well as stereoscopic and 3-D views (Vaughan, 1998).

b) AVI

AVI stands for Audio Video Interleave. It is one of the oldest formats. It was created by Microsoft to go with Windows 3.1 and its Video for Windows application. Even though it is widely used, owing to the number of editing systems and software that use AVI by default, this format has many restrictions, especially in its compatibility with operating systems and other interface boards (Fisher & Schroeder).


c) MOV

MOV, created by Apple for the Macintosh, is the proprietary format of the QuickTime application. It can also run on PCs. Being able to store both video and sound simultaneously, the format was once superior to AVI. The latest version of QuickTime also has streaming capabilities for internet video. However, with the new MPEG-2 format, the MOV format started to lose its popularity (Fisher & Schroeder, 1999), until it was decided that MPEG-4 would use the QuickTime format as the basis of its standard.


CHAPTER 2

LITERATURE SURVEY

2.1 Frame Separation

Frame processing is the first step in the background subtraction algorithm. The purpose of this step is to prepare the video frames by removing noise and unwanted objects from each frame, in order to increase the amount of information gained from the frame and the sensitivity of the algorithm.

Preprocessing is a collection of simple image processing tasks that change the raw input video into a format that can be processed by subsequent steps. Preprocessing of the video is necessary to improve the detection of moving objects. For example, spatial and temporal smoothing can reduce noise such as snow, and small moving objects such as leaves on a tree can be removed by morphological processing of the frames after the moving objects have been identified, as shown in Fig 2.1.

Fig 2.1 Frame separation


Another key issue in preprocessing is the data format used by the particular background subtraction algorithm. Most algorithms handle luminance intensity, which is one scalar value per pixel; however, color images, in either the RGB or HSV color space, are becoming more popular in background subtraction algorithms.

2.1.1 Coding for Frame Separation

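% Split an AVI file into numbered grayscale BMP frames (1.bmp, 2.bmp, ...).
% aviinfo/aviread belong to older MATLAB releases; newer releases provide VideoReader instead.
filename = 'movie1.avi';
info = aviinfo(filename);
frm_cnt = info.NumFrames;
str2 = '.bmp';
h = waitbar(0, 'Please wait...');
for i = 1:frm_cnt
    frm = aviread(filename, i);             % read frame i as a movie structure
    img = frame2im(frm);                    % convert the movie frame to an image
    img = rgb2gray(img);                    % keep intensity only (assumes truecolor frames)
    filename1 = strcat(num2str(i), str2);   % output name for this frame
    imwrite(img, filename1);
    waitbar(i / frm_cnt, h);
end
close(h);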

2.2 Fundamentals of Digital Image

A digital image is defined as a two-dimensional function f(x, y), where x and y are

spatial (plane) coordinates, and the amplitude of f at any pair of coordinates (x, y) is called

the intensity or grey level of the image at that point. The field of digital image processing refers

to processing digital images by means of a digital computer. The digital image is composed

of a finite number of elements, each of which has a particular location and value. The

elements are referred to as picture elements, image elements, pels, and pixels. Pixel is the

term most widely used.

2.2.1 Image Compression

Digital image compression addresses the problem of reducing the amount of data required to represent a digital image. The underlying basis of the reduction process is the removal of redundant data. From the mathematical viewpoint, this amounts to transforming a 2D pixel array into a statistically uncorrelated data set. Data redundancy is not an abstract concept but a mathematically quantifiable entity. If $n_1$ and $n_2$ denote the number of information-carrying units in two data sets that represent the same information, the relative data redundancy $R_D$ [2] of the first data set (the one characterized by $n_1$) can be defined as

$$R_D = 1 - \frac{1}{C_R}$$

where $C_R$ is called the compression ratio [2]. It is defined as

$$C_R = \frac{n_1}{n_2}$$
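As a quick numerical check of the two definitions above, the following Python sketch evaluates the compression ratio and the relative redundancy for a hypothetical pair of data sets. The function names and the sample sizes are illustrative assumptions, not values from this report.

```python
def compression_ratio(n1: float, n2: float) -> float:
    """C_R = n1 / n2, where n1 is the original size and n2 the compressed size."""
    return n1 / n2


def relative_redundancy(n1: float, n2: float) -> float:
    """R_D = 1 - 1 / C_R, the fraction of the first data set that is redundant."""
    return 1.0 - 1.0 / compression_ratio(n1, n2)


# Hypothetical example: 10 information-carrying units reduced to 1.
c_r = compression_ratio(10, 1)    # 10.0
r_d = relative_redundancy(10, 1)  # 0.9, i.e. 90% of the original data is redundant
```

When the two data sets have the same size, the compression ratio is 1 and the relative redundancy is 0, meaning the first representation contains no redundant data relative to the second.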

In image compression, three basic data redundancies can be identified and exploited: coding redundancy, interpixel redundancy, and psychovisual redundancy. Image compression is achieved when one or more of these redundancies are reduced or eliminated.
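Coding redundancy can be made concrete with a first-order entropy estimate: if the grey levels are not equally likely, a fixed 8-bit code spends more bits per pixel than the entropy requires. The Python sketch below is an illustration under assumptions; the function name and the 16-pixel sample are hypothetical.

```python
import math
from collections import Counter


def entropy_bits_per_pixel(pixels):
    """First-order entropy of the grey-level histogram, in bits per pixel."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical 16-pixel image with four grey levels of unequal probability.
pixels = [0] * 8 + [255] * 4 + [128] * 2 + [64] * 2

h = entropy_bits_per_pixel(pixels)  # 1.75 bits per pixel
coding_redundancy = 8 - h           # bits per pixel wasted by a fixed 8-bit code
```

For this sample the probabilities are 1/2, 1/4, 1/8, and 1/8, so the entropy is 1.75 bits per pixel, and a variable-length code could in principle save 6.25 bits per pixel compared with plain 8-bit storage.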

Image compression is mainly used for image transmission and storage. Image transmission applications include broadcast television; remote sensing via satellite, aircraft, radar, or sonar; teleconferencing; computer communications; and facsimile transmission. Image storage is required most commonly for educational and business documents, medical images that arise in computed tomography (CT), magnetic resonance imaging (MRI) and digital radiology, motion pictures, satellite images, weather maps, geological surveys, and so on.

2.2.2 Image Compression Model

a) Block Diagram of Image compression

b) Block Diagram of Image Decompression

Fig 2.2 Block Diagram of Image Compression and Decompression


2.2.3 Image Compression Types

There are two types of image compression techniques:

1. Lossy Image compression

2. Lossless Image compression

Compression ratio:

1. Lossy image compression:

Lossy compression provides higher levels of data reduction but results in a less than perfect reproduction of the original image. It provides a high compression ratio. Lossy image compression is useful in applications such as broadcast television, videoconferencing, and facsimile transmission, in which a certain amount of error is an acceptable trade-off for increased compression performance.

Originally, PGF was designed to quickly and progressively decode lossy compressed aerial images. A lossy compression mode was preferred because, in an application like a terrain explorer, texture data (e.g., aerial orthophotos) is usually mip-map filtered and therefore lossily mapped onto the terrain surface. In addition, decoding lossy compressed images is usually faster than decoding lossless compressed images.


Fig 2.3 PSNR of lossy compression in relation to compression ratio

In the next test series we evaluate the lossy compression efficiency of PGF. One of the best competitors in this area is certainly JPEG 2000. Since JPEG 2000 has two different filters, we used the one with the better trade-off between compression efficiency and runtime. On our machine the 5/3 filter set has a better trade-off than the other. However, with both filters JPEG 2000 has remarkably good compression efficiency at very high compression ratios but also very poor encoding and decoding speed.

The other competitor is JPEG. JPEG is one of the most popular image file formats. It is very fast and has reasonably good compression efficiency for a wide range of compression ratios. The drawbacks of JPEG are the missing lossless compression and the often missing progressive decoding. Fig. 2.3 depicts the average rate-distortion behavior for the images in the Kodak test set when fixed (i.e., nonprogressive) lossy compression is used. The PSNR of PGF is on average 3% smaller than the PSNR of JPEG 2000, but 3% better than that of JPEG.

These results are also qualitatively valid for our PGF test set, and they are characteristic of aerial ortho-photos and natural images. Because of the design of PGF we already know that PGF does not reach the compression efficiency of JPEG 2000. However, we are interested in the trade-off between compression efficiency and runtime. To report this trade-off we show in Table 2.1 a comparison between JPEG 2000 and PGF, and in Fig. 2.4 we show, for the same test series as in Fig. 2.3, the corresponding average decoding times in relation to compression ratios.


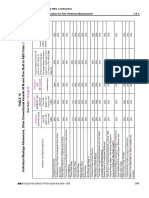

Table 2.1 contains, for seven different compression ratios (mean values over the compression ratios of the eight images of the Kodak test set), the corresponding average encoding and decoding times in relation to the average PSNR values. In the case of PGF the encoding time is always slightly longer than the corresponding decoding time, because the actual encoding phase takes slightly longer than the corresponding decoding phase.

For six of the seven ratios the PSNR difference between JPEG 2000 and PGF is within 3% of the PSNR of JPEG 2000. Only in the first row is the difference larger (21%), but because a PSNR of 50 corresponds to almost perfect image quality, the large PSNR difference corresponds to an almost undiscoverable visual difference. The price paid in JPEG 2000 for the 3% higher PSNR is very high. The creation of a PGF file is five to twenty times faster than the creation of a corresponding JPEG 2000 file, and the decoding of the created PGF file is still five to ten times faster than the decoding of the JPEG 2000 file. This gain in speed is remarkable, especially in areas where time is more important than quality, for instance in real-time computation.

Ratio    JPEG 2000 5/3             PGF
         enc     dec     PSNR      enc     dec     PSNR
2.7      1.86    1.35    64.07     0.34    0.27    51.10
4.8      1.75    1.14    47.08     0.27    0.21    44.95
8.3      1.68    1.02    41.98     0.18 (second PGF time missing)    40.39
10.7     1.68    0.98    39.95     0.14    0.13    38.73
18.7     1.61    0.92    36.05     0.12    0.11    35.18
35.1     1.57    0.87    32.26     0.10    0.09    31.67
72.9     1.54    0.85    28.86     0.08    0.08    28.37

Table 2.1 Trade-off between quality and speed for the Kodak test set
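The PSNR values reported above follow the usual definition, PSNR = 10 log10(MAX^2 / MSE), where MSE is the mean squared error between the original and the decoded image. The Python sketch below is a minimal illustration; the function name and the flat pixel lists are assumptions, and 8-bit images (MAX = 255) are assumed by default.

```python
import math


def psnr(original, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(original) != len(distorted):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical example: every pixel is off by one grey level (MSE = 1).
value = psnr([100, 100, 100, 100], [101, 101, 101, 101])  # about 48.13 dB
```

An error of a single grey level per pixel already gives roughly 48 dB, which is consistent with the remark that a PSNR near 50 corresponds to almost perfect image quality.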

In Fig. 2.4 we see that the price we pay in PGF for the 3% more PSNR than JPEG is low: for small compression ratios (<9) decoding in PGF takes two times longer than JPEG, and for higher compression ratios (>30) it takes only ten percent longer than JPEG. These test results are characteristic of both natural images and aerial ortho-photos. Again, in the third test series we only use the Lena image. We run our lossy coder with six different quantization parameters and measure the PSNR in relation to the resulting compression ratios. The results (ratio: PSNR) are:

Fig 2.4 Decoding time in relation to compression ratio

2. Lossless image compression:

In some applications, lossless image compression is the only acceptable means of data reduction. It provides a low compression ratio compared to lossy compression. Lossless image compression techniques are composed of two relatively independent operations: (1) devising an alternative representation of the image in which its interpixel redundancies are reduced and (2) coding the representation to eliminate coding redundancies.
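The two operations can be sketched with Python's standard library. This is an assumption-laden illustration, not one of the codecs compared in this chapter: the mapping step is a simple previous-pixel difference, the coding step is delegated to `zlib`, and the synthetic ramp stands in for one row of a smooth image.

```python
import zlib


def delta_encode(row):
    """Step 1 (mapping): replace each pixel by its difference from the previous one, mod 256."""
    prev = 0
    out = bytearray()
    for p in row:
        out.append((p - prev) % 256)
        prev = p
    return bytes(out)


def delta_decode(data):
    """Invert delta_encode by accumulating the differences mod 256."""
    prev = 0
    out = []
    for d in data:
        prev = (prev + d) % 256
        out.append(prev)
    return out


row = [(i // 4) % 256 for i in range(4096)]  # slowly varying synthetic pixel row

mapped = delta_encode(row)                   # interpixel redundancy reduced
packed = zlib.compress(mapped, 9)            # step 2 (coding): general-purpose lossless coder
restored = delta_decode(zlib.decompress(packed))
```

Decoding reverses the two steps in the opposite order and returns exactly the original row, which is what distinguishes this scheme from the lossy case.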

Lossless image compression is useful in applications such as medical imaging, business documents, and satellite images.

Table 2.2 summarizes the lossless compression efficiency and Table 2.3 the coding times for the PGF test set. For WinZip we only provide average runtime values: because the source code is not available, we had to use an interactive testing procedure with runtimes measured by hand. All other values are measured in batch mode.


Image       WinZip    JPEG-LS   JPEG 2000   PNG       PGF
aerial      1.352     2.073     1.383       1.944     2.314
compound    12.451    6.802     6.068       13.292    4.885
hibiscus    1.816     2.100     2.811       2.087     1.538
houses      1.241     1.518     2.155       1.500     1.965
logo        47.128    16.280    12.959      50.676    10.302
redbrush    2.433     4.041     4.494       3.564     3.931
woman       1.577     1.920     2.564       1.858     2.556
average     9.71      4.98      4.78        10.70     4.07

Table 2.2 Lossless compression ratios of the PGF test set

In Table 2.2 it can be seen that in almost all cases the best compression ratio is obtained by JPEG 2000, followed by PGF, JPEG-LS, and PNG. This result differs from the result in [SEA+00], where the best performance for a similar test set was reported for JPEG-LS. PGF performs between 0.5% (woman) and 21.3% (logo) worse than JPEG 2000. On average it is almost 15% worse. The two exceptions to the general trend are the compound and the logo images. Both images consist for the most part of black text on a white background. For this type of image, JPEG-LS and in particular WinZip and PNG provide much larger compression ratios. However, on average PNG performs best, which is also reported in [SEA+00].

These results show that, as far as lossless compression is concerned, PGF performs reasonably well on natural and aerial images. For specific types of images such as compound and logo, PGF is outperformed by far by PNG.

Image       WinZip        JPEG-LS       JPEG 2000     PNG           PGF
            enc    dec    enc    dec    enc    dec    enc    dec    enc    dec
aerial      -      -      1.11   0.8    5.31   4.87   3.7    0.19   0.99   0.77
compound    -      -      1.61   0.98   3.46   3.06   2.95   0.18   0.95   0.8
hibiscus    -      -      0.69   0.3    1.45   1.29   1.77   0.1    0.35   0.27
houses      -      -      0.65   0.3    1.62   1.47   0.85   0.11   0.41   0.32
logo        -      -      0.09   0.02   0.26   0.21   0.16   0.01   0.07   0.06
redbrush    -      -      0.65   0.44   4.29   4.01   3.61   0.16   0.66   0.59
woman       -      -      0.39   0.3    1.76   1.63   1.08   0.08   0.35   0.27
average     1.14   0.37   0.74   0.36   2.59   2.36   2.02   0.12   0.54   0.44

Table 2.3 Runtime of lossless compression of the PGF test set

Table 2.3 shows the encoding (enc) and decoding (dec) times (measured in seconds) for the same algorithms and images as in Table 2.2. JPEG 2000 and PGF are both symmetric algorithms, while WinZip, JPEG-LS, and in particular PNG are asymmetric, with a clearly shorter decoding than encoding time. JPEG 2000, the slowest in encoding and decoding, takes more than four times longer than PGF. This speed gain is due to the simpler coding phase of PGF. JPEG-LS is slightly slower than PGF during encoding, but slightly faster in decoding images.
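The symmetry claims can be checked directly from the average row of Table 2.3 by dividing each encoding time by the corresponding decoding time. The short Python sketch below does this; the dictionary layout and variable names are illustrative choices, while the numbers are the table's averages.

```python
# Average (enc, dec) times in seconds, taken from the last row of Table 2.3.
avg_times = {
    "WinZip": (1.14, 0.37),
    "JPEG-LS": (0.74, 0.36),
    "JPEG 2000": (2.59, 2.36),
    "PNG": (2.02, 0.12),
    "PGF": (0.54, 0.44),
}

# A ratio near 1 means a symmetric codec; a large ratio means decoding is much faster.
asymmetry = {name: enc / dec for name, (enc, dec) in avg_times.items()}

# How much slower JPEG 2000 is than PGF, for encoding and for decoding.
enc_slowdown = avg_times["JPEG 2000"][0] / avg_times["PGF"][0]
dec_slowdown = avg_times["JPEG 2000"][1] / avg_times["PGF"][1]
```

With these averages JPEG 2000 and PGF have ratios close to 1, PNG has by far the largest ratio, and JPEG 2000 is between four and six times slower than PGF in both directions.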

WinZip and PNG decode even faster than JPEG-LS, but their encoding times are also worse. PGF seems to be the best compromise between encoding and decoding times. Our PGF test set clearly shows that PGF in lossless mode is best suited for natural images and aerial orthophotos. PGF is the only algorithm that encodes the three-MByte aerial ortho-photo in less than a second without a real loss of compression efficiency. For this particular image the efficiency loss is less than three percent compared to the best. These results should be underlined by our second test set, the Kodak test set.

Fig 2.5 Lossless compression results of the Kodak test set

Fig. 2.5 shows the averages of the compression ratios (ratio), encoding (enc), and decoding (dec) times over all eight images. In this test set JPEG 2000 shows the best compression efficiency, followed by PGF, JPEG-LS, PNG, and WinZip. On average PGF is eight percent worse than JPEG 2000. The fact that JPEG 2000 has a better lossless compression ratio than PGF is not surprising, because JPEG 2000 is more quality driven than PGF.

However, it is remarkable that PGF is clearly better than JPEG-LS (+21%) and PNG (+23%) for natural images. In the Kodak test set JPEG-LS also shows symmetric encoding and decoding time behavior. Its encoding and decoding times are almost equal to those of PGF. Only PNG and WinZip can decode faster than PGF, but they also take longer than PGF to encode.


If both compression efficiency and runtime are important, then PGF is clearly the best of the tested algorithms for lossless compression of natural images and aerial orthophotos. In the third test we run our lossless coder on the Lena image. The compression ratio is 1.68, and encoding and decoding take 0.25 and 0.19 seconds, respectively.

2.2.4. Image Compression Standards

There are many methods available for lossy and lossless image compression. These coding methods have been standardized by several organizations. The International Organization for Standardization (ISO) and the International Telegraph and Telephone Consultative Committee (CCITT) have defined image compression standards for both binary and continuous-tone (monochrome and colour) images. Some of the image compression standards are:

1. JBIG1

2. JBIG2

3. JPEG-LS

4. DCT based JPEG

5. Wavelet based JPEG 2000

Currently, JPEG 2000 [3] is widely used because the standard supports lossy and lossless compression of single-component (e.g., grayscale) and multicomponent (e.g., color) imagery. In addition to this basic compression functionality, numerous other features are provided, including: 1) progressive recovery of an image by fidelity or resolution; 2) region of interest coding, whereby different parts of an image can be coded with differing fidelity; 3) random access to particular regions of an image without the need to decode the entire code stream; 4) a flexible file format with provisions for specifying opacity information and image sequences; and 5) good error resilience. Due to its excellent coding performance and many attractive features, JPEG 2000 has a very large potential application base. Some possible application areas include image archiving, Internet, web browsing, document imaging, digital photography, medical imaging, remote sensing, and desktop publishing.


JPEG 2000 has two main advantages over other standards. First, it addresses a number of weaknesses in the existing JPEG standard. Second, it provides a number of new features not available in the JPEG standard.

The preceding points led to several key objectives for the new standard, namely that it should: 1) allow efficient lossy and lossless compression within a single unified coding framework, 2) provide superior image quality, both objectively and subjectively, at low bit rates, 3) support additional features such as region of interest coding and a more flexible file format, and 4) avoid excessive computational and memory complexity. Undoubtedly, much of the success of the original JPEG standard can be attributed to its royalty-free nature. Consequently, considerable effort has been made to ensure that a minimally compliant JPEG 2000 codec can be implemented free of royalties.


CHAPTER 3

SYSTEM DEVELOPMENT

3.1. INTRODUCTION TO WAVELET

Over the past several years, the wavelet transform has gained widespread acceptance in signal processing in general and in image compression research in particular. In applications such as still image compression, discrete wavelet transform (DWT) based schemes have outperformed other coding schemes such as those based on the DCT. Since there is no need to divide the input image into non-overlapping 2-D blocks and its basis functions have variable length, wavelet-coding schemes avoid blocking artifacts at higher compression ratios. Because of their inherent multiresolution nature, wavelet-coding schemes are especially suitable for applications where scalability and tolerable degradation are important. Recently the JPEG committee released its new image coding standard, JPEG 2000, which is based upon the DWT.

Basically we use Wavelet Transform (WT) to analyze non-stationary signals, i.e.,

signals whose frequency response varies in time, as Fourier Transform (FT) is not suitable for

such signals.

To overcome this limitation of the FT, the Short-Time Fourier Transform (STFT) was proposed. The STFT differs from the FT in only one respect: the signal is divided into small segments over which it can be assumed to be stationary. For this purpose, a window function "w" is chosen. The width of this window in time must equal the length of the segment over which the signal can still be considered stationary. The STFT gives a joint time-frequency representation of a signal, which cannot be obtained from the FT. The short-time Fourier transform of a real continuous signal is defined as:

$X(f, \tau) = \int_{-\infty}^{\infty} [x(t)\, w^{*}(t - \tau)]\, e^{-j 2 \pi f t}\, dt$ ------------ (2.1)

where the window is positioned at (t - τ) in time, so that it can be shifted along the signal by changing the value of τ. Each value of τ gives the frequency response of a different signal segment.


The Heisenberg uncertainty principle explains the problem with the STFT. Applied to signals, this principle states that one cannot know the exact time-frequency representation of a signal, i.e., one cannot know which spectral components exist at which instants of time. What one can know are the time intervals in which certain bands of frequencies exist; this is called the resolution problem. The problem has to do with the width of the window function that is used, known as the support of the window. If the window function is narrow, it is said to be compactly supported. The narrower we make the window, the better the time resolution and the better the assumption that the signal is stationary, but the poorer the frequency resolution:

Narrow window ===> good time resolution, poor frequency resolution

Wide window ===> good frequency resolution, poor time resolution
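The trade-off above can be stated quantitatively. The bound below is a standard result quoted here for reference; it is not part of the original derivation, and the symbols σ_t (RMS duration of the window) and σ_f (RMS bandwidth of the window, in Hz) are introduced only for this illustration. W(f) denotes the Fourier transform of the window w(t), and the window is assumed to be centred at t = 0 and f = 0.

```latex
\sigma_t^{2} = \frac{\int t^{2}\,|w(t)|^{2}\,dt}{\int |w(t)|^{2}\,dt},
\qquad
\sigma_f^{2} = \frac{\int f^{2}\,|W(f)|^{2}\,df}{\int |W(f)|^{2}\,df},
\qquad
\sigma_t\,\sigma_f \;\ge\; \frac{1}{4\pi}
```

Equality holds only for a Gaussian window, so shrinking the window in time (smaller σ_t) necessarily widens it in frequency (larger σ_f), and vice versa.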

The wavelet transform (WT) has been developed as an alternative approach to the STFT to overcome the resolution problem. In wavelet analysis the signal is multiplied with the wavelet function, similar to the window function in the STFT, and the transform is computed separately for different segments of the time-domain signal at different frequencies. This approach is called multiresolution analysis (MRA) [4], as it analyzes the signal at different frequencies with different resolutions.

MRA is designed to give good time resolution and poor frequency resolution at high frequencies, and good frequency resolution and poor time resolution at low frequencies. This approach is especially good when the signal has high-frequency components for short durations and low-frequency components for long durations, e.g., images and video frames.

The wavelet transform involves projecting a signal onto a complete set of translated and dilated versions of a mother wavelet ψ(t). The strict definition of a mother wavelet will be dealt with later so that the form of the wavelet transform can be examined first. For now, assume the loose requirement that ψ(t) has compact temporal and spectral support (limited by the uncertainty principle, of course), upon which a set of basis functions can be defined.

The basis set of wavelets generated from the mother (or basic) wavelet is defined as:

$\psi_{a,b}(t) = \frac{1}{\sqrt{a}}\, \psi\!\left(\frac{t - b}{a}\right)$ ; $a, b \in \mathbb{R}$ and $a > 0$ ------------ (2.2)

The variable a (inverse of frequency) reflects the scale (width) of a particular basis function: a large value gives low frequencies and a small value gives high frequencies. The variable b specifies its translation along the time axis. The term 1/√a is used for normalization.
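The effect of the scale a, the translation b and the normalization term can be checked numerically. The following is a minimal sketch, assuming the Haar function as the mother wavelet (the text does not fix a particular one at this point); the helper names `haar_mother`, `wavelet` and `integrate` are hypothetical and exist only for this illustration.

```python
import math

def haar_mother(t):
    """Haar mother wavelet: +1 on [0, 0.5), -1 on [0.5, 1), 0 elsewhere."""
    if 0.0 <= t < 0.5:
        return 1.0
    if 0.5 <= t < 1.0:
        return -1.0
    return 0.0

def wavelet(t, a, b, mother=haar_mother):
    """Scaled and translated wavelet: (1/sqrt(a)) * mother((t - b) / a), a > 0."""
    if a <= 0:
        raise ValueError("scale a must be positive")
    return mother((t - b) / a) / math.sqrt(a)

def integrate(f, lo, hi, steps=20000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * h) for i in range(steps)) * h

# With a = 4 and b = 2 the wavelet is stretched to width 4 and moved to [2, 6].
a, b = 4.0, 2.0
mean = integrate(lambda t: wavelet(t, a, b), 0.0, 8.0)
energy = integrate(lambda t: wavelet(t, a, b) ** 2, 0.0, 8.0)
print(f"mean = {mean:.6f}, energy = {energy:.6f}")
```

The mean stays at zero for any a and b, and the energy stays at one because of the 1/√a factor; without that factor the energy would grow in proportion to a.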

3.2. WAVELET TRANSFORM

Whether we like it or not, we are living in a world of signals. Nature talks to us with signals: light, sounds, and so on. People talk to each other with signals: music, TV, phones, etc.

The human body is equipped to survive in this world of signals with sensors such as

eyes and ears, which are able to receive and process these signals. Consider, for instance, our

ears: they can discriminate the volume and tone of a voice. Most of the information our ears

process from a signal is in the frequency content of the signal.

Scientists have developed mathematical methods to imitate the processing performed

by our body and extract the frequency information contained in a signal. These mathematical

algorithms are called transforms and the most popular among them is the Fourier Transform.

The second method to analyze non-stationary signals is to first filter different frequency

bands, cut these bands into slices in time, and then analyze them.

The wavelet transform uses this approach. The wavelet transform or wavelet analysis

is probably the most recent solution to overcome the shortcomings of the Fourier transform. In

wavelet analysis the use of a fully scalable modulated window solves the signal-cutting

problem. The window is shifted along the signal and for every position the spectrum is

calculated. Then this process is repeated many times with a slightly shorter (or longer) window

for every new cycle.

In the end the result is a collection of time-frequency representations of the signal, all with different resolutions. Because of this collection of representations, we can speak of a multiresolution analysis. In the case of wavelets, we normally do not speak about time-frequency representations but about time-scale representations, scale being in a way the inverse of frequency.

3.3 Discrete Wavelet Transform

The discrete wavelet transform (DWT) was developed to apply the wavelet transform

to the digital world. Filter banks are used to approximate the behavior of the continuous wavelet

transform. The signal is decomposed with a high-pass filter and a low-pass filter. The


coefficients of these filters are computed using mathematical analysis and made available to

you. See Appendix B for more information about these computations.

Fig 3.1 Discrete Wavelet Transform

Where,

LPd: Low Pass Decomposition Filter

HPd: High Pass Decomposition Filter

LPr: Low Pass Reconstruction Filter

HPr: High Pass Reconstruction Filter

The wavelet literature presents the filter coefficients in tables. An example is the Daubechies filters for wavelets. These filters depend on a parameter p, the number of vanishing moments.

Table 3.1 Daubechies Wavelet Coefficient

The h_p[n] coefficients are used as the low-pass decomposition filter (LPd). The coefficients for the filters HPd, LPr and HPr are computed from the h[n] coefficients as follows:

High-pass decomposition filter (HPd) coefficients

$g[n] = (-1)^{n}\, h[L - n]$ (L: length of the filter)

Low-pass reconstruction filter (LPr) coefficients

$\tilde{h}[n] = h[L - n]$ (L: length of the filter)

High-pass reconstruction filter (HPr) coefficients

$\tilde{g}[n] = g[L - n]$ (L: length of the filter)
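The three relations above can be sketched in a few lines. This is an illustration under stated assumptions, not the IMGLIB implementation: indices are zero-based, so the text's h[L - n] is read as h[L - 1 - n] for n = 0 .. L-1, and the well-known 4-tap Daubechies low-pass filter is used as example input. The function name `derive_filters` is hypothetical.

```python
import math

def derive_filters(h):
    """Derive the other three filters from a low-pass filter h.

    Zero-based indexing is assumed, so h[L - n] in the text becomes
    h[L - 1 - n] here.
    """
    L = len(h)
    g = [(-1) ** n * h[L - 1 - n] for n in range(L)]  # high-pass decomposition
    h_rec = [h[L - 1 - n] for n in range(L)]          # low-pass reconstruction
    g_rec = [g[L - 1 - n] for n in range(L)]          # high-pass reconstruction
    return g, h_rec, g_rec

# 4-tap Daubechies low-pass coefficients, used only as illustrative input.
s3 = math.sqrt(3.0)
norm = 4.0 * math.sqrt(2.0)
h = [(1 + s3) / norm, (3 + s3) / norm, (3 - s3) / norm, (1 - s3) / norm]

g, h_rec, g_rec = derive_filters(h)
print("sum of h :", sum(h))                            # DC gain of the low-pass filter
print("sum of g :", sum(g))                            # high-pass filter rejects DC
print("<h, g>   :", sum(x * y for x, y in zip(h, g)))  # the two filters are orthogonal
```

The alternating sign turns the low-pass filter into a high-pass one, and the time reversal gives the matching reconstruction filters; the zero inner product between h and g is what lets the two channels split the signal without overlap.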

The Daubechies filters for wavelets are provided in the C55x IMGLIB for 2 ≤ p ≤ 10. Since there are several sets of filters, we may ask ourselves what the advantages and disadvantages of using one set or another are.

First we need to understand that we will have perfect reconstruction no matter what the filter length is. However, longer filters provide smoother, smaller intermediate results. Thus, if intermediate processing is required, we are less likely to lose information due to necessary thresholding or saturation. However, longer filters obviously involve more processing.

3.4 Wavelets and Perfect Reconstruction Filter Banks

Filter banks decompose the signal into high- and low-frequency components. The low-frequency component usually contains most of the energy of the signal. This is called the approximation. The high-frequency component contains the details of the signal.

Wavelet decomposition can be implemented using a two-channel filter bank. Two-

channel filter banks are discussed in this section briefly. The main idea is that perfect

reconstruction filter banks implement series expansions of discrete-time signals.

Fig 3.2 A two level Wavelet Decomposition

Fig 3.3 A two level Wavelet Reconstruction


The input and the reconstruction are identical; this is called perfect reconstruction. Two

popular decomposition structures are pyramid and wavelet packet. The first one decomposes

only the approximation (low-frequency component) part while the second one decomposes

both the approximation and the detail (high-frequency component).

Fig 3.4 Pyramid Packet

Fig 3.5 Wavelet Packet Decomposition

The C55x IMGLIB provides the following functions for one dimension pyramid and

packet decomposition and reconstruction. Complete information about these functions can be

found in the C55x IMGLIB.

1-D discrete wavelet transform

void IMG_wave_decom_one_dim(short *in_data, short *wksp, int *wavename, int length, int level);

1-D inverse discrete wavelet transform

void IMG_wave_recon_one_dim(short *in_data, short *wksp, int *wavename, int length, int level);

1-D discrete wavelet packet transform

void IMG_wavep_decom_one_dim(short *in_data, short *wksp, int *wavename, int length, int level);

1-D inverse discrete wavelet packet transform

void IMG_wavep_recon_one_dim(short *in_data, short *wksp, int *wavename, int length, int level);

3.5 Wavelets Image Processing

Wavelets have found a large variety of applications in the image processing field. The JPEG 2000 standard uses wavelets for image compression. Other image processing applications such as noise reduction, edge detection, and fingerprint analysis have also been investigated in the literature.

3.6 Wavelet Decomposition of Images

In wavelet decomposition of an image, the decomposition is done row by row and then column by column. For instance, here is the procedure for an N x M image (N rows, M columns). Filter each row and then down-sample to obtain two N x (M/2) images. Then filter each column and subsample the filter output to obtain four (N/2) x (M/2) images.

Of the four subimages obtained, as seen in Fig 3.6, the one obtained by low-pass filtering the rows and columns is referred to as the LL image.

The one obtained by low-pass filtering the rows and high-pass filtering the columns is referred to as the LH image. The one obtained by high-pass filtering the rows and low-pass filtering the columns is called the HL image. The subimage obtained by high-pass filtering the rows and columns is referred to as the HH image. Each of the subimages obtained in this fashion can then be filtered and subsampled to obtain four more subimages. This process can be continued until the desired subband structure is obtained.
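The row-then-column procedure can be sketched as follows. This is a minimal illustration, assuming the simplest possible filter pair (Haar averages as the low-pass output and Haar differences as the high-pass output, as described in Section 3.10) and an image with even dimensions; it is not the IMGLIB routine, and the function names are hypothetical.

```python
def haar_step(values):
    """Filter and down-sample one row or column: pairwise averages and differences."""
    low = [(values[i] + values[i + 1]) / 2 for i in range(0, len(values), 2)]
    high = [values[i + 1] - values[i] for i in range(0, len(values), 2)]
    return low, high

def transpose(matrix):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*matrix)]

def filter_columns(image):
    """Apply haar_step down every column; return (low, high) half-height images."""
    pairs = [haar_step(col) for col in transpose(image)]
    low = transpose([p[0] for p in pairs])
    high = transpose([p[1] for p in pairs])
    return low, high

def decompose_2d(image):
    """One-level decomposition of an N x M image into LL, LH, HL, HH."""
    rows = [haar_step(row) for row in image]
    row_low = [r[0] for r in rows]     # N x (M/2): rows low-pass filtered
    row_high = [r[1] for r in rows]    # N x (M/2): rows high-pass filtered
    ll, lh = filter_columns(row_low)   # first letter: rows, second letter: columns
    hl, hh = filter_columns(row_high)
    return ll, lh, hl, hh

image = [
    [10, 10, 20, 20],
    [10, 10, 20, 20],
    [30, 30, 40, 40],
    [30, 30, 40, 40],
]
ll, lh, hl, hh = decompose_2d(image)
print("LL:", ll)
print("LH:", lh, "HL:", hl, "HH:", hh)
```

Because the example image is constant on every 2 x 2 block, the LL subimage is a half-size copy of it and the three detail subimages are zero. Feeding LL back into `decompose_2d` gives the next level of the subband structure.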


Fig 3.6 Original Image One-Level 2-D Decomposition

Three of the most popular ways to decompose an image are: pyramid, spacl, and wavelet

packet, as shown in Fig 3.7.

Fig 3.7 Three Popular Wavelet Decomposition Structures on Image

(a) Pyramid (b) Spacl, (c) Wavelet Packet

In pyramid decomposition, only the LL subimage is decomposed into four more subimages after each decomposition.

In wavelet packet decomposition, each subimage (LL, LH, HL, HH) is decomposed after each decomposition.

In spacl, after the first level of decomposition, each subimage is decomposed into smaller subimages, and then only the LL subimage is decomposed.

In the Part I development stage, the JPEG 2000 standard supports the pyramid decomposition structure. In the future all three structures will be supported.

For two dimensions, the C55x IMGLIB provides functions for pyramid and packet

decomposition and reconstruction. Complete information about these functions can be found

in the C55x IMGLIB.

2-D discrete wavelet transform

void IMG_wave_decom_two_dim(short **image, short *wksp, int width, int height, int *wavename, int level);

2-D inverse discrete wavelet transform

void IMG_wave_recon_two_dim(short **image, short *wksp, int width, int height, int *wavename, int level);

2-D discrete wavelet packet transform

void IMG_wavep_decom_two_dim(short **image, short *wksp, int width, int height, int *wavename, int level);

2-D inverse discrete wavelet packet transform

void IMG_wavep_recon_two_dim(short **image, short *wksp, int width, int height, int *wavename, int level);

3.7 1-D Continuous wavelet transform

The 1-D continuous wavelet transform is given by:

$W_f(a, b) = \int_{-\infty}^{\infty} x(t)\, \psi_{a,b}(t)\, dt$ ------------ (2.3)

The inverse 1-D wavelet transform is given by:

$x(t) = \frac{1}{C} \int_{0}^{\infty} \int_{-\infty}^{\infty} W_f(a, b)\, \psi_{a,b}(t)\, db\, \frac{da}{a^{2}}$ ------------ (2.4)

where $C = \int_{-\infty}^{\infty} \frac{|\Psi(\omega)|^{2}}{|\omega|}\, d\omega < \infty$ and Ψ(ω) is the Fourier transform of the mother wavelet ψ(t). C is required to be finite, which leads to one of the required properties of a mother wavelet. Since C must be finite, Ψ(0) = 0 is needed to avoid a singularity in the integral, and thus ψ(t) must have zero mean. This condition can be stated as $\int_{-\infty}^{\infty} \psi(t)\, dt = 0$ and is known as the admissibility condition.


3.8 1-D Discrete wavelet transform

The discrete wavelet transform (DWT) transforms a discrete-time signal into a discrete wavelet representation. The first step is to discretize the wavelet parameters, which reduces the previously continuous basis set of wavelets to a discrete and orthogonal/orthonormal set of basis wavelets.

$\psi_{m,n}(t) = 2^{m/2}\, \psi(2^{m} t - n)$ ; $m, n \in \mathbb{Z}$ such that $-\infty < m, n < \infty$ -------- (2.5)

The 1-D DWT is given as the inner product of the signal x(t) being transformed with each of the discrete basis functions.

$W_{m,n} = \langle x(t), \psi_{m,n}(t) \rangle$ ; $m, n \in \mathbb{Z}$ ------------ (2.6)

The 1-D inverse DWT is given as:

$x(t) = \sum_{m} \sum_{n} W_{m,n}\, \psi_{m,n}(t)$ ; $m, n \in \mathbb{Z}$ ------------- (2.7)

One Dimension Wavelet Applications

The 1D_Demo.c file presents applications of the one-dimension wavelet. A 128-point

sine wave is used as input for all these applications.

1-D Perfect Decomposition and Reconstruction Example

The third application shows a three-level pyramid decomposition and reconstruction

of the input signal:

// Perfect Reconstruction of Pyramid, Level 3
//==================================================
for (i = 0; i < LENGTH; i++)
    signal[i] = backup[i];               // restore the unmodified input signal
IMG_wave_decom_one_dim(signal, temp_wksp, db4, LENGTH, 3);   // three-level decomposition
IMG_wave_recon_one_dim(signal, temp_wksp, db4, LENGTH, 3);   // three-level reconstruction
for (i = 0; i < LENGTH; i++)
    noise[i] = signal[i] - backup[i];    // reconstruction error, expected to be zero

3.9 2-D wavelet transform

The 1-D DWT can be extended to a 2-D transform using separable wavelet filters. With separable filters, the 2-D transform can be computed by applying a 1-D transform to all the rows of the input and then repeating it on all of the columns. When a one-level 2-D DWT is applied to an image, four transform coefficient sets are created. As depicted in Figure 3.8(c), the four sets are LL, HL, LH, and HH, where the first letter corresponds to applying either a low-pass or high-pass filter to the rows, and the second letter refers to the filter applied to the columns.

Figure 3.8. Block Diagram of DWT (a) Original image (b) Output image after the 1-D transform is applied to the rows (c) Output image after the second 1-D transform is applied to the columns

Figure 3.9. DWT for Lena image (a) Original image (b) Output image after the 1-D transform is applied to the columns (c) Output image after the second 1-D transform is applied to the rows


The two-dimensional DWT (2D-DWT) converts images from the spatial domain to the frequency domain. At each level of the wavelet decomposition, each column of an image is first transformed using a 1-D vertical analysis filter bank. The same filter bank is then applied horizontally to each row of the filtered and subsampled data. One level of wavelet decomposition produces four filtered and subsampled images, referred to as subbands. The upper and lower areas of Fig. 3.9(b), respectively, represent the low-pass and high-pass coefficients after vertical 1D-DWT and subsampling. The result of the horizontal 1D-DWT and subsampling to form a 2D-DWT output image is shown in Fig. 3.9(c).

We can use multiple levels of wavelet transforms to concentrate data energy in the lowest sampled bands. Specifically, the LL subband in Fig. 3.8(c) can be transformed again to form LL2, HL2, LH2, and HH2 subbands, producing a two-level wavelet transform. An (R-1)-level wavelet decomposition is associated with R resolution levels numbered from 0 to (R-1), with 0 and (R-1) corresponding to the coarsest and finest resolutions, respectively.

The straightforward convolution implementation of the 1D-DWT requires a large amount of memory and has high computational complexity. An alternative implementation of the 1D-DWT, known as the lifting scheme, provides a significant reduction in memory and computational complexity. Lifting also allows in-place computation of the wavelet coefficients. Nevertheless, the lifting approach computes the same coefficients as the direct filter-bank convolution.

In this application, the image is decomposed by one level and reconstructed. No difference is noticeable between the original picture and the reconstructed picture.

3.10. LIFTING USING HAAR

The lifting scheme is a useful way of looking at discrete wavelet transform. It is easy

to understand, since it performs all operations in the time domain, rather than in the frequency

domain, and has other advantages as well. This section illustrates the lifting approach using the

Haar Transform [6].

The Haar transform is based on the calculation of averages (approximation coefficients) and differences (detail coefficients). Given two adjacent pixels a and b, the principle is to calculate the average $s = \frac{a + b}{2}$ and the difference $d = b - a$. If a and b are similar, s will be similar to both and d will be small, i.e., require few bits to represent. This transform is reversible, since $a = s - \frac{d}{2}$ and $b = s + \frac{d}{2}$, and it can be written using matrix notation as

$(s, d) = (a, b) \begin{pmatrix} 1/2 & -1 \\ 1/2 & 1 \end{pmatrix} = (a, b)A$, $(a, b) = (s, d) \begin{pmatrix} 1 & 1 \\ -1/2 & 1/2 \end{pmatrix} = (s, d)A^{-1}$

Consider a row of $2^{n}$ pixel values $s_{n,l}$ for $0 \le l < 2^{n}$. There are $2^{n-1}$ pairs of pixels $s_{n,2l}, s_{n,2l+1}$ for $l = 0, 1, \ldots, 2^{n-1} - 1$. Each pair is transformed into an average $s_{n-1,l} = (s_{n,2l} + s_{n,2l+1})/2$ and a difference $d_{n-1,l} = s_{n,2l+1} - s_{n,2l}$. The result is a set $s_{n-1}$ of $2^{n-1}$ averages and a set $d_{n-1}$ of $2^{n-1}$ differences.
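The averages and differences above can be computed with two lifting steps per pair, and repeating the step on the averages gives a pyramid decomposition. The following is a minimal sketch under those definitions; the function names and the sample row are hypothetical, and floating-point averages are used rather than the integer arithmetic a fixed-point implementation would need.

```python
def haar_forward(values):
    """One Haar level computed with lifting steps on each adjacent pair (a, b)."""
    averages, differences = [], []
    for i in range(0, len(values), 2):
        a, b = values[i], values[i + 1]
        d = b - a        # predict step: detail coefficient
        s = a + d / 2    # update step: equals the average (a + b) / 2
        averages.append(s)
        differences.append(d)
    return averages, differences

def haar_inverse(averages, differences):
    """Undo one level by running the lifting steps backwards."""
    values = []
    for s, d in zip(averages, differences):
        a = s - d / 2
        b = a + d
        values.extend([a, b])
    return values

def haar_pyramid(values, levels):
    """Transform the averages repeatedly; return the final averages and all details."""
    current, details = list(values), []
    for _ in range(levels):
        current, d = haar_forward(current)
        details.append(d)
    return current, details

def haar_pyramid_inverse(averages, details):
    """Rebuild the original row from the coarsest averages and the stored details."""
    current = list(averages)
    for d in reversed(details):
        current = haar_inverse(current, d)
    return current

row = [12, 14, 20, 20, 8, 4, 100, 104]   # 2^3 pixel values
coarse, details = haar_pyramid(row, 3)
restored = haar_pyramid_inverse(coarse, details)
print("coarse:", coarse)
print("details:", details)
print("restored == row:", restored == row)
```

After three levels the single remaining average is the mean of the whole row, and the inverse recovers every pixel exactly because each lifting step is undone by the same operation with the opposite sign.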

3.11 Introduction of LSB

Data hiding is a method of hiding secret messages in a cover-media such that an unintended observer will not be aware of the existence of the hidden messages. In this paper, 8-bit grayscale images are selected as the cover-media. These images are called cover-images. Cover-images with the secret messages embedded in them are called stego-images. For data hiding methods, the image quality refers to the quality of the stego-images.

In the literature, many data hiding techniques have been proposed. One of the common techniques is based on manipulating the least-significant-bit (LSB) planes by directly replacing the LSBs of the cover-image with the message bits. LSB methods typically achieve high capacity. Wang et al. proposed to embed secret messages in the moderately significant bit of the cover-image. A genetic algorithm is developed to find an optimal substitution matrix for the embedding of the secret messages.

They also proposed to use a local pixel adjustment process (LPAP) to improve the image quality of the stego-image. Unfortunately, since the local pixel adjustment process only considers the last three least significant bits and the fourth bit, but not all bits, it is obviously not optimal. The weakness of the local pixel adjustment process is pointed out in Ref. As the local pixel adjustment process modifies the LSBs, the technique cannot be applied to data hiding schemes based on simple LSB substitution. Recently, Wang et al. further proposed a data hiding scheme by optimal LSB substitution and genetic algorithm. Using the proposed algorithm, the worst mean-square-error (WMSE) between the cover-image and the stego-image is shown to be 1/2 of that obtained by the simple LSB substitution method.

In this paper, a data hiding scheme by simple LSB substitution with an optimal pixel adjustment process (OPAP) is proposed. The basic concept of the OPAP is based on the technique proposed. The operations of the OPAP are generalized. The WMSE between the cover-image and the stego-image is derived. It is shown that the WMSE obtained by the OPAP could be less than 1/2 of that obtained by the simple LSB substitution method. Experimental results demonstrate that enhanced image quality can be obtained with low extra computational complexity. The results obtained also show better performance than the optimal substitution method described.

3.12 Data hiding by simple LSB substitution

In this section, the general operations of data hiding by the simple LSB substitution method are described.

Let C be the original 8-bit grayscale cover-image of Mc × Nc pixels, and let M be the n-bit secret message. Suppose that the n-bit secret message M is to be embedded into the k rightmost LSBs of the cover-image C. Firstly, the secret message M is rearranged to form a conceptually k-bit virtual image M′ of n′ elements, where n′ cannot exceed the number of pixels Mc × Nc. The mapping between the n-bit secret message M and the embedded message M′ groups each k consecutive bits of M into one k-bit element mi′ of M′.

Secondly, a subset of n′ pixels {xl1, xl2, ..., xln′} is chosen from the cover-image C in a predefined sequence. The embedding process is completed by replacing the k LSBs of xli by mi′. Mathematically, the pixel value xli of the chosen pixel for storing the k-bit message mi′ is modified to form the stego-pixel x′li as follows:

$x'_{l_i} = x_{l_i} - (x_{l_i} \bmod 2^{k}) + m'_i$

In the extraction process, given the stego-image S, the embedded messages can be readily extracted without referring to the original cover-image. Using the same sequence as in the embedding process, the set of pixels {x′l1, x′l2, ..., x′ln′} storing the secret message bits is selected from the stego-image. The k LSBs of the selected pixels are extracted and lined up to reconstruct the secret message bits. Mathematically, the embedded message bits mi′ can be recovered by

$m'_i = x'_{l_i} \bmod 2^{k}$

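Suppose that all the pixels in the cover-image are used for the embedding of the secret message by the simple LSB substitution method. Theoretically, in the worst case, every pixel changes by the largest possible amount, 2^k − 1, and the PSNR of the obtained stego-image can be computed by

$\mathrm{PSNR}_{\mathrm{worst}} = 10 \log_{10} \frac{255^{2}}{\mathrm{WMSE}} = 10 \log_{10} \frac{255^{2}}{(2^{k} - 1)^{2}}$ dB

Table 1 tabulates the worst PSNR for k = 1-5. It can be seen that the image quality of the stego-image is degraded drastically when k > 4.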
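The embedding, extraction and worst-case quality described in this section can be sketched as follows. This is an illustration under stated assumptions rather than the project's implementation: the "predefined sequence" is taken to be simply the first pixels in order, message bits are packed most-significant-bit first with zero padding of the last group, and the worst case is taken to be an error of 2^k − 1 on every 8-bit pixel. All function names are hypothetical.

```python
import math

def pack_bits(bits, k):
    """Group a list of bits into k-bit integers, most significant bit first.
    The last group is zero-padded on the right if needed."""
    padded = bits + [0] * (-len(bits) % k)
    return [int("".join(map(str, padded[i:i + k])), 2)
            for i in range(0, len(padded), k)]

def embed(pixels, bits, k):
    """Replace the k LSBs of the first pixels with the packed message values."""
    chunks = pack_bits(bits, k)
    if len(chunks) > len(pixels):
        raise ValueError("message does not fit in the cover pixels")
    stego = list(pixels)
    for i, m in enumerate(chunks):
        stego[i] = pixels[i] - (pixels[i] % (1 << k)) + m
    return stego

def extract(stego, n_bits, k):
    """Read n_bits message bits back from the k LSBs of the stego pixels."""
    n_chunks = -(-n_bits // k)   # ceiling division
    bits = []
    for x in stego[:n_chunks]:
        m = x % (1 << k)
        bits.extend((m >> (k - 1 - j)) & 1 for j in range(k))
    return bits[:n_bits]

def worst_case_psnr(k):
    """PSNR in dB when every 8-bit pixel is off by the maximum error 2^k - 1."""
    wmse = (2 ** k - 1) ** 2
    return 10 * math.log10(255 ** 2 / wmse)

cover = [120, 121, 119, 200, 201, 50]
message = [1, 0, 1, 1, 0, 1, 1]
stego = embed(cover, message, k=2)
recovered = extract(stego, len(message), k=2)
print("stego:", stego)
print("recovered == message:", recovered == message)
for k in range(1, 6):
    print("k =", k, "worst-case PSNR =", round(worst_case_psnr(k), 2), "dB")
```

Extraction needs only the stego pixels, the value of k and the message length, which matches the statement that the original cover-image is not required. The worst-case PSNR falls by several dB for each additional bit plane used.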

3.13 Optimal pixel adjustment process

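In this section, an optimal pixel adjustment process (OPAP) is proposed to enhance the image quality of the stego-image obtained by the simple LSB substitution method. The basic concept of the OPAP is based on the technique proposed.

Let pi, pi′ and pi″ be the corresponding pixel values of the ith pixel in the cover-image C, the stego-image C′ obtained by the simple LSB substitution method, and the refined stego-image obtained after the OPAP. Let $\delta_i = p'_i - p_i$ be the embedding error between pi and pi′. According to the embedding process of the simple LSB substitution method described in Section 3.12, pi′ is obtained by the direct replacement of the k LSBs of pi with the message bits, so $-2^{k} < \delta_i < 2^{k}$.

The value of δi can be further segmented into three intervals, such that

Interval 1: $2^{k-1} < \delta_i < 2^{k}$

Interval 2: $-2^{k-1} \le \delta_i \le 2^{k-1}$

Interval 3: $-2^{k} < \delta_i < -2^{k-1}$

Based on the three intervals, the OPAP, which modifies pi′ to form the stego-pixel pi″, can be described as follows:

Case 1 ($2^{k-1} < \delta_i < 2^{k}$): if $p'_i \ge 2^{k}$, then $p''_i = p'_i - 2^{k}$; otherwise $p''_i = p'_i$.

Case 2 ($-2^{k-1} \le \delta_i \le 2^{k-1}$): $p''_i = p'_i$.

Case 3 ($-2^{k} < \delta_i < -2^{k-1}$): if $p'_i < 256 - 2^{k}$, then $p''_i = p'_i + 2^{k}$; otherwise $p''_i = p'_i$.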

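Let $\delta''_i = p''_i - p_i$ be the embedding error between pi and pi″. δi″ can be computed as follows:

Case 1 ($2^{k-1} < \delta_i < 2^{k}$ and $p'_i \ge 2^{k}$): $\delta''_i = \delta_i - 2^{k}$, so $-2^{k-1} < \delta''_i < 0$.

Case 2 ($2^{k-1} < \delta_i < 2^{k}$ and $p'_i < 2^{k}$): $\delta''_i = \delta_i$, so $2^{k-1} < \delta''_i < 2^{k}$.

Case 3 ($-2^{k-1} \le \delta_i \le 2^{k-1}$): $\delta''_i = \delta_i$, so $-2^{k-1} \le \delta''_i \le 2^{k-1}$.

Case 4 ($-2^{k} < \delta_i < -2^{k-1}$ and $p'_i < 256 - 2^{k}$): $\delta''_i = \delta_i + 2^{k}$, so $0 < \delta''_i < 2^{k-1}$.

Case 5 ($-2^{k} < \delta_i < -2^{k-1}$ and $p'_i \ge 256 - 2^{k}$): $\delta''_i = \delta_i$, so $-2^{k} < \delta''_i < -2^{k-1}$.

From the above five cases, it can be seen that the absolute value of δi″ may fall into the range $2^{k-1} < |\delta''_i| < 2^{k}$ only when $p'_i < 2^{k}$ (case 2) or $p'_i \ge 256 - 2^{k}$ (case 5), while for other possible values of pi′, |δi″| falls into the range $0 \le |\delta''_i| \le 2^{k-1}$. Because pi′ is obtained by the direct replacement of the k LSBs of pi with the message bits, $p'_i < 2^{k}$ and $p'_i \ge 256 - 2^{k}$ are equivalent to $p_i < 2^{k}$ and $p_i \ge 256 - 2^{k}$, respectively. In general, for grayscale natural images, when k < 4, the number of pixels with a pixel value smaller than 2^k or greater than 256 − 2^k is insignificant. As a result, it can be estimated that the absolute embedding error between the pixels in the cover-image and in the stego-image obtained after the proposed OPAP is limited to $0 \le |\delta''_i| \le 2^{k-1}$.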

Let WMSE and WMSE* be the worst-case mean-square-error between the stego-image and the cover-image obtained by the simple LSB substitution method and by the proposed method with OPAP, respectively. WMSE* can be derived by

$\mathrm{WMSE}^{*} = (2^{k-1})^{2} = 4^{k-1}$

This reveals that WMSE* < 1/2 WMSE for k > 2, and WMSE* ≈ 1/4 WMSE when k = 4. This result also shows that the WMSE* obtained by the OPAP is better than that obtained by the optimal substitution method proposed, in which WMSE* = 1/2 WMSE.

Moreover, the optimal pixel adjustment process only requires checking the embedding error between the original cover-image and the stego-image obtained by the simple LSB substitution method to form the final stego-image.

The extra computational cost is very small compared with Wang's method, which requires huge computation for the genetic algorithm to find an optimal substitution matrix.
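The adjustment can be sketched in a few lines. This is a minimal illustration, assuming 8-bit pixels and the usual three-interval rule: shift the substituted pixel by 2^k towards the cover pixel whenever that reduces the error and keeps the value inside 0 to 255. The function names and sample values are hypothetical.

```python
def lsb_substitute(p, m, k):
    """Simple LSB substitution: overwrite the k LSBs of pixel p with m."""
    return p - (p % (1 << k)) + m

def opap(p, p_sub, k):
    """Move the substituted pixel p_sub by +/- 2^k when that brings it closer
    to the cover pixel p without leaving the range 0..255."""
    step = 1 << k          # 2^k: changing by this amount leaves the k LSBs intact
    half = 1 << (k - 1)    # 2^(k-1)
    delta = p_sub - p      # embedding error of simple substitution
    if half < delta < step and p_sub >= step:
        return p_sub - step            # error was large and positive
    if -step < delta < -half and p_sub < 256 - step:
        return p_sub + step            # error was large and negative
    return p_sub                       # small error, or adjustment would overflow

def embed_pixel(p, m, k):
    """Embed the k-bit value m into pixel p with substitution followed by OPAP."""
    return opap(p, lsb_substitute(p, m, k), k)

k = 4
for p, m in [(16, 15), (47, 0), (5, 15), (250, 1)]:
    simple = lsb_substitute(p, m, k)
    refined = embed_pixel(p, m, k)
    print(f"cover={p:3d} msg={m:2d} simple={simple:3d} (err {simple - p:+d}) "
          f"opap={refined:3d} (err {refined - p:+d}) "
          f"lsbs_kept={refined % (1 << k) == m}")
```

The last two sample pixels lie too close to 0 and 255 to be adjusted, which corresponds to the two exceptional cases in the derivation. For every other pixel the error after adjustment never exceeds 2^(k−1), and the embedded bits are unchanged because only multiples of 2^k are added or subtracted.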


CHAPTER 4

RESULT AND DISCUSSION

4.1 Advantages

1. High hiding capacity & Imperceptibility

2. Less Embedding Error

3. Overflow and underflow errors are avoided while embedding

4. High Robustness

4.2 Applications

1. Teleconference or video phone

- Very low delay (1/10 second is a standard)

2. Live Broadcast Video

- Modest delay is tolerable (seconds is normal)

- Error tolerance is needed

3. Video-in-a-can (DVD, Video on Demand)

- Random access to compressed data is desired

- Encoding can take a lot of time

4. Decoding must always run at least at the frame rate.

4.3 Result

Fig 4.1 Result Data hiding in Video

In general, the PSNR should be above 40 dB. We obtain a PSNR above 60 dB, so the quality of the stego-video is better than required.


CHAPTER 5

CONCLUSION

In this paper we proposed a novel data hiding scheme that hides data in the integer wavelet coefficients of an image. The system combines an adaptive data hiding technique and the optimum pixel adjustment algorithm to increase the hiding capacity of the system compared to other systems. The proposed system embeds secret data in a random order using a secret key known only to the sender and the receiver.

It is an adaptive system which embeds a different number of bits in each wavelet coefficient according to a hiding capacity function in order to maximize the hiding capacity without sacrificing the visual quality of the resulting stego-image. The proposed system also minimizes the difference between the original coefficient values and the modified values by using the optimum pixel adjustment algorithm.

The proposed scheme was classified into three cases of hiding capacity according to the different applications required by the user. Each case has a different visual quality of the stego-image. Any data type can be used as the secret message, since our experiments were made on a binary stream of data. There was no error in the recovered message (perfect recovery) at any hiding rate. From the experiments and the obtained results, the proposed system proved to achieve a high hiding capacity of up to 48% of the cover image size with reasonable image quality, and high security because of the random insertion of the secret message. On the other hand, the system suffers from low robustness against various attacks such as histogram equalization and JPEG compression.

The proposed system can be further developed to increase its robustness by using some sort of error correction code, which increases the probability of retrieving the message after attacks, and by investigating methods to increase the visual quality of the stego-image (PSNR) at the obtained hiding capacity.

In future work, we hope to add support for hiding data in all file formats. This would allow a much broader spectrum of uses, for example encoding .mp4, .mov and .3gp files.


REFERENCES

1. G. J. Simmons, "The prisoners' problem and the subliminal channel," in Proceedings of Crypto '83, pp. 51-67, 1984.

2. N. Wu and M. Hwang, "Data Hiding: Current Status and Key Issues," International Journal of Network Security, Vol. 4, No. 1, pp. 1-9, Jan. 2007.

3. W. Chen, "A Comparative Study of Information Hiding Schemes Using Amplitude, Frequency and Phase Embedding," PhD Thesis, National Cheng Kung University, Tainan, Taiwan, May 2003.

4. C. Chan and L. M. Cheng, "Hiding data in images by simple LSB substitution," Pattern Recognition, pp. 469-474, Mar. 2004.

5. Chang, C. Chang, P. S. Huang, and T. Tu, "A Novel Image Steganographic Method Using Tri-way Pixel-Value Differencing," Journal of Multimedia, Vol. 3, No. 2, June 2008.

6. H. H. Zayed, "A High-Hiding Capacity Technique for Hiding Data in Images Based on K-Bit LSB Substitution," The 30th International Conference on Artificial Intelligence Applications (ICAIA 2005), Cairo, Feb. 2005.

7. S. Katzenbeisser and F. A. P. Petitcolas, Information Hiding Techniques for Steganography and Digital Watermarking, Artech House, Boston, 2000.

8. H. K. Pan, Y. Y. Chen, and Y. C. Tseng, "A Secure Data Hiding Scheme for Two-Color Images," Proc. Fifth IEEE Symp. Computers and Comm., IEEE Press, Piscataway, N.J., 2000.

9. S. Lee, C. D. Yoo and T. Kalker, "Reversible image watermarking based on integer-to-integer wavelet transform," IEEE Transactions on Information Forensics and Security, Vol. 2, No. 3, pp. 321-330, Sep. 2007.

10. M. K. Ramani, E. V. Prasad and S. Varadarajan, "Steganography Using BPCS to the Integer Wavelet Transformed Image," IJCSNS International Journal of Computer Science and Network Security, Vol. 7, No. 7, July 2007.

11. A. R. Calderbank, I. Daubechies, W. Sweldens and B. Yeo, "Wavelet transforms that map integers to integers," Applied and Computational Harmonic Analysis, Vol. 5, No. 3, pp. 332-369, 1998.

12. G. Xuan, J. Zhu, Y. Q. Shi, Z. Ni, and W. Su, "Distortionless data hiding based on integer wavelet transform," IEE Electronics Letters, 38(25): 1646-1648, Dec. 2002.

S-ar putea să vă placă și

- Children of AlcoholicsDocument7 paginiChildren of AlcoholicschoppersureÎncă nu există evaluări

- Unclos DraftDocument19 paginiUnclos DraftchoppersureÎncă nu există evaluări
