DIGITAL TERRAIN MODELLING

ENGO 573

Digital Terrain Modelling

2

THE UNIVERSITY OF CALGARY

Geomatics Engineering Department

Digital Terrain Modelling

By

Naser El-Sheimy

Department of Geomatics Engineering

The University of Calgary

Tel: (403) 220 7587

E-mail: naser@ensu.ucalgary.ca

September 1999

Table of Contents

1. INTRODUCTION
1.1 Functional surfaces
1.2 Surface Continuity
1.3 Solid models
1.4 Surface smoothness

2. INTERPOLATION: WHAT AND WHY
2.1 Methods for Interpolation
2.2 Exact and Inexact Interpolators

3. GLOBAL FIT INTERPOLATION METHODS
3.1 Trend surface analysis
3.1.1 Problems with trend surfaces
3.1.2 Trend-Surface Analysis Mathematics:
3.1.3 Analysis of Variance (ANOVAR) Mathematics for Trend-Surface Regressions:
3.1.4 Advantages/Disadvantages and When to use TSA:

4. LOCAL DETERMINISTIC METHODS FOR INTERPOLATION
4.1 TIN interpolators
4.1.1 LINEAR interpolation
4.1.2 2nd Exact Fit Surface
4.1.3 Quintic interpolation
4.2 Grid Interpolation
4.2.1 Nearest Neighbor
4.2.2 Linear Interpolation
4.2.3 Bilinear interpolation
4.2.4 Cubic convolution
4.2.5 Inverse-Distance Mathematics:

5. GRIDDING
5.1 Moving averaging gridding
5.2 Linear projection gridding

6. SEARCH ALGORITHMS

7. GRID RESAMPLING

N A S E R E L - S H E I M Y , 1 9 9 8

Surface Representation from

Point Data

This chapter explains methods of creating discretized, continuous surfaces for mapping the variation of elevation over space. Emphasis is given to gridding, spatial sampling strategies, and methods of spatial prediction, including global methods of classification and local deterministic interpolation methods.

1. Introduction

There is no ideal DEM, because DEM generation techniques cannot capture the full complexity of a surface; there is always a sampling problem and a representation problem. Different sampling schemes and representations may lead to very different results, both in the DEM itself and especially in its derivatives. The accuracy of the individual elevations does not necessarily ensure the accuracy of derivatives such as slope maps.

There are two general ways to represent a surface: by a mathematical function that expresses elevation as a function of the horizontal coordinates, and by an image of the surface, explicitly giving the elevation at some set of points, with no functional dependence on the horizontal coordinates.

Before we go into details, we will first introduce some surface characteristics based on ARC/INFO definitions:

1.1 Functional surfaces

Functional surfaces have the characteristic that they store a single Z value for any given (X, Y) location, as opposed to the multiple Z values stored for discontinuous surfaces. Probably the most common example of a functional surface is a terrestrial surface representing the Earth's surface. Other examples of terrestrial functional surfaces include bathymetric data and water table depths. Functional surfaces can also be used to represent statistical surfaces describing climatic and demographic data, concentration of resources, and other biologic data. Functional surfaces can also be used to represent mathematical surfaces based on arithmetic expressions such as Z = a + bX + cY. Functional surfaces are often referred to as 2.5-dimensional surfaces.

1.2 Surface Continuity

Functional surfaces are considered to be continuous. That is, if you

approach a given x,y location on a functional surface from any direction, you

will get the same z value at the location. This can be contrasted with a

discontinuous surface, where different z values could be obtained depending

on the approach direction. An example of a discontinuous surface is a

vertical fault across the surface of the Earth.

Figure 1: Surface Continuity

A location at the top of a fault has one elevation, but immediately below this

point at the bottom of the fault you can observe another elevation. As you

can see, a model capable of storing a discontinuous surface must be able to

store more than one Z value for a given (X, Y) location.

1.3 Solid models

Functional surface models can be contrasted with solid models, which are true 3D models capable of storing multiple Z values for any given (X, Y) location. Solid models are common in Computer Aided Design (CAD), engineering, and other applications representing solid objects.

Examples of objects suited to solid modeling are machine parts, highway structures, buildings, and other objects placed on the Earth's surface. In some cases it is possible to represent three-dimensional objects such as faults and buildings on a functional surface by slightly offsetting the duplicate (X, Y) coordinates.


1.4 Surface smoothness

Smoothness can be described in terms of the perpendicular to the slope of the surface. Mathematically, this vector is referred to as the normal to the first derivative. In addition to being continuous, a smooth surface has the additional property that, regardless of the direction from which you approach a given point on the surface, the normal is the same.

Two surfaces with different levels of smoothness are shown below. The first

surface, represented by flat planar facets, is not smooth. The normal to the

surface is constant throughout the extent of an individual facet. However,

as the normal crosses an edge separating two adjacent facets, the normal

abruptly changes.

Figure 2: Surface normals change abruptly when crossing facets.

Figure 3: Surface normals on a smooth surface do not change abruptly.


Contrast the faceted surface (Figure 2) with the smooth surface (Figure 3). On the smooth surface, the normal varies continuously across the surface and does not change abruptly as it crosses an edge in the surface.

Terrain surfaces vary in smoothness. Geologically young terrain typically has sharp ridges and valleys, in contrast to older terrain, which has been smoothed by prolonged exposure to erosional forces. Statistical surfaces such as a rainfall or air temperature surface are generally smooth.

2. Interpolation: What and Why

The word interpolation comes from the Latin words "inter", meaning between, and "polire", meaning to refine.

Interpolation = Refining by putting in between.

Interpolation is the process of predicting the value of attributes at unsampled sites from measurements made at point locations within the same area or region. Predicting the value of an attribute at sites outside the area covered by existing observations is called extrapolation.

Interpolation is used to convert data from point observations to continuous fields so that the spatial patterns sampled by these measurements can be compared with the spatial patterns of other spatial entities. Interpolation is necessary when (Burrough and McDonnell, 1998):

The discretized surface has a different level of resolution, cell size or orientation from that required. For example, the conversion of scanned data (satellite images, scanned aerial photographs) from one gridded tessellation with a given size and/or orientation to another. This process is known generally as convolution.

A continuous surface is represented by a data model that is different from that required. For example, the transformation of a continuous surface from one kind of tessellation to another (e.g. irregular data to grid).

The data we have do not cover the domain of interest completely. For example, the conversion of discretized data to continuous surfaces.

To construct the DTM, we need an estimate of the elevation at each point of the grid. To do this, we first need to know whether the grid point coincides with a point where sampling data are available, or lies between the sampling points. In the first case, the elevation can be taken directly from the database (or original observation), while in the second case, some method for estimating the elevation needs to be used. One such method is interpolation.

In digital terrain modelling, interpolation is mainly used for the following

operations:

Computation of elevation (Z) at single point locations;

Computation of elevation (Z) of a rectangular grid from original

sampling points (so-called gridding);

Computation of locations (X,Y) of points along contours (in contour

interpolation); and

Densification or coarsening of rectangular grids (so-called

resampling).

Abundant literature exists on methods for the interpolation of DTMs; some of these interpolation algorithms will be discussed in the next sections. Some characteristics and peculiarities of DTM interpolation from topographic samples can be listed as follows:

There is no 'best' interpolation algorithm that is clearly superior to all

others and appropriate for all applications;

The quality of the resulting DTM is determined by the distribution and

accuracy of the original data points, and the adequacy of the

underlying interpolation model (i.e. a hypothesis about the behaviour

of the terrain surface);

The most important criteria for selecting a DTM interpolation method

are the degree to which (1) structural features can be taken into

account, and (2) the interpolation function can be adapted to the

varying terrain character;

Suitable interpolation algorithms must adapt to the character of data

elements (type, accuracy, importance, etc.) as well as the context (i.e.

distribution of data elements).

Other criteria that may influence the selection of a particular method

are the degree of accuracy desired and the computational effort

involved.


2.1 Methods for Interpolation

The two general classes of techniques for estimating the elevation of points

on a surface from scattered and regular observations are methods called

"global fit" and "local fit." As the name suggests, global-fit procedures

calculate a single function describing a surface that covers the entire map

area. The function is evaluated to obtain values at the grid nodes. In

contrast, local-fit procedures estimate the surface at successive nodes in the

grid using only a selection of the nearest data points.

2.2 Exact and Inexact Interpolators

An interpolation method that estimates, at each sample point, an elevation identical to the measured value is called an exact interpolator. This is the ideal situation, because it is only at the data points that we have direct knowledge of the elevation in question. All other methods are inexact interpolators. Statistics of the differences (absolute and squared) between measured and estimated values at the data points are often used as an indicator of the quality of an inexact interpolator.

Terminology: throughout this course we shall use the following:

$Z_i(X_i, Y_i)$: the measured elevation at point $(X_i, Y_i)$

$\hat{Z}_i(X_i, Y_i)$: the estimated elevation at point $(X_i, Y_i)$
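The quality statistics mentioned for inexact interpolators can be sketched as follows. This is a minimal illustration only: the function name `residual_stats` and the sample elevations are assumptions introduced here, not part of the course notes. It computes the mean absolute difference and the root-mean-square difference between measured and estimated elevations at the data points.

```python
import math


def residual_stats(measured, estimated):
    """Return (mean absolute difference, root-mean-square difference).

    measured  -- measured elevations Z_i at the data points
    estimated -- estimated elevations Z^_i at the same points
    """
    if len(measured) != len(estimated) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [z - z_hat for z, z_hat in zip(measured, estimated)]
    mean_abs = sum(abs(d) for d in diffs) / len(diffs)
    rms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mean_abs, rms


# Hypothetical sample values, for illustration only.
mean_abs, rms = residual_stats([10.0, 12.0, 14.0], [11.0, 12.0, 12.0])
print(mean_abs, rms)
```

For an exact interpolator both statistics are zero, since the estimates reproduce the measurements at every data point.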

3. Global Fit Interpolation Methods

3.1 Trend surface analysis

Trend surface analysis is the most widely used global surface-fitting procedure. The mapped data are approximated by a polynomial expansion of the geographic coordinates of the control points, and the coefficients of the polynomial function are found by the method of least squares, ensuring that the sum of the squared deviations from the trend surface is a minimum. Each original observation is considered to be the sum of a deterministic polynomial function of the geographic coordinates plus a random error.

The polynomial can be expanded to any desired degree, although there are

computational limits because of rounding error. The unknown coefficients

are found by solving a set of simultaneous linear equations which include

the sums of powers and cross products of the X, Y, and Z values. Once the

coefficients have been estimated, the polynomial function can be evaluated

at any point within the map area. It is a simple matter to create a grid

matrix of values by substituting the coordinates of the grid nodes into the

polynomial and calculating an estimate of the surface for each node.

Because of the least-squares fitting procedure, no other polynomial equation

of the same degree can provide a better approximation of the data.

Figure 4: Principle of Trend Surface, A surface = trend + residuals

3.1.1 Problems with trend surfaces

There are a number of disadvantages to a global fit procedure. The most obvious of these is the extreme simplicity in form of a polynomial surface as compared to most natural surfaces. A first-degree polynomial trend surface is a plane. A second-degree surface may have only one maximum or minimum. In general, the number of possible inflections in a polynomial surface is one less than the number of coefficients in the trend surface equation. As a consequence, a trend surface generally cannot pass through the data points, but rather has the characteristics of an average.

Polynomial trend surfaces also have an unfortunate tendency to accelerate

without limit to higher or lower values in areas where there are no control

points, such as along the edges of maps. All surface estimation procedures

have difficulty extrapolating beyond the area of data control, but trend

surfaces seem especially prone to the generation of seriously exaggerated

estimates.

Computational difficulties may be encountered if a very high degree

polynomial trend surface is fitted. This requires the solution of a large

number of simultaneous equations whose elements may consist of

extremely large numbers. The matrix solution may become unstable, or

rounding errors may result in erroneous trend surface coefficients.

Nevertheless, trend surfaces are appropriate for estimating a grid matrix in

certain circumstances. If the raw data are statistical in nature, with perhaps

more than one value at an observation point, trend surfaces provide

statistically optimal estimates of a linear model that describes their spatial

distribution.

3.1.2 Trend-Surface Analysis Mathematics:

Trend-surface analysis differs from local estimation techniques, which try to estimate "local" features; trend-surface analysis is a mathematical method used to separate "regional" from "local" fluctuations (Davis, 1973). What is defined as "local" and "regional" is often subjective and a function of scale, and the regional trend may vary with scale. The use of trend analysis allows observed data points to be divided into these two components. A trend can be defined by three components (Davis, 1973):

1. It is based on geographic coordinates; i.e. the distribution of material properties can be considered to be a function of location.

2. The trend is a linear function. That is, it has the form:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y + \dots$$

where Z(X, Y) is the data value at the described location, the a's are coefficients, and X and Y are combinations of geographic location.

3. The optimum trend, or linear function, must minimize the squared deviations from the trend.

For the purpose of illustration, consider the set of points (X, Z) in Figure 5. These may be separated into "regional" and "local" components in a variety of ways: 1st order (Figure 5.b), 2nd order (Figure 5.c), or 3rd order (Figure 5.d).

Figure 5: Example of trend surface in 2D (After Davis, 1986)

Trend-surface analysis is basically a linear regression technique, but it is applied in two and three dimensions instead of just fitting a line. A first-order linear trend surface equation has the form:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y$$

That is, an observation with a value Z can be described as a linear function of a constant value ($a_0$) related to the data set mean, and east-west ($a_1$) and north-south ($a_2$) components (Davis, 1986). To solve for these three unknowns, three normal equations are available:

[Figure 5 panels: A. Original Surface; B. 1st Order Trend; C. 2nd Order Trend; D. 3rd Order Trend]

$$\sum_{i=1}^{n} Z_i = n\,a_0 + a_1 \sum_{i=1}^{n} X_i + a_2 \sum_{i=1}^{n} Y_i$$

$$\sum_{i=1}^{n} X_i Z_i = a_0 \sum_{i=1}^{n} X_i + a_1 \sum_{i=1}^{n} X_i^2 + a_2 \sum_{i=1}^{n} X_i Y_i$$

$$\sum_{i=1}^{n} Y_i Z_i = a_0 \sum_{i=1}^{n} Y_i + a_1 \sum_{i=1}^{n} X_i Y_i + a_2 \sum_{i=1}^{n} Y_i^2$$

Where n is the number of data points. Solving these equations

simultaneously will yield a "best-fit", defined by least-squares regression, for

a two-dimensional, first-order (a plane) trend surface. This can be rewritten

in matrix format:

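$$\begin{bmatrix} n & \sum_{i=1}^{n} X_i & \sum_{i=1}^{n} Y_i \\ \sum_{i=1}^{n} X_i & \sum_{i=1}^{n} X_i^2 & \sum_{i=1}^{n} X_i Y_i \\ \sum_{i=1}^{n} Y_i & \sum_{i=1}^{n} X_i Y_i & \sum_{i=1}^{n} Y_i^2 \end{bmatrix} \begin{bmatrix} a_0 \\ a_1 \\ a_2 \end{bmatrix} = \begin{bmatrix} \sum_{i=1}^{n} Z_i \\ \sum_{i=1}^{n} X_i Z_i \\ \sum_{i=1}^{n} Y_i Z_i \end{bmatrix}$$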
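The first-order fit can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the course's own implementation: the function names `solve3` and `fit_first_order_trend` and the sample points are hypothetical, and the 3x3 normal-equation system is solved with plain Gaussian elimination so that only the standard library is needed.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("normal equations are singular (collinear points?)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def fit_first_order_trend(points):
    """Return [a0, a1, a2] of Z = a0 + a1*X + a2*Y from (X, Y, Z) tuples."""
    n = len(points)
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    A = [[n, sx, sy], [sx, sxx, sxy], [sy, sxy, syy]]  # sums of powers and cross products
    b = [sz, sxz, syz]
    return solve3(A, b)


# Hypothetical control points lying exactly on Z = 2 + 0.5 X - 1.5 Y.
pts = [(x, y, 2.0 + 0.5 * x - 1.5 * y)
       for x, y in [(0, 0), (1, 0), (0, 1), (1, 1), (2, 3)]]
print(fit_first_order_trend(pts))
```

Because the sample points lie exactly on a plane, the fitted coefficients reproduce that plane up to rounding; with real data the fit returns the least-squares plane instead.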

For second-order polynomial trend surfaces, the general equation is:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y + a_3 X^2 + a_4 XY + a_5 Y^2$$

Third-order polynomial trend surfaces:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y + a_3 X^2 + a_4 XY + a_5 Y^2 + a_6 X^3 + a_7 X^2 Y + a_8 X Y^2 + a_9 Y^3$$

Fourth-order, two-dimensional surfaces:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y + a_3 X^2 + a_4 XY + a_5 Y^2 + a_6 X^3 + a_7 X^2 Y + a_8 X Y^2 + a_9 Y^3 + a_{10} X^4 + a_{11} X^3 Y + a_{12} X^2 Y^2 + a_{13} X Y^3 + a_{14} Y^4$$
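The pattern in these polynomials can be generated programmatically. The sketch below is an illustration with hypothetical function names (`trend_terms`, `evaluate_trend`); it lists the terms in the same order as the coefficients above (for each degree d, the terms X^d, X^(d-1) Y, ..., Y^d) and evaluates a trend surface for a given coefficient list.

```python
def trend_terms(x, y, order):
    """Return the polynomial terms of a trend surface of the given order at (x, y).

    Terms are ordered as in the equations: 1, X, Y, X^2, XY, Y^2, X^3, ...
    """
    terms = []
    for degree in range(order + 1):
        for k in range(degree + 1):
            terms.append(x ** (degree - k) * y ** k)
    return terms


def evaluate_trend(coeffs, x, y, order):
    """Evaluate Z(x, y) = sum of a_k * term_k for coefficients a_0, a_1, ..."""
    terms = trend_terms(x, y, order)
    if len(coeffs) != len(terms):
        raise ValueError("expected %d coefficients" % len(terms))
    return sum(a * t for a, t in zip(coeffs, terms))


# Number of coefficients for orders 1 to 4: (order + 1)(order + 2) / 2.
print([len(trend_terms(0.0, 0.0, k)) for k in range(1, 5)])
```

The coefficient count grows as (order + 1)(order + 2)/2, which is one reason the normal-equation system becomes large and poorly conditioned for high-order surfaces.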

3.1.3 Analysis of Variance (ANOVAR) Mathematics for Trend-Surface Regressions:

Having obtained the coefficients of the trend surface, we can now calculate the expected or trend values, $\hat{Z}_i(X_i, Y_i)$, at any location (X, Y). A trend surface of a given order can be fit to any set of data, but that does not mean that it is a meaningful or worthwhile model. Each of the terms in the trend-surface polynomial equation has an associated error, and the trend surface itself is only used to estimate the "regional" trend. The "local" fluctuations can be considered to be errors in the trend-surface regression estimate. To evaluate the "worthiness" of the trend surface, several terms must be calculated, in particular the total variation as the sum of squares of the dependent variable, Z:

Total Sum of Squares:

$$SS_T = \sum Z^2 - \frac{\left(\sum Z\right)^2}{n}$$

For the estimated values, $\hat{Z}_i(X_i, Y_i)$, we can calculate the sum of squares due to the regression (the trend):

Regression Sum of Squares:

$$SS_R = \sum \hat{Z}^2 - \frac{\left(\sum \hat{Z}\right)^2}{n}$$

The difference between these gives the sum of squares due to residuals or deviations from the trend:

Error Sum of Squares:

$$SS_D = SS_T - SS_R = \sum \left(Z_i - \hat{Z}_i\right)^2$$

Now, the percentage goodness-of-fit of the trend is given by:

$$R^2 = \frac{SS_R}{SS_T} \times 100\%$$

and the multiple correlation coefficient is

$$R = \sqrt{R^2}$$

The significance of a trend surface may be tested by performing an Analysis of Variance (ANOVA), which is the process of separating the total variation of a set of observations into components associated with defined sources of variation. This has been done by dividing the total variation of Z into two components, the trend (regression) and the residuals (deviations). The degrees of freedom associated with the total variation in a trend analysis is (n-1), where n is the number of observations. The degrees of freedom associated with the regression is m, where m is the number of coefficients in the polynomial fit to the data, not including the constant term ($a_0$). The degrees of freedom for the deviations equal the difference between the two, i.e. (n-m-1).

The mean squares are found by dividing the various sums of squares by the appropriate degrees of freedom:

Variance of $SS_R$ = variance of the regression function about its mean:

$$MS_R = \frac{SS_R}{m}$$

Variance of $SS_D$ = variance about the regression function:

$$MS_D = \frac{SS_D}{n - m - 1}$$

Variance of $SS_T$ = variance of the total variation:

$$MS_T = \frac{SS_T}{n - 1}$$

A test statistic used to define the "worth" of the regression is:

$$F_{statistic} = \frac{MS_R}{MS_D}$$

In a general test of a trend-surface equation, the ratio of interest is that between the variance due to regression (trend) and the variance due to residuals (deviations). If the regression is significant, the deviation about the regression will be small compared to the variance of the regression itself.

The $F_{statistic}$ is used with the F-test to determine whether the group of trend-surface coefficients is significantly different from zero, i.e. whether the regression effect is significantly different from the random effect of the data. In formal statistical terms, the F-test for significance of fit tests the hypothesis ($H_0$) against the alternative ($H_1$):

$$H_0: a_1 = a_2 = a_3 = \dots = a_m = 0$$

$$H_1: a_1, a_2, a_3, \dots, a_m \neq 0$$

The hypothesis tested is that the partial regression coefficients equal zero, i.e. there is no regression. If the computed F value exceeds the table value of F (the critical value of F for $\nu_1 = m$ and $\nu_2 = n - m - 1$ degrees of freedom at a chosen level of significance, e.g. 5% ($\alpha = 0.05$)), the null hypothesis is rejected and the alternative is accepted, i.e. the coefficients as a group are significant and the regression is worthwhile.
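The ANOVA quantities above can be collected in one small routine. The following is a hedged sketch: the function name `trend_anova`, the dictionary keys, and the sample values are assumptions made for illustration. It takes the observed values, the least-squares trend values, and the number of coefficients m (excluding $a_0$); comparing F with the tabulated critical value is left to the reader.

```python
def trend_anova(z, z_hat, m):
    """Sums of squares, mean squares, goodness of fit (%) and F for a trend fit.

    z     -- observed values Z_i
    z_hat -- least-squares trend values Z^_i at the same points
    m     -- number of polynomial coefficients, not counting a0
    """
    n = len(z)
    if n != len(z_hat):
        raise ValueError("z and z_hat must have the same length")
    if n - m - 1 <= 0:
        raise ValueError("need n > m + 1 observations")
    ss_t = sum(v * v for v in z) - sum(z) ** 2 / n          # total
    ss_r = sum(v * v for v in z_hat) - sum(z_hat) ** 2 / n  # regression
    ss_d = ss_t - ss_r                                      # deviations
    if ss_t == 0:
        raise ValueError("observations show no variation")
    ms_r = ss_r / m
    ms_d = ss_d / (n - m - 1)
    ms_t = ss_t / (n - 1)
    f_stat = ms_r / ms_d if ms_d > 0 else float("inf")
    return {"SS_T": ss_t, "SS_R": ss_r, "SS_D": ss_d,
            "MS_R": ms_r, "MS_D": ms_d, "MS_T": ms_t,
            "R2_percent": 100.0 * ss_r / ss_t, "F": f_stat}


# Hypothetical example: four observations at the corners of a unit square,
# with z_hat taken from the least-squares plane through them (m = 2).
result = trend_anova([0.0, 1.0, 1.0, 3.0], [-0.25, 1.25, 1.25, 2.75], 2)
print(result)
```

Note that the identity $SS_D = SS_T - SS_R$ relied on here holds only when the trend values come from a least-squares fit that includes the constant term.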

In addition to the problems of getting a "good" fit for the trend surface, there are a number of pitfalls to the technique (Davis, 1986):

1. There must be adequate data control. The number of observations should be much greater than the number of coefficients.

2. The spacing of the observation points is important. It can affect the size and resolution of features seen. Clustering can cause problems or bias. The distribution can affect the shape of the surface.

3. There are problems near boundaries. The surface can "blow up" in the corners. For this reason it is important to have a buffer around the area of concern. Near the boundaries, the problem changes from one of interpolating between data to one of extrapolating beyond the data observations.

3.1.4 Advantages/Disadvantages and When to use TSA:

Advantages:

Unique surface generated

Easy to program

Same surface estimated regardless of the orientation of the geographic reference

Calculation time for low-order surfaces is low

Disadvantages:

Statistical assumptions of the model are rarely met in practice

Local anomalies cannot be seen on contour maps of low-order polynomials (but can be seen on contour maps of residuals)

The surfaces are highly susceptible to edge effects

It is difficult to ascribe a physical meaning to complex higher-order polynomials

When to use TSA:

As a pre-processor to remove regional trend prior to kriging or IDW

Generating data in sparse data areas

4. Local Deterministic Methods for Interpolation

The global methods presented so far have imposed external, global spatial structures on the interpolation. Local variations have been considered as random, unstructured noise. Intuitively, this is not sensible, as one expects the Z values of the interpolated points to be similar to the Z values measured close by (Burrough and McDonnell, 1998). Local methods of interpolation are those methods which make use of the information from the nearest data points. This involves the following:

1. Defining a search area or neighborhood around the point to be

interpolated

2. Finding the data points within this neighborhood

3. Choosing a mathematical model to represent the variation over this

limited number of points

4. Evaluating it for the point of interest (most probably regular grid)

The following issues need to be addressed:

The kind of interpolation function to use

The size, shape, and orientation of the neighborhood

The number of data points

The distribution of the data points: regular grid, irregularly distributed

or TIN

The possible incorporation of external information on trends or

different domains.

We will examine all these points in terms of different interpolation functions.

These functions will be subdivided as follows:

1. Interpolation from TIN data

   Linear interpolation

   2nd exact fitted surface interpolation

   Quintic interpolation

2. Interpolation from grid/irregular data

   Nearest neighbor assignment

   Linear interpolation

   Bilinear interpolation

   Cubic convolution

   Inverse distance weighting (IDW)

   Optimal functions using geostatistics (Kriging)

All these methods smooth the data to some degree in that they compute

some kind of average value within a window. All the methods, except Kriging

which will be discussed in Chapter 3, are examined below.


4.1 TIN interpolators

TIN interpolators make use of the fact that the data are already stored in a TIN structure (see Chapter 5). Three interpolators are used for TIN surface models: linear, 2nd exact fit surface, and bivariate quintic. All three interpolation methods honor the Z values at the triangle nodes (i.e., all three surfaces pass through all of the data points used to create the TIN). If you were to sample a linear, 2nd exact fitted, or quintic surface at exactly the same (X, Y) location as a point used to construct the TIN, the interpolated Z value would be the same as the input value (ARC/INFO, 1998).

4.1.1 LINEAR interpolation

The linear interpolation method considers the surface as a continuous faceted surface formed by triangles. The normal, or perpendicular to the slope of the surface, is constant throughout the extent of each triangle facet. However, as you cross over an edge separating two adjacent triangles, the normal changes abruptly to that of the next triangle.

In linear interpolation, the surface value to be interpolated is calculated

based solely on the Z values for the nodes of the triangle within which the

point lies. If breaklines are present in the TIN, they do not influence

interpolation because linear interpolation is not affected by the surface

behavior of adjacent triangles.

The surface value at a given point is obtained by intersecting a vertical line with the plane defined by the three nodes of the triangle. The generalized equation for linear interpolation of a point (X, Y, Z) in a triangle facet is:

$$Ax + By + Cz + D = 0$$

where A, B, C, and D are constants determined by the coordinates of the triangle's three nodes.

Equation of the plane using vector algebra:

Given: the position vectors $\mathbf{a}, \mathbf{b}, \mathbf{c}$ of the three non-collinear nodes (A, B, C) of a TIN triangle.

Required: the vector equation of the plane passing through the three nodes.

This can be obtained simply from the mixed product of the vectors $(\mathbf{b}-\mathbf{a})$, $(\mathbf{c}-\mathbf{a})$ and $(\mathbf{p}-\mathbf{a})$, that is:

$$(\mathbf{b}-\mathbf{a}) \times (\mathbf{c}-\mathbf{a}) \cdot (\mathbf{p}-\mathbf{a}) = 0$$

where $\mathbf{p}$ is the position vector of a point P(X, Y, Z) which belongs to the plane ABC. The graphical presentation of this formula is shown in Figure 6.

Figure 6: Vector Representation of a Plane Through Three Points

Note:

$$(\mathbf{a} \times \mathbf{b}) \cdot \mathbf{c} = \begin{vmatrix} a_x & a_y & a_z \\ b_x & b_y & b_z \\ c_x & c_y & c_z \end{vmatrix}$$

Mathematical Formulation

General formulation:

$$Z(X, Y) = a_0 + a_1 X + a_2 Y$$

Computational Procedures:

1. Search for the triangle the intermediate point (X, Y) falls into, i.e. get $(X_1, Y_1, Z_1)$, $(X_2, Y_2, Z_2)$, and $(X_3, Y_3, Z_3)$.

2. Solve the set of equations $F = A\,x$, where

$$F = \begin{bmatrix} Z_1 \\ Z_2 \\ Z_3 \end{bmatrix}, \quad A = \begin{bmatrix} 1 & X_1 & Y_1 \\ 1 & X_2 & Y_2 \\ 1 & X_3 & Y_3 \end{bmatrix}, \quad x = \begin{bmatrix} a_0 \\ a_1 \\ a_2 \end{bmatrix}$$

3. Estimate (Z) for the location (X, Y):

$$Z(X, Y) = \begin{bmatrix} 1 & X & Y \end{bmatrix} \begin{bmatrix} a_0 \\ a_1 \\ a_2 \end{bmatrix}$$

Linear interpolation produces a continuous but not smooth surface.
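Steps 2 and 3 of the procedure can be sketched as follows. This is an illustration only: the function names `plane_coefficients` and `interpolate_linear` and the sample triangle are hypothetical, the enclosing triangle (step 1) is assumed to have been found already, and the 3x3 system F = A x is solved in closed form with Cramer's rule.

```python
def plane_coefficients(p1, p2, p3):
    """Solve F = A x for (a0, a1, a2) given three (X, Y, Z) triangle nodes."""
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = p1, p2, p3
    # Determinant of A = [[1, X1, Y1], [1, X2, Y2], [1, X3, Y3]];
    # it is zero when the three nodes are collinear in plan view.
    det = x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)
    if abs(det) < 1e-12:
        raise ValueError("triangle nodes are collinear")
    a1 = (z1 * (y2 - y3) + z2 * (y3 - y1) + z3 * (y1 - y2)) / det
    a2 = (x1 * (z2 - z3) + x2 * (z3 - z1) + x3 * (z1 - z2)) / det
    a0 = z1 - a1 * x1 - a2 * y1
    return a0, a1, a2


def interpolate_linear(p1, p2, p3, x, y):
    """Estimate Z at (x, y) on the plane through the three triangle nodes."""
    a0, a1, a2 = plane_coefficients(p1, p2, p3)
    return a0 + a1 * x + a2 * y


# Hypothetical triangle, for illustration only.
node_a = (0.0, 0.0, 10.0)
node_b = (10.0, 0.0, 20.0)
node_c = (0.0, 10.0, 30.0)
print(interpolate_linear(node_a, node_b, node_c, 2.0, 3.0))
```

Evaluating the function at a node returns that node's own Z value, which is the exact-interpolator property noted for all three TIN methods.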

4.1.2 2nd Exact Fit Surface

The assumption that the triangles represent tilted flat plates obviously results in a very crude approximation. A better approximation can be achieved using curved or bent triangle plates, particularly if these can be made to join smoothly across the edges of the triangles. Several procedures have been used for this purpose (Davis, 1986). One of the earliest involved finding the three neighbors closest to the faces of the triangle, then fitting a second-degree polynomial trend surface to these and to the points at the vertices of the triangle. A 2nd degree trend surface is defined by six coefficients. This means that the fitted surface passes exactly through all six points. The equation can then be used to estimate a series of locations having a specified elevation. Contour lines derived from this method will be curved rather than straight, as they are in the linear interpolation method.

Even though adjacent plates are fitted using common points, their trend

surfaces will not coincide exactly along lines of overlap. This means there

may be abrupt changes in direction crossing from one triangular plate to

another.
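The six-point exact fit described above amounts to solving six linear equations for six coefficients. The sketch below is one way to do that, under stated assumptions: the term ordering Z = c0 + c1 X + c2 Y + c3 X^2 + c4 XY + c5 Y^2 and the function names are chosen here for illustration, and the choice of the three extra neighbors is left to the caller.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: the six points lie on one conic")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def quadratic_terms(x, y):
    """Basis of the 2nd degree trend surface (ordering is an assumption)."""
    return [1.0, x, y, x * x, x * y, y * y]


def fit_quadratic_surface(points):
    """Fit the surface exactly through six (X, Y, Z) points."""
    if len(points) != 6:
        raise ValueError("exactly six points are required")
    a = [quadratic_terms(x, y) for x, y, _ in points]
    b = [z for _, _, z in points]
    return solve_linear_system(a, b)


def evaluate_quadratic_surface(coeffs, x, y):
    return sum(c * t for c, t in zip(coeffs, quadratic_terms(x, y)))
```

With six coefficients and six points there are no degrees of freedom left, so the surface reproduces every input elevation; the fit fails only when the six points happen to lie on a common conic in the XY plane.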

4.1.3 Quintic interpolation

Similar to the linear and 2nd degree exact fit surface interpolation methods,

quintic interpolation considers the surface model to be continuous. In

addition, quintic interpolation also considers the surface model to be

smooth, that is, the normal to the surface varies continuously within each

triangle. In addition, there are no abrupt changes in the normal as it crosses

an edge between triangles. This smooth characteristic is accomplished by

considering the geometry of the neighboring triangles when interpolating

the z value of a point in a TIN triangle.

Quintic interpolation employs a bivariate 5th degree polynomial in x and y. The generalized equation for quintic interpolation of the z value of a point on a surface is (Akima, 1978):

$$Z(X, Y) = \sum_{i=0}^{5} \sum_{j=0}^{5-i} a_{ij} X^i Y^j$$

There are 21 coefficients to be determined. The values of the function and its first-order and second-order partial derivatives are given at each node of the triangle, yielding 18 conditions. The three remaining conditions are obtained by requiring the surface to be both smooth and continuous in the direction perpendicular to the three triangle edges.
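The counts quoted above can be checked by hand. The tally below assumes the triangular index range used in Akima's formulation (for each power i of X, the power j of Y runs from 0 to 5 - i), and that each node supplies one function value, two first-order partial derivatives, and three second-order partial derivatives:

$$\sum_{i=0}^{5} (6 - i) = 6 + 5 + 4 + 3 + 2 + 1 = 21, \qquad 3 \times (1 + 2 + 3) = 18, \qquad 21 - 18 = 3$$

The three leftover unknowns match the three triangle edges, one condition per edge.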

Note:

The derivative of a polynomial of degree n is a polynomial of degree n-1. The 2nd derivative of a polynomial of degree n is a polynomial of degree n-2, and so on.

4.2 Grid Interpolation

4.2.1 Nearest Neighbor

Nearest neighbor assignment assigns the value of the nearest mesh point in

the input lattice or grid to the output mesh point or grid cell. No actual

interpolation is performed based on values of neighboring mesh points. For

example, a value 2 in the input grid will always be the value 2; it will never

be 2.2 or 2.3. Nearest neighbor assignment is the preferred resampling

technique for categorical data since it does not alter the value of the input

cells. It is not usually used for surface interpolation.
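Nearest neighbor assignment reduces to rounding a location to the closest mesh point and copying its value. The sketch below assumes a regular grid stored as a list of rows, with the mesh point `grid[row][col]` located at `(x0 + col * cell, y0 + row * cell)`; that layout and the function name are illustrative choices, not something fixed by the text.

```python
import math


def nearest_neighbor(grid, x0, y0, cell, x, y):
    """Return the value of the mesh point closest to (x, y)."""
    # Round to the nearest column/row index (halves round up).
    col = math.floor((x - x0) / cell + 0.5)
    row = math.floor((y - y0) / cell + 0.5)
    # Clamp so that locations outside the grid use the edge mesh points.
    col = min(max(col, 0), len(grid[0]) - 1)
    row = min(max(row, 0), len(grid) - 1)
    return grid[row][col]


# Categorical codes (made-up land-cover classes) on a 2 x 2 lattice.
land_cover = [[1, 2],
              [3, 4]]
print(nearest_neighbor(land_cover, 0.0, 0.0, 10.0, 6.0, 2.0))  # 2
```

Because the output is always one of the input values, class codes are never blended into meaningless intermediate numbers.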

4.2.2 Linear Interpolation

In the 1-D case, the values at two adjacent reference points are connected by a straight line, which is used for interpolation. In the 2-D case, three reference points are used to define a plane (as in TIN linear interpolation), which serves as the interpolation function on the respective grid. The whole interpolation domain is subdivided into triangles with corners at the reference points.

Computational Procedures, See Figure 7:

1. Search for the grid cell into which the intermediate point (X, Y) falls, i.e. get $(X_1, Y_1, Z_1)$, $(X_2, Y_2, Z_2)$, $(X_3, Y_3, Z_3)$, and $(X_4, Y_4, Z_4)$.

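2. Affine transformation of the intermediate point

$$\bar{X} = \frac{X - X_1}{\Delta X} \quad \text{and} \quad \bar{Y} = \frac{Y - Y_1}{\Delta Y}$$

where $\Delta X$ and $\Delta Y$ are the grid spacings in X and Y.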

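3. Determination of $\delta$:

$$\delta = \begin{cases} 1 & \text{if } \bar{Y} \ge \bar{X} \\ 0 & \text{otherwise} \end{cases}$$

4. Evaluate the formula

$$Z(\bar{X}, \bar{Y}) = \delta \left\{ Z_1 + (Z_3 - Z_2)\bar{X} + (Z_2 - Z_1)\bar{Y} \right\} + (1 - \delta) \left\{ Z_1 + (Z_4 - Z_1)\bar{X} + (Z_3 - Z_4)\bar{Y} \right\}$$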

Figure 7: Linear Interpolation from Grids

Linear interpolation produces a continuous but not smooth surface.
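The grid procedure above can be sketched as follows. The node layout is an assumption inferred from the two plane equations: node 1 at the cell origin, node 2 one cell up in Y, node 3 at the diagonally opposite corner, and node 4 one cell along X. The cell is split along the diagonal from node 1 to node 3, and the names `normalize` and `grid_linear` are hypothetical.

```python
def normalize(x, y, x1, y1, dx, dy):
    """Affine transformation of (x, y) to cell coordinates in [0, 1]."""
    return (x - x1) / dx, (y - y1) / dy


def grid_linear(z1, z2, z3, z4, xb, yb):
    """Linear interpolation inside one grid cell split into two triangles."""
    # delta selects the triangle: 1 for nodes 1-2-3, 0 for nodes 1-4-3.
    delta = 1 if yb >= xb else 0
    upper = z1 + (z3 - z2) * xb + (z2 - z1) * yb  # plane through nodes 1, 2, 3
    lower = z1 + (z4 - z1) * xb + (z3 - z4) * yb  # plane through nodes 1, 4, 3
    return delta * upper + (1 - delta) * lower


# Cell origin (100, 200), spacing 10 in both directions (made-up values).
xb, yb = normalize(102.5, 207.5, 100.0, 200.0, 10.0, 10.0)
print(grid_linear(10.0, 20.0, 50.0, 30.0, xb, yb))  # 25.0
```

On the diagonal both planes give the same value, so the surface is continuous across the split, but its slope changes there.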

4.2.3 Bilinear interpolation

Instead of dividing the grids into triangles, as in linear interpolation, bilinear interpolation takes the grid mesh as the basic unit on which to define a piecewise polynomial function. A bilinear polynomial of the form

$$Z(X, Y) = \sum_{i=0}^{1} \sum_{j=0}^{1} a_{ij} X^i Y^j = a_{00} + a_{10} X + a_{01} Y + a_{11} XY = a_0 + a_1 X + a_2 Y + a_3 XY$$

defined on every mesh yields a surface that is continuous over the whole domain but not smooth (its first derivative is discontinuous across mesh edges).

Computational Procedures:

1. Search for the grid cell into which the intermediate point (X, Y) falls, i.e. get $(X_1, Y_1, Z_1)$, $(X_2, Y_2, Z_2)$, $(X_3, Y_3, Z_3)$, and $(X_4, Y_4, Z_4)$.

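2. Affine transformation of the intermediate point

$$\bar{X} = \frac{X - X_1}{\Delta X} \quad \text{and} \quad \bar{Y} = \frac{Y - Y_1}{\Delta Y}$$

where $\Delta X$ and $\Delta Y$ are the grid spacings in X and Y.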

3. Evaluate the formula

$$Z(\bar{X}, \bar{Y}) = Z_1 + (Z_4 - Z_1)\bar{X} + (Z_2 - Z_1)\bar{Y} + (Z_1 - Z_2 + Z_3 - Z_4)\bar{X}\bar{Y}$$

Figure 8: Bilinear Interpolation
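A short sketch of the bilinear evaluation, assuming the same node numbering as in the grid linear method (node 1 at the cell origin, node 4 one cell along X, node 2 one cell along Y, node 3 at the opposite corner) and cell coordinates already normalized to the range 0 to 1. The function name is hypothetical.

```python
def bilinear(z1, z2, z3, z4, xb, yb):
    """Bilinear interpolation inside one grid cell.

    xb and yb are the normalized cell coordinates of the point.
    """
    return (z1
            + (z4 - z1) * xb
            + (z2 - z1) * yb
            + (z1 - z2 + z3 - z4) * xb * yb)


# At the cell centre the result is the mean of the four corner values.
print(bilinear(10.0, 20.0, 50.0, 30.0, 0.5, 0.5))  # 27.5
```

Along any cell edge the cross term vanishes or becomes linear, so neighboring cells agree on their shared edge, which is why the surface is continuous even though its slope is not.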

4.2.4 Cubic convolution

Cubic convolution calculates the output Z value using the values of the sixteen nearest input mesh points. A cubic spline curve is fit horizontally through each row of four points, and a final curve is fit vertically to the four horizontal curves, through the data point location. Using a smooth curve and a larger neighborhood gives cubic convolution a tendency to smooth the data, and can in some cases result in output mesh point values which are outside the range of values in the input lattice.
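The row-then-column scheme can be illustrated with a small sketch. The text does not say which cubic is used, so the code below swaps in a common choice as an assumption: the Keys cubic convolution kernel with parameter a = -0.5. The 4 x 4 block of input values and the fractional offsets `tx` and `ty` (measured from the second sample in each direction) are also illustrative conventions.

```python
def keys_kernel(s, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 is an assumed parameter)."""
    s = abs(s)
    if s <= 1.0:
        return (a + 2.0) * s ** 3 - (a + 3.0) * s ** 2 + 1.0
    if s < 2.0:
        return a * s ** 3 - 5.0 * a * s ** 2 + 8.0 * a * s - 4.0 * a
    return 0.0


def cubic_1d(p, t):
    """Interpolate between p[1] and p[2]; t in [0, 1] is the offset from p[1]."""
    return sum(p[k] * keys_kernel(t - (k - 1)) for k in range(4))


def cubic_convolution(block, tx, ty):
    """Fit a curve along each of the four rows, then one curve across them."""
    row_values = [cubic_1d(row, tx) for row in block]
    return cubic_1d(row_values, ty)


# A ridge whose input values never exceed 1.0 (made-up data).
ridge = [[0.0, 1.0, 1.0, 0.0]] * 4
print(cubic_convolution(ridge, 0.5, 0.5))  # 1.125
```

The example output is larger than every input value, which demonstrates the overshoot mentioned in the text.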

4.2.5 Inverse-Distance Mathematics:

The technique estimates the Z value at a point by weighting the

influence of nearby data the most, and more distant data the least. This

can be described mathematically by:

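$$Z(X, Y) = \frac{\sum_{i=1}^{n} Z_i / d_i^{\,p}}{\sum_{i=1}^{n} 1 / d_i^{\,p}}$$

or

$$Z(X, Y) = \sum_{i=1}^{n} w_i Z_i \quad \text{with} \quad \sum_{i=1}^{n} w_i = 1, \quad w_i = \frac{d_i^{-p}}{\sum_{j=1}^{n} d_j^{-p}}$$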

where Z(X, Y) is the estimated Z value at the location (X, Y), $Z_i$ is the Z value at the grid location $(X_i, Y_i)$, $d_i$ is the distance between the grid location $(X_i, Y_i)$ and the point (X, Y), and p is the power to which the distance is raised. The basis of this technique is that nearby data are most similar to the actual field conditions at the grid location. Depending on the site conditions, the distance may be weighted in different ways. If p = 1, this is a simple linear interpolation between points. Many people have found that p = 2 produces better results. In this case, close points are heavily weighted and more distant points are lightly weighted (points are weighted by $1/d^2$). At other sites, p has been set to other powers and yielded reasonable results.

Inverse-distance is a simple and effective way to estimate parameter values at grid locations of unknown value. Besides their directness and simplicity, however, inverse-distance techniques have a number of shortcomings. One of these is the following.

If a data point is coincident with a grid location, d = 0 and a division by 0 occurs, unless it is treated specially with the following condition:

if $d_i = 0$, $Z(X, Y) = Z_i$.
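The inverse-distance estimate, including the special case for a coincident data point, can be sketched as below. The function name `idw` and the representation of data as `(X, Y, Z)` tuples are assumptions made for the example; the default p = 2 follows the power that the text reports as commonly used.

```python
import math


def idw(points, x, y, p=2.0):
    """Inverse-distance estimate of Z at (x, y) from (X, Y, Z) tuples."""
    numerator = 0.0
    denominator = 0.0
    for xi, yi, zi in points:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            # Coincident data point: return its value and avoid dividing by 0.
            return zi
        weight = 1.0 / d ** p
        numerator += weight * zi
        denominator += weight
    return numerator / denominator


data = [(0.0, 0.0, 10.0), (3.0, 0.0, 40.0)]  # made-up observations
print(idw(data, 1.0, 0.0))  # 16.0
print(idw(data, 0.0, 0.0))  # 10.0
```

In the first call the weights are 1 and 1/4, so the estimate is (10 + 10) / 1.25 = 16, much closer to the nearer observation than a straight average would be.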

4.2.5.1 Weighting Functions

The data points (or the slopes at the points) used in the estimation procedure are weighted according to the distances between the grid node being estimated and the points. Figure 9 shows four different weighting functions. The four weighting functions decrease at increasing rates with distance.

The weights assigned to the control points according to the weighting function are adjusted to sum to 1.0. Therefore, the weighting function actually assigns proportional weights and expresses the relative influence of each control point. A widely used version of the weighting process assigns a function whose exact form depends upon the distance from the location being estimated to the most distant point used in the estimation. This inverse distance-squared weighting function is then scaled so that it extends from one to zero over this distance.

Figure 9: Distance Weighting Functions (weight plotted against distance for 1/D, 1/D^2, 1/D^4, and 1/D^6)
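The adjustment of weights so that they sum to 1.0 can be shown with a small sketch. It covers only the plain 1/D^p family plotted in Figure 9; the scaled inverse distance-squared variant is not reproduced because its exact form is not given here. The function name is hypothetical.

```python
def normalized_weights(distances, p):
    """Return 1/D^p weights rescaled so that they sum to 1.0."""
    raw = [1.0 / d ** p for d in distances]
    total = sum(raw)
    return [w / total for w in raw]


# Two control points at distances 1 and 2 from the grid node (made-up values).
for power in (1, 2, 4, 6):
    print(power, normalized_weights([1.0, 2.0], power))
```

As the power grows, the share of the nearer point rises from 2/3 (p = 1) towards 1, which is the behaviour the steeper curves in the figure express.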

5. Gridding

Gridding is the estimation of values of the surface at a set of locations that

are arranged in a regular pattern which completely covers the mapped area.

In general, values of the surface are not known at these uniformly spaced

locations, and so must be estimated from the irregularly located control

points where values of the surface are known. The locations where

estimates are made are referred to as "grid points" or "grid nodes."

The grid nodes usually are arranged in a square pattern so the distance

between nodes in one direction is the same as the distance between them in

the perpendicular direction. In most commercial software, the spacing is

under user control, and is one of many parameters that must be chosen

before a surface can be gridded and mapped. The area enclosed by four grid

nodes is called a "grid cell." If a large size is chosen for the grid cells, the

resulting map will have low resolution and a coarse appearance, but can be

computed quickly. Conversely, if the grid cells are small in size, the contour

map will have a finer appearance, but will be more time consuming and

expensive to produce.

The estimation process involves three essential steps. First, the control

points must be sorted according to their geographic coordinates. Second,

from the sorted coordinates, the control points surrounding a grid node to

be estimated must be searched out. Third, the program must estimate the

value of that grid node by some mathematical function, as described in the

previous sections, of the values at these neighboring control points. Sorting

greatly affects the speed of operation, and hence the cost of using a

contouring program. However, this step has no effect on the accuracy of the

estimates. Both the search procedure and the mathematical function do

have significant effects on the form of the final map.

5.1 Moving average gridding

The most obvious function that can be used to estimate the value of a

surface at a specific grid node is simply to calculate an average of the

known values of the surface at nearby control points. In effect, this projects

all of the surrounding known values horizontally to the grid node location.

Then, a composite estimate is made by averaging these, usually weighting

the closest points more heavily than distant points. The resulting

mathematical model of the surface, and the corresponding contour map, will

have certain characteristics. The highest and lowest areas on the surface will

contain control points, and most grid nodes will have intermediate values,

since an average cannot be outside the range of the numbers from which it

is calculated. At grid nodes beyond the outermost control points, the grid

nodes must be estimated by extrapolation; their values will be close to those

of the nearest control points.

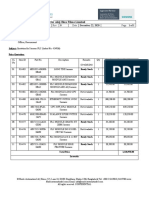

Figure 10: Steps in Computation of Grid Value using Moving Average

Figure 10 shows a series of observations; each point is characterized by its X

coordinate (east-west or across the page), its Y coordinate (north-south or

down the page), and its Z coordinate (the value to be mapped). On the

illustration, values of Z are noted beside each point. The observations may

be identified by numbering them sequentially as they are read by the

program, from 1 to i. Therefore, an original data point i has coordinates X(i)

in the east-west direction, Y(i) in the north-south direction, and value Z(i). In

(b), a regular grid of nodes has been superimposed on the map. These grid

nodes also are numbered sequentially from 1 to k. Grid node k has

coordinates X(k) and Y(k) and has an estimated elevation Z(k). To estimate

the grid node value Z(k) from the nearest n data points, these points must

be found and the distances between them and the node calculated. The

search procedure may be simple or elaborate. Assume that by some method

the n data points nearest to grid node k have been located. The distance D(ik) from observation i to grid node k is found by the Pythagorean equation

$$D(ik) = \sqrt{\left( X(k) - X(i) \right)^2 + \left( Y(k) - Y(i) \right)^2}$$

Having found the distances D(ik) to the nearest data points, the grid point elevation Z(k) is estimated from these. The completed grid with all values of Z is shown in (d).

This type of algorithm is sometimes called a "moving average," because

each node in the grid is estimated as the average of values at control points

within a neighborhood that is "moved" from grid node to grid node. In effect,

values at the control points are projected horizontally to the location of the

grid node, where they are weighted and averaged.
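A minimal sketch of moving average gridding follows. It assumes a brute-force search for the n nearest control points (every point is tested for every node) and inverse-distance weights of the form 1/D^p; the function name, the parameter defaults, and the row-by-row grid layout are illustrative choices rather than part of the method as described.

```python
import math


def moving_average_grid(points, xs, ys, n=3, p=1.0):
    """Estimate Z on the grid xs x ys from (X, Y, Z) control points."""
    grid = []
    for yk in ys:
        row = []
        for xk in xs:
            # Distances D(ik) from every control point to this node,
            # sorted so that the n nearest come first.
            nearest = sorted(
                (math.hypot(xk - xi, yk - yi), zi) for xi, yi, zi in points
            )[:n]
            if nearest[0][0] == 0.0:
                # A control point sits exactly on the node: use its value.
                row.append(nearest[0][1])
                continue
            weights = [1.0 / d ** p for d, _ in nearest]
            total = sum(w * z for w, (_, z) in zip(weights, nearest))
            row.append(total / sum(weights))
        grid.append(row)
    return grid


controls = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0), (0.0, 2.0, 30.0), (2.0, 2.0, 40.0)]
for line in moving_average_grid(controls, [0.0, 1.0, 2.0], [0.0, 1.0, 2.0], n=4):
    print(line)
```

Every estimate is a weighted average, so no grid value can fall outside the range of the control values, which matches the behaviour described for this method.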

5.2 Linear projection gridding

Linear projection gridding is a two-part procedure, in which a weighted average of slopes projected from the nearest neighboring data points around each grid node is used to estimate the value at the node.

Initially, the slope of the surface at every data point must be estimated. The

nearest n neighboring observations around a data point are found and each

is weighted inversely to its distance from the data point. A linear trend

surface is then fitted to these weighted observations. The constant term of

the fitted regression equation is adjusted so the plane passes exactly

through the data point. The slope of the trend surface is used as the local

dip. If at least five points cannot be found around the data point or if the set

of simultaneous equations for the fitted plane cannot be solved, the

coefficients of a global linear trend are used to estimate the local slope. The

slope coefficients are saved for each data point.

The second part of the algorithm estimates the value of the surface at the

grid nodes. A search procedure finds n nearest neighboring data points

around the node to be estimated. The X,Y coordinates of the grid node are

substituted into each of the local trend surface equations associated with

these data points, in effect projecting these local dipping planes to the

location of the node. An average of these estimates is then calculated,

weighting each slope by the inverse of the distance between the grid node

and the data point associated with the slope. If a data point lies at or very

near a grid intersection, the value of the data point is used directly as the

value of the grid node.

The projection of slopes may be disadvantageous in some circumstances.

For example, the method may tend to create spurious highs or lows both in

areas of limited density of control points and along the edges of maps if

surface dips are projected from areas where there are tight clusters of

observations. Also, the two-phase algorithm obviously requires more time

for computation than simpler procedures.

Variants of the linear projection algorithm are among the most popular of

those used for constructing the mathematical model used in contouring.

These algorithms are especially good within areas that are densely

controlled by uniformly spaced data points. However, like the piecewise

linear least squares methods, they have the distressing habit of creating

extreme projections when used to estimate grid nodes beyond the

geographic limits of the data.
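The two parts of linear projection gridding can be sketched as follows. This is a simplified illustration under several assumptions: weights are 1/distance, data points are distinct, the neighbor search is brute force, and the fallback to a global linear trend (used when too few neighbors exist or the equations cannot be solved) is replaced by raising an error. All function names are hypothetical.

```python
import math


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve a 3 x 3 system with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("local plane cannot be solved (global trend fallback omitted)")
    solution = []
    for k in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][k] = b[r]
        solution.append(_det3(m) / d)
    return solution


def local_slopes(points, index, n=5):
    """Part 1: fit a distance-weighted plane around one data point."""
    x0, y0, _ = points[index]
    neighbors = sorted(
        (math.hypot(x - x0, y - y0), x, y, z)
        for i, (x, y, z) in enumerate(points) if i != index
    )[:n]
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for d, x, y, z in neighbors:
        w = 1.0 / d                      # inverse-distance weight
        basis = (1.0, x - x0, y - y0)    # plane z = c0 + c1*dx + c2*dy
        for r in range(3):
            b[r] += w * basis[r] * z
            for c in range(3):
                a[r][c] += w * basis[r] * basis[c]
    _, c1, c2 = _solve3(a, b)
    # The constant term is discarded: the plane is shifted to pass
    # exactly through the data point itself.
    return c1, c2


def project_to_node(points, slopes, xk, yk, n=4):
    """Part 2: project the local planes to a grid node and average them."""
    nearest = sorted(
        (math.hypot(xk - x, yk - y), i) for i, (x, y, _) in enumerate(points)
    )[:n]
    if nearest[0][0] < 1e-9:
        return points[nearest[0][1]][2]  # data point lies on the node
    numerator = denominator = 0.0
    for d, i in nearest:
        x, y, z = points[i]
        c1, c2 = slopes[i]
        estimate = z + c1 * (xk - x) + c2 * (yk - y)
        numerator += estimate / d
        denominator += 1.0 / d
    return numerator / denominator
```

A typical use computes `slopes = [local_slopes(points, i) for i in range(len(points))]` once and then calls `project_to_node` for every grid node. For data lying on a single tilted plane the projections are exact, which also shows why the method can overshoot when slopes are projected far beyond the data.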

6. Search algorithms

One critical difference between various local-fit algorithms for interpolating

to a regular grid is the way in which "nearest neighbors" are defined and

found. Because some search techniques may be superior to others in certain

situations (primarily reflecting the arrangement of the data points), most

commercial software provides a variety of search procedures that may be

selected by the user. The simplest method finds the n nearest neighboring

data points, in a Euclidean distance sense, regardless of their angular

distribution around the grid node being estimated. This method is fast and

satisfactory if observations are distributed in a comparatively uniform

manner, but provides poor estimates if the data are closely spaced along

widely separated traverses.

An objection to a simple nearest neighbor search is that all the nearest

points may lie in a narrow wedge on one side of the grid node. The resulting

estimate of the node is essentially unconstrained, except in one direction.

This may be avoided by restricting the search in some way which ensures

that the control points are equitably distributed about the grid node being

estimated.

Figure 11: Search Techniques that Locate the n Nearest Neighbors around a Grid Node (panels: Quadrant Search and Octant Search)

The simplest method that introduces a measure of radial control is a quadrant search. Some minimum number of control points must be taken from each of the four quadrants around the grid node being calculated. An elaboration on the quadrant search is an octant search, which introduces a further constraint on the radial distribution of the points used in the estimating equation. A specified number of control points must be found in each of the eight 45-degree segments surrounding the grid node being estimated. This search method is one of the more elegant procedures and is widely used in commercial contouring programs.

These constrained search procedures require finding and testing more neighboring control points than a simple search, which increases the time required. Most commercial software also provides a search procedure that finds all points within a radius r of the point being estimated. Estimates made using the other search procedures are based on a fixed number of points collected at variable distances from the grid node; this search algorithm uses a variable number of points found within a fixed distance of the node.

Any constraints on the search for the nearest control points, such as a

quadrant or octant requirement, will obviously expand the size of the

neighborhood around the grid node being estimated. This occurs because

some nearby control points are likely to be passed over in favor of more

distant points in order to satisfy the requirement that only a few points may

be taken from a single sector. Unfortunately, the autocorrelation of a surface

decreases with increasing distance, so these more remote control points are

less closely related to the location being estimated. This means the estimate

may be poorer than if a simple nearest neighbor search procedure were

used.
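A quadrant search can be sketched as below. The quadrant numbering, the tie handling for points lying exactly on an axis, and the function name are assumptions made for the example; an octant search would follow the same pattern with eight 45-degree sectors.

```python
import math


def quadrant_search(points, xk, yk, per_quadrant=2):
    """Return up to per_quadrant nearest (X, Y, Z) points from each quadrant."""
    quadrants = {0: [], 1: [], 2: [], 3: []}
    for x, y, z in points:
        dx, dy = x - xk, y - yk
        if dy >= 0:
            q = 0 if dx >= 0 else 1   # 0: north-east, 1: north-west
        else:
            q = 3 if dx >= 0 else 2   # 2: south-west, 3: south-east
        quadrants[q].append((math.hypot(dx, dy), x, y, z))
    selected = []
    for q in range(4):
        # Keep only the closest points of this quadrant.
        selected.extend(sorted(quadrants[q])[:per_quadrant])
    return [(x, y, z) for _, x, y, z in selected]


# A tight cluster to the north-east plus one distant point elsewhere
# in each remaining quadrant (made-up data).
data = [(1, 1, 5), (2, 1, 6), (1, 2, 7), (3, 3, 8),
        (-5, 4, 1), (-6, -6, 2), (7, -3, 3)]
print(quadrant_search(data, 0, 0, per_quadrant=1))
```

A simple search for the four nearest points would return only the north-east cluster here, whereas the quadrant search reaches out to the more distant points, illustrating both the better angular coverage and the enlarged neighborhood discussed above.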

7. Grid Resampling

Resampling is the process of determining new values for grid cells in an

output grid that result from applying some geometric transformation to an

input grid. The input grid may be in a different coordinate system, at a

different resolution, or may be rotated with respect to the output grid.

Interpolation techniques discussed in previous sections, such as nearest neighbor assignment, bilinear interpolation, cubic convolution, and inverse-distance weighting (IDW), are usually used in the resampling process.

Resampling is required when grids of different resolutions are used together as input. The default is to resample to the coarsest resolution of the input grids. This resampling on the fly uses nearest neighbor assignment as the resampling method. The following figures show typical examples of when resampling is needed.

Figure 12: (a) Resampling when changing the grid size (b) Resampling when

rotating the grid plus changing the grid size.

Figure 13: Example of Nearest Neighbor Resampling

References

1. Akima, H., 1978, "A Method of Bivariate Interpolation and Smooth Surface Fitting for Irregularly Distributed Data Points", ACM Transactions on Mathematical Software, Vol. 4, No. 2, June 1978, pp. 148-159.

2. Davis, John, 1986, "Statistics and data analysis in geology", 2nd ed., New York, Wiley (UofC Call # QE48.8 .D38 1986).

3. ESRI, Inc., 1998, "Arc/Info v7.0.2 Manual", Redlands, CA: Environmental Systems Research Institute.

4. Burrough, P.A. and McDonnell, R., 1998, "Principles of geographical information systems for land resources assessment", Oxford, Clarendon Press (UofC Call # HD108.15 .B87 1986).


- .IAF-GD5-2006 Guide 65 Issue 3Document30 pagini.IAF-GD5-2006 Guide 65 Issue 3bg_phoenixÎncă nu există evaluări