External Sorting Query

CSD Univ. of Crete

Fall 2005

EXTERNAL SORTING


Sorting

- A classic problem in computer science!
- Data requested in sorted order (sorted output)
  - e.g., find students in increasing grade point average (gpa) order
  - SELECT A, B, C FROM R ORDER BY A
- Sorting is the first step in bulk loading a B+ tree index
- Sorting is useful for eliminating duplicates in a collection of records (Why?)
  - SELECT DISTINCT A, B, C FROM R
- Some operators rely on their input files being already sorted or, more often than not, sorted input files boost an operator's performance
  - The sort-merge join algorithm involves sorting


Sorting

- A file of records is sorted with respect to sort key k and ordering $\theta$ if, for any two records r1, r2 with r1 preceding r2 in the file, their corresponding keys are in $\theta$-order:
  - $r_1 \;\theta\; r_2 \Leftrightarrow r_1.k \;\theta\; r_2.k$
- A key may be a single attribute as well as an ordered list of attributes. In the latter case, order is defined lexicographically
  - Example: $k = (A, B)$, $\theta = <$: $r_1 < r_2 \Leftrightarrow r_1.A < r_2.A \lor (r_1.A = r_2.A \land r_1.B < r_2.B)$
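The lexicographic rule for a composite key can be illustrated with a small Python sketch. The record layout (dictionaries with fields A and B) and the helper name `precedes` are assumptions made for this example only; they are not part of the slides.

```python
def precedes(r1, r2):
    """Return True if r1 < r2 under the composite key k = (A, B)."""
    return r1["A"] < r2["A"] or (r1["A"] == r2["A"] and r1["B"] < r2["B"])


records = [{"A": 2, "B": 1}, {"A": 1, "B": 9}, {"A": 1, "B": 3}]

# Python tuples compare lexicographically, so a tuple key yields the same order.
ordered = sorted(records, key=lambda r: (r["A"], r["B"]))
```

Sorting by the tuple `(A, B)` is the idiomatic way to obtain this order in Python; `precedes` only spells out the definition.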


External Sorting

- Definition: Data lives on disk!
  - external vs. internal sort: the collection of data items to be sorted is not stored in main memory
- External sorting is a challenge even if data << memory
  - The challenge is to overlap disk I/Os with sorting in memory
  - Most benchmarks (see next slide) are of this type, since memory is so cheap
- Examples in textbooks: data >> memory
  - These are classical examples from when memory was expensive, and are still common
  - Why not use virtual memory?


External Sorting Benchmarks

- Sorting has become a blood sport!
  - Parallel sorting is the name of the game (www.research.microsoft.com/barc/SortBenchmark)
- How fast can we sort 1M records of size 100 bytes?
  - Typical DBMS: 5 minutes
  - World record: 1.18 seconds (DCOM, cluster of 16 dual 400MHz Pentium II)
- New benchmarks proposed:
  - Minute Sort: How many can you sort in 1 minute?
    - Typical DBMS: 10MB (~100,000 records)
    - Current world record: 21.8 GB (64 dual-processor Pentium-III PCs, 1999)
  - Dollar Sort: How many can you sort for $1.00?
    - Current world record: 12GB in 1380 seconds on a $672 Linux/Intel system (2001)
    - $672 spread over 3 years = 1404 seconds/$

External Sorting Example

- Sort a relation in increasing order of the sort key values (under the assumption that data >> memory)
  - relation R: 10,000,000 tuples
  - one of the fields in each tuple is the sort key (not necessarily a key in the db sense)
  - Records are of fixed length: 100 bytes; total size of R: 1GB
  - Available main memory: 50MB
  - Block size: 4,096 (= 2^12) bytes
  - 40 records fit in a block, hence R occupies 250,000 blocks
  - Main memory can hold 12,800 blocks (= 50 * 2^20 / 2^12)
- If data were kept in main memory, efficient sorting algorithms (e.g., Quicksort) could be employed to sort on the sort keys
- This approach does not perform well for data in secondary storage:
  - each block must be moved between secondary and main memory a number of times, in a regular pattern
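The block counts in this example follow from integer arithmetic on the stated sizes. The short Python sketch below reproduces them; the constant names are chosen for this illustration only.

```python
TUPLES = 10_000_000           # tuples in relation R
RECORD_BYTES = 100            # fixed record length
BLOCK_BYTES = 4096            # 2**12 bytes per block
MEMORY_BYTES = 50 * 2**20     # 50 MB of main memory

# Records do not span blocks, so only whole records count.
records_per_block = BLOCK_BYTES // RECORD_BYTES
blocks_in_relation = TUPLES // records_per_block
blocks_in_memory = MEMORY_BYTES // BLOCK_BYTES

print(records_per_block, blocks_in_relation, blocks_in_memory)
```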


Two-Way Merge Sort

- Goal: even if the entire file does not fit into the available main memory, we can sort it by breaking it into smaller subfiles (called runs), sorting these subfiles and merging them into larger subfiles, using a minimal amount of main memory at any given time
- Idea: to merge sorted runs, repeatedly compare the smallest remaining keys of each run and output the record with the smaller key, until one of the runs is exhausted
- Two-way Merge Sort: requires 3 buffers
  - Pass 0: Read a page (one after the other), sort it, write it
    - only one buffer page is used
  - Pass 1, 2, 3, ..., etc.: merge pairs of sorted runs
    - three buffer pages are used

(Figure: Disk -> INPUT 1, INPUT 2 -> OUTPUT (main memory buffers) -> Disk)
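The merging idea and the pass structure of two-way merge sort can be sketched in Python. This is an in-memory simulation under simplifying assumptions: pages are plain lists of keys, a run is a flat sorted list, and the page-at-a-time buffer management is not modelled. The function names are hypothetical.

```python
def merge_two_runs(run1, run2):
    """Merge two sorted runs by repeatedly taking the smaller head key."""
    merged = []
    i = j = 0
    while i < len(run1) and j < len(run2):
        if run1[i] <= run2[j]:
            merged.append(run1[i])
            i += 1
        else:
            merged.append(run2[j])
            j += 1
    # One run is exhausted; the rest of the other run is already sorted.
    merged.extend(run1[i:])
    merged.extend(run2[j:])
    return merged


def two_way_merge_sort(pages):
    """Return (sorted records, number of passes) for a list of pages."""
    runs = [sorted(page) for page in pages]      # Pass 0: one-page runs
    passes = 1
    while len(runs) > 1:                         # Pass 1, 2, ...: merge pairs
        merged = []
        for k in range(0, len(runs), 2):
            if k + 1 < len(runs):
                merged.append(merge_two_runs(runs[k], runs[k + 1]))
            else:
                merged.append(runs[k])           # odd run is carried over
        runs = merged
        passes += 1
    return (runs[0] if runs else []), passes


pages = [[3, 4], [6, 2], [9, 4], [8, 7], [5, 6], [3, 1], [2]]
result, passes = two_way_merge_sort(pages)
```

For this 7-page input the simulation needs four passes (Pass 0 to Pass 3).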

Two-Way Merge Sort

- Divide and conquer
  - sort runs and merge in different passes

(Figure: File Y holds Run 1 and Run 2. The current page of each run is loaded into input buffers Bf1 and Bf2 with cursors p1 and p2; min(Bf1[p1], Bf2[p2]) is moved to output buffer Bfo at cursor po. Read the next page when p1 = B (p2 = B); write Bfo when it is full; the merged run goes to File X until EOF.)

- Pass 0 writes $2^s$ sorted runs to disk; only one page of buffer space is used
- Pass 1 writes $2^s/2 = 2^{s-1}$ runs to disk; three pages of buffer space are used
- Pass n writes $2^s/2^n = 2^{s-n}$ runs to disk; three pages of buffer space are used
- Pass s writes a single sorted run (i.e., the complete sorted file) of size $2^s = N$ to disk


Cost of Two-Way Merge Sort

- In each pass we read all N pages in the file, sort/merge, and write N pages out again
- N pages in the file => the number of passes (S) $= \lceil \log_2 N \rceil + 1$
- So total cost is: $2N(\lceil \log_2 N \rceil + 1)$
  - 2: 1 read & 1 write
  - N: # of pages
  - $\lceil \log_2 N \rceil$: # of merge passes (plus 1 for Pass 0)

Example (7-page file, two records per page):

Input file:            3,4 | 6,2 | 9,4 | 8,7 | 5,6 | 3,1 | 2
PASS 0 (1-page runs):  3,4 | 2,6 | 4,9 | 7,8 | 5,6 | 1,3 | 2
PASS 1 (2-page runs):  2,3 4,6 | 4,7 8,9 | 1,3 5,6 | 2
PASS 2 (4-page runs):  2,3 4,4 6,7 8,9 | 1,2 3,5 6
PASS 3 (8-page runs):  1,2 2,3 3,4 4,5 6,6 7,8 9
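The pass count and total I/O cost of two-way merge sort can be checked with a few lines of Python. The function names are illustrative; the ceiling of the base-2 logarithm is computed with integer arithmetic to avoid floating-point rounding.

```python
def two_way_passes(n_pages):
    """Number of passes: ceil(log2(N)) + 1."""
    if n_pages < 1:
        raise ValueError("the file must contain at least one page")
    # (N - 1).bit_length() equals ceil(log2(N)) for every integer N >= 1.
    return (n_pages - 1).bit_length() + 1


def two_way_cost(n_pages):
    """Total page I/Os: every pass reads and writes all N pages."""
    return 2 * n_pages * two_way_passes(n_pages)


passes = two_way_passes(7)
cost = two_way_cost(7)
```

For the 7-page example this gives 4 passes and 2 * 7 * 4 = 56 page I/Os.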


Multi-way Merge Sort

- Plain two-way merge sort algorithm uses no more than three pages of buffer space at any point in time
  - How can we use more than 3 buffer pages?
- (External) Multi-way Merge Sort aims at two improvements:
  - Try to reduce the number of initial runs (avoid creating one-page runs in Pass 0),
  - Try to reduce the number of passes (merge more than 2 runs at a time)
- As before, let N denote the number of pages in the file to be sorted; B buffer pages shall be available for sorting


Multi-way Merge Sort

To sort a file with N pages using B buffer pages:
- Pass 0 uses B buffers:
  - Read input data in B pages at a time and produce $\lceil N/B \rceil$ sorted runs of B pages each
  - Last run may contain fewer pages
- Pass 1, 2, ... (until only a single run is left) use B-1 buffers for input and 1 for output:
  - Select B-1 runs from the previous pass: (B-1)-way merge in each pass
  - Read each run into an input buffer, one page at a time
  - Merge the runs and write to the output buffer
  - Force the output buffer to disk one page at a time

(Figure: Disk -> INPUT 1, INPUT 2, ..., INPUT B-1 -> OUTPUT (B main memory buffers) -> Disk)

Multi-way Merge Sort

- Merging phase
  - Merge groups of B-1 runs at a time to produce longer runs until only one run (containing all records of the input file) is left
  - Read the first page of each sorted run into an input buffer
  - Use one buffer for an output page, holding as many of the first elements of the generated sorted run (at each pass) as it can hold
- Runs are merged into one sorted run as follows:
  - Find the smallest key among the first remaining elements of all the runs
  - Move the smallest element to the first available position in the output buffer
  - If the output buffer is full, write it to disk and empty the buffer to hold the next output page of the generated sorted run (at each pass)
  - If the page from which the smallest element was chosen has no more records, read the next page of the same run into the same input buffer
    - if no blocks remain, leave the run's buffer empty and do not consider this run in further comparisons
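The merge step described above can be sketched in Python with a heap that holds the first remaining element of each run. This is a simplified in-memory model, not the buffer-level algorithm: runs are flat sorted lists, page reads and output-buffer flushes are not simulated, and the function names are hypothetical. Pass 0 sorts B pages at a time; each later pass merges B-1 runs at once.

```python
import heapq


def merge_runs(runs):
    """Merge any number of sorted runs into one sorted list."""
    # Each heap entry is (key, run index, position inside that run).
    heap = [(run[0], idx, 0) for idx, run in enumerate(runs) if run]
    heapq.heapify(heap)
    merged = []
    while heap:
        key, idx, pos = heapq.heappop(heap)      # smallest remaining key
        merged.append(key)
        if pos + 1 < len(runs[idx]):             # run not yet exhausted
            heapq.heappush(heap, (runs[idx][pos + 1], idx, pos + 1))
    return merged


def multiway_merge_sort(pages, B):
    """Return (sorted records, number of passes) using B buffer pages."""
    if B < 3:
        raise ValueError("need at least 3 buffer pages")
    runs = []
    for start in range(0, len(pages), B):        # Pass 0: runs of B pages
        chunk = [rec for page in pages[start:start + B] for rec in page]
        runs.append(sorted(chunk))
    passes = 1
    while len(runs) > 1:                         # (B-1)-way merge passes
        runs = [merge_runs(runs[k:k + B - 1])
                for k in range(0, len(runs), B - 1)]
        passes += 1
    return (runs[0] if runs else []), passes


# 108 one-record pages in descending order, sorted with B = 5 buffers.
pages = [[v] for v in range(108, 0, -1)]
result, passes = multiway_merge_sort(pages, B=5)
```

The standard library's `heapq.merge` performs the same k-way merge lazily; it is written out here to mirror the steps listed on the slide.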


Multi-way Merge Sort

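(Figure: File Y holds Run 1, Run 2, ..., Run k = n/m. The current page of each run is loaded into input buffers Bf1, Bf2, ..., Bfk with cursors p1, p2, ..., pk; min(Bf1[p1], Bf2[p2], ..., Bfk[pk]) is moved to output buffer Bfo at cursor po. Read the next page of run i when pi = B; write Bfo when it is full; the merged run goes to File X until EOF.)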


Cost of Multi-way Merge Sort

- E.g., with 5 buffer pages, to sort a 108-page file:
  - Pass 0: $\lceil 108/5 \rceil = 22$ sorted runs of 5 pages each (last run is only 3 pages)
  - Pass 1: $\lceil 22/4 \rceil = 6$ sorted runs of 20 pages each (last run is only 8 pages)
  - Pass 2: 2 sorted runs, 80 pages and 28 pages
  - Pass 3: Sorted file of 108 pages
- Number of passes: $\lceil \log_{B-1} \lceil N/B \rceil \rceil + 1$
- Cost = 2N * (# of passes)
  - per pass = N input + N output = 2N

(Figure: Pass 0 with B = 4+1, N = 7)
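The pass formula can be evaluated without logarithms by repeatedly dividing the number of runs by the merge fan-in B-1. The following Python sketch uses hypothetical function names and reproduces the 108-page example.

```python
def ceil_div(a, b):
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def multiway_passes(n_pages, B):
    """Passes of external merge sort: ceil(log_{B-1}(ceil(N/B))) + 1."""
    if B < 3:
        raise ValueError("need at least 3 buffer pages")
    runs = ceil_div(n_pages, B)          # runs produced by Pass 0
    passes = 1
    while runs > 1:                      # each merge pass divides by B-1
        runs = ceil_div(runs, B - 1)
        passes += 1
    return passes


def multiway_cost(n_pages, B):
    """Total page I/Os: 2N per pass."""
    return 2 * n_pages * multiway_passes(n_pages, B)


passes = multiway_passes(108, 5)
cost = multiway_cost(108, 5)
```

With N = 108 and B = 5 this yields 4 passes and 2 * 108 * 4 = 864 page I/Os.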


Example (Contd)

- According to the available number of buffers and the relation size we need 2 passes ($\lceil \log_{12799} \lceil 250000/12800 \rceil \rceil + 1 = 2$)
- Pass 0: sort main memory-sized portions of the data so that every record belongs to a run that fits in main memory
  - Need to create 20 runs (19*12800 pages + 1*6800 pages = 250000)
  - I/O required: 500000 I/O ops
  - If each I/O op takes 15 msec, we need 7500 secs (125 mins) for Pass 0
- Pass 1: merge the sorted runs into a single sorted run
  - run pages are read in an unpredictable order but exactly once
  - hence, 250000 page reads are needed for Pass 1
  - each record is placed only once in the output page
  - hence, 250000 page writes are needed
  - Pass 1 requires 125 mins
- In total, 250 minutes will be required for the entire sort of our relation
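The figures in this example can be recomputed directly. The Python sketch below uses the stated 15 msec per I/O operation; the variable names are illustrative.

```python
PAGES = 250_000          # pages in relation R
BUFFER_PAGES = 12_800    # pages that fit in main memory
IO_MSEC = 15             # time per I/O operation

full_runs, last_run_pages = divmod(PAGES, BUFFER_PAGES)
initial_runs = full_runs + (1 if last_run_pages else 0)

ios_per_pass = 2 * PAGES                         # read and write every page
minutes_per_pass = ios_per_pass * IO_MSEC / 1000 / 60

# 20 runs fit into one (B-1)-way merge, so Pass 0 plus one merge pass suffice.
passes = 2 if initial_runs <= BUFFER_PAGES - 1 else None
total_minutes = passes * minutes_per_pass
```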


Multi-way Merge Sort: Number of Passes

N             B=3   B=5   B=9   B=17   B=129   B=257
100             7     4     3      2       1       1
1000           10     5     4      3       2       2
10000          13     7     5      4       2       2
100000         17     9     6      5       3       3
1000000        20    10     7      5       3       3
10000000       23    12     8      6       4       3
100000000      26    14     9      7       4       4
1000000000     30    15    10      8       5       4

I/O cost is 2N times number of passes


Multi-way Merge Sort I/O Savings


Minimizing the Number of Initial Runs

- Recall that the number of initial runs determines the number of passes we need to make, and hence the total cost (runs are numbered r = 0 ... $\lceil N/B \rceil - 1$):
  - $2N(\lceil \log_{B-1} \lceil N/B \rceil \rceil + 1)$
- Reducing the number of initial runs is a very desirable optimization for Pass 0
  - consider an alternative to QuickSort that minimizes the required number of passes by generating longer runs
- Replacement (tournament) Sort
  - Assume all tuples are the same size, for simplicity
  - Ignore double buffering (more later)


Replacement (Tournament) External Sort

- Replacement Sort:
  - Produce runs of 2*(B-2) pages long on average (snowplow analogy)
  - Assume one input and one output buffer; the remaining B-2 buffer pages are called the current set
- Keep two heaps in memory, H1 and H2

  Top:    Read in B-2 blocks into H1
  Output: Move smallest record in H1 to output buffer
          Read in a new record r into H1
          If input buffer is empty, read another page
          If r not smallest, then GOTO Output
          else remove r from heap
          Output run H2; GOTO Top


Replacement (Tournament) External Sort

- Pick the tuple in the current set with the smallest k value that is still greater than the largest k value in the output buffer
  - Append tuple to output buffer
  - Output buffer remains sorted
  - Add tuple from input buffer to current set
- Terminate when all tuples in the current set are smaller than the largest tuple in the output buffer
  - Write out output buffer page (it becomes the last page of the currently created run)
  - Start a new run by reading tuples from input buffer, moving them to current set and writing to output
- Average length of a run is 2(B-2)

(Figure: Input (1 buffer) -> append to current set -> Current set (H1) -> append to output -> Output buffer (H2))
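Run generation by replacement selection can be sketched in Python as follows. This is a record-level simplification under stated assumptions: the current set is a heap of at most `capacity` records (standing in for the B-2 pages), records that are too small for the current run are parked in a list for the next run, and page buffers are not modelled. The names `replacement_selection` and `capacity` are hypothetical.

```python
import heapq

_NO_MORE = object()   # marks the end of the input


def replacement_selection(records, capacity):
    """Split records into sorted runs using a current set of `capacity` records."""
    source = iter(records)
    current = []
    for rec in source:                   # fill the current set
        current.append(rec)
        if len(current) == capacity:
            break
    heapq.heapify(current)

    runs, run, next_run = [], [], []
    while current:
        smallest = heapq.heappop(current)
        run.append(smallest)             # append to the output run
        incoming = next(source, _NO_MORE)
        if incoming is not _NO_MORE:
            if incoming >= smallest:
                heapq.heappush(current, incoming)   # still fits this run
            else:
                next_run.append(incoming)           # must wait for next run
        if not current:                  # current run is complete
            runs.append(run)
            run = []
            current, next_run = next_run, []
            heapq.heapify(current)
    return runs


runs = replacement_selection([3, 5, 2, 8, 10, 12, 4, 1, 7], capacity=3)
```

In this small input the first run holds six records, twice the capacity of the current set, which matches the 2(B-2) average quoted above for this particular case only.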


I/O for External Merge Sort

- Actually, in previous algorithms we considered simple page-by-page I/Os
  - Much better than an I/O per record!
- Transfer rate increases 40% per year; seek time and latency time decrease by only 8% per year
  - Is minimizing passes optimal for Pass 1, 2, ...?
  - Would merging as many runs as possible be the best solution?
- In fact, read a block of pages sequentially!
  - For minimizing seek time and rotational delay
- Suggests we should make each (input/output) buffer be a block of pages
  - But this will reduce fan-out during merge passes!
  - In practice, most files still sorted in 2-3 passes


Sequential vs Random I/Os for External Merge Sort

- Suppose we have 80 runs, each 80 pages long, and we have 81 pages of buffer space
- We can merge all 80 runs in a single pass
  - each page requires a seek to access (Why?)
  - there are 80 pages per run, so 80 seeks per run
  - total cost = 80 runs * 80 seeks = 6400 seeks
- We can merge all 80 runs in two steps
  - 5 sets of 16 runs each
    - read 80/16 = 5 pages of one run at a time
    - 16 runs result in a sorted run of 1280 pages
    - each merge requires 80/5 * 16 = 256 seeks
    - for 5 sets, we have 5 * 256 = 1280 seeks
  - merge 5 runs of 1280 pages
    - read 80/5 = 16 pages of one run at a time => 1280/16 = 80 seeks in total per run
    - 5 runs => 5 * 80 = 400 seeks
  - total: 1280 + 400 = 1680 seeks!!!
- Number of passes increases, but number of seeks decreases!
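The seek counts in this comparison can be reproduced with a short Python sketch. It counts one seek per block read, as the slide does, and ignores seeks for writing; the helper name `merge_seeks` is hypothetical.

```python
def merge_seeks(num_runs, run_pages, pages_per_read):
    """Seeks needed to read num_runs runs, fetching pages_per_read pages per seek."""
    reads_per_run = -(-run_pages // pages_per_read)   # ceiling division
    return num_runs * reads_per_run


# One step: 80-way merge, one page per input buffer.
single_pass = merge_seeks(num_runs=80, run_pages=80, pages_per_read=1)

# Two steps: five 16-way merges with 5-page buffers,
# then one 5-way merge with 16-page buffers.
step_one = 5 * merge_seeks(num_runs=16, run_pages=80, pages_per_read=5)
step_two = merge_seeks(num_runs=5, run_pages=1280, pages_per_read=16)
two_steps = step_one + step_two
```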


Number of Passes of Replacement Sort using Buffer Blocks

N             B=1000   B=5000   B=10000   B=50000
100                1        1         1         1
1000               1        1         1         1
10000              2        2         1         1
100000             3        2         2         2
1000000            3        2         2         2
10000000           4        3         3         2
100000000          5        3         3         2
1000000000         5        4         3         3

- Buffer block = 32 pages and initial pass produces runs of size 2(B-2)
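The table entries can be recomputed with a small Python sketch. It relies on one assumption that the slide does not state: with buffer blocks of b pages, the merge fan-in is taken to be floor(B/b) - 1 (one block is reserved for output). Initial runs have size 2(B-2) as noted above. The function name is hypothetical.

```python
def passes_with_blocks(n_pages, B, block_pages=32):
    """Passes when buffers are blocks of pages and Pass 0 yields runs of 2(B-2) pages."""
    run_pages = 2 * (B - 2)
    fan_in = B // block_pages - 1        # assumed: one block kept for output
    runs = -(-n_pages // run_pages)      # ceiling division
    passes = 1
    while runs > 1:
        runs = -(-runs // fan_in)
        passes += 1
    return passes


sizes = [10**k for k in range(2, 10)]    # N = 100 ... 1,000,000,000
row_1000 = [passes_with_blocks(n, 1000) for n in sizes]
row_50000 = [passes_with_blocks(n, 50000) for n in sizes]
```

Under this assumption the computed rows agree with the B=1000 and B=50000 columns of the table.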


Streaming Data Through Main Memory

- An important detail for sorting & other DB operations
- Simple case: Compute f(x) for each record, write out the result
  - Read a page from INPUT to Input Buffer
  - Write f(x) for each item to Output Buffer
  - When Input Buffer is consumed, read another page
  - When Output Buffer fills, write it to OUTPUT
- Reads and Writes are not coordinated

(Figure: INPUT -> Input Buffer -> f(x) -> Output Buffer -> OUTPUT; both buffers are memory buffers)
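The streaming pattern can be sketched as a Python generator. Pages are modelled as lists of records and `page_size` is the capacity of the output buffer; both, along with the name `stream_map`, are assumptions of this illustration.

```python
def stream_map(input_pages, f, page_size):
    """Apply f to every record, emitting output pages of at most page_size records."""
    output_buffer = []
    for input_buffer in input_pages:             # read one input page at a time
        for x in input_buffer:
            output_buffer.append(f(x))
            if len(output_buffer) == page_size:  # output buffer is full
                yield output_buffer              # "write" it to OUTPUT
                output_buffer = []
    if output_buffer:                            # flush the last partial page
        yield output_buffer


out = list(stream_map([[1, 2, 3], [4, 5, 6], [7]], lambda x: x * x, page_size=2))
```

Input pages hold three records and output pages two, so reads and writes happen at different moments, which is what "not coordinated" refers to.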


Double Buffering

- Issue one read for 1024 bytes instead of 2 reads of 512 bytes (i.e., use a large buffer size)
  - A larger block allows more data to be processed with each I/O
- To reduce wait time for an I/O request to complete, can prefetch into a `shadow block'
- The idea is to avoid leaving the CPU idle while it waits for an input (or output) buffer
  - Keep the CPU busy while the input buffer is reloaded (the output buffer is appended to the current run)

(Figure: Disk -> INPUT 1 / INPUT 1' -> OUTPUT / OUTPUT' -> Disk; b = block size; B main memory buffers, two-way merge)
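Prefetching into a shadow block can be approximated in Python with a helper thread and a one-slot queue. This is only a sketch of the idea: `read_page` stands in for a disk read, and the names `prefetching_reader` and `_END` are hypothetical.

```python
import queue
import threading

_END = object()   # sentinel marking the end of the input


def prefetching_reader(read_page, num_pages):
    """Yield pages in order while a helper thread reads the next one ahead."""
    shadow = queue.Queue(maxsize=1)      # slot for the prefetched page

    def fill():
        for page_no in range(num_pages):
            shadow.put(read_page(page_no))   # blocks while the slot is full
        shadow.put(_END)

    threading.Thread(target=fill, daemon=True).start()
    while True:
        page = shadow.get()              # usually ready by the time it is needed
        if page is _END:
            return
        yield page


pages = list(prefetching_reader(lambda n: [2 * n, 2 * n + 1], 3))
```

While the consumer processes one page, the helper thread is already fetching the next, so the consumer rarely waits for I/O.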


Double Buffering while Sorting

- Potentially, more passes (because you're effectively using fewer buffers); but, in practice, most files still sorted in 2-3 passes

(Figure: Disk -> INPUT 1 / INPUT 1', INPUT 2 / INPUT 2', ..., INPUT k / INPUT k' -> OUTPUT / OUTPUT' -> Disk; b = block size; B main memory buffers, multi-way merge)


Using B+ Trees for Sorting

- Scenario: Table to be sorted has B+ tree index on sorting column(s)
  - Idea: Can retrieve records in order by traversing leaf pages
- Is this a good idea?
- Cases to consider:
  - B+ tree is clustered -- Good idea!
  - B+ tree is not clustered -- Could be a very bad idea!


Clustered B+ Tree Used for Sorting

- Cost: root to the left-most leaf, then retrieve all leaf pages (<key, record> pair organization)
- If <key, rid> pair organization is used?
  - Additional cost of retrieving data records: each page fetched just once
- Always better than external sorting!

(Figure: Index (directs search) -> Data Entries ("sequence set") -> Data Records)


Unclustered B+ Tree Used for Sorting

- Each data entry contains <key, rid> of a data record
  - In the worst case, one I/O per data record!

(Figure: Index (directs search) -> Data Entries ("sequence set") -> Data Records)


External Sorting vs. Unclustered Index

N            Sorting        p=1         p=10         p=100
100              200        100         1000         10000
1000            2000       1000        10000        100000
10000          40000      10000       100000       1000000
100000        600000     100000      1000000      10000000
1000000      8000000    1000000     10000000     100000000
10000000    80000000   10000000    100000000    1000000000

- p is the # of records per page (p = 100 is realistic)
- B = 1000 and block buffer = 32 pages for sorting
- Cost: p * N (compared to N when index is clustered)


External Sorting vs. Unclustered Index

- The plot assumes available buffer space for sorting of B = 257 pages
- For even modest file sizes, therefore, sorting by using an unclustered B+ tree index is clearly inferior to external sorting


Summary

- External sorting is important
  - DBMS may dedicate part of buffer pool for sorting!
- External merge sort minimizes disk I/O cost:
  - Pass 0: Produces sorted runs of size B (# buffer pages). Later passes: merge runs
  - # of runs merged at a time depends on B, and block size
  - Larger block size means less I/O cost per page
  - Larger block size means smaller # runs merged
  - In practice, # of passes rarely more than 2 or 3
- Choice of internal sort algorithm may matter:
  - Quicksort: Quick!
  - Replacement sort: slower (2x), longer runs
- The best sorts are wildly fast:
  - Despite 40+ years of research, we're still improving!
- Clustered B+ tree is good for sorting
  - unclustered tree is usually very bad


Complexity of Main Memory Sort Algorithms

                 stability   space       time (best)   time (average)   time (worst)
Bubble Sort      stable      little      O(n)          O(n^2)           O(n^2)
Insertion Sort   stable      little      O(n)          O(n^2)           O(n^2)
Quick Sort       unstable    O(log n)    O(n log n)    O(n log n)       O(n^2)
Merge Sort       stable      O(n)        O(n log n)    O(n log n)       O(n log n)
Heap Sort        unstable    little      O(n log n)    O(n log n)       O(n log n)
Radix Sort       stable      O(np)       O(n log n)    O(n log n)       O(n log n)
List Sort        ?           O(n)        O(1)          O(n)             O(n)
Table Sort       ?           O(n)        O(1)          O(n)             O(n)


References

- Based on slides from:
  - R. Ramakrishnan and J. Gehrke
  - J. Hellerstein
  - M. H. Scholl

S-ar putea să vă placă și

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- SAP HANA Calculation ViewDocument3 paginiSAP HANA Calculation ViewEli_HuxÎncă nu există evaluări

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Learn The Essentials of DebuggingDocument4 paginiLearn The Essentials of DebuggingEli_HuxÎncă nu există evaluări

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- Introduction To SAP HANA Database-For BeginnersDocument3 paginiIntroduction To SAP HANA Database-For BeginnersEli_HuxÎncă nu există evaluări

- Sample Questions C AUDSEC 731Document5 paginiSample Questions C AUDSEC 731Eli_HuxÎncă nu există evaluări

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (400)

- IBM Rational Rose: Make Your Vision RealityDocument8 paginiIBM Rational Rose: Make Your Vision RealityOscarBuÎncă nu există evaluări

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- C TAW12 731 Sample QuestionsDocument5 paginiC TAW12 731 Sample QuestionsVic VegaÎncă nu există evaluări

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- C BobipDocument4 paginiC BobipEli_HuxÎncă nu există evaluări

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Boc330 en Col91 FV Part LTRDocument124 paginiBoc330 en Col91 FV Part LTRamondaca100% (1)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- C BOBIP 40 Sample QuestionsDocument5 paginiC BOBIP 40 Sample QuestionsMuralidhar MuppaneniÎncă nu există evaluări

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- P SOA EA 70 Sample ItemsDocument4 paginiP SOA EA 70 Sample ItemsEli_HuxÎncă nu există evaluări

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Sample Questions C DS 41Document5 paginiSample Questions C DS 41Eli_HuxÎncă nu există evaluări

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- Sap Abap BasicsDocument9 paginiSap Abap BasicsErshad ShaikÎncă nu există evaluări

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- Sample Questions C DS 41Document5 paginiSample Questions C DS 41Eli_HuxÎncă nu există evaluări

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (74)

- Data Modeler Tutor I ADocument5 paginiData Modeler Tutor I AEli_HuxÎncă nu există evaluări

- Application Server For DevelopersDocument15 paginiApplication Server For DevelopersEli_HuxÎncă nu există evaluări

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- C BOBIP 40 Sample QuestionsDocument5 paginiC BOBIP 40 Sample QuestionsMuralidhar MuppaneniÎncă nu există evaluări

- Sabsa Matrix 2009Document1 paginăSabsa Matrix 2009Eli_HuxÎncă nu există evaluări

- CEN and CENELEC Position Paper On The Proposal For CPR RevisionDocument15 paginiCEN and CENELEC Position Paper On The Proposal For CPR Revisionhalexing5957Încă nu există evaluări

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (345)

- QuinnmcfeetersresumeDocument1 paginăQuinnmcfeetersresumeapi-510833585Încă nu există evaluări

- The BetterPhoto Guide To Creative Digital Photography by Jim Miotke and Kerry Drager - ExcerptDocument19 paginiThe BetterPhoto Guide To Creative Digital Photography by Jim Miotke and Kerry Drager - ExcerptCrown Publishing GroupÎncă nu există evaluări

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- Internship ReportDocument36 paginiInternship ReportM.IMRAN0% (1)

- Calculus HandbookDocument198 paginiCalculus HandbookMuneeb Sami100% (1)

- Department of Education: Income Generating ProjectDocument5 paginiDepartment of Education: Income Generating ProjectMary Ann CorpuzÎncă nu există evaluări

- Grade9 January Periodical ExamsDocument3 paginiGrade9 January Periodical ExamsJose JeramieÎncă nu există evaluări

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- Meniere's Disease - Retinal Detatchment - GlaucomaDocument3 paginiMeniere's Disease - Retinal Detatchment - Glaucomaybet03Încă nu există evaluări

- SEC CS Spice Money LTDDocument2 paginiSEC CS Spice Money LTDJulian SofiaÎncă nu există evaluări

- Specification Sheet: Case I Case Ii Operating ConditionsDocument1 paginăSpecification Sheet: Case I Case Ii Operating ConditionsKailas NimbalkarÎncă nu există evaluări

- How Can Literary Spaces Support Neurodivergent Readers and WritersDocument2 paginiHow Can Literary Spaces Support Neurodivergent Readers and WritersRenato Jr Bernadas Nasilo-anÎncă nu există evaluări

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- Christena Nippert-Eng - Watching Closely - A Guide To Ethnographic Observation-Oxford University Press (2015)Document293 paginiChristena Nippert-Eng - Watching Closely - A Guide To Ethnographic Observation-Oxford University Press (2015)Emiliano CalabazaÎncă nu există evaluări

- Geotechnical Aspects of Open Stope Design at BHP Cannington: G C StreetonDocument7 paginiGeotechnical Aspects of Open Stope Design at BHP Cannington: G C StreetonJuan PerezÎncă nu există evaluări

- Lista de Precios Agosto 2022Document9 paginiLista de Precios Agosto 2022RuvigleidysDeLosSantosÎncă nu există evaluări

- Lego Maps ArtDocument160 paginiLego Maps ArtВячеслав КозаченкоÎncă nu există evaluări

- Toxemias of PregnancyDocument3 paginiToxemias of PregnancyJennelyn LumbreÎncă nu există evaluări

- Chem Resist ChartDocument13 paginiChem Resist ChartRC LandaÎncă nu există evaluări

- Clash of Clans Hack Activation CodeDocument2 paginiClash of Clans Hack Activation Codegrumpysadness7626Încă nu există evaluări

- Q4 Music 6 Module 2Document15 paginiQ4 Music 6 Module 2Dan Paolo AlbintoÎncă nu există evaluări

- Bachelors - Harvest Moon Animal ParadeDocument12 paginiBachelors - Harvest Moon Animal ParaderikaÎncă nu există evaluări

- Historical Exchange Rates - OANDA AUD-MYRDocument1 paginăHistorical Exchange Rates - OANDA AUD-MYRML MLÎncă nu există evaluări

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Toi Su20 Sat Epep ProposalDocument7 paginiToi Su20 Sat Epep ProposalTalha SiddiquiÎncă nu există evaluări

- BioremediationDocument21 paginiBioremediationagung24864Încă nu există evaluări

- Timetable - Alton - London Timetable May 2019 PDFDocument35 paginiTimetable - Alton - London Timetable May 2019 PDFNicholas TuanÎncă nu există evaluări

- Feds Subpoena W-B Area Info: He Imes EaderDocument42 paginiFeds Subpoena W-B Area Info: He Imes EaderThe Times LeaderÎncă nu există evaluări

- War at Sea Clarifications Aug 10Document4 paginiWar at Sea Clarifications Aug 10jdageeÎncă nu există evaluări

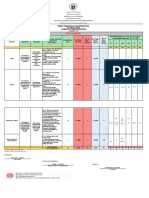

- Revised Final Quarter 1 Tos-Rbt-Sy-2022-2023 Tle-Cookery 10Document6 paginiRevised Final Quarter 1 Tos-Rbt-Sy-2022-2023 Tle-Cookery 10May Ann GuintoÎncă nu există evaluări

- Ginger Final Report FIGTF 02Document80 paginiGinger Final Report FIGTF 02Nihmathullah Kalanther Lebbe100% (2)

- BS en Iso 06509-1995 (2000)Document10 paginiBS en Iso 06509-1995 (2000)vewigop197Încă nu există evaluări

- Le Chatelier's Principle Virtual LabDocument8 paginiLe Chatelier's Principle Virtual Lab2018dgscmtÎncă nu există evaluări