Mitsubishi Electric Research Laboratories Cambridge Research Center

Technical Report 94-17

September 30, 1994

A General Purpose Queue Architecture for an ATM Switch

Hugh C. Lauer Abhijit Ghosh Chia Shen

Abstract

This paper describes a general purpose queue architecture for an ATM switch capable of supporting both real-time and non-real-time communication. The central part of the architecture is a kind of searchable, self-timed FIFO circuit into which are merged the queues of all virtual channels in the switch. Arriving cells are tagged with numerical values indicating the priorities, deadlines, or other characterizations of the order of transmission, then they are inserted into the queue. Entries are selected from the queue both by destination and by tag, with the earliest entry being selected from among a set of equals. By this means, the switch can schedule virtual channels independently, but without maintaining separate queues for each one. This architecture supports a broad class of scheduling algorithms at ATM speeds, so that guaranteed qualities of service can be provided to real-time applications. It is programmable because the tag calculations are done in microprocessors at the interface to each physical link connected to the switch.

Presented at the Massachusetts Telecommunications Conference, University of Massachusetts at Lowell, Lowell, MA, October 25, 1994.

This work may not be copied or reproduced in whole or in part for any commercial purpose. Permission to copy in whole or in part without payment of fee is granted for nonprofit educational and research purposes provided that all such whole or partial copies include the following: a notice that such copying is by permission of Mitsubishi Electric Research Laboratories of Cambridge, Massachusetts; an acknowledgment of the authors and individual contributions to the work; and all applicable portions of the copyright notice. Copying, reproduction, or republishing for any other purpose shall require a license with payment of fee to Mitsubishi Electric Research Laboratories. All rights reserved.

Copyright Mitsubishi Electric Research Laboratories, Inc., 1994
201 Broadway, Cambridge, Massachusetts 02139

Revision History:
1. First version: July 28, 1994
2. Revised September 30, 1994

Problem Statement

A modern high-speed network needs to support hard real-time applications such as plant control and continuous media such as audio or video, as well as ordinary data communications. To do this, the network must be able to schedule the real-time communications so that they meet their quality-of-service goals and are protected from the unpredictable demands of the non-real-time communications, while the remaining bandwidth is provided to the non-real-time communications. There are two parts to the real-time scheduling problem: an off-line part dealing with the setting up of connections (admission control), and an on-line part involving the actual scheduling of individual messages, packets, or cells as they arrive.

A large body of theory about real-time scheduling has yielded many results predicting that certain combinations of off-line and on-line scheduling algorithms can guarantee certain qualities of service if certain a priori conditions are satisfied. In real-time ATM networks, the challenge is the on-line part, i.e., cell-by-cell scheduling. For a network operating at 622 megabits per second, an ATM switch must make a scheduling decision for each physical link within each cell cycle of 680 nanoseconds. In the general case, there will be n virtual channels with cells awaiting transmission, each of which has its own scheduling requirements. Therefore, each decision involves selecting the first cell from the virtual channel with the highest priority, earliest deadline, highest rate, or other parameter.
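The cell-cycle figure quoted above follows from the cell size and the link rate. The following is a minimal sketch of that arithmetic and of the per-cycle selection it constrains. The 53-byte cell is the standard ATM cell size; the exact line rate of 622.08 Mb/s, the function names, and the dictionary representation are assumptions made for illustration, and link framing overhead is ignored.

```python
CELL_BYTES = 53            # standard ATM cell: 5-byte header + 48-byte payload
LINE_RATE_BPS = 622.08e6   # assumption: nominal 622 Mb/s line rate, no framing overhead


def cell_cycle_ns(line_rate_bps=LINE_RATE_BPS, cell_bytes=CELL_BYTES):
    """Time to transmit one cell on the link, in nanoseconds."""
    return cell_bytes * 8 / line_rate_bps * 1e9


def pick_next_channel(head_tags):
    """Return the virtual channel whose head cell has the smallest tag.

    head_tags maps a (hypothetical) channel id to the tag of its first
    waiting cell. This is the n-way comparison that must finish within
    one cell cycle.
    """
    return min(head_tags, key=head_tags.get)


if __name__ == "__main__":
    print(f"cell cycle: {cell_cycle_ns():.1f} ns")
    print(pick_next_channel({"vc1": 30, "vc2": 10, "vc3": 20}))
```

The computed value is slightly above 680 nanoseconds, consistent with the rounded figure used in the text.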

Address: Mitsubishi Electric Research Laboratories, 1050 E. Arques Avenue, Sunnyvale, CA 94086


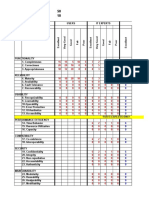

This scheduling decision can be reduced to a comparison that selects the minimum or maximum from among a set of n numerical values. There is no way around this n-way comparison. It is the fundamental complexity of real-time scheduling, and it must be done once during every scheduling interval. If n is very small, it is not difficult. But if n is large, as is likely to be the case in a network with many real-time channels with widely varying characteristics, the comparison becomes a significant part of the design of the switch.

Shared-buffer Switch Architecture

At Mitsubishi Electric, ATM switches use a shared-buffer architecture, an unfolded view of which is shown in Figure 1. The central fabric of the switch is a 2k-port memory, where k is the number of physical links connected to the switch. During each cell cycle, k cells arrive at the k input sides of the physical links, shown on the left, and are concurrently written into the shared cell buffer memory. At the same time, k cells are read from the same memory for transmission to the k output sides of the links. Scheduling is merely a matter of selecting which cells to transmit at which time.

The advantage of this architecture over the more common input- or output-buffered switches is that less total memory is required because it can be statistically multiplexed among the physical links. The total bandwidth of the memory must be 2 * k * B cells per second, where B is the bandwidth of the individual links. This is only slightly more stringent than the buffers of input- and output-buffered switches, which require k separate buffers with bandwidth (k + 1) * B each in order to be non-blocking. Therefore, the shared-buffer architecture makes better use of the physical resources of the switch [Oshima92].

Figure 1: Shared-buffer ATM Switch Architecture (blocks shown: Input Processing, Output Processing, Cell Buffers, and the Queue and Search Mechanism, which exchanges destination information, tags, and buffer addresses with the input and output processing)

Selectable Queue Circuit

One way to do cell-by-cell scheduling is to use a self-timed FIFO with a search circuit such as that described in [Kondoh94] and illustrated in Figure 2. This circuit contains a linear array of registers, as many as there are cell buffers in the memory. Each register contains a cell address, a priority value, and a k-bit destination vector in which one bit represents each physical link. A value of one in a position of the destination vector means that the cell addressed by the entry is waiting to be transmitted to that link.

During each cell cycle, the circuit is searched k times, once for each link. Each search finds the first entry containing a one bit in the corresponding position of the destination vector, i.e., the one nearest the head of the queue (at the bottom of Figure 2). The cell address is retrieved, the cell at that buffer address is transmitted, and that bit of the destination vector is reset. When all of the destination vector bits of an entry are zero, the entry is deemed to be vacant. Then the internal, self-timed circuitry propagates all subsequent entries forward to fill up vacant spaces. In this sense, it acts like a traditional FIFO circuit. New entries are always added to the tail of the queue (shown at the top in the figure).
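The behaviour of the searchable FIFO described above can be modelled in software. The sketch below is only a sequential illustration of the search and vacancy rules, not the self-timed circuit of [Kondoh94]; the class and method names are hypothetical, and index 0 of the list is taken to be the head of the queue.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    cell_addr: int   # address of the cell in the shared buffer
    priority: int    # stored but not used by this plain FIFO search
    dest: list       # k destination bits, one per physical link


class SearchableFifo:
    """Software model of a FIFO that is searched by destination bit."""

    def __init__(self, k):
        self.k = k
        self.entries = []          # index 0 is the head of the queue

    def enqueue(self, cell_addr, priority, dest_links):
        # New entries always join at the tail.
        dest = [1 if link in dest_links else 0 for link in range(self.k)]
        self.entries.append(Entry(cell_addr, priority, dest))

    def search(self, link):
        """Return the cell address of the first entry bound for `link`."""
        for entry in self.entries:            # head toward tail
            if entry.dest[link]:
                entry.dest[link] = 0          # reset the bit for this link
                addr = entry.cell_addr
                # Entries with no destination bits left are vacant; later
                # entries move forward to fill the gap.
                self.entries = [e for e in self.entries if any(e.dest)]
                return addr
        return None                           # nothing queued for this link


if __name__ == "__main__":
    q = SearchableFifo(k=4)
    q.enqueue(100, 0, {0, 2})    # multicast cell for links 0 and 2
    q.enqueue(101, 0, {0})
    print(q.search(0), q.search(0), q.search(2), q.search(2))
```

In this model a multicast cell occupies a single entry and leaves the queue only after every destination bit has been cleared.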

Figure 2: Self-timed FIFO with search circuit (each entry holds a cell address, a priority, and destination bits; entries propagate from the tail toward the head through self-timed circuitry, and a search circuit with a bus/read circuit produces the output)

To support real-time communication, we use a modification of this circuit in which selection takes account of the priority information encoded in numerical tags. Instead of merely selecting the first entry with a particular destination bit set, this circuit selects the entry with both the smallest tag value and a destination bit of one. A block diagram of the circuit is shown in Figure 3.


This figure shows only one column of destination bits (corresponding to one physical link), a tag register for each entry, comparators between adjacent entries, and a simplified selection circuit. Not shown are the cell addresses or the self-timed circuitry that causes entries to propagate from the tail to the head of the queue. The comparators all operate in parallel. Each compares the value of its tag register with the output of the previous comparator (toward the tail of the queue). If the destination bit is one, it returns the smaller of these two values for use by the comparator of the next entry (toward the head of the queue); otherwise, it simply propagates the value from the previous entry to the next entry. Each comparator also outputs a single bit, which has a value of one if and only if (a) the destination bit of that entry is one, and (b) the tag of that entry is not greater than any tag propagated from entries toward the tail of the queue. The selection circuitry on the right is identical to that of Figure 2 and finds the first such entry, i.e., the one nearest the head of the queue.
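The comparator rule just described can be checked with a small sequential model. This is a sketch under stated assumptions rather than a description of the hardware: the comparators are evaluated one after another instead of in parallel, index 0 is taken as the head of the queue, the value entering the chain at the tail is assumed to be larger than any tag, and the function name is hypothetical.

```python
INF = float("inf")   # assumption: value fed into the chain at the tail end


def comparator_chain(tags, dest_bits):
    """Model the chain of comparators for one destination column.

    tags[i] and dest_bits[i] describe queue entry i, with index 0 at the
    head. Returns (bits, selected): the per-entry output bits and the
    index of the entry chosen by the selection circuit, or None.
    """
    n = len(tags)
    bits = [0] * n
    running_min = INF
    for i in range(n - 1, -1, -1):           # from the tail toward the head
        if dest_bits[i] and tags[i] <= running_min:
            bits[i] = 1                      # not greater than anything tailward
            running_min = tags[i]            # pass the smaller value onward
        # Otherwise the incoming value is propagated unchanged.
    # The selection circuit takes the first flagged entry from the head.
    selected = next((i for i, b in enumerate(bits) if b), None)
    return bits, selected


if __name__ == "__main__":
    print(comparator_chain([7, 3, 9, 3, 5], [1, 1, 0, 1, 1]))
```

In the example, two entries carry the smallest tag, and the one nearer the head is selected, which matches the rule that the earliest entry wins among equals.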

Figure 3: Tag-based Selectable Queue Circuit (each stage i has a destination bit, a tag register Ti, and a comparator Ci; minimum values Mi pass from the tail toward the head of the queue, and the comparator output bits feed the selection outputs and the lookahead block)

The queue circuit of Figure 3 is an extremely simplified one. For a practical circuit in a k-by-k shared-buffer switch operating at 622 megabits per second, the circuit must search the queue k times in each 680-nanosecond cell cycle. If k = 16, for example, each search must be completed in about 40 nanoseconds. Even with lookahead, the ripple effect of the comparators takes too long. Therefore, we actually partition the search into sections, each one producing a minimum tag value. The results of the sections are then compared hierarchically to select the minimum overall tag value. Experience with an evaluation circuit reported by Kondoh et al. [Kondoh94], plus estimates based on 0.8 µm CMOS design rules, indicates that a search can be completed in less than 30 nanoseconds for 512 queue entries with 16-bit tags.
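The partitioned search can be illustrated in software as a two-level reduction: a minimum per section, followed by pairwise comparison of the section results. The sketch below is an assumption-laden illustration, not the circuit itself. The section size of 32, the pairwise merging order, and all function names are chosen for the example; the text does not specify them.

```python
def section_minimum(tags, dest_bits, start, stop):
    """Index of the smallest eligible tag in [start, stop), or None.

    Index 0 is the head of the queue; the strict '<' keeps the entry
    nearest the head when tags are equal.
    """
    best = None
    for i in range(start, stop):
        if dest_bits[i] and (best is None or tags[i] < tags[best]):
            best = i
    return best


def hierarchical_search(tags, dest_bits, section_size=32):
    """Find the minimum-tag eligible entry by sections, then by pairs."""
    n = len(tags)
    winners = [section_minimum(tags, dest_bits, s, min(s + section_size, n))
               for s in range(0, n, section_size)]
    winners = [w for w in winners if w is not None]
    while len(winners) > 1:
        merged = []
        for j in range(0, len(winners), 2):
            pair = winners[j:j + 2]
            # Prefer the headward candidate unless the other is strictly smaller.
            if len(pair) == 2 and tags[pair[1]] < tags[pair[0]]:
                merged.append(pair[1])
            else:
                merged.append(pair[0])
        winners = merged
    return winners[0] if winners else None


def search_budget_ns(cell_cycle_ns, k):
    """Time available for each of the k searches in one cell cycle."""
    return cell_cycle_ns / k


if __name__ == "__main__":
    print(search_budget_ns(680, 16))                      # per-search budget
    print(hierarchical_search([5, 2, 2], [1, 1, 1], 2))   # tie goes headward
```

With 512 entries and sections of 32, for instance, the second level would compare 16 section results in four pairwise rounds instead of rippling through all 512 comparators.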


Implementation of Real-time Scheduling Algorithms

This scheduling mechanism is extremely flexible and general and can support a broad class of real-time and non-real-time scheduling algorithms. The key feature is the ability to compute tags for individual cells when they arrive at the switch. For example:

In rate monotonic systems, tags represent intervals between periodic messages. Tasks requiring messages with higher frequencies are assigned smaller tags. Tags can be pre-computed when virtual channels are set up, then assigned by table look-up when cells arrive.

In the Virtual Clock algorithm [Zhang91], tags represent the logical arrival times of cells. These are computed by taking the actual arrival time of each cell, then adjusting it as necessary to reflect early arrivals, etc.

In the Earliest Deadline First algorithm [Zheng94], tags represent deadlines by which time cells must be transmitted. They are calculated by adding pre-computed delay values to logical arrival times.

In addition, round robin, weighted fair queuing, and other algorithms can be supported by defining appropriate tag-calculation functions to be applied to cells arriving at the switch. Moreover, multiple classes of traffic can be supported simultaneously by partitioning the space of tag values and assigning tags in the different subspaces by different algorithms.

In some of the algorithms, such as Virtual Clock and Earliest Deadline First, tag values increase monotonically for each virtual channel. Eventually, they will overflow the tag field of the registers in the queue. To cope with this, a control signal is provided which, when raised, causes each tag to be treated as having one additional high-order bit. The value of this bit is the opposite of the actual high-order bit of the tag. Initially, the high-order bits of all tags are zero. After a while, tags of newer entries in the queue have high-order bits of one.
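The effect of the extra high-order bit can be seen in a short sketch. It assumes 16-bit tags, as in the timing estimate given earlier, and uses a deadline tag formed by adding a delay to a logical arrival time, as in the Earliest Deadline First case. The function names and the numeric values are hypothetical.

```python
TAG_BITS = 16                       # assumption: 16-bit tag field
TAG_MASK = (1 << TAG_BITS) - 1
HIGH_BIT = 1 << (TAG_BITS - 1)


def deadline_tag(logical_arrival, delay):
    """Deadline tag, truncated to the width of the tag field."""
    return (logical_arrival + delay) & TAG_MASK


def effective_value(tag, control_raised):
    """Value by which the comparators order a tag.

    With the control signal raised, the tag gains one extra high-order
    bit whose value is the opposite of the tag's own high-order bit.
    """
    if not control_raised:
        return tag
    extra = 0 if tag & HIGH_BIT else 1
    return (extra << TAG_BITS) | tag


if __name__ == "__main__":
    older = deadline_tag(65000, 500)    # 65500, high-order bit is one
    newer = deadline_tag(65500, 500)    # wraps around to 464
    print(older, newer)
    print(effective_value(older, True) < effective_value(newer, True))
```

Without the control signal the wrapped tag 464 would compare as smaller than 65500 and the newer cell would be sent first; with the signal raised it is treated as 66000, so the older entry keeps its place.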
Assuming that there are enough bits in the tag fields and that all entries are chosen within a predictable period of time, a point will be reached at which all tags in the queue have high-order bits of one. That is, earlier entries will have been selected and transmitted, and the tag values of new entries will not yet have wrapped around back to zero. At this point, the control signal is raised. When the tag values eventually do wrap around back to zero, they will be treated as being larger than the earlier queue entries with ones in the high-order bits of their tags. Later still, all entries with ones in the high-order bits of their tags will have been removed from the queue, at which time the control signal can be lowered again. Note that this depends upon the tag values having enough bits so that the interval between wrap-arounds is greater than the lifetime of any entry in the queue.

Summary

A queue architecture for a shared-buffer ATM switch has been described. This architecture supports real-time communication traffic by allowing cell-by-cell scheduling according to a wide variety of scheduling algorithms. The central feature of the architecture is a tag-based FIFO queue circuit containing entries for all cells to all destinations. It can be searched for the cell with the smallest tag for a particular destination. In effect, it provides the necessary queuing without requiring separate queues for individual virtual channels. The queue circuit can be implemented in VLSI and can control a 16-by-16 switch at 622 megabits per second.

References

[Kondoh94] H. Kondoh, H. Yamanaka, M. Ishiwaki, Y. Matsuda, and M. Nakaya, An Efficient, Self-Timed Queue Architecture for ATM Switch LSIs, Proceedings of Custom Integrated Circuit Conference, San Diego, May 1994.

[Oshima92] K. Oshima, H. Yamanaka, H. Saito, H. Yamada, S. Kohama, H. Kondoh, Y. Matsuda, A New ATM Switch Architecture based on STS-type Shared Buffering and its LSI Implementation, Proceedings of International Switching Symposium 92, Yokohama, Japan, October 1992, pp. 359-363.

[Zhang91] L. Zhang, Virtual Clock: A new traffic control algorithm for packet-switched networks, ACM Transactions on Computer Systems, vol. 9, no. 2, May 1991, pp. 101-124.

[Zheng94] Q. Zheng, K. Shin, and C. Shen, Real-time Communication in ATM Networks, to appear in 19th Annual Local Computer Network Conference, Minneapolis, Oct. 2-5, 1994.
