FPGA Vs Micro Control

Uploaded by senthilvl

FPGAs vs Microcontrollers

by Aftab Sarwar under Articles, Professional

There has always been heated discussion about what to choose: an FPGA or a conventional hard-IP microcontroller. It seems that FPGAs are going to rule in the future because of their flexibility, steadily improving power efficiency and falling prices. Often a soft processor is added to the FPGA design to get microcontroller-like functionality alongside other concurrent processing.

FPGAs are concurrent. You can add sequential functionality by including a soft processor core, whereas a microcontroller is always sequential. This makes FPGAs better suited for real-time applications such as executing DSP algorithms. FPGAs are flexible: you can add or remove functionality as required. This cannot be done in a microcontroller.

FPGAs are favored in military applications, for two main reasons. The first is that FPGAs are hard-wired, so a random strike of alpha particles cannot destroy or corrupt the memory areas and thereby collapse the device functionality. The second is that the lifetime of an FPGA-based development is longer: the design can be adapted when a more advanced chip is required. Microcontrollers change too often, and a lot of rework is required to keep pace with changing technology. This rework is necessary to save the design from becoming obsolete.

Development time with a conventional microcontroller is, I think, shorter. FPGA development takes longer because you need to glue the different modules together yourself and test them thoroughly before doing anything else. With a microcontroller, the peripherals are readily available and you can choose a microcontroller with the peripherals you want. These peripherals are thoroughly pre-tested by the vendor, so you need not worry about their functionality; you just use them. You will find ready-made open-source soft peripherals for FPGAs as well, but gluing them together and testing them is still a task.

Microcontrollers have, up to now, been the more power-efficient choice. Microcontrollers are also low-cost, much cheaper than FPGAs. This is especially true for small applications and large quantities.

Microcontrollers are available in easy-to-solder SOIC and QFP packages; for example, one of the 32-bit Stellaris microcontrollers from TI is available in SOIC28. Many vendors offer TQFP48/64 packages with rich peripheral options. You have limited choice in the case of FPGAs. Devices of the class of Spartan4/5 and above require outsourced PCB/PCBA services, which are expensive and difficult to debug, and are out of reach for an entry-level professional or hobbyist.

What if microcontrollers and FPGAs are used together? It happens! In fact, some vendors such as Altera, Xilinx and Atmel provide configurable logic along with a processor core. But for an electronics engineer (or embedded systems developer), working in both niches is really difficult. I remember taking an FPGA course back in 2008, but I have not been able to make full use of that training even now. This is because expertise in microcontrollers is rarely going to help you in the FPGA field unless you only do embedded development and no advanced digital logic design. Also, although an HDL like Verilog may look similar to C in syntax, it is very different in use and is often confusing right after a session of C coding. The very mechanisms of sequential microcontrollers and concurrent FPGAs are very different.

Many other factors decide what you choose: whether your company is a pure R&D company or a services-based one; your educational background (some universities teach FPGAs and DSP heavily, while others emphasize microcontrollers); your own personal interest and level of comfort; the nature of the project (small-volume and cost-insensitive, or large-volume and cost-sensitive); and, perhaps most importantly, the end application or customer requirement.

There are many other pros and cons that you can research further on the internet, but I think that if your field demands heavy digital logic design (DLD) work and involves a lot of DSP, you should use FPGAs; otherwise, use microcontrollers. Still, only you are the right person to decide what to do, keeping all of the above circumstances in view.

Microprocessors or FPGAs?: Making the Right Choice

The right choice is not always either/or. The right choice can be to combine the best of both worlds by analyzing which strengths of FPGA and CPU best fit the different demands of the application.

STEVE EDWARDS, CURTISS-WRIGHT CONTROLS EMBEDDED COMPUTING

There has been stunning growth in the size and performance of FPGAs in recent years thanks to a number of factors, including the aggressive adoption of finer chip geometries down to 28nm, higher levels of integration, the use of faster serial and communication links, specialized cores, enhanced logic and innovative designs from the major FPGA vendors. Meanwhile, the overall performance growth curve of traditional microprocessors has somewhat flattened due to power density hurdles, which have limited clock rates to around 1.5-2 GHz because the faster the new processors go, the hotter they get. This power density barrier has been somewhat mitigated by the emergence of multicore processors, but as the number of cores increases these devices bring their own set of issues, including how to make optimal use of their parallelism while operating systems and automatic parallelization tools lag far behind.

Improvements in FPGAs have driven a huge increase in their use in space, weight and power (SWaP) constrained embedded computing systems for military and aerospace applications. They are ideal for addressing many classes of military applications, such as radar, SIGINT, image processing and signal processing, where high-performance DSP and other vector or matrix processing is required. SWaP performance rather than cost is the key driver for this.

Given this trend, system designers are more frequently asking the question, "Should I use FPGAs or microprocessors for my next embedded computing project?" And the answer today is, it depends.

FPGAs are an increasingly attractive solution path for demanding applications due to their ability to handle massively parallel processing. FPGAs tend to operate at relatively modest clock rates measured in a few hundreds of megahertz, but they can sometimes perform tens of thousands of calculations per clock cycle while operating in the low tens of watts range of power. Compared to FPGAs, microprocessors that operate in the same power range have significantly lower processing throughput. Typically, a similarly power-rated microprocessor may run at a 1-2 GHz clock rate, or roughly 4 or 5 times as fast as an FPGA, but it will be much more limited in how many operations it can perform per clock cycle, with a maximum typically in the range of four or eight operations per clock. This means that FPGAs can provide 50 to 100 times the performance per watt of a microprocessor.

This might seem to give FPGAs an unbeatable edge over microprocessors, but the advantage of FPGAs in these applications is, unfortunately, not that clear cut. Despite their apparent computational strengths, there are three key factors that determine the utility of FPGAs for a particular application. These factors are algorithm suitability, floating point vs. fixed point number representation, and the general difficulties associated with developing FPGA software. The embedded system designer must carefully weigh the benefits of FPGAs against the real-world limits and the challenges of hosting their application on these devices.

The first question to consider is which types of algorithms FPGAs are best suited for. FPGAs work best on problems that can be described as "embarrassingly parallel," that is, problems that can be easily and efficiently divided into many parallel, often repetitive, computational tasks. Many applications in the military and aerospace environment fall into this class of problem, including radar range and azimuth compression, beamforming and image processing. On the other hand, there are types of computational problems for which FPGAs are not ideal, such as target classification and moving target indication problems. Problems like these, which are by nature unpredictable and dynamic, are much better performed on traditional microprocessors because they require a more dynamic type of parallelization that is unsuitable for FPGAs, whose strong point is repetitive operations. With the advent of multicore processors that will soon be offered with 16, 32, or more cores, the question of how parallel an algorithm is will become more and more important. With more cores, microprocessors become increasingly less suitable for dynamic parallelization problems because the challenge of distributing the problem between the various cores can become intractable.

A second key issue when considering a choice between FPGAs and microprocessors is the fact that FPGAs are not particularly well suited to floating point calculations, which microprocessors address with well-developed vector math engines (Intel's AVX and Power Architecture's AltiVec). While FPGAs can perform these types of calculations, doing so requires an undue amount of logic, which then limits the calculation density of the FPGA and negates much of its computational advantage and value. If an application requires high-precision floating point calculations, then it is probably not a good candidate for implementation on an FPGA. Even high-precision fixed-point calculations require a large quantity of logic cells to implement (Figure 1).
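As a rough illustration of the fixed-point representation discussed above, the following Python sketch shows Q15 arithmetic, one common 16-bit fixed-point format. The choice of Q15, the function names and the test values are assumptions made for this example and do not come from the article. Values in the range [-1, 1) are stored as integers scaled by 2^15, so a multiply needs only an integer multiplier and a shift; widening the format for more precision widens that multiplier accordingly.

```python
Q = 15                                   # fractional bits (Q15 is an assumed format)
SCALE = 1 << Q                           # 32768
INT16_MIN, INT16_MAX = -(1 << 15), (1 << 15) - 1


def saturate(n: int) -> int:
    """Clamp an integer to the signed 16-bit range."""
    return max(INT16_MIN, min(INT16_MAX, n))


def to_q15(x: float) -> int:
    """Convert a real number in [-1, 1) to a saturated Q15 integer."""
    return saturate(int(round(x * SCALE)))


def from_q15(n: int) -> float:
    """Convert a Q15 integer back to a real number."""
    return n / SCALE


def q15_mul(a: int, b: int) -> int:
    """Multiply two Q15 values: 16x16 -> 32-bit product, round, shift back."""
    product = a * b                      # Q30 intermediate result
    product += 1 << (Q - 1)              # add half an LSB to round to nearest
    return saturate(product >> Q)


a = to_q15(0.5)                          # 16384
b = to_q15(-0.25)                        # -8192
p = q15_mul(a, b)                        # -4096, which is -0.125 in Q15
```

Only integer addition, multiplication, shifting and clamping appear here, which is why fixed-point arithmetic is usually the more natural fit for FPGA logic than floating point.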

Figure 1 Chart comparing the amount of logic needed to implement several different fixed point resolution calculations and floating point.

The third key factor in determining whether an FPGA or a microprocessor is better suited for a project is the degree of difficulty that confronts a system designer tasked with implementing the application, and the talent and resources available for the work. The challenges of designing with traditional microprocessors are well established and long familiar. On the other hand, while FPGA development tools have improved dramatically over the last few years, it still takes specialized talent to develop code for an FPGA. And even with expert talent, FPGA development often takes much longer than an equivalent development task for a microprocessor using a high-level language like C or C++. This is partly due to the time and tedium demands of the iterative nature of FPGA code development and the associated long synthesis/simulation/execution design cycle. System developers need to weigh the computational or SWaP density benefits that an FPGA brings to an application against the cost of development.

In addition to these three main issues, there are other less critical considerations, such as which sensor interfaces are required by an application, which may also play into the FPGA vs. microprocessor decision. For example, if the application needs custom or legacy interfaces such as serial front panel data port (SFPDP), which may not be supported on a modern processor, it might be preferable to use an FPGA. FPGAs have an inherent flexibility that enables them to be tailored to connect directly to a sensor stream, as in the above case of legacy or custom interfaces. Microprocessors are limited to the interfaces provided on-chip, such as PCI Express, Serial RapidIO, or Gigabit Ethernet. FPGAs, on the other hand, often provide high-speed SERDES links that can be configured as a wide range of standard interfaces. In addition, the large number of discrete I/O pins on an FPGA can often be used to implement standard or custom parallel bus interfaces. When this I/O flexibility is combined with directly attached memories (SRAM for speed and random access, SDRAM for memory depth, etc.), FPGAs can be a powerful tool for front-end processing and a much better choice than a microprocessor.

Once these factors have been weighed, it is clear that both FPGAs and microprocessors have clear, competitive advantages for different applications. Given all this, it seems that a system designer might have difficulty deciding whether to pick FPGAs or microprocessors for their application. The good news is that the designer can, in fact, have both. In the past, systems tended to be homogeneous, that is, composed of one type of processing element. Today, systems designers have a wide range of products to choose from and can often mix and match computing components to get just the right mix of computing and I/O to meet their application, SWaP and ruggedization requirements. They can choose from a mixture of board types based on a common communications fabric like Serial RapidIO or PCI Express.

Another option, which provides the best of both worlds, is a hybrid FPGA/microprocessor-based board like Curtiss-Wright Controls' CHAMP-FX3. This rugged OpenVPX 6U VPX board features dual Xilinx Virtex-6 FPGAs and an AltiVec-enabled dual-core Freescale Power Architecture MPC8640D processor (Figure 2). It provides dense FPGA resources combined with general-purpose processing, I/O flexibility and support for multiprocessing applications to speed and simplify the integration of advanced digital signal and image processing into embedded systems designed for demanding Radar Processing, Signal Intelligence (SIGINT), ISR, Image Processing, or Electronic Warfare applications.

Figure 2 Champ-FX3.

Using a hybrid FPGA/microprocessor board, designers can customize their system, providing FPGA components where they make sense, with more general-purpose microprocessors or DSPs for the portions of an application for which they are best suited, all provided by standards-based components. This is an especially powerful approach when communications middleware and validated software drivers are available to provide all of the common data movement and synchronization functions.

Today's embedded computing system designer has a wide range of computational and I/O components to choose from in a number of standards-based form factors. Suppliers are offering more choices with greater flexibility to mix components to solve any particular problem. In the near future, the market will likely see more and more product type fragmentation, with a myriad of different mixes of microprocessors and FPGAs offered at both the component and the board level. FPGAs will likely gain many features of traditional microprocessors and vice versa. While more options will surely make the decision process more complex, the wider range of choices in FPGAs and microprocessors promises to empower innovative designers, enabling them to solve more difficult problems than they could solve before.

adkrabang, Thailand (PressExposure) May 20, 2010 --

What are FPGA and CPLD?

An FPGA, or Field Programmable Gate Array, is an integrated circuit designed to be configured after manufacturing, hence the name "field programmable". FPGAs contain programmable logic components called "logic blocks", and a hierarchy of reconfigurable interconnects that allow the blocks to be "wired together". These logic blocks can be configured to perform complex combinational functions or to act as simple logic gates. In most FPGAs, the logic blocks also include memory elements.
The FPGA has the definite advantage that it can be programmed or updated by the user on site, and its non-recurring engineering cost is insignificant compared with an ASIC design. At low volumes, an ASIC design also carries a higher effective unit price once that up-front cost is spread over the units. FPGAs therefore offer advantages to numerous applications.

CPLD stands for Complex Programmable Logic Device. It is a programmable logic device with architectural features of both the PAL and the FPGA, but it is less complex than an FPGA. The macrocell is the building block of a CPLD; it contains logic implementing disjunctive normal form expressions and more specialized logic operations.

The difference between FPGA and CPLD

The primary differences between CPLDs and FPGAs are architectural. A CPLD has a restrictive structure, which results in less flexibility. The FPGA architecture is dominated by interconnect, which makes FPGAs not only far more flexible but also far more complex to design.

Most FPGAs have higher-level functions such as adders, multipliers and embedded memories, as well as logic blocks that implement decoders or mathematical functions. This is not the case with CPLDs. The major difference between the architecture of an FPGA and that of a CPLD is that FPGAs are internally based on look-up tables (LUTs), while CPLDs form their logic functions with a sea-of-gates.

The difference between FPGA and Microcontroller

A microcontroller is a computing system. It has many hierarchical rules and commands governing its inputs and outputs, and it has its own processing unit. A microprocessor can perform loops, timing, conditional branching and calculations like a small PC under program control. Microprocessors are used where the operation is relatively complex but the required processing speed is lower than that of an FPGA. An FPGA is only an array of gates that can be connected as the user wishes. An FPGA is not a computing system. FPGAs are used for relatively simpler operations at higher processing speed in comparison to microcontrollers.

The applications of FPGA in Embedded System

The part played by FPGAs in embedded systems is becoming more crucial every day. This is because the capability of FPGAs is on the rise and powerful FPGA design software is now available as well. The digital video application domain is the main consumer of FPGA systems; it is instrumental in increasing their market share and in driving FPGA development toward a broader spectrum of applications. In recent years FPGAs have been finding application in fast signal processing. An FPGA can also serve as an embedded platform with soft processors, which makes FPGA-based system designs possible. Falling prices and increasing size and capability, combined with easier-to-use design software tools, are positioning the FPGA to take up a crucial role in embedded systems.
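To make the look-up-table idea mentioned above more concrete, here is a minimal Python sketch of a single LUT. The 4-input size, the class name `Lut4` and its methods are assumptions chosen for illustration; real devices differ in LUT width and in how configuration bits are loaded. The point is only that "programming" the block means filling a small truth table, after which any Boolean function of the inputs is answered by a table read.

```python
from itertools import product


class Lut4:
    """A hypothetical 4-input look-up table: 16 configuration bits,
    one output bit stored per input combination."""

    def __init__(self, config_bits: int):
        self.config_bits = config_bits & 0xFFFF

    @classmethod
    def from_function(cls, fn):
        """Build the configuration by tabulating a Boolean function of 4 inputs."""
        bits = 0
        for a, b, c, d in product((0, 1), repeat=4):
            address = (a << 3) | (b << 2) | (c << 1) | d
            if fn(a, b, c, d):
                bits |= 1 << address
        return cls(bits)

    def evaluate(self, a: int, b: int, c: int, d: int) -> int:
        """Look up the stored output bit for the given inputs."""
        address = (a << 3) | (b << 2) | (c << 1) | d
        return (self.config_bits >> address) & 1


# The same structure becomes an AND gate or a parity generator
# purely by changing its 16 configuration bits.
and4 = Lut4.from_function(lambda a, b, c, d: a & b & c & d)
parity = Lut4.from_function(lambda a, b, c, d: a ^ b ^ c ^ d)
```

In this sketch `and4.config_bits` is `0x8000` and `parity.config_bits` is `0x6996`: two different circuits realized by identical hardware with different table contents.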
One way that silicon suppliers reduce manufacturing costs is by discontinuing older product portfolios, leading to microprocessor obsolescence. Multiple versions of those processor cores and a mix of integrated peripherals complicate the situation, leading to a plethora of silicon incarnations for each particular processor architecture.

By integrating peripherals into a single die with a microprocessor, the supplier could target the microcontroller to a particular application domain with high volume needs. (Throughout this article, the term "microcontroller" refers to the entire component, including the processor core and integrated peripherals.) As applications and the standards by which they operate evolve over time, the narrower market space of the microcontroller design makes it more vulnerable to obsolescence.

This article examines the options that are available to designers facing microprocessor (and microcontroller) obsolescence using FPGAs. Before considering those options, however, it is helpful first to consider FPGA technology as a means to address the challenge of microprocessor obsolescence. On a LatticeXP2 FPGA, for example, the CPU core of a 68HC11-compatible microcontroller can be implemented as fully synthesizable code in just 2600 slices. Implementing all the peripherals integrated on this component, as illustrated in the 68HC11-compatible microcontroller shown in Fig 1, will increase this by a few hundred slices.

1. D68HC11 microcontroller implementation.

In addition to easily fitting onto today's FPGAs, this implementation can run, if desired, up to 5 times faster than the original 8-12 MHz component.

The technology curve

FPGAs let designers take advantage of the technology curve while protecting their designs from microprocessor obsolescence. The peripherals, or some combination of them, may also be in danger of obsolescence. In addition to handling obsolete microcontrollers, the FPGA technology curve provides options for integrating surrounding functionality onto the FPGA, which reduces the overall system cost and eliminates concern over other components that may be discontinued. While both SoC-based microcontrollers and FPGAs follow a technology curve as illustrated in Fig 2, with synthesizable RTL and FPGAs designers are protected from future obsolescence, not to mention the huge NREs associated with ASICs.

2. Technology curve adds integration headroom.

The technology curve graph in Fig 2 shows the relative equivalent logic complexity of microprocessors and microcontrollers implemented in silicon versus a soft implementation in an FPGA. As we see, at the time the original microcontroller becomes obsolete, available FPGA logic density is much greater than what is required to implement the microprocessor and its peripherals. This additional logic, called Peripheral Integration Headroom, will only grow with time as FPGA densities continue to increase. Indeed, the D68HC11 illustrated in Fig 1 includes the DoCD block, which provides real-time, non-intrusive system debugging, a function that was not integrated into the original microcontroller.

FPGAs offer a range of design trade-offs, so the designer is given choices in implementing a solution. Designers have the following options:

Option 1: Complete redesign (and "future-proof" the design)

In this scenario, the device supplier might offer a replacement component that may or may not have a similar instruction set architecture (ISA). Choosing this option will require a complete redesign of the hardware and software, using either another component-based microcontroller solution or an FPGA-based solution. If this route is chosen, it is the perfect time to "future-proof" the design with an open source soft processor such as the 32-bit, Harvard architecture LatticeMico32. Unlike the GNU General Public License (GPL) with which the software community is familiar, the innovative open source license for the LatticeMico32 is hardware implementation friendly. It may be ported to any FPGA or ASIC free of charge.

Option 2: Same ISA, Higher Integration

A soft implementation of the original microcontroller component (µP core and integrated peripherals) is programmed onto an FPGA. The soft processor implementation and/or its peripherals may run at a higher speed than in the original component. With this option, the software may require only the minor changes associated with any changes in the peripherals or timing loops. The benefits of this option are that software changes are minimized and the cost of the board is reduced, since other board functions are now integrated into the FPGA.

Option 3: Binary Compatibility, Higher Integration

A soft implementation of the original microcontroller component (µP core and integrated peripherals) with cycle-exact timing is programmed onto an FPGA. The goal here is to use the original binary code without modification. This option enables cost reduction of the board by integrating other original board functionality into the FPGA.

Option 4: Binary Compatibility, Socket Compatibility (typical scenario)

This option uses a mezzanine board to implement the FPGA and minimal associated logic as an exact pin-for-pin replacement for the original microcontroller. This approach eliminates both software and hardware changes, except for the mezzanine board itself.

For example, a 68HC11 MCU in production use is discontinued by the vendor. In evaluating possible approaches to this problem, a customer might stipulate that the replacement part has to work identically to the original in terms of functionality and timing, and also that the same binary program has to run without modification. The customer decides to use a D68HC11 IP Core from Digital Core Design (DCD) in a LatticeXP2 FPGA from Lattice Semiconductor. The on-chip Flash of the LatticeXP2 allows for a simpler design with a lower parts count (Fig 3), because the non-volatile LatticeXP2 does not require an external Flash memory or other circuitry to load the FPGA configuration.

3. Typical implementation for Option 4.

The customer initially might have considered replacing the original 68HC11 hardware with a completely different processor, but this approach would have required replacing the application software. That would have been a daunting task, because the software was written in tight relation to 68HC11 instructions and internal peripherals. Consequently, switching to a new processor would have required considerable effort and time just for the software redesign.

As a result, the most sensible solution would be to replace the discontinued 68HC11 with an FPGA implementation. This option would allow the design team to focus on validation of the D68HC11 IP core inside the FPGA, and avoid changes to any other part of the system (software application, external on-board components) that had worked perfectly for over 15 years.

The most important requirement is to achieve complete software compatibility with the original microprocessor. Not only must the same binary code run on the D68HC11; any change to the binary code is unacceptable. In such cases, the additional restriction is to maintain cycle accuracy of the instructions. This is critical, because the legacy software may use timing delays made with instruction loops. In addition, some of its functionality or peripheral interfaces may rely on exact execution time measured in instructions and clock cycles.

Peripheral compatibility can be difficult to achieve when using this approach. In this case, however, all the digital parts were readily implemented with identical functional and timing behavior. Most of them were off-the-shelf IP core components already designed and verified in the DCD core library.

A related common problem is the replacement of the analog components of the microcontroller, such as analog-to-digital converters (ADCs), power-up/power-fail reset generators and clock oscillators. The FPGA, being a digital programmable device, can implement any digital interface logic related to these functions, but not the analog circuitry itself.

Replacing the digital functionality of a previously integrated ADC is possible using the FPGA implementation. It is necessary to take an external ADC and design a D68HC11 interface to it. Since no currently available ADC has identical conversion accuracy to the original component, one with superior characteristics is selected. The requirement is to have register functionality that is identical to the original ADC interface, including all translation of the control and status signals between the ADC and CPU. The conversion timing must be the same as well. This is the main job of the ADC interface, implemented inside the FPGA as an additional module of the D68HC11.
So, a direct replacement of the legacy part would consist of a small PCB containing the FPGA chip, ADC chip, clock generator and original PLCC socket for direct connection to the customer's system. No software development or changes to the original board would be necessary.

Most of the IP cores used in this FPGA implementation would come from the DCD library and already be fully verified. Any new peripherals needed would be designed to match the requirements of the legacy 68HC11. These new peripherals would be carefully verified against the original peripherals' timing and functionality. The use of an FPGA would also be ideal for verification, since changes made in the HDL code would be verified on the FPGA almost immediately.

Conclusion

In this article we first highlighted the real problem of microprocessor obsolescence and the challenge it presents. We then presented the FPGA-based options available to designers who must deal with discontinued microcontroller components, and we offered a way to future-proof new designs that require a microcontroller. We demonstrated how FPGAs offer a solution to the problem of obsolescence as well as a range of options that can integrate functionality, reduce board cost and real estate, and increase visibility into the design for debugging.
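The cycle-accuracy requirement behind the binary-compatible options can be illustrated with a little arithmetic. The Python sketch below estimates how long a software delay loop lasts; the loop count, the cycles per iteration and the clock figures are illustrative assumptions, not values taken from the design described in this article.

```python
def delay_loop_seconds(iterations: int, cycles_per_iteration: int,
                       clock_hz: float) -> float:
    """Duration of a busy-wait loop on a core with fixed instruction timing."""
    return iterations * cycles_per_iteration / clock_hz


# Assumed legacy firmware: a 1000-iteration loop costing 8 cycles per pass,
# tuned on a core that executes 2 million cycles per second.
original = delay_loop_seconds(1000, 8, 2_000_000)

# Same binary on a soft core clocked 5 times faster: the delay shrinks 5x.
faster_clock = delay_loop_seconds(1000, 8, 10_000_000)

# Same clock, but a core that is not cycle exact (6 cycles per pass, not 8).
not_cycle_exact = delay_loop_seconds(1000, 6, 2_000_000)

for label, seconds in [("original", original),
                       ("5x clock", faster_clock),
                       ("not cycle exact", not_cycle_exact)]:
    print(f"{label:>16}: {seconds * 1000:.2f} ms")
```

Under these assumptions the 4 ms delay becomes 0.8 ms or 3 ms, which is why an unmodified binary needs both the original clock rate and the original per-instruction cycle counts.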

Field Programmable Gate Arrays, or FPGAs, were once simple blocks of gates that could be configured by the user to implement whatever logic he or she wanted. In comparison, a microprocessor is a simplified CPU, or Central Processing Unit. It executes a program made up of a specific set of instructions. The main difference between FPGAs and microprocessors is complexity. Although both vary in complexity depending on the scale, microprocessors tend to be more complex than FPGAs. This is because of the various processes already implemented in them. Microprocessors have a fixed set of instructions, which programmers need to learn in order to create a working program. Each of these instructions has its own corresponding block that is already hardwired into the microprocessor. An FPGA doesn't have any hardwired logic blocks because that would defeat its field programmable aspect. An FPGA is laid out like a net, with each junction containing a switch that the user can make or break. This determines the logic of each block. Programming an FPGA involves learning an HDL, or hardware description language: a low-level language that some people say is as difficult as assembly language.

The development of semiconductors and electronics in general, and the drop in their price, has slowly blurred the lines between FPGAs and microprocessors by literally combining the two in a single package. This gives the combined package a lot more flexibility. The microprocessor does most of the actual processing but passes off the more specific tasks to an FPGA block. This lets you obtain the best of both worlds: the microprocessor handles the general tasks, while custom FPGA blocks give you the ability to incorporate unique functions.

The improvement in electronics has broadened the coverage of microprocessors and FPGAs. If you really want to, you can use a microprocessor and make it do the work of an FPGA. You can also take an FPGA and make it work as a single logic gate. So for most tasks where you are choosing between a microprocessor and an FPGA, you can probably make do with either one.

Summary:
1. Microprocessors are more complex than FPGAs
2. Microprocessors have fixed instructions while FPGAs don't
3. FPGAs and microprocessors are often mixed into a single package

Read more: Difference Between FPGA and Microprocessor | Difference Between | FPGA vs Microprocessor http://www.differencebetween.net/technology/hardware-technology/differencebetween-fpga-and-microprocessor/#ixzz2YWN2aw9o

The Mode-S data signal has 1MBit/sec, so it is pretty fast. It does not have a standard data format that would allow reception with a microcontroller's internal hardware interface like RS232, IC or SPI, so it must be sampled using the CPU and standard processor commands step-by-step. Therewith and and even then just by using a couple of tricks, all these C are just able to look at the signal once per each signal information, mostly in the middle. Even worse, the C just gets a 0 or a 1 information from the comparator, so it either has a 01 or a 10 in order to decide each data bit's value. Next, the C has just one CPU and it cannot do several tasks at the same time. So while calculating the checksum, handling the serial interface or while serving the USB port, it cannot handle frames at the input. There is always a gap in the reception when performing these other, also important tasks. With the FPGA one actually constructs a dedicated hardwarecircuit for the given task, same as many years ago one took TTL or CMOS gates, flipflops, shiftregisters from the shelf. The "code" is not a sequence that is executed by a pre-given hardware, instead hardware pieces are connected in order to work as needed for the task. With such a circuit it is easily possible to sample the incoming signal much more often. The Mode-S Beast samples 16 times more often, and then does averaging over each 8 samples per half bit information. So if just one sample was correct and 7 wrong, still a valid result can be concluded. But, even better: Since there is a AD converter on the board, the FPGA gets level information within each sample, ranging from 0 to 255. So each half bit generates a value from 0 to 2040. While a C has just a decision resolution between 0 and 1, the FPGA has a decision resolution between 2040 different values. It can resolve the signal much better, and so it can read much better from noise. 
Second, in the FPGA there is no CPU that can do just one task at a time; instead, all functionality is built from dedicated hardware gates. The receiver is always working, and in parallel an RS232 frame builder is working all the time. The units do not interfere with each other because each has its own hardware and they share no resources. So no information is lost while other tasks are performed.
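
The difference between the two decision methods can be illustrated with a small software model. This is only a sketch under stated assumptions, not the Mode-S Beast's actual logic: the function names, the comparator threshold of 128, and the rule "the half bit with the larger sum carries the pulse" are all hypothetical. The mapping of "10" to a 1 bit follows the usual Mode-S pulse-position convention. Only the figures of 8 samples per half bit and 0 to 255 per sample come from the text.

```python
SAMPLES_PER_HALF_BIT = 8   # from the text: 16 samples per bit, 8 per half bit
ADC_MAX = 255              # from the text: each sample ranges from 0 to 255


def half_bit_sums(samples):
    """Sum the ADC samples of each half bit; each sum lies in 0..2040."""
    if len(samples) != 2 * SAMPLES_PER_HALF_BIT:
        raise ValueError("expected 16 samples for one bit")
    first = sum(samples[:SAMPLES_PER_HALF_BIT])
    second = sum(samples[SAMPLES_PER_HALF_BIT:])
    return first, second


def decide_bit_soft(samples):
    """FPGA-style decision (assumed rule): compare the two half-bit sums."""
    first, second = half_bit_sums(samples)
    return 1 if first > second else 0


def decide_bit_hard(samples, threshold=128):
    """Microcontroller-style decision: one comparator look per half bit,
    taken near the middle. The threshold value is an assumption."""
    mid = SAMPLES_PER_HALF_BIT // 2
    first = samples[mid] >= threshold
    second = samples[SAMPLES_PER_HALF_BIT + mid] >= threshold
    if first == second:
        return None  # "00" or "11": no valid pulse position
    return 1 if first else 0


# One transmitted 1 bit (pulse in the first half) with two noise hits that
# happen to fall exactly on the single-look sampling instants.
noisy_bit = [200, 190, 210, 180, 40, 205, 195, 185,
             30, 50, 20, 60, 150, 40, 35, 25]

print(half_bit_sums(noisy_bit))
print(decide_bit_soft(noisy_bit), decide_bit_hard(noisy_bit))
```

In this made-up example the single-look method reads the bit wrongly because both of its samples were hit by noise, while the summed levels still clearly favour the first half bit.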

S-ar putea să vă placă și

- Microcontrollers: Compact Integrated Circuits for Embedded SystemsDocument4 paginiMicrocontrollers: Compact Integrated Circuits for Embedded SystemsElaine YosoresÎncă nu există evaluări

- Wikipedia Handbook of Biomedical InformaticsDocument709 paginiWikipedia Handbook of Biomedical InformaticsMonark Huny100% (1)

- HEALTH TRACKING ANALYZER USING IOT: LITERATURE SURVEYDocument39 paginiHEALTH TRACKING ANALYZER USING IOT: LITERATURE SURVEYsai chaithanyaÎncă nu există evaluări

- IotDocument121 paginiIotablemathew173974Încă nu există evaluări

- What is a Microcontroller? Types, Classification & ApplicationsDocument7 paginiWhat is a Microcontroller? Types, Classification & ApplicationsItronix MohaliÎncă nu există evaluări

- Healthcare Monitoring System Using IoTDocument7 paginiHealthcare Monitoring System Using IoTIJRASETPublicationsÎncă nu există evaluări

- MicrocontrollerDocument13 paginiMicrocontrollerseyfiÎncă nu există evaluări

- IT Audit PDFDocument135 paginiIT Audit PDFEri MejiaÎncă nu există evaluări

- 15CS81 Internet of Things Course OverviewDocument76 pagini15CS81 Internet of Things Course OverviewShameek MangaloreÎncă nu există evaluări

- Big DataDocument957 paginiBig Datamughni ghazaliÎncă nu există evaluări

- Iterative machine learning design process for problem-framing and model selectionDocument9 paginiIterative machine learning design process for problem-framing and model selectionrubenÎncă nu există evaluări

- Industry 4.0Document45 paginiIndustry 4.0Gilang Ramadhan IPBÎncă nu există evaluări

- Simulation and Modeling Simulation and Modeling: CPE428/CSC425Document22 paginiSimulation and Modeling Simulation and Modeling: CPE428/CSC425Mau BachÎncă nu există evaluări

- Create An MQTT Dashboard Using ThingsboardDocument30 paginiCreate An MQTT Dashboard Using ThingsboardsahmadaÎncă nu există evaluări

- Cloud TechnologiesDocument56 paginiCloud TechnologiesNarmatha ThiyagarajanÎncă nu există evaluări

- Distributed Systems Characterization and DesignDocument35 paginiDistributed Systems Characterization and DesignkhoadplaÎncă nu există evaluări

- Smart Healthcare Monitoring and Patient Report Generation System Using IOTDocument9 paginiSmart Healthcare Monitoring and Patient Report Generation System Using IOTIJRASETPublicationsÎncă nu există evaluări

- WWW - Xilinx-What Is A CPLD-261016Document45 paginiWWW - Xilinx-What Is A CPLD-261016abdulyunus_amirÎncă nu există evaluări

- System Analysis and DesignDocument25 paginiSystem Analysis and DesignCraig HoodsÎncă nu există evaluări

- The Curse of Dimensionality and Dimensionality ReductionDocument51 paginiThe Curse of Dimensionality and Dimensionality ReductiondbsolutionsÎncă nu există evaluări

- Slurm GuideDocument78 paginiSlurm GuideaaupcÎncă nu există evaluări

- Design and Implementation of A Mini-Size Search Robot PDFDocument4 paginiDesign and Implementation of A Mini-Size Search Robot PDFsrcembeddedÎncă nu există evaluări

- System Testing MethodologyDocument6 paginiSystem Testing Methodologydfdfd100% (1)

- Floating Point ArithDocument8 paginiFloating Point ArithVishnupriya Akinapelli100% (1)

- M.tech CPLD & Fpga Architecture & ApplicationsDocument2 paginiM.tech CPLD & Fpga Architecture & ApplicationssrinivasÎncă nu există evaluări

- Data Profiling Overview: What Is Data Profiling, and How Can It Help With Data Quality?Document3 paginiData Profiling Overview: What Is Data Profiling, and How Can It Help With Data Quality?Nilesh PatilÎncă nu există evaluări

- Big Data & IoTDocument66 paginiBig Data & IoTSadman KabirÎncă nu există evaluări

- Support Vector Machine: Suraj Kumar DasDocument10 paginiSupport Vector Machine: Suraj Kumar DasSuraj Kumar DasÎncă nu există evaluări

- The Curse of Dimensionality - Towards Data Science PDFDocument9 paginiThe Curse of Dimensionality - Towards Data Science PDFLucianoÎncă nu există evaluări

- Complex Event Processing - A SurveyDocument7 paginiComplex Event Processing - A SurveyJournal of ComputingÎncă nu există evaluări

- Ai IntroDocument33 paginiAi IntroNithishÎncă nu există evaluări

- PROJECT REPORT Vidoe TrackingDocument27 paginiPROJECT REPORT Vidoe TrackingKunal KucheriaÎncă nu există evaluări

- Network Security PDFDocument4 paginiNetwork Security PDFSUREKHA SÎncă nu există evaluări

- Security of Internet of ThingsDocument4 paginiSecurity of Internet of ThingsKannan AlagumuthiahÎncă nu există evaluări

- 3 SrsDocument42 pagini3 Srsapi-3775463100% (2)

- Ics2307 Simulation and ModellingDocument79 paginiIcs2307 Simulation and ModellingOloo PunditÎncă nu există evaluări

- Intelligent AgentDocument60 paginiIntelligent AgentMuhd SolihinÎncă nu există evaluări

- Data Science & ML SyllabusDocument12 paginiData Science & ML SyllabusAdityaÎncă nu există evaluări

- Microrobotics: Design, Implementation, Applications, Challenges and FutureDocument11 paginiMicrorobotics: Design, Implementation, Applications, Challenges and FutureSai KrishnaÎncă nu există evaluări

- ITIL v3 Foundations 4 STDocument61 paginiITIL v3 Foundations 4 STjuanatoÎncă nu există evaluări

- Embedded SystemDocument52 paginiEmbedded SystemamitÎncă nu există evaluări

- Technical Seminar Report on Cloud ComputingDocument13 paginiTechnical Seminar Report on Cloud ComputingRajesh KrishnaÎncă nu există evaluări

- Chapter Two (Network Thesis Book)Document16 paginiChapter Two (Network Thesis Book)Latest Tricks100% (1)

- Fast Numerical Methods For Mixed-Integer Nonlinear Model-Predictive ControlDocument380 paginiFast Numerical Methods For Mixed-Integer Nonlinear Model-Predictive ControlDaniel Suarez ReyesÎncă nu există evaluări

- Class vs Reg TreesDocument3 paginiClass vs Reg TreesAnonymousÎncă nu există evaluări

- ICS 2305 Systems ProgrammingDocument20 paginiICS 2305 Systems ProgrammingMartin AkulaÎncă nu există evaluări

- Big Data Question BankDocument15 paginiBig Data Question Bankkokiladevirajavelu0% (2)

- SeminarDocument21 paginiSeminarBalakrushna SahuÎncă nu există evaluări

- Distributed Shared Memory Distributed MemoryDocument19 paginiDistributed Shared Memory Distributed MemorymuraridreamsÎncă nu există evaluări

- PHD Thesis On Big Data in Official Stati PDFDocument134 paginiPHD Thesis On Big Data in Official Stati PDFJoel Yury Vargas SotoÎncă nu există evaluări

- Data-Level Parallelism in Vector, SIMD, And: GPU ArchitecturesDocument29 paginiData-Level Parallelism in Vector, SIMD, And: GPU ArchitecturesMarcelo AraujoÎncă nu există evaluări

- Project and Process MetricesDocument5 paginiProject and Process MetricesSahay AlokÎncă nu există evaluări

- A Seminar Presentation ON: CyborgsDocument53 paginiA Seminar Presentation ON: CyborgsRajesh KesapragadaÎncă nu există evaluări

- Tools and Methods Used in Cybercrime PDFDocument3 paginiTools and Methods Used in Cybercrime PDFVishwanath CrÎncă nu există evaluări

- Health Informatics An International Journal HIIJDocument2 paginiHealth Informatics An International Journal HIIJhiijjournalÎncă nu există evaluări

- 9 Deep Learning Papers that Shaped CNNsDocument19 pagini9 Deep Learning Papers that Shaped CNNsjatin1514Încă nu există evaluări

- IOT Unit3 Web Comm ProtocolsDocument31 paginiIOT Unit3 Web Comm ProtocolsSaumitra PandeyÎncă nu există evaluări

- Lecture 2Document49 paginiLecture 2Ahmad ShdifatÎncă nu există evaluări

- Software Modeling A Complete Guide - 2020 EditionDe la EverandSoftware Modeling A Complete Guide - 2020 EditionÎncă nu există evaluări

- Quartus Ii V7.2: The Signaltap Ii Logic Analyzer LabDocument15 paginiQuartus Ii V7.2: The Signaltap Ii Logic Analyzer LabsenthilvlÎncă nu există evaluări

- Fadd ExtendedDocument36 paginiFadd ExtendedsenthilvlÎncă nu există evaluări

- REF Probabilistic Gate-Level Power Estimation Using A NovelWaveform Set MethodDocument6 paginiREF Probabilistic Gate-Level Power Estimation Using A NovelWaveform Set MethodsenthilvlÎncă nu există evaluări

- CNS QB 2 MK WaDocument25 paginiCNS QB 2 MK WasenthilvlÎncă nu există evaluări

- Operational Description and Message Format of PGPDocument1 paginăOperational Description and Message Format of PGPsenthilvlÎncă nu există evaluări

- Genetic Algorithm Model ExamDocument1 paginăGenetic Algorithm Model ExamsenthilvlÎncă nu există evaluări

- Pre Internal 1Document2 paginiPre Internal 1senthilvlÎncă nu există evaluări

- Int 1Document1 paginăInt 1senthilvlÎncă nu există evaluări

- Application LayerDocument31 paginiApplication LayersenthilvlÎncă nu există evaluări

- Intruders, Passwords, and Intrusion DetectionDocument41 paginiIntruders, Passwords, and Intrusion DetectionsenthilvlÎncă nu există evaluări

- Unit 1 QuestionDocument1 paginăUnit 1 QuestionsenthilvlÎncă nu există evaluări

- Fundamentals & Link LayerDocument38 paginiFundamentals & Link LayersenthilvlÎncă nu există evaluări

- Internal 1 Answer KeyDocument2 paginiInternal 1 Answer KeysenthilvlÎncă nu există evaluări

- Syllabus CNSDocument2 paginiSyllabus CNSsenthilvlÎncă nu există evaluări

- Data Link Layer ConceptsDocument16 paginiData Link Layer ConceptssenthilvlÎncă nu există evaluări

- Internal Examination - 1 Internal Examination - 1Document1 paginăInternal Examination - 1 Internal Examination - 1senthilvlÎncă nu există evaluări

- Department of Electronics and CommunicationDocument3 paginiDepartment of Electronics and CommunicationsenthilvlÎncă nu există evaluări

- Introduction To ADCs, TutorialDocument58 paginiIntroduction To ADCs, Tutorialsenthilvl100% (1)

- Model ExamDocument1 paginăModel ExamsenthilvlÎncă nu există evaluări

- Simulated Output: Wallace:tree MR (3..0) MD (3..0) Booth - Encoder:booth Partial:ppDocument5 paginiSimulated Output: Wallace:tree MR (3..0) MD (3..0) Booth - Encoder:booth Partial:ppsenthilvlÎncă nu există evaluări

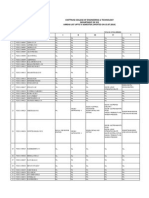

- Arrer List With Subject Name III Ece-NewDocument18 paginiArrer List With Subject Name III Ece-NewsenthilvlÎncă nu există evaluări

- MEIDocument1 paginăMEIsenthilvlÎncă nu există evaluări

- Cover PAGEDocument1 paginăCover PAGEsenthilvlÎncă nu există evaluări

- IT 3 QuestionDocument1 paginăIT 3 QuestionsenthilvlÎncă nu există evaluări

- Ex. No: Date: Creating Virtual Instrumentation For Simple Application AimDocument3 paginiEx. No: Date: Creating Virtual Instrumentation For Simple Application AimsenthilvlÎncă nu există evaluări

- Embedded SystemsDocument2 paginiEmbedded SystemssenthilvlÎncă nu există evaluări

- Analog To Digital Converters PresentationDocument31 paginiAnalog To Digital Converters PresentationJitendra MishraÎncă nu există evaluări

- Expt No.: 2 Design and Implementation of Code Onvertor AimDocument18 paginiExpt No.: 2 Design and Implementation of Code Onvertor AimsenthilvlÎncă nu există evaluări

- MEIDocument1 paginăMEIsenthilvlÎncă nu există evaluări

- Course WorkDocument7 paginiCourse WorksenthilvlÎncă nu există evaluări

- DeepSpeech 0Document15 paginiDeepSpeech 0eranhyzoÎncă nu există evaluări

- Error 2501 Outputto PDFDocument2 paginiError 2501 Outputto PDFDennisÎncă nu există evaluări

- IP Configuration Questions for Network Technician ExamDocument18 paginiIP Configuration Questions for Network Technician ExamИлиян БързановÎncă nu există evaluări

- Memjet C6010 Windows UserGuideDocument107 paginiMemjet C6010 Windows UserGuiderendangenakÎncă nu există evaluări

- ResumeDocument2 paginiResumeHafiz Mujadid KhalidÎncă nu există evaluări

- HP Xw4400 Customer FaqsDocument16 paginiHP Xw4400 Customer FaqsHarryÎncă nu există evaluări

- fs19 LogDocument19 paginifs19 LogFubeszÎncă nu există evaluări

- Mellanox OFED Linux User Manual v2.3-1.0.1Document207 paginiMellanox OFED Linux User Manual v2.3-1.0.1Jason HlavacekÎncă nu există evaluări

- I2C Master/Slave VerificationDocument4 paginiI2C Master/Slave VerificationMeghana VeggalamÎncă nu există evaluări

- Hadoop - The Final ProductDocument42 paginiHadoop - The Final ProductVenkataprasad Boddu100% (2)

- MS1500L LPR Data Logger: Metal Samples CompanyDocument68 paginiMS1500L LPR Data Logger: Metal Samples CompanyfornowisÎncă nu există evaluări

- CP E80.50 SecuRemoteClient UserGuideDocument16 paginiCP E80.50 SecuRemoteClient UserGuideGabino PampiniÎncă nu există evaluări

- Lightroom Shortcuts ClassicDocument17 paginiLightroom Shortcuts ClassicmitoswrcÎncă nu există evaluări

- Sensus Ultra: Developer's GuideDocument16 paginiSensus Ultra: Developer's GuideAngel David PinedaÎncă nu există evaluări

- GeneTouchMonitor User Guide-2.3Document28 paginiGeneTouchMonitor User Guide-2.3Gas2shackÎncă nu există evaluări

- InPage 2009 Professional Uninstall LogDocument13 paginiInPage 2009 Professional Uninstall LogsolypkÎncă nu există evaluări

- 11.4.3.6 Packet Tracer - Troubleshooting Connectivity IssuesDocument3 pagini11.4.3.6 Packet Tracer - Troubleshooting Connectivity IssuesyhonelÎncă nu există evaluări

- Exercises 2017-2018Document6 paginiExercises 2017-2018tuyambaze jean claudeÎncă nu există evaluări

- A Join Vs Database JoinDocument21 paginiA Join Vs Database JoinPradeep KothakotaÎncă nu există evaluări

- Modified Python Lesson PlanDocument3 paginiModified Python Lesson PlanValavala SrinivasuÎncă nu există evaluări

- Kit For The 12F675 Book PDFDocument29 paginiKit For The 12F675 Book PDFCelso Silva SivaÎncă nu există evaluări

- NSButton ClassDocument29 paginiNSButton ClassQamar SaleemÎncă nu există evaluări

- HiAlgo ReadMeDocument3 paginiHiAlgo ReadMeraymond_db_elvizzzÎncă nu există evaluări

- TMC420 Operation Manual PDFDocument152 paginiTMC420 Operation Manual PDFJason Roberts80% (5)

- Apple Product Catalog Fall 1993Document68 paginiApple Product Catalog Fall 1993cinemafia100% (5)

- Isaac Olukunle Resume Edit 2Document2 paginiIsaac Olukunle Resume Edit 2motaÎncă nu există evaluări

- SortDocument204 paginiSortganip007Încă nu există evaluări

- General SAP Tips and TricksDocument17 paginiGeneral SAP Tips and Trickssmithakota100% (1)

- Java Magazine Mar Apr 2019Document104 paginiJava Magazine Mar Apr 2019Kiran KumarÎncă nu există evaluări

- RB750GL ManualDocument2 paginiRB750GL ManualAxel CarcamoÎncă nu există evaluări