Lecture8 - Greedy Algorithms

Uploaded by MrZaggy


COMP2230 Introduction to Algorithmics

Lecture 8

Slides based on:

A. Levitin. Introduction to the Design and Analysis of Algorithms

R. Johnsonbaugh & M. Schaefer. Algorithms

Slides by P. Moscato and Y. Lin

Lecture Overview

Greedy Algorithms Text, Chapter 7

Change-Making Problem

We have an infinite supply of coins of different denominations $d_1 > d_2 > \dots > d_m$.

We want to pay any given amount, using the minimum number of coins.

(This problem is faced every day by millions and millions of cashiers . . .)

Change-Making Problem

For example, we have an infinite supply of each of the following:

1c, 2c, 5c, 10c, 20c, 50c, $1, $2

How would you pay for

$1.05

$2.40

30c

85c

Making change (cont)

This algorithm makes change for an amount A using coins of denominations

denom[1] > denom[2] > ... > denom[n] = 1.

Input Parameters: denom, A

Output Parameters: None

greedy_coin_change(denom, A) {
    i = 1
    while (A > 0) {
        c = ⌊A / denom[i]⌋
        println("use " + c + " coins of denomination " + denom[i])
        A = A - c * denom[i]
        i = i + 1
    }
}
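The pseudocode above can be sketched in Python as follows. This is a minimal sketch rather than the textbook's code: it assumes amounts are given in cents, reads the last two denominations of the earlier example as one-dollar and two-dollar coins (an assumption), and returns the choices instead of printing them. Unlike the pseudocode, it skips denominations of which zero coins are used.

```python
def greedy_coin_change(denom, amount):
    """Return a list of (count, denomination) pairs chosen greedily.

    Assumes denom is sorted in decreasing order and ends with 1,
    as the pseudocode requires; amounts are in cents.
    """
    used = []
    for d in denom:
        if amount == 0:
            break
        count = amount // d  # integer division: as many coins of d as fit
        if count > 0:
            used.append((count, d))
            amount -= count * d
    return used


# Denominations of the example in cents (200 and 100 are assumed dollar coins).
coins = [200, 100, 50, 20, 10, 5, 2, 1]
for amount in (105, 240, 30, 85):
    print(amount, greedy_coin_change(coins, amount))
```

For 85c this picks one coin each of 50, 20, 10 and 5.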

Making change (cont)

greedy_coin_change is a Greedy Algorithm

keeps track of the sum of coins paid so far

checks whether there is still more to pay

If there is more to pay, the algorithm adds coins of the largest amount possible (so as not to exceed the amount needed)

It never changes its mind

Being greedy seems like a good idea!

Can you see when greed is bad?

You should expect this

Bad cases

Greed might end up with a solution which is not optimal: with coin denominations 1, 6 and 10 and amount 22, greedy pays 10 + 10 + 1 + 1 (four coins), but 10 + 6 + 6 uses only three.

Greed might fail to find a feasible solution: if the smallest coin denomination is larger than 1, greedy can get stuck. With coin denominations 3, 4 and 5 and amount 6, greedy takes a 5 and cannot pay the remaining 1, although 3 + 3 works.
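Both bad cases can be checked mechanically. The sketch below compares the greedy coin count with the true optimum; the optimum is computed by a small dynamic-programming table, which is a different technique brought in here only as a reference point. The function names are illustrative.

```python
def greedy_count(denom, amount):
    """Number of coins greedy uses, or None if it gets stuck."""
    count = 0
    for d in sorted(denom, reverse=True):
        count += amount // d
        amount %= d
    return count if amount == 0 else None


def optimal_count(denom, amount):
    """Fewest coins by dynamic programming, or None if impossible."""
    INF = float("inf")
    best = [0] + [INF] * amount  # best[a] = fewest coins that sum to a
    for a in range(1, amount + 1):
        for d in denom:
            if d <= a and best[a - d] + 1 < best[a]:
                best[a] = best[a - d] + 1
    return None if best[amount] == INF else best[amount]


print(greedy_count([1, 6, 10], 22), optimal_count([1, 6, 10], 22))  # 4 3
print(greedy_count([3, 4, 5], 6), optimal_count([3, 4, 5], 6))      # None 2
```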

General characteristics of greedy algorithms

On each step, greedy algorithms make a choice that is:

Feasible (satisfies the constraints)

Locally optimal (the best choice among all feasible choices)

Irrevocable (cannot be changed later)

General characteristics of greedy algorithms

Greedy algorithms generally involve:

a computable selection function used to prioritize (which candidate is most desirable?)

a set of candidates to be considered for inclusion in the solution

a set of candidates which have already been chosen

a set of candidates which have already been rejected

a solution function (to check if our set is a solution)

a feasible function, to check if our set is feasible, i.e. can be extended to make a solution

for optimization problems, an objective function (what is our score?)

Greed: the form

Make-change version:

candidates: (large) set of coins

solution function: check if value of coins chosen = exact amount to be paid

feasible set: total value of coins ≤ total amount to be paid

selection function: choose highest valued coin of remaining set

objective function: count # of coins


Greed: the general form

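greedy(C, S)
    {C is the set of candidates}
    S ← ∅ {S will hold the solution}
    while C ≠ ∅ and not solution(S) do
        x ← select(C)
        C ← C \ {x}
        if feasible(S ∪ {x}) then
            S ← S ∪ {x}
    if solution(S) then
        return S
    else
        return no solution

make_change(val)
    const C = {c_0, c_1, ...}
    S ← ∅ {S will hold the solution}
    s ← 0 {sum of coins in S}
    while s ≠ val do
        x ← largest item in C such that s + x ≤ val
        if no such item then
            return no solution
        S ← S ∪ {a coin of value x}
        s ← s + x
    return S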
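The general form can be sketched as a reusable Python function that takes the selection, feasible and solution functions as parameters. The names `greedy` and `make_change` and the way the candidate pool is built are assumptions made for illustration; the pool simply contains enough copies of each denomination to stand for the "(large) set of coins".

```python
def greedy(candidates, select, feasible, solution):
    """Generic greedy loop: pick, test, keep or reject, never undo."""
    C = list(candidates)   # candidates not yet considered
    S = []                 # candidates chosen so far
    while C and not solution(S):
        x = select(C)      # most desirable remaining candidate
        C.remove(x)        # x is never considered again
        if feasible(S + [x]):
            S.append(x)
    return S if solution(S) else None


def make_change(denom, val):
    # A "(large) set of coins": enough copies of each denomination.
    pool = [d for d in denom for _ in range(val // d)]
    return greedy(
        pool,
        select=max,                                # highest valued coin
        feasible=lambda coins: sum(coins) <= val,  # do not overpay
        solution=lambda coins: sum(coins) == val,  # exact amount reached
    )


print(make_change([10, 6, 1], 22))  # [10, 10, 1, 1]
print(make_change([5, 4, 3], 6))    # None
```

The second call returns None even though 3 + 3 pays the amount: the greedy choice of 5 is irrevocable.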

Minimum Spanning Trees

MST-Problem

Input: G = (V,E,W), a connected undirected graph with non-negative weights on edges

Output: a connected sub-graph G' = (V,T) of G such that the sum of the edge lengths of G' is minimized.

[Figure: example weighted graph with edge weights 10, 5, 8, 7, 16, 2, 1, 8]

MST (cont)

MST must be a tree.

Why ?

Applications:

minimum cost networks

cabling

transport


Greedy strategies for MST

Identify what the candidates are: edges? vertices? something else?

What would the starting point be?

How do we select the next candidate?

What about rejection?

When are we finished?

Greedy by edges

Start with an empty edge set T

Select the next shortest edge (not already seen)

Reject it if it creates a cycle

Stop when we have selected n-1 edges

What does the selected set represent?


Greedy by vertices

Start with a single node (is there a special one we should start at?)

Select the shortest edge

Reject it if it doesn't connect a node in the current set to a node we haven't seen yet

Stop when we have connected all |V| nodes


Kruskal: Greedy by edges - Example

Connected components:

start: {1} {2} {3} {4} {5} {6} {7}

[Figure: weighted graph on vertices 1-7]

Prim's algorithm: Greedy by vertices - Example

starting set: $V_T$ = {1}

[Figure: weighted graph on vertices 1-7]

The greedy template on MST

Candidates are edges in G

A set of edges is a solution if it is a tree which spans V

A set of edges is feasible if it doesn't contain a cycle

Objective function: minimize total length of edges in the solution

Terminology:

Promising set of edges: one which can be extended to form an optimal solution

Leaving edge: an edge with exactly one end in a particular set of vertices

Promising Edges

Promising Lemma

BTW, lemmas are auxiliary statements used to form a basis for more relevant statements (theorems) to be used later on; both lemmas and theorems need to be proved, otherwise they are just conjectures

Given: G = (V,E,W) as before

Given $V_T \subset V$

Given $T \subseteq E$, a promising set of edges such that no edge in T leaves $V_T$

Let e be the shortest edge leaving $V_T$

Then $T \cup \{e\}$ is promising

Proof of Promising Lemma

The proof will be by contradiction

we assume that what we're going to prove is false, and then show that that assumption is false

assume $T \cup \{e\}$ is not promising

We have a promising set (T) and the edge e leaving $V_T$

[Figure: the edge set T inside $V_T$, with the edge e leaving $V_T$]

Proof (cont)

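T is promising, so there is an MST U containing the edges of T

T is the section of the MST U that we've already found

Consider U:

$T \cup \{e\}$ is not promising, so e is not in U and $U \cup \{e\}$ must have a cycle - why?

Because U is a spanning tree, adding e closes a cycle, and that cycle must cross out of $V_T$ a second time: U has another edge u leaving $V_T$ on the path between the end vertices of e. Moreover u must be at least as long as e, that is, $w(u) \ge w(e)$ (because e is the shortest edge leaving $V_T$)

So, let's create a new tree T' by deleting u from U and adding in e

$T' = (U \setminus \{u\}) \cup \{e\}$

Since U was an MST and $w(e) \le w(u)$, so is T'

Now $T \cup \{e\} \subseteq T'$ (u is not in T, because no edge of T leaves $V_T$)

So $T \cup \{e\}$ is promising, contradicting our assumption - so we have our proof!

[Figure: the cycle formed in U by adding e, with u the other edge leaving the vertex set]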
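The weight comparison behind the exchange step can be written out explicitly. In this sketch $w(X)$ denotes the total weight of an edge set $X$ (notation assumed here), and $T'$ is the tree obtained from $U$ by removing $u$ and adding $e$.

```latex
\begin{align*}
w(T') &= w(U) - w(u) + w(e) \\
      &\le w(U) && \text{since } w(e) \le w(u).
\end{align*}
```

Because $U$ is a minimum spanning tree, no spanning tree can weigh less than $w(U)$, so $w(T') = w(U)$ and $T'$ is also minimum.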

Kruskal's Algorithm

Initially, the set of edges T is empty

Each node of G = (V,T) is in itself a connected component

Each step: the smallest not-yet-chosen candidate edge e is considered

If $T \cup \{e\}$ contains no cycle, that is, if e bridges two different connected components, then e is added to T

Otherwise, e is rejected (and never considered again!)

Each addition to T reduces the number of connected components by 1 (why?)

Terminate when only one connected component remains.

Kruskal's Algorithm - Pseudocode

Algorithm Kruskal(G)
//Input: A weighted connected graph G
//Output: A minimum spanning tree of G, given by its set of edges E_T
Sort E in non-decreasing order of the edge weights w(e_i1) ≤ . . . ≤ w(e_i|E|)
E_T ← ∅; //initializing the set of tree edges
counter ← 0; //initializing the number of tree edges
k ← 0; //initializing the number of processed edges
while counter < |V|-1 do
    k ← k + 1;
    if E_T ∪ {e_ik} is acyclic
        E_T ← E_T ∪ {e_ik};
        counter ← counter + 1;
return E_T

Kruskal - an example

Connected components:

start: {1} {2} {3} {4} {5} {6} {7}

Shortest edge: (1,2); no cycle → accept; connected components: {1,2} {3} {4} {5} {6} {7}

Shortest edge: (2,3); no cycle → accept; connected components: {1,2,3} {4} {5} {6} {7}

Shortest edge: (4,5); no cycle → accept; connected components: {1,2,3} {4,5} {6} {7}

Shortest edge: (6,7); no cycle → accept; connected components: {1,2,3} {4,5} {6,7}

Shortest edge: (1,4); no cycle → accept; connected components: {1,2,3,4,5} {6,7}

Shortest edge: (2,5); creates cycle → reject

Shortest edge: (4,7); no cycle → accept; connected components: {1,2,3,4,5,6,7}

[Figure: weighted graph on vertices 1-7]

Correctness of Kruskal's Algorithm

Apply Promising Lemma and use induction

Empty set is promising

Assume by inductive hypothesis that the set T of k edges selected by Kruskal's algorithm is promising. If a shortest edge e does not create a cycle then by the Promising Lemma $T \cup \{e\}$ is also promising.

Implementing Kruskal's Algorithm

Kruskal's algorithm can be efficiently implemented using disjoint sets.

Remember operations on disjoint sets:

makeset(x)

find(x) tells us which component x is in

union(a,b) merges two components
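As a reminder of how these three operations might look in code, here is a minimal Python sketch. The class name `DisjointSets` is illustrative, and the use of union by rank with path compression is an assumption; the slides do not say which disjoint-set variant is intended.

```python
class DisjointSets:
    """Union-find with union by rank and path compression."""

    def __init__(self):
        self.parent = {}
        self.rank = {}

    def makeset(self, x):
        self.parent[x] = x
        self.rank[x] = 0

    def find(self, x):
        # Walk up to the root of x's tree.
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Point every node on the path directly at the root.
        while self.parent[x] != root:
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return False          # already in the same component
        if self.rank[ra] < self.rank[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra      # attach the shallower tree
        if self.rank[ra] == self.rank[rb]:
            self.rank[ra] += 1
        return True


ds = DisjointSets()
for v in range(1, 5):
    ds.makeset(v)
ds.union(1, 2)
ds.union(3, 4)
print(ds.find(1) == ds.find(2), ds.find(2) == ds.find(3))  # True False
```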

Kruskal algorithm

Kruskal's algorithm finds a minimum spanning tree in a connected, weighted graph with vertex set {1, ... , n}.

The input to the algorithm is edgelist, an array of edge, and n. The members of edge are

v and w, the vertices on which the edge is incident.

weight, the weight of the edge.

The output lists the edges in a minimum spanning tree. The function sort sorts the array edgelist in nondecreasing order of weight.

Algorithm 7.2.4 Kruskal's Algorithm

Input Parameters: edgelist, n

Output Parameters: None

kruskal(edgelist, n) {
    sort(edgelist)
    for i = 1 to n
        makeset(i)
    count = 0
    i = 1
    while (count < n - 1) {
        if (findset(edgelist[i].v) != findset(edgelist[i].w)) {
            println(edgelist[i].v + " " + edgelist[i].w)
            count = count + 1
            union(edgelist[i].v, edgelist[i].w)
        }
        i = i + 1
    }
}
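A runnable Python sketch of the same procedure follows. It represents edges as (v, w, weight) tuples and uses a plain parent array with path halving in place of the makeset/findset/union calls, which is a simplification assumed here. The edge weights are hypothetical: they are chosen so that the run reproduces the accept/reject order of the 7-vertex example in this lecture.

```python
def kruskal(edgelist, n):
    """Return the MST edges of a connected graph on vertices 1..n.

    edgelist holds (v, w, weight) tuples; a plain parent array stands in
    for the makeset/findset/union operations.
    """
    parent = list(range(n + 1))            # makeset(i) for i = 1..n

    def findset(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    tree = []
    for v, w, weight in sorted(edgelist, key=lambda e: e[2]):
        rv, rw = findset(v), findset(w)
        if rv != rw:                       # edge joins two components
            parent[rv] = rw                # union
            tree.append((v, w, weight))
            if len(tree) == n - 1:
                break
    return tree


# Hypothetical weights, chosen to match the example's order of decisions.
edges = [(1, 2, 1), (2, 3, 2), (4, 5, 3), (6, 7, 3), (1, 4, 4), (2, 5, 4),
         (4, 7, 4), (3, 5, 5), (2, 4, 6), (3, 6, 6), (5, 7, 7), (5, 6, 8)]
mst = kruskal(edges, 7)
print(mst, sum(weight for _, _, weight in mst))
```

Edge (2,5) is the only one rejected before the tree is complete, and the loop stops as soon as n - 1 = 6 edges have been accepted.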

Analysis of Kruskal's Algorithm

Assume we have n nodes and |E| edges.

Worst case: we have to look at all edges and nodes.

O(|E| log |E|) to sort edges

O(n) to initialize disjoint node sets

O(|E| log n) for findset and union operations

At most 2|E| findset

n-1 unions

|E| ≥ n-1, so n-1 = O(|E|)

Each findset or union takes O(log n)

log |E| is O(log n)

the total is:

O(|E| log n)
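The step "log |E| is O(log n)" can be justified in one line, assuming the graph is simple (no parallel edges), so that it has at most $n(n-1)/2$ edges. The three costs then combine as follows.

```latex
\begin{align*}
|E| \le \tfrac{n(n-1)}{2} < n^2
  &\;\Longrightarrow\; \log |E| < 2\log n = O(\log n), \\
O(|E|\log |E|) + O(n) + O(|E|\log n)
  &= O(|E|\log n).
\end{align*}
```

The $O(n)$ term is absorbed because the graph is connected, so $n - 1 \le |E|$.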

Prim's Algorithm

Greedy by vertices

Initially the set $V_T$ consists of a single vertex of V and T is empty

Each step: the shortest edge e that leaves $V_T$ is selected; edges that do not leave $V_T$ are not candidates at this step

e is added to T and its endpoint not in $V_T$ is added to $V_T$

Terminate when $V_T = V$ (all nodes used up)

Prim's algorithm Pseudocode

algorithm Prim(G)
//Input: A weighted connected graph G
//Output: A minimum spanning tree of G, given by its set of edges E_T
V_T ← {v_0}
E_T ← ∅
for i = 1 to |V|-1
    find a minimum weight edge e* = (v*, u*) among all edges leaving V_T
        (that is, v* ∈ V_T, u* ∈ V - V_T)
    V_T ← V_T ∪ {u*}
    E_T ← E_T ∪ {e*}
return E_T

Prim's algorithm - example

starting set: $V_T$ = {1}

{1,2}

{1,2,3}

{1,2,3,4}

{1,2,3,4,5}

{1,2,3,4,5,7}

{1,2,3,4,5,7,6}

[Figure: weighted graph on vertices 1-7]

Is Prim's Algorithm Correct?

Apply Promising Lemma and use induction

Tree containing a single vertex is promising

Assume by inductive hypothesis that $T = (V_T, E_T)$ of k edges selected by Prim's algorithm is promising. Let e* = (v*, u*) be a shortest edge leaving $V_T$. Then by the Promising Lemma $T' = (V_T \cup \{u^*\}, E_T \cup \{e^*\})$ is also promising.

Analysis of Prim's algorithm

To improve complexity, for each vertex u not in the current tree we can maintain the nearest tree vertex and the weight of the corresponding edge; we call this the label of vertex u

When we add a new vertex u* to the tree, we need to:

add u* to $V_T$

for each vertex u in $V - V_T$ which is connected to u* by an edge shorter than its label, update the label

Time complexity of Prim's algorithm will depend on the data structures used

If we use an adjacency matrix for the graph and an unordered array for the list of labels then the time complexity is $O(n^2)$

If we use adjacency lists for graph G and a min-heap for the list of labels, then the time complexity is O(|E| log n)
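The adjacency-list and heap variant might be sketched in Python as below. Python's `heapq` has no operation for updating a label in place, so this sketch swaps in lazy deletion: it pushes candidate edges and discards stale entries when they are popped. That keeps the O(|E| log n) bound but is not the label-updating heap described above. The six-vertex edge list and the helper `build_adj` are hypothetical, chosen for illustration.

```python
import heapq


def prim(adj, start):
    """Return (tree_edges, total_weight) for a connected undirected graph.

    adj maps each vertex to a list of (neighbour, weight) pairs.
    """
    in_tree = {start}
    tree_edges = []
    total = 0
    heap = [(w, start, u) for u, w in adj[start]]  # edges leaving the tree
    heapq.heapify(heap)
    while heap and len(in_tree) < len(adj):
        w, v, u = heapq.heappop(heap)
        if u in in_tree:
            continue              # stale entry: edge no longer leaves the tree
        in_tree.add(u)
        tree_edges.append((v, u, w))
        total += w
        for x, wx in adj[u]:
            if x not in in_tree:
                heapq.heappush(heap, (wx, u, x))
    return tree_edges, total


def build_adj(edges):
    adj = {}
    for a, b, w in edges:
        adj.setdefault(a, []).append((b, w))
        adj.setdefault(b, []).append((a, w))
    return adj


# Hypothetical edge list for a six-vertex graph a..f.
edges = [("a", "b", 3), ("a", "e", 6), ("a", "f", 5), ("b", "c", 1),
         ("b", "f", 4), ("c", "f", 4), ("c", "d", 6), ("d", "f", 5),
         ("d", "e", 8), ("e", "f", 2)]
tree, total = prim(build_adj(edges), "a")
print(tree, total)
```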

Greedy Shortest Path

What's the difference between this and the Prim/Kruskal algorithms?

Is this just another MST?

What are our candidates?

What do we start with?

When are we finished?

What do we return?

What does a general step in the algorithm look like?

C = set of candidate nodes (yet to be considered)

S = set of nodes already chosen

S initially contains the source

S is maintained so that current shortest paths are stored

At each step, select the closest node to S in C

Stop when all nodes are in S

Dijkstra's Algorithm

algorithm Dijkstra(G, s)
//Dijkstra's algorithm for single-source (s) shortest paths
//Input: A weighted connected graph G = (V,E,W) with non-negative weights and a vertex s
//Output: The length d_v of a shortest path from s to v for each vertex v
for every vertex v in V do
    d_v ← ∞; p_v ← null
    Penqueue(Q, v, d_v) //initialise vertex priority in the priority queue
d_s ← 0; decrease(Q, s, d_s) //update priority of s with d_s
V_T ← ∅
for i = 0 to |V|-1 do
    u* ← Pdequeue(Q) //dequeue the minimum priority element
    V_T ← V_T ∪ {u*}
    for every vertex u in V - V_T that is adjacent to u* do
        if d_u* + w(u*,u) < d_u
            d_u ← d_u* + w(u*,u); p_u ← u*;
            decrease(Q, u, d_u)

Dijkstra Example

[Figure: weighted graph on nodes 1-6 with source node 1, shown with the priority queue contents after each node is removed]

node 1: PQ = [50, 200, 10, ∞, 25]

node 4: PQ = [40, 30, -x-, ∞, 22]

node 6: PQ = [40, 30, -x-, 22, -x-]

node 5: PQ = [40, 30, -x-, -x-, -x-]

node 3: PQ = [35, -x-, -x-, -x-, -x-]

stop

Is it correct?

Terminology:

A path from the source to v is special if all intermediate nodes belong to S

We use an array D to store the length of shortest special paths

We need to prove 2 things:

If node i ≠ 1 is in S, then D[i] gives the length of the shortest path from 1 to i

If node i is not in S, then D[i] gives the length of the shortest special path from 1 to i

How could we prove this?

Consider the proof techniques we've seen so far; which style is more appropriate?

Why is this a good thing to prove?

Why might this proof be more straightforward than proving correctness after it's all finished?

Proof

(1) If node i ≠ 1 is in S, then D[i] gives the length of the shortest path from 1 to i

(2) If node i is not in S, then D[i] gives the length of the shortest special path from 1 to i

Basis?

Inductive assumption?

assume both conditions are true just before we add node v

(1) Prove the path to v passes only through nodes in S.

How?

What other options are there?

(2) Consider node w ≠ v which is not in S

How do we know the special path to w is still shortest

if v is included in it?

if v is not included?

Another look at greedy algorithms

Prim's Algorithm: Greedy by vertices

We build the spanning tree T through a sequence of subtrees $T_i$

Initially the set $V_{T_i}$ consists of a single vertex of V and $T_i$ is empty

At each step we select a vertex that is nearest to the current subtree $T_i$

Terminate when $V_T = V$ (all nodes used up)


To improve complexity, for each vertex u not in the current tree we can maintain the nearest tree vertex and the weight of the corresponding edge; we call this the label of vertex u.

When we add a new vertex u* to the tree, we need to:

add u* to $V_T$

for each vertex u in $V - V_T$ which is connected to u* by an edge shorter than its label, update the label

Example

[Figure: weighted graph on vertices a-f with edge weights 1, 2, 3, 4, 4, 5, 5, 6, 6, 8]

Tree vertices: a(-,-)

Fringe vertices: b(a,3), e(a,6), f(a,5)

Unseen vertices: c(-,∞), d(-,∞)

Nearest vertex: b(a,3)

Example

Tree vertices: a(-,-), b(a,3)

Fringe vertices: c(b,1), e(a,6), f(b,4)

Unseen vertices: d(-,∞)

Nearest vertex: c(b,1)

Example

Tree vertices: a(-,-), b(a,3), c(b,1)

Fringe vertices: e(a,6), f(b,4), d(c,6)

Unseen vertices:

Nearest vertex: f(b,4)

Example

Tree vertices: a(-,-), b(a,3), c(b,1), f(b,4)

Fringe vertices: e(f,2), d(f,5)

Unseen vertices:

Nearest vertex: e(f,2)

Example

Tree vertices: a(-,-), b(a,3), c(b,1), f(b,4), e(f,2)

Fringe vertices: d(f,5)

Unseen vertices:

Nearest vertex: d(f,5)

Example

Tree vertices: a(-,-), b(a,3), c(b,1), f(b,4), e(f,2), d(f,5)

Fringe vertices:

Unseen vertices:

Nearest vertex:

Analysis of Prim's algorithm

Time complexity of Prim's algorithm will depend on the data structures used; note that the list of labels on fringe vertices is a priority queue

If we use an adjacency matrix for the graph and an unordered array for the list of labels then the time complexity is $O(n^2)$:

To create the list of labels: O(n)

At each step, finding a minimum in the list and updating the list takes O(n)

There are n-1 steps, thus $O(n^2)$ in total
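The adjacency-matrix variant with an unordered array of labels might look like the Python sketch below. The arrays `dist` and `parent` together play the role of the labels; the function name `prim_matrix` and the six-vertex edge list are assumptions made for illustration.

```python
INF = float("inf")


def prim_matrix(W, start=0):
    """Prim's algorithm on an adjacency matrix W (INF where no edge).

    Returns parent, where parent[u] is the tree vertex u was attached to.
    """
    n = len(W)
    in_tree = [False] * n
    dist = [INF] * n        # label weight: cheapest edge from u into the tree
    parent = [None] * n     # label vertex: nearest tree vertex
    dist[start] = 0
    for _ in range(n):
        # O(n) scan for the vertex outside the tree with the smallest label.
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: dist[v])
        in_tree[u] = True
        # O(n) pass to update labels of vertices still outside the tree.
        for v in range(n):
            if not in_tree[v] and W[u][v] < dist[v]:
                dist[v] = W[u][v]
                parent[v] = u
    return parent


names = "abcdef"
# Hypothetical edge list for a six-vertex graph a..f.
edges = [("a", "b", 3), ("a", "e", 6), ("a", "f", 5), ("b", "c", 1),
         ("b", "f", 4), ("c", "f", 4), ("c", "d", 6), ("d", "f", 5),
         ("d", "e", 8), ("e", "f", 2)]
W = [[INF] * 6 for _ in range(6)]
for x, y, w in edges:
    i, j = names.index(x), names.index(y)
    W[i][j] = W[j][i] = w
parent = prim_matrix(W)
print({names[v]: names[p] for v, p in enumerate(parent) if p is not None})
```

Each of the n rounds does two linear passes, which is where the quadratic total comes from.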

Analysis of Prim's algorithm

If we use adjacency lists for graph G and a min-heap for the list of labels, then the time complexity is O(|E| log n):

To create a heap: O(n)

At each step the min element is deleted from the heap and the heap is restored in O(lg n); also up to $d_i$ elements are updated, where $d_i$ is the degree of the vertex currently being added to the tree, and each update takes O(lg n)

In total, there will be at most one update for each edge, thus O(|E| log n)

It can be shown also that O(|E| log n), thus we have O(|E| log n)

Dijkstra's Algorithm for computing single-source shortest paths

Dijkstra's algorithm is very similar to Prim's. To improve complexity, for each vertex u not in the current tree we can maintain the parent tree vertex and the distance from the source; we call this the label of vertex u.

When we add a new vertex u* to the tree, we need to:

add u* to $V_T$

for each vertex u in $V - V_T$ which is connected to u* by an edge of weight w(u*,u) such that $d_{u^*} + w(u^*,u) < d_u$, update the label of u to $d_{u^*} + w(u^*,u)$ and set its parent to u*

Dijkstra's Algorithm

algorithm Dijkstra(G, s)
//Dijkstra's algorithm for single-source (s) shortest paths
//Input: A weighted connected graph G = (V,E,W) with non-negative weights and a vertex s
//Output: The length d_v of a shortest path from s to v for each vertex v, and its parent vertex p_v
for every vertex v in V do
    d_v ← ∞; p_v ← null
    Penqueue(Q, v, d_v) //initialise vertex priority in the priority queue
d_s ← 0; decrease(Q, s, d_s) //update priority of s with d_s
V_T ← ∅
for i = 0 to |V|-1 do
    u* ← Pdequeue(Q) //dequeue the minimum priority element
    V_T ← V_T ∪ {u*}
    for every vertex u in V - V_T that is adjacent to u* do
        if d_u* + w(u*,u) < d_u
            d_u ← d_u* + w(u*,u); p_u ← u*;
            decrease(Q, u, d_u)
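A Python sketch of this procedure is given below. Python's `heapq` offers no decrease operation, so instead of updating a priority in place the sketch pushes a fresh entry and skips outdated ones when they are popped (lazy deletion); this is a substitute technique, not the queue interface used in the pseudocode. The six-vertex edge list and the helper `build_adj` are hypothetical.

```python
import heapq


def dijkstra(adj, s):
    """Return (d, p): shortest distances from s and parent vertices.

    adj maps each vertex to a list of (neighbour, weight) pairs with
    non-negative weights.
    """
    d = {v: float("inf") for v in adj}
    p = {v: None for v in adj}
    d[s] = 0
    done = set()                      # vertices whose distance is final
    heap = [(0, s)]
    while heap:
        du, u = heapq.heappop(heap)
        if u in done:
            continue                  # stale entry left by an earlier update
        done.add(u)
        for v, w in adj[u]:
            if v not in done and du + w < d[v]:
                d[v] = du + w
                p[v] = u
                heapq.heappush(heap, (d[v], v))  # stands in for decrease
    return d, p


def build_adj(edges):
    adj = {}
    for a, b, w in edges:
        adj.setdefault(a, []).append((b, w))
        adj.setdefault(b, []).append((a, w))
    return adj


# Hypothetical edge list for a six-vertex graph a..f.
edges = [("a", "b", 3), ("a", "e", 6), ("a", "f", 5), ("b", "c", 1),
         ("b", "f", 4), ("c", "f", 4), ("c", "d", 6), ("d", "f", 5),
         ("d", "e", 8), ("e", "f", 2)]
d, p = dijkstra(build_adj(edges), "a")
print(d)
print(p)
```

Because the comparison is strict, a vertex keeps its first parent when a later path ties with its current distance.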

Example

[Figure: weighted graph on vertices a-f with edge weights 1, 2, 3, 4, 4, 5, 5, 6, 6, 8]

Tree vertices:

Fringe vertices: a(-,0)

Unseen vertices: b(-,∞), c(-,∞), d(-,∞), e(-,∞), f(-,∞)

Nearest vertex: a(-,0)

Example

Tree vertices: a(-,0)

Fringe vertices: b(a,3), e(a,6), f(a,5)

Unseen vertices: c(-,∞), d(-,∞)

Nearest vertex: b(a,3)

Example

Tree vertices: a(-,0), b(a,3)

Fringe vertices: e(a,6), f(a,5), c(b,1+3)

Unseen vertices: d(-,∞)

Nearest vertex: c(b,1+3)

Example

Tree vertices: a(-,0), b(a,3), c(b,4)

Fringe vertices: e(a,6), f(a,5), d(c,6+4)

Unseen vertices:

Nearest vertex: f(a,5)

Example

Tree vertices: a(-,0), b(a,3), c(b,4), f(a,5)

Fringe vertices: e(a,6), d(c,6+4)

Unseen vertices:

Nearest vertex: e(a,6)

Example

Tree vertices: a(-,0), b(a,3), c(b,4), f(a,5), e(a,6)

Fringe vertices: d(c,6+4)

Unseen vertices:

Nearest vertex: d(c,10)

Example

Tree vertices: a(-,0), b(a,3), c(b,4), f(a,5), e(a,6), d(c,10)

Fringe vertices:

Unseen vertices:

Nearest vertex:

S-ar putea să vă placă și

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- Power4Home Wind Power GuideDocument72 paginiPower4Home Wind Power GuideMrZaggyÎncă nu există evaluări

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Hand Loading For Long Range PDFDocument44 paginiHand Loading For Long Range PDFMrZaggyÎncă nu există evaluări

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Scale Aircraft Modelling Dec 2014Document86 paginiScale Aircraft Modelling Dec 2014MrZaggy94% (17)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

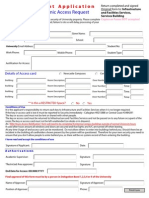

- Student Electronic Access Application PDFDocument1 paginăStudent Electronic Access Application PDFMrZaggyÎncă nu există evaluări

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- UAV GNC Chapter PDFDocument30 paginiUAV GNC Chapter PDFMrZaggyÎncă nu există evaluări

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- Lecture 5Document33 paginiLecture 5MrZaggyÎncă nu există evaluări

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (400)

- Lecture4 - Binary Search, Topological Sort and BacktrackingDocument53 paginiLecture4 - Binary Search, Topological Sort and BacktrackingMrZaggyÎncă nu există evaluări

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Lecture1 - Introduction To Algorithmics and Maths RevisionDocument87 paginiLecture1 - Introduction To Algorithmics and Maths RevisionMrZaggyÎncă nu există evaluări

- INFT1004 Visual Programming: Sound and Arrays (Guzdial & Ericson Chapters 6 and 7)Document77 paginiINFT1004 Visual Programming: Sound and Arrays (Guzdial & Ericson Chapters 6 and 7)MrZaggyÎncă nu există evaluări

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- INFT1004 Visual Programming: Programs, Arrays and Iteration (Guzdial & Ericson Chapter 3)Document83 paginiINFT1004 Visual Programming: Programs, Arrays and Iteration (Guzdial & Ericson Chapter 3)MrZaggyÎncă nu există evaluări

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- Inft1004 Lec1 Introduction SmallDocument18 paginiInft1004 Lec1 Introduction SmallMrZaggyÎncă nu există evaluări

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- Kruskal's Algorithm - WikipediaDocument22 paginiKruskal's Algorithm - WikipediaSubhadeep DasÎncă nu există evaluări

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- 4 Greedy UpdatedDocument139 pagini4 Greedy UpdatedRyan DolmÎncă nu există evaluări

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (74)

- Module 3 - DAA VtuDocument80 paginiModule 3 - DAA VtuSATYAM JHAÎncă nu există evaluări

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Cs - 502 Final Term Solve by Vu - ToperDocument54 paginiCs - 502 Final Term Solve by Vu - Topermirza adeelÎncă nu există evaluări

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- Clustering: Sridhar S Department of IST Anna UniversityDocument91 paginiClustering: Sridhar S Department of IST Anna UniversitySridhar SundaramurthyÎncă nu există evaluări

- Kruskal's Algorithm: Melanie FerreriDocument21 paginiKruskal's Algorithm: Melanie FerreriWork 133Încă nu există evaluări

- UNIT - IV (Graphs)Document17 paginiUNIT - IV (Graphs)varun MAJETIÎncă nu există evaluări

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- DAA - Unit IV - Greedy Technique - Lecture Slides PDFDocument50 paginiDAA - Unit IV - Greedy Technique - Lecture Slides PDFkennygoyalÎncă nu există evaluări

- Minimum Spanning TreeDocument10 paginiMinimum Spanning TreeAlejandrina Jiménez GuzmánÎncă nu există evaluări

- Daa Practical File RachitDocument26 paginiDaa Practical File RachitRachit SharmaÎncă nu există evaluări

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- Experiment No. 4 Design and Analysis Spanning Tree: Solve Minimum Cost Spanning Tree Problem Using Greedy MethodDocument4 paginiExperiment No. 4 Design and Analysis Spanning Tree: Solve Minimum Cost Spanning Tree Problem Using Greedy Methoddalip kumarÎncă nu există evaluări

- Management Science 123Document46 paginiManagement Science 123Makoy BixenmanÎncă nu există evaluări

- Graph Algorithms (1x4)Document17 paginiGraph Algorithms (1x4)Abraham AlemsegedÎncă nu există evaluări

- Brute Force Approach in AlgotihmDocument10 paginiBrute Force Approach in AlgotihmAswin T KÎncă nu există evaluări

- Tugas PipaDocument13 paginiTugas PipaGinanjar Hadi SukmaÎncă nu există evaluări

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- DAA Unit 3Document23 paginiDAA Unit 3Loganayagi PÎncă nu există evaluări

- Data Structures Coding Interview 90+ ChaptersDocument35 paginiData Structures Coding Interview 90+ Chaptersvenkatesh T100% (1)

- Lab FileDocument29 paginiLab FileHarshita MeenaÎncă nu există evaluări

- Minimum Spanning TreeDocument21 paginiMinimum Spanning Treetylerminecraft0906Încă nu există evaluări

- Minimum Spanning TreeDocument15 paginiMinimum Spanning TreeNeha VermaÎncă nu există evaluări

- Minimum Spanning Tree (Prim's and Kruskal's Algorithms)Document17 paginiMinimum Spanning Tree (Prim's and Kruskal's Algorithms)HANISH THARWANI 21BCE11631Încă nu există evaluări

- HN Daa m3 Questions PDFDocument5 paginiHN Daa m3 Questions PDFAbdallahi SidiÎncă nu există evaluări

- COMP2521 Week 6 - Minimum Spanning TreesDocument24 paginiCOMP2521 Week 6 - Minimum Spanning TreesHysan DaxÎncă nu există evaluări

- Locally Optimal Choice at Each Stage in Hope of Getting A Globally Optimal SolutionDocument13 paginiLocally Optimal Choice at Each Stage in Hope of Getting A Globally Optimal Solutionsai kumarÎncă nu există evaluări

- Greedy Algorithm LectueNoteDocument33 paginiGreedy Algorithm LectueNotedagiÎncă nu există evaluări

- Om Phat Swaha: Tatya PDFDocument1.647 paginiOm Phat Swaha: Tatya PDFShekhar JadhavÎncă nu există evaluări

- VLSI Logic Circuits and Graph Theory-V3Document5 paginiVLSI Logic Circuits and Graph Theory-V3rpimsrocksÎncă nu există evaluări

- 19BCS099 - Assignment On Prims and Kruskal AlgorithmDocument5 pagini19BCS099 - Assignment On Prims and Kruskal AlgorithmRohit ShindeÎncă nu există evaluări

- Traveling Salesman ProblemDocument53 paginiTraveling Salesman ProblemAnkita RamcharanÎncă nu există evaluări

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- CSE2208-Lab ManualDocument28 paginiCSE2208-Lab Manualnadif hasan purnoÎncă nu există evaluări