
Contents

Regression and Correlation

43.1 Regression

43.2 Correlation

Learning outcomes

You will learn how to explore relationships between variables and how to measure the strength of such relationships. You should note from the outset that simply establishing a relationship is not enough. You may establish, for example, a relationship between the number of hours a person works in a week and their hat size. Should you conclude that working hard causes your head to enlarge? Clearly not: any relationship existing here is not causal!


43.1 Regression

Introduction

Problems in engineering often involve exploring the relationship(s) between two or more variables. The technique of regression analysis is very useful and widely used in this situation. This Section looks at the basics of regression analysis and should enable you to apply regression techniques to the study of relationships between variables. Just because a relationship exists between two variables does not necessarily imply that the relationship is causal. You might find, for example, that there is a relationship between the hours a person spends watching TV and the incidence of lung cancer. This does not necessarily imply that watching TV causes lung cancer.

Assuming that a causal relationship does exist, we can measure the strength of the relationship by means of a correlation coefficient, discussed in the next Section. As you might expect, tests of significance exist which allow us to interpret the meaning of a calculated correlation coefficient.

Prerequisites

Before starting this Section you should . . .

• have knowledge of Descriptive Statistics (Workbook 36)

• be able to find the expectation and variance of sums of variables (Section 39.3)

• understand the terms independent and dependent variables

• understand the terms biased and unbiased estimators

Learning Outcomes

On completion you should be able to . . .

• define the terms regression analysis and regression line

• use the method of least squares for finding a line of best fit

HELM (2005): Workbook 43: Regression and Correlation


1. Regression

As we have already noted, relationship(s) between variables are of interest to engineers who may

wish to determine the degree of association existing between independent and dependent variables.

Knowing this often helps engineers to make predictions and, on this basis, to forecast and plan.

Essentially, regression analysis provides a sound knowledge base from which accurate estimates of

the values of a dependent variable may be made once the values of related independent variables are

known.

It is worth noting that in practice the choice of independent variable(s) may be made by the engineer on the basis of experience and/or prior knowledge, since this may indicate which independent variables are likely to have a substantial influence on the dependent variable. In summary, we may state that the principal objectives of regression analysis are:

(a) to enable accurate estimates of the values of a dependent variable to be made from known

values of a set of independent variables;

(b) to enable estimates of errors resulting from the use of a regression line as a basis of

prediction.

Note that if a regression line is represented as y = f(x) where x is the independent variable, then

the actual function used (linear, quadratic, higher degree polynomial etc.) may be obtained via the

use of a theoretical analysis or perhaps a scatter diagram (see below) of some real data. Note that

a regression line represented as y = f(x) is called a regression line of y on x.

Scatter diagrams

A useful first step in establishing the degree of association between two variables is the plotting of a

scatter diagram. Examples of pairs of measurements which an engineer might plot are:

(a) volume and pressure;

(b) acceleration and tyre wear;

(c) current and magnetic field;

(d) torsion strength of an alloy and purity.

If there exists a relationship between measured variables, it can take many forms. Even though an

outline introduction to non-linear regression is given at the end of this Workbook, we shall focus on

the linear relationship only.

In order to produce a good scatter diagram you should follow the steps given below:

1. Give the diagram a clear title and indicate exactly what information is being displayed;

2. Choose and clearly mark the axes;

3. Choose carefully and clearly mark the scales on the axes;

4. Indicate the source of the data.


Examples of scatter diagrams are shown below.

[Figures 1, 2 and 3: three example scatter diagrams.]

Figure 1 shows an association which follows a curve, possibly exponential, quadratic or cubic;

Figure 2 shows a reasonable degree of linear association where the points of the scatter diagram

lie in an area surrounding a straight line;

Figure 3 represents a randomly placed set of points and no linear association is present between

the variables.

Note that in Figure 2, the word reasonable is not defined and that while points close to the

indicated straight line may be explained by random variation, those far away may be due to assignable

variation.

The rest of this Section will deal with linear association only although it is worth noting that techniques

do exist for transforming many non-linear relationships into linear ones. We shall investigate linear

association in two ways, firstly by using educated guesswork to obtain a regression line by eye and

secondly by using the well-known technique called the method of least squares.

Regression lines by eye

Note that at a very simple level, we may look at the data and, using an educated guess, draw

a line of regression by eye through a set of points. However, finding a regression line by eye is

unsatisfactory as a general statistical method since it involves guess-work in drawing the line with

the associated errors in any results obtained. The guess-work can be removed by the method of least

squares in which the equation of a regression line is calculated using data. Essentially, we calculate

the equation of the regression line by minimising the sum of the squared vertical distances between

the data points and the line.


The method of least squares - an elementary view

We assume that an experiment has been performed which has resulted in $n$ pairs of values, say $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$, and that these results have been checked for approximate linearity on the scatter diagram given below.

[Figure 4: scatter diagram of the points $P_1(x_1, y_1), P_2(x_2, y_2), \ldots, P_n(x_n, y_n)$ about the line $y = mx + c$, with the points $Q_1, Q_2, \ldots, Q_n$ also marked. Axes: $x$ (horizontal), $y$ (vertical), origin $O$.]

The vertical distances of each point from the line $y = mx + c$ are easily calculated as

$$y_1 - mx_1 - c,\quad y_2 - mx_2 - c,\quad y_3 - mx_3 - c,\quad \ldots,\quad y_n - mx_n - c$$

These distances are squared to guarantee that they are positive and calculus is used to minimise the sum of the squared distances. Effectively we are minimising a function of the two variables $m$ and $c$ and need to use partial differentiation. If you wish to follow this up and look in more detail at the technique, any good book (engineering or mathematics) containing sections on multi-variable calculus should suffice. We will not look at the details of the calculations here but simply note that the process results in two equations in the two unknowns $m$ and $c$. These equations are:

$$\sum xy - m\sum x^2 - c\sum x = 0 \qquad \text{(i)}$$

and

$$\sum y - m\sum x - nc = 0 \qquad \text{(ii)}$$

The second of these equations (ii) immediately gives a useful result. Rearranging the equation we get

$$\frac{\sum y}{n} - m\frac{\sum x}{n} - c = 0 \quad \text{or, put more simply,} \quad \bar{y} = m\bar{x} + c$$

where $(\bar{x}, \bar{y})$ is the mean of the array of data points $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$.

This shows that the mean of the array always lies on the regression line. Since the mean is easily

calculated, the result forms a useful check for a plotted regression line. Ensure that any regression

line you draw passes through the mean of the array of data points.
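For readers who want the omitted step, the following is a brief sketch of how equations (i) and (ii) arise and how $c$ can be eliminated. The name $S(m, c)$ for the sum of squared vertical distances is introduced here for convenience only; it is an assumption of this sketch rather than notation used at this point in the text.

```latex
\begin{align*}
S(m, c) &= \sum (y - mx - c)^2 \\[4pt]
\frac{\partial S}{\partial m} = -2\sum x\,(y - mx - c) = 0
  &\;\Longrightarrow\; \sum xy - m\sum x^2 - c\sum x = 0 \quad \text{(i)} \\[4pt]
\frac{\partial S}{\partial c} = -2\sum (y - mx - c) = 0
  &\;\Longrightarrow\; \sum y - m\sum x - nc = 0 \quad \text{(ii)} \\[4pt]
\text{(ii)} &\;\Longrightarrow\; c = \bar{y} - m\bar{x} \\[4pt]
\text{(i)} \div n \text{ with } c = \bar{y} - m\bar{x}: \quad
  \frac{\sum xy}{n} - m\,\frac{\sum x^2}{n} - (\bar{y} - m\bar{x})\,\bar{x} = 0
  &\;\Longrightarrow\;
  m\left(\frac{\sum x^2}{n} - \bar{x}^2\right) = \frac{\sum xy}{n} - \bar{x}\,\bar{y}
\end{align*}
```

Dividing the last line through by the bracket on the left gives the gradient in terms of sums that are easy to tabulate.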

Eliminating c from the equations gives a formula for the gradient m of the regression line, this is:


$$m = \frac{\dfrac{\sum xy}{n} - \dfrac{\sum x}{n}\,\dfrac{\sum y}{n}}{\dfrac{\sum x^2}{n} - \left(\dfrac{\sum x}{n}\right)^2} \quad \text{often written as} \quad m = \frac{S_{xy}}{S_x^2}$$

The quantity $S_x^2$ is, of course, the variance of the $x$-values. The quantity $S_{xy}$ is known as the covariance (of $x$ and $y$) and will appear again later in this Workbook when we measure the degree of linear association between two variables.

Knowing the value of $m$ enables us to obtain the value of $c$ from the equation $\bar{y} = m\bar{x} + c$.

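Key Point 1

Least Squares Regression - y on x

The least squares regression line of $y$ on $x$ has the equation $y = mx + c$. Remember that:

$$m = \frac{\dfrac{\sum xy}{n} - \dfrac{\sum x}{n}\,\dfrac{\sum y}{n}}{\dfrac{\sum x^2}{n} - \left(\dfrac{\sum x}{n}\right)^2}$$

and $c$ is given by the equation $c = \bar{y} - m\bar{x}$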

It should be noted that the coefficients $m$ and $c$ obtained here will give us the regression line of $y$ on $x$. This line is used to predict $y$ values given $x$ values. If we need to predict the values of $x$ from given values of $y$ we need the regression line of $x$ on $y$. The two lines are not the same except in the (very) special case where all of the points lie exactly on a straight line. It is worth noting, however, that the two lines cross at the point $(\bar{x}, \bar{y})$. It can be shown that the regression line of $x$ on $y$ is given by Key Point 2:

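Key Point 2

Least Squares Regression - x on y

The regression line of $x$ on $y$ is

$$x = m'y + c'$$

where

$$m' = \frac{\dfrac{\sum xy}{n} - \dfrac{\sum x}{n}\,\dfrac{\sum y}{n}}{\dfrac{\sum y^2}{n} - \left(\dfrac{\sum y}{n}\right)^2} \quad \text{and} \quad c' = \bar{x} - m'\bar{y}$$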
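The two Key Points can be compared numerically. The sketch below is illustrative only: the function names and the small data set are hypothetical and do not come from the Workbook. It computes both regression lines and checks that each passes through the mean point of the data.

```python
def mean(values):
    return sum(values) / len(values)


def regression_y_on_x(xs, ys):
    """Return (m, c) for the least squares line y = m*x + c (Key Point 1)."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    s_xy = sum(x * y for x, y in zip(xs, ys)) / n - x_bar * y_bar  # covariance
    s_xx = sum(x * x for x in xs) / n - x_bar ** 2                 # variance of x
    m = s_xy / s_xx
    return m, y_bar - m * x_bar


def regression_x_on_y(xs, ys):
    """Return (m', c') for the least squares line x = m'*y + c' (Key Point 2)."""
    # Swapping the roles of x and y reuses the same formula.
    return regression_y_on_x(ys, xs)


# Hypothetical illustrative data (not taken from the Workbook).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

m, c = regression_y_on_x(xs, ys)
m_dash, c_dash = regression_x_on_y(xs, ys)
x_bar, y_bar = mean(xs), mean(ys)

print(f"y on x: y = {m:.4f} x + {c:.4f}")
print(f"x on y: x = {m_dash:.4f} y + {c_dash:.4f}")
# Both lines should pass through the mean point (x_bar, y_bar).
print(abs(m * x_bar + c - y_bar) < 1e-9, abs(m_dash * y_bar + c_dash - x_bar) < 1e-9)
```

Because these points do not lie exactly on a straight line, the gradient of the second line, viewed in the $x$-$y$ plane, is $1/m'$ rather than $m$, so the two lines differ.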


Example 1

A warehouse manager of a company dealing in large quantities of steel cable needs

to be able to estimate how much cable is left on his partially used drums. A

random sample of twelve partially used drums is taken and each drum is weighed

and the corresponding length of cable measured. The results are given in the table

below:

Weight of drum and cable (x) kg    Measured length of cable (y) m
30                                 70
40                                 90
40                                 100
50                                 120
50                                 130
50                                 150
60                                 160
70                                 190
70                                 200
80                                 200
80                                 220
80                                 230

Find the least squares regression line in the form $y = mx + c$ and use it to predict the lengths of cable left on drums whose weights are:

(i) 35 kg (ii) 85 kg (iii) 100 kg

In the last case state any assumptions which you make in order to find the length of cable left on the drum.

Solution

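Excel calculations give

$$\sum x = 700, \quad \sum x^2 = 44200, \quad \sum y = 1860, \quad \sum xy = 118600$$

so that the formulae

$$m = \frac{\dfrac{\sum xy}{n} - \dfrac{\sum x}{n}\,\dfrac{\sum y}{n}}{\dfrac{\sum x^2}{n} - \left(\dfrac{\sum x}{n}\right)^2} \quad \text{and} \quad c = \bar{y} - m\bar{x}$$

give $m = 3$ and $c = -20$. Our regression line is $y = 3x - 20$.

Hence, the required predicted values are:

$$y_{35} = 3 \times 35 - 20 = 85, \quad y_{85} = 3 \times 85 - 20 = 235, \quad y_{100} = 3 \times 100 - 20 = 280$$

all results being in metres.

To obtain the last result we have assumed that the linearity of the relationship continues beyond the range of values actually taken.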
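The spreadsheet calculation can be reproduced in a few lines of code. The following is a minimal sketch in Python rather than Excel; the function names are hypothetical, and the range check is an addition of this sketch that flags predictions made outside the sampled weights.

```python
# Drum and cable data from Example 1: weight x (kg) and cable length y (m).
weights = [30, 40, 40, 50, 50, 50, 60, 70, 70, 80, 80, 80]
lengths = [70, 90, 100, 120, 130, 150, 160, 190, 200, 200, 220, 230]


def least_squares_from_sums(xs, ys):
    """Return (m, c) for y = m*x + c using the sums in Key Point 1."""
    n = len(xs)
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_x2 = sum(x * x for x in xs)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    m = (sum_xy / n - (sum_x / n) * (sum_y / n)) / (sum_x2 / n - (sum_x / n) ** 2)
    c = sum_y / n - m * sum_x / n
    return m, c


def predict(m, c, x, x_min, x_max):
    """Return the predicted y and whether x lies inside the sampled range."""
    return m * x + c, x_min <= x <= x_max


m, c = least_squares_from_sums(weights, lengths)
print(f"m = {m:.4f}, c = {c:.4f}")
for w in (35, 85, 100):
    length, inside = predict(m, c, w, min(weights), max(weights))
    note = "" if inside else " (extrapolated beyond the sampled weights)"
    print(f"{w} kg -> {length:.1f} m{note}")
```

Note that the heaviest drum sampled weighs 80 kg, so both the 85 kg and the 100 kg predictions rely on the linear relationship continuing beyond the data.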


Task

An article in the Journal of Sound and Vibration 1991 (151) explored a possible relationship between hypertension (defined as blood pressure rise in mm of mercury) and exposure to noise levels (measured in decibels). Some data given is as follows:

Noise Level (x)    Blood pressure rise (y)    Noise Level (x)    Blood pressure rise (y)
60                 1                          85                 5
63                 0                          89                 4
65                 1                          90                 6
70                 2                          90                 8
70                 5                          90                 4
70                 1                          90                 5
80                 4                          94                 7
90                 6                          100                9
80                 2                          100                7
80                 3                          100                6

(a) Draw a scatter diagram of the data.

(b) Comment on whether a linear model is appropriate for the data.

(c) Calculate a line of best fit of $y$ on $x$ for the data given.

(d) Use your regression line to predict the expected rise in blood pressure for an exposure to a noise level of 97 decibels.

Your solution


Answer

(a) Entering the data into Microsoft Excel and plotting gives

[Figure: scatter plot titled "Blood Pressure increase versus recorded sound level". Horizontal axis: Sound Level (dB), 50 to 100. Vertical axis: Blood Pressure rise (mm Mercury), 0 to 9.]

(b) A linear model is appropriate.

(c) Excel calculations give

$$\sum x = 1656, \quad \sum x^2 = 140176, \quad \sum y = 86, \quad \sum xy = 7654$$

so that $m = 0.1743$ and $c = -10.1315$. Our regression line is $y = 0.1743x - 10.1315$.

(d) The predicted value is: $y_{97} = 0.1743 \times 97 - 10.1315 = 6.78$ mm mercury.
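As a cross-check on the Excel figures, the sketch below fits the same data using the centred form of the gradient, $m = \sum (x - \bar{x})(y - \bar{y}) / \sum (x - \bar{x})^2$, which is algebraically equivalent to the formula in Key Point 1. The function name is hypothetical. Because the code carries unrounded values of $m$ and $c$, its prediction at 97 dB can differ from the quoted 6.78 in the last decimal place.

```python
# Noise level x (dB) and blood pressure rise y (mm of mercury) from the Task.
noise = [60, 63, 65, 70, 70, 70, 80, 90, 80, 80,
         85, 89, 90, 90, 90, 90, 94, 100, 100, 100]
rise = [1, 0, 1, 2, 5, 1, 4, 6, 2, 3,
        5, 4, 6, 8, 4, 5, 7, 9, 7, 6]


def fit_line_centred(xs, ys):
    """Least squares (m, c) using deviations from the means."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    m = s_xy / s_xx
    return m, y_bar - m * x_bar


# The raw sums quoted in the Answer, recomputed from the table.
sums = (sum(noise),
        sum(x * x for x in noise),
        sum(rise),
        sum(x * y for x, y in zip(noise, rise)))

m, c = fit_line_centred(noise, rise)
predicted_97 = m * 97 + c

print("sums:", sums)
print(f"m = {m:.4f}, c = {c:.4f}")
print(f"predicted rise at 97 dB: {predicted_97:.3f} mm of mercury")
```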

The method of least squares - a modelling view

We take the dependent variable $Y$ to be a random variable whose value, for a fixed value of $x$, depends on the value of $x$ and a random error component, say $e$, and we write

$$Y = mx + c + e$$

Adopting the notation of conditional probability, we are looking for the expected value of $Y$ for a given value of $x$. The expected value of $Y$ for a given value of $x$ is denoted by

$$E(Y|x) = E(mx + c + e) = E(mx + c) + E(e)$$

The variance of $Y$ for a given value of $x$ is given by the relationship

$$V(Y|x) = V(mx + c + e) = V(mx + c) + V(e), \quad \text{assuming independence.}$$

If $\mu_{Y|x}$ represents the true mean value of $Y$ for a given value of $x$ then

$$\mu_{Y|x} = mx + c, \quad \text{assuming a linear relationship holds,}$$

is a straight line of mean values. If we now assume that the errors $e$ are distributed with mean 0 and variance $\sigma^2$ we may write

$$E(Y|x) = E(mx + c) + E(e) = mx + c \quad \text{since } E(e) = 0.$$


and

$$V(Y|x) = V(mx + c) + V(e) = \sigma^2 \quad \text{since } V(mx + c) = 0.$$

This implies that for each value of $x$, $Y$ is distributed with mean $mx + c$ and variance $\sigma^2$. Hence when the variance is small the observed values of $Y$ will be close to the regression line and when the variance is large, at least some of the observed values of $Y$ may not be close to the line. Note that the assumption that the errors $e$ are distributed with mean 0 may be made without loss of generality. If the errors had any other mean, we could subtract it and then add the mean to the value of $c$. The ideas are illustrated in the following diagram.

[Figure 5: the regression line $\mu_{y|x} = mx + c$ drawn through the distributions of $y$ at $x_1, x_2, x_3, \ldots, x_p$, with the observed value $y_p$ and its error $e_p$ marked at $x = x_p$. Axes: $x$ (horizontal), $y$ (vertical), origin $O$.]

The regression line is shown passing through the means of the distributions for the individual values

of x. Any randomly selected value of y may be represented by the equation

$$y_p = mx_p + c + e_p$$

where $e_p$ is the error of the observed value of $y$, that is, the difference from its expected value, namely

$$E(Y|x_p) = \mu_{y|x_p} = mx_p + c$$

Note that

$$e_p = y_p - mx_p - c$$

so that the sum of the squares of the errors is given by

$$S = \sum e_p^2 = \sum (y_p - mx_p - c)^2$$

and we may minimize the quantity $S$ by using the method of least squares as before. The mathematical details are omitted as before and the equations obtained for $m$ and $c$ are as before, namely

$$m = \frac{\dfrac{\sum xy}{n} - \dfrac{\sum x}{n}\,\dfrac{\sum y}{n}}{\dfrac{\sum x^2}{n} - \left(\dfrac{\sum x}{n}\right)^2} \quad \text{and} \quad c = \bar{y} - m\bar{x}.$$

Note that since the error $e_p$ in the $p$th observation essentially describes the error in the fit of the model to the $p$th observation, the sum of the squares of the errors $\sum e_p^2$ will now be used to allow us to comment on the adequacy of fit of a linear model to a given data set.
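The modelling view can be explored by simulation. The sketch below is an illustration under stated assumptions: the true line $y = 3x - 20$, the normally distributed errors, the sample size, the $x$-range and the seed are all arbitrary choices made here, not values from the Workbook. It generates data from $Y = mx + c + e$, fits a line by least squares, and then repeats the experiment with errors of mean 5 to show that a non-zero error mean is absorbed into the fitted intercept.

```python
import random


def fit_line(xs, ys):
    """Least squares (m, c) for y = m*x + c."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum(x * y for x, y in zip(xs, ys)) / n - x_bar * y_bar
    s_xx = sum(x * x for x in xs) / n - x_bar ** 2
    m = s_xy / s_xx
    return m, y_bar - m * x_bar


def simulate(m_true, c_true, error_mean, sigma, n, seed):
    """Draw n points from Y = m*x + c + e and return the fitted (m, c)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    xs = [rng.uniform(30.0, 80.0) for _ in range(n)]
    ys = [m_true * x + c_true + rng.gauss(error_mean, sigma) for x in xs]
    return fit_line(xs, ys)


# Errors with mean 0: the fitted line should be close to y = 3x - 20.
m0, c0 = simulate(3.0, -20.0, 0.0, 10.0, 20000, seed=1)
# Errors with mean 5: the gradient is unchanged, the intercept shifts by about 5.
m5, c5 = simulate(3.0, -20.0, 5.0, 10.0, 20000, seed=1)

print(f"error mean 0: m = {m0:.3f}, c = {c0:.3f}")
print(f"error mean 5: m = {m5:.3f}, c = {c5:.3f}")
```

Using the same seed for both runs means the only difference between them is the error mean, which makes the shift in the intercept easy to see.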


Adequacy of fit

We now know that the variance $V(Y|x) = \sigma^2$ is the key to describing the adequacy of fit of our simple linear model. In general, the smaller the variance, the better the fit, although you should note that it is wise to distinguish between poor fit and a large error variance. Poor fit may suggest, for example, that the relationship is not in fact linear and that a fundamental assumption made has been violated. A large value of $\sigma^2$ does not necessarily mean that a linear model is a poor fit.

It can be shown that the sum of the squares of the errors, say $SS_E$, can be used to give an unbiased estimator $\hat{\sigma}^2$ of $\sigma^2$ via the formula

$$\hat{\sigma}^2 = \frac{SS_E}{n - p}$$

where $p$ is the number of parameters estimated in the regression equation. In the case of simple linear regression $p = 2$, since we estimate just the coefficient $m$ of $x$ and the intercept $c$, and the estimator becomes:

$$\hat{\sigma}^2 = \frac{SS_E}{n - 2}$$
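As a small worked illustration of the estimator, the sketch below applies it to the drum and cable data of Example 1 with the fitted line $y = 3x - 20$. The function names are hypothetical.

```python
# Drum and cable data from Example 1 and its fitted line y = 3x - 20.
weights = [30, 40, 40, 50, 50, 50, 60, 70, 70, 80, 80, 80]
lengths = [70, 90, 100, 120, 130, 150, 160, 190, 200, 200, 220, 230]
M, C = 3, -20


def sum_squared_errors(xs, ys, m, c):
    """SS_E: sum of squared vertical distances from the line y = m*x + c."""
    return sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))


def error_variance_estimate(ss_e, n, p=2):
    """Unbiased estimate of sigma^2, with p parameters estimated."""
    return ss_e / (n - p)


ss_e = sum_squared_errors(weights, lengths, M, C)
sigma2_hat = error_variance_estimate(ss_e, len(weights))
print(ss_e, sigma2_hat)  # prints: 1200 120.0
```

The square root of this estimate, about 11 m, gives a rough idea of the typical scatter of measured cable lengths about the fitted line.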

The quantity $SS_E$ is usually used explicitly in formulae whose purpose is to determine the adequacy of a linear model to explain the variability found in data. Two ways in which the adequacy of a regression model may be judged are given by the so-called Coefficient of Determination and the Adjusted Coefficient of Determination.

The coefficient of determination

Denoted by $R^2$, the Coefficient of Determination is defined by the formula

$$R^2 = 1 - \frac{SS_E}{SS_T}$$

where $SS_E$ is the sum of the squares of the errors and $SS_T$ is the total sum of squares given by $\sum (y_i - \bar{y})^2 = \sum y_i^2 - n\bar{y}^2$. The value of $R^2$ is sometimes described as representing the amount of variability explained or accounted for by a regression model. For example, if after a particular calculation it was found that $R^2 = 0.884$, we could say that the model accounts for about 88% of the variability found in the data. However, deductions made on the basis of the value of $R^2$ should be treated cautiously; the reasons for this are embedded in the following properties of the statistic. It can be shown that:

(a) $0 \le R^2 \le 1$

(b) a large value of $R^2$ does not necessarily imply that a model is a good fit;

(c) adding a regressor variable (simple regression becomes multiple regression) never decreases the value of $R^2$ and usually increases it. This is one reason why a large value of $R^2$ does not necessarily imply a good model;

(d) models giving large values of $R^2$ can be poor predictors of new values if the fitted model does not apply at the appropriate $x$-value.

Finally, it is worth noting that to check the fit of a linear model properly, one should look at plots of residual values. In some cases, tests of goodness-of-fit are available although this topic is not covered in this Workbook.


The adjusted coefficient of determination

Denoted (often) by $R^2_{adj}$, the Adjusted Coefficient of Determination is defined as

$$R^2_{adj} = 1 - \frac{SS_E/(n - p)}{SS_T/(n - 1)}$$

where $p$ is the number of parameters in the regression equation. For the simple linear model, $p = 2$ since we have two unknown parameters in the regression equation, the intercept $c$ and the coefficient $m$ of $x$. It can be shown that:

(a) $R^2_{adj}$ is a better indicator of the adequacy of predictive power than $R^2$ since it takes into account the number of regressor variables used in the model;

(b) $R^2_{adj}$ does not necessarily increase when a new regressor variable is added.

Both coefficients claim to measure the adequacy of the predictive power of a regression model and their values indicate the proportion of variability explained by the model. For example a value of

$$R^2 \text{ or } R^2_{adj} = 0.9751$$

may be interpreted as indicating that a model explains 97.51% of the variability it describes. The drum and cable example considered previously gives the results outlined below, with

$$R^2 = 0.962 \quad \text{and} \quad R^2_{adj} = 0.958$$

In general, $R^2_{adj}$ is (perhaps) more useful than $R^2$ for comparing alternative models. In the context of a simple linear model, $R^2$ is easier to interpret. In the drum and cable example we would claim that the linear model explains some 96.2% of the variation it describes.

Drum & Cable (x)   x^2     Cable Length (y)   y^2      xy       Predicted Values   Error Squares
30                 900     70                 4900     2100     70                 0.00
40                 1600    90                 8100     3600     100                100.00
40                 1600    100                10000    4000     100                0.00
50                 2500    120                14400    6000     130                100.00
50                 2500    130                16900    6500     130                0.00
50                 2500    150                22500    7500     130                400.00
60                 3600    160                25600    9600     160                0.00
70                 4900    190                36100    13300    190                0.00
70                 4900    200                40000    14000    190                100.00
80                 6400    200                40000    16000    220                400.00
80                 6400    220                48400    17600    220                0.00
80                 6400    230                52900    18400    220                100.00

Sum of x = 700, Sum of x^2 = 44200, Sum of y = 1860, Sum of y^2 = 319800, Sum of xy = 118600, SSE = 1200.00

m = 3, c = -20, SST = 31500, R^2 = 0.962, R^2 adj = 0.958
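The figures in the table can be checked with a short script. This is a sketch in Python rather than a spreadsheet, and the function name is hypothetical; it takes the fitted line $y = 3x - 20$ as given and computes $SS_E$, $SS_T$, $R^2$ and $R^2_{adj}$ from the definitions above.

```python
# Drum and cable data from Example 1 and its fitted line y = 3x - 20.
weights = [30, 40, 40, 50, 50, 50, 60, 70, 70, 80, 80, 80]
lengths = [70, 90, 100, 120, 130, 150, 160, 190, 200, 200, 220, 230]
M, C = 3, -20


def goodness_of_fit(xs, ys, m, c, p=2):
    """Return (SS_E, SS_T, R^2, adjusted R^2) for the line y = m*x + c."""
    n = len(ys)
    y_bar = sum(ys) / n
    ss_e = sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))
    ss_t = sum((y - y_bar) ** 2 for y in ys)
    r2 = 1 - ss_e / ss_t
    r2_adj = 1 - (ss_e / (n - p)) / (ss_t / (n - 1))
    return ss_e, ss_t, r2, r2_adj


ss_e, ss_t, r2, r2_adj = goodness_of_fit(weights, lengths, M, C)

# SS_T can also be obtained from the column totals: sum(y^2) - n * y_bar^2.
n = len(lengths)
ss_t_from_totals = sum(y * y for y in lengths) - n * (sum(lengths) / n) ** 2

print(f"SSE = {ss_e}, SST = {ss_t}, SST from totals = {ss_t_from_totals}")
print(f"R^2 = {r2:.3f}, adjusted R^2 = {r2_adj:.3f}")
```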


Task

Use the drum and cable data given in Example 1 and set up a spreadsheet to verify the values of the Coefficient of Determination and the Adjusted Coefficient of Determination calculated in the table above.

Your solution

Answer

As per the table above, giving $R^2 = 0.962$ and $R^2_{adj} = 0.958$.

Significance testing for regression

Note that the results in this Section apply to the simple linear model only. Some additions are necessary before the results can be generalized.

The discussions so far pre-suppose that a linear model adequately describes the relationship between the variables. We can use a significance test involving the $F$-distribution to decide whether or not $y$ is linearly dependent on $x$. We set up the following hypotheses:

$$H_0: m = 0 \quad \text{and} \quad H_1: m \neq 0$$

Key Point 3

Significance Test for Regression

The test statistic is

$$F_{test} = \frac{SS_R}{SS_E/(n - 2)}$$

where $SS_R = SS_T - SS_E$ and rejection at the 5% level of significance occurs if

$$F_{test} > F_{0.05,1,n-2}$$

Note that we have one degree of freedom in the numerator since we are testing only one parameter ($m$) and that $n$ denotes the number of pairs of $(x, y)$ values. A set of tables giving the 5% values of the $F$-distribution is given at the end of this Workbook (Table 1).


Example 2

Test to determine whether a simple linear model is appropriate for the data previously given in the drum and cable example above.

Solution

We know that

$$SS_T = SS_R + SS_E$$

where $SS_T = \sum y^2 - \dfrac{\left(\sum y\right)^2}{n}$ is the total sum of squares (of $y$) so that (from the spreadsheet above) we have:

$$SS_R = 31500 - 1200 = 30300$$

Hence

$$F_{test} = \frac{SS_R}{SS_E/(n - 2)} = \frac{30300}{1200/(12 - 2)} = 252.5$$

From Table 1, the critical value is $F_{0.05,1,10} = 4.96$.

Hence, since $F_{test} > F_{0.05,1,10}$, we reject the null hypothesis and conclude that $m \neq 0$.
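The test statistic is simple to compute once $SS_T$ and $SS_E$ are known. In the sketch below the function name is hypothetical, and the 5% critical value for 1 and 10 degrees of freedom is entered by hand as approximately 4.96 from standard $F$ tables; that constant is an assumption of this sketch and is not computed by the code.

```python
# Sums of squares for the drum and cable data (from the table in this Section).
SS_T = 31500.0
SS_E = 1200.0
N = 12

# Assumed 5% critical value of F with (1, N - 2) = (1, 10) degrees of freedom,
# read from standard tables rather than computed here.
F_CRITICAL_5_PERCENT = 4.96


def f_statistic(ss_t, ss_e, n):
    """F = SS_R / (SS_E / (n - 2)) for simple linear regression."""
    ss_r = ss_t - ss_e  # regression sum of squares
    return ss_r / (ss_e / (n - 2))


f_test = f_statistic(SS_T, SS_E, N)
reject_h0 = f_test > F_CRITICAL_5_PERCENT

print(f"F_test = {f_test:.1f}")
print("reject H0 (m = 0) at the 5% level:", reject_h0)
```

The statistic is far above the critical value, so the conclusion that the gradient is non-zero is not sensitive to the exact table entry used.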

Regression curves

This Section should be regarded as introductory only. The reason for including non-linear regression is to demonstrate how the method of least squares can be extended to deal with cases where the relationship between variables is, for example, quadratic or exponential.

A regression curve is defined to be the curve passing through the expected value of $Y$ for a set of given values of $x$. The idea is illustrated by the following diagram.

[Figure 6: regression curve of $y$ on $x$, drawn through the distributions $f(y)$ of $y$ for given $x$ at $x_1, x_2, x_3, \ldots, x_n$.]


We will look at the quadratic and exponential cases in a little detail.

The quadratic case

We are looking for a functional relation of the form

$$y = a + bx + cx^2$$

and so, using the method of least squares, we require the values of $a$, $b$ and $c$ which minimize the expression

$$f(a, b, c) = \sum_{r=1}^{n} (y_r - a - bx_r - cx_r^2)^2$$

Note here that the regression described by the form

$$y = a + bx + cx^2$$

is actually a linear regression since the expression is linear in $a$, $b$ and $c$.

Omitting the subscripts and using partial differentiation gives

$$\frac{\partial f}{\partial a} = -2\sum (y - a - bx - cx^2)$$

$$\frac{\partial f}{\partial b} = -2\sum x(y - a - bx - cx^2)$$

$$\frac{\partial f}{\partial c} = -2\sum x^2(y - a - bx - cx^2)$$

At a minimum we require

$$\frac{\partial f}{\partial a} = \frac{\partial f}{\partial b} = \frac{\partial f}{\partial c} = 0$$

which results in the three linear equations

$$\sum y - na - b\sum x - c\sum x^2 = 0$$

$$\sum xy - a\sum x - b\sum x^2 - c\sum x^3 = 0$$

$$\sum x^2y - a\sum x^2 - b\sum x^3 - c\sum x^4 = 0$$

which can be solved to give the values of $a$, $b$ and $c$.
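The three linear equations can be assembled and solved directly. The sketch below is one possible way to do this, using Gaussian elimination with partial pivoting written out by hand so that no external library is needed; the function names and the test data (generated exactly from $y = 1 + 2x + 3x^2$) are hypothetical choices for illustration.

```python
def solve_linear_system(matrix, rhs):
    """Solve a small square system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    # Work on an augmented copy so the inputs are left unchanged.
    aug = [[float(v) for v in row] + [float(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("the equations are singular or nearly so")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution.
    solution = [0.0] * n
    for row in range(n - 1, -1, -1):
        known = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - known) / aug[row][row]
    return solution


def fit_quadratic(xs, ys):
    """Return (a, b, c) minimising sum((y - a - b*x - c*x^2)^2)."""
    n = len(xs)
    sx = sum(xs)
    sx2 = sum(x ** 2 for x in xs)
    sx3 = sum(x ** 3 for x in xs)
    sx4 = sum(x ** 4 for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sx2y = sum(x * x * y for x, y in zip(xs, ys))
    # The three equations above, rearranged as (matrix) * (a, b, c) = (rhs).
    matrix = [[n, sx, sx2],
              [sx, sx2, sx3],
              [sx2, sx3, sx4]]
    rhs = [sy, sxy, sx2y]
    return tuple(solve_linear_system(matrix, rhs))


# Hypothetical data lying exactly on y = 1 + 2x + 3x^2.
xs = [0, 1, 2, 3, 4]
ys = [1 + 2 * x + 3 * x * x for x in xs]
a, b, c = fit_quadratic(xs, ys)
print(f"a = {a:.4f}, b = {b:.4f}, c = {c:.4f}")
```

For widely spread $x$-values the sums of high powers can make these equations poorly conditioned, so in practice a library routine for least squares may be preferable to this hand-written solver.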

The exponential case

We use the same technique to look for a functional relation of the form

$$y = ae^{bx}$$

As before, using the method of least squares, we require the values of $a$ and $b$ which minimize the expression

$$f(a, b) = \sum_{r=1}^{n} (y_r - ae^{bx_r})^2$$

Again omitting the subscripts and using partial differentiation gives


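$$\frac{\partial f}{\partial a} = -2\sum e^{bx}\left(y - ae^{bx}\right)$$

$$\frac{\partial f}{\partial b} = -2\sum axe^{bx}\left(y - ae^{bx}\right)$$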

At a minimum we require

$$\frac{\partial f}{\partial a} = \frac{\partial f}{\partial b} = 0$$

which results in the two non-linear equations

$$\sum ye^{bx} - a\sum e^{2bx} = 0$$

$$\sum xye^{bx} - a\sum xe^{2bx} = 0$$

which can be solved by iterative methods to give the values of a and b.
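The text does not say which iterative method to use. One possible approach, sketched below as an assumption rather than as the workbook's own method, is to use the first equation to write a in terms of b, substitute into the second equation, and solve the resulting single equation in b by bisection. The function name `fit_exponential`, the bracketing interval and the sample data are hypothetical.

```python
import math


def fit_exponential(xs, ys, b_lo, b_hi, tol=1e-12, max_iter=200):
    """Return (a, b) for the least squares fit y = a*exp(b*x).

    b_lo and b_hi must bracket the solution: the function g below
    must take opposite signs at the two ends of the interval.
    """

    def a_given_b(b):
        # First equation: sum(y e^{bx}) - a sum(e^{2bx}) = 0.
        num = sum(y * math.exp(b * x) for x, y in zip(xs, ys))
        den = sum(math.exp(2 * b * x) for x in xs)
        return num / den

    def g(b):
        # Second equation with a eliminated:
        # sum(x y e^{bx}) - a(b) sum(x e^{2bx}).
        a = a_given_b(b)
        lhs = sum(x * y * math.exp(b * x) for x, y in zip(xs, ys))
        return lhs - a * sum(x * math.exp(2 * b * x) for x in xs)

    g_lo = g(b_lo)
    if g_lo * g(b_hi) > 0:
        raise ValueError("interval does not bracket a solution")

    # Bisection: halve the interval, keeping the half with a sign change.
    for _ in range(max_iter):
        b_mid = 0.5 * (b_lo + b_hi)
        g_mid = g(b_mid)
        if g_mid == 0 or (b_hi - b_lo) < tol:
            break
        if g_lo * g_mid < 0:
            b_hi = b_mid
        else:
            b_lo, g_lo = b_mid, g_mid

    b = 0.5 * (b_lo + b_hi)
    return a_given_b(b), b


# Made-up data lying exactly on y = 2 e^{0.5 x}.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a, b = fit_exponential(xs, ys, b_lo=0.0, b_hi=1.0)
print(a, b)
```

Bisection is slow but dependable once a bracketing interval is known; a faster scheme such as Newton's method could be substituted if a good starting value for b is available.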

Note that it is possible to combine (for example) linear and exponential regression to obtain a regression equation of the form

$$y = (a + bx)e^{x}$$

The method of least squares may then be used to find the values of a and b.
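Taking the combined form exactly as printed above, with exponent x, the expression is linear in a and b, so minimizing the sum of squares of y - (a + bx)e^x leads to two linear equations. The sketch below is an illustration under that assumption and is not taken from the workbook; the name `fit_linear_times_exp` and the sample data are hypothetical.

```python
import math


def fit_linear_times_exp(xs, ys):
    """Return (a, b) for the least squares fit y = (a + b*x)*exp(x)."""
    # Sums appearing in the two linear equations
    #   a*s0 + b*s1 = t0
    #   a*s1 + b*s2 = t1
    s0 = sum(math.exp(2 * x) for x in xs)
    s1 = sum(x * math.exp(2 * x) for x in xs)
    s2 = sum(x * x * math.exp(2 * x) for x in xs)
    t0 = sum(y * math.exp(x) for x, y in zip(xs, ys))
    t1 = sum(x * y * math.exp(x) for x, y in zip(xs, ys))

    det = s0 * s2 - s1 * s1
    if det == 0:
        raise ValueError("the equations are singular")
    # Solve the 2 x 2 system by Cramer's rule.
    a = (t0 * s2 - t1 * s1) / det
    b = (s0 * t1 - s1 * t0) / det
    return a, b


# Made-up data lying exactly on y = (1 + 2x) e^x.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [(1.0 + 2.0 * x) * math.exp(x) for x in xs]
a, b = fit_linear_times_exp(xs, ys)
print(a, b)
```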

16 HELM (2005):

Workbook 43: Regression and Correlation

www.ebookcraze.blogspot.com

S-ar putea să vă placă și

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Students GuideDocument64 paginiStudents GuideEbookcrazeÎncă nu există evaluări

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (587)

- Helm Wb40 IndexDocument1 paginăHelm Wb40 IndexEbookcrazeÎncă nu există evaluări

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (894)

- Basic Concepts of FunctionsDocument10 paginiBasic Concepts of FunctionsEbookcrazeÎncă nu există evaluări

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Tutors GuideDocument161 paginiTutors GuideEbookcrazeÎncă nu există evaluări

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (399)

- Helm Wb39 IndexDocument1 paginăHelm Wb39 IndexEbookcrazeÎncă nu există evaluări

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- Helm Wb45 IndexDocument1 paginăHelm Wb45 IndexEbookcrazeÎncă nu există evaluări

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- Helm Wb47 3 IndexDocument1 paginăHelm Wb47 3 IndexEbookcrazeÎncă nu există evaluări

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Helmet Symposium ReportDocument62 paginiHelmet Symposium ReportEbookcrazeÎncă nu există evaluări

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Helm Wb48 IndexDocument1 paginăHelm Wb48 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb44 IndexDocument1 paginăHelm Wb44 IndexEbookcrazeÎncă nu există evaluări

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Helm Wb47 1 IndexDocument1 paginăHelm Wb47 1 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb38 IndexDocument1 paginăHelm Wb38 IndexEbookcrazeÎncă nu există evaluări

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- Helm Wb47 2 IndexDocument1 paginăHelm Wb47 2 IndexEbookcrazeÎncă nu există evaluări

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- Helm Wb46 IndexDocument1 paginăHelm Wb46 IndexEbookcrazeÎncă nu există evaluări

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2219)

- Helm Wb43 IndexDocument1 paginăHelm Wb43 IndexEbookcrazeÎncă nu există evaluări

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- Helm Wb42 IndexDocument1 paginăHelm Wb42 IndexEbookcrazeÎncă nu există evaluări

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Helm Wb34 IndexDocument1 paginăHelm Wb34 IndexEbookcrazeÎncă nu există evaluări

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (265)

- Helm Wb41 IndexDocument1 paginăHelm Wb41 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb32 IndexDocument1 paginăHelm Wb32 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb33 IndexDocument1 paginăHelm Wb33 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb36 IndexDocument1 paginăHelm Wb36 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb37 IndexDocument1 paginăHelm Wb37 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb35 IndexDocument1 paginăHelm Wb35 IndexEbookcrazeÎncă nu există evaluări

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- Helm Wb31 IndexDocument1 paginăHelm Wb31 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb29 IndexDocument1 paginăHelm Wb29 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb30 IndexDocument1 paginăHelm Wb30 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb27 IndexDocument1 paginăHelm Wb27 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb25 IndexDocument1 paginăHelm Wb25 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb26 IndexDocument1 paginăHelm Wb26 IndexEbookcrazeÎncă nu există evaluări

- Helm Wb24 IndexDocument1 paginăHelm Wb24 IndexEbookcrazeÎncă nu există evaluări

- Quezon City Department of The Building OfficialDocument2 paginiQuezon City Department of The Building OfficialBrightNotes86% (7)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (119)

- Dinsmore - Gantt ChartDocument1 paginăDinsmore - Gantt Chartapi-592162739Încă nu există evaluări

- SDNY - Girl Scouts V Boy Scouts ComplaintDocument50 paginiSDNY - Girl Scouts V Boy Scouts Complaintjan.wolfe5356Încă nu există evaluări

- C 7000Document109 paginiC 7000Alex Argel Roqueme75% (4)

- Fundamentals of Marketing NotebookDocument24 paginiFundamentals of Marketing NotebookMorrisa AlexanderÎncă nu există evaluări

- 3.4 Spending, Saving and Borrowing: Igcse /O Level EconomicsDocument9 pagini3.4 Spending, Saving and Borrowing: Igcse /O Level EconomicsRingle JobÎncă nu există evaluări

- Deed of Sale - Motor VehicleDocument4 paginiDeed of Sale - Motor Vehiclekyle domingoÎncă nu există evaluări

- The Human Resource Department of GIK InstituteDocument1 paginăThe Human Resource Department of GIK InstitutexandercageÎncă nu există evaluări

- PTAS-11 Stump - All About Learning CurvesDocument43 paginiPTAS-11 Stump - All About Learning CurvesinSowaeÎncă nu există evaluări

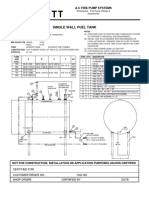

- Single Wall Fuel Tank: FP 2.7 A-C Fire Pump SystemsDocument1 paginăSingle Wall Fuel Tank: FP 2.7 A-C Fire Pump Systemsricardo cardosoÎncă nu există evaluări

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- International Convention Center, BanesworDocument18 paginiInternational Convention Center, BanesworSreeniketh ChikuÎncă nu există evaluări

- Aptio ™ Text Setup Environment (TSE) User ManualDocument42 paginiAptio ™ Text Setup Environment (TSE) User Manualdhirender karkiÎncă nu există evaluări

- Cars Should Be BannedDocument3 paginiCars Should Be BannedIrwanÎncă nu există evaluări

- An Overview of National Ai Strategies and Policies © Oecd 2021Document26 paginiAn Overview of National Ai Strategies and Policies © Oecd 2021wanyama DenisÎncă nu există evaluări

- Overhead Door Closers and Hardware GuideDocument2 paginiOverhead Door Closers and Hardware GuideAndrea Joyce AngelesÎncă nu există evaluări

- LPM 52 Compar Ref GuideDocument54 paginiLPM 52 Compar Ref GuideJimmy GilcesÎncă nu există evaluări

- C.C++ - Assignment - Problem ListDocument7 paginiC.C++ - Assignment - Problem ListKaushik ChauhanÎncă nu există evaluări

- Mayor Byron Brown's 2019 State of The City SpeechDocument19 paginiMayor Byron Brown's 2019 State of The City SpeechMichael McAndrewÎncă nu există evaluări

- Bob Wright's Declaration of BeingDocument1 paginăBob Wright's Declaration of BeingBZ Riger100% (2)

- Multiple Choice Question (MCQ) of Alternator and Synchronous Motors PageDocument29 paginiMultiple Choice Question (MCQ) of Alternator and Synchronous Motors Pagekibrom atsbha0% (1)

- Group 4 HR201 Last Case StudyDocument3 paginiGroup 4 HR201 Last Case StudyMatt Tejada100% (2)

- BlueDocument18 paginiBluekarishma nairÎncă nu există evaluări

- CAP Regulation 20-1 - 05/29/2000Document47 paginiCAP Regulation 20-1 - 05/29/2000CAP History LibraryÎncă nu există evaluări

- 7th Kannada Science 01Document160 pagini7th Kannada Science 01Edit O Pics StatusÎncă nu există evaluări

- Sample Property Management AgreementDocument13 paginiSample Property Management AgreementSarah TÎncă nu există evaluări

- Defect Prevention On SRS Through ChecklistDocument2 paginiDefect Prevention On SRS Through Checklistnew account new accountÎncă nu există evaluări

- PRE EmtionDocument10 paginiPRE EmtionYahya JanÎncă nu există evaluări

- Social EnterpriseDocument9 paginiSocial EnterpriseCarloÎncă nu există evaluări

- Fabric Bursting StrengthDocument14 paginiFabric Bursting StrengthQaiseriqball100% (5)

- Customer Satisfaction and Brand Loyalty in Big BasketDocument73 paginiCustomer Satisfaction and Brand Loyalty in Big BasketUpadhayayAnkurÎncă nu există evaluări

- The Smartest Book in the World: A Lexicon of Literacy, A Rancorous Reportage, A Concise Curriculum of CoolDe la EverandThe Smartest Book in the World: A Lexicon of Literacy, A Rancorous Reportage, A Concise Curriculum of CoolEvaluare: 4 din 5 stele4/5 (14)

- You Can't Joke About That: Why Everything Is Funny, Nothing Is Sacred, and We're All in This TogetherDe la EverandYou Can't Joke About That: Why Everything Is Funny, Nothing Is Sacred, and We're All in This TogetherÎncă nu există evaluări

- A Mathematician's Lament: How School Cheats Us Out of Our Most Fascinating and Imaginative Art FormDe la EverandA Mathematician's Lament: How School Cheats Us Out of Our Most Fascinating and Imaginative Art FormEvaluare: 5 din 5 stele5/5 (5)

- The Importance of Being Earnest: Classic Tales EditionDe la EverandThe Importance of Being Earnest: Classic Tales EditionEvaluare: 4.5 din 5 stele4.5/5 (42)

- Welcome to the United States of Anxiety: Observations from a Reforming NeuroticDe la EverandWelcome to the United States of Anxiety: Observations from a Reforming NeuroticEvaluare: 3.5 din 5 stele3.5/5 (10)