Radial Basis Function Networks: Applications

Introduction to Neural Networks : Lecture 14

John A. Bullinaria, 2004

1. Supervised RBF Network Training

2. Regularization Theory for RBF Networks

3. RBF Networks for Classification

4. The XOR Problem in RBF Form

5. Comparison of RBF Networks with MLPs

6. Real World Application: EEG Analysis


Supervised RBF Network Training

Supervised training of the basis function parameters will generally give better results

than unsupervised procedures, but the computational costs are usually enormous.

The obvious approach is to perform gradient descent on a sum squared output error

function as we did for our MLPs. The error function would be

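$$E = \sum_p \sum_k \left( y_k(\mathbf{x}^p) - t_k^p \right)^2 = \sum_p \sum_k \left( \sum_{j=0}^{M} w_{kj}\, \phi_j(\mathbf{x}^p, \boldsymbol{\mu}_j, \sigma_j) - t_k^p \right)^2$$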

and we would iteratively update the weights/basis function parameters using

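$$\Delta w_{kj} = -\eta_w \frac{\partial E}{\partial w_{kj}}, \qquad \Delta \mu_{ij} = -\eta_\mu \frac{\partial E}{\partial \mu_{ij}}, \qquad \Delta \sigma_j = -\eta_\sigma \frac{\partial E}{\partial \sigma_j}$$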

We have all the problems of choosing the learning rates $\eta$, avoiding local minima and

so on, that we had for training MLPs by gradient descent. Also, there is a tendency for

the basis function widths to grow large leaving non-localised basis functions.
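
The gradient descent procedure described above can be sketched in code. This is a minimal NumPy sketch rather than the lecture's own implementation: it assumes Gaussian basis functions of the form exp(-||x - mu_j||^2 / (2 sigma_j^2)), a bias unit phi_0 = 1, and a toy one-dimensional sine data set. The function names, learning rates and number of steps are illustrative assumptions.

```python
import numpy as np

def forward(X, mu, sigma, W):
    """Gaussian RBF network (assumed form): returns outputs and intermediates."""
    diff = X[:, None, :] - mu[None, :, :]            # (P, M, D): x^p - mu_j
    sq = np.sum(diff ** 2, axis=2)                   # (P, M): squared distances
    G = np.exp(-sq / (2.0 * sigma ** 2))             # (P, M): basis activations
    Phi = np.hstack([np.ones((len(X), 1)), G])       # phi_0 = 1 carries the bias
    return Phi @ W.T, Phi, diff, sq

def gradients(X, T, mu, sigma, W):
    """Analytic gradients of E = sum_p sum_k (y_k(x^p) - t_k^p)^2."""
    Y, Phi, diff, sq = forward(X, mu, sigma, W)
    delta = 2.0 * (Y - T)                            # (P, K): dE/dy
    g_W = delta.T @ Phi                              # (K, M+1)
    back = (delta @ W[:, 1:]) * Phi[:, 1:]           # (P, M): dE/dphi_j times phi_j
    g_mu = np.einsum("pj,pjd->jd", back, diff) / sigma[:, None] ** 2
    g_sigma = np.sum(back * sq, axis=0) / sigma ** 3
    return g_W, g_mu, g_sigma

def train(X, T, mu, sigma, W, eta_w, eta_mu, eta_sigma, steps):
    """Plain batch gradient descent on weights, centres and widths."""
    errors = []
    for _ in range(steps):
        errors.append(float(np.sum((forward(X, mu, sigma, W)[0] - T) ** 2)))
        g_W, g_mu, g_sigma = gradients(X, T, mu, sigma, W)
        W = W - eta_w * g_W
        mu = mu - eta_mu * g_mu
        sigma = sigma - eta_sigma * g_sigma
    errors.append(float(np.sum((forward(X, mu, sigma, W)[0] - T) ** 2)))
    return mu, sigma, W, errors

# Toy problem (an assumption for illustration): fit one period of a sine wave.
X = np.linspace(0.0, 1.0, 20)[:, None]
T = np.sin(2.0 * np.pi * X)
mu0 = np.linspace(0.0, 1.0, 4)[:, None]              # M = 4 centres
sigma0 = np.full(4, 0.2)
W0 = np.zeros((1, 5))                                # one output, bias + 4 weights

mu, sigma, W, errors = train(X, T, mu0, sigma0, W0, 1e-3, 1e-4, 1e-4, 500)
print("initial error:", errors[0], "final error:", errors[-1])
```

The three separate learning rates mirror the three update rules. Monitoring `sigma` during training is one way to watch for the widths growing large, as mentioned above.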


Regularization Theory for RBF Networks

Instead of restricting the number of hidden units, an alternative approach for preventing

overfitting in RBF networks comes from the theory of regularization, which we saw

previously was a method of controlling the smoothness of mapping functions.

We can have one basis function centred on each training data point as in the case of

exact interpolation, but add an extra term to the error measure which penalizes

mappings which are not smooth. If we have network outputs $y_k(\mathbf{x}^p)$ and a sum squared

error measure, we can introduce some appropriate differential operator $P$ and write

$$E = \frac{1}{2} \sum_p \sum_k \left( y_k(\mathbf{x}^p) - t_k^p \right)^2 + \lambda \sum_k \int \left| P y_k(\mathbf{x}) \right|^2 d\mathbf{x}$$

where $\lambda$ is the regularization parameter which determines the relative importance of

smoothness compared with error. There are many possible forms for $P$, but the general

idea is that mapping functions $y_k(\mathbf{x})$ which have large curvature should have large values

of $|P y_k(\mathbf{x})|^2$ and hence contribute a large penalty in the total error function.


Computing the Regularized Weights

Provided the regularization term is quadratic in $y_k(\mathbf{x})$, the second layer weights can still

be found by solving a set of linear equations. For example, the regularizer

$$\frac{1}{2} \sum_{k,p,i} \left( \frac{\partial^2 y_k(\mathbf{x}^p)}{\partial x_i^2} \right)^2$$

certainly penalizes large output curvature, and minimizing the error function E now leads to

linear equations for the output weights that are no harder to solve than we had before

$$\mathbf{M} \mathbf{W}^{\mathsf{T}} - \mathbf{\Phi}^{\mathsf{T}} \mathbf{T} = 0$$

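In this we have defined the same matrices with components $(\mathbf{W})_{kj} = w_{kj}$, $(\mathbf{\Phi})_{pj} = \phi_j(\mathbf{x}^p)$,

and $(\mathbf{T})_{pk} = t_k^p$ as before, and also the regularized version of $\mathbf{\Phi}^{\mathsf{T}} \mathbf{\Phi}$

$$\mathbf{M} = \mathbf{\Phi}^{\mathsf{T}} \mathbf{\Phi} + \lambda \sum_i \left( \frac{\partial^2 \mathbf{\Phi}}{\partial x_i^2} \right)^{\mathsf{T}} \left( \frac{\partial^2 \mathbf{\Phi}}{\partial x_i^2} \right)$$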

Clearly for $\lambda = 0$ this reduces to the un-regularized result we derived in the last lecture.
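
As an illustration of the linear solve described on this slide, the following NumPy sketch builds the regularized matrix and solves for the output weights. It is a sketch under stated assumptions, not the lecture's code: the basis functions are taken to be Gaussians exp(-||x - mu_j||^2 / (2 sigma^2)) with one common width, there is no bias unit, one centre sits on each training point, and the data, the width and the value of the regularization parameter are made up for the example.

```python
import numpy as np

def gaussian_design(X, mu, sigma):
    """Return Phi (P, M) and its second derivatives d2Phi (P, M, D) w.r.t. each x_i."""
    diff = X[:, None, :] - mu[None, :, :]                        # (P, M, D)
    Phi = np.exp(-np.sum(diff ** 2, axis=2) / (2.0 * sigma ** 2))
    # For a Gaussian: d^2 phi / dx_i^2 = phi * ((x_i - mu_i)^2 / sigma^4 - 1 / sigma^2)
    d2Phi = Phi[:, :, None] * (diff ** 2 / sigma ** 4 - 1.0 / sigma ** 2)
    return Phi, d2Phi

def regularized_weights(X, T, mu, sigma, lam):
    """Solve M W^T = Phi^T T with M = Phi^T Phi + lam * sum_i (d2Phi_i)^T (d2Phi_i)."""
    Phi, d2Phi = gaussian_design(X, mu, sigma)
    M = Phi.T @ Phi + lam * np.einsum("pji,pki->jk", d2Phi, d2Phi)
    return np.linalg.solve(M, Phi.T @ T).T                       # W, shape (K, M)

def curvature_penalty(X, mu, sigma, W):
    """0.5 * sum over k, p, i of (d^2 y_k(x^p) / dx_i^2)^2."""
    _, d2Phi = gaussian_design(X, mu, sigma)
    d2Y = np.einsum("pji,kj->pki", d2Phi, W)
    return 0.5 * np.sum(d2Y ** 2)

# Toy data (assumed): a noisy sine, one basis function centred on each data point.
rng = np.random.default_rng(0)
X = np.linspace(0.0, 1.0, 8)[:, None]
T = np.sin(2.0 * np.pi * X) + 0.1 * rng.standard_normal(X.shape)
sigma = 0.1

W_plain = regularized_weights(X, T, X, sigma, lam=0.0)     # exact interpolation
W_smooth = regularized_weights(X, T, X, sigma, lam=1e-4)   # smoothed mapping
print(curvature_penalty(X, X, sigma, W_plain),
      curvature_penalty(X, X, sigma, W_smooth))
```

With `lam=0.0` the network passes through every training target, while a positive `lam` trades some training error for a smaller curvature penalty.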


RBF Networks for Classification

So far we have concentrated on RBF networks for function approximation. They are

also useful for classification problems. Consider a data set that falls into three classes:

An MLP would naturally separate the classes with hyper-planes in the input space (as

on the left). An alternative approach would be to model the separate class distributions

by localised radial basis functions (as on the right).


Implementing RBF Classification Networks

In principle, it is easy to have an RBF network perform classification: we simply need

to have an output function $y_k(\mathbf{x})$ for each class $k$ with appropriate targets

$$t_k^p = \begin{cases} 1 & \text{if pattern } p \text{ belongs to class } k \\ 0 & \text{otherwise} \end{cases}$$

and, when the network is trained, it will automatically classify new patterns.

The underlying justification is found in Cover's theorem, which states that "A complex

pattern classification problem cast in a high dimensional space non-linearly is more

likely to be linearly separable than in a low dimensional space". We know that once we

have linearly separable patterns, the classification problem is easy to solve.

In addition to the RBF network outputting good classifications, it can be shown that the

outputs of such a regularized RBF network classifier will also provide estimates of the

posterior class probabilities.
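
The 1-of-K target coding and the resulting classifier can be sketched as follows. This is only an illustrative NumPy sketch: it assumes Gaussian basis functions with a common width, a bias unit, unregularized least-squares output weights, and a small hand-made data set with three well separated classes. All function names and data values are assumptions introduced for the example.

```python
import numpy as np

def one_hot_targets(labels, n_classes):
    """t_k^p = 1 if pattern p belongs to class k, and 0 otherwise."""
    T = np.zeros((len(labels), n_classes))
    T[np.arange(len(labels)), labels] = 1.0
    return T

def design_matrix(X, mu, sigma):
    """Gaussian basis activations (assumed form) with a leading bias column."""
    sq = np.sum((X[:, None, :] - mu[None, :, :]) ** 2, axis=2)
    G = np.exp(-sq / (2.0 * sigma ** 2))
    return np.hstack([np.ones((len(X), 1)), G])

def fit_output_weights(X, labels, mu, sigma, n_classes):
    """Least-squares solution of Phi W^T = T for the output weights."""
    Phi = design_matrix(X, mu, sigma)
    T = one_hot_targets(labels, n_classes)
    W_T, *_ = np.linalg.lstsq(Phi, T, rcond=None)
    return W_T.T

def classify(X, mu, sigma, W):
    """Assign each pattern to the class whose output y_k(x) is largest."""
    return np.argmax(design_matrix(X, mu, sigma) @ W.T, axis=1)

# Hand-made data (assumed): five patterns around each of three class centres.
offsets = np.array([[0.0, 0.0], [0.3, 0.0], [-0.3, 0.0], [0.0, 0.3], [0.0, -0.3]])
class_centres = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])
X = np.vstack([c + offsets for c in class_centres])
labels = np.repeat(np.arange(3), len(offsets))

W = fit_output_weights(X, labels, class_centres, 1.0, 3)
predicted = classify(X, class_centres, 1.0, W)
print("training accuracy:", np.mean(predicted == labels))
print("class of new pattern (2.9, 0.1):",
      classify(np.array([[2.9, 0.1]]), class_centres, 1.0, W)[0])
```

Taking the largest output is one simple decision rule. The raw outputs of this unregularized sketch are not constrained to lie in [0, 1], so they should not be read directly as probabilities.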


The XOR Problem Revisited

We are already familiar with the non-linearly separable XOR problem:

| p | $x_1$ | $x_2$ | t |
|---|---|---|---|
| 1 | 0 | 0 | 0 |
| 2 | 0 | 1 | 1 |
| 3 | 1 | 0 | 1 |
| 4 | 1 | 1 | 0 |

We know that Single Layer Perceptrons with step or sigmoidal activation functions cannot

generate the right outputs, because they are only able to form a single decision boundary. To

deal with this problem using Perceptrons we needed to either change the activation function,

or introduce a non-linear hidden layer to give a Multi-Layer Perceptron (MLP).

[Figure: the four XOR patterns plotted in the input space, with axes $x_1$ and $x_2$.]


The XOR Problem in RBF Form

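Recall that sensible RBFs are $M$ Gaussians $\phi_j(\mathbf{x})$ centred at random training data points:

$$\phi_j(\mathbf{x}) = \exp\left( -\frac{M}{d_{\max}^2} \left\| \mathbf{x} - \boldsymbol{\mu}_j \right\|^2 \right) \quad \text{where } \{\boldsymbol{\mu}_j\} \subset \{\mathbf{x}^p\}$$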

To perform the XOR classification in an RBF network, we start by deciding how many

basis functions we need. Given there are four training patterns and two classes, M = 2

seems a reasonable first guess. We then need to decide on the basis function centres.

The two separated zero targets seem a good bet, so we can set

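$$\boldsymbol{\mu}_1 = (0, 0) \quad \text{and} \quad \boldsymbol{\mu}_2 = (1, 1)$$

and the distance between them is $d_{\max} = \sqrt{2}$. We thus have the two basis functions

$$\phi_1(\mathbf{x}) = \exp\left( -\left\| \mathbf{x} - \boldsymbol{\mu}_1 \right\|^2 \right) \quad \text{with } \boldsymbol{\mu}_1 = (0, 0)$$

$$\phi_2(\mathbf{x}) = \exp\left( -\left\| \mathbf{x} - \boldsymbol{\mu}_2 \right\|^2 \right) \quad \text{with } \boldsymbol{\mu}_2 = (1, 1)$$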

This will hopefully transform the problem into a linearly separable form.


The XOR Problem Basis Functions

Since the hidden unit activation space is only two dimensional we can easily plot how

the input patterns have been transformed:

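| p | $x_1$ | $x_2$ | $\phi_1$ | $\phi_2$ |
|---|---|---|---|---|
| 1 | 0 | 0 | 1.0000 | 0.1353 |
| 2 | 0 | 1 | 0.3678 | 0.3678 |
| 3 | 1 | 0 | 0.3678 | 0.3678 |
| 4 | 1 | 1 | 0.1353 | 1.0000 |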

We can see that the patterns are now linearly separable. Note that in this case we did

not have to increase the dimensionality from the input space to the hidden unit/basis

function space: the non-linearity of the mapping was sufficient. Exercise: check what

happens if you choose two different basis function centres.
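
The transformed patterns can be recomputed with a few lines of NumPy, which is also a convenient starting point for the exercise. This is a minimal sketch: the function name `hidden_activations` is an illustrative assumption, and the width rule exp(-(M / d_max^2) ||x - mu_j||^2) is the one recalled on the previous slide.

```python
import numpy as np

def hidden_activations(X, mu):
    """phi_j(x^p) = exp(-(M / d_max^2) * ||x^p - mu_j||^2) for all patterns and centres."""
    M = len(mu)
    # d_max is the largest distance between any two centres.
    d_max = max(np.linalg.norm(a - b) for a in mu for b in mu)
    sq_dist = np.sum((X[:, None, :] - mu[None, :, :]) ** 2, axis=2)
    return np.exp(-(M / d_max ** 2) * sq_dist)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)   # the four XOR inputs
mu = np.array([[0, 0], [1, 1]], dtype=float)                   # centres mu_1 and mu_2

Phi = hidden_activations(X, mu)
for p, (x, phi) in enumerate(zip(X, Phi), start=1):
    print(p, x, np.round(phi, 4))
```

Rounded to four decimal places, exp(-1) comes out as 0.3679, so the 0.3678 entries in the table appear to be truncated rather than rounded. Passing a different `mu` array to `hidden_activations` shows how other choices of centres transform the patterns.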



The XOR Problem Output Weights

In this case we just have one output $y(\mathbf{x})$, with one weight $w_j$ to each hidden unit $j$, and

one bias $-\theta$. This gives us the network's input-output relation for each input pattern $\mathbf{x}$

$$y(\mathbf{x}) = w_1 \phi_1(\mathbf{x}) + w_2 \phi_2(\mathbf{x}) - \theta$$

Then, if we want the outputs $y(\mathbf{x}^p)$ to equal the targets $t^p$, we get the four equations

$$\begin{aligned}
1.0000\, w_1 + 0.1353\, w_2 - 1.0000\, \theta &= 0 \\
0.3678\, w_1 + 0.3678\, w_2 - 1.0000\, \theta &= 1 \\
0.3678\, w_1 + 0.3678\, w_2 - 1.0000\, \theta &= 1 \\
0.1353\, w_1 + 1.0000\, w_2 - 1.0000\, \theta &= 0
\end{aligned}$$

Only three of these equations are different, and we have three variables, so we can easily solve them to give

$$w_1 = w_2 = -2.5018, \qquad \theta = -2.8404$$

This completes our training of the RBF network for the XOR problem.
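
The solution of the four equations can be checked numerically. The sketch below is illustrative rather than part of the lecture: it uses unrounded basis function values and NumPy's least-squares solver, which returns the exact solution here because the system is consistent.

```python
import numpy as np

# Squared distances ||x^p - mu_j||^2 for the four XOR patterns and the two centres.
sq_dist = np.array([[0.0, 2.0], [1.0, 1.0], [1.0, 1.0], [2.0, 0.0]])
phi = np.exp(-sq_dist)                       # phi_j(x^p) with M / d_max^2 = 1
t = np.array([0.0, 1.0, 1.0, 0.0])           # XOR targets

# Unknowns are (w_1, w_2, theta); the bias enters with coefficient -1.
A = np.hstack([phi, -np.ones((4, 1))])
params, *_ = np.linalg.lstsq(A, t, rcond=None)
w1, w2, theta = params

y = A @ params                               # network outputs for the four patterns
print("w1, w2, theta:", np.round(params, 4))
print("outputs:", np.round(y, 4))
```

The values obtained this way agree with those quoted above to three significant figures. The small remaining difference comes from the four-decimal basis function values used in the hand calculation.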


Comparison of RBF Networks with MLPs

There are clearly a number of similarities between RBF networks and MLPs:

Similarities

1. They are both non-linear feed-forward networks

2. They are both universal approximators

3. They are used in similar application areas

It is not surprising, then, to find that there always exists an RBF network capable of

accurately mimicking a specified MLP, or vice versa. However the two networks do

differ from each other in a number of important respects:

Differences

1. An RBF network (in its natural form) has a single hidden layer, whereas MLPs

can have any number of hidden layers.


2. RBF networks are usually fully connected, whereas it is common for MLPs to be

only partially connected.

3. In MLPs the computation nodes (processing units) in different layers share a

common neuronal model, though not necessarily the same activation function. In

RBF networks the hidden nodes (basis functions) operate very differently, and

have a very different purpose, to the output nodes.

4. In RBF networks, the argument of each hidden unit activation function is the

distance between the input and the weights (RBF centres), whereas in MLPs it

is the inner product of the input and the weights.

5. MLPs are usually trained with a single global supervised algorithm, whereas RBF

networks are usually trained one layer at a time with the first layer unsupervised.

6. MLPs construct global approximations to non-linear input-output mappings with

distributed hidden representations, whereas RBF networks tend to use localised

non-linearities (Gaussians) at the hidden layer to construct local approximations.

Although, for approximating non-linear input-output mappings, the RBF networks can

be trained much faster, MLPs may require a smaller number of parameters.
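
Differences 4 and 6 can be made concrete with a small sketch. The two functions below are illustrative assumptions (a Gaussian RBF unit and a logistic sigmoid MLP unit with made-up parameters), not code from the lecture: the first depends on the input only through its distance from a centre, the second only through an inner product with a weight vector.

```python
import numpy as np

def rbf_hidden(x, centre, sigma):
    """Gaussian RBF unit: a function of the distance ||x - centre||."""
    return np.exp(-np.sum((x - centre) ** 2) / (2.0 * sigma ** 2))

def mlp_hidden(x, weights, bias):
    """Sigmoidal MLP unit: a function of the inner product weights . x."""
    return 1.0 / (1.0 + np.exp(-(weights @ x + bias)))

v = np.array([1.0, 1.0])
for scale in (0.0, 1.0, 5.0, 50.0):
    x = scale * v                            # move along a ray through the centre
    print(scale, rbf_hidden(x, v, 1.0), mlp_hidden(x, v, -1.0))
```

Moving away from the centre, the RBF response peaks at `x = v` and then decays towards zero (a localised response), whereas the sigmoid unit saturates and stays active over a whole half-space (a global response).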


Real World Application: EEG Analysis

One successful RBF network detects epileptiform artefacts in EEG recordings:

For full details see the original paper by: A. Saastamoinen, T. Pietilä, A. Värri, M.

Lehtokangas, & J. Saarinen (1998). Waveform detection with RBF network:

Application to automated EEG analysis. Neurocomputing, vol. 20, pp. 1-13.


Overview and Reading

1. We began by looking at how supervised RBF training would work.

2. Then we considered using regularization theory for RBF networks.

3. We then saw how we can use RBF networks for classification tasks and

noted the relevance of Cover's theorem on the separability of patterns.

4. As a concrete example, we considered how the XOR problem could be

dealt with by an RBF network. We explicitly computed all the basis

functions and output weights for our network.

5. Then we went through a full comparison of RBF networks and MLPs.

6. We ended by looking at a real world application: EEG analysis.

Reading

1. Bishop: Sections 5.3, 5.4, 5.7, 5.8, 5.10

2. Haykin: Sections 5.2, 5.5, 5.6, 5.11, 5.14, 5.15

S-ar putea să vă placă și

- RBFN Xor ProblemDocument16 paginiRBFN Xor ProblemCory SparksÎncă nu există evaluări

- RBF Network XOR ProblemDocument16 paginiRBF Network XOR ProblemSaurabh KumarÎncă nu există evaluări

- Radial Basis Function Networks: Algorithms: Neural Computation: Lecture 14Document18 paginiRadial Basis Function Networks: Algorithms: Neural Computation: Lecture 14Bobby JasujaÎncă nu există evaluări

- LipsolDocument26 paginiLipsolCmpt CmptÎncă nu există evaluări

- Neural Networks - Basics Matlab PDFDocument59 paginiNeural Networks - Basics Matlab PDFWesley DoorsamyÎncă nu există evaluări

- A Computational Comparison of Two Different Approaches To Solve The Multi-Area Optimal Power FlowDocument4 paginiA Computational Comparison of Two Different Approaches To Solve The Multi-Area Optimal Power FlowFlores JesusÎncă nu există evaluări

- Major Classes of Neural NetworksDocument21 paginiMajor Classes of Neural Networksbhaskar rao mÎncă nu există evaluări

- Lecture Five Radial-Basis Function Networks: Associate ProfessorDocument64 paginiLecture Five Radial-Basis Function Networks: Associate ProfessorWantong LiaoÎncă nu există evaluări

- NN Lab2Document5 paginiNN Lab2Anne WanningenÎncă nu există evaluări

- Radial Basis FunctionDocument35 paginiRadial Basis FunctionNicolas De NadaiÎncă nu există evaluări

- Networks of Artificial Neurons Single Layer PerceptronsDocument16 paginiNetworks of Artificial Neurons Single Layer PerceptronsSurenderMalanÎncă nu există evaluări

- Radial Basis Function Networks: Yousef AkhlaghiDocument28 paginiRadial Basis Function Networks: Yousef Akhlaghimoh_750571194Încă nu există evaluări

- Normalized Gaussian Radial Basis Function NetworksDocument17 paginiNormalized Gaussian Radial Basis Function NetworkschauhohoangÎncă nu există evaluări

- Cp5191 MLT Unit IIDocument27 paginiCp5191 MLT Unit IIbala_07123Încă nu există evaluări

- Radial Basis Function Networks ExplainedDocument8 paginiRadial Basis Function Networks ExplainedMaryam FarisÎncă nu există evaluări

- Simple Mesh Generator in MATLAB Generates High-Quality MeshesDocument17 paginiSimple Mesh Generator in MATLAB Generates High-Quality MeshesBubblez PatÎncă nu există evaluări

- NNN ProectDocument12 paginiNNN Proectkumar219Încă nu există evaluări

- Khosravi2012 Article ANovelStructureForRadialBasisFDocument10 paginiKhosravi2012 Article ANovelStructureForRadialBasisFMaxÎncă nu există evaluări

- Hybrid Training Algorithm For RBF Network: M. Y. MashorDocument17 paginiHybrid Training Algorithm For RBF Network: M. Y. MashormauricetappaÎncă nu există evaluări

- What is dynamic programming and how does it work? (39Document8 paginiWhat is dynamic programming and how does it work? (39Shadab Ahmed GhazalyÎncă nu există evaluări

- Enhanced SLAM using UKF and NNDocument6 paginiEnhanced SLAM using UKF and NNAqilAbbasiÎncă nu există evaluări

- An Alternative Approach For Generation of Membership Functions and Fuzzy Rules Based On Radial and Cubic Basis Function NetworksDocument20 paginiAn Alternative Approach For Generation of Membership Functions and Fuzzy Rules Based On Radial and Cubic Basis Function NetworksAntonio Madueño LunaÎncă nu există evaluări

- Rodriguez Report PDFDocument38 paginiRodriguez Report PDFAbdel DaaÎncă nu există evaluări

- Ann Lab Manual 2Document7 paginiAnn Lab Manual 2Manak JainÎncă nu există evaluări

- Automated Classification of Power Quality Disturbances using SVM and RBF NetworksDocument7 paginiAutomated Classification of Power Quality Disturbances using SVM and RBF NetworksbajricaÎncă nu există evaluări

- Fast Training of Multilayer PerceptronsDocument15 paginiFast Training of Multilayer Perceptronsgarima_rathiÎncă nu există evaluări

- Training Neural Networks With GA Hybrid AlgorithmsDocument12 paginiTraining Neural Networks With GA Hybrid Algorithmsjkl316Încă nu există evaluări

- My Dhsch6 RBFDocument17 paginiMy Dhsch6 RBFNegoescu Mihai CristianÎncă nu există evaluări

- Stacking MF Networks To Combine The Outputs Provided by RBF NetworksDocument10 paginiStacking MF Networks To Combine The Outputs Provided by RBF NetworkssbduibÎncă nu există evaluări

- Radial Basis Networks: Fundamentals and Applications for The Activation Functions of Artificial Neural NetworksDe la EverandRadial Basis Networks: Fundamentals and Applications for The Activation Functions of Artificial Neural NetworksÎncă nu există evaluări

- Unit Iv DMDocument58 paginiUnit Iv DMSuganthi D PSGRKCWÎncă nu există evaluări

- 4 Multilayer Perceptrons and Radial Basis FunctionsDocument6 pagini4 Multilayer Perceptrons and Radial Basis FunctionsVivekÎncă nu există evaluări

- Digital Neural Networks: Tony R. MartinezDocument8 paginiDigital Neural Networks: Tony R. MartinezzaqweasÎncă nu există evaluări

- Learning and Generalization in Single Layer Perceptrons: Neural Computation: Lecture 4Document16 paginiLearning and Generalization in Single Layer Perceptrons: Neural Computation: Lecture 4I careÎncă nu există evaluări

- Supervised Training Via Error Backpropagation: Derivations: 4.1 A Closer Look at The Supervised Training ProblemDocument32 paginiSupervised Training Via Error Backpropagation: Derivations: 4.1 A Closer Look at The Supervised Training ProblemGeorge TsavdÎncă nu există evaluări

- Exercise 2: Hopeld Networks: Articiella Neuronnät Och Andra Lärande System, 2D1432, 2004Document8 paginiExercise 2: Hopeld Networks: Articiella Neuronnät Och Andra Lärande System, 2D1432, 2004pavan yadavÎncă nu există evaluări

- instructor-solution-manual-to-neural-networks-and-deep-learning-a-textbook-solutions-3319944622-9783319944623_compressDocument40 paginiinstructor-solution-manual-to-neural-networks-and-deep-learning-a-textbook-solutions-3319944622-9783319944623_compressHassam HafeezÎncă nu există evaluări

- Instructor's Solution Manual For Neural NetworksDocument40 paginiInstructor's Solution Manual For Neural NetworksshenalÎncă nu există evaluări

- A Relational Approach To The Compilation of Sparse Matrix ProgramsDocument16 paginiA Relational Approach To The Compilation of Sparse Matrix Programsmmmmm1900Încă nu există evaluări

- MorphDocument20 paginiMorphYosueÎncă nu există evaluări

- Charotar University of Science and Technology Faculty of Technology and EngineeringDocument10 paginiCharotar University of Science and Technology Faculty of Technology and EngineeringSmruthi SuvarnaÎncă nu există evaluări

- PerssonAndStrang MeshGeneratorDocument17 paginiPerssonAndStrang MeshGeneratorJeff HÎncă nu există evaluări

- Matlab FileDocument16 paginiMatlab FileAvdesh Pratap Singh GautamÎncă nu există evaluări

- Pattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkDocument5 paginiPattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkEkin RafiaiÎncă nu există evaluări

- Contents MLP PDFDocument60 paginiContents MLP PDFMohit SharmaÎncă nu există evaluări

- Learning and Generalization in Single Layer Perceptrons: Introduction To Neural Networks: Lecture 4Document16 paginiLearning and Generalization in Single Layer Perceptrons: Introduction To Neural Networks: Lecture 4I careÎncă nu există evaluări

- Learning 2004 1 019Document6 paginiLearning 2004 1 019tasos_rex3139Încă nu există evaluări

- Three Learning Phases For Radial-Basis-Function Networks: Friedhelm Schwenker, Hans A. Kestler, Guènther PalmDocument20 paginiThree Learning Phases For Radial-Basis-Function Networks: Friedhelm Schwenker, Hans A. Kestler, Guènther PalmashenafiÎncă nu există evaluări

- Neural NetworksDocument37 paginiNeural NetworksOmiarÎncă nu există evaluări

- Introduction of The Radial Basis Function (RBF) Networks: February 2001Document8 paginiIntroduction of The Radial Basis Function (RBF) Networks: February 2001jainam dudeÎncă nu există evaluări

- Haseeb Lab05 PSADocument6 paginiHaseeb Lab05 PSAEng TariqÎncă nu există evaluări

- A Multi-Layer Adaptive Function Neural Network (Madfunn) For Letter Image RecognitionDocument6 paginiA Multi-Layer Adaptive Function Neural Network (Madfunn) For Letter Image RecognitionAnonymous bcDacMÎncă nu există evaluări

- Multilayer Perceptron: Fundamentals and Applications for Decoding Neural NetworksDe la EverandMultilayer Perceptron: Fundamentals and Applications for Decoding Neural NetworksÎncă nu există evaluări

- Backpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningDe la EverandBackpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningÎncă nu există evaluări

- Hopfield Networks: Fundamentals and Applications of The Neural Network That Stores MemoriesDe la EverandHopfield Networks: Fundamentals and Applications of The Neural Network That Stores MemoriesÎncă nu există evaluări

- Industrial Diaphragm Valves: SaundersDocument14 paginiIndustrial Diaphragm Valves: SaundersscribdkhatnÎncă nu există evaluări

- Connection of Redundant I/O Devices To S7-1500: Lredio LibraryDocument31 paginiConnection of Redundant I/O Devices To S7-1500: Lredio LibraryscribdkhatnÎncă nu există evaluări

- Manual de Utilizare PT DELCOS Pro PDFDocument28 paginiManual de Utilizare PT DELCOS Pro PDFLucyan Ionescu100% (2)

- 6th Central Pay Commission Salary CalculatorDocument15 pagini6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- 6.6 Wiring Diagram 6.6.1 Version GCP-20 & AMG 3/NEBDocument1 pagină6.6 Wiring Diagram 6.6.1 Version GCP-20 & AMG 3/NEBscribdkhatnÎncă nu există evaluări

- Connection of Redundant I/O Devices To S7-1500: Lredio LibraryDocument31 paginiConnection of Redundant I/O Devices To S7-1500: Lredio LibraryscribdkhatnÎncă nu există evaluări

- 3500 Monitoring SystemsDocument12 pagini3500 Monitoring SystemsscribdkhatnÎncă nu există evaluări

- Interfax Equipos Deiff y GobernadoresDocument40 paginiInterfax Equipos Deiff y Gobernadoreshumcata100% (2)

- REM610 Ref Manual IECDocument160 paginiREM610 Ref Manual IECfiatraj1Încă nu există evaluări

- 6.6 Wiring Diagram 6.6.1 Version GCP-20 & AMG 3/NEBDocument1 pagină6.6 Wiring Diagram 6.6.1 Version GCP-20 & AMG 3/NEBscribdkhatnÎncă nu există evaluări

- Interfax Equipos Deiff y GobernadoresDocument40 paginiInterfax Equipos Deiff y Gobernadoreshumcata100% (2)

- Eaton's MTL product range and the ATEX directive explainedDocument1 paginăEaton's MTL product range and the ATEX directive explainedscribdkhatnÎncă nu există evaluări

- Diesel ECU and Fuel Injector DriversDocument32 paginiDiesel ECU and Fuel Injector DriversscribdkhatnÎncă nu există evaluări

- 2078 V1.0 ESD 5100 - Series PTI1000XC Datasheet 08 09 23 MH enDocument4 pagini2078 V1.0 ESD 5100 - Series PTI1000XC Datasheet 08 09 23 MH ennhocti007Încă nu există evaluări

- 10 Vedlegg DDocument14 pagini10 Vedlegg DscribdkhatnÎncă nu există evaluări

- Overview of The Oil and Gas ExplorationDocument7 paginiOverview of The Oil and Gas ExplorationArcenio Jimenez MorganÎncă nu există evaluări

- Neural Network Monitoring System Used For The Frequency Vibration Prediction in Gas TurbineDocument5 paginiNeural Network Monitoring System Used For The Frequency Vibration Prediction in Gas TurbinescribdkhatnÎncă nu există evaluări

- Extinguishing Control Unit A6V10061857 HQ enDocument38 paginiExtinguishing Control Unit A6V10061857 HQ enscribdkhatn0% (1)

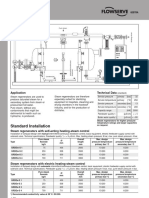

- Steam Regenerators: Application Technical DataDocument1 paginăSteam Regenerators: Application Technical DatascribdkhatnÎncă nu există evaluări

- Extinguishing Control Unit A6V10061857 HQ en PDFDocument4 paginiExtinguishing Control Unit A6V10061857 HQ en PDFscribdkhatnÎncă nu există evaluări

- Boiler Controls and Systems: An OverviewDocument8 paginiBoiler Controls and Systems: An OverviewscribdkhatnÎncă nu există evaluări

- Lal 125Document20 paginiLal 125Zvonko TrajkovÎncă nu există evaluări

- Study of Selected Petroleum Refining Residuals Industry StudyDocument60 paginiStudy of Selected Petroleum Refining Residuals Industry StudyOsama AdilÎncă nu există evaluări

- Fan FactsDocument28 paginiFan FactsmadbakingÎncă nu există evaluări

- flwch2pg PDFDocument2 paginiflwch2pg PDFscribdkhatnÎncă nu există evaluări

- Study of Selected Petroleum Refining Residuals Industry StudyDocument60 paginiStudy of Selected Petroleum Refining Residuals Industry StudyOsama AdilÎncă nu există evaluări

- Bently Nevada 22MDocument9 paginiBently Nevada 22MscribdkhatnÎncă nu există evaluări

- Steam Regenerators: Application Technical DataDocument1 paginăSteam Regenerators: Application Technical DatascribdkhatnÎncă nu există evaluări

- Gas Turbine ModellingDocument12 paginiGas Turbine ModellingscribdkhatnÎncă nu există evaluări

- ISO 18436 Category IV-4page-V04Document4 paginiISO 18436 Category IV-4page-V04scribdkhatnÎncă nu există evaluări

- The Hypnotic Language FormulaDocument25 paginiThe Hypnotic Language FormulaCristian Pop80% (5)

- My Transformational Journey Into A CoachDocument7 paginiMy Transformational Journey Into A CoachRamanathan RamÎncă nu există evaluări

- ZangeDocument3 paginiZangeMiki Broad100% (1)

- UTS bsn1Document55 paginiUTS bsn1Sweetzel SenielÎncă nu există evaluări

- RE-HMRM2121 Remedial Exam ResultsDocument4 paginiRE-HMRM2121 Remedial Exam ResultsAlmirah Makalunas0% (1)

- Ltu Ex 2012 36549405Document153 paginiLtu Ex 2012 36549405Nighat ImranÎncă nu există evaluări

- Future of UNO: An Analysis of Reforms to Strengthen the UNDocument4 paginiFuture of UNO: An Analysis of Reforms to Strengthen the UNPearl Zara33% (3)

- Informal FallaciesDocument3 paginiInformal FallaciesAnonymous prV8VRhpEyÎncă nu există evaluări

- The Novels and Letters of Jane AustenDocument330 paginiThe Novels and Letters of Jane AustenOmegaUserÎncă nu există evaluări

- Physics 1 Code PH-101 Contacts 3+1 Credits 4Document2 paginiPhysics 1 Code PH-101 Contacts 3+1 Credits 4Dr. Pradeep Kumar SharmaÎncă nu există evaluări

- Mies van der Rohe's Minimalist Residential DesignDocument15 paginiMies van der Rohe's Minimalist Residential Designsameer salsankar100% (1)

- 1 Singular&plural Nouns Lesson PlanDocument3 pagini1 Singular&plural Nouns Lesson Planapi-279690289Încă nu există evaluări

- Ucsp UPDATEDDocument173 paginiUcsp UPDATEDLJ PescaliaÎncă nu există evaluări

- Module 1 Project ManagementDocument37 paginiModule 1 Project ManagementDavid Munyaka100% (2)

- Portfolio IntroductionDocument3 paginiPortfolio Introductionapi-272102596Încă nu există evaluări

- Impact of Motivations On Employee Performance (A Case of Aqua Rapha Company)Document46 paginiImpact of Motivations On Employee Performance (A Case of Aqua Rapha Company)Henry OzobialuÎncă nu există evaluări

- Social and Historical Factors of Bilingual and Multilingual Development in The Society and IndividualDocument16 paginiSocial and Historical Factors of Bilingual and Multilingual Development in The Society and IndividualGeneva Glorioso100% (5)

- Preboard English KeyDocument16 paginiPreboard English KeyGeraldine DensingÎncă nu există evaluări

- Ionization RangeDocument6 paginiIonization RangeMichael Bowles100% (1)

- CELEBRATION OF THE 2550th ANNIVERSARY OF THE BUDDHA (English)Document92 paginiCELEBRATION OF THE 2550th ANNIVERSARY OF THE BUDDHA (English)AmitabhaÎncă nu există evaluări

- Importance of peer groups and self-fulfilling propheciesDocument3 paginiImportance of peer groups and self-fulfilling propheciesCee BeeÎncă nu există evaluări

- Rationalism ScriptDocument2 paginiRationalism Scriptbobbyforteza92Încă nu există evaluări

- L430-Lucian VI Dipsads Saturnalia Herodotus or Aetion Zeuxis or Antiochus Harmonides HesiodDocument524 paginiL430-Lucian VI Dipsads Saturnalia Herodotus or Aetion Zeuxis or Antiochus Harmonides HesiodFreedom Against Censorship Thailand (FACT)100% (1)

- Power of MantraDocument15 paginiPower of Mantraphani1978Încă nu există evaluări

- Robbers Cave Experiment and Anne of Green GablesDocument4 paginiRobbers Cave Experiment and Anne of Green GablesAlexandra AlexievaÎncă nu există evaluări

- Survey and Critical Review of Recent Innovative Energy Conversion TechnologiesDocument6 paginiSurvey and Critical Review of Recent Innovative Energy Conversion TechnologiesRichardolsen1Încă nu există evaluări

- The Sea of MercyDocument124 paginiThe Sea of MercyMohammed Abdul Hafeez, B.Com., Hyderabad, India100% (1)

- Introduction To Entrepreneurship: Module 1: What Is Entrepreneurship?Document12 paginiIntroduction To Entrepreneurship: Module 1: What Is Entrepreneurship?robeÎncă nu există evaluări

- Research Verb TenseDocument2 paginiResearch Verb TensePretty KungkingÎncă nu există evaluări

- Psalm 1 SermonDocument6 paginiPsalm 1 SermonLijo DevadhasÎncă nu există evaluări