
International Journal on Soft Computing (IJSC) Vol. 5, No. 1, February 2014

METHODOLOGICAL STUDY OF OPINION MINING AND SENTIMENT ANALYSIS TECHNIQUES

Pravesh Kumar Singh¹, Mohd Shahid Husain²

¹ M.Tech, Department of Computer Science and Engineering, Integral University, Lucknow, India

² Assistant Professor, Department of Computer Science and Engineering, Integral University, Lucknow, India

ABSTRACT

Decision making at both the individual and the organizational level is always accompanied by a search for the opinions of others. The tremendous growth of opinion-rich resources such as reviews, forum discussions, blogs, micro-blogs and Twitter provides a rich anthology of sentiments. This user-generated content can be a benefit to the market if its semantic orientation is analyzed. Opinion mining and sentiment analysis are the formal disciplines for studying and interpreting opinions and sentiments. The digital ecosystem has itself paved the way for the use of the huge volume of opinionated data that is recorded. This paper is an attempt to review and evaluate the various techniques used for opinion mining and sentiment analysis.

KEYWORDS

Opinion Mining, Sentiment Analysis, Feature Extraction Techniques, Naïve Bayes Classifiers, Clustering, Support Vector Machines

1. INTRODUCTION

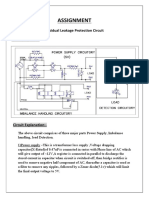

Individuals and companies are generally interested in the opinions of others. If someone wants to purchase a new product, he/she first tries to find reviews, i.e., what other people think about the product, and takes the decision based on those reviews. Similarly, companies dig deep for consumer reviews. The digital ecosystem offers a plethora of such material in the form of blogs, reviews etc. A very basic step of opinion mining and sentiment analysis is feature extraction. Figure 1 shows the process of opinion mining and sentiment analysis.

DOI: 10.5121/ijsc.2014.5102


There are various methods used for opinion mining and sentiment analysis, among which the following are the important ones:

1) Naïve Bayes Classifier.
2) Support Vector Machine (SVM).
3) Multilayer Perceptron.
4) Clustering.

This paper categorizes the work done on feature extraction and classification in opinion mining and sentiment analysis. In addition, the performance, advantages and disadvantages of the different techniques are appraised.

2. DATA SETS

This section provides brief details of datasets used in experiments.

2.1. Product Review Dataset

Blitzer collected product reviews from amazon.com belonging to a total of 25 categories, such as videos and toys, and randomly selected 4000 positive and 4000 negative reviews.

2.2. Movie Review Dataset

The movie review dataset is taken from the work of Pang and Lee (2004). It contains 1000 positive and 1000 negative processed movie reviews.

3. CLASSIFICATION TECHNIQUES

3.1. Naïve Bayes Classifier

It is a probabilistic, supervised classifier based on the theorem of Thomas Bayes. According to this theorem, if there are two events, e1 and e2, then the conditional probability of event e1 occurring when e2 has already occurred is given by the following formula:

$$P(e_1 \mid e_2) = \frac{P(e_2 \mid e_1)\,P(e_1)}{P(e_2)}$$

This algorithm is used to calculate the probability that a piece of data is positive or negative. The conditional probability of a sentiment is given as:

$$P(\text{Sentiment} \mid \text{Sentence}) = \frac{P(\text{Sentiment})\,P(\text{Sentence} \mid \text{Sentiment})}{P(\text{Sentence})}$$

And the conditional probability of a word is given as:

$$P(\text{Word} \mid \text{Sentiment}) = \frac{\text{Number of occurrences of the word in the class} + 1}{\text{Number of words belonging to the class} + \text{Total number of words}}$$

Algorithm

S1: Initialize P(positive) ← num_sentences(positive) / num_total_sentences

S2: Initialize P(negative) ← num_sentences(negative) / num_total_sentences

S3: Convert sentences into words

for each class of {positive, negative}:

for each word in {phrase}:

P(word | class) ← (num_occurrences(word | class) + 1) / (num_words(class) + num_total_words)

P(class) ← P(class) * P(word | class)

Return max {P(pos), P(neg)}

The above algorithm can be represented using Figure 2.

Figure 2. Algorithm of Naïve Bayes (diagram labels: training set of positive and negative sentences; sentence, review and book review classifiers)
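The steps of the algorithm above can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the cited work: the function names `train` and `classify` and the toy training sentences are hypothetical, the "total number of words" in the smoothing denominator is assumed to be the number of distinct words (the usual add-one smoothing convention), and log-probabilities are summed instead of multiplying raw probabilities to avoid numerical underflow on long sentences.

```python
import math
from collections import Counter


def train(labeled_sentences):
    """Count sentences per class and word occurrences per class."""
    sentence_counts = Counter()   # class -> number of training sentences
    word_counts = {}              # class -> Counter of word occurrences
    vocabulary = set()            # distinct words over all classes
    for sentence, label in labeled_sentences:
        sentence_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in sentence.lower().split():
            counts[word] += 1
            vocabulary.add(word)
    return sentence_counts, word_counts, vocabulary


def classify(sentence, model):
    """Return the class with the highest log-posterior score."""
    sentence_counts, word_counts, vocabulary = model
    total_sentences = sum(sentence_counts.values())
    scores = {}
    for label in sentence_counts:
        # S1/S2: prior P(class) = sentences in class / all sentences
        score = math.log(sentence_counts[label] / total_sentences)
        words_in_class = sum(word_counts[label].values())
        for word in sentence.lower().split():
            # S3: add-one smoothed P(word | class); vocabulary size is an assumption
            p_word = (word_counts[label][word] + 1) / (words_in_class + len(vocabulary))
            score += math.log(p_word)
        scores[label] = score
    # Final step: return the class with the maximum score
    return max(scores, key=scores.get)


# Toy training data, invented for illustration only.
training_data = [
    ("good great film", "positive"),
    ("great acting", "positive"),
    ("bad boring film", "negative"),
    ("terrible plot", "negative"),
]
model = train(training_data)
print(classify("great film", model))
print(classify("boring plot", model))
```

Because the logarithm is monotonic, choosing the maximum log-score selects the same class as choosing the maximum of the products in step S3.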

3.1.1. Evaluation of Algorithm

To evaluate the algorithm, the following measures are used: Accuracy, Precision, Recall and Relevance.

The following contingency table is used to calculate the various measures.

| | Relevant | Irrelevant |
|---|---|---|
| Detected opinions | True Positive (tp) | False Positive (fp) |
| Undetected opinions | False Negative (fn) | True Negative (tn) |

Now,

$$\text{Precision} = \frac{tp}{tp + fp}, \qquad \text{Recall} = \frac{tp}{tp + fn}$$

$$\text{Accuracy} = \frac{tp + tn}{tp + tn + fp + fn}, \qquad F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
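As a small worked example, the measures can be computed directly from the four cells of the contingency table. The sketch below is illustrative only: the function name `evaluate` and the counts are hypothetical, and returning 0.0 when a denominator is zero is an assumption made here, not something the paper specifies.

```python
def _ratio(numerator, denominator):
    """Divide safely; returning 0.0 on an empty denominator is an assumption."""
    return numerator / denominator if denominator else 0.0


def evaluate(tp, fp, fn, tn):
    """Compute precision, recall, accuracy and F from contingency counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    accuracy = _ratio(tp + tn, tp + tn + fp + fn)
    f_measure = _ratio(2 * precision * recall, precision + recall)
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": accuracy,
        "f_measure": f_measure,
    }


# Hypothetical counts: 40 tp, 10 fp, 20 fn, 30 tn.
scores = evaluate(tp=40, fp=10, fn=20, tn=30)
for name, value in scores.items():
    print(f"{name}: {value:.4f}")
```

With these counts precision is 40/50, recall is 40/60 and accuracy is 70/100, so the F value falls between precision and recall, as expected of a harmonic mean.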


3.1.2. Accuracy

On 5000 sentences, Ion Smeureanu and Cristian Bucur [1] trained the Naïve Bayes algorithm and obtained an accuracy of 0.79939209726444, where the number of groups (n) is 2.

3.1.3. Advantages of Naïve Bayes Classification Method

1. Model is easy to interpret.
2. Efficient computation.

3.1.4. Disadvantage of Naïve Bayes Classification Method

It assumes that attributes are independent, which may not necessarily be valid.

3.2. Support Vector Machine (SVM)

SVM is a supervised learning model with an associated learning algorithm that analyzes the data and identifies patterns for classification. The SVM algorithm is based on the concept of a decision plane that defines decision boundaries. A decision plane separates groups of instances having different class memberships. For example, consider instances that belong to either class Circle or class Diamond. There is a separating line (Figure 3) which defines a boundary: on the right side of the boundary all instances are Circle, and on the left side all instances are Diamond.

Figure 3. Principle of SVM (diagram labels: Support Vectors)

Given a training data set D, a set of n points is written as:

$$D = \left\{ (x_i, c_i) \mid x_i \in \mathbb{R}^p,\ c_i \in \{-1, 1\} \right\}_{i=1}^{n} \qquad (1)$$

where x_i is a p-dimensional real vector. The goal is to find the maximum-margin hyperplane, i.e., the one that splits the points having c_i = 1 from those having c_i = -1. Any hyperplane can be written as the set of points x satisfying:

$$w \cdot x - b = 0 \qquad (2)$$


Finding a maximum-margin hyperplane reduces to finding the pair w and b such that the distance between the two margin hyperplanes is maximal while they still separate the data. These hyperplanes are described by:

$$w \cdot x - b = 1 \quad \text{and} \quad w \cdot x - b = -1$$

The distance between the two hyperplanes is $\frac{2}{\|w\|}$, and therefore $\|w\|$ needs to be minimized. The problem is to minimize $\|w\|$ in w, b subject to $c_i (w \cdot x_i - b) \ge 1$ for any i = 1, ..., n. Using Lagrange multipliers $\alpha_i$, this optimization problem can be expressed as:

$$\min_{w, b}\ \max_{\alpha \ge 0} \left\{ \frac{1}{2}\|w\|^2 - \sum_{i=1}^{n} \alpha_i \left[ c_i (w \cdot x_i - b) - 1 \right] \right\} \qquad (3)$$
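To make the margin condition concrete, the following sketch checks the constraint c_i (w · x_i - b) ≥ 1 for a candidate hyperplane and computes the margin width 2/‖w‖. It does not solve the optimization problem; the helper names and the four sample points are hypothetical and chosen only for illustration.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def margin_width(w):
    """Distance between the hyperplanes w.x - b = 1 and w.x - b = -1."""
    return 2 / math.sqrt(dot(w, w))


def satisfies_margin(w, b, points, labels):
    """True if every point meets c_i * (w.x_i - b) >= 1."""
    return all(c * (dot(w, x) - b) >= 1 for x, c in zip(points, labels))


def predict(w, b, x):
    """Assign class 1 or -1 according to the side of the hyperplane."""
    return 1 if dot(w, x) - b >= 0 else -1


# Hypothetical, linearly separable toy data.
points = [(2, 2), (3, 3), (0, 0), (-1, 0)]
labels = [1, 1, -1, -1]

# Two candidate hyperplanes that both separate the data.
wide = ((0.5, 0.5), 1.0)
narrow = ((1.0, 1.0), 2.0)

for w, b in (wide, narrow):
    print(w, b, satisfies_margin(w, b, points, labels), margin_width(w))
```

Both candidates satisfy the constraints, but the one with the smaller ‖w‖ yields the wider margin, which is why the optimization minimizes ‖w‖.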

3.2.1. Extensions of SVM

There are some extensions which make SVM more robust and adaptable to real-world problems. These extensions include the following:

1. Soft Margin Classification

In text classification, data are sometimes linearly separable; for very high-dimensional problems and for multi-dimensional problems, data are also linearly separable. Generally (in most cases) the preferred opinion mining solution is one that classifies most of the data and ignores outliers and noisy data. If a training data set D cannot be separated cleanly, the solution is to use a fat decision margin and accept some mistakes. Mathematically, slack variables $\xi_i$ that are not equal to zero are introduced, which allow x_i to miss the margin requirement at a cost proportional to $\xi_i$.

2. Non-linear Classification

Non-linear classifiers were given by Bernhard Boser, Isabelle Guyon and Vapnik in 1992 by applying kernels to maximum-margin hyperplanes. Aizerman gave the kernel trick, i.e., every dot product is replaced by a non-linear kernel function. In this case, the effectiveness of SVM lies in the selection of the kernel and the soft margin parameters.

3. Multiclass SVM

SVM is basically suited to two-class tasks, but for multiclass problems multiclass SVM is available. In the multiclass case, labels are assigned to objects and are drawn from a finite set of several elements. The problem is reduced to binary classifiers, which may be built in two ways:

1. Distinguishing one label versus all the others (one-versus-all).
2. Distinguishing between each pair of classes (one-versus-one).

3.2.2. Accuracy

Pang obtained the best result with unigram features in a presence-based model run by SVM, and reported 82.9% accuracy in the process.


3.2.3. Advantages of Support Vector Machine Method

1. Very good performance on experimental results.
2. Low dependency on data set dimensionality.

3.2.4. Disadvantages of Support Vector Machine Method

1. Categorical or missing values need to be pre-processed.
2. Difficult interpretation of the resulting model.

3.3. Multi-Layer Perceptron (MLP)

A multi-layer perceptron is a feed-forward neural network with one or more layers between the input and the output. Feed-forward means that data flow in one direction, from the input layer to the output layer. This artificial neural network (ANN) begins with an input layer in which every node represents a predictor variable. Input nodes (neurons) are connected to every neuron in the next layer (called a hidden layer). The hidden layer neurons are in turn connected to the neurons of the next hidden layer. The output layer is made up as follows:

1. When the prediction is binary, the output layer is made up of one neuron.
2. When the prediction is non-binary, the output layer is made up of N neurons.

This arrangement makes for an efficient flow of information from the input layer to the output layer. Figure 4 shows the structure of an MLP. In Figure 4 there is an input layer and an output layer, as in a single-layer perceptron, but there is also a hidden layer at work in this algorithm.

MLP is trained with the back-propagation algorithm, which has two phases:

Phase I: This is the forward phase, where activations are propagated from the input layer to the output layer.

Phase II: In this phase, in order to change the weight and bias values, the errors between the actual output values and the requested nominal values in the output layer are propagated in the backward direction.

MLP is a popular technique because it can act as a universal function approximator. It is a general, flexible and non-linear tool because a back-propagation network with at least one hidden layer and various non-linear units can learn any function or relationship between a group of input and output variables (whether the variables are discrete or continuous).
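The two phases can be illustrated with a very small network. The sketch below assumes one hidden layer, sigmoid activations, a single output neuron (the binary case) and a squared-error loss; none of these choices, nor the class name `TinyMLP`, the learning rate or the sample input, come from the paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden layer, one output neuron (illustrative assumptions)."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                         for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, x):
        """Phase I: propagate activations from input to output."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out)
        return hidden, output

    def train_step(self, x, target, learning_rate=0.5):
        """Phase II: propagate the output error backward and update weights."""
        hidden, output = self.forward(x)
        # Error signal at the output for loss 0.5 * (output - target)^2
        delta_out = (output - target) * output * (1 - output)
        # Error signals at the hidden neurons, using the weights before the update
        delta_hidden = [delta_out * w * h * (1 - h)
                        for w, h in zip(self.w_out, hidden)]
        self.w_out = [w - learning_rate * delta_out * h
                      for w, h in zip(self.w_out, hidden)]
        self.b_out -= learning_rate * delta_out
        for j, delta in enumerate(delta_hidden):
            self.w_hidden[j] = [w - learning_rate * delta * xi
                                for w, xi in zip(self.w_hidden[j], x)]
            self.b_hidden[j] -= learning_rate * delta
        return 0.5 * (output - target) ** 2


net = TinyMLP(n_inputs=2, n_hidden=3)
sample, label = [1.0, 0.0], 1.0   # hypothetical training example
losses = [net.train_step(sample, label) for _ in range(100)]
print(losses[0], losses[-1])
```

Repeating the forward and backward phases on the same example drives the output toward the target, which is the behaviour the two-phase description implies.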


An advantage of MLP compared to classical modeling methods is that it does not impose any sort of constraint on the initial data, nor does it generally start from specific assumptions. Another benefit of the method lies in its capability to estimate good models even in the presence of noise in the analyzed information, as arises when there are missing and outlier values in the distribution of the variables. Hence, it is a robust method when dealing with problems of noise in the given information.

3.3.1. Accuracy

On health care data, Ludmila I. Kuncheva (IEEE Member) calculated the accuracy of MLP as 84.25%-89.50%.

3.3.2. Advantages of MLP

1. It acts as a universal function approximator.
2. MLP can learn each and every relationship among input and output variables.

3.3.3. Disadvantages of MLP

1. MLP needs more execution time than other techniques, because its flexibility comes with the need for enough training data.
2. It is considered a complex black box.

3.4. Clustering Classifier

Clustering is an unsupervised learning method in which no point carries a label. A clustering technique recognizes the structure in the data and groups points based on how near they are to one another.

So, clustering is the process of organizing objects and instances into classes or groups whose members are similar in some way, while the members of one cluster are not similar to those in other clusters. Because this method is unsupervised, one does not know in advance how many clusters or groups exist in the data. Using this method, one can organize the data set into different clusters based on the similarities and distances among data points.


A clustering organization is denoted as a set of subsets C = {C_1, ..., C_k} of S, such that:

$$S = \bigcup_{i=1}^{k} C_i \quad \text{and} \quad C_i \cap C_j = \emptyset \ \text{ for } i \neq j$$

Therefore, any object in S belongs to exactly one subset. For example, consider Figure 5, where the data set has three natural clusters.

Now consider some real-life examples that illustrate clustering:

Example 1: Group people of similar sizes together to make small and large shirts.

1. Tailor-made for each person: expensive.
2. One-size-fits-all: does not fit all.

Example 2: In advertising, segment consumers according to their similarities in order to do targeted advertising.

Example 3: To create a topic hierarchy, we can take a group of texts and organize them according to the similarity of their content.

Basically, two kinds of measures are used to estimate this relation:

1. Distance measures
2. Similarity measures

Distance Measures

Many clustering methods use distance measures to determine the similarity or dissimilarity between objects. It is convenient to denote the distance between two instances x_i and x_j as d(x_i, x_j). A valid distance measure should be symmetric and should attain its minimum value (usually zero) for identical vectors. A distance measure is known as a metric distance measure if it also satisfies the following properties:

1. Triangle inequality: $d(x_i, x_k) \le d(x_i, x_j) + d(x_j, x_k) \quad \forall\, x_i, x_j, x_k \in S$
2. $d(x_i, x_j) = 0 \Rightarrow x_i = x_j \quad \forall\, x_i, x_j \in S$
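The properties above can be checked numerically on a finite sample of points. The sketch below uses the Euclidean distance as one example of a metric and the squared Euclidean distance as a counter-example; the function names and the sample points are hypothetical, and a check on a finite sample can only refute, never prove, that a measure is a metric.

```python
import math
from itertools import product


def euclidean(x, y):
    """Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def squared_euclidean(x, y):
    """Squared Euclidean distance; symmetric but not a metric."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def behaves_like_metric(points, d, tol=1e-12):
    """Check symmetry, zero-only-for-identical and the triangle inequality."""
    for x, y in product(points, repeat=2):
        if abs(d(x, y) - d(y, x)) > tol:
            return False                      # not symmetric
        if (d(x, y) <= tol) != (x == y):
            return False                      # zero distance must mean identical
    for x, y, z in product(points, repeat=3):
        if d(x, z) > d(x, y) + d(y, z) + tol:
            return False                      # triangle inequality violated
    return True


sample_points = [(0, 0), (3, 4), (6, 8), (1, 1)]
print(behaves_like_metric(sample_points, euclidean))
print(behaves_like_metric(sample_points, squared_euclidean))
```

The squared distance fails on the collinear points (0, 0), (3, 4) and (6, 8), where 100 exceeds 25 + 25, which shows why the triangle inequality is listed as a separate requirement.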

There are variations in distance measures depending upon the attribute in question.

3.4.1. Clustering Algorithms

A number of clustering algorithms are in popular use. The basic reason for the large number of clustering methods is that the notion of a cluster is not precisely defined (Estivill-Castro, 2000). As a result, many clustering methods have been developed, each using a different induction principle.

1. Exclusive Clustering

In this clustering algorithm, data are clustered in an exclusive way, so that each data item belongs to only one definite cluster. An example of exclusive clustering is K-means clustering.


2. Overlapping Clustering

This clustering algorithm uses fuzzy sets to group data, so each point may belong to two or more groups or clusters with different degrees of membership.

3. Hierarchical Clustering

Hierarchical clustering has two variations: agglomerative and divisive clustering.

Agglomerative clustering is based on the union of the two nearest groups. The start state is realized by setting every data item as its own group or cluster. After some iterations it reaches the final clusters needed. It is a bottom-up version.

Divisive clustering begins from one group or cluster containing all data items. At every step, clusters are successively split into smaller groups or clusters according to some dissimilarity. It is a top-down version.

4. Probabilistic Clustering

It uses a mixture of Gaussians and takes a completely probabilistic approach.

3.4.2. Evaluation Criteria Measures for Clustering Technique

Basically, these are divided into two groups: internal quality criteria and external quality criteria.

1. Internal Quality Criteria

Using a similarity measure, these measure the compactness of clusters. They generally take into consideration the intra-cluster homogeneity, the inter-cluster separability, or a combination of the two. They do not use any external information besides the data itself.

2. External Quality Criteria

External quality criteria are useful for examining how well the structure of the clusters matches some previously defined classification of the instances or objects.

3.4.3. Accuracy

Depending on the data, the accuracy of the clustering techniques varied from 65.33% to 99.57%.

3.4.4. Advantages of Clustering Method

The most important benefit of this technique is that it offers classes or groups that fulfill (approximately) an optimality measure.

3.4.5. Disadvantages of Clustering Method

1. There is no learning set of labeled observations.
2. The number of groups is usually unknown.
3. Implicitly, users have already chosen the appropriate features and distance measure.
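As an illustration of the exclusive clustering described in Section 3.4.1, a minimal K-means sketch is given here. It assumes Euclidean distance, a fixed number of iterations and caller-supplied initial centroids; the function name `kmeans` and the sample points are hypothetical, and practical implementations add a convergence test and better initialisation. It also makes disadvantage 2 visible, since the number of groups has to be chosen up front.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Assign each point to its nearest centroid, then recompute centroids."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            # Exclusive assignment: each point joins exactly one cluster
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # New centroid = coordinate-wise mean; an empty cluster keeps its centroid
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical two-dimensional data with two visible groups.
data = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
final_centroids, final_clusters = kmeans(data, centroids=[(0, 0), (10, 10)])
print(final_centroids)
print(final_clusters)
```

Each point ends up in exactly one cluster, so the result is a partition of the data set in the sense of the definition of C_1, ..., C_k given earlier.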

4. CONCLUSION

An important part of information gathering has always been finding out what people think. The rising accessibility of opinion-rich resources such as online review websites and blogs means that one can easily search for and recognize the opinions of others. One can express one's ideas and opinions concerning goods and services. These views and thoughts are subjective data which signify the opinions, sentiments, emotional state or evaluation of someone.

In this paper, different methods for data (feature or text) extraction are presented. Every method has some benefits and limitations, and one can choose among these methods according to the situation for feature and text extraction. Based on the survey, the accuracy of the different methods on different data sets using the N-gram feature is shown in Table 1.

Table 1: Accuracy of Different Methods

| Data set (N-gram feature) | NB | MLP | SVM |
|---|---|---|---|
| Movie Reviews | 75.50 | 81.05 | 81.15 |
| Product Reviews | 62.50 | 79.27 | 79.40 |

According to the survey, the accuracy of SVM is better than that of the other methods when the N-gram feature is used. The four methods discussed in the paper are applicable in different areas; for example, clustering is applied to movie reviews and SVM techniques are applied to biological reviews and analysis. Although the field of opinion mining is new, diverse methods are already available, and they can be implemented in various programming languages such as PHP and Python, leading to innumerable applications. From a convergent point of view, Naïve Bayes is best suited for textual classification, clustering for consumer services, and SVM for biological reading and interpretation.

ACKNOWLEDGEMENTS

Every good piece of writing requires the help and support of many people to be truly good. I would like to take this opportunity to thank all those who extended a helping hand whenever I needed one. I offer my heartfelt gratitude to Mr. Mohd. Shahid Husain, who encouraged, guided and helped me a lot in the project. I extend my thanks to Miss Ratna Singh (fiancée) for her incandescent help in completing this paper. A vote of thanks goes to my family for their moral and emotional support. Above all, utmost thanks to Almighty God for the divine intervention in this academic endeavor.

REFERENCES

[1] Ion Smeureanu, Cristian Bucur, "Applying Supervised Opinion Mining Techniques on Online User Reviews", Informatica Economică, vol. 16, no. 2/2012.

[2] Bo Pang and Lillian Lee, "Opinion Mining and Sentiment Analysis", Foundations and Trends in Information Retrieval, Vol. 2, Nos. 1-2 (2008).

[3] Abbasi, "Affect intensity analysis of dark web forums", in Proceedings of Intelligence and Security Informatics (ISI), pp. 282-288, 2007.

[4] K. Dave, S. Lawrence & D. Pennock, "Mining the Peanut Gallery: Opinion Extraction and Semantic Classification of Product Reviews", Proceedings of the 12th International Conference on World Wide Web, pp. 519-528, 2003.

[5] B. Liu, "Web Data Mining: Exploring hyperlinks, contents, and usage data", Opinion Mining. Springer, 2007.

[6] B. Pang & L. Lee, "Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales", Proceedings of the Association for Computational Linguistics (ACL), pp. 115-124, 2005.

[7] Nilesh M. Shelke, Shriniwas Deshpande, Vilas Thakre, "Survey of Techniques for Opinion Mining", International Journal of Computer Applications (0975-8887), Volume 57, No. 13, November 2012.

[8] Nidhi Mishra and C K Jha, "Classification of Opinion Mining Techniques", International Journal of Computer Applications 56 (13):1-6, October 2012, Published by Foundation of Computer Science, New York, USA.

[9] Oded Z. Maimon, Lior Rokach, "Data Mining and Knowledge Discovery Handbook", Springer, 2005.

[10] Bo Pang, Lillian Lee, and Shivakumar Vaithyanathan, "Sentiment classification using machine learning techniques", in Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 79-86.

[11] "Towards Enhanced Opinion Classification using NLP Techniques", IJCNLP 2011, pages 101-107, Chiang Mai, Thailand, November 13, 2011.

Author

Pravesh Kumar Singh is a fine blend of strong scientific orientation and editing. He is a Computer Science (Bachelor in Technology) graduate from a renowned gurukul in India, Dr. Ram Manohar Lohia Awadh University, with excellence not only in academics but also in choreography. He received his master's degree in Computer Science and Engineering from Integral University, Lucknow, India. Currently he is Head of the MCA (Master in Computer Applications) department at Thakur Publications and also works in the capacity of Senior Editor.


- Puma PypDocument20 paginiPuma PypPrashanshaBahetiÎncă nu există evaluări

- Wine TourismDocument9 paginiWine Tourismyarashovanilufar1999Încă nu există evaluări

- Principled Instructions Are All You Need For Questioning LLaMA-1/2, GPT-3.5/4Document24 paginiPrincipled Instructions Are All You Need For Questioning LLaMA-1/2, GPT-3.5/4Jeremias GordonÎncă nu există evaluări

- ChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindDe la EverandChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindÎncă nu există evaluări

- ChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessDe la EverandChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessÎncă nu există evaluări

- The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldDe la EverandThe Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldEvaluare: 4.5 din 5 stele4.5/5 (107)

- ChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveDe la EverandChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveÎncă nu există evaluări

- Scary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldDe la EverandScary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldEvaluare: 4.5 din 5 stele4.5/5 (55)

- Generative AI: The Insights You Need from Harvard Business ReviewDe la EverandGenerative AI: The Insights You Need from Harvard Business ReviewEvaluare: 4.5 din 5 stele4.5/5 (2)