AN ANALYTICAL APPROACH FOR REAL TIME

INTRUSION DETECTION USING MACHINE

LEARNING PARADIGM

A THESIS

Submitted by

NAVEEN N C

In Partial Fulfillment of the Requirements

for the Degree of

DOCTOR OF PHILOSOPHY

DEPARTMENT OF COMPUTER SCIENCE

AND ENGINEERING

SRM UNIVERSITY, KATTANKULATHUR- 603 203

JANUARY 2013

DECLARATION

I hereby declare that the dissertation entitled AN ANALYTICAL

APPROACH FOR REAL TIME INTRUSION DETECTION USING

MACHINE LEARNING PARADIGM submitted for the Degree of Doctor of

Philosophy is my original work and the dissertation has not formed the basis for the

award of any degree, diploma, associateship or fellowship of similar other titles.

It has not been submitted to any other University or Institution for the award of any

degree or diploma.

Place: Chennai

Date: NAVEEN N C

SRM UNIVERSITY, KATTANKULATHUR 603 203

BONAFIDE CERTIFICATE

Certified that this thesis titled AN ANALYTICAL APPROACH FOR

REAL TIME INTRUSION DETECTION USING MACHINE LEARNING

PARADIGM is the bonafide work of Mr. N.C. Naveen who carried out the

research under my supervision. Certified further, that to the best of my knowledge

the work reported herein does not form part of any other thesis or dissertation on the

basis of which a degree or award was conferred on an earlier occasion for this or any

other candidate.

Dr. R.SRINIVASAN

SUPERVISOR

Professor- Emeritus

Directorate of Research

SRM University

Kattankulathur - 603203

ACKNOWLEDGEMENTS

It gives me immense pleasure to thank and express my gratitude towards

my supervisor Dr. R Srinivasan, for his support throughout the course of my study.

His constant motivation, support and expert guidance have helped me to overcome

all odds making this journey a truly rewarding experience in my life. I thank him

from the bottom of my heart.

I would like to thank Dr. S Natarajan, Professor, Department of ISE,
PESIT, for his valuable feedback and critical reviews, which have contributed
greatly to the quality of the thesis. His wide knowledge and guidance have been a
great help and have provided a good basis for the present thesis. I also express my
sincere gratitude to him for spending his valuable time in reviewing and evaluating
my research work.

I am extremely thankful to Dr. M Ponnavaikko, Vice Chancellor,
SRM University, for having given the necessary importance to research at the
University and for always wishing SRM to be at the top of the research fraternity.

I would like to express my heartfelt gratitude and thanks to my most

respectful Dr. C Muthamizhchelvan, Director of Faculty of Engineering and

Technology, SRM University, for having provided the necessary infrastructure for

carrying out this research successfully.

My thanks are due to Dr. S Ponnusamy, Controller of Examinations,

SRM University, for providing the necessary and timely support in the preparation

of synopsis of my research and this thesis. His advice and kind approach towards

research scholars shall not be forgotten by those who have interacted with him.

I am indebted to Dr. E Poovammal, Head of the Department, and to the
teaching and non-teaching staff of the Department of Computer Science, who
have been extremely helpful on numerous occasions.

I am grateful for the support received from Dr. S V Kasmir Raja, Dean

(Research), SRM University, Chennai and for the tremendous support from the

staff at the university libraries and various other university resources.

I am extremely grateful to R V College of Engineering, Bangalore, for
providing support in the form of sponsorship and for providing the audit data set
to carry out my research work.

I am grateful to all the members of the doctoral committee for their remarks
and comments; without their insightful suggestions, this thesis would not have been
complete.

I would like to thank all my colleagues in ISE Department, RVCE for

their constant support throughout my research work. I am thankful to all the

students, faculty, non-teaching staff of RVCE who have directly or indirectly helped

me in completing the work.

Special thanks to my family for their patience and inspiration that

supported me in successfully completing my research. Finally, I would like to

express my deepest gratitude to my parents and siblings for their unwavering

confidence in my ability that helped me to accomplish my academic dreams.

ABSTRACT

Recent work applies a wide range of Artificial Intelligence techniques to
Intrusion Detection Systems. Data Mining, another research domain in this paradigm,
offers flexibility and has been a focus of research in recent years.
Intrusion detection can be automated by having the system learn classifiers or
clusters from a training set. A benefit of Machine Learning is that its
techniques can generalize from known attacks to their variations and can even
detect new types of intrusion. Recent research focuses on the hybridization of
techniques to improve the detection rates of Machine Learning classifiers. Artificial
Neural Networks and Decision Trees have been applied to develop Intrusion
Detection Systems and have become popular. Several evaluations performed to date
indicate that Intrusion Detection Systems are moderately successful in identifying
known intrusions and considerably worse at identifying those that have not been seen
before. This leaves a promising area for the research and commercial communities
designing Intrusion Detection Systems.

The research work presented in this thesis models the Intrusion Detection
System with an ensemble approach using Outlier Detection, Change Point and
Relevance Vector Machines. The hybrid detection model developed here combines
individual base classifiers and Machine Learning paradigms to maximize detection
accuracy and minimize computational complexity. Results illustrate that the
proposed hybrid systems provide a more accurate detection rate. A real time dataset
is used in the experiments to demonstrate that Relevance Vector Machines can greatly
improve classification accuracy, and that the approach achieves a higher detection
rate with low false alarm rates and is scalable to large datasets, resulting in an
effective Intrusion Detection System.

We first introduce the Single Layer Feedforward Network as the core
intrusion detector. It can be used to build scalable and efficient network
Intrusion Detection Systems that are accurate in attack detection. Experimental
results further demonstrate that our system is robust and performs better than other
systems such as Decision Trees and Naive Bayes. We then introduce a logging
framework for network data collection and perform modeling using Change Point
and Outlier Detection to build a real time Intrusion Detection System. Experiments
on data sets collected in real time confirm that Change Point and
Outlier Detection algorithms are particularly well suited to detecting attacks.
Experiments were also conducted with Support Vector Machines, and the
performance of Decision Trees was compared with this model.

In this research work a new method for developing an Intrusion Detection
System using the Relevance Vector Machine is implemented. Compared with other
classifier algorithms, the Relevance Vector Machine has the advantage of exploiting
sparseness, and it reduces false alarms while still maintaining a desirable
detection rate.

TABLE OF CONTENTS

CHAPTER NO. TITLE PAGE NO.

ABSTRACT vi

LIST OF TABLES xiv

LIST OF FIGURES xv

LIST OF SYMBOLS AND ABBREVIATIONS xvii

1 INTRODUCTION 1

1.1 INTRUSION DETECTION 3

1.2 DEFINITIONS AND TERMINOLOGY 4

1.3 OVERVIEW OF POTENTIAL INTRUSIONS 5

1.3.1 Network - Based 5

1.3.2 Network Behavior Anomaly

Detection (NBAD) 5

1.3.3 Host Based IDS 6

1.3.4 Types of Attacks 6

1.3.4.1 DoS Attacks 6

1.3.4.2 User to Root (U2R) 6

1.3.4.3 Remote to Local (R2L) 7

1.3.5 A General IDS Architecture 7

1.3.6 Characteristics of IDS 9

1.3.6.1 Audit Source Location 10

1.3.6.2 Detection Methods 11

1.3.6.3 Behavior of Detection 14

1.3.6.4 Usage Frequency 14

1.4 DETECTION APPROACHES 16

1.4.1 Stateful (Event Correlation)

Intrusion Detection 16

1.4.2 Stateless Intrusion Detection 17

1.5 ARTIFICIAL INTELLIGENCE (AI)

TECHNIQUES APPLIED TO IDS 19

1.5.1 Change Point (CP) 20

1.5.2 Decision Trees 21

1.5.3 Feature Selection 22

1.5.4 Support Vector Machines (SVM) 22

1.5.5 Relevance Vector Machines (RVM) 23

1.6 AREAS OF RESEARCH 23

1.7 CONTRIBUTION AND NOVELTY 25

1.8 STRUCTURE OF THE THESIS 26

2 LITERATURE SURVEY 27

2.1 CURRENT IDS PRODUCTS 28

2.2 OUTLIER DETECTION (OD) 32

2.3 STATISTICAL BASED ANOMALY DETECTION 32

2.4 MACHINE LEARNING FOR ANOMALY

DETECTION 34

2.5 MACHINE LEARNING VERSUS

STATISTICAL TECHNIQUES 35

2.6 INSTANCE BASED LEARNING (IBL) 35

2.7 CHANGE POINT TECHNIQUE 36

2.7.1 Coefficient of Variation 37

2.7.2 Chauvenet's Criterion 37

2.7.3 Peirce's Criterion 37

2.7.4 CUSUM (CUmulative SUM) 38

2.7.5 Generalized Likelihood Ratio (GLR) 38

2.7.6 DDR (Direct Density Ratio) 38

2.8 APPLICATION OF DATA MINING (DM)

IN DEVELOPING IDS 39

2.8.1 Artificial Neural Networks (ANN) 40

2.8.2 Feed Forward Neural Networks (FFNN) 40

2.8.2.1 Multi Layered Feed Forward (MLFF)

Neural Networks 40

2.8.2.2 Radial Basis Function Neural

Networks (RBFNN) 41

2.8.3 Recurrent Neural Networks (RNN) 42

2.8.4 Self Organizing Maps (SOM) 42

2.8.5 Bayesian Networks (BN) 44

2.8.6 Decision Trees (DT) 45

2.8.7 Support Vector Machines (SVM) 46

2.9 IMPORTANCE OF FEATURE

SELECTION FOR IDS 51

2.10 RELEVANCE VECTOR MACHINES (RVM) 52

2.11 CURRENT STATE OF IDS 53

2.11.1 Intrusion Prevention System (IPS) 54

2.11.1.1 Rate based IPS 55

2.11.1.2 Content based IPS 56

2.11.2 Intrusion Response System (IRS) 56

2.11.2.1 Static Decision Making 57

2.11.2.2 Dynamic Decision Making 57

2.11.3 Artificial Immune Systems 57

3 LOG AND AUDIT DATA COLLECTION

FRAMEWORK 58

3.1 INTRODUCTION 58

3.2 REQUIREMENTS OF IDS 61

3.3 PROBLEMS IN DETECTING

NETWORK ATTACKS 61

3.4 GENERAL FRAMEWORK OF

PROPOSED IDS 62

3.4.1 Data Collection Framework 64

3.4.1.1 TCP/IP Protocol 66

3.5 ARCHITECTURE OF COMPUTING

INFRASTRUCTURE 67

3.5.1 Normal Data Collection 68

3.5.2 Collection of Attack Data 68

3.6 PACKET CAPTURE MODULE 71

3.7 SUMMARY 82

4 ANOMALY DETECTION USING

NEURAL NETWORKS 83

4.1 INTRODUCTION 83

4.2 ENSEMBLE OF APPROACHES 84

4.2.1 Offline Processing 85

4.2.2 Multi Sensor Correlation 85

4.3 SURVEY OF AVAILABLE IDS PACKAGES 86

4.4 OPEN PROBLEMS IN THE DESIGN OF IDS 87

4.4.1 Feature Selection 87

4.4.2 Visualization 88

4.4.3 Predictive Analysis 88

4.5 APPLICATION OF ARTIFICIAL NEURAL

NETWORKS (ANN) IN IDS 89

4.5.1 Bayesian Networks (BN) 92

4.5.2 Naive Bayes (NB) Classification 93

4.6 HYBRID OR ENSEMBLE CLASSIFIERS 96

4.7 CONSTRUCTION OF CLASSIFIER MODEL 97

4.8 MULTI LAYER PERCEPTRONS (MLP) 99

4.9 ARCHITECTURE OF THE MODEL USING SLFN 100

4.9.1 Training Phase 103

4.9.2 Detection Phase 103

4.9.3 Selection of Layers in MLP 103

4.9.3.1 The Input Layer 103

4.9.3.2 The Output Layer 103

4.9.3.3 The Hidden Layers 103

4.10 PROPOSED SLFN ALGORITHM 108

4.11 EXPERIMENTAL RESULTS 108

4.12 SUMMARY 113

5 CHANGE POINT AND OUTLIER DETECTION

FROM NETWORK DATA 114

5.1 INTRODUCTION 114

5.1.1 Signature Detection (SD) 115

5.1.2 Anomaly Detection (AD) 115

5.1.3 Denial of Service (DoS) Detection 116

5.1.4 Data Mining and Machine Learning 117

5.2 MACHINE LEARNING BASED IDS 118

5.3 STATISTICS BASED IDS 121

5.4 MACHINE LEARNING VERSUS STATISTICAL

TECHNIQUES 122

5.5 OUTLIER DETECTION (OD) 122

5.6 PROPOSED ARCHITECTURE OF OUTLIER

DETECTION AND CLASSIFICATION MODULE 127

5.6.1 Dataset Description 130

5.6.2 Proposed Change Point Outlier

Detection (CPOD) Algorithm 131

5.7 STRUCTURE CHART 135

5.8 IMPORTANCE OF SELECTING OPTIMAL

SUBSET OF FEATURES 135

5.9 EXPERIMENTAL RESULTS 137

5.10 SUMMARY 142

6 APPLICATION OF SVM AND RVM

IN DEVELOPING IDS 143

6.1 INTRODUCTION 143

6.2 RELATION BETWEEN DM, ML

AND STATISTICS 145

6.3 SVM TRAINING AND CLASSIFICATION 149

6.4 THE KERNEL MAPPING 153

6.5 RVM TRAINING AND CLASSIFICATION 155

6.6 METHODOLOGY AND PROPOSED

ARCHITECTURE 158

6.6.1 R Statistical Tool 161

6.6.2 RBF Networks 162

6.7 EXPERIMENTAL RESULTS 165

6.8 PERFORMANCE ANALYSIS OF RVM AND SVM 167

7 CONCLUSION 169

7.1 MAJOR CONTRIBUTIONS AND NOVELTY 171

7.2 FUTURE WORK 173

7.3 SIGNIFICANT CHALLENGES AND OPEN ISSUES 174

REFERENCES 175

APPENDIX 1 GLOSSARY OF TECHNICAL TERMS 188

APPENDIX 2 ATTACK DESCRIPTION 190

LIST OF PUBLICATIONS 192

VITAE 193

xiv

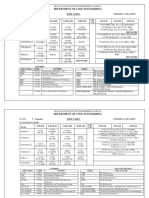

LIST OF TABLES

TABLE NO. TITLE PAGE NO.

2.1 Ideal requirements of IDS 27

2.2 Leading IDS products currently available 29

3.1 Types of attacks generator 69

3.2 List of network dataset attributes selected 74

3.3 Data set statistics for a month 81

4.1 Types of attack classes 110

4.2 Number of examples considered 110

4.3 Comparison results 111

4.4 Result analysis of SLFN v/s Naive Bayesian algorithm 111

5.1 Real time data collected 137

5.2 Average packet rate for one week 138

6.1 The error obtained by the RVM with different

parametric values 166

6.2 The error obtained by the SVM with different

parametric values 166

6.3 Comparison between RVM and SVM models 166

LIST OF FIGURES

FIGURE NO. TITLE PAGE NO.

1.1 Cyber intrusion during Mar 2012 to Sep 2012 2

1.2 General architecture of IDS 7

3.1 General framework of proposed IDS 63

3.2 Framework for packet capture process 64

3.3 Campus network diagram for collecting

normal and attack data 67

3.4 Flow chart for packet capture 72

3.5 Flow chart for the packet preprocessing

and Feature extractor 75

3.6 Traffic pattern in the course of a working day (Monday) 76

3.7 TCP Packet count in the course of a day (Monday) 76

3.8 TCP statistics in a course of a working day (Monday) 77

3.9 UDP Packet count in the course of a day (Monday) 77

3.10 UDP statistics in a course of a working day (Monday) 78

3.11 Number of connections in a course of a working

day (Monday) 78

3.12 Connection statistics in the course of a week 79

3.13 Traffic statistics in the course of a week 79

3.14 Traffic statistics in the course of a month 80

3.15 Average packet count in the course of a day 80

4.1 General block diagram of ANN 90

4.2 A framework of SLFN 99

4.3 Block diagram of the model using SLFN 99

4.4 Flow chart for the training phase of neural networks 102

4.5 Flow chart for the anomaly detection using

neural networks 105

4.6 Initial screen 109

4.7 Packet capture screen 109

4.8 Use of WEKA tool for analysis 110

4.9 ROC Curves for 3 weeks data 112

5.1 Architecture of the model using CP and OD 127

5.2 Flow chart for change point and outlier detection 129

5.3 Structure chart of the research work 135

5.4 Initial packet capture screen 137

5.5 Outlier detected for Table 5.2 data 138

5.6 Plot of real time data and detection of change

point and outliers 139

5.7 Plot of real time data and detection of change

point and outliers 139

5.8 Plot of real time data collected for week 1 140

5.9 Plot of real time data collected for week 2 140

5.10 CUSUM analysis 140

5.11 Standard deviation analysis 141

5.12 Outlier detected for the data given in Table 5.2 141

5.13 Snapshot showing threshold of IP addresses captured 141

6.1 Relation between DM, ML and statistics 145

6.2 (a) Original data in the input space.

(b) Mapped data in the feature space 151

6.3 Architecture of the RVM model 159

6.4 RBF networks 163

6.5 ROC curve for the data 165

6.6 Performance chart of SVM 165

6.7 ROC curve obtained for RVM and SVM models 167

LIST OF SYMBOLS AND ABBREVIATIONS

AD - Anomaly Detection

ADS - Active Directory Service

AI - Artificial Intelligence

AIS - Artificial Immune Systems

ANN - Artificial Neural Networks

ARP - Address Resolution Protocol

BBID - Behavior Based Intrusion Detection

BN - Bayesian Networks

CBR - Case Based Reasoning

CP - Change Point

CUSUM - CUmulative SUM

CV - Coefficient of Variation

DDIS - Distributed Intrusion Detection System

DDoS - Distributed Denial of Service

DHCP - Dynamic Host Configuration Protocol

DM - Data Mining

DNA - Dynamic Network Analysis

DoS - Denial of Service

DR - Detection Rates

DT - Decision Trees

ELM - Extreme Learning Machines

ENN - Evolutionary Neural Network

ERM - Empirical Risk Minimization

ES - Expert Systems

FFNN - Feed Forward Neural Networks

FL - Fuzzy Logic

FN - False Negative

FP - False Positive

FPR - False Positive Rate

FS - Feature Selection

FSM - Finite State Machines

GA - Genetic Algorithms

GLR - Generalized Likelihood Ratio

HIDS - Host - based Intrusion Detection Systems

HMM - Hidden Markov Models

IBL - Instance Based Learning

ICMP - Internet Control Message Protocol

IDES - Intrusion Detection Expert System

IDS - Intrusion Detection Systems

IDS/IPS - Intrusion Prevention and Detection System

IG - Information Gain

ILP - Inductive Logic Procedures

IP - Internet Protocol

IPS - Intrusion Prevention System

IRS - Intrusion Response System

KB - Knowledge Based

KDD - Knowledge Discovery in Databases

KNN - k-Nearest Neighbor

LSSVM - Least Squares SVM

MD - Misuse Detection

MFFNN - Multilayer Feed Forward Neural Networks

ML - Machine Learning

MLP - Multi Layer Perceptron

NA - Network Analyzers

NB - Naive Bayes

NBAD - Network Behavior Anomaly Detection

NIDES - Next-generation Intrusion Detection Expert System

NIDS - Network Intrusion Detection System

NN - Neural Network

OD - Outlier Detection

OSI - Open Systems Interconnection

POD - Ping Of Death

PSVM - Proximal SVM

R2L - Remote to Local

RBF - Radial Basis Function

RBFNN - Radial Basis Function Neural Networks

RBS - Rule Based Systems

RL - Reinforcement Learning

RNN - Recurrent Neural Networks

ROC - Receiver Operating Characteristics

RSVM - Robust SVM

RV - Relevance Vectors

RVM - Relevance Vector Machine

SD - Signature Detection

SLFFN - Single Layer Feed Forward Networks

SLFN - Single-Hidden Layer Feed Forward Neural Network

SOM - Self Organizing Maps

SRM - Structural Risk Minimization

SSH - Secure Shell

SSL - Secure Sockets Layer

SV - Support Vectors

SVM - Support Vector Machine

TCP - Transmission Control Protocol

TN - True Negative

TP - True Positive

U2R - User to Root

UDP - User Datagram Protocol

WEKA - Waikato Environment for Knowledge Analysis

WIPS - Wireless Intrusion Prevention Systems

CHAPTER 1

INTRODUCTION

With the advances in network based technology, the reliable operation of
network based systems plays a prominent role. The ability to detect intruders in
computer systems is important as computers are increasingly integrated into the
systems that we rely on. Internet security is a critical factor in the performance of an
enterprise and affects everything from business to cost management. Catastrophic
Internet attacks can disrupt business operations, and hence security expertise has
become more valuable. Recent research shows that the number of attacks on networks
has increased dramatically, and consequently researchers' interest in the analysis of
network intrusion has increased. Over the past decade and at present, it has become
important to evaluate Machine Learning (ML) techniques for network based
Intrusion Detection Systems (IDS).

Several factors, such as the choice of data set, the method of validation and data
preprocessing, are found to affect detection results significantly. These findings
have also enabled a better interpretation of the current body of research. Due to the
nature of the intrusion detection domain, the classes in a data set are extremely
imbalanced, which poses a significant challenge to ML. Researchers have demonstrated
that well known techniques such as Artificial Neural Networks (ANN) and Decision
Trees (DT) have often failed to learn some classes. This has not previously been
recognized as an issue in intrusion detection, but investigation demonstrates that it
is the class imbalance that causes the poor detection of some classes of intrusion.
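The effect of class imbalance can be illustrated with a small sketch. The label counts below are made up for illustration and are not data from this thesis; the function names are likewise hypothetical. The sketch shows how a degenerate classifier that always answers "normal" can score a high overall accuracy while detecting none of the rare intrusion class.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of all examples that were labelled correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_recall(y_true, y_pred):
    """For each class, the fraction of its examples that were labelled correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Hypothetical, heavily imbalanced data: 990 normal records, 10 U2R records.
y_true = ["normal"] * 990 + ["u2r"] * 10
# A degenerate classifier that labels every record as normal.
y_pred = ["normal"] * 1000

overall = accuracy(y_true, y_pred)
recall = per_class_recall(y_true, y_pred)
print(overall, recall)
```

Under these assumed counts the overall accuracy is high even though no U2R record is detected, which is why per-class detection rates are a more informative measure than overall accuracy when classes are imbalanced.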

The Internet, with its numerous benefits, has also created different ways to
compromise the security and stability of the systems connected to it. Recently,
137,529 incidents were reported to CERT/CC, while in 1999 there were 9,859
reported incidents. According to the Department of Homeland Security, around 86
attacks were reported between October 2011 and May 2012 on computer systems in the
United States that control critical infrastructure, factories and databases, compared
with 11 over the same period a year earlier [1]. Security management operations
protect computer networks against Denial of Service (DoS) attacks, unauthorized
access to critical information, and the modification or destruction of data. The
automated detection and immediate reporting of these unauthorized events are
required to provide a timely response to attacks.

Figure 1.1 Cyber intrusion during Mar 2012 to Sep 2012: CERT-In monthly security bulletin

With the ever growing use of computer technology, computer security
has become important for both work and personal use. People using
computers are at some risk of intrusion, even if the computer is not connected to the
Internet or any other network. An intruder can attempt to access and misuse the system
if the computer is left unattended for a long time. The problem is greater if the
computer is connected to a network, particularly the Internet. Users from around the
world can reach the computer remotely and may attempt to access private or
confidential information, or launch some form of attack to bring the
system to a halt or stop it from functioning effectively.

Section 1.1 provides an introduction to intrusion detection. Related
terminology and definitions used in this thesis are presented in Section 1.2.
Section 1.3 presents an overview of potential intrusions to computers and computer
networks. A taxonomy of IDS is provided in Section 1.4 and Section 1.5, which
includes a discussion of the main approaches to detecting intrusions. The main and
emerging areas of research in intrusion detection are discussed in
Section 1.6.

1.1 INTRUSION DETECTION

Intrusion detection is the process of monitoring and analyzing events that
occur in a computer or networked computer system. Detection is carried out by
analyzing user behavior that conflicts with the intended use of the system.
Any user of a computer is at some risk of intrusion, even when the
computer is not connected to the Internet: if the computer is left unattended, an
intruder can attempt to access and misuse the system. The problem is much
greater if the computer is connected to a network, particularly the Internet, because
any user from around the world can reach the computer remotely. An intruder may
attempt to access important private or confidential information, or launch a form of
attack to bring the system to a halt or stop it from functioning effectively. An
intrusion into a computer system does not need to be executed manually by a person.
It may be executed remotely and automatically with engineered software. A well known
example is the Slammer worm, also known as Sapphire, which performed a
global DoS attack in 2003. The worm exploited a vulnerability in Microsoft's SQL
Server, disabling database servers and overloading networks. Moore et al. [2] refer
to Slammer as the fastest computer worm in history, one that infected many computer
systems around the world within ten minutes. Not only did the Slammer worm
restrict general Internet traffic, it also caused network outages and unforeseen
consequences such as cancelled airline flights, interference with elections, and
communication failures.

Malware is malicious software that intentionally harms a
computer or computer system. Individuals may not have much at stake if they are
targeted by a cyber attack, but such attacks are a serious threat to enterprises and
government organizations. A survey conducted by the Web Application Security
Consortium (2008) revealed that more than 60% of attacks are profit motivated. There
are many examples of cyber attacks in recent news. For example, early in 2010 it was
revealed that the US power grid had been infiltrated by an intruder, who left malware
capable of shutting down the entire grid. Later in the same year, a major spy
network, GhostNet, located in China, was reported to have infiltrated more than
1000 computers around the world. Similarly, the Russian military was accused of
launching DoS attacks against Georgia during the war over South Ossetia. From
these examples, it is clear that cyber attacks are a threat to national security. This
prompted President Barack Obama to initiate a national cyber security body in
the USA in May 2009, followed shortly by the UK.

Several mechanisms can be adopted to increase the security
of computer systems. Three levels of protection are of particular importance, namely
attack prevention, attack avoidance and attack detection, which are explained in
Section 1.3.6.2.

1.2 DEFINITIONS AND TERMINOLOGY

An IDS is software or a hardware device that monitors network traffic in
order to detect unwanted activity and events, such as illegal and malicious traffic
and traffic that violates security policy. IDS employ techniques for modeling and
recognizing intrusive behavior in a computer system. Intrusive behavior is
considered to be any behavior that deviates from the normal, expected use of the
system. IDS share many of the challenges of fraud detection and of fault management
or localization. Although these are not the major focus of the thesis, there is an
overlap between these domains, especially for event correlation. There are many
types of intrusion, which makes it difficult to define intrusion in a single term.

The capability and performance of an IDS are normally measured using the
following terms:

a. True Positive (TP): An intrusion that the system correctly classifies as an
intrusion. The TP rate is also known in the literature as the detection rate,
sensitivity, or recall.

b. False Positive (FP): Normal data that the system incorrectly classifies
as an intrusion. This is also known as a false alarm.

c. True Negative (TN): Normal data that the system correctly classifies
as normal. The TN rate is also known as specificity.

d. False Negative (FN): An intrusion that the system incorrectly classifies
as normal.
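The four counts above are usually combined into rates. The following is a minimal sketch using the standard definitions of these rates; the function name and the example counts are hypothetical and are not results from this thesis.

```python
def ids_metrics(tp, fp, tn, fn):
    """Derive common IDS performance rates from confusion-matrix counts."""
    return {
        # TP rate: share of actual intrusions that were flagged
        "detection_rate": tp / (tp + fn),
        # FP rate: share of normal records that raised a false alarm
        "false_alarm_rate": fp / (fp + tn),
        # TN rate: share of normal records correctly passed as normal
        "specificity": tn / (tn + fp),
        # Share of all records that were classified correctly
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts: 100 intrusions and 900 normal records.
metrics = ids_metrics(tp=90, fp=5, tn=895, fn=10)
print(metrics)
```

Note that the false alarm rate and specificity always sum to one, so reporting the detection rate together with the false alarm rate is sufficient to describe a two-class detector.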

1.3 OVERVIEW OF POTENTIAL INTRUSIONS

There are several types of IDS technologies due to the variance of

network configurations. Each type has advantages and disadvantages in terms of

detection, configuration, and cost.

1.3.1 Network - Based

A Network Intrusion Detection System (NIDS) is a common type of
IDS that examines network traffic at all seven layers of the Open Systems
Interconnection (OSI) model for suspicious activity. NIDS are easy to
deploy on any network and can view traffic from many systems at
once. A newer research area is the Wireless Intrusion Prevention System (WIPS),
which monitors and analyzes the wireless radio spectrum in a network for intrusions
and performs countermeasures.

1.3.2 Network Behavior Anomaly Detection (NBAD)

NBAD analyzes traffic on network segments to determine whether an anomaly
exists in the amount or type of traffic. For example, when an unwanted event occurs,
a segment that normally sees very little traffic may show a change in the amount or
type of traffic. NBAD requires sensors that capture a good snapshot of the network,
and it requires benchmarking and baselining to determine the nominal amount of
traffic on a segment.
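The baselining idea can be sketched as follows. This is an illustrative assumption rather than the method used in this thesis: the nominal traffic of a segment is summarized by the mean and standard deviation of past packet rates, and a new observation is flagged when it deviates from the mean by more than k standard deviations. The sample values, the threshold k = 3 and the function names are hypothetical.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize nominal traffic as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """Flag a value that lies more than k standard deviations from the mean."""
    mu, sigma = baseline
    return abs(value - mu) > k * sigma


# Hypothetical packets-per-second readings taken during normal operation.
nominal_rates = [100, 104, 98, 101, 97, 103, 99, 102]
baseline = build_baseline(nominal_rates)

# One reading close to the baseline and one far above it.
flags = [is_anomalous(rate, baseline) for rate in (101, 250)]
print(baseline, flags)
```

A fixed threshold of this kind is simple but sensitive to the quality of the baseline, which is why the benchmarking step matters.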

1.3.3 Host Based IDS

Host based Intrusion Detection Systems (HIDS) analyze network traffic
and system specific settings such as software calls, local security policy, and local
log audits. A HIDS must be installed on every machine and requires a
configuration specific to that machine's operating system and software.

1.3.4 Types of Attacks

1.3.4.1 DoS Attacks

These attacks interrupt services on a host by preventing it from dealing
with certain requests. A DoS attack can also be one step in a destructive multi stage
attack that crashes a host or prevents it from functioning properly. There are three
types of DoS attacks:

1. Bugs in trusted programs can be used by an attacker to gain
unauthorized access to a computer system. Specific examples of
implementation bugs are buffer overflows, race conditions, and
mishandling of temporary files.

2. Creation of malformed packets that confuse the Transmission
Control Protocol/Internet Protocol (TCP/IP) stack of the machine
that is trying to reconstruct the packet.

3. Fooling a system into granting access by misrepresenting oneself.

1.3.4.2 User to Root (U2R)

These attacks exploit vulnerabilities in operating systems and software to
obtain root or administrator access to the system. Consider, for example, the buffer
overflow attack. A buffer overflow occurs when a program writes more
data into a buffer than the memory allocated for it. This allows an
attacker to overwrite data that controls the program execution path and to seize
control of the program, executing the attacker's code instead of the process code.
Poor programming practices and software bugs are the major risk factors. An effective
solution to the buffer overflow problem is to employ secure coding. While no
security measure is perfect, avoiding programming errors is always the best solution.
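The overwrite described above can be illustrated with a toy simulation. The sketch below is not exploit code and does not touch real process memory: a Python bytearray stands in for a stack frame in which an 8 byte buffer is followed directly by a 4 byte return address. The layout, the sizes and all names are assumptions chosen for illustration.

```python
BUF_SIZE = 8   # assumed size of the buffer in the simulated frame
ADDR_SIZE = 4  # assumed size of the simulated return address


def make_frame():
    """Simulated frame: an 8 byte buffer followed by a 4 byte return address."""
    return bytearray(b"\x00" * BUF_SIZE + b"RET0")


def unsafe_copy(frame, data):
    """Copy with no length check, so long input runs past the buffer."""
    for i, byte in enumerate(data):
        frame[i] = byte


def safe_copy(frame, data):
    """Secure-coding variant: reject input that does not fit the buffer."""
    if len(data) > BUF_SIZE:
        raise ValueError("input longer than buffer")
    frame[0:len(data)] = data


def return_address(frame):
    """Read back the simulated return address stored after the buffer."""
    return bytes(frame[BUF_SIZE:BUF_SIZE + ADDR_SIZE])


frame = make_frame()
original = return_address(frame)
# Twelve bytes of input: eight fill the buffer, four spill into the address.
unsafe_copy(frame, b"A" * BUF_SIZE + b"EVIL")
overwritten = return_address(frame)
print(original, overwritten)
```

In this model the unchecked copy replaces the stored return address with attacker-chosen bytes, while the checked copy rejects the same input, which is the essence of the secure coding remedy mentioned above.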

1.3.4.3 Remote to Local (R2L)

There are some similarities between this class of intrusion and U2R, as
similar kinds of attack may be carried out. In this case the attacker does not have an
account on the host and attempts to obtain local access across a network connection.
To achieve this, the attacker can execute buffer overflow attacks and exploit
misconfigurations. The attacker may also obtain data by misleading a human
operator, rather than by targeting software flaws. These classes may be used in an
IDS for classifying intrusions, rather than only differentiating between normal and
intrusion. This gives more information about the type of intrusion, which may
affect the chosen method of reporting and acting on the suspected detection. Some
known events may be classified as an intrusion on their own, while other events need
to be observed in the context of one or more further events before they are classified
as an intrusion. The related events may be repetitions of the same event or completely
different events; in either case an IDS should be able to recognize simple, single
event attacks as well as complex, multiple event attacks. As an example, the Ping of
Death attack may cause a system to crash by sending oversized ping packets to a host.
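A single event check for the Ping of Death case can be sketched as follows. The sketch relies on two facts of IPv4: a datagram may be at most 65,535 bytes long, and the fragment offset field counts units of 8 bytes. A fragment whose data would end beyond that limit after reassembly is suspicious. The function and parameter names are hypothetical, and a 20 byte header without options is assumed by default.

```python
MAX_IP_DATAGRAM = 65535  # largest total length an IPv4 datagram can have


def exceeds_max_datagram(fragment_offset_units, total_length, header_length=20):
    """Return True if this fragment would reassemble past the IPv4 size limit.

    fragment_offset_units: value of the fragment offset field (8 byte units)
    total_length: total length field of this fragment, header included
    header_length: IP header length in bytes (20 assumed, i.e. no options)
    """
    payload_length = total_length - header_length
    end_of_data = fragment_offset_units * 8 + payload_length
    return header_length + end_of_data > MAX_IP_DATAGRAM


# An ordinary unfragmented echo request: 84 bytes in total.
normal_ping = exceeds_max_datagram(fragment_offset_units=0, total_length=84)
# A final fragment placed near the top of the offset range with 1480 data bytes.
oversized = exceeds_max_datagram(fragment_offset_units=8189, total_length=1500)
print(normal_ping, oversized)
```

This covers only the simple, single event case; recognizing multiple event attacks requires correlating several such observations.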

1.3.5 A General IDS Architecture

In this section the general IDS architecture is discussed. In general the

common building blocks of IDS are as in Figure 1.2.

Figure 1.2 General architecture of IDS

[Figure blocks: Network Sniffers; Log Files; Special Monitoring Module; Network Adapters and Parsers; Data Preprocessing; Diagnosis and Analysis of Data; Post Processing and Expected Alarm class; ALARM]

IDS in general consist of the following components:

1. Data Collection Phase: For accurate detection of intrusions,
reliable and complete data about the target system's activities is
essential. Reliable data collection is a very complex issue, and most
operating systems offer some form of auditing. Data used for
intrusion detection can be collected as:

a. User access patterns: The sequence of commands issued at the

terminal and the resources requested.

b. Network packet level features: Source and destination IP

addresses, type of packets and rate of occurrence of packets.

c. Application and system level behavior: Data collected from the

sequence of system calls generated by a process, which is also

known as audit patterns. These logs might contain security

relevant events, such as failed login attempts or they might log a

complete report on every system call invoked by every process.

Similarly, routers and firewalls provide event logs for network

activity that logs information, such as network connection

openings and closings, or a complete record of each packet. The

amount of system activity information that a system collects is a

tradeoff between overhead and effectiveness. A system that

records every action in detail might substantially degrade the

performance and requires huge disk storage. Collecting

information is an expensive task but collecting the right

information is important. Determining what information to log

and where and how to collect it is an open research problem.

2. Data Preprocessing Phase: This phase is responsible for collecting

and providing the log data in the specified form that will be used by

the Diagnosis phase to make a decision. Data preprocessor is, thus,

concerned with collecting the data from the desired source and

converting it into a format that is comprehensible by the analyzer.

3. Diagnosis and Analysis Phase: The analysis or the intrusion detector

phase is the core component which analyzes the audit patterns to

detect attacks. This is a critical component and one of the most

researched phases. Various pattern matching algorithms, ML, Data

Mining (DM) and statistical techniques can be used as intrusion

detectors. The capability of the analyzer to detect an attack often

determines the strength of the overall IDS.

4. Post Processing and Expected Alarm class: This phase controls the

mechanism to react and determine the best way to respond when the

analyzer detects an attack. The system either raises an alert without

taking any action against the source or blocks the source for a

predefined period of time. This action depends upon the security

policy that is predefined in the IDS.
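
The four phases above can be sketched as a small pipeline. This is only an illustrative sketch, not the implementation used in this thesis: the `Event` record, the `ip,failed_logins` log format and the failed-login threshold are assumptions introduced for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Event:
    """Hypothetical preprocessed record handed to the analyzer."""
    source_ip: str
    failed_logins: int


def preprocess(raw_line: str) -> Event:
    # Data preprocessing phase: convert one raw log line of the assumed
    # form "ip,failed_logins" into a format the analyzer understands.
    ip, count = raw_line.strip().split(",")
    return Event(source_ip=ip, failed_logins=int(count))


def analyze(event: Event, max_failed_logins: int = 5) -> bool:
    # Diagnosis and analysis phase: a deliberately simple, assumed rule.
    return event.failed_logins > max_failed_logins


def respond(event: Event, block: bool) -> str:
    # Post processing phase: the security policy decides between raising
    # an alert only and blocking the source.
    action = "BLOCK" if block else "ALERT"
    return f"{action} {event.source_ip}"


def run_pipeline(raw_lines: Iterable[str], block: bool = False) -> List[str]:
    # Data collection is represented here by the iterable of raw log lines.
    alarms = []
    for line in raw_lines:
        event = preprocess(line)
        if analyze(event):
            alarms.append(respond(event, block))
    return alarms
```

For example, `run_pipeline(["10.0.0.1,2", "10.0.0.2,9"])` raises an alert only for the second source, since only it exceeds the assumed threshold.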

Validating whether the predictions made are correct and correspond to

the actual behavior of IDS implementations is the real challenge. A systematic and

complete validation would require that the predictions made by the approach are

compared with the behavior of actual IDS implementations. Such an activity would

represent an enormous challenge and precisely exemplifies the problem, which the

work attempts to address. It would be required that one or several rather complex

environments are built such that IDS can be analyzed under different conditions.

However, the most challenging aspect of any such undertaking of validation would

be the number and diversity of individual tests to be executed.

1.3.6 Characteristics of IDS

IDS can be classified according to four characteristics:

1. Audit source location : Host based Detection or Network based

detection

2. Detection method : Misuse Detection or Anomaly Detection

3. Behavior of detection : Passive or Active Detection

4. Usage frequency : Real time or Off line Detection

1.3.6.1 Audit Source Location

Normally IDS operates on one of two levels either on a host or a

network. A host based IDS monitors the local behavior on a single host. This is

performed by analyzing status or performance of a system. Similarly application

based IDS detect attacks against specific applications.

Recent research trend is towards network based IDS that analyze network

traffic. Currently there are IDSs that support both host based and network based

intrusion detection. Some of the limitations and challenges of network based IDS

include:

a. Not able to detect all forms of intrusion attacks, since some may not

generate network traffic.

b. Not able to deal with encrypted data via Secure Socket Layer (SSL)

connections and Secure Shell (SSH).

c. Detecting attacks on different operating system platforms.

Some of the limitations of host based IDS are:

a. Analysis of network activity that may be a part of an attack process

on the host.

b. Vulnerability of the system if an attacker obtains root/administrator

access.

From the points discussed, it is clear that the two types of IDS can

complement each other to achieve a broader coverage of detection.

1.3.6.2 Detection Methods

There are two main detection methods:

1. Misuse Detection (MD) or Knowledge Based (KB): Attempts to

detect intrusions by encoding knowledge of known intrusions, typically as rules,

and using this to analyze events.

2. Anomaly Detection (AD) or Behavior Based Intrusion Detection

(BBID): Attempts to learn from the features of event patterns that

constitute normal behavior.

By observing patterns that deviate from established norms the system

detects that an intrusion has occurred. Some IDS offer both capabilities by

hybridization techniques. However, a system may also be modeled according to both

normal and intrusive data, which has become a common approach in recent research

which adopts ML techniques. MD is successful commercially but cannot detect

attacks for which it has not been programmed. It is prone to issue false negatives if

the system is not kept up to date with the latest intrusions. On the other hand MD

systems generally produce few false positives. Currently MD has incorporated

techniques that allow more flexibility and capability of detecting more variations of

attacks. This is made possible with ML techniques such as ANN which are built to

be able to generalize their models of known attacks to classify unseen cases. The

major benefit of AD is the ability to detect new attacks. But with this approach it is

more prone to issuing false positives.
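
The contrast between the two detection methods can be illustrated with a minimal sketch. The signature strings, the z-score test and its threshold are assumptions chosen for the example; they are not the detectors developed in this thesis.

```python
from statistics import mean, stdev

# Hypothetical signatures of known intrusions (misuse detection).
KNOWN_SIGNATURES = {"/etc/passwd", "cmd.exe"}


def misuse_detect(payload: str) -> bool:
    # Flags only what has been encoded in advance, so an unknown attack
    # passes unnoticed (a false negative).
    return any(sig in payload for sig in KNOWN_SIGNATURES)


def anomaly_detect(value: float, baseline: list, z_threshold: float = 3.0) -> bool:
    # Flags any observation far from the learned normal behavior, so an
    # unusual but legitimate value can raise a false positive.
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold
```

With a baseline of roughly ten requests per second, a sudden value of fifty is reported by `anomaly_detect` even though no signature for it exists, whereas `misuse_detect` reports only payloads containing one of the listed strings.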

There are different levels of data sources to model anomaly detection of

which some classifications are based on:

a. Keyboard level: Determination of the key that is hit, time since the

last hit, etc.

b. Command level: Analysis of the commands issued and the sequence

of execution. Researchers also consider output parameters and

arguments that are passed to system calls.

c. Session level: Data is collected to analyze end of session events that

produce data such as length of session, overall CPU usage, memory

and input-output usage, terminal name used, login time. However this

approach may not be suitable to build a real time IDS as the data is

obtained after the user has completed the session. Hence by this time,

the attacker may have completed the intrusion.

d. Group level : Aggregating users into groups.

Based on any of the above levels, AD system may build up several

profiles of users. This can be implemented as either considering one profile per user

or as groups of users who may have particular rights in the system. A challenge of

developing host based anomaly detection systems is to keep IDS up to date with

changes in environment. Continuous training and updating is required to avoid an increase in false

alarms as normal behavior changes, which is also referred to as behavioral drift. It is possible to

model or train an anomaly system over time but one particular issue with this is that

there is a danger of learning intrusive behavior as well.

Three levels of protection may be adopted in computer system namely:

a. Prevention of Attack: Firewalls, user names and passwords, and

user rights.

b. Avoidance of Attack: Encryption and Decryption.

c. Detection of Attack: Using IDS.

Simply by adopting cryptography and protocols it is not possible to

prevent all intrusions and also control the communication between computers.

Firewalls block and filter certain types of data or services from users by enforcing

restrictions, but still they are unable to handle misuse that occurs within the network

or on a host computer. IDS complements detection of malicious behavior by adding

features to the current security mechanisms.

The purpose of IDS is to detect intrusions by analyzing whether the behavior of a

user conflicts with the intended use of the computer, or computer network. This

includes committing fraud, hacking into the system to steal information, conducting

an attack to prevent the system from functioning properly or even system break

down. Earlier the intrusion detection was performed by system administrators,

manually analyzing logs of user behavior and system messages, with poor chances

of being able to detect intrusions in progress. This gradually changed by developing

applications that can automatically analyze the data for the system administrators.

The first IDS to achieve this in real time were developed in the early 1990s. As the

magnitude of data in computer networks is increasing, developing IDS is still a

significant challenge.

Recent work adopts a wide range of Artificial Intelligence (AI)

techniques in IDSs. Rule Based Systems (RBS) were the first to be employed

successfully, and are still used in many IDSs. RBS allows for IDSs to automatically

filter network traffic and/or analyze user data to identify patterns of known

intrusions. Suspected intrusions can be reported to the administrator in detail, together with

the rules that flagged the network misbehavior. The major drawback of

RBSs is that they rely on a set of rigid rules and hence may not be able to detect new

intrusions, or variations of known intrusions. Recent research focuses more on the

hybridization of techniques to improve the detection rates of ML classifiers. ANNs

and DTs have been applied to develop IDS and have become popular.

The need for effective intrusion detection mechanisms as part of a

security mechanism for computer systems was recommended by Denning and

Neumann. They identified four reasons for utilizing intrusion detection within a

secure computing framework:

1. Many existing systems have security flaws which make them

vulnerable. Detection of this is very difficult because of technical and

economic reasons.

2. Secure systems cannot be installed in place of existing system with

security flaws because of application and economic considerations.

3. The development of completely secure systems is probably

impossible as the number of attacks is growing.

4. Highly secured systems are still vulnerable to be misused by

legitimate users.

Even when mechanisms such as cryptography and protocols are adopted to

control the communication between computers and users, it is impossible to prevent

all intrusions. Firewalls serve to block and filter certain types of data or services

from users on a host computer or a network of computers, aiming to stop some

potential misuse by enforcing restrictions. However, firewalls cannot handle any

form of misuse occurring within the network or on a host computer.

1.3.6.3 Behavior of Detection

An active IDS is a system that is configured to automatically block

suspected attacks without any intervention by an analyst. Active IDS have the advantage of

providing a real time response to an attack. A passive IDS is a system that is

configured to monitor and analyze network traffic activity and alert an analyst to

potential vulnerabilities in case of an attack. It does not have any capability of

performing any protective or corrective functions on its own. The major advantage

of passive IDS is that these systems can be easily and rapidly deployed.

1.3.6.4 Usage Frequency

Furthermore, intrusions can occur in traffic that appears normal. IDS will

not replace the other security mechanisms, but complement them by attempting to

detect when malicious behavior occurs. The main purpose of IDS is to detect user

behavior that conflicts with intended use, such as committing fraud, stealing information by hacking,

conducting an attack to prevent the system from functioning properly, or even causing a system break

down. In the early days detection was performed by system administrators, manually

analyzing logs of user behavior and system messages. This led to poor chances of

being able to detect intrusions in progress. Anderson and Denning developed

software that can automatically analyze the data for the system administrators. The

first IDS to achieve this in real time were developed in the early 1990s. However,

due to the increased use of computers, the magnitude of data in contemporary

computer networks still renders this a significant challenge.

Several AI techniques have been adopted in IDS and RBS were the first

to be employed successfully, and are still at the core of many IDS. RBS

automatically filters network traffic and/or analyze user data and identify patterns of

known intrusions. Suspected intrusions are reported to an administrator in a detailed

manner by a set of rules that lead to the detection of intrusion. The drawback of

RBSs is that they are inflexible due to the rigid rules and cannot detect new

intrusions as well as variations of known intrusions.

The Knowledge Discovery in Databases (KDD) Cup 99 data set has

been widely used to evaluate intrusion detection prototypes in the last decade.

Although many researchers apply the same ML techniques to the data set,

contradictory findings have been reported in the literature. Despite the criticisms,

researchers continue to use the data due to a lack of better publicly available

alternatives. Hence, it is important to identify the value of the data set which has

largely been ignored. However by applying ML techniques detection of attacks still

remains a challenging task.

By providing appropriate training it is observed that ANNs are capable of

learning from imbalanced data. Multi Layer Perceptron (MLP) that is commonly

trained by the back propagation algorithm aims to minimize the error of the

classifier by correctly identifying the class(es). Evolutionary Neural Network (ENN)

approach trains MLPs by evolving their weights using Genetic Algorithms (GA).

The ENN can successfully learn from imbalanced data, which demonstrates that

MLPs are capable of detecting the minor classes with more appropriate training.

ENN only offers one solution that may have an inadequate trade off in performance.

It has been identified as a general problem with existing ML algorithms, that they

produce a single solution. Still different solutions may be obtained by changing the

training data set, changing the configuration parameters, or assigning weights to the

classes a priori. Unless the optimal weights are known, such a solution is unlikely to offer the user the

ideal performance.
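
As an illustration of assigning class weights a priori, the sketch below uses a common inverse-frequency heuristic. The heuristic and the function name are assumptions made for the example; the source does not state which weighting scheme, if any, was used, and such weights are not claimed to be optimal.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (number_of_classes * class_count).

    Minority classes receive larger weights, so that their training
    errors count more during learning.
    """
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * n) for cls, n in counts.items()}
```

For a data set with eight normal records and two U2R records, the minority class receives a weight four times larger than the majority class.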

1.4 DETECTION APPROACHES

There are two main approaches to detecting intrusions:

1. Stateful: In this approach attack is considered as being composed of

several events or stages. Event correlation is also considered

synonymous with stateful approach.

2. Stateless: This approach attempts to classify single events as being

an intrusion or not.

Event correlation and stateless intrusion detection are discussed further

followed by a discussion of the advantages and disadvantages of the two approaches.

1.4.1 Stateful (Event Correlation) Intrusion Detection

Stateful refers to processing low level events to identify significant

patterns that can be aggregated into a single higher level event. The principle is to

reduce the number of events and/or increase the semantic level. Aggregated events

will contain more meaning than individual low level events. Stateful approach is the

process of identifying events as forming part of an attack pattern, in which the

aggregated higher level event suggests the type of attack identified.

Event correlation systems may analyze data both spatially and

temporally, building deterministic and/or probabilistic models of intrusions. Spatial

systems analyze events from different sources simultaneously. Temporal systems

consider the order of events and the time between them. For example, event B must

occur within 50 milliseconds after event A has occurred to qualify as an intrusion X.

Rule based systems are commonly used for event correlation. This can be treated as

signature based approach, since the system filters the events according to a set of

rules or signatures to determine the pattern of intrusions. Model based systems such

as Bayesian networks are also available and several non-AI techniques have adopted

event correlation. Some of the popular methods are the Codebook, Finite State

Machines (FSM) and other state based approaches like alert management,

localization, Petri nets, dependency graphs and hyper bipartite networks.
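
The temporal rule mentioned above (event B within 50 milliseconds after event A) can be sketched as a tiny stateful correlator. The event names, the tuple format and the choice to consume a pending A once a B is seen are assumptions made for the example.

```python
def correlate(events, first="A", second="B", window_ms=50):
    """Return (time_of_first, time_of_second) pairs that satisfy the rule.

    events: iterable of (timestamp_ms, name) tuples, sorted by time.
    """
    matches = []
    pending_first = None  # state kept between events
    for ts, name in events:
        if name == first:
            pending_first = ts
        elif name == second and pending_first is not None:
            if ts - pending_first <= window_ms:
                matches.append((pending_first, ts))
            pending_first = None  # the pending A is consumed either way
    return matches
```

The variable `pending_first` is the state that a stateless detector does not keep, which is why the stateful approach needs more memory when many candidate attacks are tracked concurrently.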

1.4.2 Stateless Intrusion Detection

A stateless IDS attempts to classify the data collected from network

connections as intrusive or normal. Stateless intrusion detection is normally adopted

in DM, ML communities treating the intrusion detection problem as a classification

task. The raw data collected from network based IDSs needs to be transformed into

suitable feature vectors. The feature vectors may also include some a priori

knowledge, such as the count feature that contains information about the number of

connections from a particular user for a specific duration.
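
A count feature of this kind can be computed with a sliding time window. The sketch below is illustrative only: the two-second window, the tuple format and the function name are assumptions, not the preprocessing used in this thesis.

```python
from collections import deque


def count_feature(connections, window_s=2.0):
    """For each connection, count connections from the same source that
    fall within the preceding window (including the current one).

    connections: list of (timestamp_s, source) tuples, sorted by time.
    """
    recent = {}  # source -> deque of timestamps still inside the window
    counts = []
    for ts, src in connections:
        q = recent.setdefault(src, deque())
        q.append(ts)
        # Drop timestamps that have fallen out of the window.
        while ts - q[0] > window_s:
            q.popleft()
        counts.append(len(q))
    return counts
```

Each resulting count can be appended to the feature vector of its connection, giving a stateless classifier a limited view of recent history.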

The major drawback of stateless intrusion detection is that multi stage

attacks cannot be detected. Despite this drawback a benefit of this approach is that

they are faster, require less memory, and can offer real time intrusion detection. The

event correlation is complicated by the existence of many possible attacks which

makes it difficult to determine which attacks are in progress. The computational

requirements are more as it is necessary to keep track of many events concurrently.

If implemented with ML, intrusion patterns are learned automatically from the data set

instead of conducting knowledge engineering to produce a rule base for event

correlation. Many other techniques like ANN also offer some flexibility by allowing

detecting variations in an attack. Conventional rule based approaches need a rule for

every single intrusion and variation thereof. Not only does this require a large

knowledge base, but variations of known attacks may go undetected. However such

systems will be able to give accurate information when detecting an intrusion,

contrary to many ML techniques. Another benefit of state based systems is that they

can execute responses for potential intrusions before they are completed.

A challenge to use either of these approaches is updating the system. For

RBS, this involves adding new rules and updating old rules. The problem is that the

knowledge base of rules may grow very large with time and may not scale well. For

some ML techniques, updating may involve comprehensive re-training that involves

a process to gather data concerning new intrusions. Some new techniques are able

to learn continuously online but the danger is that intrusive behavior may also be

learned. Event correlation can be effectively used to develop misuse detection while

stateless approaches provide an opportunity for misuse and anomaly detection. It is

clear that both approaches have certain pros and cons but neither approach can be

said to be better than the other. As with network based and host based intrusion

detection, state based and stateless implementations complement each other.

Recent research is on attacks against the learning phase implemented by

some IDS. When ML techniques are implemented to construct the IDS, the attacker

may try to pollute the learning data on which the IDS are trained. The attack is

launched by forcing the IDS to learn from properly crafted data, so that during the

operational phase the IDS will not be able to detect certain attacks against the

protected network. Once the events are analyzed and attacks are detected IDS

responds as passive or active. In passive response reports are sent to administrators

who will then take action on the matter depending on the severity. In the case of

active response IDS automatically initiates replies to attacks. In the case of passive

response it is possible for an attacker to monitor the email of the organization or to

use a false IP address for initiating an attack. In that case it would be of no use to alert

administrators. Active responses initiate an automatic action that is taken when

certain types of intrusions are detected. Before alerting, additional information may

be collected in order to gain more clues of the possible attack. Another way of active

response is to stop the attack in order to avoid future attacks. Detection of intrusions

can be classified as real time (in line) or off line, the latter meaning not in real time. Some

systems combine both types of detection and are called hybrids.

Given the diverse type of attacks it is really a challenge for any IDS to

detect a wide variety of attacks with very few false alarms in real time environment.

The system must detect all intrusions with broad attack detection coverage and at the

same time resulting in very few false alarms. The system must also be efficient

enough to handle large amounts of data without affecting performance. A high

level of security can be ensured by disabling all resource sharing and

communication between computers. In today's highly networked

computing environment this is not a practical solution, and hence there is a need to

develop better IDS.

1.5 ARTIFICIAL INTELLIGENCE (AI) TECHNIQUES APPLIED

TO IDS

There are several aspects of intrusion detection to be considered when

developing IDS to be deployed in real life, such as:

1. Architecture: The main focus here is on what could be referred to as

a detection module that would exist in a larger IDS framework.

Especially in wireless and mobile ad hoc networks, the architecture is

very important. This includes determining where to deploy the IDS,

which is considered to be a research challenge.

2. Data collection: Many researchers have used the KDD Cup 99 data

set for the work since data collection is not required. It is necessary to

collect data from the environment in which IDS is to be deployed.

This also includes a process of labeling data for supervised learning.

3. Data preprocessing: Data preprocessing is necessary mainly for the

detection methodologies that are suitable for ML. Although the

availability of the data set is very convenient for researchers, the

challenge is in the transformation applied.

4. Performance: There are several methods that can be adopted to help

achieve better performing IDS. This could be in terms of detection

rates, memory usage, feature selection and sampling of data.

Related to the data transformation, different transformations and

feature sets may facilitate to improve intrusion detection. Different

ways of preprocessing the data are available, which may yield

improved performance.

5. Other issues: Detecting new intrusions is always a challenge. This is

due to new software available, which inevitably has vulnerabilities

that can be exploited. Therefore, re-training IDS is necessary once

new data set is available. When and how this is to be done and

whether to have online training or unsupervised learning is still an

open research challenge.

Related to achieving real time intrusion detection, researchers have

investigated several methods of performing feature selection. The major benefit of

feature selection is that the amount of data required to process is reduced, ideally

without compromising the performance of the detector. In some cases, feature

selection may improve the performance of the detector as it simplifies the

problem by reducing its dimensionality. Many different methods are

available for adopting AI based feature selection techniques for intrusion detection.

1.5.1 Change Point (CP)

The CP detection method has been studied extensively by statisticians.

The CP method verifies whether the observed data set is statistically homogeneous. If it

detects a change, the point in time at which it occurs is recorded as a CP. Various

research works address off line data sets. In this setting the entire data is collected first

and then a decision of a CP is made based on the analysis. On the other hand CP can

be applied to real-time or on-line data set where decisions are made on the fly.

In this research work, the attack detection algorithm belongs to the class of real-time

CP detection. The challenge in developing such a system is to reduce memory usage,

computation time and design a model which will collect packets in real time. When

designing dynamic and complex systems that need to work on Internet, it may not be

possible to model the total number of session request arrivals. Hence a robust design

has to be done that is specific to the requirement and can detect different attacks.

Many potential application areas still exist, where it is necessary to consider these

models for developing network security with an early detection of attacks in

computer networks that lead to changes in network traffic. Most of the detection

algorithms are based on the CP detection theory. They compare a test statistic against a

threshold to reduce false alarms. The real challenge in designing the CP

algorithms usually involves optimizing the measures such as average detection delay

and frequency of false alarms.
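
A threshold test of this kind can be illustrated with a one-sided CUSUM statistic, a standard on-line CP method. It is shown here only as an example; the source does not state that CUSUM is the algorithm used in this thesis, and the parameter names and values are assumptions.

```python
def cusum_detect(samples, target_mean, drift, threshold):
    """Return the index of the first sample at which the cumulative sum
    of positive deviations exceeds the threshold, or None.

    target_mean: expected value of the traffic measure under normal load.
    drift:       slack subtracted at every step to absorb small noise.
    threshold:   a higher value gives fewer false alarms but a longer
                 detection delay.
    """
    s = 0.0
    for i, x in enumerate(samples):
        # Accumulate evidence of an upward shift; never drop below zero.
        s = max(0.0, s + (x - target_mean - drift))
        if s > threshold:
            return i
    return None
```

The decision is made on the fly, using one number of state per monitored measure, which suits the memory and computation constraints of real-time detection. The choice of `threshold` expresses the trade-off between average detection delay and frequency of false alarms.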

1.5.2 Decision Trees (DT)

DM techniques can be applied to IDS once the collected data set is

preprocessed and converted to the format suitable for mining process. The data that

is formatted can be used to develop a classification or cluster model. The

classification model can be a rule based, DT based or association rule based. This

model can be used for both misuse detection and anomaly detection, but it is

predominantly used for misuse detection. Classification and clustering are both

similar as they partition the data set into distinct segments called classes. But unlike

clustering, classification analysis requires that the user should have prior knowledge

of how classes are defined. It is also necessary that each dataset used to build the

classifier should have a value for the attribute used to define the classes. The main

objective of a classification model is to explore the data and classify, so from the

new unknown data set it is possible to discover interesting patterns. Classification is

used to assign data set to a pre-defined class and ML technique performs this task by

extracting rules from examples of correctly classified data. Classification models can

be built using a wide variety of algorithms. DT has been used to detect intrusions but

has to be applied by fine tuning the techniques, so that the false alarms are reduced.

They are among the well known ML techniques. A DT is composed of decision

nodes that each specify a test attribute, edges that each correspond to one of the possible

attribute values, and leaves that each contain the class to which the data belongs. The

two major phases of a DT are building the tree and classification, in which tests are repeated until

a leaf is encountered. Several well known algorithms have been developed, of which ID3

and C4.5 are the most popular ones. Information gain is used as a

measure to select the test attribute at each node in the tree, and is referred to as a measure of the

goodness of split. The attribute with the highest information gain is chosen as the test attribute.

This attribute minimizes the information needed to classify the

samples in the resulting partitions. DM techniques will dynamically model and can

use classification techniques to accurately predict probable intrusions.
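
The selection of a test attribute by information gain can be sketched as follows. The attribute names and the toy records in the usage note are hypothetical and serve only to show the calculation.

```python
from collections import Counter
from math import log2


def entropy(labels):
    # Information (in bits) needed to classify a sample drawn from labels.
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, attribute):
    # Entropy before the split minus the weighted entropy of the partitions
    # produced by splitting on the given attribute.
    total = len(labels)
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attribute], []).append(label)
    remainder = sum(len(p) / total * entropy(p) for p in partitions.values())
    return entropy(labels) - remainder


def best_attribute(rows, labels, attributes):
    # The attribute with the highest information gain becomes the test
    # attribute of the node.
    return max(attributes, key=lambda a: information_gain(rows, labels, a))
```

If four records differ in a hypothetical `flag` attribute exactly as they differ in class, while a `protocol` attribute is spread evenly over both classes, then `flag` has a gain of one bit and `protocol` a gain of zero, so `flag` is chosen for the node.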

1.5.3 Feature Selection

Another challenge in developing IDS is to obtain a feature set that is

comprehensive enough to separate normal data from intrusive data while keeping the

size of the data set as small as possible. The more features there are, the more difficult it is to

detect intrusions. For many ML algorithms increasing the number of features may

significantly increase the training time required to learn the intrusion task. Also the

run time will slow down and memory requirements will increase with more features,

commonly referred to as the curse of dimensionality. Hence, much research has

been devoted to developing efficient techniques to perform feature selection.

1.5.4 Support Vector Machines (SVM)

SVM has been extensively used in developing IDS applications. The

main advantages of using SVM are that:

a. Standard optimization methods can be used to find the solution that

maximizes the margin separating two different classes.

b. Training errors are minimized.

SVM permits some training errors, as some training data may not be

linearly separable in the original input space. Using SVM the training

data can be mapped into a feature space in which there exists a hyperplane that can

separate these data with a maximal margin. This is made possible by the

introduction of the kernel method, which is equivalent to a transformation of the vector

space for locating a nonlinear boundary.
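
The effect of such a transformation can be seen on the XOR pattern, which no straight line separates in two dimensions. The sketch below uses an explicit, hand-picked feature map and hyperplane for clarity; a real SVM would find the hyperplane by optimization and would use a kernel function instead of computing the map explicitly. All names and values are assumptions made for the example.

```python
def feature_map(x1, x2):
    # Hypothetical map from the 2-D input space to a 3-D feature space.
    return (x1, x2, x1 * x2)


def decide(x1, x2, w=(0.0, 0.0, -1.0), b=0.0):
    # Linear decision in the feature space: sign of w . phi(x) + b.
    phi = feature_map(x1, x2)
    score = sum(wi * pi for wi, pi in zip(w, phi)) + b
    return 1 if score > 0 else -1
```

With inputs encoded as -1 and +1, the points whose coordinates differ fall on one side of the hyperplane and the points whose coordinates agree fall on the other, so a boundary that is linear in the feature space corresponds to a nonlinear boundary in the input space.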

1.5.5 Relevance Vector Machines (RVM)

A related ML classifier that may be used is the RVM that unlike SVM

incorporates probabilistic output through Bayesian inference. Its decision function

depends on fewer input variables than SVM. This allows for better classification

estimates with small data sets having high dimensionality. In this thesis the RVM is

chosen as a tool for prediction. The RVM is a kernel based learning machine that

has the same functionality as SVM. Its form is a linear combination of data centered

basis functions that are generally nonlinear. The RVM model has been shown to provide

equivalent and often superior results as compared to the SVM. The comparison is

with respect to generalization ability and sparseness of the model.

For the given data set, the process of deploying RVM is as follows:

a. Formulation of model for prediction

b. Finding the new feature vector with the given large data set and

c. Design of a model to generate the lowest prediction error, which is a

real challenge.

In this thesis a comparison of the performance of RVM and SVM, for

classifying network data as normal and attack has been carried out using real

time data.

1.6 AREAS OF RESEARCH

There are several areas of research in the domain of intrusion detection.

The following parameters will help move the field towards looking at developing an

ideal IDS:

a. Accuracy : No false positives.

b. Completeness : No false negatives.

c. Performance : Detection in real time.

d. Fault tolerance : IDS not becoming security vulnerability by

itself.

e. Timeliness : Handle large amount of data. How quickly the

IDS can propagate the information through the

network to react to potential intrusions. This is

also referred to as scalability.
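
Accuracy and completeness, as used above, can be quantified from labeled test data. The sketch below is a minimal illustration; the label strings and the function name are assumptions.

```python
def detection_metrics(actual, predicted, positive="attack"):
    """Compute rates from parallel lists of true and predicted labels."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1  # attack correctly detected
        elif p == positive:
            fp += 1  # normal traffic reported as attack (false alarm)
        elif a == positive:
            fn += 1  # attack missed
        else:
            tn += 1  # normal traffic correctly passed
    return {
        "detection_rate": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (tp + fn) if tp + fn else 0.0,
    }
```

An ideal IDS in the sense of items a and b would report a false positive rate and a false negative rate of zero.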

A large proportion of research in the development of IDS focuses on new

system architectures and detectors to improve the accuracy and completeness of the

IDS. Event correlation is a very important research area, which has been established

well for misuse detection. Related to system architectures, an emerging research area

is intrusion detection in wireless/mobile ad hoc and sensor networks. There is a

trend in applying ML to intrusion detection, which offers flexible detectors and

lends itself conveniently to anomaly detection. It is now common to develop hybrid

systems, which combine misuse and anomaly detectors, host based and network

based modules, and event correlation and stateless detectors. With the increasing

research on hybrid IDS, much of the recent work focuses on effectively correlating

alerts between the different modules. Related to alert correlation, alert aggregation,

which attempts to group similar events into a single generalized event, is also a

focus of recent research. Aggregation can significantly reduce the false positive rate

and the number of alerts a system administrator is required to investigate. Much research

addresses scalability by distributing or decentralizing the IDS. This can be done with

event correlation as well as with other detection mechanisms based on mobile agents

and artificial immune systems. In one such scheme, probes are issued to gather

information about the performance of the distributed system, and Bayesian reasoning

is used to determine how many probes should be issued and how many tests they

should perform. The aim of this scheme is to reduce computational cost and achieve

real time detection. A paradox of intrusion detection is that the

IDS itself may become a security vulnerability. Many researchers suggest that state

based IDSs are more prone to attacks than stateless approaches, as they can be

flooded with events that prevent them from functioning efficiently. Applications

of ML algorithms that learn over time are particularly vulnerable to adversarial

attacks. There is a danger that an adversary may manipulate the training process by

gradually changing behavior over time so that a newly planned attack will not be

detected. Several research papers give an overview of threats to the learning algorithms

themselves and discuss ways to detect and protect against such attacks.
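
The alert aggregation idea mentioned above, grouping similar events into a single generalized event, can be sketched as follows. This is only an illustrative sketch under assumptions: the alert fields (`time`, `src`, `signature`), the fixed time window and the rule "same source and same signature" are hypothetical choices made for this example, not the aggregation scheme of any particular IDS.

```python
def aggregate_alerts(alerts, window_seconds=60):
    """Merge alerts with the same source and signature into one generalized
    event, provided they occur within window_seconds of the group's first alert."""
    groups = []          # generalized events, in order of first appearance
    open_groups = {}     # (src, signature) -> index of the latest group in groups
    for alert in sorted(alerts, key=lambda a: a["time"]):
        key = (alert["src"], alert["signature"])
        idx = open_groups.get(key)
        if idx is not None and alert["time"] - groups[idx]["first_seen"] <= window_seconds:
            # Similar alert inside the window: fold it into the existing event.
            groups[idx]["count"] += 1
            groups[idx]["last_seen"] = alert["time"]
        else:
            # No open event for this key, or the window has expired: start a new one.
            groups.append({
                "src": alert["src"],
                "signature": alert["signature"],
                "first_seen": alert["time"],
                "last_seen": alert["time"],
                "count": 1,
            })
            open_groups[key] = len(groups) - 1
    return groups


# Hypothetical alert stream, for illustration only.
alerts = [
    {"time": 0, "src": "10.0.0.5", "signature": "port-scan"},
    {"time": 10, "src": "10.0.0.5", "signature": "port-scan"},
    {"time": 30, "src": "10.0.0.5", "signature": "port-scan"},
    {"time": 100, "src": "10.0.0.5", "signature": "port-scan"},
    {"time": 5, "src": "10.0.0.9", "signature": "port-scan"},
]
for event in aggregate_alerts(alerts):
    print(event)
```

In this example five raw alerts collapse into three generalized events, which is the reduction in administrator workload that aggregation aims for.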

1.7 CONTRIBUTION AND NOVELTY

The research work presented in this thesis models the IDS with an ensemble

approach using Outlier Detection (OD), CP and RVM. The new hybrid IDS

model combines the individual base classifiers and ML paradigms to

maximize detection accuracy and minimize computational complexity. The results

illustrate that the proposed hybrid system provides a more accurate IDS. A real time

dataset is used in the experiments to demonstrate that RVM can greatly improve the

classification accuracy. The approach achieves a higher detection rate with low false

alarm rates and is scalable for large datasets, resulting in an effective IDS. Several

contributions from researchers have been made to the general ML domain in order to

develop a real time IDS. In this thesis, contributions are made both in intrusion

detection and ML domains.

The system developed aims to:

1. Detect a broad range of attacks rather than only previously known

attacks.

2. Reduce the number of false alarms generated by the IDS, thereby

improving the attack detection accuracy.

3. Work efficiently in real time.

Issues such as scalability, availability of training data, robustness to noise

and feature selection in the training data are also addressed.

1.8 STRUCTURE OF THE THESIS

The remainder of this thesis is organized as follows.

Chapter 2 provides a literature review of IDS. The chapter also reviews

the commonly known attacks and defensive mechanisms.

Chapter 3 provides an introduction to the domain of intrusion detection

followed by a literature review.

Chapter 4 describes the framework that is used to build an effective and

efficient IDS. The framework developed can identify the network features related to

each packet encountered in the network. The real challenge was to manage and

process a large volume of data, recognize misbehavior, keep false alarms low and react in

real time to avert an intrusion.

Chapter 5 describes how ML techniques can be integrated in the

framework along with the experimental results. To demonstrate the feasibility of the

architecture, a prototype implementation has been developed and its performance has

been evaluated on synthetic as well as real intrusion data. This has shown that

applying ML techniques is feasible and yields few false alarms.

Chapter 6 explains how CP, OD and RVM are used to build a

real time IDS. Results obtained from the model provide accurate classification.

RVM may be preferable to SVM as it provides a Bayesian derived probability as an

output. These results suggest that these ML classifiers show good potential for

developing IDS. Our experimental results suggest that by using RVM, attacks can be

detected by analyzing only a small number of events, which results in an efficient

and accurate system.

Chapter 7 concludes and offers possible directions for future research.

CHAPTER 2

LITERATURE SURVEY

A large proportion of research in developing IDS focuses on developing

new system architectures to improve the accuracy and completeness of the IDS.

Several research areas in the domain of IDS help move the field towards

a set of ideal requirements as listed in Table 2.1.

Table 2.1 Ideal requirements of IDS

Requirement       Description
Accuracy          No false positives
Completeness      No false negatives
Performance       Real time detection
Fault Tolerance   IDS not becoming a security vulnerability itself
Timeliness        Handling large amounts of data
Scalability       Quick propagation of information in the network so that the IDS can react to potential intrusions

Intrusion detection procedures are classified into three categories that

differ in the reference data used for detecting unusual activity. Signature

based detection, or MD, relies on signatures of unusual activity. AD

relies on a profile of normal system activity, and protocol based or specification

based detection relies on constraints that characterize the normal behavior of a

particular protocol or program. The trend is to apply ML to IDS, which offers

flexibility for detection and lends itself conveniently to AD. AD operates

on the assumption that attacks differ from normal activity and focuses on

identifying unusual behavior in a host or a network. However, it is now common to

develop hybrid systems, which may combine misuse and anomaly detectors, host

based and network based modules, and event correlation and stateless detectors.

With increasing research on hybrid IDS, recent research focuses on correlating alerts

between the different modules in an efficient manner [3, 4]. Alert aggregation is one

such area in which similar alerts/events are grouped into a single generalized event.

With this method, the amount of data the system administrator must analyze to

detect an intrusion is reduced. Event correlation is another research area, which has

been established well for MD. MD based IDS often use a set of rules or signatures

as the attack model, with each rule usually dedicated to detecting a different attack. Earlier

research work emphasized that data sets for analysis can be obtained from real traffic,

sanitized traffic and simulated traffic [5,6]. In real time operation, however, a response

to external events within an extremely short time is demanded and expected. Therefore, an

alternative algorithm that implements real time learning is imperative for critical

applications in fast changing environments. Even for offline applications, speed is

still a need. A real time learning algorithm that reduces training time and human

effort to nearly zero would always be of considerable value. The advent of new

technologies has greatly increased the ability to monitor changes and resolve their

details for better analysis. Analyzing large amounts of data is still a

challenge. To identify frequently changing trends, data needs to be analyzed and

corrected. In some cases, feature selection may improve the performance of the

detection as it reduces the complexity of the problem by reducing the number of dimensions.

Researchers have proposed several methods of feature selection to achieve real time

IDS. The major benefit of feature selection is that the amount of data to be

processed is significantly reduced, without compromising the performance of the

detection.
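
The effect of feature selection on data volume can be shown with a small sketch. It uses a simple variance filter, chosen here only because it is easy to follow; it is an assumed stand-in and not one of the feature selection methods proposed in the cited literature or used in this thesis. The function name, threshold and sample records are hypothetical.

```python
from statistics import pvariance


def select_features(rows, min_variance=1e-3):
    """Keep only the columns whose population variance exceeds min_variance.

    A column that is (almost) constant across all records carries little
    information for separating normal traffic from attacks, so dropping it
    reduces the number of dimensions to be processed.
    """
    columns = list(zip(*rows))  # transpose: one tuple per feature
    kept = [i for i, col in enumerate(columns) if pvariance(col) > min_variance]
    reduced = [[row[i] for i in kept] for row in rows]
    return kept, reduced


# Hypothetical connection records with three numeric features each.
records = [
    [1.0, 0.0, 5.0],
    [1.0, 1.0, 7.0],
    [1.0, 0.0, 9.0],
]
kept, reduced = select_features(records)
print(kept)      # indices of the retained features
print(reduced)   # records restricted to those features
```

Here the first feature is constant and is dropped, so each record shrinks from three values to two while the varying features are preserved.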

2.1 CURRENT IDS PRODUCTS

IDS can be classified according to many different features [7,8].

Table 2.2 lists some of the currently available IDS products and their features.

Table 2.2 Leading IDS products currently available

Name Description

SNORT

An open source network Intrusion Prevention and Detection

System (IDS/IPS). SNORT, developed by Sourcefire,

combines the benefits of signature, protocol and anomaly

based inspection. SNORT is one of the most widely deployed

IDS/IPS technologies worldwide.

COUNTERACT

Delivers a unique approach to preventing network

intrusions. The system stops attackers based on their

proven intent to attack. It does not use signatures, AD or

pattern matching of any kind. To launch an attack, an

attacker needs knowledge about a network's resources. Prior

to an attack, intruders compile vulnerability and configuration

information by scanning and probing. This information is

used to launch attacks based on the unique structure and

characteristics of the targeted network. This behavior

of intruders is used by COUNTERACT to prevent

intrusions.

AIRMAGNET

Provides a simple, scalable WLAN monitoring solution. This

enables an organization to proactively mitigate all types of

wireless threats.

BRO IDS

An open source, Unix based NIDS. Bro will passively