International Journal of Electronics and Communication Engineering & Technology (IJECET)
ISSN 0976-6464 (Print), ISSN 0976-6472 (Online), Volume 5, Issue 3, March (2014), pp. 34-42
IAEME: www.iaeme.com/ijecet.asp
Journal Impact Factor (2014): 7.2836 (Calculated by GISI), www.jifactor.com

MATLAB BASED MOTION ESTIMATION AND COMPRESSION IN VIDEO FRAMES USING TRUE MOTION TRACKER

Rekhanshi Raghava¹ and Dr. Anil Kumar Sharma²
¹M. Tech. Scholar, ²Professor & Principal
Department of Electronics & Communication Engineering, Institute of Engineering & Technology, Alwar-301030 (Raj.), India

ABSTRACT

Motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another, usually between adjacent frames in a video sequence. The motion vectors may relate to the whole image (global motion estimation) or to specific parts, such as rectangular blocks, arbitrarily shaped patches, or even individual pixels. Motion can include rotation and translation in all three dimensions as well as zoom. We are concerned with the "projected motion" of 3D objects onto the 2D plane of an imaging sensor. By motion estimation, we mean the estimation of the displacement (or velocity) of image structures from one frame to another in a time sequence of 2D images.

Motion estimation is a video compression technique that exploits the temporal redundancy of a video sequence. Successive pictures in a video sequence tend to be highly correlated: consecutive frames are similar except for the changes induced by objects moving within them, so the arithmetic difference between these pictures is small. Objects in motion, in contrast, increase the arithmetic difference between frames, which means that more bits are required to encode the sequence. For this reason, motion estimation is used to determine the displacement of the objects.

In motion estimation and compensation, the encoder creates a model by modifying one or more reference frames to match the current frame as closely as possible. The current frame is motion compensated by subtracting the model from it to produce a motion-compensated residual frame. This residual is coded and transmitted, along with the information the decoder needs to recreate the model (typically a set of motion vectors). At the same time, the encoded residual is decoded and added to the model to reconstruct a decoded copy of the current frame (which may not be identical to the original frame because of coding losses). This reconstructed frame is stored for use as a reference frame in further predictions.

Keywords: BMA, DFD, JPEG, MPEG, TMT.

1. INTRODUCTION

With the advent of the multimedia age and the spread of the Internet, video storage on CD/DVD and streaming video have gained a lot of popularity. The ISO Moving Picture Experts Group (MPEG) video coding standards pertain to compressed video storage on physical media such as CD/DVD, whereas the International Telecommunication Union (ITU) addresses real-time point-to-point or multi-point communications over a network. The former has the advantage of higher bandwidth for data transmission. In either standard, the basic flow of the compression-decompression process is largely the same. The encoding side estimates the motion in the current frame with respect to a previous frame. A motion-compensated image for the current frame is then built from blocks of the previous frame. The motion vectors of the blocks used for motion estimation are transmitted, and the difference between the compensated image and the current frame is JPEG encoded and sent as well. The encoded image that is sent is then decoded at the encoder and used as a reference frame for subsequent frames. The decoder reverses the process and creates a full frame. The whole idea behind motion-estimation-based video compression is to save bits by sending JPEG-encoded difference images, which inherently have less energy and can be compressed far more than a full JPEG-encoded frame. Motion JPEG, where all frames are JPEG encoded, achieves compression ratios between 10:1 and 15:1, whereas MPEG can achieve a compression ratio of 30:1.

The algorithm implemented here is Exhaustive Search (ES). Also known as Full Search, it is the most computationally expensive block matching algorithm of all. It calculates the cost function at each possible location in the search window. As a result, it finds the best possible match and gives the highest PSNR of any block matching algorithm. The obvious disadvantage of ES is that the larger the search window gets, the more computations it requires. This work implements an integrated perspective of ES and true motion estimation.

Taking pictures of a 3D real-world scene generates sequences of video images. When an object in the three-dimensional real world moves, there are corresponding changes in the brightness, or luminance intensity, of its two-dimensional image. The physical three-dimensional motion projected onto the two-dimensional image space is referred to as true motion. The ability to track true motion by observing changes in luminance intensity is critical to many video applications.

2. MOTION ESTIMATION

A video sequence can be considered a discretized three-dimensional projection of the real four-dimensional continuous space-time. Objects in the real world may move, rotate, or deform. These movements cannot be observed directly; what can be observed are the changes between frames, which are mainly due to the movement of these objects. Using a model of the motion of objects between frames, the encoder estimates the motion that occurred between the reference frame and the current frame. This process is called motion estimation (ME). The encoder then uses this motion model and information to move the contents of the reference frame to provide a better prediction of the current frame. This process is known as motion compensation (MC), and the prediction so produced is called the motion-compensated prediction (MCP) or the displaced frame (DF). In this case, the coded prediction error signal is called the displaced-frame difference (DFD). A block diagram of a motion-compensated coding system is shown in Fig. 2. This is the most commonly used interframe coding method.

The underlying supposition behind motion estimation is that the patterns corresponding to objects and background in a frame of a video sequence move within the frame to form corresponding objects in the subsequent frame.
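The relationship between the motion-compensated prediction, the displaced-frame difference, and the reconstructed frame can be sketched in a few lines. This is only an illustrative sketch in plain Python, not the authors' MATLAB implementation: the function names are hypothetical, frames are assumed to be 2D lists of integers, and the residual is assumed to pass through losslessly (no JPEG coding step).

```python
def motion_compensate(reference, vectors, block):
    """Build the motion-compensated prediction (MCP) of a frame.

    reference: 2D list of pixel values (the previous, already decoded frame).
    vectors:   dict mapping a block's top-left corner (row, col) to its
               motion vector (dy, dx), pointing into the reference frame.
    block:     block side length in pixels.
    """
    height, width = len(reference), len(reference[0])
    prediction = [[0] * width for _ in range(height)]
    for (row, col), (dy, dx) in vectors.items():
        for i in range(block):
            for j in range(block):
                prediction[row + i][col + j] = reference[row + dy + i][col + dx + j]
    return prediction


def displaced_frame_difference(current, prediction):
    """Prediction error (DFD): the current frame minus its prediction."""
    return [[c - p for c, p in zip(cur_row, pred_row)]
            for cur_row, pred_row in zip(current, prediction)]


def reconstruct(prediction, residual):
    """Decoder side: add the decoded residual back onto the prediction."""
    return [[p + r for p, r in zip(pred_row, res_row)]
            for pred_row, res_row in zip(prediction, residual)]
```

With a lossless residual, `reconstruct(prediction, displaced_frame_difference(current, prediction))` returns the current frame exactly; with a lossy residual coder the result would only approximate it, which is why the encoder keeps its own decoded copy as the next reference.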
The idea behind block matching is to divide the current frame into a matrix of macro blocks, each of which is compared with the corresponding block and its adjacent neighbours in the previous frame to create a vector that stipulates the movement of the macro block from one location to another in the previous frame. This movement, calculated for all the macro blocks comprising a frame, constitutes the motion estimated in the current frame. The search area for a good macro block match is constrained to p pixels on all four sides of the corresponding macro block in the previous frame.
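The block-matching search described above can be sketched as follows. This is a minimal plain-Python illustration of an exhaustive search, under the assumptions that frames are 2D lists, that the cost is the mean absolute difference, and that a motion vector (dy, dx) points from the block's position in the current frame to the matching position in the previous frame. The names `block_cost` and `full_search` are hypothetical.

```python
def block_cost(current, reference, row, col, ref_row, ref_col, n):
    """Mean absolute difference between two n-by-n blocks."""
    total = 0
    for i in range(n):
        for j in range(n):
            total += abs(current[row + i][col + j]
                         - reference[ref_row + i][ref_col + j])
    return total / (n * n)


def full_search(current, reference, row, col, n=16, p=7):
    """Return (best_vector, best_cost) for the block at (row, col).

    Every displacement (dy, dx) with |dy| <= p and |dx| <= p is tried;
    candidates that would fall outside the reference frame are skipped.
    """
    height, width = len(reference), len(reference[0])
    best_vector, best_cost = (0, 0), float("inf")
    for dy in range(-p, p + 1):
        for dx in range(-p, p + 1):
            r, c = row + dy, col + dx
            if r < 0 or c < 0 or r + n > height or c + n > width:
                continue
            cost = block_cost(current, reference, row, col, r, c, n)
            if cost < best_cost:
                best_vector, best_cost = (dy, dx), cost
    return best_vector, best_cost
```

Because every candidate inside the window is evaluated, the work per block grows with the square of the search range, which is the cost that faster search patterns try to avoid.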

Fig. 1: Motion estimation process

The bound p on the search area is called the search parameter. Larger motions require a larger p, and the larger the search parameter, the more computationally expensive the process of motion estimation becomes. Usually the macro block is taken as a square of side 16 pixels, and the search parameter p is 7 pixels. The matching of one macro block with another is based on the output of a cost function. The macro block that results in the least cost is the one that most closely matches the current block. There are various cost functions, of which the most popular and least computationally expensive is the Mean Absolute Difference (MAD), given by equation (i). Another cost function is the Mean Squared Error (MSE), given by equation (ii), where N is the side of the macro block, and C_ij and R_ij are the pixels being compared in the current macro block and the reference macro block, respectively. The Peak Signal-to-Noise Ratio (PSNR), given by equation (iii), characterizes the motion-compensated image that is created using motion vectors and macro blocks from the reference frame.

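$$\mathrm{MAD} = \frac{1}{N^2} \sum_{i=0}^{N-1} \sum_{j=0}^{N-1} \left| C_{ij} - R_{ij} \right| \tag{i}$$

$$\mathrm{MSE} = \frac{1}{N^2} \sum_{i=0}^{N-1} \sum_{j=0}^{N-1} \left( C_{ij} - R_{ij} \right)^2 \tag{ii}$$

$$\mathrm{PSNR} = 10 \log_{10} \left[ \frac{(\text{peak-to-peak value of original data})^2}{\mathrm{MSE}} \right] \tag{iii}$$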
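The three measures can be written directly from their definitions. The sketch below is plain Python with hypothetical function names. It assumes blocks and frames are 2D lists, and it takes the peak-to-peak value as 255, which holds for 8-bit data but is an assumption rather than something stated in the text.

```python
import math


def mad(current_block, reference_block):
    """Mean absolute difference of two N-by-N blocks, equation (i)."""
    n = len(current_block)
    total = sum(abs(c - r)
                for cur_row, ref_row in zip(current_block, reference_block)
                for c, r in zip(cur_row, ref_row))
    return total / (n * n)


def mse(current_block, reference_block):
    """Mean squared error of two N-by-N blocks, equation (ii)."""
    n = len(current_block)
    total = sum((c - r) ** 2
                for cur_row, ref_row in zip(current_block, reference_block)
                for c, r in zip(cur_row, ref_row))
    return total / (n * n)


def psnr(original, compensated, peak=255):
    """PSNR in dB of a motion-compensated frame, equation (iii)."""
    rows, cols = len(original), len(original[0])
    squared = sum((o - c) ** 2
                  for orig_row, comp_row in zip(original, compensated)
                  for o, c in zip(orig_row, comp_row))
    error = squared / (rows * cols)
    if error == 0:
        return float("inf")  # identical frames: no noise to measure
    return 10 * math.log10(peak ** 2 / error)
```

MAD needs only subtractions, absolute values, and additions per pixel, whereas MSE adds a multiplication, which is why MAD is the cheaper of the two.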

Fig. 2. Motion Compensated Video Coding

3. MOTION ESTIMATION ALGORITHMS IN VIDEO

There are two kinds of motion estimation algorithms: the first identifies the true motion of a pixel (or a block) between video frames, and the second removes temporal redundancies between video frames.

Tracking the true motion: The first kind of motion estimation algorithm aims to accurately track the true motion of objects or features in video sequences. Video sequences are generated by projecting a 3D real world onto a series of 2D images (e.g., using a CCD). When objects in the 3D real world move, the brightness (pixel intensity) of the 2D images changes correspondingly. The 2D motion projected from the movement of a point in the 3D real world is referred to as the true motion. For example, Fig. 3(a) and (b) show two consecutive frames of a ball moving upright, and Fig. 3(c) shows the corresponding true motion of the ball. Computer vision, the goal of which is to identify the unknown environment via the moving camera, is one of the many potential applications of true motion.

Removing temporal redundancy: The second kind of motion estimation algorithm aims to remove temporal redundancy in video compression. In motion pictures, similar scenes exist between a frame and its previous frame. To minimize the amount of information to be transmitted, block-based video coding standards (such as MPEG and H.263) encode the displaced difference block instead of the original block. The residue (difference) is coded together with the motion vector. Since the actual compression ratio depends on the removal of temporal redundancy, conventional block-matching algorithms use minimal residue as the criterion for finding the motion vectors.

Fig. 3: (a) and (b) show two consecutive frames of a ball moving upright, and (c) shows the true motion, i.e., the physical motion in the 2D images.

Although minimal-residue motion estimation algorithms are good at removing temporal redundancy, they are not sufficient for finding the true motion vector. When two candidate motion vectors give the same minimal residue, a motion estimation algorithm for removing temporal redundancy is satisfied with either of them, whereas motion estimation for tracking the true motion aims to find the single correct one. In general, motion vectors for the minimal residue, though good for redundancy removal, may not actually be the true motion.
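A small one-dimensional example illustrates why the minimal residue alone cannot identify the true motion. The sketch below is a constructed illustration, not taken from the paper: it assumes a periodic texture (period 4) that moves one pixel to the right, and uses hypothetical helper names. Several displacements then give exactly the same residue.

```python
def residue(current, previous, start, size, d):
    """Sum of absolute differences for displacement d of a 1D block."""
    return sum(abs(current[x] - previous[x + d])
               for x in range(start, start + size))


def minimal_residue_vectors(current, previous, start, size, p):
    """All displacements in [-p, p] that reach the smallest residue."""
    costs = {d: residue(current, previous, start, size, d)
             for d in range(-p, p + 1)}
    best = min(costs.values())
    return [d for d in sorted(costs) if costs[d] == best]


# A texture with period 4; the whole row moves one pixel to the right,
# so current[x] == previous[x - 1] and the true displacement is -1.
previous = [0, 5, 9, 5] * 4
current = previous[-1:] + previous[:-1]

candidates = minimal_residue_vectors(current, previous, start=6, size=4, p=5)
```

Here `candidates` contains the true displacement together with its aliases one period away, all with zero residue. A coder that only minimizes residue may pick any of them, while a true motion tracker needs additional information, such as the motion of neighbouring blocks, to choose among them.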

Fig. 4: (a) A 2D image comes from the projection of a 3D real world; here, we assume a pinhole camera is used. (b) The 2D projection of the movement of a point in the 3D real world is referred to as the true motion.

4. TRUE MOTION TRACKER AND VIDEO COMPRESSION

Video compression can make use of the true motion tracker (TMT) in various forms, such as rate-optimized motion vector coding, object-based video coding, and object-based global motion compensation [19, 24, 52]. In this paper, we demonstrate that the proposed TMT can provide higher coding efficiency and better subjective visual quality than conventional minimal-residue block-matching algorithms. Video compression plays an important role in many multimedia applications, from video conferencing and video phones to video games. The key to achieving compression is to remove temporal and spatial redundancies in video images. Block-matching motion estimation algorithms (BMAs) have been widely exploited in various international video compression standards to remove temporal redundancy.

For differentially encoded motion vectors, we observe that a piecewise continuous motion field reduces the bit rate. Hence, we propose a rate-optimized motion estimation algorithm based on the neighbourhood-relaxation TMT. The unique features of this algorithm come from two parts: (1) we incorporate the number of bits for encoding motion vectors into the minimization criterion, and (2) instead of counting the actual number of bits for motion vectors, we approximate the number of bits by the residues of the neighbourhood.

In addition, we present a motion-compensated frame-rate up-conversion scheme using the decoded motion. Such use of the decoded motion saves computation on the decoder side. The more accurate the motion information is, the better the performance of frame-rate up-conversion will be.
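The rate-optimized criterion described above, in which a block's own residue is combined with the residues of its neighbourhood as a stand-in for motion-vector bits, can be sketched as follows. This is a simplified illustration and does not attempt to reproduce the authors' exact formulation: the function name, the data layout, and the `weight` parameter are assumptions, and every neighbour is evaluated at exactly the same candidate vector.

```python
def relaxed_vector(residues, neighbours, block, weight=0.5):
    """Pick the vector minimising own residue + weight * neighbours' residues.

    residues:   dict block_id -> dict vector -> residue of that block when
                it is displaced by that vector.
    neighbours: dict block_id -> list of adjacent block ids.
    weight:     hypothetical factor controlling the neighbours' influence.
    """
    def score(vector):
        own = residues[block][vector]
        shared = sum(residues[nb][vector] for nb in neighbours[block])
        return own + weight * shared

    return min(residues[block], key=score)


# Block "B" alone slightly prefers (0, 3), but both neighbours fit (0, 1).
residues = {
    "A": {(0, 1): 4, (0, 3): 30},
    "B": {(0, 1): 10, (0, 3): 9},
    "C": {(0, 1): 4, (0, 3): 30},
}
neighbours = {"B": ["A", "C"]}

minimal_residue_choice = min(residues["B"], key=residues["B"].get)
relaxed_choice = relaxed_vector(residues, neighbours, "B")
```

In this example the minimal-residue choice for block B is (0, 3), while the relaxed choice is (0, 1), which agrees with the neighbouring blocks. A vector that agrees with its neighbours produces a smoother motion field, which is what makes differentially coded motion vectors cheaper.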
Since more accurate motion improves frame-rate up-conversion, using the true motion vectors for compression results in better picture quality after frame-rate up-conversion than using the motion vectors estimated by minimal-residue block-matching algorithms (BMAs). We use a motion-vector refinement scheme in which small changes to the estimated motion vectors are allowed, to increase the precision of motion vectors that are already judged to be correct.

The use of multiple resolutions in the recognition process is computationally and conceptually interesting. In the analysis of signals, it is often useful to observe a signal in successive approximations. For instance, in pattern recognition applications the vision system attempts to classify an object from a coarse approximation. If the classification does not succeed, additional details are added so that a more accurate view of the object is obtained. This process can be continued until the object has been recognized.

Multiresolution Technique with Different Image Sizes for Previous Frame: Reducing the number of search positions and the number of pixels in the residual calculation can also reduce computation. Multiresolution motion estimation algorithms rely on predicting an approximate large-scale motion vector in a coarse-resolution video and refining the estimated motion vector in a multiresolution fashion to obtain the motion vector at the finer resolution. The size of the image is smaller at a coarser level (i.e., the levels form a pyramid). Since a block at the coarser level represents a larger region than a block with the same number of pixels at the finer level, a smaller search area can be used at coarser levels. In addition, multiresolution motion estimation algorithms reduce the number of pixels in the residual calculation. These algorithms can be further divided into two groups: constant block size and variable block size.

(i) Constant block size: The same block size is used at each level. If the image size is reduced to half as the level becomes coarser, one block at a coarser level covers four corresponding blocks at the next finer level. In this way, the motion vector of the coarser-level block is either directly used as the initial estimate for the four corresponding finer-level blocks or interpolated to obtain four motion vectors at the finer level.

(ii) Variable block size: Different block sizes are employed at each level to maintain a one-to-one correspondence between blocks in different levels. As a result, the motion vector of each block can be used directly as an initial estimate for the corresponding block at the finer level.
Multiresolution Technique with Same Image Size for Previous Frame: Instead of reducing the number of search locations, this multiresolution method trades the number of search locations for better estimation quality. It uses different image resolutions in a pyramid form, but with the same image size at every level. Since the same image size is used at each level, the number of possible motion candidates is the same at each level. The block size is not the same at each level and is reduced by half as the level becomes coarser. A block at the coarser level represents the same region as the corresponding block at the finer level. At the coarsest level, a set of motion candidates is selected from the maximum motion candidate set using a full search with fewer pixels in the residual calculation. At each finer level, the motion candidate set is screened further. At the last level, only a single motion vector is selected.

Fig. 5: 3-level multiresolution motion estimation scheme. The first-level images are the images at the original resolution. The second-level images have a quarter of the resolution of the first level (a pixel in the second level corresponds to the low-pass filtering of four pixels at the corresponding position). The third-level images have a quarter of the resolution of the second level.
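The three-level pyramid in Fig. 5 can be sketched as follows. The caption does not specify the low-pass filter, so this sketch assumes a simple mean over each 2x2 group of pixels. The function names are hypothetical, and image dimensions are assumed to be divisible by four.

```python
def downsample(image):
    """Halve width and height: each output pixel is the mean of a 2x2 group."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [[(image[2 * r][2 * c] + image[2 * r][2 * c + 1]
              + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4
             for c in range(cols)]
            for r in range(rows)]


def build_pyramid(image, levels=3):
    """Return [level 1, level 2, ...], from original to coarsest resolution."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid
```

Each level holds a quarter of the pixels of the level before it, so a residual computed over the same scene region at the third level touches one sixteenth of the pixels it would at the first level.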

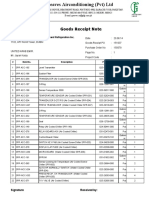

Fig. 6: Three different images of the Foreman series under multiresolution compression

5. SIMULATIONS AND RESULT ANALYSIS

We perform our simulations under the MPEG-4 test conditions shown in Table 1, where each sequence has 300 frames. These sequences cover a wide range of motion contents and have various formats, including QCIF and CIF. The original frame rate is 30 frames per second (fps). They have been tested at various bit rates (10 to 1024 kilobits per second, or Kbps) and sub-sampled frame rates (7.5 to 30 fps). When the coding bit rate is lower than 512 Kbps, only the first frame in each sequence is coded as an I frame and all the remaining frames are coded as P frames. At high bit rates (512 Kbps and 1024 Kbps) ... Search range means that the search is performed within a square region of [-P, +P] around the position of the current block. For comparison, the performances of FS, DS, and ARPS are reported as follows. The average peak signal-to-noise ratio (PSNR) per frame of each reconstructed video sequence is computed for quality comparison and documented in Table 2. Fig. 7 shows the simulation graph for the algorithms discussed.

Table 1: MPEG-4 test conditions

| Video | Format | Bit rate (Kbps) | Frame rate (fps) | Search range |
|---|---|---|---|---|
| Mother-Daughter | QCIF | 24 | 10 | 16 |
| Foreman | CIF | 512 | 15 | 32 |
| Foreman | CIF | 1024 | 30 | 32 |
| Coast guard | QCIF | 48 | 10 | 16 |
| Coast guard | CIF | 112 | 10 | 16 |

Fig. 7: Frame-based PSNR performance of FS, DS and ARPS in Foreman. Fast camera panning with scene change happens during frames 160-220.

Compared with FS (Full Search), ARPS (Adaptive Rood Pattern Search) greatly improves the search speed, with a computational gain in the range of 94 to 447. ARPS maintains PSNR performance similar to that of FS in most sequences, with less than 0.12 dB degradation (except 0.23 dB in Coastguard at 112 Kbps and 0.49 dB in Foreman at 512 Kbps). Compared with DS, ARPS is consistently around 2 times faster with similar PSNR. Even for difficult test sequences such as Foreman and Coastguard, where large and/or complex motion contents are involved, ARPS still achieves PSNR superior to that of DS, by 0.27 dB and 0.38 dB, respectively, as described in Tables 2 and 3.

Table 2: Average PSNR (dB) performance of FS, DS and ARPS

| Video | FS | DS | ARPS |
|---|---|---|---|
| Mother-Daughter (24) | 34.82 | 34.76 | 34.61 |
| Foreman (512) | 35.01 | 34.26 | 34.50 |
| Foreman (1024) | 35.70 | 35.12 | 35.40 |
| Coast guard (48) | 28.88 | 28.72 | 28.77 |
| Coast guard (112) | 27.05 | 26.44 | 26.82 |
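The quoted speed-up figures can be checked against the search-point counts reported in Table 3. The short sketch below assumes the flattened Table 3 values are read column by column (FS, then DS, then ARPS, in the same video order as Table 2), and defines computational gain as the ratio of average search points per motion vector. Both are assumptions of this sketch.

```python
# Average number of search points per motion vector, from Table 3
# (column assignment assumed: FS, DS, ARPS).
search_points = {
    "Mother-Daughter (24)": {"FS": 1024, "DS": 13.84, "ARPS": 6.12},
    "Foreman (512)": {"FS": 4096, "DS": 22.58, "ARPS": 11.76},
    "Foreman (1024)": {"FS": 4096, "DS": 18.54, "ARPS": 9.16},
    "Coast guard (48)": {"FS": 1024, "DS": 17.46, "ARPS": 8.78},
    "Coast guard (112)": {"FS": 1024, "DS": 20.77, "ARPS": 10.82},
}


def gain(points, baseline, method="ARPS"):
    """Ratio of baseline search points to those of the faster method."""
    return points[baseline] / points[method]


gain_over_fs = {video: gain(p, "FS") for video, p in search_points.items()}
gain_over_ds = {video: gain(p, "DS") for video, p in search_points.items()}

for video in search_points:
    print(f"{video}: {gain_over_fs[video]:.1f}x vs FS, "
          f"{gain_over_ds[video]:.2f}x vs DS")
```

Under these assumptions the gain over FS spans roughly 94.6 to 447.2, and the gain over DS stays close to 2, in line with the figures quoted above.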

ARPS is a simple, fast block-matching algorithm. By exploiting the higher distribution of MVs in the horizontal and vertical directions and the spatial inter-block correlation, ARPS adaptively uses an adjustable rood-shaped search pattern (which is powerful in tracking the motion trend), together with the search point indicated by the predicted MV, to match the different motion contents of the video sequence for each macro block.

Table 3: Average number of search points per MV generation

| Video | FS | DS | ARPS |
|---|---|---|---|
| Mother-Daughter (24) | 1024 | 13.84 | 6.12 |
| Foreman (512) | 4096 | 22.58 | 11.76 |
| Foreman (1024) | 4096 | 18.54 | 9.16 |
| Coast guard (48) | 1024 | 17.46 | 8.78 |
| Coast guard (112) | 1024 | 20.77 | 10.82 |

6. CONCLUSION

This work has explored the theory of true motion tracking in digital video with respect to its applications. We have examined basic features of true motion estimation algorithms. This true motion tracker has a number of advantageous properties when applied to motion analysis: dependable tracking (the neighbourhood helps to single out erroneous motion vectors), motion flexibility (the relaxation helps to accommodate non-translational motion), and high implementation efficiency (99% of the computations are integer additions). Consequently, it may be used as a cost-effective motion estimation algorithm for video coding, video interpolation, and video-object analysis.

REFERENCES

[1] Z. Zhang and O. D. Faugeras, Three-dimensional motion computation and object segmentation in a long sequence of stereo frames, Rapports de Recherche, INRIA, France, July 1991.
[2] X. Q. Gao, C. J. Duanmu and C. R. Zou, A multilevel successive elimination algorithm for block matching motion estimation, IEEE Trans. Image Processing, vol. 9, pp. 501-504, Mar. 2000.
[3] X. Q. Gao, C. J. Duanmu and C. R. Zou, A multilevel successive elimination algorithm for block matching motion estimation, IEEE Trans. Image Processing, vol. 9, pp. 501-504, Mar. 2000.
[4] S. Zhu and K. K. Ma, A new diamond search algorithm for fast block matching motion estimation, IEEE Trans. Image Processing, vol. 9, pp. 287-290, Feb. 2000.
[5] C. Zhu, X. Lin and L. P. Chau, Hexagon-based search pattern for fast block motion estimation, IEEE Trans. Circuits Syst. Video Technology, vol. 12, pp. 349-355, May 2002.
[6] Yao Nie and Kai-Kuang Ma, Adaptive Rood Pattern Search for Fast Block-Matching Motion Estimation, IEEE Transactions on Image Processing, vol. 11, no. 12, Dec. 2002.
[7] Shan Zhu and Kai-Kuang Ma, A New Diamond Search Algorithm for Fast Block-Matching Motion Estimation, IEEE Trans. Image Processing, vol. 9, no. 2, pp. 287-290, Feb. 2000.
[8] Chun-Ho Cheung and Lai-Man Po, A Novel Small Cross-Diamond Search Algorithm for Fast Video Coding and Video Conferencing Applications, Proc. IEEE ICIP, Sep. 2002.
[9] Xuan Jing and Lap-Pui Chau, An Efficient Three-Step Search Algorithm for Block Motion Estimation, IEEE Transactions on Multimedia, vol. 6, no. 3, June 2004.
[10] F. Essannouni et al., Fast exhaustive block-based motion vector estimation algorithm using FFT, The Arabian Journal for Science and Engineering, vol. 32, no. 2C, 2007.
[11] Bing Xiong and Ce Zhu, Efficient block matching motion estimation using multilevel intra and inter subblock features, IEEE Trans. Evolutionary Computation, vol. 19, no. 7, pp. 1039-1050, 2009.
[12] Reeja S R and Dr. N. P Kavya, Motion Detection for Video Denoising: The State of Art and the Challenges, International Journal of Computer Engineering & Technology (IJCET), Volume 3, Issue 2, 2012, pp. 518-525, ISSN Print: 0976-6367, ISSN Online: 0976-6375.
[13] Gopal Thapa, Kalpana Sharma and M. K. Ghose, Multi Resolution Motion Estimation Techniques for Video Compression: A Survey, International Journal of Computer Engineering & Technology (IJCET), Volume 3, Issue 2, 2012, pp. 399-406, ISSN Print: 0976-6367, ISSN Online: 0976-6375.


- Dealing With Recurrent Terminates in Orchestrated Reliable Recovery Line Accumulation Algorithms For Faulttolerant Mobile Distributed SystemsDocument8 paginiDealing With Recurrent Terminates in Orchestrated Reliable Recovery Line Accumulation Algorithms For Faulttolerant Mobile Distributed SystemsIAEME PublicationÎncă nu există evaluări

- Analysis On Machine Cell Recognition and Detaching From Neural SystemsDocument9 paginiAnalysis On Machine Cell Recognition and Detaching From Neural SystemsIAEME PublicationÎncă nu există evaluări

- A Proficient Minimum-Routine Reliable Recovery Line Accumulation Scheme For Non-Deterministic Mobile Distributed FrameworksDocument10 paginiA Proficient Minimum-Routine Reliable Recovery Line Accumulation Scheme For Non-Deterministic Mobile Distributed FrameworksIAEME PublicationÎncă nu există evaluări

- Sentiment Analysis Approach in Natural Language Processing For Data ExtractionDocument6 paginiSentiment Analysis Approach in Natural Language Processing For Data ExtractionIAEME PublicationÎncă nu există evaluări

- A Review of Particle Swarm Optimization (Pso) AlgorithmDocument26 paginiA Review of Particle Swarm Optimization (Pso) AlgorithmIAEME PublicationÎncă nu există evaluări

- A Overview of The Rankin Cycle-Based Heat Exchanger Used in Internal Combustion Engines To Enhance Engine PerformanceDocument5 paginiA Overview of The Rankin Cycle-Based Heat Exchanger Used in Internal Combustion Engines To Enhance Engine PerformanceIAEME PublicationÎncă nu există evaluări

- Formulation of The Problem of Mathematical Analysis of Cellular Communication Basic Stations in Residential Areas For Students of It-PreparationDocument7 paginiFormulation of The Problem of Mathematical Analysis of Cellular Communication Basic Stations in Residential Areas For Students of It-PreparationIAEME PublicationÎncă nu există evaluări

- Quality of Work-Life On Employee Retention and Job Satisfaction: The Moderating Role of Job PerformanceDocument7 paginiQuality of Work-Life On Employee Retention and Job Satisfaction: The Moderating Role of Job PerformanceIAEME PublicationÎncă nu există evaluări

- Prediction of Average Total Project Duration Using Artificial Neural Networks, Fuzzy Logic, and Regression ModelsDocument13 paginiPrediction of Average Total Project Duration Using Artificial Neural Networks, Fuzzy Logic, and Regression ModelsIAEME PublicationÎncă nu există evaluări

- Ion Beams' Hydrodynamic Approach To The Generation of Surface PatternsDocument10 paginiIon Beams' Hydrodynamic Approach To The Generation of Surface PatternsIAEME PublicationÎncă nu există evaluări

- Evaluation of The Concept of Human Resource Management Regarding The Employee's Performance For Obtaining Aim of EnterprisesDocument6 paginiEvaluation of The Concept of Human Resource Management Regarding The Employee's Performance For Obtaining Aim of EnterprisesIAEME PublicationÎncă nu există evaluări
