Polyphonic Music Transcription Using Note Event Modeling

2005 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics

October 16-19, 2005, New Paltz, NY

POLYPHONIC MUSIC TRANSCRIPTION USING NOTE EVENT MODELING

Matti P. Ryynänen and Anssi Klapuri
Institute of Signal Processing, Tampere University of Technology
P.O.Box 553, FI-33101 Tampere, Finland
{matti.ryynanen, anssi.klapuri}@tut.fi

ABSTRACT

This paper proposes a method for the automatic transcription of real-world music signals, including a variety of musical genres. The method transcribes notes played with pitched musical instruments. Percussive sounds, such as drums, may be present but they are not transcribed. Musical notations (i.e., MIDI files) are produced from acoustic stereo input files using probabilistic note event modeling. Note events are described with a hidden Markov model (HMM). The model uses three acoustic features extracted with a multiple fundamental frequency (F0) estimator to calculate the likelihoods of different notes and performs temporal segmentation of notes. The transitions between notes are controlled with a musicological model involving musical key estimation and bigram models. The final transcription is obtained by searching for several paths through the note models. Evaluation was carried out with a realistic music database. Using strict evaluation criteria, 39% of all the notes were found (recall) and 41% of the transcribed notes were correct (precision). Given the complexity of the considered transcription task, the results are encouraging.

1. INTRODUCTION

Transcription of music refers to the process of generating symbolic notations, i.e., musical transcriptions, for musical performances. Conventionally, musical transcriptions have been written by hand, which is time-consuming and requires musical education. In addition to the transcription application itself, computational transcription methods facilitate automatic music analysis, automatic search and annotation of musical information in large music databases, and interactive music systems. The automatic transcription of real-world music performances is an extremely challenging task. Humans (especially musicians) are able to recognize time-evolving acoustic cues as musical notes and larger musical structures, such as melodies and chords.
A musical note is here defined by a discrete note pitch with a specific onset time and offset time. Melodies are consecutive note sequences with an organized and recognizable shape, whereas chords are combinations of simultaneously sounding notes. Transcription systems to date have either considered only limited types of musical genres [1], [2], [3], or attempted only partial transcription [4]. For a more complete review of different systems for polyphonic music transcription, see [5]. To our knowledge, there exists no system transcribing music performances without setting any restrictions on the instruments, musical genre, maximum polyphony, or the presence of percussive sounds or sound effects in the performances. The proposed method transcribes the pitched notes in music performances without limiting the target material in any of the above-mentioned ways. Goto has previously proposed methods for the partial transcription of such complex material [4].

The proposed transcription method is based on probabilistic modeling of note events and their relationships. The approach was previously applied in monophonic singing transcription [6]. Figure 1 shows the block diagram of the method. First, both the left and the right channel of an audio recording are processed frame-by-frame with a multiple-F0 estimator to obtain several F0s and their related features. The F0 estimates are processed by a musicological model which estimates the musical key and chooses between-note transition probabilities. Note events are described with HMMs which allow the calculation of the likelihoods for different note events. Finally, a search algorithm finds multiple paths through the models to produce transcribed note sequences.

Figure 1: The block diagram of the transcription method.
[Blocks: the left and right audio channels feed multiple-F0 estimation. The acoustic model takes the features and the note model parameters and computes observation likelihoods for the models. The musicological model estimates the musical key and chooses between-note transition probabilities. The best paths through the models are then found to give the note sequences.]

We use the RWC (Real World Computing) music genre database, which consists of stereophonic acoustic recordings sampled at 44.1 kHz from several musical genres, including popular, rock, dance, jazz, classical, and world music [7]. For each recording, the database includes a reference MIDI file which contains a manual annotation of the note events in the acoustic recording. The annotated note events are here referred to as the reference notes. Since there exist slight time deviations between the recordings and the reference notes, all the notes within one reference file are collectively time-scaled to synchronize them with the acoustic signal. In particular, the synchronization can be performed more reliably for the beginning of a recording. Therefore, we use the first 30 seconds of 91 acoustic recordings for training and testing our transcription system. The MIDI notes for drums, percussive instruments, and sound effects are excluded from the set of reference notes.


2. MULTIPLE-F0 ESTIMATION

The front-end of the transcription method is a multiple-F0 estimator proposed in [5], [8]. The estimator applies an auditory model where an input signal is passed through a 70-channel bandpass filterbank and the subband signals are compressed, half-wave rectified, and lowpass filtered with a frequency response close to $1/f$. Short-time Fourier transforms are then computed within the bands and the magnitude spectra are summed across channels to obtain a summary spectrum where all the subsequent processing takes place. Periodicity analysis is carried out by simulating a bank of comb filters in the frequency domain. F0s are estimated one at a time, the found sounds are canceled from the mixture, and the estimation is repeated for the residual. In addition, the method detects the onsets of pitched sounds by observing positive changes in the estimated strengths of different F0 values.

Here we used the estimator to analyze the audio signal in 92.9 ms frames, with an 11.6 ms interval between the beginnings of successive frames. In each frame, the estimator produces five distinct fundamental frequency values. Both the left and the right channels of the input stereo signal are analyzed independently, resulting in ten F0 estimates at each analysis frame $t$. As an output, the estimator produces four matrices X, S, Y, and D of size $10 \times t_{\max}$ ($t_{\max}$ is the number of analysis frames):

- F0 estimates X and their salience values S. For an F0 estimate $x_{it} = [X]_{it}$, the salience value $s_{it} = [S]_{it}$ roughly expresses how prominent $x_{it}$ is in the analysis frame $t$.
- Onsetting F0 estimates Y and their onset strengths D. If a sound with F0 estimate $y_{it} = [Y]_{it}$ sets on in frame $t$, the onset strength value $d_{it} = [D]_{it}$ is high.

The F0 values in both X and Y are expressed in units of unrounded MIDI note numbers by

$$\text{MIDI note number} = 69 + 12 \log_2\left(\frac{\text{F0}}{440\ \text{Hz}}\right). \qquad (1)$$

The logarithm of the elements of S and D is taken to compress their dynamic range.
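Equation (1) can be checked numerically. The following is a minimal Python sketch of the conversion; the function names `hz_to_midi` and `midi_to_hz` are illustrative and do not come from the paper.

```python
import math

A4_HZ = 440.0   # reference frequency in Eq. (1)
A4_MIDI = 69    # MIDI note number assigned to 440 Hz in Eq. (1)


def hz_to_midi(f0_hz: float) -> float:
    """Unrounded MIDI note number for a fundamental frequency in Hz, Eq. (1)."""
    if f0_hz <= 0:
        raise ValueError("F0 must be positive")
    return A4_MIDI + 12.0 * math.log2(f0_hz / A4_HZ)


def midi_to_hz(note: float) -> float:
    """Inverse of Eq. (1): frequency in Hz for a (possibly fractional) note number."""
    return A4_HZ * 2.0 ** ((note - A4_MIDI) / 12.0)
```

Keeping the value unrounded preserves the deviation of a measured F0 from the nearest semitone, which a pitch-difference feature can then use.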
If an onset strength value dit is small, the onsetting F0 estimate yit is random valued. Therefore, those yit values are set to zero for which the onset strength dit is below a xed threshold. We empirically chose a threshold value of ln 3. 3. PROBABILISTIC MODELS The transcription system applies three probabilistic models: a note event HMM, a silence model, and a musicological model. The note HMM uses the output of the multiple-F0 estimator to calculate likelihoods for different notes, and the silence model corresponds to time regions where no notes are sounding. The musicological model controls transitions between note HMMs and the silence model, analogous to a language model in automatic speech recognition. Transcription is done by searching for disjoint paths through the note models and the silence model. 3.1. Note event model Note events are described with a three-state HMM. The note HMM is a state machine where state qi , 1 i 3, represents the typical values of the features in the i:th temporal segment of note events. The model allocates one note HMM for each MIDI note number n = 29, . . . , 94, i.e., from note F1 to B6. Given the matrices X ,

S , Y , and D, the observation vector on,t R3 is dened for a note HMM with nominal pitch n at frame t as on,t = (xn,t , sjt , dn,t ) , where xn,t dn,t j k = = = = xjt n , dkt , if |ykt n| 1 0, otherwise

i

(2)

(3) , (4) (5) (6)

arg min{|xit n|} , 1 i 10 , arg min{|yit n|} , 1 i 10 .

i

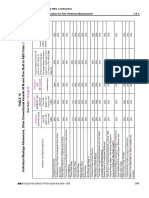
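A minimal Python sketch of Eqs. (2) to (6) for a single analysis frame follows. It assumes that the frame's columns of X, S, Y, and D are available as plain lists of equal length; the function name `observation_vector` and the argument names are hypothetical, not taken from the paper.

```python
def observation_vector(n, x_t, s_t, y_t, d_t):
    """Build the observation (pitch difference, salience, onset strength)
    for the note model with nominal pitch n from one frame.

    x_t, s_t: F0 estimates (unrounded MIDI numbers) and their log saliences.
    y_t, d_t: onsetting F0 estimates and their log onset strengths.
    """
    # Eq. (5): index of the F0 estimate nearest to the nominal pitch.
    j = min(range(len(x_t)), key=lambda i: abs(x_t[i] - n))
    # Eq. (6): index of the onsetting F0 estimate nearest to the nominal pitch.
    k = min(range(len(y_t)), key=lambda i: abs(y_t[i] - n))
    # Eq. (3): pitch difference between the measured F0 and the nominal pitch.
    pitch_diff = x_t[j] - n
    # Eq. (4): use the onset strength only if the onsetting F0 is within one semitone.
    onset = d_t[k] if abs(y_t[k] - n) <= 1.0 else 0.0
    # Eq. (2): the three-element observation vector.
    return (pitch_diff, s_t[j], onset)
```

Because the pitch enters only as a difference from `n`, the same function serves every note model, which is what lets all models share one set of trained parameters.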

The observation vectors thus consist of three features: the pitch difference $\Delta x_{n,t}$ between the measured F0 and the nominal pitch $n$ of the modeled note, the salience $s_{jt}$, and the onset strength $d_{n,t}$. For a note HMM $n$, the nearest F0 estimate and its salience value $s_{jt}$ are associated with the note by (3) and (5). The onset strength feature is used only if its corresponding F0 value is within one semitone of the nominal pitch $n$ of the model, by (4) and (6). Otherwise, the onset strength feature value $d_{n,t}$ is set to zero, i.e., no onset measurement is available for the particular note.

We use the pitch difference as a feature instead of the absolute F0 value so that only one set of note-HMM parameters needs to be trained. In other words, we have a distinct note HMM for each nominal pitch $n = 29, \ldots, 94$ but they all share the same trained parameters. This can be done since the observation vector itself is tailored to be different for each note model (2).

The HMM parameters include (i) state-transition probabilities $P(q_j \mid q_i)$, i.e., the conditional probability that state $q_i$ is followed by state $q_j$, and (ii) the observation likelihood distributions, i.e., the likelihoods $P(o_n \mid q_i)$ that observation $o_n$ is emitted by state $q_i$ of note model $n$. For the time region of a reference note with nominal pitch $n$, the observation vectors by (2) form an observation sequence for training the acoustic note event model. The observation sequence is accepted for training only if the median of the absolute pitch differences $|\Delta x_{n,t}|$ is smaller than one semitone during the reference note. This type of selection is necessary, since for some reference notes there are no reliable F0 measurements available in X. The note HMM parameters are then obtained using the Baum-Welch algorithm [9]. The observation likelihood distributions are modeled with a four-component Gaussian mixture model.

Figure 2 shows the parameters of the trained note HMM. On top, the HMM states are connected with arrows to show the HMM topology and the state-transition probabilities. Below each state, the figure shows the observation likelihood distributions for the three features. The first state is interpreted as the attack state, where the pitch difference has a larger variance, the salience feature gets lower values, and the onset strength has a prominent peak at 1.2, thus indicating note onsets. The second state, here called the sustain state, has a small variance of the pitch difference feature and large salience values. The final state is a noise state, where F0s with small salience values dominate. The sustain and the noise states are fully connected, thus allowing the note HMM to visit the noise state and switch back to the sustain state if a note event contains noisy regions.

3.2. Silence model

We use a silence model to skip the time regions where no notes are sounding. The silence model is a 1-state HMM for which the


[Figure 2, note event HMM. Attack state: self-transition 0.86, to sustain 0.14. Sustain state: self-transition 0.90, to noise 0.10. Noise state: self-transition 0.98, to sustain 0.02. Below each state, the observation likelihood distributions are plotted for pitch difference (semitones), salience, and onset strength.]

previous note and the most probable relative-key pair $k$, the note bigram probability $P(n_t = j \mid n_{t-1} = i, k)$ gives a transition probability to move from note $i$ to note $j$. This means that a possible visit in the silence model is skipped and only the previous note is taken into account.

The musicological model assumes that it is more probable both to start and to end a note sequence with a note which occurs frequently in the musical key. A silence-to-note transition (if there is no previously visited note, e.g., in the beginning of the piece) corresponds to starting a note sequence, and a note-to-silence transition corresponds to ending a note sequence. Krumhansl reported the occurrence distributions of different notes with respect to musical key, estimated from a large amount of classical music [11, p. 67]. The musicological model applies these distributions as probabilities for the note-to-silence and the silence-to-note transitions so that the most probable relative-key pair is taken into account. A transition from the silence model to itself is prohibited.

3.4. Finding several note sequences

The note models and the silence model constitute a network of models. The optimal path through the network can be found using the Token-passing algorithm [12] after calculating the observation likelihoods for note model states and the silence model, and choosing the transition probabilities between different models. The Token-passing algorithm propagates tokens through the network. Each model state contributes to the overall likelihood of a token by the observation likelihood and the transition probabilities between the states. When a token is emitted out of a model, a note boundary is recorded. Between the models, the musicological model contributes to the likelihood of the token by considering the previous note, or the silence model. Eventually, the token with the greatest likelihood defines the optimal note sequence.
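Within a single model, keeping only the best-scoring token in each state at each frame amounts to Viterbi decoding. The sketch below is plain log-domain Viterbi over one three-state note HMM, not the full token-passing network with the musicological model. The transition probabilities are those shown in Figure 2; the assumption that a note always starts in the attack state, and all observation likelihood values, are made up for illustration.

```python
import math

NEG_INF = float("-inf")


def safe_log(p):
    """Natural logarithm that maps probability 0 to -inf instead of raising."""
    return math.log(p) if p > 0 else NEG_INF


def viterbi(log_init, log_trans, log_obs):
    """Return (best state path, its log likelihood).

    log_init[i]     : log probability of starting in state i
    log_trans[i][j] : log probability of moving from state i to state j
    log_obs[t][i]   : log observation likelihood of frame t in state i
    """
    n_states = len(log_init)
    score = [log_init[i] + log_obs[0][i] for i in range(n_states)]
    back_pointers = []
    for t in range(1, len(log_obs)):
        new_score, pointers = [], []
        for j in range(n_states):
            # Best predecessor of state j, i.e. the surviving token.
            best_i = max(range(n_states), key=lambda i: score[i] + log_trans[i][j])
            new_score.append(score[best_i] + log_trans[best_i][j] + log_obs[t][j])
            pointers.append(best_i)
        score = new_score
        back_pointers.append(pointers)
    # Trace back from the best final state.
    state = max(range(n_states), key=lambda i: score[i])
    best = score[state]
    path = [state]
    for pointers in reversed(back_pointers):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path, best


# States: 0 = attack, 1 = sustain, 2 = noise (transition values from Figure 2).
TRANS = [[0.86, 0.14, 0.00],
         [0.00, 0.90, 0.10],
         [0.00, 0.02, 0.98]]
INIT = [1.0, 0.0, 0.0]  # assumption: a note starts in the attack state
# Hypothetical observation likelihoods for five frames.
OBS = [[0.90, 0.05, 0.05],
       [0.10, 0.80, 0.10],
       [0.10, 0.80, 0.10],
       [0.10, 0.80, 0.10],
       [0.05, 0.05, 0.90]]

path, log_likelihood = viterbi(
    [safe_log(p) for p in INIT],
    [[safe_log(p) for p in row] for row in TRANS],
    [[safe_log(p) for p in row] for row in OBS],
)
```

Working with log probabilities turns the products along a path into sums, which avoids numerical underflow on long recordings.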
In order to transcribe polyphonic music, we need to find several paths through the network. We apply the Token-passing algorithm iteratively as follows. As long as the desired number of paths has not yet been found and the found paths contain notes and not just silence, (i) find the optimal path with Token-passing, and (ii) prohibit the use of any model (except the silence model) on the found path during the following iterations. After each iteration, recalculate the observation likelihoods for the silence model with (7) by discarding the observation likelihoods for note models on the found paths. As a result, several disjoint note sequences have been transcribed. In the simulations, the maximum number of iterations was set to 10, meaning that the system can transcribe at most 10 simultaneously sounding notes. Figure 3 shows a transcription example from the beginning of a jazz ballad, including piano, a contrabass, and drums.

4. SIMULATION RESULTS

The proposed transcription system was evaluated using three-fold cross-validation. Three evaluation criteria were used: the recall rate, the precision rate, and the mean overlap ratio. Given that c(ref) is the number of reference notes, c(trans) is the total number of transcribed notes, and c(cor) is the number of correctly transcribed notes, the rates are defined as

$$\text{recall} = \frac{c(\text{cor})}{c(\text{ref})}, \qquad \text{precision} = \frac{c(\text{cor})}{c(\text{trans})}. \qquad (8)$$
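The evaluation criteria can be sketched in Python as follows. The matching conditions (equal MIDI note, onset difference within a maximum onset interval, one transcribed note per reference note) and the overlap ratio of Eq. (9) are the ones this section states. How ties between several candidate transcribed notes are resolved is not specified in the paper, so the greedy nearest-onset choice below is an assumption, as are the function names and the `(midi_note, onset_s, offset_s)` tuple layout.

```python
def match_notes(reference, transcribed, max_onset_diff=0.150):
    """Pair each reference note with at most one transcribed note.

    Notes are (midi_note, onset_s, offset_s) tuples. Assumption: among the
    unused candidates, the one with the nearest onset is taken.
    """
    used = set()
    pairs = []
    for ref in reference:
        best, best_diff = None, None
        for idx, tr in enumerate(transcribed):
            if idx in used or tr[0] != ref[0]:
                continue  # already associated, or different MIDI note
            diff = abs(tr[1] - ref[1])
            if diff <= max_onset_diff and (best is None or diff < best_diff):
                best, best_diff = idx, diff
        if best is not None:
            used.add(best)
            pairs.append((ref, transcribed[best]))
    return pairs


def overlap_ratio(ref, tr):
    """Eq. (9) for one correctly transcribed note."""
    shared = min(ref[2], tr[2]) - max(ref[1], tr[1])
    total = max(ref[2], tr[2]) - min(ref[1], tr[1])
    return shared / total


def evaluate(reference, transcribed, max_onset_diff=0.150):
    """Return (recall, precision, mean overlap ratio) for one transcription."""
    pairs = match_notes(reference, transcribed, max_onset_diff)
    recall = len(pairs) / len(reference) if reference else 0.0        # Eq. (8)
    precision = len(pairs) / len(transcribed) if transcribed else 0.0  # Eq. (8)
    mean_overlap = (
        sum(overlap_ratio(r, t) for r, t in pairs) / len(pairs) if pairs else 0.0
    )
    return recall, precision, mean_overlap
```

With these definitions, a transcribed note whose pitch is right but whose onset falls outside the tolerance counts both as a missed reference note and as an incorrect transcribed note, which is one reason the criteria are strict.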

Figure 2: The parameters of the trained note event HMM.

observation likelihood at time $t$ is defined as

$$P(\text{silence})_t = 1 - \max_{n,j}\{P(o_{n,t} \mid q_j)\}, \qquad (7)$$

i.e., the observation likelihood for the silence model is one minus the greatest observation likelihood in any state of any note model at time $t$. Here, the observation likelihood value $P(o_{n,t} \mid q_j)$ is on a linear scale, and its maximum value is unity. If a note state has a large observation likelihood, the observation likelihood for the silence model is small at that time.

3.3. Musicological model

The musicological model controls transitions between note HMMs and the silence model in a manner similar to [6]. The musicological model is based on the fact that some note sequences are more common than others in a certain musical key. A musical key is roughly defined by the basic note scale used in a song. A major key and a minor key are called a relative-key pair if they consist of scales with the same notes (e.g., C major and A minor).

The musicological model first finds the most probable relative-key pair using a musical key estimation method proposed and evaluated in [10]. The method produces likelihoods for different major and minor keys from those F0 estimates $x_{it}$ (rounded to the nearest MIDI note numbers) for which the salience value is larger than a fixed threshold. Based on empirical investigation of the data, we chose a threshold value of ln 2. The most probable relative-key pair is estimated for the whole recording, and this key pair is then used to choose transition probabilities between note models and the silence model.

The transition probabilities between note HMMs are defined by note bigrams which were estimated from a large database of monophonic melodies, as reported in [6]. As a result, given the



model. The proposed transcription method and the presented evaluation results give a reliable estimate of the accuracy of state-of-the-art music-transcription systems which address all music types and attempt to recover all the notes in them. The results are very encouraging and serve as a baseline for the further development of transcription systems for realistic music signals. Transcription examples are available at

http://www.cs.tut.fi/sgn/arg/matti/demos/polytrans.html


6. REFERENCES

[1] K. Kashino, K. Nakadai, T. Kinoshita, and H. Tanaka, "Organization of hierarchical perceptual sounds: Music scene analysis with autonomous processing modules and a quantitative information integration mechanism," in Proc. International Joint Conference on Artificial Intelligence (IJCAI), vol. 1, Aug. 1995, pp. 158–164.
[2] K. D. Martin, "Automatic transcription of simple polyphonic music: Robust front end processing," Massachusetts Institute of Technology Media Laboratory Perceptual Computing Section, Tech. Rep. 399, 1996.
[3] M. Davy and S. J. Godsill, "Bayesian harmonic models for musical signal analysis," in Seventh Valencia International meeting (Bayesian Statistics 7). Oxford University Press, 2002.
[4] M. Goto, "A real-time music-scene-description system: Predominant-F0 estimation for detecting melody and bass lines in real-world audio signals," Speech Communication, vol. 43, no. 4, pp. 311–329, 2004.
[5] A. Klapuri, "Signal processing methods for the automatic transcription of music," Ph.D. dissertation, Tampere University of Technology, 2004.
[6] M. P. Ryynänen and A. Klapuri, "Modelling of note events for singing transcription," in Proc. ISCA Tutorial and Research Workshop on Statistical and Perceptual Audio, Oct. 2004.
[7] M. Goto, H. Hashiguchi, T. Nishimura, and R. Oka, "RWC music database: Music genre database and musical instrument sound database," in Proc. 4th International Conference on Music Information Retrieval, Oct. 2003.
[8] A. Klapuri, "A perceptually motivated multiple-F0 estimation method," in Proc. IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, Oct. 2005.
[9] L. R. Rabiner, "A tutorial on hidden Markov models and selected applications in speech recognition," Proc. IEEE, vol. 77, no. 2, pp. 257–289, Feb. 1989.
[10] T. Viitaniemi, A. Klapuri, and A. Eronen, "A probabilistic model for the transcription of single-voice melodies," in Proc. 2003 Finnish Signal Processing Symposium, May 2003, pp. 59–63.
[11] C. Krumhansl, Cognitive Foundations of Musical Pitch. Oxford University Press, 1990.
[12] S. J. Young, N. H. Russell, and J. H. S. Thornton, "Token passing: a simple conceptual model for connected speech recognition systems," Cambridge University Engineering Department, Tech. Rep., July 1989.

Figure 3: A transcription from the beginning of a jazz ballad, including piano, a contrabass, and drums. The grey bars indicate the reference notes and the black lines are the transcribed notes.
[Axes: time (seconds) against MIDI note numbers. The piano chords and the contrabass line are labeled in the plot.]

A reference note is correctly transcribed by a note in the transcription if (i) their MIDI notes are equal, (ii) the absolute difference between their onset times is smaller than or equal to a given maximum onset interval, and (iii) the transcribed note is not already associated with another reference note. In other words, one transcribed note can transcribe only one reference note. The overlap ratio is defined for an individual correctly transcribed note as

$$\text{overlap ratio} = \frac{\min\{\text{offsets}\} - \max\{\text{onsets}\}}{\max\{\text{offsets}\} - \min\{\text{onsets}\}}, \qquad (9)$$

where "onsets" refers to the onset times of both the reference note and the corresponding transcribed note, and "offsets" accordingly to the offset times. The mean overlap ratio is then calculated as the average of the overlap ratios for all the correctly transcribed notes within one transcription.

Because of the timing deviations between the recordings and the manually annotated reference notes, a maximum onset interval of 150 ms was used. Although this is a rather large value, it is acceptable in this situation. For example, the reference note 50 in Fig. 3 is not correctly transcribed due to the 150 ms criterion. The criteria are otherwise very strict. In addition, the reference notes with colliding pitch and onset (on average, 20% of reference notes) were required to be transcribed, although our transcription system performs no instrument recognition and is thus incapable of transcribing such unison notes.

The recall rate, the precision rate, and the mean overlap ratio are calculated separately for the transcription of each recording. The averages over all the transcriptions are: recall 39%, precision 41%, and mean overlap ratio 40%.

5. CONCLUSIONS

This paper described a method for transcribing realistic polyphonic audio. The method was based on the combination of an acoustic model for note events, a silence model, and a musicological
