High Performance Computing

(Lecture Notes)

Department of Computer Science

School of Mathematical & Physical Sciences

Central University of Kerala

Lecture Notes High Performance Computing

Department of Computer Science, Central University of Kerala


Module 1

Architectures and Models of Computation

LEARNING OBJECTIVES

Shared and Distributed Memory Machines, PRAM Model, Interconnection Networks: Crossbar, Bus, Mesh, Tree, Butterfly and MINs, Hypercube, Shuffle Exchange, etc.; Evaluation based on Diameter, Bisection Bandwidth, Number of Edges, etc.; Embeddings: Mesh, Tree, Hypercube; Gray Codes; Flynn's Taxonomy


1. What is parallel computing? Why parallel computing? How does it differ from concurrency? What are its application areas?

Parallel Computing: What Is It?

Parallel computing is the use of a parallel computer to reduce the time needed to solve a single computational problem. Parallel computers are computer systems consisting of multiple processing units connected via an interconnection network, plus the software needed to make the processing units work together. The processing units can communicate and interact with each other using either shared memory or message passing. Parallel computing is now considered a standard way for computational scientists and engineers to solve computational problems that demand high-performance computing power.

Parallel Computing: Why Is It Needed?

Sequential computing systems have been with us for more than six decades, since John von Neumann described the stored-program computer in the mid-1940s. The traditional logical view of a sequential computer consists of a memory connected to a processor via a datapath. In sequential computing, all three components (processor, memory, and datapath) present bottlenecks to the overall processing rate of a computer system. To speed up execution, one would need either to increase the clock rate or to improve memory performance by reducing its latency or increasing its bandwidth. Over the years, a number of architectural innovations such as multiplicity (in processing units, datapaths and memory units), cache memory, pipelining, superscalar execution, multithreading and prefetching have been exploited to address these performance bottlenecks. Although these innovations brought an average performance improvement of about 50% per year from 1986 to 2002, the rate of improvement dropped markedly after 2002, primarily because of the fundamental architectural limitations of sequential computing. The computing industry came to the realization that uniprocessor architectures cannot sustain the rate of performance increase seen in the past, and this led the industry to focus on parallel computing as the way to achieve sustained performance improvement. As a result, the single-processor computer is fast becoming outdated.

Parallelism vs. Concurrency

In many fields, the words parallel and concurrent are synonyms; not so in programming, where they describe fundamentally different concepts.

A parallel program is one that uses a multiplicity of computational hardware (e.g., several processor cores) to perform a computation more quickly. The aim is to arrive at the answer earlier by delegating different parts of the computation to different processors that execute at the same time.

By contrast, concurrency is a program-structuring technique in which there are multiple threads of control, which may be executed in parallel on multiple physical processors or in interleaved fashion on a single processor. Whether they actually execute in parallel is therefore an implementation detail.

While parallel programming is concerned only with efficiency, concurrent programming is concerned with structuring a program that needs to interact with multiple independent external agents (for example, the user, a database server, and some external clients). Concurrency allows such programs to be modular. In the absence of concurrency, such programs have to be written with event loops and callbacks, which are typically more cumbersome and lack the modularity that threads offer.

Parallel Computing: Advantages

The main argument for using multiprocessors is that powerful computers can be built simply by connecting multiple processors. A multiprocessor is expected to reach a higher speed than the fastest single-processor system. In addition, a multiprocessor built from a number of single processors is expected to be more cost-effective than a single high-performance processor. Another advantage of a multiprocessor is fault tolerance: if a processor fails, the remaining processors should be able to provide continued service, although with degraded performance.

Parallel Computing: The Limits

A theoretical result known as Amdahl's law says that the performance improvement parallelism can provide is limited by the amount of sequential processing in an application. This may, at first, seem counterintuitive. Amdahl's law says that no matter how many cores you have, the maximum speed-up you can ever achieve is 1 / (fraction of time spent in sequential processing). For example, if 10% of the running time is inherently sequential, the speed-up can never exceed 10.
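Amdahl's law is usually written S(p) = 1 / (f + (1 - f)/p), where f is the fraction of the running time that is inherently sequential and p is the number of processors; as p grows without bound, S(p) approaches 1/f. The Python sketch below simply evaluates this formula for f = 0.1 (the function name `amdahl_speedup` is our own choice, not a standard API).

```python
def amdahl_speedup(serial_fraction: float, processors: int) -> float:
    """Speed-up predicted by Amdahl's law for a fixed problem size."""
    if not 0.0 <= serial_fraction <= 1.0 or processors < 1:
        raise ValueError("serial_fraction must be in [0, 1] and processors >= 1")
    parallel_fraction = 1.0 - serial_fraction
    # The sequential part is not sped up; only the parallel part is divided by p.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    for p in (1, 2, 8, 64, 1024):
        print(f"p = {p:5d}: speed-up = {amdahl_speedup(0.1, p):.2f}")
    # No number of processors can push the speed-up past 1 / 0.1 = 10.
```

With f = 0.1, eight processors give a speed-up of only about 4.7, and even 1024 processors stay just below 10.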

Parallel Computing: Application Areas

Parallel computing is a fundamental and irreplaceable technique in today's science and technology, as well as in the manufacturing and service industries. Its applications cover a wide range of disciplines:

Basic science research, including biochemistry for decoding human genetic information and theoretical physics for understanding the interactions of quarks and the possible unification of all four fundamental forces.

Mechanical, electrical, and materials engineering for producing better materials such as solar cells, LCD displays, LED lighting, etc.

Service industries, including telecommunications and the financial industry.

Manufacturing, such as the design and operation of aircraft and bullet trains.

Its broad applications in oil exploration, weather forecasting, communication, transportation, and aerospace make it a unique technique for the national economy and defence. It is precisely this uniqueness and its lasting impact that define its role in today's rapidly growing technological society.

2. Development of parallel software has traditionally been thought of as time and effort intensive. Justify the statement.

Traditionally, computer software has been written for serial computation. To solve a problem, an algorithm is constructed and implemented as a serial stream of instructions, which are executed on a central processing unit one after another.

Parallel computing, on the other hand, uses multiple processing elements simultaneously to solve a problem. This is accomplished by breaking the problem into independent tasks so that each processing element can execute its part of the algorithm simultaneously with the others. The processing elements can be diverse and include resources such as a single computer with multiple processors, several networked computers, specialized hardware, or any combination of the above.

However, development of parallel software has traditionally been considered a time- and effort-intensive activity for the following reasons:

Complexity in specifying and coordinating concurrent tasks

Lack of portable parallel algorithms

Lack of standardized parallel environments

Lack of parallel software development toolkits

Complexity in specifying and coordinating concurrent tasks: Concurrent computing involves overlapping the execution of several computations over one or more processors, and it often requires complex interactions between the processes. These interactions may take the form of message passing, which may be synchronous or asynchronous, or of access to shared resources. The main challenge in designing concurrent programs is concurrency control: ensuring the correct sequencing of the interactions or communications between different computational executions, and coordinating access to resources that are shared among executions. Problems that may occur include non-determinism (from race conditions), deadlock, and resource starvation.
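Race conditions arise when several threads update shared data without coordination. The minimal Python sketch below (the names `deposit` and `run_deposits` are illustrative) has several threads increment one shared counter; a `threading.Lock` serializes each read-modify-write step, so the final value is deterministic. Without the lock, the result is not guaranteed in general, because updates from different threads can interleave and be lost.

```python
import threading

counter = 0                      # shared by all threads of the process
counter_lock = threading.Lock()


def deposit(times: int) -> None:
    """Increment the shared counter `times` times."""
    global counter
    for _ in range(times):
        # Concurrency control: only one thread at a time may perform the
        # read-modify-write sequence on the shared variable.
        with counter_lock:
            counter += 1


def run_deposits(num_threads: int = 4, times: int = 100_000) -> int:
    global counter
    counter = 0
    workers = [threading.Thread(target=deposit, args=(times,)) for _ in range(num_threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()            # wait for every thread before reading the result
    return counter


if __name__ == "__main__":
    print(run_deposits())        # 400000: the lock rules out lost updates
```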

Lack of portable parallel algorithms: Because the interconnection scheme among processors (or between processors and memory) significantly affects the running time, efficient parallel algorithms must take the interconnection scheme into account. As a result, most existing parallel algorithms for real-world applications suffer from a major limitation: they have been designed with a specific underlying parallel architecture in mind and are not portable to different parallel architectures.

Lack of standardized parallel environments: The lack of standards in parallel programming languages makes parallel programs difficult to port across parallel computers.

Lack of standardized parallel software development toolkits: Unlike sequential programming tools, the available parallel programming tools are highly dependent both on the characteristics of the problem and on the parallel programming environment chosen. The lack of standard programming tools makes parallel programming difficult, and the resulting programs are not portable across parallel computers.

However, in the last few decades, researchers have made considerable progress in designing efficient and cost-effective parallel architectures and parallel algorithms. Together with this, factors such as

the reduction in the turnaround time required to develop microprocessor-based parallel machines, and

the standardization of parallel programming environments and parallel programming tools, which ensures a longer life cycle for parallel applications,

have made parallel computing today less time and effort intensive.

3. Briefly explain some of the compelling arguments in favour of parallel computing platforms.

Though considerable progress has been made in microprocessor technology over the past few decades, the industry came to the realization that implicit parallelism in processor architectures alone cannot provide sustained realizable performance increments. This, together with factors such as

the reduction in the turnaround time required to develop microprocessor-based parallel machines, and

the standardization of parallel programming environments and parallel programming tools, which ensures a longer life cycle for parallel applications,

presents compelling arguments in favour of parallel computing platforms. The major arguments in favour of parallel computing platforms include:

a) The Computational Power Argument

b) The Memory/Disk Speed Argument

c) The Data Communication Argument

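The Computational Power Argument: Owing to sustained developments in microprocessor technology, the number of transistors that can be placed on a chip, and with it the potential computational power of a system, has been doubling roughly every 18 to 24 months (an observation known as Moore's law). The most effective way to turn this growing transistor budget into useful computation is to place multiple processing units on a chip and use them in parallel, which is why this trend favours parallel computing platforms.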

Memory/Disk Speed Argument: The overall speed of a system is determined not just by the speed of the processor, but also by the ability of the memory system to feed data to it. Clock speeds have increased by roughly 40% per year, together with increases in the number of instructions executed per clock cycle, while memory access time (memory latency) has improved by only about 10% per year; this growing mismatch has resulted in a performance bottleneck. The mismatch between processor speed and DRAM latency can be bridged to some extent by cache memory, which relies on locality of data reference. Besides memory latency, the effective memory bandwidth also limits sustained improvements in computation speed. Compared with uniprocessor systems, parallel platforms typically provide better memory system performance because they provide (a) larger aggregate caches and (b) higher aggregate memory bandwidth. In addition, parallel algorithms designed to exploit locality of data reference can reduce the impact of memory and disk latencies.
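Compounding the two annual rates quoted above shows how quickly the processor-memory gap opens. This back-of-the-envelope sketch assumes both rates stay constant for ten years and ignores the extra gains from executing more instructions per cycle, so if anything it understates the gap.

```python
def growth(rate_per_year: float, years: int) -> float:
    """Cumulative improvement factor after compounding a fixed annual rate."""
    return (1.0 + rate_per_year) ** years


years = 10
processor = growth(0.40, years)   # clock speed: about 28.9x faster
memory = growth(0.10, years)      # memory latency: only about 2.6x better
gap = processor / memory          # the mismatch widens roughly 11-fold
print(f"processor {processor:.1f}x, memory {memory:.1f}x, gap {gap:.1f}x")
```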

The Data Communication Argument: Many modern real-world applications in quantum chemistry, statistical mechanics, cosmology, astrophysics, computational fluid dynamics and turbulence, superconductivity, biology, pharmacology, genome sequencing, genetic engineering, protein folding, enzyme activity, cell modelling, medicine, modelling of human organs and bones, global weather and environmental modelling, data mining, etc., are massively parallel and demand large-scale, wide-area, distributed, heterogeneous parallel computing environments. In many of these applications the data are too large, or too widely distributed, to be moved to a single location, so the computation itself has to be distributed and carried out in parallel.

4. Describe the classical von Neumann architecture of computing

systems.

The classical von Neumann architecture consists of main memory, a central

processing unit (also known as CPU or processor or core) and an

interconnection between the memory and the CPU. Main memory consists of a

collection of locations, each of which is capable of storing both instructions and

data. Every location consists of an address, which is used to access the

instructions or data stored in the location. The classical von Neumann

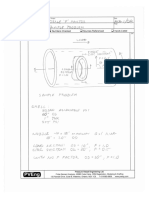

architecture is depicted below:

The central processing unit is divided into a control unit and an arithmetic

and logic unit (ALU). The control unit is responsible for deciding which

instructions in a program should be executed, and the ALU is responsible for

executing the actual instructions. Data in the CPU and information about the

state of an executing program are stored in special, very fast storage called

registers. The control unit has a special register called the program counter.

It stores the address of the next instruction to be executed.

Instructions and data are transferred between the CPU and memory via the

interconnect called bus. A bus consists of a collection of parallel wires and some

hardware controlling access to the wires. A von Neumann machine executes a

single instruction at a time, and each instruction operates on only a few pieces of

data.

The process of transferring data or instructions from memory to the CPU is

referred to as data or instructions fetch or memory read operation. The process

of transferring data from the CPU to memory is referred to as memory write.

Lecture Notes High Performance Computing

Department of Computer Science, Central University of Kerala

8

Fig: The Classical von Neumann Architecture

The separation of memory and CPU is often called the von Neumann

bottleneck, since the interconnect determines the rate at which instructions

and data can be accessed. CPUs are capable of executing instructions more than

one hundred times faster than they can fetch items from main memory.
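The fetch-decode-execute cycle described above can be sketched with a toy machine in which a single memory list holds both the program and its data, a program counter selects the next instruction, and the arithmetic is done in one register. The instruction set (LOAD, ADD, STORE, HALT) and its encoding as Python tuples are invented for illustration and do not correspond to any real processor.

```python
def execute(memory: list) -> list:
    """Run the program stored in `memory`; instructions and data share the same memory."""
    pc = 0     # program counter: address of the next instruction
    acc = 0    # a single accumulator register
    while True:
        opcode, operand = memory[pc]   # fetch: a memory read over the bus
        pc += 1
        if opcode == "LOAD":           # decode, then execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc      # a memory write
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", None), 20, 22, 0]
print(execute(program)[6])  # 42
```

Every instruction, and every operand it touches, crosses the same path between `memory` and the CPU loop, which is exactly where the von Neumann bottleneck arises.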

5. Explain the terms processes, multitasking, and threads.

Process

A process is an instance of a computer program that is being executed. When a user runs a program, the operating system creates a process. A process consists of several entities:

The executable machine language program.

A block of memory, which includes the executable code, a call stack that keeps track of active functions, a heap, and some other memory locations.

Descriptors of resources that the operating system has allocated to the process, for example, file descriptors.

Security information, for example, information specifying which hardware and software resources the process can access.

Information about the state of the process, such as whether the process is ready to run or is waiting on some resource, the content of the registers, and information about the process's memory.

Multitasking

A task is a unit of execution. In some operating systems, a task is synonymous with a process; in others, with a thread. An operating system is called multitasking if it can execute multiple tasks. Most modern operating systems are multitasking, which means that the operating system provides support for the simultaneous execution of multiple programs. This is possible even on a system with a single core, since each process runs for a time slice (typically a few milliseconds). After one running program has executed for a time slice, the operating system can run a different program. A multitasking OS may change the running process many times a minute, even though changing the running process incurs overhead. In a multitasking OS, if a process needs to wait for a resource (for example, it needs to read data from external storage), the OS will block the process and schedule another ready process to run. For example, an airline reservation system that is blocked waiting for a seat map for one user could provide a list of available flights to another user. Multitasking does not imply parallelism, but it does involve concurrency.
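Time slicing on a single core can be simulated with a simple round-robin ready queue. The task names, their lengths (in abstract work units) and the time-slice value below are invented for illustration, and a real OS scheduler is far more elaborate; the point is that the tasks' executions interleave (concurrency) even though only one task runs at any instant (no parallelism).

```python
from collections import deque


def round_robin(tasks: dict, time_slice: int) -> list:
    """Return the sequence of (task, units run) scheduling decisions on one core."""
    ready = deque(tasks.items())        # ready queue of (name, remaining work units)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        ran = min(time_slice, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            ready.append((name, remaining - ran))   # preempted: back of the queue
    return timeline


print(round_robin({"A": 5, "B": 2, "C": 4}, time_slice=2))
# [('A', 2), ('B', 2), ('C', 2), ('A', 2), ('C', 2), ('A', 1)]
```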

Threads

A thread of execution is the smallest unit of a program that can be managed independently by an operating system scheduler. Threading provides a mechanism for programmers to divide their programs into more or less independent tasks, with the property that when one thread is blocked another thread can be run. Furthermore, context switching among threads is much faster than context switching among processes, because threads are lighter weight than processes. Threads are contained within processes, so they can use the same executable, and they usually share the same memory and the same I/O devices. In fact, two threads belonging to one process can share most of the process's resources. Different threads of a process need only keep a record of their own program counters and call stacks so that they can execute independently of each other.
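The Python sketch below illustrates that threads of one process share its memory: every worker writes into the same `results` list, while the running total inside `worker` lives on that thread's own call stack. The function names and data are illustrative. Note that in CPython the global interpreter lock prevents such threads from executing Python code truly in parallel, so this example demonstrates memory sharing, not speed-up.

```python
import threading


def parallel_sums(chunks: list) -> list:
    results = [0] * len(chunks)          # shared: visible to every thread of this process

    def worker(index: int) -> None:
        total = 0                        # private: local to this thread's call stack
        for value in chunks[index]:
            total += value
        results[index] = total           # each thread writes only its own slot

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(len(chunks))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(parallel_sums([[1, 2, 3], [4, 5], [6]]))  # [6, 9, 6]
```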

6. Describe the architectural innovations employed to overcome the von Neumann bottleneck.

The classical von Neumann architecture consists of main memory, a processor and an interconnection between the memory and the processor. This separation of memory and processor is called the von Neumann bottleneck, since the interconnect determines the rate at which instructions and data can be accessed. It has resulted in a large speed mismatch (of the order of 100 times or more) between the processor and memory. Several architectural innovations have been exploited as extensions to the classical von Neumann architecture to hide this speed mismatch and improve overall system performance. The prominent innovations for hiding the von Neumann bottleneck include:

1. Caching,

2. Virtual memory, and

3. Low-level parallelism: instruction-level and thread-level parallelism.

Caching

A cache is a smaller and faster memory between the processor and the DRAM, which stores copies of the data from frequently used main memory locations. A cache acts as low-latency, high-bandwidth storage, improving both the effective memory latency and the effective bandwidth.

A cache works on the principle of locality of reference, which states that programs tend to use data and instructions that are physically close to recently used data and instructions (spatial locality), and to reuse data and instructions they have used recently (temporal locality).

The data needed by the processor is first fetched into the cache. All subsequent accesses to data items residing in the cache are serviced by the cache, thereby reducing the effective memory latency.

In order to exploit the principle of locality, memory accesses to the cache operate on blocks (called cache blocks or cache lines) of data and instructions instead of individual instructions and individual data items (cache lines typically range from 8 to 16 words). With a cache line of 16 words, assuming a 100 ns memory latency and 1 ns for each subsequent word of the line, 16 memory words can be fetched in 115 ns instead of 1600 ns (if accessed one word at a time), increasing the effective memory bandwidth from 10 MWords/s to about 139 MWords/s. Blocked access can also reduce the effective memory latency: with a 16-word cache line, the next 15 accesses to consecutive words can be served from the cache (if the program exhibits strong locality of reference), thereby hiding the memory latency.
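The cache-line arithmetic can be checked directly. The sketch assumes, as the 115 ns figure implies, a 100 ns latency for the first word of a line and 1 ns for each further word; these timing parameters are assumptions of the example, not properties of any particular memory system. Under them, word-at-a-time access delivers 10 MWords/s and 16-word line fills deliver about 139 MWords/s.

```python
LATENCY_NS = 100    # assumed time to obtain the first word from DRAM
PER_WORD_NS = 1     # assumed time for each further word in the same line


def line_fetch_ns(words: int) -> int:
    """Time to bring `words` consecutive words into the cache as one block."""
    return LATENCY_NS + (words - 1) * PER_WORD_NS


def bandwidth_mwords_per_s(words: int, time_ns: float) -> float:
    # words/ns * 1e9 ns/s / 1e6 words per MWord = words/ns * 1000
    return words / time_ns * 1_000


word_at_a_time = 16 * LATENCY_NS    # 1600 ns
blocked = line_fetch_ns(16)         # 115 ns
print(bandwidth_mwords_per_s(16, word_at_a_time))   # 10.0
print(round(bandwidth_mwords_per_s(16, blocked)))   # 139
```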

Rather than implementing the CPU cache as a single monolithic structure, in practice the cache is usually divided into levels: the first level (L1) is the smallest and the fastest, and higher levels (L2, L3, ...) are larger and slower. Most systems currently have at least two levels. Caches usually store copies of information held in slower memory; for example, a variable stored in a level 1 cache will also be stored in level 2. However, some multilevel caches don't duplicate information that's available in another level. For these caches, a variable in a level 1 cache might not be stored in any other level of the cache, but it would be stored in main memory.

When the CPU needs to access an instruction or data, it works its way down the cache hierarchy: first it checks the level 1 cache, then the level 2, and so on. Finally, if the information needed isn't in any of the caches, it accesses main memory.

When a cache is checked for information and the information is available, it is called a cache hit. If the information is not available, it is called a cache miss. Hit or miss is often qualified by the level; for example, when the CPU attempts to access a variable, it might have an L1 miss and an L2 hit.
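Hits, misses and the benefit of locality can be observed with a toy direct-mapped cache. Everything here (16 lines of 8 words, a 64-by-64 array stored row by row, and the names `DirectMappedCache` and `count_misses`) is an illustrative assumption; real caches are usually set-associative and much larger. Traversing the array row by row touches consecutive words, so only the first word of each line misses; traversing it column by column jumps a whole row between accesses, and in this configuration every access misses.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that only tracks which memory block each line holds."""

    def __init__(self, num_lines: int, words_per_line: int):
        self.num_lines = num_lines
        self.words_per_line = words_per_line
        self.lines = [None] * num_lines    # block number currently held by each line
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> None:
        block = address // self.words_per_line   # which line-sized block of memory
        index = block % self.num_lines           # the only cache line it may occupy
        if self.lines[index] == block:
            self.hits += 1
        else:
            self.misses += 1                     # fetch the whole block from memory
            self.lines[index] = block


def count_misses(n: int, row_by_row: bool) -> int:
    cache = DirectMappedCache(num_lines=16, words_per_line=8)
    for outer in range(n):
        for inner in range(n):
            i, j = (outer, inner) if row_by_row else (inner, outer)
            cache.access(i * n + j)              # element (i, j) of a row-major array
    return cache.misses


print(count_misses(64, row_by_row=True))    # 512: one miss per 8-word line
print(count_misses(64, row_by_row=False))   # 4096: every access misses
```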

When the CPU writes data to a cache, the value in the cache and the value in main memory become different, or inconsistent. There are two basic approaches to dealing with this inconsistency. In write-through caches, the cache line is written to main memory when it is written to the cache. In write-back caches, the data is not written immediately. Rather, the updated data in the cache is marked dirty, and when the cache line is replaced by a new cache line from memory, the dirty line is written to memory.
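The practical difference between the two policies is how many writes reach main memory. The sketch below counts memory writes for a run of stores that all hit one cached line, followed by the eviction of that line; it is a deliberately simplified model (a single line, no write-allocate decisions), and the function name is illustrative.

```python
def memory_writes(stores: int, write_back: bool) -> int:
    """Count main-memory writes for `stores` writes to one cached line, then eviction."""
    writes_to_memory = 0
    dirty = False
    for _ in range(stores):
        if write_back:
            dirty = True              # update only the cache and mark the line dirty
        else:
            writes_to_memory += 1     # write-through: memory is updated on every store
    # On eviction, a write-back cache must copy a dirty line back to memory.
    if write_back and dirty:
        writes_to_memory += 1
    return writes_to_memory


print(memory_writes(100, write_back=False))  # 100
print(memory_writes(100, write_back=True))   # 1
```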

Virtual Memory

Caches make it possible for the CPU to quickly access instructions and data that are in main memory. However, if we run a very large program or a program that accesses very large data sets, all of the instructions and data may not fit into main memory. This is especially true with multitasking operating systems: to switch between programs and create the illusion that multiple programs are running simultaneously, the instructions and data that will be used during the next time slice should be in main memory. Thus, in a multitasking system, even if the main memory is very large, many running programs must share the available main memory.

Virtual memory was developed so that main memory can function as a cache for secondary storage. It exploits the principle of locality by keeping in main memory only the active parts of the many running programs; the parts that are idle are kept in a block of secondary storage called swap space. Like CPU caches, virtual memory operates on blocks of data and instructions. These blocks are commonly called pages (sizes typically range from 4 to 16 kilobytes).

When a program is compiled, its pages are assigned virtual page numbers. When the program is loaded into memory, a Page Map Table (PMT) is created that maps the virtual page numbers to physical addresses. The virtual address references made by a running program are translated into the corresponding physical addresses using this PMT.

The drawback of storing the PMT in main memory is that each virtual address reference made by the running program requires two memory accesses: one to get the page table entry for the virtual page to find its location in main memory, and one to actually access the desired memory location. To avoid this problem, CPUs have a special page table cache called the translation lookaside buffer (TLB) that caches a small number of entries (typically 16 to 512) from the page table in very fast memory. By the principle of locality, one can expect that most memory references will be to pages whose physical address is stored in the TLB, and the number of memory references that require accesses to the page table in main memory will be substantially reduced.

If the running process attempts to access a page that is not in memory, that is, the page table does not have a valid physical address for the page and the page is only stored on disk, the attempted access is called a page fault. In such a case, the running process is blocked until the faulted page is brought into memory and the corresponding entry is made in the PMT.
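Address translation through a page map table, including the page-fault case, can be sketched as follows. A virtual address is split into a virtual page number and an offset within the page, and only the page number is translated. The 4 KB page size is an assumption, and the table contents, the `PageFault` exception and the `translate` function are invented for illustration.

```python
PAGE_SIZE = 4096   # bytes per page (assumed 4 KB)


class PageFault(Exception):
    """Raised when the referenced page has no valid frame in main memory."""


def translate(virtual_address: int, page_map_table: dict) -> int:
    vpn, offset = divmod(virtual_address, PAGE_SIZE)   # virtual page number, offset
    frame = page_map_table.get(vpn)                    # physical frame, or None if on disk
    if frame is None:
        # A real OS would block the process, load the page from swap space,
        # fill in the table entry and then retry the access.
        raise PageFault(f"virtual page {vpn} is not in memory")
    return frame * PAGE_SIZE + offset


pmt = {0: 5, 1: 2}                  # virtual page -> physical frame (page 2 is on disk)
print(hex(translate(0x1234, pmt)))  # 0x2234
```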

When the running program looks up an address and the virtual page number is in the TLB, it is called a TLB hit. If it is not in the TLB, it is called a TLB miss.
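A TLB is itself a small cache of page-table entries. The sketch below puts a tiny TLB in front of the page map table and counts hits and misses. The capacity of 2 entries, the least-recently-used replacement policy, the 4 KB page size and the class name are illustrative assumptions; real TLBs are larger and their replacement policies vary.

```python
from collections import OrderedDict

PAGE_SIZE = 4096


class TLB:
    def __init__(self, capacity: int, page_map_table: dict):
        self.capacity = capacity
        self.pmt = page_map_table        # assumed to hold every page referenced
        self.entries = OrderedDict()     # virtual page number -> physical frame
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_address: int) -> int:
        vpn, offset = divmod(virtual_address, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)          # mark as most recently used
        else:
            self.misses += 1                       # extra memory access to read the PMT
            self.entries[vpn] = self.pmt[vpn]
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)   # evict the least recently used entry
        return self.entries[vpn] * PAGE_SIZE + offset


tlb = TLB(capacity=2, page_map_table={0: 7, 1: 3, 2: 9})
for address in (0, 8, 4096, 0, 8192, 4096):
    tlb.translate(address)
print(tlb.hits, tlb.misses)  # 2 4
```

The final access to page 1 misses because page 1 was evicted when page 2 was loaded, which shows why a TLB helps only when references stay within a small working set of pages.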

Due to the relative slowness of disk accesses, virtual memory always uses a write-back scheme for handling write accesses. This can be implemented by keeping a bit for each page in memory that indicates whether the page has been updated. If it has been updated, it is written to disk when it is evicted from main memory.

Low-Level Parallelism: Instruction-Level Parallelism

Low-level parallelism is parallelism that is not visible to the programmer (i.e., the programmer has no direct control over it). Two forms of low-level parallelism are instruction-level parallelism and thread-level parallelism.

Instruction-level parallelism, or ILP, attempts to improve processor performance by having multiple processor components or functional units simultaneously executing instructions. There are two main approaches to ILP: pipelining, in which functional units are arranged in stages with the output of one being the input to the next, and multiple issue, in which the functional units are replicated and multiple instructions are issued simultaneously.

Pipelining: Pipelining is a technique used to increase the instruction throughput of the processor. The main idea is to divide the basic instruction cycle into a series of steps (stages) of micro-operations. Rather than processing each instruction sequentially, different stages of different instructions are executed concurrently by different functional units. Pipelining enables faster execution by overlapping the various stages of instruction execution (fetch, schedule, decode, operand fetch, execute, store, among others).
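For an idealized pipeline with k stages of one clock cycle each and no stalls, n instructions take n * k cycles without pipelining but only k + (n - 1) cycles with it: after the first instruction fills the pipeline, one instruction completes every cycle. Equal stage lengths and the absence of hazards are simplifying assumptions; real pipelines also lose cycles to stalls. A quick check of the formula:

```python
def unpipelined_cycles(n: int, k: int) -> int:
    return n * k                          # each instruction passes through all k stages alone


def pipelined_cycles(n: int, k: int) -> int:
    return k + (n - 1) if n > 0 else 0    # fill the pipeline once, then one result per cycle


for n in (1, 10, 1000):
    plain = unpipelined_cycles(n, 5)
    piped = pipelined_cycles(n, 5)
    print(f"n={n:4d}: {plain:5d} vs {piped:4d} cycles, speed-up {plain / piped:.2f}")
# As n grows, the speed-up approaches the number of stages, k = 5.
```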

Multiple Issue (Superscalar Execution): Superscalar execution is an advanced form of pipelined instruction-level parallelism that allows multiple instructions to be dispatched to multiple pipelines and processed concurrently by redundant functional units on the processor. Superscalar execution can provide more cost-effective performance because it increases the degree of overlap in the concurrent execution of multiple instructions.

Low-Level Parallelism: Thread-Level Parallelism

Thread-level parallelism, or TLP, attempts to provide parallelism through the simultaneous execution of different threads. Thread-level parallelism splits a program into independent threads so that they can run concurrently. TLP is considered coarser-grained than ILP; that is, the program units being executed simultaneously (threads in TLP) are larger, or coarser, than the finer-grained units (individual instructions) in ILP.

Multithreading provides a means for systems to continue doing useful work by switching execution to another thread when the thread currently being executed has stalled (for example, if the current task has to wait for data to be loaded from memory). There are different ways to implement multithreading.

In fine-grained multithreading, the processor switches between threads after each instruction, skipping threads that are stalled. While this approach has the potential to avoid wasted machine time due to stalls, it has the drawback that a thread that's ready to execute a long sequence of instructions may have to wait to execute every instruction.

Coarse-grained multithreading attempts to avoid this problem by switching threads only when the current thread is stalled waiting for a time-consuming operation to complete (e.g., a load from main memory).

Simultaneous multithreading is a variation on fine-grained multithreading. It attempts to exploit superscalar processors by allowing multiple threads to make use of the multiple functional units.

7. Explain how implicit parallelism such as pipelining and superscalar execution results in more cost-effective performance gains.

Microprocessor technology has recorded an average 50% annual performance improvement over the last few decades. This development has also uncovered several performance bottlenecks in achieving sustained realizable performance improvement. To alleviate these bottlenecks, microprocessor designers have explored a number of alternative architectural innovations for cost-effective performance gains. One of the most important is multiplicity in processing units, datapaths, and memory units. This multiplicity is either entirely hidden from the programmer or exposed to the programmer in different forms.

Implicit parallelism is an approach that provides multiplicity at the level of instruction execution to achieve cost-effective performance gains. In implicit parallelism, parallelism is exploited by the hardware, the compiler and/or the runtime system, and it is transparent to the programmer.

Two common approaches to implicit parallelism are (a) instruction pipelining and (b) superscalar execution.

Instruction Pipelining: Instruction pipelining is a technique used in the design of microprocessors to increase their instruction throughput. The main idea is to divide the basic instruction cycle into a series of steps (stages) of micro-operations. Rather than processing each instruction sequentially, different stages of different instructions are executed concurrently by different functional units. Pipelining enables faster execution by overlapping the various stages of instruction execution (fetch, schedule, decode, operand fetch, execute, store, among others).

To illustrate how instruction pipelining enables faster execution, consider the following code fragment, which adds the four words at addresses @1000, @1004, @1008 and @100C and stores the sum at @2000:


load R1, @1000

load R2, @1008

add R1, @1004

add R2, @100C

add R1, R2

store R1, @2000

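Sequential Execution

(IF: instruction fetch, ID: instruction decode, OF: operand fetch, E: execute, WB: write-back, NOP: stall cycle. The number after each stage is the clock cycle in which it occurs.)

| Instruction | Stages (clock cycle) |
|---|---|
| load R1, @1000 | IF (1), ID (2), OF (3) |
| load R2, @1008 | IF (4), ID (5), OF (6) |
| add R1, @1004 | IF (7), ID (8), OF (9), E (10) |
| add R2, @100C | IF (11), ID (12), OF (13), E (14) |
| add R1, R2 | IF (15), ID (16), E (17) |
| store R1, @2000 | IF (18), ID (19), WB (20) |

Total: 20 clock cycles.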

Pipelined Execution (the numbered columns are clock cycles)

Clock cycle      1   2   3   4   5   6   7   8   9
load R1, @1000   IF  ID  OF
load R2, @1008       IF  ID  OF
add R1, @1004            IF  ID  OF  E
add R2, @100C                IF  ID  OF  E
add R1, R2                       IF  ID  NOP E
store R1, @2000                      IF  ID  NOP WB

As the example shows, pipelined execution requires only 9 clock cycles, a significant improvement over the 20 clock cycles needed for sequential execution. However, the speed of a single pipeline is always limited by its slowest stage (the largest atomic task). Also, pipeline performance depends heavily on the efficiency of the dynamic branch prediction mechanism employed.
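The cycle counts in the two tables can be checked with a short model. This is a sketch, not a description of real hardware: each instruction is represented by its list of stages copied from the tables (including the NOP stall slots in the pipelined case), and in the pipelined case a new instruction is assumed to enter the IF stage in every clock cycle. The names SEQUENTIAL, PIPELINED, sequential_cycles and pipelined_cycles are hypothetical.

```python
# Stage sequences copied from the tables above. In the pipelined table the
# last two instructions carry a NOP (stall) slot while waiting for operands.
SEQUENTIAL = [
    ("load R1, @1000", ["IF", "ID", "OF"]),
    ("load R2, @1008", ["IF", "ID", "OF"]),
    ("add R1, @1004", ["IF", "ID", "OF", "E"]),
    ("add R2, @100C", ["IF", "ID", "OF", "E"]),
    ("add R1, R2", ["IF", "ID", "E"]),
    ("store R1, @2000", ["IF", "ID", "WB"]),
]
PIPELINED = [
    ("load R1, @1000", ["IF", "ID", "OF"]),
    ("load R2, @1008", ["IF", "ID", "OF"]),
    ("add R1, @1004", ["IF", "ID", "OF", "E"]),
    ("add R2, @100C", ["IF", "ID", "OF", "E"]),
    ("add R1, R2", ["IF", "ID", "NOP", "E"]),
    ("store R1, @2000", ["IF", "ID", "NOP", "WB"]),
]


def sequential_cycles(program):
    """Each instruction runs all its stages before the next one starts."""
    return sum(len(stages) for _, stages in program)


def pipelined_cycles(program):
    """Instruction i (0-based) enters IF in cycle i + 1 and spends one cycle
    per stage, so it finishes in cycle i + len(stages)."""
    return max(i + len(stages) for i, (_, stages) in enumerate(program))


print("sequential:", sequential_cycles(SEQUENTIAL))  # 20
print("pipelined: ", pipelined_cycles(PIPELINED))    # 9
```

For an ideal pipeline without stalls, where every one of n instructions passes through the same k stages, the same model gives n·k cycles for sequential execution and k + n - 1 cycles for pipelined execution.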

Superscalar Execution: Superscalar execution is an advanced form of pipelined instruction-level parallelism that dispatches multiple instructions to multiple pipelines, which are processed concurrently by redundant functional units on the processor. Superscalar execution can provide more cost-effective performance because it increases the degree of overlap in the concurrent execution of multiple instructions.

To illustrate how superscalar execution results in a better performance gain, consider the execution of the previous code fragment on a processor with two pipelines and the ability to issue two instructions simultaneously.

Superscalar Execution (the numbered columns are clock cycles)

Clock cycle      1   2   3   4   5   6   7
load R1, @1000   IF  ID  OF
load R2, @1008   IF  ID  OF
add R1, @1004        IF  ID  OF  E
add R2, @100C        IF  ID  OF  E
add R1, R2               IF  ID  NOP E
store R1, @2000              IF  ID  NOP WB

With superscalar execution, the same code fragment takes only 7 clock cycles instead of 9.

These examples illustrate that implicit parallelism, such as pipelining and superscalar execution, can result in cost-effective performance gains.
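The two-way schedule above can be reproduced with a small in-order issue model. This is a sketch under simplifying assumptions chosen to match the tables, not a model of any real processor: up to `width` instructions issue per cycle in program order, an instruction cannot issue in the same cycle as an earlier instruction it depends on, and each instruction then takes as many cycles as it has stages in the table (3 for a load, 4 for the others, including the NOP slots). The names FRAGMENT, issue_cycles and total_cycles are hypothetical.

```python
# Each instruction: (text, registers written, registers read, number of stages).
# Stage counts follow the table above: IF ID OF = 3, IF ID OF E = 4,
# IF ID NOP E = 4, IF ID NOP WB = 4.
FRAGMENT = [
    ("load R1, @1000", {"R1"}, set(), 3),
    ("load R2, @1008", {"R2"}, set(), 3),
    ("add R1, @1004", {"R1"}, {"R1"}, 4),
    ("add R2, @100C", {"R2"}, {"R2"}, 4),
    ("add R1, R2", {"R1"}, {"R1", "R2"}, 4),
    ("store R1, @2000", set(), {"R1"}, 4),
]


def issue_cycles(program, width):
    """In-order issue of up to `width` instructions per cycle. A new cycle is
    started when the current issue group is full or when the instruction
    depends (RAW, WAR or WAW) on an instruction already in the group."""
    cycle, group, issue = 1, [], []
    for _, writes, reads, _ in program:
        clash = any(w & (reads | writes) or r & writes for w, r in group)
        if len(group) == width or clash:
            cycle, group = cycle + 1, []
        group.append((writes, reads))
        issue.append(cycle)
    return issue


def total_cycles(program, width):
    """Cycle in which the last instruction finishes its last stage."""
    issue = issue_cycles(program, width)
    return max(t + stages - 1 for t, (_, _, _, stages) in zip(issue, program))


print(issue_cycles(FRAGMENT, 2))   # [1, 1, 2, 2, 3, 4]
print(total_cycles(FRAGMENT, 1))   # 9: one pipeline, one issue per cycle
print(total_cycles(FRAGMENT, 2))   # 7: two-way superscalar
```

With width=1 the model degenerates to the single pipeline and reproduces the 9-cycle count; with width=2 it reproduces the issue times 1, 1, 2, 2, 3, 4 and the 7-cycle total.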

8. Explain the concepts of pipelining and superscalar execution with suitable examples. Also explain their individual merits and demerits.

Pipelining and superscalar execution are two forms of implicit instruction-level parallelism built into the design of modern microprocessors to increase their instruction throughput.

Pipelining: The main idea of instruction pipelining is to divide the instruction cycle into a series of steps (stages), each performing a set of micro-operations. Rather than completing one instruction before starting the next, the processor executes different stages of different instructions concurrently, using separate circuitry for each stage. Pipelining enables faster execution by overlapping the various stages of instruction execution (fetch, schedule, decode, operand fetch, execute, store, among others).

To illustrate how instruction pipelining executes instructions, consider the execution of the following code fragment:

load R1, @1000

load R2, @1008

add R1, @1004

add R2, @100C

add R1, R2

store R1, @2000

Pipelined Execution (the numbered columns are clock cycles)

Clock cycle      1   2   3   4   5   6   7   8   9
load R1, @1000   IF  ID  OF
load R2, @1008       IF  ID  OF
add R1, @1004            IF  ID  OF  E
add R2, @100C                IF  ID  OF  E
add R1, R2                       IF  ID  NOP E
store R1, @2000                      IF  ID  NOP WB

As the example shows, pipelining overlaps the execution of the various stages of different instructions.

Advantage of Pipelining:

The cycle time of the processor is reduced, and overlapping the execution stages of different instructions increases the overall instruction throughput.

Disadvantages of Pipelining:

Design Complexity: Pipelining involves adding hardware to the chip.

Inability to run the pipeline continuously at full speed because of pipeline hazards, such as data dependency, resource dependency and branch dependency, which disrupt the smooth execution of the pipeline.

Superscalar Execution: Superscalar execution is an advanced form of pipelined instruction-level parallelism that dispatches multiple instructions to multiple pipelines, which are processed concurrently by redundant functional units on the processor. Superscalar execution can provide more cost-effective performance because it increases the degree of overlap in the concurrent execution of multiple instructions.

To illustrate how superscalar execution processes instructions, consider the execution of the previous code fragment on a processor with two pipelines and the ability to issue two instructions simultaneously.

Superscalar Execution (the numbered columns are clock cycles)

Clock cycle      1   2   3   4   5   6   7
load R1, @1000   IF  ID  OF
load R2, @1008   IF  ID  OF
add R1, @1004        IF  ID  OF  E
add R2, @100C        IF  ID  OF  E
add R1, R2               IF  ID  NOP E
store R1, @2000              IF  ID  NOP WB

Advantage of Superscalar Execution:

Since the processor accepts multiple instructions per clock cycle, superscalar execution results in better performance than a single pipeline.

Disadvantages of Superscalar Execution:

Design Complexity: The design of superscalar processors is even more complex than that of single-pipeline processors.

Inability to run the pipelines continuously at full speed because of pipeline hazards, such as data dependency, resource dependency and branch dependency, which disrupt the smooth execution of the pipelines.

The performance of superscalar architectures is limited by the available instruction-level parallelism and by the ability of the processor to detect and schedule concurrent instructions.

9. Though superscalar execution seems to be simple and natural, there are a number of issues to be resolved. Elaborate on the issues that need to be resolved.

Superscalar execution is an advanced form of pipelined instruction-level parallelism that dispatches multiple instructions to multiple pipelines, which are processed concurrently by redundant functional units on the processor. Superscalar execution can provide more cost-effective performance because it increases the degree of overlap in the concurrent execution of multiple instructions. Since superscalar execution exploits multiple instruction pipelines, it seems to be a simple and natural means of improving performance. However, the following issues need to be resolved to achieve the expected performance improvement:

a. Pipeline Hazards

b. Out of Order Execution

c. Available Instruction Level Parallelism

Pipeline Hazards: A pipeline hazard is a situation that prevents the pipeline from running continuously at full speed because of a pipeline dependency: data dependency (causing a data hazard), resource dependency (causing a structural hazard) or branch dependency (causing a control or branch hazard).

Data Hazards: Data hazards occur when instructions that exhibit data dependence modify data in different stages of a pipeline. Ignoring potential data hazards can result in race conditions. There are three situations in which a data hazard can occur:

read after write (RAW), called true dependency

write after read (WAR), called anti-dependency

write after write (WAW), called output dependency

As an example, consider the superscalar execution of the following two instructions i1 and i2, with i1 occurring before i2 in program order.

True dependency: i2 tries to read R2 before i1 writes to it
i1. R2 ← R1 + R3
i2. R4 ← R2 + R3

Anti-dependency: i2 tries to write R5 before it is read by i1
i1. R4 ← R1 + R5
i2. R5 ← R1 + R2

Output dependency: i2 tries to write R2 before it is written by i1
i1. R2 ← R4 + R7
i2. R2 ← R1 + R3
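A data hazard between two instructions can be detected by comparing their register sets. The sketch below is a hypothetical helper (the name classify and the tuple encoding are assumptions, not part of any real tool): each instruction is written as a destination register and a list of source registers, and the function reports which of the three hazard types exist when i1 precedes i2. It classifies the three examples above.

```python
def classify(i1, i2):
    """Data hazards between i1 and a later instruction i2.

    Each instruction is (destination register, [source registers]),
    e.g. R2 <- R1 + R3 is written ("R2", ["R1", "R3"]).
    """
    d1, s1 = i1
    d2, s2 = i2
    hazards = []
    if d1 in s2:                 # i2 reads what i1 writes
        hazards.append("RAW (true dependency)")
    if d2 in s1:                 # i2 writes what i1 reads
        hazards.append("WAR (anti-dependency)")
    if d1 == d2:                 # both write the same register
        hazards.append("WAW (output dependency)")
    return hazards


print(classify(("R2", ["R1", "R3"]), ("R4", ["R2", "R3"])))  # ['RAW (true dependency)']
print(classify(("R4", ["R1", "R5"]), ("R5", ["R1", "R2"])))  # ['WAR (anti-dependency)']
print(classify(("R2", ["R4", "R7"]), ("R2", ["R1", "R3"])))  # ['WAW (output dependency)']
```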

Structural Hazards: A structural hazard occurs when a part of the processor's hardware is needed by two or more instructions at the same time. A popular example is a single memory unit that is accessed both in the fetch stage, where an instruction is retrieved from memory, and in the memory stage, where data is written to and/or read from memory.

Control Hazards: Branching hazards (also known as control hazards) occur with branches. In many instruction pipelines, the processor does not know the outcome of a branch when it needs to insert a new instruction into the pipeline (normally in the fetch stage).

Dependencies of the above types must be resolved before instructions are issued simultaneously. Pipeline bubbling (also known as a pipeline break or pipeline stall) is a general strategy for preventing all three kinds of hazards. As instructions are fetched, control logic determines whether a hazard will occur. If so, the control logic inserts NOPs into the pipeline. Thus, before the next instruction (which would cause the hazard) is executed, the previous one has had sufficient time to complete, and the hazard is prevented.
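The stall-insertion idea can be sketched as follows. This is a simplified model, not real control logic: it assumes one instruction issues per cycle and that a result can be read a fixed number of cycles (`latency`) after its producer issues, and it detects only RAW data hazards. The function name insert_bubbles and the tuple encoding are hypothetical.

```python
def insert_bubbles(program, latency):
    """Return the issue sequence with NOPs (bubbles) inserted.

    program: list of (text, destination register or None, source registers).
    A result can be read `latency` cycles after its producer issues
    (an assumed, fixed latency). Only RAW data hazards are detected.
    """
    ready_at = {}     # register -> first cycle its new value can be read
    issued = []
    cycle = 0
    for text, dest, sources in program:
        needed = max((ready_at.get(r, 0) for r in sources), default=0)
        while cycle < needed:          # hazard detected: stall with a bubble
            issued.append("NOP")
            cycle += 1
        issued.append(text)
        if dest is not None:
            ready_at[dest] = cycle + latency
        cycle += 1
    return issued


# The true-dependency example from the text: i2 reads R2, which i1 writes.
program = [("R2 <- R1 + R3", "R2", ["R1", "R3"]),
           ("R4 <- R2 + R3", "R4", ["R2", "R3"])]
print(insert_bubbles(program, latency=3))
# ['R2 <- R1 + R3', 'NOP', 'NOP', 'R4 <- R2 + R3']
```

With latency=1 no bubble is needed, because the result is ready in the very next cycle.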

A variety of specific strategies are also available for handling the different pipeline hazards. Examples include branch prediction for handling control hazards and out-of-order execution for handling data hazards.

Handling pipeline hazards has two implications. First, since the resolution is done at runtime, it must be supported in hardware, and the complexity of this hardware can be high. Second, the amount of instruction-level parallelism in a program is often limited and depends on the coding technique.

Out of Order Execution: The ability of a processor to detect and schedule concurrent instructions is critical to superscalar performance. As an example, consider the execution of the following code fragment on a processor with two pipelines and the ability to issue two instructions simultaneously.

1. load R1, @1000

2. add R1, @1004

3. load R2, @1008

4. add R2, @100C

5. add R1, R2

6. store R1, @2000

In the above code fragment, there is a data dependency between the first two instructions, load R1, @1000 and add R1, @1004. Therefore, these instructions cannot be issued simultaneously. However, if the processor has the ability to look ahead, it will realize that it is possible to schedule the third instruction, load R2, @1008, together with the first instruction, load R1, @1000. In the next issue cycle, instructions two and four (add R1, @1004 and add R2, @100C) can be scheduled, and so on.

However, the processor needs the ability to issue instructions out of order to accomplish the desired reordering. As this example illustrates, the parallelism available with in-order issue of instructions can be highly limited. Most current microprocessors are capable of out-of-order issue and completion.
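The look-ahead described above can be sketched as a greedy issue model. This is a simplified sketch under stated assumptions: an instruction may issue once every earlier instruction it depends on (RAW, WAR or WAW on a register) has issued in an earlier cycle, at most two instructions issue per cycle, and the stage counts follow the superscalar execution tables earlier in this module (three stages for a load, four for the other instructions). The names FRAGMENT_2, depends and schedule are hypothetical.

```python
# Each instruction: (text, registers written, registers read, number of stages).
FRAGMENT_2 = [
    ("load R1, @1000", {"R1"}, set(), 3),
    ("add R1, @1004", {"R1"}, {"R1"}, 4),
    ("load R2, @1008", {"R2"}, set(), 3),
    ("add R2, @100C", {"R2"}, {"R2"}, 4),
    ("add R1, R2", {"R1"}, {"R1", "R2"}, 4),
    ("store R1, @2000", set(), {"R1"}, 4),
]


def depends(earlier, later):
    """True if `later` has a RAW, WAR or WAW dependency on `earlier`."""
    _, w1, r1, _ = earlier
    _, w2, r2, _ = later
    return bool(w1 & r2 or r1 & w2 or w1 & w2)


def schedule(program, width, out_of_order):
    """Greedy issue of up to `width` instructions per cycle. An instruction is
    ready once every earlier instruction it depends on issued in an earlier
    cycle. In-order issue stops at the first instruction that cannot issue;
    out-of-order issue looks ahead past it. Returns 1-based issue cycles."""
    issue = [None] * len(program)
    cycle = 0
    while None in issue:
        cycle += 1
        issued_now = 0
        for j, instr in enumerate(program):
            if issue[j] is not None:
                continue
            ready = all(issue[i] is not None and issue[i] < cycle
                        for i in range(j) if depends(program[i], instr))
            if ready and issued_now < width:
                issue[j] = cycle
                issued_now += 1
            elif not out_of_order:
                break
    return issue


def total_cycles(program, issue):
    return max(t + instr[3] - 1 for t, instr in zip(issue, program))


for ooo in (False, True):
    issue = schedule(FRAGMENT_2, width=2, out_of_order=ooo)
    print("out-of-order" if ooo else "in-order", issue,
          total_cycles(FRAGMENT_2, issue))
# in-order [1, 2, 2, 3, 4, 5] 8
# out-of-order [1, 2, 1, 2, 3, 4] 7
```

With look-ahead, the loads are paired in the first cycle and the two memory adds in the second, which is exactly the schedule of the better-ordered fragment.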

Available Instruction Level Parallelism: The performance of superscalar

architectures is also limited by the available instruction level parallelism. As an

example, consider the execution of the following code fragment on a processor

with two pipelines and the ability to simultaneously issue two instructions.

load R1, @1000

add R1, @1004

add R1, @1008

add R1, @100C

store R1, @2000

Superscalar Execution

Instruction Pipeline Stages

load R1, @1000 IF ID OF

add R1, @1004 IF ID OF E

add R1, @1008 IF ID OF E

add R1, @100C IF ID OF E

store R1, @2000 IF ID NOP WB

Clock Cycles 1 2 3 4 5 6 7

For simplicity of discussion, let us ignore the pipelining aspects of the example and focus on its execution aspects. Assuming two execution units (multiply-add units), the following execution trace shows that there are several zero-issue cycles (cycles in which a floating-point unit is idle).

Clock cycle   Exe. Unit 1   Exe. Unit 2   Utilization
4             -             -             Vertical waste
5             E             E             Full issue slot
6             E             -             Horizontal waste
7             -             -             Vertical waste

These are essentially wasted cycles from the point of view of the execution units. If, during a particular cycle, no instructions are issued on the execution units, it is referred to as vertical waste; if only some of the execution units are used during a cycle, it is termed horizontal waste. In the example, there are two cycles of vertical waste and one cycle of horizontal waste. In all, only three of the eight available issue slots (4 cycles × 2 execution units) are used for computation. This implies that the code fragment will yield no more than three-eighths of the peak rated FLOP count of the processor.
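The classification of issue slots into vertical and horizontal waste can be expressed directly. The sketch below encodes the execution-unit trace from the table above as hypothetical Python data (True means the unit executes an instruction in that cycle) and computes the fraction of issue slots actually used; the names TRACE and slot_kind are illustrative.

```python
# Execution-unit trace from the table above: cycle -> [unit 1 busy, unit 2 busy]
TRACE = {4: [False, False], 5: [True, True], 6: [True, False], 7: [False, False]}


def slot_kind(busy):
    """Classify one cycle of the trace."""
    if not any(busy):
        return "vertical waste"
    if all(busy):
        return "full issue slot"
    return "horizontal waste"


for cycle, busy in TRACE.items():
    print(cycle, slot_kind(busy))

used = sum(sum(busy) for busy in TRACE.values())    # busy slots (True counts as 1)
slots = sum(len(busy) for busy in TRACE.values())   # cycles x execution units
print(f"{used} of {slots} slots used -> at most {used / slots:.3f} of peak")
# 3 of 8 slots used -> at most 0.375 of peak
```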

In short, though superscalar execution seems to be a simple and natural means of improving performance, the resources of superscalar processors are often heavily under-utilized because of limited parallelism, resource dependencies, or the inability of the processor to extract parallelism.

10. The ability of a processor to detect and schedule concurrent instructions is critical to superscalar performance. Justify the statement with an example.

Superscalar execution is an advanced form of pipelined instruction-level parallelism that dispatches multiple instructions to multiple pipelines, which are processed concurrently by redundant functional units on the processor. Superscalar execution can provide more cost-effective performance because it increases the degree of overlap in the concurrent execution of multiple instructions. Since superscalar execution exploits multiple instruction pipelines, it seems to be a simple and natural means of improving performance. However, the ability of a processor to detect and schedule concurrent instructions is critical to superscalar performance.

To illustrate this point, consider the execution of the following two code fragments for adding four numbers on a processor with two pipelines and the ability to issue two instructions simultaneously.

Code Fragment 1:

1. load R1, @1000

2. load R2, @1008

3. add R1, @1004

4. add R2, @100C

5. add R1, R2

6. store R1, @2000

Consider the execution of the above code fragment for adding four numbers. The first and second instructions are independent and can therefore be issued concurrently. This is illustrated by the simultaneous issue of the instructions load R1, @1000 and load R2, @1008 at t = 1. The instructions are fetched and decoded, and their operands are fetched; these instructions terminate at t = 3. The next two instructions, add R1, @1004 and add R2, @100C, are also mutually independent, although they must be executed after the first two instructions. Consequently, they can be issued concurrently at t = 2, since the processor is pipelined. These instructions terminate at t = 5. The next two instructions, add R1, R2 and store R1, @2000, cannot be executed concurrently, since the result of the former (the contents of register R1) is used by the latter. Therefore, only the add instruction is issued at t = 3, and the store instruction is issued at t = 4. Note that the instruction add R1, R2 can be executed only after the previous two instructions have been executed. The instruction schedule is illustrated below.

Superscalar Execution of Code Fragment 1 (the numbered columns are clock cycles)

Clock cycle      1   2   3   4   5   6   7
load R1, @1000   IF  ID  OF
load R2, @1008   IF  ID  OF
add R1, @1004        IF  ID  OF  E
add R2, @100C        IF  ID  OF  E
add R1, R2               IF  ID  NOP E
store R1, @2000              IF  ID  NOP WB

Code Fragment 2:

1. load R1, @1000

2. add R1, @1004

3. load R2, @1008

4. add R2, @100C

5. add R1, R2

6. store R1, @2000

This code fragment is equivalent to code fragment 1: it also computes the sum of four numbers. In this code fragment, however, there is a data dependency between the first two instructions, load R1, @1000 and add R1, @1004. Therefore, these instructions cannot be issued simultaneously. However, if the processor has the ability to look ahead, it will realize that it is possible to schedule the third instruction, load R2, @1008, together with the first instruction, load R1, @1000. In the next issue cycle, instructions two and four (add R1, @1004 and add R2, @100C) can be scheduled, and so on.

However, the processor needs the ability to issue instructions out of order to accomplish the desired reordering. As this example illustrates, the parallelism available with in-order issue of instructions can be highly limited. Most current microprocessors are capable of out-of-order issue and completion.

11. Explain how VLIW processors can achieve cost-effective performance gains over a uniprocessor. What are their merits and demerits?

Microprocessor technology has recorded unprecedented growth over the past few decades. This growth has also unveiled various bottlenecks in achieving sustained performance gains. To alleviate these performance bottlenecks, microprocessor designers have explored a number of alternative architectural innovations involving implicit instruction-level parallelism, such as pipelining, superscalar architectures and out-of-order execution. All these implicit instruction-level parallelism approaches share the drawback of increased hardware complexity (higher cost, larger circuits, higher power consumption), because the processor must make all of the decisions internally for these approaches to work (for example, the scheduling of instructions and the determination of interdependencies).

Another architectural innovation for cost-effective performance gains is the Very Long Instruction Word (VLIW) processor. VLIW is a style of processor design that tries to achieve high levels of explicit instruction-level parallelism by executing long instruction words composed of multiple operations. The long instruction word, called a MultiOp, consists of multiple arithmetic, logic and control operations. The VLIW processor executes the operations within a MultiOp concurrently, thereby achieving instruction-level parallelism.

A VLIW processor allows programs to specify explicitly which instructions are to be executed in parallel. That is, a VLIW processor depends on the programs themselves for all decisions regarding which instructions are executed simultaneously and how conflicts are resolved. A VLIW processor relies on the compiler to resolve scheduling and interdependencies at compile time. Instructions that can be executed concurrently are packed into groups and passed to the processor as a single long instruction word (hence the name), to be executed on multiple functional units at the same time. This means that the compiler becomes much more complex, but the hardware is simpler than in many other approaches to parallelism.
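The compiler's job of packing independent operations into long instruction words can be sketched with a simple greedy packer in the style of list scheduling. This is an illustrative assumption, not the algorithm of any particular VLIW compiler: it ignores operation latencies and functional-unit types, and the names OPS and bundle are hypothetical. Applied to code fragment 2 from the previous question, it finds the same reordering that an out-of-order superscalar processor discovers at run time, but here it is done once, at compile time.

```python
# Operations of the four-number addition in the order of code fragment 2:
# (text, registers written, registers read).
OPS = [
    ("load R1, @1000", {"R1"}, set()),
    ("add R1, @1004", {"R1"}, {"R1"}),
    ("load R2, @1008", {"R2"}, set()),
    ("add R2, @100C", {"R2"}, {"R2"}),
    ("add R1, R2", {"R1"}, {"R1", "R2"}),
    ("store R1, @2000", set(), {"R1"}),
]


def bundle(ops, width):
    """Greedy compile-time packing into long instruction words.

    Each operation goes into the earliest word that (a) comes after every
    word holding an operation it depends on (RAW, WAR or WAW) and (b) still
    has a free slot. Unused slots are filled with explicit NOPs.
    """
    words, placed = [], []
    for j, (text, writes, reads) in enumerate(ops):
        earliest = 0
        for i in range(j):
            _, w, r = ops[i]
            if w & reads or r & writes or w & writes:
                earliest = max(earliest, placed[i] + 1)
        k = earliest
        while k < len(words) and len(words[k]) == width:
            k += 1
        if k == len(words):
            words.append([])
        words[k].append(text)
        placed.append(k)
    return [word + ["nop"] * (width - len(word)) for word in words]


for n, word in enumerate(bundle(OPS, width=2)):
    print(f"word {n}: " + " | ".join(word))
# word 0: load R1, @1000 | load R2, @1008
# word 1: add R1, @1004 | add R2, @100C
# word 2: add R1, R2 | nop
# word 3: store R1, @2000 | nop
```

Because the width (here 2) is baked into the compiled words, the same program would have to be recompiled for a processor with a different number of execution units, which is the compatibility problem listed under the disadvantages below.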

Advantages:

Since VLIW processors depend on compilers to resolve scheduling and interdependencies, the decoding and instruction issue mechanisms are simpler in VLIW processors.

Since scheduling and interdependencies are resolved at compile time, the compiler can potentially exploit more instruction-level parallelism, because it has a larger-scale view of the program than the limited instruction window that a superscalar processor examines when selecting parallel instructions. Furthermore, compilers can apply a variety of transformations to increase parallelism that are not available to a hardware issue unit.

The VLIW approach executes operations in parallel based on a fixed schedule determined when programs are compiled. Since the compiler determines the order of execution of operations (including which operations can execute simultaneously), the processor does not need scheduling hardware. As a result, VLIW CPUs offer significant computational power with less hardware complexity.

Disadvantages:

VLIW programs work correctly only when executed on a processor with the same number of execution units and the same instruction latencies as the processor they were compiled for, which makes it virtually impossible to maintain compatibility between generations of a processor family. For example, if the number of execution units in a processor increases between generations, the new processor will try to combine operations from multiple instructions in each cycle, potentially causing dependent instructions to execute in the same cycle. Similarly, changing instruction latencies between generations of a processor family can cause operations to execute before their inputs are ready or after their inputs have been overwritten, resulting in incorrect behaviour.

Since scheduling and interdependencies are resolved at compile time, the compiler lacks dynamic program state, such as the branch history buffer, that helps in making scheduling decisions. Since the static prediction mechanisms employed by the compiler may not be as effective as dynamic ones, the branch and memory predictions made by the compiler may not be accurate. Moreover, some runtime situations, such as stalls on data fetches caused by cache misses, are extremely difficult to predict accurately. This limits the scope and performance of static, compiler-based scheduling.


12. With an example, illustrate how memory latency can be a bottleneck in achieving peak processor performance. Also illustrate how cache memory can reduce this performance bottleneck.

The effective performance of a program on a computer relies not just on the speed of the processor, but also on the ability of the memory system to feed data to the processor. Two figures are often used to describe the performance of a memory system: latency and bandwidth.

The memory latency is the time that elapses between the memory beginning to transmit the data and the processor starting to receive the first byte. The memory bandwidth is the rate at which the processor receives data after it has started to receive the first byte. So if the latency of a memory system is $l$ seconds and the bandwidth is $b$ bytes per second, then the time it takes to transmit a message of $n$ bytes is $l + n/b$.

To illustrate the effect of memory system latency on system performance, consider a processor operating at 1 GHz (a clock period of $1/10^{9}\,\text{s} = 10^{-9}\,\text{s} = 1\,\text{ns}$) connected to a DRAM with a latency of 100 ns (no caches). Assume that the memory block size is one word. Also assume that the processor has two multiply-add units and is capable of executing four instructions in each cycle of 1 ns. The peak processor rating is therefore 4 GFLOPS ($10^{9}\ \text{cycles/s} \times 4\ \text{FLOPs/cycle} = 4 \times 10^{9}\ \text{FLOPS} = 4\ \text{GFLOPS}$). Since the memory latency is equal to 100 cycles and the block size is one word, every time a memory request is made, the processor must wait 100 cycles before it can process the data. If every floating-point operation needs one word fetched from memory (as in computing the dot product of two vectors, where each multiply-add uses a new pair of elements), the processor is limited to one floating-point operation every 100 ns, or a speed of 10 MFLOPS, a very small fraction of the peak processor rating.
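The arithmetic of this example can be checked with a few lines of Python. The constants restate the example's assumptions, plus one made explicit here: as in a dot product, every floating-point operation is assumed to need one word fetched from DRAM, so at most one FLOP completes per 100 ns access. The variable names are illustrative.

```python
CLOCK_HZ = 1e9            # 1 GHz, i.e. a 1 ns cycle
FLOPS_PER_CYCLE = 4       # two multiply-add units
DRAM_LATENCY_S = 100e-9   # 100 ns per access, no cache, one-word blocks

peak = CLOCK_HZ * FLOPS_PER_CYCLE
# Assumption (dot-product case): every FLOP needs one word from DRAM, so the
# processor completes at most one FLOP per memory access.
latency_bound = 1 / DRAM_LATENCY_S

print(f"peak rating:   {peak / 1e9:.0f} GFLOPS")            # 4 GFLOPS
print(f"latency-bound: {latency_bound / 1e6:.0f} MFLOPS")   # 10 MFLOPS
print(f"fraction:      {latency_bound / peak:.2%}")         # 0.25%
```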

This example highlights how a longer memory latency (and hence a larger speed mismatch between memory and CPU) can be a bottleneck in achieving peak processor performance.

One of the architectural innovations in memory system design for reducing the mismatch between processor and memory speeds is the introduction of a smaller and faster cache memory between the processor and the memory. The cache acts as low-latency, high-bandwidth storage.

The data needed by the processor is first fetched into the cache. All subsequent accesses to data items residing in the cache are serviced by the cache. Thus, in principle, if a piece of data is used repeatedly, the effective latency of this memory system can be reduced by the cache.

To illustrate the impact of caches on memory latency and system performance, consider a 1 GHz processor with a 100 ns latency DRAM. Assume that the memory block size is one word and that a 32 KB cache memory with a latency of 1 ns is available. Assume that this setup is used to multiply two matrices A and B of dimensions 32 × 32. Fetching the two matrices into the cache from memory corresponds to fetching 2K words (one matrix has $32 \times 32 = 2^{5} \times 2^{5} = 2^{10}$ = 1K words, so two matrices have 2K words), which takes approximately 200 µs (at a memory latency of 100 ns per word, $2\text{K} \times 100\,\text{ns} \approx 2 \times 10^{3} \times 100\,\text{ns} = 2 \times 10^{5}\,\text{ns} = 200\,\mu\text{s}$). Multiplying two $n \times n$ matrices takes $2n^{3}$ operations. For our problem, this corresponds to 64K operations ($2 \times 32^{3} = 2 \times (2^{5})^{3} = 2^{16}$ = 64K), which can be performed in 16K cycles (or 16 µs) at four instructions per cycle ($64\text{K}/4 = 16\text{K}$ cycles $\approx 16{,}000\,\text{ns} = 16\,\mu\text{s}$). The total time for the computation is therefore approximately the sum of the time for the load/store operations and the time for the computation itself, i.e., 200 + 16 = 216 µs. This corresponds to a peak computation rate of 64K/216 µs, or 303 MFLOPS.

Note that this is a thirty-fold improvement over the previous example, although it is still less than 10% of the peak processor performance. This example illustrates that placing a small cache memory between the processor and the DRAM improves processor utilization considerably.
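The same estimate can be written as a short calculation. The sketch uses exact binary sizes (2K = 2048 words, 64K = 65536 operations) rather than the rounded 200 µs and 16 µs used above, so it gives about 296 MFLOPS instead of 303 MFLOPS; the conclusion (roughly thirty times the 10 MFLOPS obtained without a cache) is unchanged. Like the example, it assumes every word of A and B is fetched from DRAM exactly once and counts only the time to fetch them plus the compute time; the variable names are illustrative.

```python
N = 32                   # A and B are N x N matrices
LATENCY_NS = 100         # DRAM latency per one-word block
FLOPS_PER_CYCLE = 4      # two multiply-add units
CYCLE_NS = 1             # 1 GHz clock

words = 2 * N * N        # words fetched from DRAM: 2K
flops = 2 * N ** 3       # operations in the product: 64K

fetch_ns = words * LATENCY_NS                         # 204,800 ns (about 200 us)
compute_ns = flops / FLOPS_PER_CYCLE * CYCLE_NS       # 16,384 ns (about 16 us)
rate_mflops = flops / (fetch_ns + compute_ns) * 1e3   # FLOP per ns -> MFLOPS

print(f"{words} words, {flops} FLOPs, {rate_mflops:.0f} MFLOPS")
# 2048 words, 65536 FLOPs, 296 MFLOPS
```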

13. With a suitable example, illustrate the effect of memory bandwidth on improving processor performance.

Memory bandwidth refers to the rate at which data can be moved between the processor and memory. It is determined by the bandwidth of the memory bus as well as that of the memory units. The memory bandwidth of a system determines the rate at which data can be pumped to the processor, and it has a large impact on the realizable system performance.

One commonly used technique to improve memory bandwidth is to increase the size of the memory blocks. Since bigger blocks can effectively exploit spatial locality, increasing the block size helps hide memory latency.

To illustrate the effect of block size on hiding memory latency (and thus on system performance), consider a 1 GHz processor with a 100 ns latency DRAM. Assume that the memory block size (cache line) is one word, and that this setup is used to find the dot product of two vectors. Since the block size is one word, the processor takes 100 cycles to fetch each word. For each pair of words, the dot product performs one multiply-add, i.e., two FLOPs in 200 cycles. Therefore, the algorithm performs one FLOP every 100 cycles, for a peak speed of 10 MFLOPS.

Now consider what happens if the block size is increased to four words, i.e., the processor can fetch a four-word cache line every 100 cycles. For each pair of four-word lines, the dot product performs eight FLOPs in 200 cycles. This corresponds to one FLOP every 25 ns, for a peak speed of 40 MFLOPS. Note that increasing the block size from one to four words did not change the latency of the memory system. However, it increased the bandwidth four-fold.

The above example assumed a wide data bus equal to the size of the cache line. In practice, such wide buses are expensive to construct. In a more practical system, consecutive words are sent on the memory bus in subsequent bus cycles after the first word is retrieved. For example, with a 32-bit data bus, the first word is put on the bus after 100 ns (the associated latency), and one word is put on the bus in each subsequent bus cycle. This changes our calculations above slightly, since the entire cache line becomes available only after 100 + 3 cycles (taking a bus cycle to be one processor cycle). However, this does not change the execution rate significantly.

The above examples clearly illustrate how increased bandwidth results in higher peak computation rates.
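The block-size effect can be captured in a small model. This is a sketch under the example's assumptions: a 1 ns processor cycle, 100 cycles of latency per cache line, one line fetched from each vector in turn, and one multiply-add (2 FLOPs) per pair of words. The optional extra_cycles_per_word parameter (a hypothetical name) models the narrow bus that delivers the remaining words of a line one per bus cycle, taking a bus cycle to be one processor cycle.

```python
LATENCY_CYCLES = 100   # DRAM latency: 100 ns at 1 ns per processor cycle
CYCLE_NS = 1           # 1 GHz processor


def dot_product_mflops(block_words, extra_cycles_per_word=0):
    """Peak rate of a dot product limited by memory, in MFLOPS.

    One cache line of `block_words` words is fetched from each vector in
    turn; each pair of words gives one multiply-add (2 FLOPs).
    extra_cycles_per_word > 0 models a narrow bus that delivers the words
    after the first one per bus cycle (assumed equal to a processor cycle).
    """
    line_cycles = LATENCY_CYCLES + extra_cycles_per_word * (block_words - 1)
    cycles = 2 * line_cycles                     # one line from each vector
    flops = 2 * block_words                      # block_words multiply-adds
    return flops / (cycles * CYCLE_NS) * 1e3     # FLOP per ns -> MFLOPS


print(round(dot_product_mflops(1), 1))      # 10.0
print(round(dot_product_mflops(4), 1))      # 40.0
print(round(dot_product_mflops(4, 1), 1))   # 38.8: line ready after 100 + 3 cycles
```

The narrow-bus case loses only about 3% against the wide-bus case, which is why the text says the execution rate does not change significantly.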

14. Data reuse is critical to cache performance. Justify the statement with an example.

The effective performance of a program on a computer relies not just on the speed of the processor, but also on the ability of the memory system to feed data to the processor. Two figures are often used to describe the performance of a memory system: latency and bandwidth. Memory latency plays a major role in the speed mismatch between processor and memory. One of the architectural innovations in memory system design for reducing the mismatch between processor and memory speeds is the introduction of a smaller and faster cache memory between the processor and the memory. The data needed by the processor is first fetched into the cache. All subsequent accesses to data items residing in the cache are serviced by the cache. Thus, in principle, if a piece of data is used repeatedly, the effective latency of this memory system can be reduced by the cache. The fraction of data references satisfied by the cache is called the cache hit ratio of the computation on the system.

Data reuse, measured in terms of the cache hit ratio, is critical for cache performance: if each data item is used only once, it still has to be fetched from the DRAM once per use, and therefore the DRAM latency is paid for each operation.

To illustrate this, consider a 1 GHz processor with a 100 ns latency DRAM with a memory block size of one word. Assume that a 32 KB cache memory with a latency of 1 ns is available. Also assume that the processor has two multiply-add units and is capable of executing four instructions in each cycle of 1 ns. Assume that this setup is used to multiply two matrices A and B of dimensions 32 × 32. Fetching the two matrices into the cache from memory corresponds to fetching 2K words (one matrix has $32 \times 32 = 2^{5} \times 2^{5} = 2^{10}$ = 1K words, so two matrices have 2K words). Multiplying two $n \times n$ matrices takes $2n^{3}$ operations (this indicates data reuse, because the 2K data words are used in 64K operations). For our problem, this corresponds to 64K operations ($2 \times 32^{3} = 2 \times (2^{5})^{3} = 2^{16}$ = 64K). This results in a cache hit ratio of

$$\text{Hit ratio} = \frac{\text{total references} - \text{DRAM accesses}}{\text{total references}} = \frac{64\text{K} - 2\text{K}}{64\text{K}} = \frac{62}{64} \approx 0.97$$

(of the 64K references made by the matrix operations, only 2K require a memory access, and the remaining 62K are served from the cache).

A higher hit ratio results in a lower effective memory latency and higher system performance.

For example, in the earlier example of matrix multiplication, the peak performance of the system would be 4 GFLOPS at the rate of 4 FLOPs per clock cycle (a 1 GHz clock gives $10^9$ clock cycles per second). However, with a memory latency of 100 ns and no cache, every operand must be fetched from DRAM, so the processor can complete at most about one floating-point operation every 100 ns, i.e., a realizable performance of only 10 MFLOPS. In the presence of a cache memory of size 32 KB with a latency of 1 ns, the increase in the realizable peak performance can be illustrated with the matrix multiplication example. Fetching the two $32 \times 32$ matrices into the cache from memory corresponds to fetching 2K words (one matrix = $32 \times 32 = 2^5 \times 2^5 = 2^{10}$ = 1K words, i.e., 2 matrices = 2K words), which takes approximately 200 µs (2K words $\approx 2 \times 10^3$ words, and $2 \times 10^3 \times 10^2$ ns $= 2 \times 10^5$ ns = 200 µs). Multiplying two $n \times n$ matrices takes $2n^3$ operations. For our problem, this corresponds to 64K operations ($2 \times 32^3 = 2 \times (2^5)^3 = 2^{16}$ = 64K), which can be performed in 16K cycles, or about 16 µs, at four instructions per cycle (No. of cycles = 64K/4 = 16K cycles, and 16K × 1 ns ≈ 16 µs). The total time for the computation is therefore approximately the sum of the time for the load/store operations and the time for the computation itself, i.e., 200 + 16 = 216 µs. This corresponds to a peak computation rate of 64K/216 µs, or about 303 MFLOPS, roughly a 30-fold improvement over the 10 MFLOPS achievable without a cache. This performance improvement is due to data reuse, because the 2K data words are used in 64K operations.
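The time model above (load time plus compute time) can be sketched as follows. The constants come from the text; the function name `time_breakdown` is hypothetical. Note that using K = 1024 consistently gives a fetch time of 204.8 µs and a rate of about 296 MFLOPS; the text's 303 MFLOPS comes from rounding the fetch time of 2,048 words down to 200 µs.

```python
# Constants from the text: 100 ns per DRAM word, 1 ns cycle, 4 FLOPs per cycle.
DRAM_LATENCY_NS = 100
CYCLE_NS = 1
FLOPS_PER_CYCLE = 4

def time_breakdown(words: int, flops: int):
    """Return (fetch_us, compute_us, rate_mflops), assuming each word is loaded
    once into a cache large enough to hold all of them and then reused."""
    fetch_ns = words * DRAM_LATENCY_NS
    compute_ns = flops / FLOPS_PER_CYCLE * CYCLE_NS
    total_ns = fetch_ns + compute_ns
    rate_mflops = flops / total_ns * 1e3  # 1 FLOP per ns = 1000 MFLOPS
    return fetch_ns / 1e3, compute_ns / 1e3, rate_mflops

n = 32
fetch_us, compute_us, rate = time_breakdown(words=2 * n * n, flops=2 * n ** 3)
print(f"fetch {fetch_us:.1f} us + compute {compute_us:.1f} us -> {rate:.0f} MFLOPS")
```

The fetch term dominates (about 205 µs versus 16 µs), which is why reducing the number of DRAM accesses matters more here than raw FLOP throughput.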

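If each data item is used exactly once, every reference must be fetched from memory, so the number of memory accesses equals the total number of data references and no reference is satisfied by the cache. In our case (the dot-product example below, where 2K words are used in 2K operations), the hit ratio becomes

$$\text{Hit ratio} = \frac{2\text{K} - 2\text{K}}{2\text{K}} = 0$$

A lower hit ratio results in higher effective memory latency and lower system performance.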

For example, consider computing the dot product of the previous two matrices, treated as 1K-element vectors (instead of multiplying them). Now the total number of operations is $32 \times 32 \times 2 = 2^{11}$ = 2K (one multiply and one add for each element pair), which can be performed in 0.5K cycles, or about 0.5 µs, at four instructions per cycle (No. of cycles = 2K/4 = 0.5K cycles, and 0.5K × 1 ns ≈ 0.5 µs). The total time for the computation is therefore 200 + 0.5 = 200.5 µs. This corresponds to a peak computation rate of 2K/200.5 µs, or approximately 9.97 MFLOPS. This performance reduction is due to the absence of data reuse, as the 2K data words are used in only 2K operations.
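To contrast the two kernels side by side, the sketch below evaluates both under the same assumed model (each distinct word fetched once at 100 ns, then 4 FLOPs per 1 ns cycle). The `Kernel` class and `rate_mflops` function are illustrative names, not part of any library. The reuse factor (operations per fetched word) comes out as 32 for matrix multiplication and 1 for the dot product.

```python
from dataclasses import dataclass

@dataclass
class Kernel:
    name: str
    words: int  # distinct words fetched from DRAM
    flops: int  # floating-point operations performed on them

def rate_mflops(k: Kernel, latency_ns: float = 100.0, flops_per_ns: float = 4.0) -> float:
    """Realizable rate when every word is loaded once and then reused from cache."""
    total_ns = k.words * latency_ns + k.flops / flops_per_ns
    return k.flops / total_ns * 1e3  # FLOPs per ns -> MFLOPS

n = 32
kernels = [
    Kernel("matrix multiply", words=2 * n * n, flops=2 * n ** 3),
    Kernel("dot product", words=2 * n * n, flops=2 * n * n),
]
results = {}
for k in kernels:
    reuse = k.flops / k.words  # operations per fetched word
    results[k.name] = (reuse, rate_mflops(k))
    print(f"{k.name:15s} reuse = {reuse:2.0f} ops/word, rate = {results[k.name][1]:6.2f} MFLOPS")
```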

The above examples illustrate that data reuse, measured in terms of the cache hit ratio, is critical for cache performance.


15. The performance of a memory bound program is critically impacted by the cache hit ratio. Justify the statement with an example.

The effective performance of a program on a computer relies not just on the

speed of the processor, but also on the ability of the memory system to feed data

to the processor. There are two figures that are often used to describe the

performance of a memory system: the latency and the bandwidth. Memory latency plays the larger role in the speed mismatch between the processor and memory. One of the architectural innovations in memory system design for

reducing the mismatch in processor and memory speeds is the introduction of a

smaller and faster cache memory between the processor and the memory. The

data needed by the processor is first fetched into the cache. All subsequent

accesses to data items residing in the cache are serviced by the cache. Thus, in

principle, if a piece of data is repeatedly used, the effective latency of this

memory system can be reduced by the cache. The fraction of data references

satisfied by the cache is called the cache hit ratio of the computation on the

system. The effective computation rate of many applications is bounded not by

the processing rate of the CPU, but by the rate at which data can be pumped into

the CPU. Such computations are referred to as being memory bound. The

performance of memory bound programs is critically impacted by the cache hit

ratio.
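The definition of the hit ratio (the fraction of references satisfied by the cache) can be made concrete by replaying the operand reads of a kernel through a simulated cache. This is a sketch under assumptions not stated in the text: a fully associative LRU cache with one-word blocks, 4-byte words (so 32 KB holds 8K words), and only the reads of A and B counted. The function names are hypothetical.

```python
from collections import OrderedDict

def simulate_hit_ratio(trace, capacity_words):
    """Replay word addresses through a fully associative LRU cache (1-word blocks)."""
    cache = OrderedDict()
    hits = 0
    for addr in trace:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            cache[addr] = None             # miss: the word is fetched from DRAM
            if len(cache) > capacity_words:
                cache.popitem(last=False)  # evict the least recently used word
    return hits / len(trace)

def matmul_trace(n):
    """Operand reads of a naive i-j-k matrix multiply; B is stored after A."""
    for i in range(n):
        for j in range(n):
            for k in range(n):
                yield i * n + k            # A[i][k]
                yield n * n + k * n + j    # B[k][j]

def dot_trace(n):
    """Operand reads of an element-wise dot product of two n x n matrices."""
    for idx in range(n * n):
        yield idx                          # A[idx]
        yield n * n + idx                  # B[idx]

CAPACITY = 32 * 1024 // 4  # assumed 32 KB cache with 4-byte words -> 8K words
mm = simulate_hit_ratio(list(matmul_trace(32)), CAPACITY)
dp = simulate_hit_ratio(list(dot_trace(32)), CAPACITY)
print(f"matrix multiply hit ratio = {mm:.4f}")
print(f"dot product hit ratio     = {dp:.4f}")
```

Because both matrices fit in the cache, the only misses in the matrix multiply are the 2K first-time (compulsory) misses, so the simulated ratio matches 62K/64K; the dot product touches every word once and never hits.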

To illustrate this, consider a 1 GHz processor with a 100 ns latency DRAM. Assume that a cache memory of size 32 KB with a latency of 1 ns is available, and that this setup is used to multiply two matrices A and B of dimensions $32 \times 32$. Fetching the two matrices into the cache from memory corresponds to fetching 2K words (one matrix = $32 \times 32 = 2^5 \times 2^5 = 2^{10}$ = 1K words, i.e., 2 matrices = 2K words). Multiplying two $n \times n$ matrices takes $2n^3$ operations, which indicates data reuse because the 2K data words are used in 64K operations. For our problem, this corresponds to 64K operations ($2 \times 32^3 = 2 \times (2^5)^3 = 2^{16}$ = 64K). Since only 2K of the 64K references require a memory access, this results in a cache hit ratio of

$$\text{Hit ratio} = \frac{64\text{K} - 2\text{K}}{64\text{K}} = \frac{62\text{K}}{64\text{K}} \approx 0.97$$

A higher hit ratio results in lower effective memory latency and higher system performance.

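If each data item is used exactly once (for example, when the dot product of the same two matrices is computed, so that the 2K words are used in only 2K operations), every reference must be fetched from memory and no reference is satisfied by the cache. In this case, the hit ratio becomes

$$\text{Hit ratio} = \frac{2\text{K} - 2\text{K}}{2\text{K}} = 0$$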


A lower hit ratio results in higher effective memory latency and lower system performance.

The above examples illustrate that the performance of a memory bound program

is critically impacted by the cache hit ratio.
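One common way to quantify how the hit ratio $h$ translates into effective memory latency is the average access time $t_\text{eff} = h\,t_\text{cache} + (1-h)\,t_\text{DRAM}$. The sketch below assumes this simple form, in which a miss costs only the DRAM latency, with the 1 ns cache and 100 ns DRAM of the example; the function name is illustrative.

```python
def effective_latency_ns(hit_ratio: float, cache_ns: float = 1.0, dram_ns: float = 100.0) -> float:
    """Average access time: hits are served by the cache, misses by DRAM."""
    return hit_ratio * cache_ns + (1.0 - hit_ratio) * dram_ns

for h in (62 / 64, 0.0):  # matrix multiply vs. data used only once
    print(f"hit ratio {h:.4f} -> effective latency {effective_latency_ns(h):6.2f} ns")
```

With $h \approx 0.97$ the average access takes about 4 ns, close to cache speed; with $h = 0$ every access costs the full 100 ns.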

16. How can locality of reference influence the performance gain of a processor?

Locality of reference (also known as the principle of locality) is the phenomenon in which the same or nearby memory locations are accessed frequently.

Two types of locality of reference have been observed: temporal locality and spatial locality.

Temporal locality is the tendency for a program to reference the same memory location or cluster of locations several times during brief intervals of time. Temporal locality is exhibited by program loops, subroutines, stacks and variables used for counting and totalling.

Spatial locality is the tendency for a program to reference clustered locations in preference to randomly distributed locations. Spatial locality suggests that once a location is referenced, it is highly likely that nearby locations will be referenced in the near future. Spatial locality is exhibited by array traversals, sequential code execution, the tendency to reference stack locations in the vicinity of the stack pointer, etc.
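The effect of spatial locality can be illustrated by traversing the same row-major array in two orders and counting cache hits. The sketch assumes a small LRU cache of 8 lines with 4 words per line (sizes chosen only so the effect is visible) and a 32 × 32 array stored row by row; `line_hit_ratio` is a hypothetical helper.

```python
from collections import OrderedDict

def line_hit_ratio(addresses, line_words=4, num_lines=8):
    """LRU cache of num_lines lines, each holding line_words consecutive words."""
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        line = addr // line_words          # words in the same line share a tag
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark line as most recently used
        else:
            cache[line] = None             # miss: fetch the whole line
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)

N = 32  # element (i, j) of the N x N array lives at address i*N + j (row-major)
row_order = [i * N + j for i in range(N) for j in range(N)]  # consecutive addresses
col_order = [i * N + j for j in range(N) for i in range(N)]  # stride-N addresses

row = line_hit_ratio(row_order)
col = line_hit_ratio(col_order)
print(f"row-wise traversal hit ratio    = {row:.2f}")
print(f"column-wise traversal hit ratio = {col:.2f}")
```

Row-wise traversal misses once per 4-word line and then hits three times (hit ratio 0.75); column-wise traversal jumps 32 words at a time, so with only 8 lines each line is evicted before its neighbours are used and every access misses.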

Locality of reference is one type of predictable program behaviour, and programs that exhibit strong locality of reference are great candidates for performance optimization through techniques such as caching and instruction prefetching, which can improve the effective memory bandwidth and hide memory latency.

Effect of Locality of Reference in Hiding Memory Latency Using Cache:

Both the spatial and temporal locality of reference exhibited by a program can improve the cache hit ratio, which hides memory latency and hence improves system performance.

To illustrate how the locality of reference can improve the system performance

by hiding memory latency through the use of cache, consider the following

example:

Consider a processor operating at 1 GHz (clock period $= 1/10^9$ s $= 10^{-9}$ s $= 1$ ns) connected to a DRAM with a latency of 100 ns (no caches). Assume that the size of the memory block is 1 word per block. Also assume that the processor has two multiply-add units and is capable of executing four instructions in each cycle of 1 ns. The peak processor rating is therefore 4 GFLOPS ($10^9$ clock cycles per second × 4 FLOPs per clock cycle $= 4 \times 10^9$ FLOPS = 4 GFLOPS). Since the memory latency is equal to 100 cycles and the block size is one word, every time a memory request is made, the processor must wait 100 cycles before it can process the data. That is, the processor is limited to one floating-point operation every 100 ns, or a speed of 10 MFLOPS, a very small fraction of the peak processor rating.
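The two rates in this example can be reproduced directly from the stated parameters. This sketch follows the text's simplification that, with one-word blocks and no cache, at most one FLOP completes per 100 ns memory access; the variable names are illustrative.

```python
CLOCK_GHZ = 1.0        # 1 GHz clock -> 1 ns cycle
FLOPS_PER_CYCLE = 4    # two multiply-add units
DRAM_LATENCY_NS = 100  # one-word blocks, no cache

peak_mflops = CLOCK_GHZ * FLOPS_PER_CYCLE * 1000  # 4 GFLOPS = 4000 MFLOPS
# Following the text: at most one FLOP per DRAM access of 100 ns.
latency_bound_mflops = 1000 / DRAM_LATENCY_NS     # 10 MFLOPS
fraction = latency_bound_mflops / peak_mflops

print(f"peak {peak_mflops:.0f} MFLOPS, realizable {latency_bound_mflops:.0f} MFLOPS "
      f"({fraction:.2%} of peak)")
```

The realizable rate is only 0.25% of peak, which is the gap the cache is meant to close.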

The performance of the above processor can be improved roughly 30-fold by incorporating a cache memory of size 32 KB, as illustrated below:

Assume that the size of the memory block is 1 word per block and that a cache memory of size 32 KB with a latency of 1 ns is available. Assume that this setup is used to multiply two matrices A and B of dimensions $32 \times 32$. Fetching the two matrices into the cache from memory corresponds to fetching 2K words (one matrix = $32 \times 32 = 2^5 \times 2^5 = 2^{10}$ = 1K words, i.e., 2 matrices = 2K words), which takes approximately 200 µs (memory latency = 100 ns, so fetching 2K words takes $2 \times 10^3 \times 100$ ns = 200,000 ns = 200 µs). Multiplying two $n \times n$ matrices takes $2n^3$ operations. For our problem, this corresponds to 64K operations ($2 \times 32^3 = 2 \times (2^5)^3 = 2^{16}$ = 64K), which can be performed in 16K cycles (or about 16 µs) at four instructions per cycle (64K/4 = 16K cycles ≈ 16,000 ns = 16 µs). The total time for the computation is therefore approximately the sum of the time for the load/store operations and the time for the computation itself, i.e., 200 + 16 = 216 µs. This corresponds to a peak computation rate of 64K/216 µs, or about 303 MFLOPS (roughly a 30-fold improvement over 10 MFLOPS).

Effect of Locality of Reference in Improving the Memory Bandwidth:

Locality of reference also helps improve the effective memory bandwidth by allowing larger blocks to be brought into the cache with each memory access, as illustrated in the following example:

Consider again a memory system with a single-cycle cache and a 100-cycle latency DRAM, with the processor operating at 1 GHz. If the block size is one word, the processor takes 100 cycles to fetch each word. If we use this setup to find the dot product of two vectors, then for each pair of words the dot product performs one multiply-add, i.e., two FLOPs. Therefore, the algorithm performs one FLOP every 100 cycles, for a peak speed of 10 MFLOPS. Now let us consider what happens if the block size is increased to four words, i.e., the processor can fetch a four-word cache line every 100 cycles. Assuming that the vectors are laid out

linearly in memory, eight FLOPs (four multiply-adds) can be performed in 200