Documente Academic

Documente Profesional

Documente Cultură

4 - 4 - 16. Turing Machines (34 Min.)

Încărcat de

Lalalalalala0 evaluări0% au considerat acest document util (0 voturi)

18 vizualizări13 paginiWe begin to study the other side of automata theory, the part that lets us show certain tasks are impossible, or in some cases, possible but intractable. We're going to meet the touring machine which in some sense the ultimate automaton. It is a formal computing model that can, can compute anything we can do with the computer or with any other realistic model that we might think of as computing.

Descriere originală:

Drepturi de autor

© © All Rights Reserved

Formate disponibile

TXT, PDF, TXT sau citiți online pe Scribd

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentWe begin to study the other side of automata theory, the part that lets us show certain tasks are impossible, or in some cases, possible but intractable. We're going to meet the touring machine which in some sense the ultimate automaton. It is a formal computing model that can, can compute anything we can do with the computer or with any other realistic model that we might think of as computing.

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca TXT, PDF, TXT sau citiți online pe Scribd

0 evaluări0% au considerat acest document util (0 voturi)

18 vizualizări13 pagini4 - 4 - 16. Turing Machines (34 Min.)

Încărcat de

LalalalalalaWe begin to study the other side of automata theory, the part that lets us show certain tasks are impossible, or in some cases, possible but intractable. We're going to meet the touring machine which in some sense the ultimate automaton. It is a formal computing model that can, can compute anything we can do with the computer or with any other realistic model that we might think of as computing.

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca TXT, PDF, TXT sau citiți online pe Scribd

Sunteți pe pagina 1din 13

We're not changing the subject matter

considerably. Until now, I've been talking

about simple models, such as finite

automata, or context free grammars, that

let us model mechanisms like communication

protocols, or processes like the parsing

of programming languages. Today we begin

to study the other side of automata

theory, the part that lets us show certain

tasks are impossible, or in some cases,

possible but intractable. That is, they

can be solved but only by very slow

algorithms. It might not be obvious but

just because we can state a problem

doesn't mean there is any algorithm at all

that can solve it. For example, can you

devise an algorithm to tell whether the

complement of a context free language is

empty? That is, does a grammar generate

all strings over its terminal alphabet? It

turns out you can't. And we're going to

learn the theory that lets us prove facts

of this kind. We begin with the central

theme of the study. Of what can be solved

by computer algorithms. The idea that all

data can be represented by integers. The

idea of countable sets. Those whose

members can be assigned integers one, two,

three, and so on, is essential here. We're

going to meet the touring machine which in

some sense the ultimate automaton. It is a

formal computing model that can, can

compute anything we can do with the

computer or with any other realistic model

that we might think of as computing.

Turing machines define the class of

recursively innumerable languages which is

thus the largest class of languages about

which we can compute anything. There's

another smaller class called the recursive

languages that can be thought of as

modeling algorithms. Computer programs

that answer a particular question and then

finish. I realize the concepts are vague

at this point, but we'll get to it all in

good time. If you took a modern course on

programming you're undoubtedly introduced

to the importance of data types or

classes. >> But when you look under the

hood, how these types are represented

inside the computer. You see that there is

only one type. Strings of bits. We could

base our whole theory on strings of bits,

but it is more convenient to convert

binary strings to integers. We can almost

see how to do that since integers can be

represented in binary notation, but there

is a small glitch we'll get to. And to

make the idea that all the world is

integers even more pervasive, remember

that programs that computers execute are

also, under the hood, just strings of 0's

and 1's, they therefore can be regarded as

integers. That lets up talk about the

hundredth program and things like that. As

we shall see, the fact that programs and

data are at heart the same thing is what

let's us build a powerful theory about

what is not computable. As a simple

example of a type that is really integers,

let's look at character strings. Strings

of ASCII characters can be thought of as

binary strings, eight bits to a character.

Or if you use Unicode, you need sixteen

bits per character, but the idea is the

same. Strings in any alphabet can be

represented by binary strings. And binary

strings are really integers. Does it make

sense to talk about the ith string in any

i. However, we have to be careful how we

do the conversion from binary strings to

integers. Because binary strings can have

leading zeroes, there is not a unique

representation of an integer as a binary

string. More to the point, if you do the

obvious conversion, then strings like the

ones shown, all seem to be the integers

five. But we can't call two or more

different strings by the same integer.

However, there is a correspondence between

binary strings and integers that is one to

one, and almost as simple. Put a one in

front of any binary string, and then treat

it as an integer. This way, no two strings

can correspond to the same integer. For

example, if we're given string 1-0-1. Then

put a one in front of it, and treat 1101

as a binary integer. And you'll get

thirteen, by the way. If you put a one in

front of 0101, then this integer is 21,

and so on. For a wilder example consider

images as a data type. There are many

representations of images. Let's talk

about gift for a [inaudible] example. A

gift file is just an ASCII string. So we

know how to convert ASCII to binary. And

then cover the binary string into an

integer in the way we just discussed. And

bingo, you have a notion of the

[inaudible] image. Here's another wild

example of proofs. A proof can be viewed

as a sequence of expressions or assertions

in mathematical form, each of which is,

are the given or follow some previous

statements in the sequence per certain

logical rules. We can encode mathematical

expressions in Unicode, it has pretty much

any symbol you use in mathematics. But we

can convert any expression to a binary

string, and hence to an integer. There is,

again, a small glitch. A proof is a

sequence of expressions, not a single

expression. So we need to fix on a way to

separate a sequence of expressions. We

also need to indicate whether an

expression is given, or follows from

previous expressions. We thus need to

introduce two new symbols into our

strings. One is a comma to separate

expressions. We can't just use the Unicode

comma, because the expression themselves

may use this symbol. The other is a marker

that says this is a given expression. The

following technique is useful not only

here but in general as a way to introduce

new symbols with any meaning into binary

strings while still keeping the strings

binary. First, given a binary string,

expand it by inserting a zero in front of

every byte of the string. For example. 101

becomes 01 that's the first one, 00 that's

the zero in the middle, and then 01. Now

as a consequence of this change strings

have no consecutive 1s. Use strings of two

or more 1s as the new symbols, for example

we'll use 111 to mark a given expression.

And one, one, as the marker for the end of

an expression. Here's an example of what a

proof as a binary string could look like.

The initial one, one, one, can only mean

that expression to follow is a given

expression. Here is the expression itself.

It was originally one zero one but we

expanded it with a zero in front of each

symbol. Of course one zero one is too

short to really be an expression since

even one unit code character takes sixteen

bytes, but not let's, let's not worry

about that. This one, one marks the end of

the first expression. Notice that this one

cannot be part of a special marker since

all expressions without the special

markers have even length and this one is

the sixth symbol in the expression. Here's

another given marker and another

expression. This one was zero, zero one,

one with zeros embedded. And another

expression ender and so on. As we said,

programs in a language like C or Java are

also a data type and can be represented by

integers as if they were data. First,

represent the program as an ASCII

[inaudible], or in some other alphabet

like Unicode, if the language requires it.

And then convert the character string to a

binary string, and then to an integer. Now

we can talk about the [inaudible] program.

And this should worry, there really aren't

more programs than there are integers.

While that number is infinite, you may be

aware that there are different orders of

infinity, and the integers of the smallest

one. For example, there are more real

numbers than there are integers, so there

are more real numbers than there are

programs. That immediately tells you that

some real numbers cannot be computed by

programs. We'll get to more concrete

explanation of what the [inaudible]

program really means shortly. We have an

intuitive notion of what a set of

[inaudible] are intimate. It says a finite

if there is a particular integer that is

the count of numbers on the set. The term

for the count of the number of members is

cardinality. For example, a set containing

A, B, and C is a finite set, and its

cardinality is three. And the formal

definition of a finite set is one for

which it is impossible to find a one to

one correspondence between the members of

the set, and a proper subset of that set.

Well, actually, the formal definition of

an infinite set is one for which there is

a one to one correspondence between its

members and a proper subset. Then. Finite

sets are formally the sets that are not

infinite. For example, the positive

integers are an infinite set. We can let

the proper subset be the even integers.

And the one to one correspondence matches

one with two, two with four, three with

six, and so on. Every positive integer I

is matched with the unique even integer,

2I. A countable set has a one to one

correspondence with the positive integers.

For example, the set of all integers,

positive and negative, is countable. A

correspondence maps zero to one minus I to

plus 2I for all I equal to or greater than

one, and plus I to 2I plus one for all I

equal to or greater than one. As a

consequence, the positive and negative

integers get [inaudible] in the ordering

as 0-1, 1-2, two and so on. The binary

strings are also countable. We saw how to

assign a unique positive integer to each

binary string. By putting a one in front.

And treating the result as a binary

integer. Likewise, the Java programs can

be put in one-to-one correspondence with

binary strings, and then with integers.

Many of these integers represent flawed

Java programs, things that don't compile.

But that is not important. The important

thing is that every working Java program

has you, a unique integer. A rather

surprising point is that pairs of integers

can be put in one-to-one correspondence

with the integers themselves. A simple way

to see this is to explain the ordering of

the pair as first pair, second pair and so

on. The ordering we want is first by sum

and then for pairs with the same sum by

first component. Thus one, one comes

first. It is the only pair with a sum of a

two. There are two pairs with a sum of

three, these guys. And we put two one

ahead of one two because it has the higher

first component. Then come three pairs

with sum four. These three, again ordered

by the first component. You can, in fact,

figure out a function F of IJ, such as the

pair of IJ is the F of IJ in that order.

But the important point is that some such

function exists. And therefore, it makes

sense to talk about the [inaudible] pair

of integers. We call the one to one

correspondence between a countable set and

the positive integers and enumeration of

the set. Thus, strings, programs, proofs,

and pairs of integers, for example, have

enumerations. Now, let's look at the set

of all languages over some fixed alphabet,

say, 01. Could this set be countable? The

answer is no and we can prove it. Suppose

that we are possible to innumerate the

languages so every language over zero one.

Was the ith language for some i. We

already know how to enumerate binary

strings. So we can talk about the ith

binary string. Now, I'm going to define a

language L. L is the language containing

those binary strings W such that W is the

Ith binary string. That must be true for

some I. And W is not in the Ith language.

L is really a language over alphabet 01.

Since we assume that the languages over

zero one are innumerable. That means that

for some j. L is the j language. So let X

be the J string. Surely some string is the

J in the enumeration of binary strings.

Now we can ask is X in L? It turns out

that if it is, then it isn't and vice

versa. To see why, remember the definition

of L. L contains W if and only if W is

not. In the language that corresponds to

the same integer I that W corresponds to

as a string. So let's focus on the case

where W is X, the Jth string. In that

case, the value of I is J because we know

X is the Jth string. Further we know that

L is the Jth language so both L, the

language being defined, and the quote Ith

language mentioned in the definition of L.

Are all the same. And they are L J. Thus

when we read the definition of L as a

reply is to X, it says, that X as in L,

which is Ls of J. If and only if, X is not

in L, again, which is Ls of J. We have a

contradiction. X is neither in L nor not

in L. That's an impossible situation. We

conclude that our assumptions are wrong.

The only unproved assumption we made. Was

that we could innumerate the languages

over zero one. So that is false. We can't

enumerate such languages. As a result, we

see that there are more languages than

programs, since the programs can be

enumerated. A particularly bad consequence

of this fact is that there are languages

for which no program can tell whether or

not a given string is in the language. On

the bright side, none of these strange

languages are context free or therefore

not regular, Since the CYK algorithm gives

us a membership testing program for any

context-free language. The process of

coming up with the language L that can't

be in any enumeration is called

diagonilization. The reason should be

apparent from this picture. Imagine an

infinite matrix in which the rows

correspond to languages, and the columns

to binary strings. A one in the entry for

row I in column J means that the Jth

string is in the Ith language. Zero means

it is not. If we could innumerate

languages then we could create such a

table. Look at the entries along the

diagonal and complement each one. That is,

replace zero by one and vice versa. Here's

what it looks like. A complement in

diagonal rotated 45 degrees looks like a

language. It tells which strings are its

members. Here strings one and two are not

in the language, the third and fourth are

and so on. But this language cannot be any

row because it disagrees with each row in

at least one position. In particular it

disagrees with the ith row in the ith

position. We're now ready to look at the

theory of Turing Machines. One important

purpose of this theory is to prove certain

particular languages to have no membership

algorithm. The first step is to prove

certain languages about Turing Machines

themselves, not to have membership

algorithms. We're then going to introduce

the important notion of a reduction. From

one problem to another. These are proved

through the form. If there is an algorithm

for problem P than there is an algorithm

for problem Q. We'll draw this as Q is

reduced to P. If we already know that

there's no algorithm for q, then how could

there be a algorithm for p. Because, if

there were an algorithm for p, then this

reduction would give us an algorithm for

q. By use of reductions, we don't have to

resort to diagonalization or another trick

to prove that P has no algorithm. Here is

the picture of a Turning Machine which you

should keep in mind. There is a state

which like all other automata can only be

in one of a finite number of states. There

is a tape, this. Infinite in both

directions, and partitioned into squares,

each of which can hold a symbol of a

finite tape alphabet. There's a tape head.

And always points to one of the tape

squares. The touring machine makes move

just like the automaton or the push down

automaton. For the touring machine. The

move is determined by the state and by the

tape symbol under the [inaudible]. In one

move, the touring machine can change its

state. Write a new tape symbol over the

old one. In the square [inaudible] its

scanning. And move the head one square

left or right. We might ask why we

introduce the turn machine, rather than

representing computation by C programs.

While you can develop a theory around

programs without using turn machines, it

would be much harder. The thing that we'll

come to appreciate about turn machines is

that they are so simple in their operation

compared to programs in a programming

language or even real computers. That

wouldn't matter if touring machines could

not mimic real computers, but they can, as

we shall argue. And in fact, one could

argue that, because Turing Machines have

infinite storage capacity on their tape,

they are even more powerful than

computers, since computers always have a

finite amount of storage, however large

that may be. But the difference is not

essential, since, in principle, we could

always by more storage for a computer. One

good counter argument is the universe is

finite so where are you going to get the

atoms from which to build all of those

discs? But even if you accept that the

universe is finite the limitation doesn't

seem to be effecting what we can compute

in practice. So we're never going to argue

that a computer is weaker than a Turing

machine in a meaningful way. So here is

the formula [inaudible] use for Turing

machines. There's a finite set of states.

We follow our tradition in using q for

this set. There's a finite input alphabet

for which we use the traditional sigma.

There's a [unknown] alphabet, gamma, which

always includes the input output alphabet.

The transition function is as always

delta, and we'll talk about how that works

next. There is a start state Q0, a member

of the set of states Q. Again, this is as

before. There is a blank symbol which we

usually represent by capital B. This

symbol is in the tape alphabet, but is

never an output symbol. There is a set of

final states. F, as usual. There are

several conventions about letters we shall

use. They are consistent with our earlier

conventions about finite and push down

automata. Lower case letters at the

beginning of the alphabet are still

symbols of the input alphabet. Now capital

letters at the end of the alphabet are

tape symbols which might or might not be

input symbols. Lower case letters at the

end of the alphabet or strings of input

symbols, again, that's as usual. And Greek

letters at the beginning of the alphabet

or strings of tape symbols which again may

include some input symbols. Now let's see

the transition function delta for a turn

machine. A turn machine is deterministic

and moreover does not have to have a move

in any situation. Delta takes two

arguments, the state and the symbol scan

by the tape head. Delta of QZ for state Q

and tape symbol Z. Can be undefined. In

which case, the touring machine can make

no more moves. Scanning a z in state q.

And the touring machine is set to halt.

But if delta of Q and Z is defined. Then

it is a triple P Y D, where P is the new

state. Y is the symbol that replaces Z in

the square being scanned on the tape head.

And D is the direction for the tape head

to move. This direction is either L for

left or R for right. And the move is by

one square. Here is an example of a

touring machine. It's input symbols are

zero and one. The touring machine scans

right on it's input looking for a one If

it finds a wanted change is it's a zero,

and goes to a final state F and halts.

However if it sees the blank symbol before

seeing a one, it changes the blank to one

and, and moves one square left repeating

the process just described. There are two

states for the Arcturing machine, Q and F.

Q is the start state, and F is the final

state. As we mentioned, the input alphabet

is 01. The tape symbols are only 01 and

the blank B. One move of the turn machine

is given here. It says that in state Q,

scanning a zero. In state Q, scanning

zero, it stays in state Q. Leaves the zero

on the square it was scanning and it moves

right. Another rule says that if it is in

state Q and sees a one. It goes to final

state F. Replaces the one by a zero and

moves right. It doesn't matter which way

the head moves in this situation since it

has accepted its input, but it always has

to move left or right, so we'll say right.

The last move of the touring machine is

this. It says that in state Q, while

scanning a blank, it replaces the blank by

one and moves left, staying in state Q.

Here's the beginning of a moving picture

of this touring machine. The three moves

are shown in the upper right with the move

that. Is applicable in a given situation

shown in red. Here, the touring machine

has just started. It is in a start state

and the tape head is at the left end of

the input which is 00 in this case. That

is, here's the input. The rule in red says

that in state Q scouting zero, it moves

right and leaves the state and symbol

unchanged. So we'll see that on the next

slide. Here it's done that. And it is in

the same situation, again scanning a zero,

so it again moves right. Now it sees a

blank on its tape so another rule is

applicable. This rule says that stay in

state Q and replace the blank by one and

move left. So it's made those changes and

the first rule is applicable again. The

touring machine is going to move right

while leaving the state and symbol

unchanged. Here the second rule applies.

In state Q it is scanning a one so it goes

to the final state F, replaces the one by

zero and moves right. Here, we see the

effect of the last move. The Turing

Machine has no move for when it is in

state F scanning a blank, so it halts. It

has also accepted, since F is the, in a

final state. Moving pictures of a Turing

Machine can get a bit cumbersome, so we're

going to develop an instantaneous

description or ID notation for Turing

Machine, similar to what we use for push

down automata. But in a sense, the touring

machine I.D.s are even simpler because the

input and paved contents are combined into

one stream. Moreover, we represent the

state in the head position by imbedding

the state to the left of the symbol being

scanned. We'll always assume that state

symbols are chosen so they do not conflict

with tape symbols and thus we can always

tell which symbol is the state. As we

suggested in our little example, the

touring machine gets its input on the

tape. The input, which is always a string

of input symbols, is placed on the tape

surrounded by an infinity of blanks to the

left and to the right. The touring machine

begins in it's start state with the head

at the left most input symbol. If the

input string happens to be epsilon then

the touring machine's tape is entirely

blanks and the head is scanning one of

them. An idea is a string of the form

alpha Q beta. Here alpha beta is the

contents of the tape between the left most

and right most non-blank symbols. That is,

the tape consists of an infinity of

blanks. Alpha, beta, and another infinity

of blanks. We should observe that after

any finite number of moves, the Turing

machine has only visited a finite number

of tape squares. So they can only be a

finite number of non blanks. Thus, we

always have a finite representation for

the tape, even though it is infinite in

extent. The state is Q, and it is placed

immediately to the left of the symbol the

head is now scanning. Remember we assumed

state symbols were chosen, so none of them

are taped symbols. Therefore, we can

always identify Q. As a special case Q can

be at the right end of beta. If so, it

means that the symbol being scanned is a

blank. This can only occur if the Turing

machine has just moved to the right, and

there are only blanks to the right. We use

the turn-style notation, as this, to

represent one move. And we use the

turn-style star This to represent any

number of moves including zero moves.

These are exactly as for PDAs. Here's an

example which corresponds to the earlier

touring machine example but now using the

ID notation. We start off in the start

state with a state to the left of the

input. At the first move, the touring

machine moves right, here, and then right

again. Now it is scanning a blank, and

it's action was to replace the blank by a

one and move left. Notice. That the state

is now two symbols to the left of the end

and it's scanning what used to be the

right most zero, that is this guy. It, it

again moves right and when it sees the

one, it enters state AF and again moves

right, changing that one to a zero. Now

we'll discuss the turnstile relation

formally. We have to discuss what happens

on rightward moves separately from what

happens on leftward moves. So suppose

there is a right move where in state Q,

scanning the Z, the touring machine goes

to state P. Writes y and moves right. Then

in any I d with q z as a sub string. Which

means that the touring machines is in

state q. And the z is being scanned. We

can replace q z by y p. That has the

effect of moving the head right. As well

as making the state and simple changes.

Called for. There's a special case when Z

is blank. Then it is additionally possible

that from an ID with Q at the right end,

that is this, which means that the blank

is being scanned, for the next ID to

replace the Q by YP. That is, the blank

which we didn't see in alpha Q. Has been

replaced by Y, that's here, and the state

Q became P. P, of course, moving to the

right of the Y that it just wrote. Now

consider a left move, where again, we are

in state Q scanning Z. The new state will

be P. And the symbol Y will be written

over the Z. If there is any symbol X, to

the left of the state in the current id,

such as this, in the next, in the next id,

the Z is replaced by Y, and the XQ is

replaced by PX. That means that X is being

scanned in the next ID. And of course, we

have gone to state P from state Q. But we

also have to take care of the case where

the ID has nothing to the left of the

state. Which means that there is an

infinity of blanks to the left of the

present head position. Then we reflect the

move by replacing the z by y. That's that.

And introducing a blank to be the symbol

immediately to the right of the state.

It's okay if the ID represents a blank

explicitly, and this is one of several

situations where we have to do so.

However, it is important to note that the

length of the ID can only grow by one at

each move, so it remains finite after any

finite number of moves. There are actually

two ways to define the language of a turn

machine. Final state is one of these ways,

of course. Formally, L of M, our Turing

Machine M, is the set of strings of input

symbols W, such that started in the

initial ID, that's Q, not W. With W on the

tape, M gets to some ID with a final

state. The other way to define a language

is by halting. We define each of them to

be the setup of input strings W, such that

started in the initial I.D. Again. M gets

the sum I For which no move is possible.

The two methods defining languages define

the same class of languages, as we can

show by simple Turing Machine

constructions that are not too different

from how we show that PDA is accepting by

final state and by empty stack at the same

language defining power. Given a Turing

Machine M, we are going to modify M into a

new Turing Machine, M Prime. The language

M prime accepts by final state. M prime

will accept by halting. We can easily make

the final states of M be halting states by

removing the rule for delta of Q and X

whenever Q is a final state. But we have

to protect against the possibility that M

halts without accepting, because the M

prime would then accidentally accept. So

for M prime, we introduce a new state S,

whose job is to make sure M Prime never

halts. One M Prime is in state S, on any

tape symbol X, M Prime stays in state S,

leaves the X as it is, and moves right.

Thus N prime will always have an X move in

state S. Eventually it will get to the

infinity of blanks to the right on the

blank and look at them one at a time. And

to make sure N prime doesn't hold

accidentally. Whenever delta of Q and X is

undefined for M, and Q is not a final

state, we'll have M prime enter the state

S. The construction in the other direction

is also pretty easy. Now we assume M

accepts some language L by halting, and we

want to modify it to become M double prime

which accepts L by final state. We

introduce a new state F, which is the

final state of M double prime. F has no

moves, so in fact, M double prime will

also halt when it accepts, but it doesn't

matter. Once a touring machine enters the

final state, if acceptance is by final

state then nothing it does in the future

will negate the fact that the input was

accepted. If M halts in any situation,

that is delta of Q in X is undefined, then

define it and make the next state be F. We

now have a proof that the class of

languages accepted by touring machines

using final state is the same as the class

of languages accepted by touring machines

using halting. The name for this, class of

language is, is the recursively

innumerable languages. You might rightly

wonder where this name came from. It

actually predates Alan Turing's paper

describing Turing machines. And it refers

to an earlier notion of anything we can

compute. I'm not gonna say more about

that. But there's another important class

related to touring machines. There are

called the recursive languages. These we

shall see are proper subset of the

recursively innumerable languages. An

algorithm formally is a touring machine

accepting by final state that halts on any

input regardless of whether that input is

accepted. This notion of an algorithm is

consistent with the information notions

you may have learned. However, it is

limited to accept and to reject

[inaudible] input. That is, all algorithms

in this sense render a yes no decision but

do not compute an output. We can extend

the notion of what a turn machine does to

allow it to produce output and then halt

in which case we have exactly the same

notion of algorithm that is taught in

freshman computing. The language that is

excepted by final state by some algorithm

is called a recursive language. This term

too comes from the history of attempts to

answer the question, "what can a

[inaudible] compute, prior to Turing's

insights?" For example, every context free

language is a recursive language. You can

implement the CY K algorithm for any

context free language on a Turing machine.

That Turning machine will halt once it has

computed the triangle of sets of

variables, and checked whether the start

symbol of the grammar is in the final set.

But the recursive languages go way beyond

the context free. In fact it is very hard

to come up with a language that is not

recursive. Except by using tricks like the

diagonalization that we discussed earlier.

S-ar putea să vă placă și

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- Past Life Oracle Cards GuidebookDocument56 paginiPast Life Oracle Cards GuidebookNhi Chan Canh66% (62)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Mystical Shaman Oracle CardsDocument161 paginiMystical Shaman Oracle CardsBiodanza Pamela Ubeda Cid100% (10)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (890)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Keepers of The Light Oracle CardsDocument131 paginiKeepers of The Light Oracle Cardslina89% (28)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (265)

- Python W3 SchoolDocument216 paginiPython W3 Schoolds0909@gmail83% (6)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- Disha 1700 Class 10 Mathematics Chapterwise Question BankDocument147 paginiDisha 1700 Class 10 Mathematics Chapterwise Question BankDhruv Jain50% (2)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- Essential Elements Math Pacing Guide AugustDocument96 paginiEssential Elements Math Pacing Guide Augustapi-271033247100% (1)

- Excerpt-from-UVS-Part-2-Powers-of-the-Vibrational-Bands Vesica PiscisDocument10 paginiExcerpt-from-UVS-Part-2-Powers-of-the-Vibrational-Bands Vesica PiscishaimkichikÎncă nu există evaluări

- 22 pnp3Document37 pagini22 pnp3LalalalalalaÎncă nu există evaluări

- 6 - 3 - 22. Specific NP-complete Problems (33 Min.)Document13 pagini6 - 3 - 22. Specific NP-complete Problems (33 Min.)LalalalalalaÎncă nu există evaluări

- The Satisfiability Problem: Cook's Theorem: An NP-Complete Problem Restricted SAT: CSAT, 3SATDocument44 paginiThe Satisfiability Problem: Cook's Theorem: An NP-Complete Problem Restricted SAT: CSAT, 3SATLalalalalalaÎncă nu există evaluări

- The Satisfiability Problem: Cook's Theorem: An NP-Complete Problem Restricted SAT: CSAT, 3SATDocument44 paginiThe Satisfiability Problem: Cook's Theorem: An NP-Complete Problem Restricted SAT: CSAT, 3SATLalalalalalaÎncă nu există evaluări

- 6 - 2 - 21. Satisfiability and Cook's Theorem (44 Min.)Document16 pagini6 - 2 - 21. Satisfiability and Cook's Theorem (44 Min.)LalalalalalaÎncă nu există evaluări

- 22 pnp3Document37 pagini22 pnp3LalalalalalaÎncă nu există evaluări

- PNP 1Document28 paginiPNP 1GauriÎncă nu există evaluări

- PNP 1Document28 paginiPNP 1GauriÎncă nu există evaluări

- 6 - 1 - 20. P and NP (25 Min.)Document10 pagini6 - 1 - 20. P and NP (25 Min.)LalalalalalaÎncă nu există evaluări

- 18 tm3Document26 pagini18 tm3LalalalalalaÎncă nu există evaluări

- More Undecidable Problems: Rice's Theorem Post's Correspondence Problem Some Real ProblemsDocument60 paginiMore Undecidable Problems: Rice's Theorem Post's Correspondence Problem Some Real ProblemsLalalalalalaÎncă nu există evaluări

- 18 tm3Document26 pagini18 tm3LalalalalalaÎncă nu există evaluări

- Properties of Context-Free Languages: Decision Properties Closure PropertiesDocument35 paginiProperties of Context-Free Languages: Decision Properties Closure PropertiesLalalalalalaÎncă nu există evaluări

- 19 tm4Document60 pagini19 tm4LalalalalalaÎncă nu există evaluări

- 5 - 2 - 18. Decidability (18 Min.)Document7 pagini5 - 2 - 18. Decidability (18 Min.)LalalalalalaÎncă nu există evaluări

- Turing Machines Programming TricksDocument54 paginiTuring Machines Programming TricksLalalalalalaÎncă nu există evaluări

- Turing Machines Programming TricksDocument54 paginiTuring Machines Programming TricksLalalalalalaÎncă nu există evaluări

- Properties of Context-Free Languages: Decision Properties Closure PropertiesDocument35 paginiProperties of Context-Free Languages: Decision Properties Closure PropertiesLalalalalalaÎncă nu există evaluări

- Pumping Lemma for CFLsDocument14 paginiPumping Lemma for CFLsLalalalalalaÎncă nu există evaluări

- 5 - 3 - 19. Specific Undecidable Problems (56 Min.)Document20 pagini5 - 3 - 19. Specific Undecidable Problems (56 Min.)LalalalalalaÎncă nu există evaluări

- 5 - 1 - 17. Extensions and Properties of Turing Machines (37 Min.)Document14 pagini5 - 1 - 17. Extensions and Properties of Turing Machines (37 Min.)LalalalalalaÎncă nu există evaluări

- Pumping Lemma for CFLsDocument14 paginiPumping Lemma for CFLsLalalalalalaÎncă nu există evaluări

- Conversion of CFG To PDA Conversion of PDA To CFGDocument23 paginiConversion of CFG To PDA Conversion of PDA To CFGLalalalalalaÎncă nu există evaluări

- 13 Pda2Document23 pagini13 Pda2LalalalalalaÎncă nu există evaluări

- Properties of Operations On The Set of IntegersDocument15 paginiProperties of Operations On The Set of IntegersEmmie Grace S. AbrahamÎncă nu există evaluări

- Classification of Numbers: Class Symbol DescriptionDocument5 paginiClassification of Numbers: Class Symbol DescriptionVous Étiez Ma VieÎncă nu există evaluări

- DLL Math Grade7 Quarter1 Week3 (Palawan Division)Document7 paginiDLL Math Grade7 Quarter1 Week3 (Palawan Division)Michael Peralta MahinayÎncă nu există evaluări

- PA - 1º SecDocument19 paginiPA - 1º SecRay Nikky Damian GronerthÎncă nu există evaluări

- Strategic Intervention Material: Addition of IntegersDocument11 paginiStrategic Intervention Material: Addition of IntegersLei Rni96% (80)

- IntegersDocument3 paginiIntegersAlfredo NavarroÎncă nu există evaluări

- 6.2 Modular ArithmeticDocument9 pagini6.2 Modular ArithmeticRoselyn Mayongue BrazaÎncă nu există evaluări

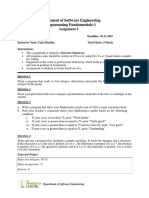

- Department of Software Engineering Programming Fundamentals-1Document2 paginiDepartment of Software Engineering Programming Fundamentals-1AsadÎncă nu există evaluări

- Computer Applications - Specimen - 2020 PDFDocument6 paginiComputer Applications - Specimen - 2020 PDFDuraiswamy AnandhanÎncă nu există evaluări

- Top SAS Functions for Data AnalysisDocument5 paginiTop SAS Functions for Data AnalysisSan DeepÎncă nu există evaluări

- Primes and Sequences: WarmupsDocument2 paginiPrimes and Sequences: WarmupsDrstrange CumbertachÎncă nu există evaluări

- Problems On Ring Theory Avishek Adhikari 1 Problem SetDocument8 paginiProblems On Ring Theory Avishek Adhikari 1 Problem SetRakesh MajumderÎncă nu există evaluări

- National Board For Higher MathematicsDocument3 paginiNational Board For Higher MathematicsCHAITANYA GUPTAÎncă nu există evaluări

- 11 CH 9 Finite PDFDocument40 pagini11 CH 9 Finite PDFjumperpc3000Încă nu există evaluări

- Eng'g MathDocument2 paginiEng'g MathLiezel ReyesÎncă nu există evaluări

- Learning Activity Sheet Mathematics 7 Quarter 1: Week 1: Schools Division of ZambalesDocument17 paginiLearning Activity Sheet Mathematics 7 Quarter 1: Week 1: Schools Division of ZambalesAbegail FortalezaÎncă nu există evaluări

- School of Scholars, AmravatiDocument9 paginiSchool of Scholars, Amravatilavanya kharabe0% (1)

- Math Variables Shorthand StatementsDocument5 paginiMath Variables Shorthand Statementserickson hernanÎncă nu există evaluări

- AP GP Revision JC2 PDFDocument13 paginiAP GP Revision JC2 PDFvincesee85Încă nu există evaluări

- Grade 6 - MathematicsDocument4 paginiGrade 6 - MathematicsArlene Hernandez-MañiboÎncă nu există evaluări

- G364Document18 paginiG364ramakrishnaÎncă nu există evaluări

- 2 Real NumbersDocument4 pagini2 Real NumbersAllona Vheb BargamentoÎncă nu există evaluări

- Sonifying Sieves with Python and CsoundDocument8 paginiSonifying Sieves with Python and CsoundxdimitrisÎncă nu există evaluări

- Math 121 HW 2Document6 paginiMath 121 HW 2Abdul TambunanÎncă nu există evaluări

- The Law of Vibration & The Archimedean SpiralDocument6 paginiThe Law of Vibration & The Archimedean Spiralfsolomon80% (5)

- Assignment 3Document5 paginiAssignment 3Touseef Ali KhanÎncă nu există evaluări

- 169 Functional EquationsDocument83 pagini169 Functional Equationshungchng100% (5)