CPSC Algorithms Cheat Sheet

Uploaded by Rya Karkowski

CPSC 2120 Cheat Sheet

Algorithmic Techniques

- Incremental Construction: Build the solution by adding input elements one by one, updating the solution as we go.

Examples: Insertion Sort

- Divide and Conquer: Split the problem into smaller pieces, solve each piece recursively, then combine the results.

Examples: Mergesort, Quicksort
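A minimal C++ sketch of the two examples above (the function names are illustrative, not from the course). `insertion_sort` grows a sorted prefix one element at a time; `merge_sort` splits the range in half, sorts each half recursively, and merges the results.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Incremental construction: a[0..i-1] is already sorted; slide a[i]
// left into its place, growing the sorted prefix by one element.
void insertion_sort(std::vector<int>& a) {
    for (std::size_t i = 1; i < a.size(); ++i) {
        int x = a[i];
        std::size_t j = i;
        while (j > 0 && a[j - 1] > x) {  // shift larger elements right
            a[j] = a[j - 1];
            --j;
        }
        a[j] = x;
    }
}

// Divide and conquer: sort a[lo, hi) by sorting each half recursively
// and then merging the two sorted halves through the scratch buffer tmp.
void merge_sort(std::vector<int>& a, std::vector<int>& tmp,
                std::size_t lo, std::size_t hi) {
    assert(lo <= hi && hi <= a.size() && tmp.size() == a.size());
    if (hi - lo < 2) return;             // 0 or 1 element: already sorted
    std::size_t mid = lo + (hi - lo) / 2;
    merge_sort(a, tmp, lo, mid);
    merge_sort(a, tmp, mid, hi);
    std::size_t i = lo, j = mid, k = lo;
    while (i < mid && j < hi)            // on ties take the left element: stable
        tmp[k++] = (a[j] < a[i]) ? a[j++] : a[i++];
    while (i < mid) tmp[k++] = a[i++];
    while (j < hi) tmp[k++] = a[j++];
    for (k = lo; k < hi; ++k) a[k] = tmp[k];
}

void merge_sort(std::vector<int>& a) {
    std::vector<int> tmp(a.size());
    merge_sort(a, tmp, 0, a.size());
}
```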

- Dynamic Programming / Greedy

Greedy: The initial decision can be made safely and irrevocably according to a simple rule.

DP: Solve all possible subproblems from smallest to largest, so that each decision is easy to make.

Example

Given intervals $[a_i, b_i]$, select a disjoint subset of intervals of maximum total length.

- Initially sort the intervals so that $a_1 \le a_2 \le \dots \le a_n$.

- Let $L[i]$ denote the value of an optimal solution for just the intervals $i, \dots, n$.

- $L[i] = \max\left(L[i+1],\ (b_i - a_i) + \max\{L[j] : a_j \ge b_i\}\right)$, where $L[n+1] = 0$ and a max over an empty set is taken to be 0.

We now have a simple $O(n^2)$ algorithm: compute $L[n], L[n-1], \dots, L[1]$ in sequence according to the formula above. $L[1]$ gives the value of an optimal solution.

The general DP recipe, illustrated on this example:

- Decompose the problem into successively larger subproblems, all of the same form. Here, $L[i]$ denotes the value of an optimal solution for just the intervals $i, \dots, n$.

- Recursively express the optimal solution to a large problem in terms of optimal solutions of smaller subproblems. Here, $L[i] = \max\left(L[i+1],\ (b_i - a_i) + \max\{L[j] : a_j \ge b_i\}\right)$.

- Then just solve the subproblems in sequence from smallest to largest, building up a table of optimal solutions.
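A sketch of the $O(n^2)$ interval DP in C++, assuming closed intervals where two intervals that merely touch at an endpoint count as disjoint ($a_j \ge b_i$). `Interval` and `max_disjoint_length` are hypothetical names. The table is 0-indexed, so `L[0]` here plays the role of $L[1]$ above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Interval { long long a, b; };   // the interval [a, b], with a <= b

// Maximum total length of a subset of disjoint intervals, computed with
// L[i] = max(L[i+1], (b_i - a_i) + max{L[j] : a_j >= b_i}) after sorting by a.
long long max_disjoint_length(std::vector<Interval> iv) {
    for (const Interval& x : iv) assert(x.a <= x.b);
    std::sort(iv.begin(), iv.end(),
              [](const Interval& x, const Interval& y) { return x.a < y.a; });
    const std::size_t n = iv.size();
    std::vector<long long> L(n + 1, 0);   // L[n] = 0: no intervals left
    for (std::size_t i = n; i-- > 0;) {   // i = n-1, ..., 0: smallest subproblems first
        long long best_after = 0;         // a max over an empty set counts as 0
        for (std::size_t j = i + 1; j < n; ++j)
            if (iv[j].a >= iv[i].b) best_after = std::max(best_after, L[j]);
        L[i] = std::max(L[i + 1], (iv[i].b - iv[i].a) + best_after);
    }
    return L[0];
}
```

For example, with intervals [1, 4], [3, 5], [0, 6], [5, 7], [8, 9] the best choice is [0, 6] plus [8, 9], for a total length of 7.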

Iterative Refinement

- Start with some arbitrary feasible solution (or, better yet, a solution obtained via some other heuristic).

- As long as we can improve it, keep making it better.
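As a hedged illustration (the problem choice is ours, not the notes'), here is iterative refinement applied to max-cut: start with every node on one side and keep moving any node that has more neighbors on its own side than on the other. `local_max_cut`, `cut_size` and `EdgeList` are hypothetical names, and the graph is assumed to have no self-loops.

```cpp
#include <cassert>
#include <utility>
#include <vector>

using EdgeList = std::vector<std::pair<int, int>>;  // undirected edges, no self-loops

// Number of edges whose endpoints lie on different sides.
int cut_size(const std::vector<int>& side, const EdgeList& edges) {
    int c = 0;
    for (auto [u, v] : edges)
        if (side[u] != side[v]) ++c;
    return c;
}

// Start from a feasible solution (all nodes on side 0) and, while some node
// has more neighbors on its own side than on the other, move it. Each move
// raises the cut by at least 1, so the loop terminates.
std::vector<int> local_max_cut(int n, const EdgeList& edges) {
    std::vector<std::vector<int>> adj(n);
    for (auto [u, v] : edges) {
        assert(u != v && 0 <= u && u < n && 0 <= v && v < n);
        adj[u].push_back(v);
        adj[v].push_back(u);
    }
    std::vector<int> side(n, 0);
    bool improved = true;
    while (improved) {
        improved = false;
        for (int v = 0; v < n; ++v) {
            int same = 0, other = 0;
            for (int w : adj[v]) {
                if (side[w] == side[v]) ++same; else ++other;
            }
            if (same > other) {          // moving v gains (same - other) cut edges
                side[v] ^= 1;
                improved = true;
            }
        }
    }
    return side;
}
```

At a local optimum every node has at least half of its edges crossing the cut, so the result cuts at least m/2 edges, though it need not be a maximum cut.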

Genetic Algorithms

- Start with a population of initial solutions.

- Repeatedly:

Kill off some number of the least fit solutions (probability of survival proportional to fitness).

Replace them with new solutions obtained by mating pairs of surviving solutions, or by mutating single solutions.

Graph Algorithms

Breadth-First Search: O(n + m) time

- Used for finding shortest paths (fewest edges) in unweighted graphs.

- Explores one level (distance from the source) at a time.

Depth-First Search

- Goes as deep as possible first.

- Ignores edge weights and, unlike BFS, does not find shortest paths.

- Can be used to find strongly connected components.
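A minimal BFS sketch for shortest paths (fewest edges) in an unweighted graph, assuming nodes are numbered 0..n-1 and stored as adjacency lists; `bfs_dist` is a hypothetical name.

```cpp
#include <cassert>
#include <queue>
#include <vector>

// dist[v] = fewest edges on a path from s to v, or -1 if v is unreachable.
// Each node is enqueued at most once and each edge is scanned once: O(n + m).
std::vector<int> bfs_dist(const std::vector<std::vector<int>>& adj, int s) {
    assert(0 <= s && s < (int)adj.size());
    std::vector<int> dist(adj.size(), -1);
    std::queue<int> q;
    dist[s] = 0;
    q.push(s);
    while (!q.empty()) {
        int u = q.front();
        q.pop();
        for (int v : adj[u])
            if (dist[v] == -1) {         // first time v is reached = its shortest distance
                dist[v] = dist[u] + 1;
                q.push(v);
            }
    }
    return dist;
}
```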

Dijkstra's Shortest Path Algorithm: O(m log n)

- Requires non-negative edge costs.

- Speed depends on the priority queue used:

| Priority queue | Remove-min (n times) | Decrease-key (O(m) times) | Total runtime |
|---|---|---|---|
| Unsorted array | O(n) | O(1) | O(n^2) |
| Binary heap | O(log n) | O(log n) | O(m log n) |
| Fibonacci heap | O(log n) amortized | O(1) amortized | O(m + n log n) |
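A sketch of the binary-heap row of the table using `std::priority_queue`. Because `std::priority_queue` has no decrease-key operation, this version uses the common lazy workaround of pushing a fresh entry and skipping stale ones, which keeps the O(m log n) bound. The adjacency-list representation and the names `Edge` and `dijkstra` are assumptions.

```cpp
#include <cassert>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

using Edge = std::pair<int, long long>;   // (neighbor, non-negative edge cost)

// Distances from s; unreachable nodes keep the value LLONG_MAX.
// The heap holds O(m) entries, so the time is O(m log m) = O(m log n).
std::vector<long long> dijkstra(const std::vector<std::vector<Edge>>& adj, int s) {
    assert(0 <= s && s < (int)adj.size());
    const long long INF = std::numeric_limits<long long>::max();
    std::vector<long long> dist(adj.size(), INF);
    using Item = std::pair<long long, int>;  // (tentative distance, node)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pq;  // min-heap
    dist[s] = 0;
    pq.push({0, s});
    while (!pq.empty()) {
        auto [d, u] = pq.top();
        pq.pop();
        if (d > dist[u]) continue;           // stale entry: u was finalized earlier
        for (auto [v, w] : adj[u]) {
            assert(w >= 0);                  // Dijkstra requires non-negative costs
            if (d + w < dist[v]) {
                dist[v] = d + w;
                pq.push({dist[v], v});       // stands in for decrease-key
            }
        }
    }
    return dist;
}
```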

Bellman-Ford: O(mn)

- Used when there are negative edge weights.

- Try to change the graph, not the algorithm!

- Longest-path problems convert to shortest-path problems by negating edge lengths (valid when the graph has no positive-length cycles, e.g., a DAG).
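A sketch of Bellman-Ford over an edge list (the `WEdge` type and `bellman_ford` name are hypothetical): n - 1 rounds of relaxing every edge give the O(mn) bound, and one extra pass that still finds an improvement signals a negative cycle reachable from the source.

```cpp
#include <cassert>
#include <limits>
#include <vector>

struct WEdge { int u, v; long long w; };  // directed edge u -> v, weight may be negative

// Returns false if a negative cycle is reachable from s; otherwise fills dist
// (unreachable nodes keep the value LLONG_MAX).
bool bellman_ford(int n, const std::vector<WEdge>& edges, int s,
                  std::vector<long long>& dist) {
    assert(0 <= s && s < n);
    const long long INF = std::numeric_limits<long long>::max();
    dist.assign(n, INF);
    dist[s] = 0;
    for (int round = 0; round < n - 1; ++round)      // n - 1 rounds: O(mn) total
        for (const WEdge& e : edges)
            if (dist[e.u] != INF && dist[e.u] + e.w < dist[e.v])
                dist[e.v] = dist[e.u] + e.w;
    for (const WEdge& e : edges)                     // still improving => negative cycle
        if (dist[e.u] != INF && dist[e.u] + e.w < dist[e.v])
            return false;
    return true;
}
```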

Graphs

Topologically sorting a DAG

- Find a node with no incoming edges, append it to the ordering, remove it from the graph, and repeat. If we ever find that every remaining node has an incoming edge, then the graph must contain a cycle (so this gives an alternate way to do cycle detection).

- Alternatively, perform a full DFS and then output the nodes in reverse order of finishing time.
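The first method (repeatedly removing a node with no incoming edges, often called Kahn's algorithm) can be sketched with in-degree counters instead of actually deleting nodes. `topo_sort` is a hypothetical name; in this sketch it returns an empty vector when a cycle is found.

```cpp
#include <cassert>
#include <queue>
#include <vector>

// Returns a topological order of the nodes, or an empty vector if the graph
// has a cycle (i.e., at some point every remaining node has an incoming edge).
std::vector<int> topo_sort(const std::vector<std::vector<int>>& adj) {
    const int n = (int)adj.size();
    std::vector<int> indeg(n, 0);
    for (int u = 0; u < n; ++u)
        for (int v : adj[u]) {
            assert(0 <= v && v < n);
            ++indeg[v];
        }
    std::queue<int> ready;                  // nodes with no remaining incoming edges
    for (int u = 0; u < n; ++u)
        if (indeg[u] == 0) ready.push(u);
    std::vector<int> order;
    while (!ready.empty()) {
        int u = ready.front();
        ready.pop();
        order.push_back(u);
        for (int v : adj[u])                // "remove" u: drop its outgoing edges
            if (--indeg[v] == 0) ready.push(v);
    }
    if ((int)order.size() < n) return {};   // cycle detected
    return order;
}
```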

Network Flows

s-t cuts

- An s-t cut is a partition of the nodes in our graph into two sets, one containing s and the other containing t.

- The value of the maximum flow is at most the capacity of any s-t cut.

- So: max s-t flow value ≤ min s-t cut capacity.

Sorting Algorithms

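| Algorithm | Runtime | Stable? | In-place? |
|---|---|---|---|
| Bubble sort | O(n^2) | Yes | Yes |
| Selection sort | O(n^2) | No (standard swap-based version) | Yes |
| Insertion sort | O(n^2) | Yes | Yes |
| Mergesort | O(n log n) | Yes | No |
| Randomized quicksort | Θ(n log n) with high probability | No* | Yes* |
| Deterministic quicksort | Θ(n log n) | No* | Yes* |
| BST sort | Θ(n log n) | Yes | No |
| Heap sort | Θ(n log n) | No* | Yes* |
| Counting sort | Θ(n) | Yes | No |
| Radix sort | Θ(n) | Yes | No |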

- Counting sort: O(n + C) time. Sorts integers of magnitude C = O(n) in linear time.

- Radix sort: O(n max(1, log_n C)) time. Sorts integers of magnitude C = O(n^k), k = O(1), in linear time.
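A sketch of both sorts on unsigned 64-bit keys (the function names are hypothetical). `counting_sort` is stable, which is what lets `radix_sort` process base-b digits from least to most significant; choosing b = max(2, n) gives the O(n max(1, log_n C)) bound above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stable counting sort of a by key(x), where every key lies in [0, C): O(n + C).
template <typename Key>
std::vector<std::uint64_t> counting_sort(const std::vector<std::uint64_t>& a,
                                         std::uint64_t C, Key key) {
    std::vector<std::size_t> count(C + 1, 0);
    for (auto x : a) {
        assert(key(x) < C);
        ++count[key(x) + 1];
    }
    for (std::uint64_t k = 0; k < C; ++k) count[k + 1] += count[k];  // count[k] = start of bucket k
    std::vector<std::uint64_t> out(a.size());
    for (auto x : a) out[count[key(x)]++] = x;   // left-to-right scan keeps it stable
    return out;
}

// LSD radix sort: one stable counting-sort pass per base-b digit, b = max(2, n).
std::vector<std::uint64_t> radix_sort(std::vector<std::uint64_t> a) {
    if (a.empty()) return a;
    const std::uint64_t b = std::max<std::uint64_t>(2, a.size());
    const std::uint64_t mx = *std::max_element(a.begin(), a.end());
    for (std::uint64_t place = 1; ; place *= b) {
        a = counting_sort(a, b, [&](std::uint64_t x) { return (x / place) % b; });
        if (mx / place < b) break;               // no higher digits remain
    }
    return a;
}
```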

Searching Algorithms

Linear Search: Θ(n)

Binary Search: O(log n)

Binary Search the Answer: binary search over the range of possible answers, using a monotone yes/no feasibility test.
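A sketch of plain binary search and of "binary search the answer", assuming the feasibility test `ok(x)` is monotone (false for small x, true from some point on) and true at `hi`. Both function names are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Index of target in the sorted array a, or -1 if absent. O(log n).
int binary_search_index(const std::vector<int>& a, int target) {
    int lo = 0, hi = (int)a.size() - 1;
    while (lo <= hi) {
        int mid = lo + (hi - lo) / 2;       // avoids overflow of lo + hi
        if (a[mid] == target) return mid;
        if (a[mid] < target) lo = mid + 1; else hi = mid - 1;
    }
    return -1;
}

// Smallest x in [lo, hi] with ok(x) true, for a monotone predicate ok.
template <typename Pred>
long long first_true(long long lo, long long hi, Pred ok) {
    assert(lo <= hi);
    while (lo < hi) {
        long long mid = lo + (hi - lo) / 2;
        if (ok(mid)) hi = mid; else lo = mid + 1;   // keep the answer inside [lo, hi]
    }
    return lo;
}
```

For example, `first_true(0, 100, [](long long x) { return x * x >= 50; })` returns 8, the smallest integer whose square is at least 50.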

Selection (finding the kth smallest element)

Quickselect: Θ(n) expected time. Used widely in divide and conquer.

Binary Search Trees: select and rank operations.

QuickSelect

- To select the item of rank k in an array A[1..n]:

- As in quicksort, pick a pivot element and partition A in linear time.

- After partitioning, the pivot element ends up placed where it would be in the sorted ordering of A, so we know the rank r of the pivot!

If k = r, the pivot is the element we seek and we're done.

If k < r, select the element of rank k on the left side.

If k > r, select the element of rank k - r on the right side.
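A sketch of randomized quickselect with a Lomuto partition (our choice; the notes do not fix a partition scheme). Ranks are 1-based as above. The code tracks the target's absolute index in the array, which is equivalent to recursing on rank k - r in the right part.

```cpp
#include <cassert>
#include <random>
#include <utility>
#include <vector>

// Element of rank k (k = 1 is the minimum). Expected O(n) time.
// a is taken by value because partitioning reorders it.
int quickselect(std::vector<int> a, int k) {
    assert(1 <= k && k <= (int)a.size());
    static std::mt19937 rng(12345);             // fixed seed, for reproducibility
    int lo = 0, hi = (int)a.size() - 1;         // current window a[lo..hi]
    const int target = k - 1;                   // 0-based index of the answer
    while (true) {
        std::uniform_int_distribution<int> pick(lo, hi);
        std::swap(a[pick(rng)], a[hi]);         // move a random pivot to the end
        int pivot = a[hi], store = lo;
        for (int i = lo; i < hi; ++i)           // elements < pivot go to the left
            if (a[i] < pivot) std::swap(a[i], a[store++]);
        std::swap(a[store], a[hi]);             // pivot lands at its sorted position
        if (store == target) return a[store];
        if (target < store) hi = store - 1;     // answer is on the left side
        else lo = store + 1;                    // answer is on the right side
    }
}
```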

Set/Map Data Structures

| Data Structure | Insert | Remove | Find |
|---|---|---|---|
| Sorted array | O(n) | O(n) | O(log n) |
| Unsorted array | O(1) | O(1), post-find | O(n) |
| Sorted doubly linked list | O(1), post-find | O(1), post-find | O(n) |
| Unsorted doubly linked list | O(1) | O(1), post-find | O(n) |
| Skip list | O(log n) expected | O(log n) expected | O(log n) expected |
| Sorted array with gaps | (n log n) | O(log^2 n) amortized | O(log n) |
| Balanced binary search tree | O(log n) | O(log n) | O(log n) |
| Universal hash table | O(1) amortized | O(1), post-find | O(1) expected |

Binary Search Trees

An in-order traversal prints the n elements in a BST in sorted order in only O(n) time. Therefore, we can use BSTs to sort:

- Insert the n elements: O(n log n). (*)

- Then do an in-order traversal: O(n).

Randomized quicksort runs in O(n log n) time with high probability.

Randomized BST construction takes O(n log n) time with high probability.

split(T, k): Split the BST T into two BSTs, one containing the keys ≤ k and the other the keys > k.

join(T1, T2): Take two BSTs T1 and T2, the keys in T1 all being less than the keys in T2, and join them into a single BST.

- pred(e): If e has a left child, then pred(e) is the maximum element in e's left subtree. If e has no left child, then pred(e) is the first "left parent" we encounter when walking from e up to the root, i.e., the first ancestor we reach by stepping up from a right child.

- succ(e): If e has a right child, then succ(e) is the minimum element in e's right subtree. If e has no right child, then succ(e) is the first "right parent" we encounter when walking from e up to the root, i.e., the first ancestor we reach by stepping up from a left child.

- pred and succ take O(h) time on a tree of height h: O(log n) if the tree is balanced, O(n) in the worst case.

Traversals

In-order: left, root, right

Pre-order: root, left, right

Post-order: left, right, root

A BST augmented with subtree sizes can support two additional useful operations:

select(k): return a pointer to the kth smallest element in the tree (k = 1 is the min, k = n is the max, and k = n/2 is the median).

rank(e): given a pointer to e, return the number of elements with keys ≤ e's key.

- Select and rank take O(log n) time in a balanced BST.
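A sketch of select and rank on a BST augmented with subtree sizes. To keep it short, the tree is not balanced (so costs are O(height) rather than O(log n)), rank takes a key rather than a pointer, and `SizedNode`, `bst_insert`, `bst_select` and `bst_rank` are hypothetical names.

```cpp
#include <cassert>
#include <memory>

// BST node that also stores the number of nodes in its subtree.
struct SizedNode {
    int key;
    int size = 1;
    std::unique_ptr<SizedNode> left, right;
    explicit SizedNode(int k) : key(k) {}
};
using Tree = std::unique_ptr<SizedNode>;

int subtree_size(const Tree& t) { return t ? t->size : 0; }

void bst_insert(Tree& t, int key) {
    if (!t) { t = std::make_unique<SizedNode>(key); return; }
    ++t->size;                                   // the new key lands below t
    bst_insert(key < t->key ? t->left : t->right, key);
}

// k-th smallest key (k = 1 is the min, k = n is the max).
int bst_select(const Tree& t, int k) {
    assert(t && 1 <= k && k <= t->size);
    int left = subtree_size(t->left);
    if (k <= left) return bst_select(t->left, k);
    if (k == left + 1) return t->key;
    return bst_select(t->right, k - left - 1);   // skip the left subtree and the root
}

// Number of keys <= key.
int bst_rank(const Tree& t, int key) {
    if (!t) return 0;
    if (key < t->key) return bst_rank(t->left, key);
    return subtree_size(t->left) + 1 + bst_rank(t->right, key);
}
```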

Dynamic Sequences

Using a balanced BST augmented with subtree sizes, we can perform all of the following operations in O(log n) time:

- Insert / delete anywhere in the sequence.

- Access or modify any element by its index in the sequence.

Splay Trees

- All operations take O(log n) amortized time.

- Starting with an empty tree, any sequence of k operations takes O(k log n) time in total.

- find(e): Find e as usual, then splay(e).

- insert(e): Insert e as usual, then splay(e).

- delete(e):

On a splay tree:

split(T, k): Find the element e of key k. Then splay e to the root and remove its right subtree. (If k doesn't exist, use pred(k) instead.)

join(T1, T2): Splay the maximum element in T1 to the root. Then attach T2 as its right subtree.

Red-black trees

- More space efficient

- A pain in the butt to program

A BST is a red-black tree if:

- The children of a red element are all black.

- All leaves are black.

- Every root-leaf path contains the same number of black elements (we call this the black height of the tree).

Priority Queues

| Data Structure | Insert | Remove-min |
|---|---|---|
| Unsorted array or linked list | O(1) | O(n) |
| Sorted array or linked list | O(n) | O(1) |
| Binary heap | O(log n) | O(log n) |
| Balanced binary search tree | O(log n) | O(log n) |

Binary Heaps

Parent(i) = floor((i-1)/2).

Left-child(i) = 2i + 1

Right-child(i) = 2i + 2.

- An almost-complete binary tree (all levels full except the last, which is filled from the left side up to some point).

- Satisfies the heap property: for every element e, key(parent(e)) ≤ key(e). The minimum element always resides at the root.

- Physically stored in an array A[0..n-1].

sift-up(i): Repeatedly swap element A[i] with its parent as long as A[i] violates the heap property with respect to its parent (i.e., as long as A[i] < A[parent(i)]).

sift-down(i): As long as A[i] violates the heap property with one of its children, swap A[i] with its smallest child.

- We could build a binary heap in O(n log n) time using n successive calls to insert.

- But it can be built in O(n) time: start with our n elements in arbitrary order in A[0..n-1], then call sift-down(i) for each i from n-1 down to 0.
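A sketch of an array-backed min-heap following the 0-indexed formulas above; the `MinHeap` class and its method names are assumptions. The constructor does the O(n) bottom-up build, skipping the leaves because sift-down is a no-op there.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

class MinHeap {
public:
    explicit MinHeap(std::vector<int> items) : A(std::move(items)) {
        // Bottom-up build: sift down every non-leaf, from the last one to the root.
        for (std::size_t i = A.size() / 2; i-- > 0;) sift_down(i);
    }
    bool empty() const { return A.empty(); }
    int peek_min() const { assert(!A.empty()); return A[0]; }
    void insert(int x) { A.push_back(x); sift_up(A.size() - 1); }
    int remove_min() {
        assert(!A.empty());
        int m = A[0];
        A[0] = A.back();                   // move the last element to the root
        A.pop_back();
        if (!A.empty()) sift_down(0);
        return m;
    }

private:
    std::vector<int> A;
    static std::size_t parent(std::size_t i) { return (i - 1) / 2; }
    void sift_up(std::size_t i) {
        while (i > 0 && A[i] < A[parent(i)]) {
            std::swap(A[i], A[parent(i)]);
            i = parent(i);
        }
    }
    void sift_down(std::size_t i) {
        const std::size_t n = A.size();
        while (true) {
            std::size_t l = 2 * i + 1, r = 2 * i + 2, smallest = i;
            if (l < n && A[l] < A[smallest]) smallest = l;
            if (r < n && A[r] < A[smallest]) smallest = r;
            if (smallest == i) return;     // heap property holds here
            std::swap(A[i], A[smallest]);
            i = smallest;
        }
    }
};
```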

Treaps

A treap is a binary tree in which each node contains two keys, a heap key and a BST key. It satisfies the heap property with respect to the heap keys, and the BST property with respect to the BST keys. The BST keys will store the elements of our BST; we'll choose heap keys as necessary to assist in balancing.

insert: Insert the new element as a leaf using the standard BST insertion procedure (so the BST property is satisfied). Assign the new element a random heap key. Then restore the heap property (while preserving the BST property) using sift-up implemented with rotations.

delete: Give the element a heap key of +∞, sift it down (again using rotations, to preserve the BST property) to a leaf, then delete it.

Heapsort:

- Start with an array A[1..n] of elements to sort.

- Build a heap (bottom up) on A in O(n) time.

- Call remove-min n times, each time placing the removed minimum in the array slot vacated at the end of the shrinking heap.

Afterwards, A will end up reverse-sorted (it would be forward-sorted if we had started with a max heap).
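A self-contained sketch of this min-heap heapsort (0-indexed; the function names are hypothetical). Each remove-min swaps the root into the slot freed at the end of the shrinking heap, which is why the output comes out in non-increasing order.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Sift A[i] down within the min-heap occupying A[0..n-1].
void sift_down(std::vector<int>& A, std::size_t i, std::size_t n) {
    assert(n <= A.size());
    while (true) {
        std::size_t l = 2 * i + 1, r = 2 * i + 2, smallest = i;
        if (l < n && A[l] < A[smallest]) smallest = l;
        if (r < n && A[r] < A[smallest]) smallest = r;
        if (smallest == i) return;
        std::swap(A[i], A[smallest]);
        i = smallest;
    }
}

// Build a min-heap bottom-up in O(n), then remove-min n times: O(n log n).
// The result is in non-increasing (reverse-sorted) order.
void heapsort_min_heap(std::vector<int>& A) {
    std::size_t n = A.size();
    for (std::size_t i = n / 2; i-- > 0;) sift_down(A, i, n);
    while (n > 1) {
        std::swap(A[0], A[n - 1]);   // remove-min: the minimum moves to the freed slot
        --n;                         // the heap now occupies A[0..n-1]
        sift_down(A, 0, n);
    }
}
```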

String Matching

Goal: Find all occurrences of a length-M pattern in a much larger length-N text.

- Approach #1: Linear scan through the entire text. Good for solving one-off string matching problems. Target running time: O(N). Example: Knuth-Morris-Pratt.

- Approach #2: Spend O(N) time preprocessing the entire text so that subsequent queries can be answered in O(M + k) time (k = # of matches). Examples: suffix arrays, suffix trees.

- Rabin-Karp: Hash the pattern and compare it against the hash of a moving window in the text, in O(M + N) time, but with a very small chance of false positives. Typically we use polynomial hash functions:

$p(x) = (A[0] + A[1]x + A[2]x^2 + \dots + A[N-1]x^{N-1}) \bmod Q$

If Q is large enough, the chance of false positives is minuscule.
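A Rabin-Karp sketch. It evaluates the polynomial in Horner form, $s[0]x^{M-1} + \dots + s[M-1]$ (the same kind of polynomial with the coefficients in reverse order), because that makes sliding the window a constant-time update. The base 256 and prime modulus $Q = 10^9 + 7$ are illustrative assumptions, and each hash match is verified character by character, so this version reports no false positives.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Start positions of all occurrences of pat in text. Expected O(N + M) time.
std::vector<std::size_t> rabin_karp(const std::string& text, const std::string& pat) {
    const std::uint64_t Q = 1'000'000'007ULL;    // large prime modulus
    const std::uint64_t X = 256;                 // polynomial base
    std::vector<std::size_t> hits;
    const std::size_t N = text.size(), M = pat.size();
    if (M == 0 || M > N) return hits;
    std::uint64_t hp = 0, ht = 0, top = 1;       // top = X^(M-1) mod Q
    for (std::size_t i = 0; i < M; ++i) {
        hp = (hp * X + (unsigned char)pat[i]) % Q;
        ht = (ht * X + (unsigned char)text[i]) % Q;
        if (i + 1 < M) top = top * X % Q;
    }
    for (std::size_t s = 0; ; ++s) {
        if (hp == ht && text.compare(s, M, pat) == 0) hits.push_back(s);  // verify
        if (s + M == N) break;
        // Slide the window one step: drop text[s], append text[s + M].
        ht = (ht + Q - (unsigned char)text[s] * top % Q) % Q;
        ht = (ht * X + (unsigned char)text[s + M]) % Q;
    }
    return hits;
}
```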

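- Knuth-Morris-Pratt: Like the naïve brute-force scan, but after a mismatch, shift the pattern so that the longest border (a proper prefix that is also a suffix) of the part matched so far stays aligned with the text, instead of restarting. O(M + N) time.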
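A KMP sketch (the function names are hypothetical): `border_table` precomputes the longest proper border of every prefix of the pattern, and the scan falls back along those borders on a mismatch, so the text pointer never moves backward.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// border[i] = length of the longest proper border of pat[0..i].
std::vector<std::size_t> border_table(const std::string& pat) {
    std::vector<std::size_t> border(pat.size(), 0);
    std::size_t k = 0;
    for (std::size_t i = 1; i < pat.size(); ++i) {
        while (k > 0 && pat[i] != pat[k]) k = border[k - 1];
        if (pat[i] == pat[k]) ++k;
        border[i] = k;
    }
    return border;
}

// Start positions of all occurrences of pat in text. O(N + M) time.
std::vector<std::size_t> kmp_search(const std::string& text, const std::string& pat) {
    std::vector<std::size_t> hits;
    if (pat.empty()) return hits;
    auto border = border_table(pat);
    std::size_t k = 0;                       // number of pattern chars currently matched
    for (std::size_t i = 0; i < text.size(); ++i) {
        while (k > 0 && text[i] != pat[k]) k = border[k - 1];  // fall back on mismatch
        if (text[i] == pat[k]) ++k;
        if (k == pat.size()) {
            hits.push_back(i + 1 - k);       // a match ends at position i
            k = border[k - 1];               // keep going to find overlapping matches
        }
    }
    return hits;
}
```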

- Suffix Array: Sorted list of all suffixes of the text. Space O(N). Construction time: O(N). Binary search for the pattern; can be done in O(M + log N + k) time.

- Suffix Tree: (Compressed) trie of all the suffixes. Space O(N). Construction time: O(N). Walk the pattern down the trie and return all the leaves below the match: O(M + k) time.
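A simple suffix-array sketch. For brevity it builds the array by sorting suffixes directly (O(N^2 log N) worst case, not the O(N) construction mentioned above) and locates the block of suffixes that start with the pattern using two binary searches, which costs O(M log N) plus the k matches rather than O(M + log N + k). The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Start positions of all suffixes of t, in sorted order of the suffixes.
std::vector<std::size_t> build_suffix_array(const std::string& t) {
    std::vector<std::size_t> sa(t.size());
    for (std::size_t i = 0; i < sa.size(); ++i) sa[i] = i;
    std::sort(sa.begin(), sa.end(), [&](std::size_t a, std::size_t b) {
        return t.compare(a, std::string::npos, t, b, std::string::npos) < 0;
    });
    return sa;
}

// All occurrences of p in t. Suffixes starting with p form one contiguous
// block of sa; two binary searches (partition_point) find its boundaries.
std::vector<std::size_t> sa_search(const std::string& t,
                                   const std::vector<std::size_t>& sa,
                                   const std::string& p) {
    assert(sa.size() == t.size());
    auto prefix_cmp = [&](std::size_t s) { return t.compare(s, p.size(), p); };
    auto lo = std::partition_point(sa.begin(), sa.end(),
                                   [&](std::size_t s) { return prefix_cmp(s) < 0; });
    auto hi = std::partition_point(lo, sa.end(),
                                   [&](std::size_t s) { return prefix_cmp(s) == 0; });
    std::vector<std::size_t> hits(lo, hi);
    std::sort(hits.begin(), hits.end());     // report positions in text order
    return hits;
}
```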

Amortized Analysis

- Mentally overcharge ourselves for earlier cheap operations to build up sufficient credit to pay for later expensive operations.

Example:

Recurrences

Templates

Vectors

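#include <iostream>
#include <vector>
using namespace std;

int main() {
    int n = 42;                          // hypothetical example value
    vector<int> v;
    typedef vector<int>::iterator itrv;  // shorthand for the iterator type
    v.push_back(n);                      // append n to the end of v
    for (itrv i = v.begin(); i != v.end(); ++i)
        cout << *i << " ";               // print every element
    cout << endl;
}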

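template <typename T>
class Node {
    template <typename U> friend class GenericSet;  // lets GenericSet<U> access private members

private:
    T key;            // value stored in this node
    Node<T> *next;    // next node in the list (nullptr at the end)

public:
    Node() : key(), next(nullptr) {}
    Node(T k, Node *n) : key(k), next(n) {}
};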

S-ar putea să vă placă și

- CSC211 Data Structures and AlgorithmsDocument7 paginiCSC211 Data Structures and AlgorithmsSouban JavedÎncă nu există evaluări

- Analysis of Merge SortDocument6 paginiAnalysis of Merge Sortelectrical_hackÎncă nu există evaluări

- Full PDFDocument157 paginiFull PDFSumit BhanwalaÎncă nu există evaluări

- COP3530 Cheat Sheet Data StructuresDocument2 paginiCOP3530 Cheat Sheet Data StructuresAndy OrtizÎncă nu există evaluări

- Data Structures - Cheat SheetDocument2 paginiData Structures - Cheat SheetGabriele GattiÎncă nu există evaluări

- Basics of Algorithm Analysis: Slides by Kevin Wayne. All Rights ReservedDocument23 paginiBasics of Algorithm Analysis: Slides by Kevin Wayne. All Rights Reservedalex tylerÎncă nu există evaluări

- Nfa Epsilon DefinedDocument11 paginiNfa Epsilon DefinedJohn JohnstonÎncă nu există evaluări

- Minimum Spanning TreesDocument25 paginiMinimum Spanning TreesLavin sonkerÎncă nu există evaluări

- Categorical Data AnalysisDocument11 paginiCategorical Data Analysisishwar12173Încă nu există evaluări

- Push Down AutomataDocument41 paginiPush Down AutomataVideo TrendÎncă nu există evaluări

- Gate MCQ Questions On Unit IV-GraphsDocument16 paginiGate MCQ Questions On Unit IV-Graphsshubham gandhi100% (1)

- 11 Numpy Cheat SheetDocument1 pagină11 Numpy Cheat SheetToldo94Încă nu există evaluări

- Matlab Cheat Sheet PDFDocument3 paginiMatlab Cheat Sheet PDFKarishmaÎncă nu există evaluări

- Data Structures and Algorithm Course OutlineDocument2 paginiData Structures and Algorithm Course OutlineNapsterÎncă nu există evaluări

- Calculus 1 Analytic Geometry Cheat SheetDocument1 paginăCalculus 1 Analytic Geometry Cheat SheetStephanie DulaÎncă nu există evaluări

- Exercises of Design & AnalysisDocument7 paginiExercises of Design & AnalysisAndyTrinh100% (1)

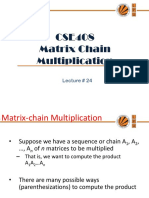

- Lecture 24 (Matrix Chain Multiplication)Document17 paginiLecture 24 (Matrix Chain Multiplication)Almaz RizviÎncă nu există evaluări

- Python RefcardDocument2 paginiPython Refcardsoft003Încă nu există evaluări

- Arsdigita University Month 8: Theory of Computation Professor Shai Simonson Exam 1 (50 Points)Document5 paginiArsdigita University Month 8: Theory of Computation Professor Shai Simonson Exam 1 (50 Points)brightstudentÎncă nu există evaluări

- Informed Search Algorithms: UNIT-2Document35 paginiInformed Search Algorithms: UNIT-2Tariq IqbalÎncă nu există evaluări

- Systems of Linear EquationsDocument39 paginiSystems of Linear EquationsRainingGirlÎncă nu există evaluări

- Compiler Design Left Recursion and Left FactoringDocument14 paginiCompiler Design Left Recursion and Left FactoringMadan Kumar ChandranÎncă nu există evaluări

- Cheatsheet Python A4Document7 paginiCheatsheet Python A4bobe100% (1)

- RecursionDocument38 paginiRecursionanjugaduÎncă nu există evaluări

- Sim 2D TRANSFORMATIONS FinalDocument34 paginiSim 2D TRANSFORMATIONS FinalVaishakh SasikumarÎncă nu există evaluări

- 2.1 Context-Free GrammarsDocument42 pagini2.1 Context-Free GrammarsSuphiyan RabiuÎncă nu există evaluări

- Greedy AlgorithmDocument30 paginiGreedy AlgorithmRahul Rahul100% (1)

- WWW Personal Kent Edu Rmuhamma Algorithms MyAlgorithms SortDocument20 paginiWWW Personal Kent Edu Rmuhamma Algorithms MyAlgorithms SortAhmad Imran Rafique100% (1)

- Answer:: Convert The Following To Clausal FormDocument10 paginiAnswer:: Convert The Following To Clausal Form5140 - SANTHOSH.KÎncă nu există evaluări

- MIPS Practice Questions - ANSWERSDocument5 paginiMIPS Practice Questions - ANSWERSRajeen VenuraÎncă nu există evaluări

- Practice Questions For Chapter 3 With AnswersDocument9 paginiPractice Questions For Chapter 3 With AnswersKHAL1DÎncă nu există evaluări

- Applications of Linear AlgebraDocument4 paginiApplications of Linear AlgebraTehmoor AmjadÎncă nu există evaluări

- Closure Properties of Regular Language Lecture-2Document20 paginiClosure Properties of Regular Language Lecture-2ali yousafÎncă nu există evaluări

- Turing Machine NotesDocument11 paginiTuring Machine NotesShivamÎncă nu există evaluări

- Big O MIT PDFDocument9 paginiBig O MIT PDFJoanÎncă nu există evaluări

- Maths: X STDDocument10 paginiMaths: X STDrashidÎncă nu există evaluări

- Linear Search, Binary SearchDocument5 paginiLinear Search, Binary SearchcjkÎncă nu există evaluări

- Cheat SheetDocument2 paginiCheat SheetVarun NagpalÎncă nu există evaluări

- MCQs by Ali Hassan SoomroDocument19 paginiMCQs by Ali Hassan SoomroMuhammad amir100% (1)

- Singular Value Decomposition Example PDFDocument9 paginiSingular Value Decomposition Example PDFawekeuÎncă nu există evaluări

- hw4 Soln PDFDocument3 paginihw4 Soln PDFPhilip MakÎncă nu există evaluări

- Algorithm Analysis Cheat Sheet PDFDocument2 paginiAlgorithm Analysis Cheat Sheet PDFGabriele GattiÎncă nu există evaluări

- Networking Essentials Exam NotesDocument6 paginiNetworking Essentials Exam NotesHelen Shi100% (1)

- Lecture 15-16 Intro of ProofDocument39 paginiLecture 15-16 Intro of ProofTayyab KhanÎncă nu există evaluări

- Machine Learning NNDocument16 paginiMachine Learning NNMegha100% (1)

- DS&Algo - Lab Assignment Sheet - NewDocument7 paginiDS&Algo - Lab Assignment Sheet - NewVarsha SinghÎncă nu există evaluări

- Daa MCQDocument3 paginiDaa MCQLinkeshwar LeeÎncă nu există evaluări

- Integration CheatSheetDocument4 paginiIntegration CheatSheetahmet mÎncă nu există evaluări

- CFG 2Document6 paginiCFG 2JunaidWahidÎncă nu există evaluări

- Lab Manual - Linear Linked ListDocument4 paginiLab Manual - Linear Linked ListJuiyyy TtttÎncă nu există evaluări

- Big-O Algorithm Complexity Cheat Sheet PDFDocument4 paginiBig-O Algorithm Complexity Cheat Sheet PDFKumar Gaurav100% (1)

- DSA - TreesDocument32 paginiDSA - TreesRajan JaiprakashÎncă nu există evaluări

- AllExercise FA-TOC SipserDocument17 paginiAllExercise FA-TOC SipserMahmudur Rahman0% (1)

- 3 Sem - Data Structure NotesDocument164 pagini3 Sem - Data Structure NotesNemo KÎncă nu există evaluări

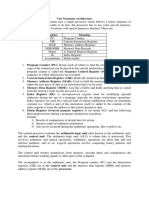

- Von Neumann ArchitectureDocument8 paginiVon Neumann ArchitectureDinesh KumarÎncă nu există evaluări

- Minimum and MaximumDocument28 paginiMinimum and MaximumDinoÎncă nu există evaluări

- Quick SorthvjnvDocument62 paginiQuick SorthvjnvdsrÎncă nu există evaluări

- Foca 1 - BcaDocument8 paginiFoca 1 - BcarahulÎncă nu există evaluări

- Sorting in Linear Time: Counting-SortDocument7 paginiSorting in Linear Time: Counting-Sortcristi_pet4742Încă nu există evaluări

- Genetic AlgorithmDocument14 paginiGenetic AlgorithmRoshan DahalÎncă nu există evaluări

- AI Fundamentals Midterm Exam - Attempt ReviewDocument17 paginiAI Fundamentals Midterm Exam - Attempt ReviewkielmorganzapietoÎncă nu există evaluări

- Using Fuzzy Logic Controller in Ant Colony OptimizationDocument2 paginiUsing Fuzzy Logic Controller in Ant Colony OptimizationkhaldonÎncă nu există evaluări

- 5.1-5.4 Quiz Review PDFDocument4 pagini5.1-5.4 Quiz Review PDFKimberly ConnerÎncă nu există evaluări

- 3 1 OverfittingDocument25 pagini3 1 OverfittingPriti YadavÎncă nu există evaluări

- ETCS 301 - Algorithms Design and Analysis - Unit - IDocument71 paginiETCS 301 - Algorithms Design and Analysis - Unit - IramanÎncă nu există evaluări

- AI Previous Years Question Papers Solved by Suresh S KoppalDocument84 paginiAI Previous Years Question Papers Solved by Suresh S Koppalsureshsalgundi344383% (6)

- Object-Oriented Programming For I.TDocument17 paginiObject-Oriented Programming For I.Tdavidco waiÎncă nu există evaluări

- C ++ ProgrammingDocument10 paginiC ++ ProgrammingSwadesh KumarÎncă nu există evaluări

- School of Electrical Engineering and Computing Department of Electronics and CommunicationDocument12 paginiSchool of Electrical Engineering and Computing Department of Electronics and CommunicationKeneni AlemayehuÎncă nu există evaluări

- Chapter 3 Simplex Method PDFDocument32 paginiChapter 3 Simplex Method PDFRafiqah RashidiÎncă nu există evaluări

- Experiment No. 1 Discretization of Signals: Sampling and ReconstructionDocument19 paginiExperiment No. 1 Discretization of Signals: Sampling and Reconstructionraghav dhamaniÎncă nu există evaluări

- Chaos - Based CryptographyDocument5 paginiChaos - Based CryptographyHenry Merino AcuñaÎncă nu există evaluări

- Optimization of Basic BlockDocument14 paginiOptimization of Basic BlockAKSHITA MISHRAÎncă nu există evaluări

- Arml Power 1996 Pt. 1Document7 paginiArml Power 1996 Pt. 1Alex YuÎncă nu există evaluări

- Python ExerciseDocument2 paginiPython ExercisegurjotstgÎncă nu există evaluări

- QuantumDocument1 paginăQuantumabcÎncă nu există evaluări

- The Chinese University of Hong Kong: Course Code: CSCI 2100A Final Examination 10f 2Document2 paginiThe Chinese University of Hong Kong: Course Code: CSCI 2100A Final Examination 10f 2energy0124Încă nu există evaluări

- Floyd Warshall Algorithm (Python) - Dynamic Programming - FavTutorDocument4 paginiFloyd Warshall Algorithm (Python) - Dynamic Programming - FavTutorAnu VarshiniÎncă nu există evaluări

- Datastructure Unit 1 SKMDocument110 paginiDatastructure Unit 1 SKMkrishna moorthyÎncă nu există evaluări

- Lesson Plan PHP 8Document5 paginiLesson Plan PHP 8Naima IbrahimÎncă nu există evaluări

- Ict AssignmentDocument3 paginiIct AssignmentShanmugapriyaVinodkumarÎncă nu există evaluări

- Project G2 EightPuzzleDocument22 paginiProject G2 EightPuzzleCarlos Ronquillo CastroÎncă nu există evaluări

- Airline Crew Scheduling Models Algorithm PDFDocument27 paginiAirline Crew Scheduling Models Algorithm PDFPoli ValentinaÎncă nu există evaluări

- Every CFG G can also be converted to an equivalent grammar in - A context-free grammar G = (V, Σ, P, S) is in Greibach Normal Form iff its productions are of the formDocument29 paginiEvery CFG G can also be converted to an equivalent grammar in - A context-free grammar G = (V, Σ, P, S) is in Greibach Normal Form iff its productions are of the formmanu manuÎncă nu există evaluări

- GFG Array Questions ImportantDocument4 paginiGFG Array Questions Importantakg299Încă nu există evaluări

- SIMPLEX METHOD - QTM PresentationDocument18 paginiSIMPLEX METHOD - QTM Presentationpratham guptaÎncă nu există evaluări

- Equivalence of Pushdown Automata With Context-Free GrammarDocument45 paginiEquivalence of Pushdown Automata With Context-Free GrammarAhmed BouchÎncă nu există evaluări

- CSTDocument111 paginiCSTAmirul AsyrafÎncă nu există evaluări

- CSI3104 S2011 Midterm1 SolnDocument7 paginiCSI3104 S2011 Midterm1 SolnQuinn JacksonÎncă nu există evaluări