
IJCPE Vol.8 No.4 (December 2007) 31

Iraqi Journal of Chemical and Petroleum Engineering

Vol.8 No.4 (December 2007) 31-37

ISSN: 1997-4884

Prediction of Fractional Hold-Up in RDC Column

Using Artificial Neural Network

Adel Al-Hemiri and Suhayla Akkar

Chemical Engineering Department - College of Engineering - University of Baghdad - Iraq

Abstract

In the literature, several correlations have been proposed for hold-up prediction in the rotating disk contactor (RDC). However, these correlations fail to predict hold-up over a wide range of conditions. Based on a databank of around 611 measurements collected from the open literature, a correlation for hold-up was derived using Artificial Neural Network

(ANN) modeling. The dispersed phase hold up was found to be a function of six parameters: $N$, $v_c$, $v_d$, $\Delta\rho$, $\mu_c/\mu_d$, and $\sigma$. Statistical analysis showed that the proposed correlation has an Average Absolute Relative Error (AARE) of 6.52%

and Standard Deviation (SD) 9.21%. A comparison with selected correlations in the literature showed that the

developed ANN correlation noticeably improved prediction of dispersed phase hold up. The developed correlation also

shows better prediction over a wide range of operation parameters in RDC columns.

Keywords: dispersed phase hold up, RDC, artificial neural networks (ANN).

Introduction

In the design and scale-up of an RDC, it is necessary to explore the hydrodynamic behavior, mass transfer mechanism, and hold-up effect within the equipment under different operating conditions. Dispersed phase hold-up, which represents the total drop population in the RDC column, is defined as the ratio of the volume of the dispersed phase to the volume of the column. Hold-up is the most important hydrodynamic characteristic affecting the performance of an extraction column, because it is related to the interfacial area between the phases by:

$$a = \frac{6x}{d_{32}} \quad (1)$$

where $x$ is the dispersed phase hold-up and $d_{32}$ is the Sauter mean diameter. The hold-up is in turn related to the rate of mass transfer ($W$) via $a$ by:

$$W = K \, a \, V \, \Delta c \quad (2)$$

where $K$ is the mass transfer coefficient, $V$ is the volume of the column and $\Delta c$ is the concentration driving force.
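As a quick numerical illustration of equations (1) and (2), the sketch below computes the specific interfacial area and the mass transfer rate. The function names and the sample values are hypothetical and were chosen only for illustration; they are not taken from the databank used in this work.

```python
def interfacial_area(holdup, d32):
    """Specific interfacial area a = 6*x/d32 in m^2/m^3 (equation 1)."""
    if d32 <= 0:
        raise ValueError("Sauter mean diameter must be positive")
    return 6.0 * holdup / d32


def mass_transfer_rate(K, a, V, delta_c):
    """Mass transfer rate W = K*a*V*delta_c in kg/s (equation 2)."""
    return K * a * V * delta_c


# Illustrative values only (assumed, not from the paper):
# hold-up 0.10, Sauter mean diameter 2 mm, K = 1e-5 m/s,
# column volume 0.01 m^3, driving force 5 kg/m^3.
a = interfacial_area(holdup=0.10, d32=0.002)
W = mass_transfer_rate(K=1e-5, a=a, V=0.01, delta_c=5.0)
print(f"a = {a:.1f} m^2/m^3, W = {W:.2e} kg/s")
```

Because $a$ is proportional to $x$, any relative error in the predicted hold-up carries over directly to the estimated interfacial area and mass transfer rate.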

In solvent extraction the relationship between mass transfer and hydrodynamic performance is complex, and there are many types of contactors, each requiring a special understanding. Numerous experimental studies of dispersed phase hold-up, drop size, mass transfer and mixing behavior within contactors have been reported [1].

In order to determine the interfacial area of the

dispersion for the mass transfer calculation using

equation (2) either of the following should be known:

1. The drop residence time in the contactor.

2. The fraction of the column occupied by the dispersed

phase hold up.

In agitated contactors the residence time distribution is

rather complex and dispersed phase hold up is therefore

usually used for the estimation of interfacial area.

Vermijs and Kramers [2] investigated the performance of an RDC for various values of the rotor speed, total throughput and solvent-to-feed ratio by comparing the separating efficiency with the fractional volume of the dispersed phase under the same circumstances. It was found that under certain conditions the efficiency decreases although the hold-up of the dispersed phase increases. This effect is ascribed to back mixing in the continuous phase due to entrainment by the dispersed phase.

The hold-up increased with increasing solvent-to-feed ratio while the total throughput was kept constant, and the special kind of back mixing in the continuous phase impairs the efficiency of the extraction operation.

Logsdail et al. [3] were the first to introduce the concept of dispersed phase hold-up for the characterization of column design. These authors modified the concept of relating the slip velocity $v_s$ of the dispersed phase to the hold-up in a two-phase system by:

$$v_s = \frac{v_d}{x} + \frac{v_c}{1-x} \quad (3)$$

$$v_o (1-x) = \frac{v_d}{x} + \frac{v_c}{1-x} \quad (4)$$

where $v_o$ is called the characteristic velocity and is defined as the mean velocity of the droplets extrapolated to essentially zero flow rates at a fixed rotor speed. Many correlations have been published relating the dispersed phase hold-up to the characteristic velocity in the form of equation (4), with additional factors for column size, constriction, and droplet coalescence and break-up. These could not be easily applied because of the amount of information required, especially for $v_o$. Some selected reliable correlations are given in table (1). However, these correlations fail to predict hold-up over a wide range of conditions. Thus this work was initiated in order to develop a general correlation using an artificial neural network.
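To make the use of the characteristic-velocity relation concrete, the sketch below solves equation (4), read here as $v_o(1-x) = v_d/x + v_c/(1-x)$, for the hold-up $x$ at given phase velocities. The function names, the scanning step and the sample velocities are assumptions made for illustration; the paper itself does not describe a solution procedure.

```python
def slip_residual(x, v_d, v_c, v_o):
    """Residual of equation (4): v_d/x + v_c/(1-x) - v_o*(1-x)."""
    return v_d / x + v_c / (1.0 - x) - v_o * (1.0 - x)


def holdup_from_characteristic_velocity(v_d, v_c, v_o, step=1e-3, tol=1e-10):
    """Return the smallest hold-up root of equation (4), or None.

    The residual is large and positive as x -> 0, so the interval (0, 1)
    is scanned for the first sign change, which is then refined by
    bisection. None is returned when no root is found, which is read
    here as operation beyond the flooding point.
    """
    lo = step
    while lo + step < 1.0:
        hi = lo + step
        if slip_residual(lo, v_d, v_c, v_o) > 0.0 >= slip_residual(hi, v_d, v_c, v_o):
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                if slip_residual(mid, v_d, v_c, v_o) > 0.0:
                    lo = mid
                else:
                    hi = mid
            return 0.5 * (lo + hi)
        lo = hi
    return None


# Illustrative velocities in m/s (assumed, not from the paper)
x = holdup_from_characteristic_velocity(v_d=0.002, v_c=0.002, v_o=0.05)
print("hold-up:", x)
```

The sketch also shows why such correlations are hard to apply in practice: the result depends entirely on $v_o$, which must itself be known or correlated for each system and rotor speed.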

Artificial Neural Network (ANN)

From an engineering viewpoint, ANNs can be viewed as non-linear empirical models that are especially useful in representing input-output data, making predictions, classifying data, recognizing patterns, and controlling processes. The basic processing element of an ANN will be referred to as a node in this work and is analogous to a single neuron in the human brain. The advantages of using artificial neural networks in contrast with first-principles models or other empirical models are [4-6]:

1. ANNs can be highly non-linear.

2. The structure can be more complex and hence more representative than most other empirical models.

3. The structure does not have to be prespecified.

4. They are quite flexible models.

ANNs have been increasingly applied to many problems in transport planning and engineering, and the feed-forward network with the error back-propagation learning rule, usually called simply back-propagation (Bp), has been the most popular neural network [7].

Back-propagation

Back-propagation was one of the first general techniques developed to train multi-layer networks, which do not have many of the inherent limitations of the earlier, single-layer neural nets. A back-propagation net is a multilayer, feed-forward network that is trained by back-propagating the errors using the generalized Delta rule [8].

The steps for back-propagation training can be shown as follows [9]:

1. Initialize the weights with small, random values.

2. Each input unit broadcasts its value to all of the hidden units.

3. Each hidden unit sums its input signals and applies its activation function to compute its output signal.

4. Each hidden unit sends its signal to the output units.

5. Each output unit sums its input signals and applies its activation function to compute its output signal.

6. Each output unit updates its weights and bias.

The conventional algorithm used for training a multilayer feed-forward (MLFF) network is the Bp algorithm, which is an iterative gradient algorithm designed to minimize the mean-squared error between the desired output and the actual output for a particular input to the network [10]. Basically, Bp learning consists of two passes through the different layers of the network: a forward pass and a backward pass. During the forward pass the synaptic weights of the network are all fixed. During the backward pass, on the other hand, the synaptic weights are all adjusted in accordance with an error-correction rule [11].

The algorithm of the error back-propagation training is as given below [10]:

Step 1: Initialize the network weight values.

Step 2: Sum the weighted inputs and apply the activation function to compute the output of the hidden layer:

$$h_j = f\left(\sum_i X_i W_{ij}\right) \quad (4)$$

where
$h_j$: the actual output of hidden neuron $j$ for input signals $X$.
$X_i$: input signal of input neuron $i$.
$W_{ij}$: synaptic weight between input neuron $i$ and hidden neuron $j$.
$f$: the activation function.

Step 3: Sum the weighted outputs of the hidden layer and apply the activation function to compute the output of the output layer:

$$O_k = f\left(\sum_j h_j W_{jk}\right) \quad (5)$$

where
$O_k$: the actual output of output neuron $k$.
$W_{jk}$: synaptic weight between hidden neuron $j$ and output neuron $k$.

Step 4: Compute the back-propagation error:

$$\delta_k = \left(d_k - O_k\right) f'\left(\sum_j h_j W_{jk}\right) \quad (6)$$

where
$f'$: the derivative of the activation function.
$d_k$: the desired output of output neuron $k$.

Step 5: Calculate the weight correction term:

$$\Delta W_{jk}(n) = \eta \, \delta_k h_j + \alpha \, \Delta W_{jk}(n-1) \quad (7)$$

where $\eta$ is the learning rate and $\alpha$ is the momentum coefficient.

Step 6: Sum the delta inputs for each hidden unit and calculate the error term:

$$\delta_j = \left(\sum_k \delta_k W_{jk}\right) f'\left(\sum_i X_i W_{ij}\right) \quad (8)$$

Step 7: Calculate the weight correction term:

$$\Delta W_{ij}(n) = \eta \, \delta_j X_i + \alpha \, \Delta W_{ij}(n-1) \quad (9)$$

Step 8: Update the weights:

$$W_{jk}(n+1) = W_{jk}(n) + \Delta W_{jk}(n) \quad (10)$$

Step 9: Repeat from step 2 until the error reaches a given value:

$$MSE = \frac{1}{2p} \sum_p \sum_k \left(d_k^p - O_k^p\right)^2$$

where $p$ is the number of patterns in the training set.

Step 10: End.
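The steps above can be sketched in a few dozen lines of plain Python. This is a minimal illustration under stated assumptions, not the MATLAB code used in this work: the activation is taken to be tanh in both layers, the update is read as the generalized delta rule with learning rate `eta` and momentum `alpha`, biases are omitted because equations (4) to (10) do not show them, and all function names and the toy data are hypothetical.

```python
import math
import random


def tanh_prime(net):
    """Derivative of tanh evaluated at the net input."""
    return 1.0 - math.tanh(net) ** 2


def init_network(n_in, n_hid, n_out, seed=0):
    """Step 1: small random weights; previous corrections start at zero."""
    rng = random.Random(seed)
    return {
        "W_ij": [[rng.uniform(-0.5, 0.5) for _ in range(n_hid)] for _ in range(n_in)],
        "W_jk": [[rng.uniform(-0.5, 0.5) for _ in range(n_out)] for _ in range(n_hid)],
        "dW_ij": [[0.0] * n_hid for _ in range(n_in)],
        "dW_jk": [[0.0] * n_out for _ in range(n_hid)],
    }


def train_pattern(net, X, d, eta, alpha):
    """Steps 2-8 for one pattern; returns half the squared output error."""
    W_ij, W_jk = net["W_ij"], net["W_jk"]
    n_in, n_hid, n_out = len(W_ij), len(W_jk), len(W_jk[0])
    # Steps 2 and 3: forward pass through hidden and output layers
    net_h = [sum(X[i] * W_ij[i][j] for i in range(n_in)) for j in range(n_hid)]
    h = [math.tanh(v) for v in net_h]
    net_o = [sum(h[j] * W_jk[j][k] for j in range(n_hid)) for k in range(n_out)]
    O = [math.tanh(v) for v in net_o]
    # Step 4: error term of each output neuron
    delta_k = [(d[k] - O[k]) * tanh_prime(net_o[k]) for k in range(n_out)]
    # Step 6: error term of each hidden neuron (uses W_jk before its update)
    delta_j = [
        sum(delta_k[k] * W_jk[j][k] for k in range(n_out)) * tanh_prime(net_h[j])
        for j in range(n_hid)
    ]
    # Steps 5 and 8: hidden-to-output correction with momentum, then update
    for j in range(n_hid):
        for k in range(n_out):
            net["dW_jk"][j][k] = eta * delta_k[k] * h[j] + alpha * net["dW_jk"][j][k]
            W_jk[j][k] += net["dW_jk"][j][k]
    # Steps 7 and 8: input-to-hidden correction with momentum, then update
    for i in range(n_in):
        for j in range(n_hid):
            net["dW_ij"][i][j] = eta * delta_j[j] * X[i] + alpha * net["dW_ij"][i][j]
            W_ij[i][j] += net["dW_ij"][i][j]
    return 0.5 * sum((d[k] - O[k]) ** 2 for k in range(n_out))


def train(net, patterns, epochs, eta=0.05, alpha=0.5):
    """Step 9: sweep the training set repeatedly; returns the MSE per epoch."""
    history = []
    for _ in range(epochs):
        total = sum(train_pattern(net, X, d, eta, alpha) for X, d in patterns)
        history.append(total / len(patterns))
    return history


# Toy data (hypothetical): two inputs, one target inside (-1, 1)
toy = [([0.0, 1.0], [0.5]), ([1.0, 0.0], [-0.5]), ([1.0, 1.0], [0.0])]
mse_history = train(init_network(2, 3, 1, seed=1), toy, epochs=300)
```

Weights are updated after every pattern here (online training); a batch variant would accumulate the corrections over all patterns before applying them.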

Bp is easy to implement and has been shown to produce relatively good results in many applications. It is capable of approximating arbitrary non-linear mappings. However, two serious disadvantages of the Bp algorithm are its slow rate of convergence, which requires very long training times, and its tendency to get stuck in local minima. The success of Bp methods very much depends on problem-specific parameter settings and on the topology of the network [9].

The Activation Function used with the Back-Propagation

Three transfer functions are most commonly used for back-propagation, although other differentiable transfer functions can be created and used with back-propagation if desired. These functions are tansig, logsig, and purelin. The function logsig generates outputs between 0 and 1 as the neuron's net input goes from negative to positive infinity. Alternatively, multilayer networks may use the tan-sigmoid transfer function tansig, whose outputs lie between -1 and 1. Occasionally, the linear transfer function purelin is used in back-propagation networks [8].

If the last layer of a multilayer network has sigmoid neurons, then the outputs of the network are limited to a small range. If linear output neurons are used, the network outputs can take any value. In the present simulation the tansig function is used.
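For readers without access to the MATLAB toolbox, the three transfer functions can be written out directly. The sketch below is a plain-Python equivalent rather than the toolbox code; the names mirror the MATLAB ones, and the derivative helpers are additions for illustration.

```python
import math


def tansig(n):
    """Tan-sigmoid: 2/(1 + exp(-2n)) - 1, which equals tanh(n); range (-1, 1)."""
    return math.tanh(n)


def logsig(n):
    """Log-sigmoid: 1/(1 + exp(-n)); range (0, 1). Written to avoid overflow."""
    if n >= 0:
        return 1.0 / (1.0 + math.exp(-n))
    e = math.exp(n)
    return e / (1.0 + e)


def purelin(n):
    """Linear transfer function: output equals the net input."""
    return n


def tansig_prime(n):
    """Derivative of tansig, needed for the error terms in back-propagation."""
    return 1.0 - math.tanh(n) ** 2


def logsig_prime(n):
    """Derivative of logsig."""
    s = logsig(n)
    return s * (1.0 - s)
```

Because tansig saturates at -1 and 1, a network with a tansig output neuron can only reproduce targets inside that interval, which is the range limitation mentioned above.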

Modeling Correlation of ANN

The modeling of the ANN correlation began with the collection of a large data bank, followed by the creation of the learning file, which was made by randomly selecting about 70% of the database to train the network. The remaining 30% of the data was then used to check the generalization capability of the model. The last step was to perform a neural correlation and to validate it statistically. The steps of modeling are as follows.

Collection of Data

The first step is the collection of data. Many investigators have studied the hydrodynamics of the RDC based on the dispersed phase hold-up. In this model about 611 experimental points were collected for mass transfer from the continuous to the dispersed phase (c→d), for mass transfer from the dispersed to the continuous phase (d→c) and for the case of no mass transfer in the RDC. The data were divided into training and test sets: the neural network was trained on 70% of the data and tested on 30%. The data include nine chemical systems with a large range of rotor speed and of the velocities of both the continuous and dispersed phases, as well as the physical properties of each chemical system. All of these parameters are inputs to the neural network and there is one output, the hold-up of the dispersed phase.
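A random 70/30 split of this kind can be sketched as follows. The function name, the fixed seed and the rule of truncating the training count to an integer are assumptions; the paper does not state how the random selection was implemented.

```python
import random


def split_dataset(records, train_fraction=0.7, seed=0):
    """Shuffle a copy of the records and split it into training and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = int(train_fraction * len(shuffled))  # truncation is an assumption
    return shuffled[:n_train], shuffled[n_train:]


# Stand-in for the 611 collected data points (indices only)
train_set, test_set = split_dataset(range(611))
print(len(train_set), len(test_set))
```

With 611 points and truncation, this gives 427 training points and 184 test points, which agrees with the set sizes quoted elsewhere in this paper.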

The Structure of Artificial Neural Network

In this work, a multilayer neural network has been used, as it is effective in finding complex non-linear relationships. It has been reported that multilayer ANN models with only one hidden layer are universal approximators. Hence, a three-layer feed-forward neural network was chosen as the correlation model. The weighting coefficients of the neural network were calculated using MATLAB programming. The structure of the artificial neural network was built as follows:

1. Input layer: A layer of neurons that receive information from external sources and pass this information to the network for processing. These may be either sensory inputs or signals from other systems outside the one being modeled. In this work there are six input neurons in the layer, and a set of (427) data points is available for training.

2. Hidden layer: A layer of neurons that receives information from the input layer and processes it in a hidden way. It has no direct connections to the outside world (inputs or outputs). All connections from the hidden layer are to other layers within the system. The number of neurons in the hidden layer is twenty-one. This number gave the best results and was found by trial and error. With more neurons in the hidden layer the network becomes more complicated, and the results suggest that the present problem is not complex enough to require a more complicated network. Hence, satisfactory results are achieved by keeping the number of neurons in the hidden layer at the best value of twenty-one.

3. Output layer: A layer of one neuron that receives processed information and sends output signals out of the system. Here the output is the hold-up of the dispersed phase in the RDC.

4. Bias: The function of the bias is to provide a threshold for the activation of neurons. The bias input is connected to each of the hidden neurons in the network.

The structure of the multilayer ANN model is illustrated in figure (1).
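The [6-21-1] structure described above can be sketched as a single forward pass. This is only an illustration: the weights below are random placeholders rather than the fitted coefficients, the tan-sigmoid is assumed for both the hidden and the output neuron, and a bias is attached to the hidden neurons only, as the text states.

```python
import math
import random

N_INPUT, N_HIDDEN = 6, 21


def forward(x, w_h, b, w_o):
    """Forward pass: x has 6 inputs, w_h is 21x6, b has 21 biases, w_o has 21 weights."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + bias)
        for row, bias in zip(w_h, b)
    ]
    return math.tanh(sum(w * h for w, h in zip(w_o, hidden)))


def count_parameters(n_input=N_INPUT, n_hidden=N_HIDDEN):
    """Hidden weights + hidden biases + output weights (no output bias assumed)."""
    return n_input * n_hidden + n_hidden + n_hidden


# Placeholder weights (random, NOT the fitted values of this work)
rng = random.Random(0)
w_h = [[rng.uniform(-1, 1) for _ in range(N_INPUT)] for _ in range(N_HIDDEN)]
b = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
w_o = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]

y = forward([0.1, 0.2, 0.3, 0.4, 0.5, 0.6], w_h, b, w_o)
print(count_parameters(), y)
```

Under these assumptions the network has 168 adjustable coefficients, to be fitted from the 427 training points.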


Training of Artificial Neural Network

The training phase starts with randomly chosen initial weight values. Then the back-propagation algorithm is applied; after each iteration, the weights are modified so that the cumulative error decreases. In back-propagation, the weight changes are proportional to the negative gradient of the error. More details about this learning algorithm are shown in figure (1). Back-propagation can give excellent performance. This algorithm is used to calculate the values of the weights, and the following procedure (called "supervised learning") is then used to determine the values of the weights of the network:

1. For a given ANN architecture, the values of the weights in the network are initialized as small random numbers.

2. The inputs of the training set are sent to the network and the resulting outputs are calculated.

3. The measure of the error between the outputs of the network and the known correct (target) values is calculated.

4. The gradients of the objective function with respect to each of the individual weights are calculated.

5. The weights are changed according to the optimization search direction.

6. The procedure returns to step 2.

7. The iteration terminates when the value of the objective function calculated using the data in the test set is acceptably small, that is, when the predictions approach the experimental values.

The trial-and-error search for the best ANN correlation model is summarized in table 2.

Table (2) Network parameters in ANN model

Structure   MSE      No. of iterations   Learning rate   Momentum coefficient   Transfer function
[6-16-1]    0.1      2590                0.7             0.9                    Tan sigmoid
[6-18-1]    0.01     4321                0.65            0.9                    Tan sigmoid
[6-21-1]    0.0001   9103                0.75            0.9                    Tan sigmoid

The lower the MSE (Mean Square Error), the more accurate the network, because MSE is defined as:

$$MSE = \frac{1}{2p} \sum_p \sum_k \left(d_k^p - O_k^p\right)^2 \quad (13)$$

where $p$ is the number of patterns in the training set, $k$ is the index of the output neuron, $d_k^p$ is the desired output and $O_k^p$ is the actual output.

The learning process includes the procedure by which the data from the input neurons are propagated through the network via the interconnections. Each neuron in a layer is connected to every neuron in the adjacent layers. A scalar weight is associated with each interconnection.

Neurons in the hidden layer receive weighted inputs from each of the neurons in the previous layer, sum them, and then pass the resulting summation through a non-linear activation function (the tan sigmoid function).

The way artificial neural networks learn patterns can be equated to determining the proper values of the connection strengths (i.e. the weight matrices $w_h$ and $w_o$ illustrated in figure 1) that allow all the nodes to achieve the correct state of activation for a given pattern of inputs. The matrix, bias, and vector given in equations (14), (15), and (16) show the resulting weight coefficients of the ANN correlation, where $w_h$ is the matrix containing the weight vectors for the nodes in the hidden layer, $w_o$ is the vector containing the weights for the nodes in the output layer and $b$ is the bias.

(14)

(15)

(16)


Simulation Results

The network architecture used for predicting hold-up, illustrated in figure (1), consists of six input neurons corresponding to the state variables of the system, 21 hidden neurons and one output neuron. All neurons in each layer were fully connected to the neurons in the adjacent layer. Figure (2) compares the hold-up predicted by the ANN correlation with the experimental hold-up for the training set.

Figure (2) Comparison between experimental and predicted hold up in training set

Figure (3) Comparison between experimental and predicted hold up in testing set

Test of the Proposed ANN

The purely empirical model was tested on data that were not used to train the neural network and yielded very accurate predictions. After successful training, another data set was employed to test the hold-up predictions of the network. The same model was used to generate (184) new predicted values. The predictions are plotted against the experimental values in figure (3).

Statistical Analysis

Statistical analysis based on the test data was carried out to validate the accuracy of the output of the previously described ANN correlation model. The structure chosen for each model should give the best output prediction, which is checked by statistical analysis. The statistical analysis of the predictions is based on the following criteria:

1. The AARE (Average Absolute Relative Error) should be a minimum:

$$AARE = \frac{1}{N} \sum_{i=1}^{N} \left| \frac{x_{prediction} - x_{experimental}}{x_{experimental}} \right| \quad (17)$$

where $N$ here is the number of data points and $x$ is the hold-up.

2. The standard deviation should be a minimum:

$$SD = \sqrt{ \sum_{i=1}^{N} \frac{ \left[ \left| \left( x_{prediction} - x_{experimental} \right) / x_{experimental} \right| - AARE \right]^2 }{N-1} } \quad (18)$$

3. The correlation coefficient $R$ between the experimental and predicted values should be around unity:

$$R = \frac{ \sum_{i=1}^{N} \left( x_{experimental(i)} - \bar{x}_{experimental} \right) \left( x_{prediction(i)} - \bar{x}_{prediction} \right) }{ \sqrt{ \sum_{i=1}^{N} \left( x_{experimental(i)} - \bar{x}_{experimental} \right)^2 \sum_{i=1}^{N} \left( x_{prediction(i)} - \bar{x}_{prediction} \right)^2 } } \quad (19)$$

where $\bar{x}_{experimental}$ is the mean hold-up of the experimental points and $\bar{x}_{prediction}$ is the mean hold-up of the predicted points.
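The three criteria can be computed with a few lines of Python, as sketched below. The function names and the sample values are hypothetical. Equation (18) is read here as the sample standard deviation of the absolute relative errors about AARE, and equation (19) as the usual Pearson correlation coefficient; both readings are assumptions where the printed formulas are ambiguous.

```python
import math


def aare(x_exp, x_pred):
    """Average absolute relative error, equation (17), as a fraction."""
    return sum(abs((p - e) / e) for e, p in zip(x_exp, x_pred)) / len(x_exp)


def standard_deviation(x_exp, x_pred):
    """Standard deviation of the absolute relative errors, equation (18)."""
    mean_err = aare(x_exp, x_pred)
    n = len(x_exp)
    squares = sum((abs((p - e) / e) - mean_err) ** 2 for e, p in zip(x_exp, x_pred))
    return math.sqrt(squares / (n - 1))


def correlation_coefficient(x_exp, x_pred):
    """Correlation coefficient between experimental and predicted values, equation (19)."""
    n = len(x_exp)
    mean_e = sum(x_exp) / n
    mean_p = sum(x_pred) / n
    cov = sum((e - mean_e) * (p - mean_p) for e, p in zip(x_exp, x_pred))
    var_e = sum((e - mean_e) ** 2 for e in x_exp)
    var_p = sum((p - mean_p) ** 2 for p in x_pred)
    return cov / math.sqrt(var_e * var_p)


# Hypothetical hold-up values, for illustration only
x_experimental = [0.10, 0.20, 0.40]
x_prediction = [0.11, 0.18, 0.40]
print(f"AARE = {100 * aare(x_experimental, x_prediction):.2f}%")
print(f"SD   = {100 * standard_deviation(x_experimental, x_prediction):.2f}%")
print(f"R    = {correlation_coefficient(x_experimental, x_prediction):.4f}")
```

Multiplying AARE and SD by 100 gives the percentages in which these criteria are reported.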

The literature correlations (in table 1) were used to estimate the hold-up. These correlations show poor agreement between the predicted and experimental hold-up values compared with the ANN correlation. Table (3) compares these correlations with the ANN prediction for the testing set.

Table (3) Comparison of ANN and previous literature correlations in testing set

Correlation          AARE%   S.D%    R
Kasatkin (1962)      51.93   32.55   0.695
Murakami (1978)      41.29   23.94   0.7914
Hartland (1987)      32.79   22.59   0.778
Kalaichelvi (1998)   32      27.63   0.726
ANN (this work)      6.52    9.21    0.998


Conclusions

The ANN correlation shows a noticeable improvement in the prediction of dispersed phase hold-up. The neural network correlation yields an AARE of 6.52% and a standard deviation of 9.21%, which are better than those obtained for the selected literature correlations. The ANN correlation also yielded improved predictions for a variety of liquid systems and a wide range of operating parameters. The numbers of input and output units are fixed by the problem (here, 6 and 1 respectively), but the choice of the number of hidden units is flexible. In this work the best results were obtained with 21 hidden neurons.

Nomenclature

$a$            Interfacial mass transfer area                       m²/m³
$b$            Bias
$\Delta c$     Concentration driving force                          kg/m³
$d_{32}$       Sauter mean diameter
$D_r$          Diameter of rotary disk                              m
$D_s$          Stator ring opening                                  m
$D_t$          Diameter of RDC column                               m
$f$            The activation function
$f'$           The derivative of the activation function
$g$            Gravitational constant                               m/s²
$h_j$          The actual output of hidden neuron j
$K$            Mass transfer coefficient                            m/s
$n$            Number of input neurons
$N$            Speed of rotor disk                                  rps
$O_k$          The actual output of neuron k
$p$            The number of patterns in the training set
$R$            Correlation coefficient
$V$            Volume of column                                     m³
$v_c$          Velocity of continuous phase                         m/s
$v_d$          Velocity of dispersed phase                          m/s
$v_o$          Characteristic velocity                              m/s
$v_s$          Slip velocity                                        m/s
$W$            Rate of mass transfer                                kg/s
$W_{ij}$       Synaptic weights between input and hidden neuron
$W_{jk}$       Synaptic weights between hidden and output neuron
$x$            Hold up
$X_i$          Input vector
$\bar{x}$      Mean hold up
$z_c$          Height of compartment                                m
$z_t$          Height of RDC column                                 m

Greek symbols

$\alpha$       Momentum to accelerate the network convergence process
$\delta_k$     The error term
$\eta$         The learning rate
$\mu$          Viscosity                                            kg/m.s
$\sigma$       Interfacial tension                                  N/m
$\rho$         Density                                              kg/m³
$\Delta\rho$   Density difference                                   kg/m³

Subscripts

c Continuous phase

d Dispersed phase

References

1. Bailes, P.J., Gledhill, J., Godfrey, J.C., and Slater, M.J., "Hydrodynamic behavior of packed, rotating disc contactor, and Kuhn", Chem. Eng. Res. Des., 64, 43-55, (1986).

2. Vermijs, H.J., and Kramers, H., "Liquid-liquid extraction in rotating disc contactor", Chem. Eng. Sci., 3, 55-64, (1954).

3. Logsdail, D.H., Thornton, J.D., and Pratt, H.R.C., "Liquid-liquid extraction part XII: flooding rates and performance data for a rotating disc contactor", Trans. Inst. Chem. Eng., 35, 301-315, (1957).

4. David M.H., "Applications of artificial neural networks in chemical engineering", Korean J. Chem. Eng., 17, 373-392, (2002).

5. Patterson, "Artificial neural networks theory and applications", Prentice Hall, (1996).

6. Sivanadam, S.N., "Introduction to artificial neural networks", Vikas Publishing House Pvt. Ltd, (2003).

7. Freeman, J.A., and Skapura, D.M., "Neural networks", Jordan University of Science and Technology, July (1992).

8. MATLAB, Version 7, June 2003, "Neural network toolbox".

9. Leonard, J., and Kramer, M.A., "Improvement of the back-propagation algorithm for training neural networks", Comp. Chem. Eng., 14, 337-341, (1990).

10. Lendaris, G., "Supervised learning in ANN from introduction to artificial intelligence", New York, April 7, (2004).

11. Lippmann, R.P., "An introduction to computing with neural nets", IEEE Magazine, April, pp. 4-22, (1987).

12. Kalaichelvi, P., and Murugesan, T., "Dispersed phase hold up in rotating disc contactor", Bioprocess Engineering, 18, 105-111, (1998).

13. Kasatkin, A.G., and Kagan, S.Z., Appl. Chem. USSR, 35, 1903, (1962) [Cited in Murakami, A., Misonou, A., and Inoue, A., 1978].

14. Kumar, A., and Hartland, S., "Independent prediction of slip velocity and hold up in liquid-liquid extraction columns", Can. J. Chem. Eng., 67, 17, (1987).

15. Murakami, A., Misonou, A., and Inoue, K., "Dispersed phase hold up in a rotating disc extraction column", International Chemical Engineering, 18, 1, (1978).

S-ar putea să vă placă și

- Content For Moringa Powder Product Label DesignDocument2 paginiContent For Moringa Powder Product Label DesignDrVishwanatha HNÎncă nu există evaluări

- Is 6608 2004Document13 paginiIs 6608 2004DrVishwanatha HNÎncă nu există evaluări

- Distillation PlantDocument3 paginiDistillation PlantDrVishwanatha HN100% (1)

- Operation and Cleaning of Glass Lined ReactorDocument2 paginiOperation and Cleaning of Glass Lined ReactorDrVishwanatha HN100% (1)

- Sarshika CostingDocument3 paginiSarshika CostingDrVishwanatha HNÎncă nu există evaluări

- Vitamin CDocument3 paginiVitamin CDrkrishnasarma pathyÎncă nu există evaluări

- CalculationDocument5 paginiCalculationDrVishwanatha HNÎncă nu există evaluări

- CH 6503 Cet IiDocument66 paginiCH 6503 Cet IiDrVishwanatha HNÎncă nu există evaluări

- Ideal Non Ideal SolutionDocument6 paginiIdeal Non Ideal SolutionDrVishwanatha HNÎncă nu există evaluări

- Fertilizer, Micronutrients, Biopesticieds and Bio FertilizersDocument6 paginiFertilizer, Micronutrients, Biopesticieds and Bio FertilizersDrVishwanatha HNÎncă nu există evaluări

- Sneha IndustriesDocument13 paginiSneha IndustriesDrVishwanatha HNÎncă nu există evaluări

- 10 1 1 529 7411 PDFDocument39 pagini10 1 1 529 7411 PDFDrVishwanatha HNÎncă nu există evaluări

- 987 PDFDocument5 pagini987 PDFDrVishwanatha HNÎncă nu există evaluări

- Effective Utilization of ZincDocument1 paginăEffective Utilization of ZincDrVishwanatha HNÎncă nu există evaluări

- Material BalanceDocument2 paginiMaterial BalanceDrVishwanatha HNÎncă nu există evaluări

- Chapter - Vii: Moringa Oleifera Seed PowderDocument18 paginiChapter - Vii: Moringa Oleifera Seed PowderDrVishwanatha HNÎncă nu există evaluări

- 987 PDF PDFDocument5 pagini987 PDF PDFDrVishwanatha HNÎncă nu există evaluări

- TT RCT: No - TC I/2 /2 /2Document3 paginiTT RCT: No - TC I/2 /2 /2DrVishwanatha HNÎncă nu există evaluări

- ReviewDocument1 paginăReviewDrVishwanatha HNÎncă nu există evaluări

- BIS Specification Updation 2015Document10 paginiBIS Specification Updation 2015DrVishwanatha HNÎncă nu există evaluări

- Effective Utilization of ZincDocument1 paginăEffective Utilization of ZincDrVishwanatha HNÎncă nu există evaluări

- Project ProfileDocument3 paginiProject ProfileDrVishwanatha HNÎncă nu există evaluări

- Packing TechnologyDocument5 paginiPacking TechnologyDrVishwanatha HNÎncă nu există evaluări

- Introduction To Chemical EngineeringDocument138 paginiIntroduction To Chemical EngineeringDrVishwanatha HN0% (1)

- FluorideDocument63 paginiFluorideSuresh SinghÎncă nu există evaluări

- Chapter02 PDFDocument20 paginiChapter02 PDFDrVishwanatha HNÎncă nu există evaluări

- PFA-Act & RulesDocument216 paginiPFA-Act & RulesSougata Pramanick100% (2)

- Introduction To Chemical EngineeringDocument138 paginiIntroduction To Chemical EngineeringDrVishwanatha HN0% (1)

- Partitioning Studies of A-Lactalbumin in Environmental Friendly Poly (Ethylene Glycol) - Citrate Salt Aqueous Two Phase SystemsDocument7 paginiPartitioning Studies of A-Lactalbumin in Environmental Friendly Poly (Ethylene Glycol) - Citrate Salt Aqueous Two Phase SystemsDrVishwanatha HNÎncă nu există evaluări

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Sri Ramakrishna Engineering College: CircularDocument4 paginiSri Ramakrishna Engineering College: CircularDhaha RoohullaÎncă nu există evaluări

- Mental Status Exam Report OutlineDocument3 paginiMental Status Exam Report OutlineRahman Akinlusi100% (1)

- Lab Report Evaluation Form - Revise 2 0Document5 paginiLab Report Evaluation Form - Revise 2 0markÎncă nu există evaluări

- Homework Strategies For Students With DyslexiaDocument9 paginiHomework Strategies For Students With Dyslexiaert78cgp100% (1)

- Penilaian Preceptorship (Mini-Cex, Dops, Longcase, Soca) 2020Document49 paginiPenilaian Preceptorship (Mini-Cex, Dops, Longcase, Soca) 2020Dian tri febrianaÎncă nu există evaluări

- Detection of Flood Images Using Different ClassifiersDocument5 paginiDetection of Flood Images Using Different ClassifiersInternational Journal of Innovative Science and Research TechnologyÎncă nu există evaluări

- PN Initial Assessment and Triage Questionnaire Coach VersionDocument13 paginiPN Initial Assessment and Triage Questionnaire Coach Versionpraveen100% (1)

- Learning Styles and PreferencesDocument5 paginiLearning Styles and Preferencesapi-3716467Încă nu există evaluări

- Interchange4thEd IntroLevel Unit11 Listening Worksheet PDFDocument2 paginiInterchange4thEd IntroLevel Unit11 Listening Worksheet PDFFlux MillsÎncă nu există evaluări

- Let Review 2023 Teaching Profession Legal Issues in EducationDocument10 paginiLet Review 2023 Teaching Profession Legal Issues in EducationJeah Asilum Dalogdog100% (2)

- Types of UniversitiesDocument2 paginiTypes of Universitiesashtikar_prabodh3313Încă nu există evaluări

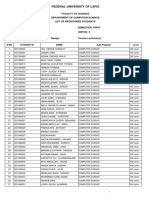

- Final Schedule of Faculty Teachers 2018Document25 paginiFinal Schedule of Faculty Teachers 2018Maripres SalvaÎncă nu există evaluări

- 1-Business Analysis Fundamentals BACCM PDFDocument8 pagini1-Business Analysis Fundamentals BACCM PDFZYSHANÎncă nu există evaluări

- Discontinuation Discharge NoteDocument5 paginiDiscontinuation Discharge Noteapi-582004078Încă nu există evaluări

- Valley Lesson PlanDocument3 paginiValley Lesson Planapi-258572357Încă nu există evaluări

- Topic 8 Team LeadershipDocument45 paginiTopic 8 Team Leadershipfariez79Încă nu există evaluări

- Career After Electrical EngineeringDocument28 paginiCareer After Electrical EngineeringNileshÎncă nu există evaluări

- Portfolio PresentationDocument9 paginiPortfolio Presentationdilhaninperera47Încă nu există evaluări

- ASEAN Work Plan On Youth 2021 2025Document94 paginiASEAN Work Plan On Youth 2021 2025Gregor CleganeÎncă nu există evaluări

- Work Report Human ValuesDocument12 paginiWork Report Human ValuesTeen BodybuildingÎncă nu există evaluări

- IE Wri Task 1 (IE Wri Corner) - Bài giảng LiteracyDocument11 paginiIE Wri Task 1 (IE Wri Corner) - Bài giảng Literacyhiep huyÎncă nu există evaluări

- Rsync: (Remote File Synchronization Algorithm)Document3 paginiRsync: (Remote File Synchronization Algorithm)RF786Încă nu există evaluări

- Theory of Valuation-DeweyDocument75 paginiTheory of Valuation-Deweyyuxue1366100% (1)

- Lesson Plan Day 1Document5 paginiLesson Plan Day 1api-313296159Încă nu există evaluări

- B.Tech - Civil-SyllabusDocument126 paginiB.Tech - Civil-SyllabusSrinivas JupalliÎncă nu există evaluări

- Other ActivitiesDocument2 paginiOther ActivitiesdarshanasgaikwadÎncă nu există evaluări

- Mechanical Engineer Resume For FresherDocument5 paginiMechanical Engineer Resume For FresherIrfan Sayeem SultanÎncă nu există evaluări

- Kindergarten: LessonsDocument6 paginiKindergarten: Lessonslourein ancogÎncă nu există evaluări

- CSC 316Document5 paginiCSC 316Kc MamaÎncă nu există evaluări

- University of The East - College of EngineeringDocument2 paginiUniversity of The East - College of EngineeringPaulAndersonÎncă nu există evaluări