
Multiple Regression Analysis

Uploaded by MD AbdulMoid Mukarram


CORRELATION AND MULTIPLE REGRESSION ANALYSIS

Correlation analysis is used to measure the degree of association between two sets of quantitative data.

The correlation coefficient measures the degree of linear relationship between two variables. Its value ranges from -1 (perfect negative correlation) through 0 (no correlation) to +1 (perfect positive correlation).
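As an illustration, the (Pearson) correlation coefficient can be computed straight from its definition: the sum of cross-products of deviations from the means, divided by the square root of the product of the two sums of squared deviations. This is a minimal sketch assuming two equal-length numeric lists; the function name `pearson_r` and the temperature and sales figures are hypothetical, chosen only to echo the ice cream example.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Cross-products and squared deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: daily maximum temperature (deg C) vs. ice cream sales (units)
temps = [22, 25, 28, 31, 34]
sales = [110, 135, 150, 180, 200]
print(round(pearson_r(temps, sales), 3))  # strong positive correlation
print(pearson_r([1, 2, 3], [3, 2, 1]))    # -1.0: perfect negative correlation
```

The coefficient is undefined (division by zero) when either series is constant, because its sum of squared deviations is zero.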

A correlation can be computed for any two sets of data, even when the two have no relevance to each other.

How are sales of 7UP correlated with sales of Mirinda?

How is advertising expenditure correlated with other promotional expenditure?

Are daily ice cream sales correlated with the daily maximum temperature?

Correlation does not necessarily mean there is a causal effect. Almost any two strings of numbers will show some correlation, but this does not imply that one variable causes a change in the other, or that it depends on the other.
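To see why correlation alone says nothing about causation, the sketch below generates two random walks that are independent by construction and computes their sample correlation with NumPy's `np.corrcoef`. The seed and series length are arbitrary assumptions; other seeds give other values, but the sample correlation is essentially never exactly zero, and for trending series such as random walks it can be large.

```python
import numpy as np

# Two series generated independently: by construction neither causes the other.
rng = np.random.default_rng(seed=7)       # arbitrary seed for reproducibility
walk_a = np.cumsum(rng.normal(size=100))  # random walk A
walk_b = np.cumsum(rng.normal(size=100))  # random walk B, independent of A

# Sample Pearson correlation: off-diagonal entry of the 2x2 correlation matrix
r = np.corrcoef(walk_a, walk_b)[0, 1]
print(f"sample correlation of two unrelated series: r = {r:.3f}")
```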

[Figure: Positive Correlation (vertical axis: wt)]

[Figure: Negative Correlation]

In regression analysis, there is a dependent variable and one or more independent variables: the dependent variable is predicted from the independent variables.

The main objective of regression analysis is to explain the variation in one variable (called the dependent variable) based on the variation in one or more other variables (called the independent variables).

Regression analysis examines associative relationships between a metric dependent variable and one or more independent variables in the following ways:

- Determine whether the independent variables explain a significant variation in the dependent variable: whether a relationship exists.
- Determine how much of the variation in the dependent variable can be explained by the independent variables: the strength of the relationship.
- Determine the structure or form of the relationship: the mathematical equation relating the independent and dependent variables.
- Predict the values of the dependent variable.

Application areas include explaining variations in the sales of a product based on advertising expenses, the number of salespeople, the number of sales offices, or all of these variables together.

If a single independent variable is used to explain the variation in one dependent variable, the model is known as a simple linear regression.
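For a simple linear regression $Y = a + bx$, the least-squares estimates have a closed form: the slope is the sum of cross-products of deviations divided by the sum of squared deviations of $x$, and the fitted line passes through the point of means. A minimal sketch; the function name `fit_simple_ols` and the advertising and sales figures are hypothetical.

```python
def fit_simple_ols(xs, ys):
    """Least-squares intercept a and slope b for the model y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx            # slope: co-variation of x and y over variation of x
    a = mean_y - b * mean_x  # intercept: line passes through (mean_x, mean_y)
    return a, b

# Hypothetical data: advertising spend (x) and sales (y)
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]
a, b = fit_simple_ols(ad_spend, sales)
print(f"sales = {a:.3f} + {b:.3f} * ad_spend")
```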

If multiple independent variables are used to explain the variation in a dependent variable, the model is called a multiple regression model.

$$Y = a + b_1 x_1 + b_2 x_2 + \dots + b_n x_n$$

where $Y$ is the dependent variable and $x_1, x_2, x_3, \dots, x_n$ are the independent variables expected to be related to $Y$ and expected to explain or predict $Y$.

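$b_1, b_2, b_3, \dots, b_n$ are the partial regression coefficients of the respective independent variables, and $a$ is the intercept (the expected value of $Y$ when every independent variable is zero); all of them are estimated from the input data.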
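In practice $a, b_1, \dots, b_n$ are usually estimated by ordinary least squares. The sketch below does this with NumPy's `np.linalg.lstsq`, after prepending a column of ones so the intercept is estimated together with the slopes. The helper name `fit_multiple_ols` and the data are hypothetical; the data are generated without noise so the recovered coefficients can be checked by eye.

```python
import numpy as np

def fit_multiple_ols(X, y):
    """Estimate a, b1..bn in y = a + b1*x1 + ... + bn*xn by ordinary least squares.

    X: array of shape (n_obs, n_vars), one column per independent variable.
    y: array of shape (n_obs,).
    Returns (a, b) where b holds the n partial regression coefficients.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Column of ones for the intercept a, followed by the independent variables
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[0], coef[1:]

# Hypothetical data generated exactly from y = 2 + 0.5*x1 + 1.5*x2 (no noise)
X = np.array([[1, 2], [2, 1], [3, 4], [4, 3], [5, 6], [6, 5]], dtype=float)
y = 2 + 0.5 * X[:, 0] + 1.5 * X[:, 1]
a, b = fit_multiple_ols(X, y)
print(f"a = {a:.4f}, b = {np.round(b, 4)}")
```

With real, noisy data the same call returns the least-squares estimates rather than an exact recovery.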

The interpretation of the partial regression coefficient $b_1$ is that it represents the expected change in $Y$ when $x_1$ is changed by one unit but $x_2$ is held constant or otherwise controlled.

In other words, if $x_1$ increases by one unit, $Y$ is expected to change by $b_1$ units: it increases when $b_1$ is positive and decreases when $b_1$ is negative.

Likewise, $b_2$ represents the expected change in $Y$ for a unit change in $x_2$ when $x_1$ is held constant. Thus, calling $b_1$ and $b_2$ partial regression coefficients is appropriate.

Because the model contains no interaction terms, the combined effects of $x_1$ and $x_2$ on $Y$ are additive. In other words, if $x_1$ and $x_2$ are each changed by one unit, the expected change in $Y$ is $(b_1 + b_2)$.
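The partial and additive interpretation can be checked numerically with any two-variable linear model. In this sketch the coefficients and starting values are hypothetical (a negative $b_2$ is included to show a decrease); the differences in predicted $Y$ equal $b_1$, $b_2$ and $b_1 + b_2$ up to floating-point rounding.

```python
def predict(a, b1, b2, x1, x2):
    """Predicted Y from the two-variable linear model Y = a + b1*x1 + b2*x2."""
    return a + b1 * x1 + b2 * x2

# Hypothetical coefficients and starting values
a, b1, b2 = 1.0, 0.8, -0.3
x1, x2 = 10.0, 4.0
base = predict(a, b1, b2, x1, x2)

change_x1 = predict(a, b1, b2, x1 + 1, x2) - base        # x2 held constant
change_x2 = predict(a, b1, b2, x1, x2 + 1) - base        # x1 held constant
change_both = predict(a, b1, b2, x1 + 1, x2 + 1) - base  # both changed by one unit

print(change_x1, change_x2, change_both)  # approximately b1, b2 and b1 + b2
```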

Statistics in Multiple Regression Analysis

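F test. The F test is used to test the null hypothesis that the coefficient of multiple determination in the population is zero. This is equivalent to testing the null hypothesis that all the population partial regression coefficients are zero, i.e. that none of the independent variables is linearly related to the dependent variable. The null hypothesis is rejected when the p-value is less than 0.05 (95% confidence level) or 0.1 (90% confidence level).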
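The F statistic is the ratio of the regression mean square to the residual mean square. The sketch below recomputes it from the ANOVA values reported in Table 17.3. For the p-value it relies on an assumption worth stating loudly: when the regression has exactly 2 degrees of freedom (two independent variables, as in Table 17.3), the upper tail of the $F(2, d)$ distribution has the closed form $(1 + 2F/d)^{-d/2}$. For other cases a general F-distribution routine, such as SciPy's `scipy.stats.f.sf`, would be needed. Function names are hypothetical.

```python
def f_statistic(ss_reg, ss_res, df_reg, df_res):
    """Overall F statistic: regression mean square / residual mean square."""
    ms_reg = ss_reg / df_reg  # mean square due to regression
    ms_res = ss_res / df_res  # residual (error) mean square
    return ms_reg / ms_res

def f_pvalue_two_predictors(f_stat, df_res):
    """P(F > f_stat) for an F(2, df_res) distribution; valid ONLY when df_reg == 2."""
    return (1 + 2 * f_stat / df_res) ** (-df_res / 2)

# ANOVA values from Table 17.3: 2 regression df, 9 residual df
f_stat = f_statistic(114.26425, 6.65241, 2, 9)
p = f_pvalue_two_predictors(f_stat, 9)
print(f"F = {f_stat:.3f}, p = {p:.7f}")

alpha = 0.05  # 95% confidence level
print("reject H0" if p < alpha else "do not reject H0")
```

The result agrees with the reported F = 77.29364 and, rounded to four decimals, with Significance of F = 0.0000.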

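$R^2$, the coefficient of multiple determination, is the percentage or proportion of the total variance in $Y$ explained by all the independent variables in the regression equation. It indicates how well the independent variables together can predict the dependent variable. Adjusted $R^2$ corrects $R^2$ for the number of independent variables and the sample size, so unlike $R^2$ it does not automatically rise when a variable is added to the model.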
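The summary statistics in Table 17.3 can be reproduced from its ANOVA table. This sketch assumes the usual definitions: $R^2 = SS_{reg} / (SS_{reg} + SS_{res})$, adjusted $R^2 = 1 - (1 - R^2)(N - 1)/(N - k - 1)$ for $N$ observations and $k$ independent variables, and the standard error of the estimate as the square root of the residual mean square. The sample size $N = 12$ is inferred rather than stated: 9 residual degrees of freedom with $k = 2$ imply $N = 9 + 2 + 1$. The function name is hypothetical.

```python
import math

def fit_statistics(ss_reg, ss_res, n_obs, n_vars):
    """R^2, adjusted R^2, multiple R and standard error from ANOVA sums of squares."""
    ss_total = ss_reg + ss_res
    r2 = ss_reg / ss_total                        # proportion of variation explained
    df_res = n_obs - n_vars - 1
    adj_r2 = 1 - (1 - r2) * (n_obs - 1) / df_res  # penalises extra variables
    multiple_r = math.sqrt(r2)
    std_error = math.sqrt(ss_res / df_res)        # standard error of the estimate
    return r2, adj_r2, multiple_r, std_error

# Table 17.3 ANOVA values; n_obs = 12 is inferred from the residual df
r2, adj_r2, multiple_r, std_error = fit_statistics(114.26425, 6.65241, n_obs=12, n_vars=2)
print(f"R2 = {r2:.5f}, adj R2 = {adj_r2:.5f}, R = {multiple_r:.5f}, SE = {std_error:.5f}")
```

All four values match Table 17.3 to the five decimals reported there.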

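The output also gives the results of a t test for the significance of each variable in the model. It tests whether each individual independent variable contributes significantly to predicting the dependent variable when the other independent variables are kept in the model (null hypothesis: that variable's partial regression coefficient is zero).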
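Each t value in the output is the estimated coefficient divided by its standard error, tested on the residual degrees of freedom (9 in Table 17.3). The sketch recomputes the t values from the b and SE of b columns and compares them with 2.262, the two-tailed 5% critical value of t with 9 degrees of freedom from standard t tables. An exact p-value would need a t-distribution routine such as SciPy's `scipy.stats.t.sf`, which is not used here. Names are hypothetical.

```python
T_CRITICAL_5PCT_DF9 = 2.262  # two-tailed 5% critical value of t, 9 df (standard t tables)

def t_statistic(b, se_b):
    """t value for H0: population coefficient = 0, i.e. b / SE(b)."""
    return b / se_b

# (b, SE of b) pairs copied from Table 17.3
table_17_3 = {
    "Satisfaction": (0.28865, 0.08608),
    "Purchase": (0.48108, 0.05895),
    "(Constant)": (0.33732, 0.56736),
}

for name, (b, se_b) in table_17_3.items():
    t = t_statistic(b, se_b)
    verdict = "significant" if abs(t) > T_CRITICAL_5PCT_DF9 else "not significant"
    print(f"{name}: t = {t:.3f} ({verdict} at the 5% level)")
```

Satisfaction and Purchase exceed the critical value while the constant does not, which matches the Significance of T column (0.0085, 0.0000, 0.5668).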

The following regression model establishes the relationship between loyalty and both satisfaction and purchase:

$$\text{Loyalty } Y = a + b_1 X_1 + b_2 X_2$$

where $X_1$ = Satisfaction and $X_2$ = Purchase.

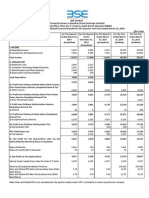

Table 17.3: Multiple Regression

| Statistic | Value |
|---|---|
| Multiple R | 0.97210 |
| $R^2$ | 0.94498 |
| Adjusted $R^2$ | 0.93276 |
| Standard Error | 0.85974 |

ANALYSIS OF VARIANCE

| Source | df | Sum of Squares | Mean Square |
|---|---|---|---|
| Regression | 2 | 114.26425 | 57.13213 |
| Residual | 9 | 6.65241 | 0.73916 |

F = 77.29364, Significance of F = 0.0000

VARIABLES IN THE EQUATION

| Variable | b | SE of b | Beta (β) | T | Significance of T |
|---|---|---|---|---|---|
| Satisfaction | 0.28865 | 0.08608 | 0.31382 | 3.353 | 0.0085 |
| Purchase | 0.48108 | 0.05895 | 0.76363 | 8.160 | 0.0000 |
| (Constant) | 0.33732 | 0.56736 | | 0.595 | 0.5668 |

$$\text{Loyalty } Y = 0.33732 + 0.28865\,X_1 + 0.48108\,X_2$$

where $X_1$ is satisfaction and $X_2$ is purchase.
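Once the coefficients are estimated, predicting loyalty amounts to plugging values into the fitted equation. The coefficients below are the ones reported in Table 17.3; the satisfaction and purchase scores are hypothetical, since the deck does not state the measurement scales. The function name is also hypothetical.

```python
def predict_loyalty(satisfaction, purchase):
    """Predicted loyalty from the fitted equation reported in Table 17.3."""
    a, b1, b2 = 0.33732, 0.28865, 0.48108  # intercept, satisfaction, purchase
    return a + b1 * satisfaction + b2 * purchase

# Hypothetical respondent: satisfaction score 5, purchase score 4
print(round(predict_loyalty(5, 4), 5))
```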
