IJAET Volume 7 Issue 3 July 2014

VOLUME-7, ISSUE-3

International Journal of Advances in Engineering & Technology (IJAET)

Smooth, Simple and Timely Publishing of Review and Research Articles!

ISSN: 2231-1963

Date: July 2014

International Journal of Advances in Engineering & Technology, Jul. 2014.

IJAET ISSN: 2231-1963

i Vol. 7, Issue 3, pp. i-vi

Table of Content

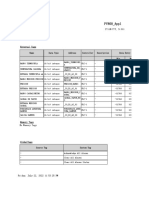

| S. No. | Article Title (Volume 7, Issue 3, July-2014) | Authors | Page Nos |
|---|---|---|---|
| 1 | Data Warehouse Design and Implementation Based on Quality Requirements | Khalid Ibrahim Mohammed | 642-651 |
| 2 | A New Mechanism in Hmipv6 to Improve Micro and Macro Mobility | Sahar Abdul Aziz Al-Talib | 652-665 |
| 3 | Efficiency Optimization of Vector-Controlled Induction Motor Drive | Hussein Sarhan | 666-674 |
| 4 | Flexible Differential Frequency-to-Voltage and Voltage-to-Frequency Converters using Monolithic Analogue Reconfigurable Devices | Ivailo Milanov Pandiev | 675-683 |
| 5 | A Review Search Bitmap Image for Sub Image and the Padding Problem | Omeed Kamal Khorsheed | 684-691 |
| 6 | Potential Use of Phase Change Materials with Reference to Thermal Energy Systems in South Africa | Basakayi J.K., Storm C.P. | 692-700 |
| 7 | Improving Software Quality in the Service Process Industry using Agility with Software Reusable Components as Software Product Line: An Empirical Study of Indian Service Providers | Charles Ikerionwu, Richard Foley, Edwin Gray | 701-711 |
| 8 | Produce Low-Pass and High-Pass Image Filter in Java | Omeed Kamal Khorsheed | 712-722 |
| 9 | The Comparative Analysis of Social Network in International and Local Corporate Business | Mohmed Y. Mohmed AL-SABAAWI | 723-732 |
| 10 | Precise Calculation Unit Based on a Hardware Implementation of a Formal Neuron in a FPGA Platform | Mohamed ATIBI, Abdelattif BENNIS, Mohamed BOUSSAA | 733-742 |
| 11 | Temperature Profiling at Southern Latitudes by Deploying Microwave Radiometer | A. K. Pradhan, S. Mondal, L. A. T. Machado and P. K. Karmakar | 743-755 |
| 12 | Development and Evaluation of Trolley-cum-Batch Dryer for Paddy | Mohammed Shafiq Alam and V K Sehgal | 756-764 |
| 13 | Joint Change Detection and Image Registration Method for Multitemporal SAR Images | Lijinu M Thankachan, Jeny Jose | 765-772 |
| 14 | Load - Settlement Behaviour of Granular Pile in Black Cotton Soil | Siddharth Arora, Rakesh Kumar and P. K. Jain | 773-781 |
| 15 | Harmonic Study of VFDS and Filter Design: A Case Study for Sugar Industry with Cogeneration | V. P. Gosavi and S. M. Shinde | 782-789 |
| 16 | Precipitation and Kinetics of Ferrous Carbonate in Simulated Brine Solution and its Impact on CO2 Corrosion of Steel | G. S. Das | 790-797 |
| 17 | Performance Comparison of Power System Stabilizer with and Without Facts Device | Amit Kumar Vidyarthi, Subrahmanyam Tanala, Ashish Dhar Diwan | 798-806 |
| 18 | Hydrological Study of Man (Chandrabhaga) River | Shirgire Anil Vasant, Talegaokar S.D. | 807-817 |
| 19 | Crop Detection by Machine Vision for Weed Management | Ashitosh K Shinde and Mrudang Y Shukla | 818-826 |
| 20 | Detection & Control of Downey Mildew Disease in Grape Field | Vikramsinh Kadam, Mrudang Shukla | 826-837 |
| 21 | Ground Water Status- A Case Study of Allahabad, UP, India | Ayush Mittal, Munesh Kumar | 838-844 |
| 22 | Clustering and Noise Detection For Geographic Knowledge Discovery | Sneha N S and Pushpa | 845-855 |
| 23 | Proxy Driven FP Growth Based Prefetching | Devender Banga and Sunitha Cheepurisetti | 856-862 |
| 24 | Search Network Future Generation Network for Information Interchange | G. S. Satisha | 863-867 |
| 25 | A Brief Survey on Bio Inspired Optimization Algorithms For Molecular Docking | Mayukh Mukhopadhyay | 868-878 |
| 26 | Heat Transfer Analysis of Cold Storage | Upamanyu Bangale and Samir Deshmukh | 879-886 |
| 27 | Localized RGB Color Histogram Feature Descriptor for Image Retrieval | K. Prasanthi Jasmine, P. Rajesh Kumar | 887-895 |
| 28 | Witricity for Wireless Sensor Nodes | M. Karthika and C. Venkatesh | 896-904 |
| 29 | Study of Swelling Behaviour of Black Cotton Soil Improved with Sand Column | Aparna, P.K. Jain and Rakesh Kumar | 905-910 |
| 30 | Effective Fault Handling Algorithm for Load Balancing using Ant Colony Optimization in Cloud Computing | Divya Rastogi and Farhat Ullah Khan | 911-916 |
| 31 | Handling Selfishness over Mobile Ad Hoc Network | Madhuri D. Mane and B. M. Patil | 917-922 |
| 32 | A New Approach to Design Low Power CMOS Flash A/D Converter | C Mohan and T Ravisekhar | 923-929 |
| 33 | Optimization and Comparative Analysis of Non-Renewable and Renewable System | Swati Negi and Lini Mathew | 930-937 |
| 34 | A Feed Forward Artificial Neural Network based System to Minimize DOS Attack in Wireless Network | Tapasya Pandit & Anil Dudy | 938-947 |
| 35 | Improving Performance of Delay Aware Data Collection Using Sleep and Wake Up Approach in Wireless Sensor Network | Paralkar S. S. and B. M. Patil | 948-956 |
| 36 | Improved New Visual Cryptographic Scheme Using One Shared Image | Gowramma B.H, Shyla M.G, Vivekananda | 957-966 |
| 37 | Segmentation of Brain Tumour from Mri Images by Improved Fuzzy System | Sumitharaj.R, Shanthi.K | 967-973 |
| 38 | Implementation of Classroom Attendance System Based on Face Recognition in Class | Ajinkya Patil, Mrudang Shukla | 974-979 |
| 39 | A Tune-In Optimization Process of AISI 4140 in Raw Turning Operation using CVD Coated Insert | C. Rajesh | 980-990 |
| 40 | A Modified Single-frame Learning based Super-Resolution and its Observations | Vaishali R. Bagul and Varsha M. Jain | 991-997 |
| 41 | Design Modification and Analysis of Two Wheeler Cooling Fins-A Review | Mohsin A. Ali and S.M Kherde | 998-1002 |
| 42 | Virtual Wireless Keyboard System with Co-ordinate Mapping | Souvik Roy, Ajay Kumar Singh, Aman Mittal, Kunal Thakral | 1003-1008 |
| 43 | Secure Key Management in Ad-Hoc Network: A Review | Anju Chahal and Anuj Kumar, Auradha | 1009-1017 |
| 44 | Prediction of Study Track by Aptitude Test using Java | Deepali Joshi and Priyanka Desai | 1018-1026 |
| 45 | Unsteady MHD Three Dimensional Flow of Maxwell Fluid Through a Porous Medium in a Parallel Plate Channel under the Influence of Inclined Magnetic Field | L. Sreekala, M. Veera Krishna, L. Hari Krishna and E. Kesava Reddy | 1027-1037 |
| 46 | Video Streaming Adaptivity and Efficiency in Social Networking Sites | G. Divya and E R Aruna | 1038-1043 |
| 47 | Intrusion Detection System using Dynamic Agent Selection and Configuration | Manish Kumar, M. Hanumanthappa | 1044-1052 |
| 48 | Evaluation of Characteristic Properties of Red Mud For Possible use as a Geotechnical Material in Civil Construction | Kusum Deelwal, Kishan Dharavath, Mukul Kulshreshtha | 1053-1059 |
| 49 | Performance Analysis of IEEE 802.11e EDCA with QoS Enhancements Through Adapting AIFSN Parameter | Vandita Grover and Vidusha Madan | 1060-1066 |
| 50 | Data Derivation Investigation | S. S. Kadam, P.B. Kumbharkar | 1067-1074 |
| 51 | Design and Implementation of Online Patient Monitoring System | Harsha G S | 1075-1081 |
| 52 | Comparison Between Classical and Modern Methods of Direction of Arrival (DOA) Estimation | Mujahid F. Al-Azzo, Khalaf I. Al-Sabaaw | 1082-1090 |
| 53 | Modelling Lean, Agile, Leagile Manufacturing Strategies: An Fuzzy Analytical Hierarchy Process Approach For Ready Made Ware (Clothing) Industry in Mosul, Iraq | Thaeir Ahmed Saadoon Al Samman | 1091-1108 |

Members of IJAET Fraternity A - N

International Journal of Advances in Engineering & Technology, July, 2014.

IJAET ISSN: 2231-1963

642 Vol. 7, Issue 3, pp. 642-651

DATA WAREHOUSE DESIGN AND IMPLEMENTATION BASED ON QUALITY REQUIREMENTS

Khalid Ibrahim Mohammed

Department of Computer Science, College of Computer, University of Anbar, Iraq.

ABSTRACT

Data warehousing is both a modern and a long-standing technique. Since the early days of relational databases, there has been the idea of keeping historical data for reference when needed. In its primitive form this meant creating archives for the historical data, which required special techniques to recover the data from the different storage modes. This research applies structured databases to a trading company operating across continents. The company has a set of branches, each with its own stores and showrooms, and each branch is divided into sections with specific activities, such as stores management, showrooms management, accounting management, contracts and other departments. It is also assumed that the company's head office distributes the database management software to all branches in order to ensure safe operation, standardize processing, and prevent possible errors and bottlenecks. The research also sets out the requirements used to implement the data warehouse (DW); the information managed by such an implementation must be highly accurate, and its compatibility and security must be ensured. In the schema domain, the two schemas (Star and Snowflake) are compared using the concepts of multidimensional databases. Star Schema turns out to be better than Snowflake Schema in terms of query complexity, query performance, and foreign key joins. Finally, it is concluded that the Star Schema is centered on the fact table and changes, while the Snowflake Schema is centered on the fact table and does not change.

KEYWORDS: Data Warehouses, OLAP Operation, ETL, DSS, Data Quality.

I. INTRODUCTION

A data warehouse is a subject-oriented, integrated, nonvolatile, and time-variant collection of data in support of management's decisions. The data warehouse contains granular corporate data. Data in the data warehouse can be used for many different purposes, including sitting and waiting for future requirements that are unknown today [1]. The data warehouse provides the primary support for Decision Support Systems (DSS) and Business Intelligence (BI) systems. Combined with On-Line Analytical Processing (OLAP) operations, it has become more and more popular in these systems. The most popular data model for a data warehouse is the multidimensional model, which consists of a group of dimension tables and one fact table according to the functional requirements [2]. The purpose of a data warehouse is to ensure that the appropriate data is available to the appropriate end user at the appropriate time [3]. Data warehouses are based on multidimensional modeling. Using OLAP tools, decision makers navigate through and analyze multidimensional data [4].
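The multidimensional model described above can be illustrated with a minimal Python sketch that uses the standard-library `sqlite3` module instead of Oracle. The table names (`fact_sales`, `dim_item`, `dim_location`), the columns and the sample rows are hypothetical and are not taken from the study; the sketch only shows how measures stored in one fact table are aggregated along a chosen dimension, which is the kind of roll-up an OLAP tool performs.

```python
import sqlite3

# Hypothetical miniature warehouse: one fact table and two dimension tables.
# All table names, column names and rows are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_item     (item_id INTEGER PRIMARY KEY, item_name TEXT NOT NULL);
CREATE TABLE dim_location (loc_id  INTEGER PRIMARY KEY, city      TEXT NOT NULL);
CREATE TABLE fact_sales (
    item_id  INTEGER NOT NULL REFERENCES dim_item(item_id),
    loc_id   INTEGER NOT NULL REFERENCES dim_location(loc_id),
    quantity INTEGER NOT NULL,   -- measure
    amount   REAL    NOT NULL    -- measure
);
INSERT INTO dim_item VALUES (1, 'chair'), (2, 'table');
INSERT INTO dim_location VALUES (10, 'City A'), (20, 'City B');
INSERT INTO fact_sales VALUES
    (1, 10, 2, 40.0), (1, 20, 1, 20.0), (2, 10, 1, 100.0), (2, 10, 3, 300.0);
""")

# One fixed query per dimension, so no SQL is built from user input.
ROLLUPS = {
    "item": """SELECT d.item_name, SUM(f.quantity), SUM(f.amount)
               FROM fact_sales f JOIN dim_item d ON d.item_id = f.item_id
               GROUP BY d.item_name ORDER BY d.item_name""",
    "city": """SELECT d.city, SUM(f.quantity), SUM(f.amount)
               FROM fact_sales f JOIN dim_location d ON d.loc_id = f.loc_id
               GROUP BY d.city ORDER BY d.city""",
}

def roll_up(dimension):
    """Aggregate the fact measures along one dimension."""
    return conn.execute(ROLLUPS[dimension]).fetchall()

for dim in ("item", "city"):
    print(dim, roll_up(dim))
```

Each roll-up joins the fact table to exactly one dimension table and sums the measures, so adding a further dimension would only require one more dimension table and one more fixed query.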

The data warehouse uses a data model based on the multidimensional data model. This model is also known as a data cube, which allows data to be modeled and viewed in multiple dimensions [5]. The schema of a data warehouse rests on two kinds of elements: facts and dimensions. Facts are used to record measures about situations or events. Dimensions are used to analyze these measures, particularly through aggregation operations (counting, summation, average, etc.) [6, 7]. Data Quality (DQ) is the crucial factor in data warehouse creation and data integration. Without insightful analysis of data problems, the data warehouse will fail and cause great economic loss and faulty decisions [8]. The quality of data is often evaluated to determine usability and to establish the processes necessary for improving data quality. Data quality may be measured objectively or subjectively. Data quality is a state of completeness, validity, consistency, timeliness and accuracy that makes data appropriate for a specific use [9].

The paper is divided into seven sections. Section 1 gives the introduction, the definition of a data warehouse, and the quality of a data warehouse. Section 2 presents related work. Section 3 presents data warehouse creation; the main idea is that a data warehouse database gathers data from the databases of an overseas trading company. Section 4 describes the data warehouse design; for this study, we suppose a hypothetical company with many branches around the world, where each branch has many stores and showrooms scattered within the branch location and a database to manage branch information. Section 5 describes our study of quality criteria for the DW, which covers aspects related to both the quality and the performance of our approach, the obtained results, and a comparison between star schema and snowflake schema. Section 6 provides conclusions. Finally, Section 7 describes open issues and our planned future work.

1.1 Definition of Data Warehouse

A data warehouse is a relational database that is designed for query and analysis rather than for

transaction processing. It usually contains historical data derived from transaction data, but it can

include data from other sources. It separates analysis workload from transaction workload and

enables an organization to consolidate data from several sources. In addition to a relational database,

a data warehouse environment can include an extraction, transportation, transformation, and loading

(ETL) solution, an online analytical processing (OLAP) engine, client analysis tools, and other

applications that manage the process of gathering data and delivering it to business users. A common

way of introducing data warehousing is to refer to the characteristics of a data warehouse as set forth

by William Inmon [10]:

1. Subject Oriented.

2. Integrated.

3. Nonvolatile.

4. Time Variant.

1.2 The Quality of Data Warehouse

Data quality has been defined as the fraction of performance over expectancy, or as the loss imparted to society from the time a product is shipped [11]. We believe the best definition is the one found in [12, 13, 14]: data quality is defined as "fitness for use". This definition directly implies that the concept of data quality is relative; for example, data semantics differ for each distinct user. Data quality work is chiefly concerned with bad data, that is, data which is missing, incorrect, or invalid from some perspective. In broad terms, data quality is attained when the business uses data that is comprehensive, understandable, and consistent. Understanding the main data quality dimensions is the first step toward data quality improvement. To be usable in an effective and efficient manner, data has to satisfy a set of quality criteria. Data satisfying these criteria is said to be of high quality [9].
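To make the idea of quality criteria concrete, the following Python sketch scores a small set of records on three of the dimensions named earlier (completeness, validity and timeliness). The metric definitions, the field names, the validity rule and the sample records are assumptions made for illustration; the paper does not prescribe formulas for these dimensions.

```python
from datetime import date

# Hypothetical customer records; None marks a missing value.
records = [
    {"name": "Customer 1", "email": "c1@example.com", "last_purchase": date(2014, 5, 1)},
    {"name": "Customer 2", "email": None,             "last_purchase": date(2014, 6, 12)},
    {"name": "Customer 3", "email": "not-an-email",   "last_purchase": None},
    {"name": "Customer 4", "email": "c4@example.com", "last_purchase": date(2013, 1, 3)},
]

def completeness(rows, field):
    """Fraction of rows in which `field` is present (not None)."""
    return sum(r[field] is not None for r in rows) / len(rows)

def validity(rows, field, is_valid):
    """Fraction of the non-missing values of `field` that pass `is_valid`."""
    present = [r[field] for r in rows if r[field] is not None]
    return sum(is_valid(v) for v in present) / len(present) if present else 1.0

def timeliness(rows, field, as_of, max_age_days):
    """Fraction of the non-missing dates that are at most `max_age_days` old."""
    present = [r[field] for r in rows if r[field] is not None]
    fresh = sum((as_of - v).days <= max_age_days for v in present)
    return fresh / len(present) if present else 1.0

email_completeness = completeness(records, "email")
email_validity = validity(records, "email", lambda v: "@" in v)  # deliberately crude rule
purchase_timeliness = timeliness(records, "last_purchase", date(2014, 7, 1), 365)

print(email_completeness, email_validity, purchase_timeliness)
```

Because "fitness for use" is relative, the thresholds and rules passed to these functions would differ from one user of the data to another.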

II. RELATED WORK

This section reviews related work on data warehouse design and implementation based on quality requirements. Panos Vassiladis, Mokrane Bouzegeghoub and Christoph Quix (2000) proposed an approach that covers the full lifecycle of the data warehouse, captures the interrelationships between different quality factors, and helps the interested user organize them in order to fulfill specific quality goals. Furthermore, they showed how quality management of the data warehouse can guide the process of data warehouse evolution by tracking the interrelationships between the components of the data warehouse. Finally, they presented a case study as a proof of concept for the proposed methodology [15].

Leo Willyanto Santoso and Kartika Gunadi (2006) described a study that explores modeling of the dynamic parts of the data warehouse. Their metamodel enables data warehouse management, design and evolution based on a high-level conceptual perspective, which can be linked to the actual structural and physical aspects of the data warehouse architecture. Moreover, this metamodel is capable of modeling complex activities, their interrelationships, the relationship of activities with data sources, and execution details [16].

Amer Nizar Abu Ali and Haifa Yousef Abu-Addose (2010) aimed to discover the main critical success factors (CSFs) that led to an efficient implementation of DW in different organizations. They compared two organizations, First American Corporation (FAC) and Whirlpool, in order to derive more general CSFs that can guide other organizations in implementing DW efficiently. The study showed that FAC had greater returns from data warehousing than Whirlpool. Based on their extensive study of these organizations and other related resources, they grouped the CSFs into five main categories to help other organizations implement DW efficiently and avoid data warehouse killers [17].

Manjunath T.N and Ravindra S Hegadi (2013) proposed a model that evaluates the data quality of decision databases along different dimensions, such as accuracy, derivation integrity, consistency, timeliness, completeness, validity, precision and interpretability, on various data sets after migration. The proposed data quality assessment model evaluates the data along these dimensions to give end users the confidence to rely on the data for their businesses. The authors extended the model to classify various data sets that are suitable for decision making. The results reveal that the proposed model achieves an average improvement of 12.8 percent in the evaluation criteria dimensions with respect to the selected case study [18].

III. DATA WAREHOUSE CREATION

The main idea is that a data warehouse database gathers data from the databases of an overseas trading company. For each branch of the supposed company we have a database consisting of the following schemas:

- Contracting schema, which holds contract and contractor data.
- Stores schema, which manages storage information.
- Showrooms schema, which manages showroom information for any branch of the supposed company.
- On top of the above schemas, an accounting schema is installed, which manages all accounting operations for any branch or for the whole company.

All information is stored in fully relational tables according to the third normal form. Data integrity is maintained by foreign key relationships between related tables, non-null constraints, check constraints, and Oracle database triggers. Many indexes are created for use by the Oracle optimizer to minimize DML and query response time. Security constraints are maintained using Oracle privileges. Oracle OLAP policy is taken into consideration.
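The integrity mechanisms listed above (foreign keys, non-null constraints, check constraints and an index) can be sketched in a few lines. The example below uses Python's standard `sqlite3` module rather than Oracle, and the tables `contractors` and `contracts` with their columns are hypothetical stand-ins for the contracting schema, so it illustrates the idea rather than the study's actual definitions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
-- Hypothetical tables standing in for part of the contracting schema.
CREATE TABLE contractors (
    contractor_id INTEGER PRIMARY KEY,
    name          TEXT NOT NULL
);
CREATE TABLE contracts (
    contract_id   INTEGER PRIMARY KEY,
    contractor_id INTEGER NOT NULL REFERENCES contractors(contractor_id),
    amount        REAL    NOT NULL CHECK (amount > 0)
);
-- Index on the foreign key column to speed up joins and lookups.
CREATE INDEX idx_contracts_contractor ON contracts(contractor_id);
INSERT INTO contractors VALUES (1, 'Contractor A');
INSERT INTO contracts VALUES (100, 1, 2500.0);
""")

def try_insert(params):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO contracts VALUES (?, ?, ?)", params)
        return True
    except sqlite3.IntegrityError:
        return False

results = {
    "valid row": try_insert((101, 1, 900.0)),
    "unknown contractor (foreign key)": try_insert((102, 99, 900.0)),
    "missing amount (NOT NULL)": try_insert((103, 1, None)),
    "negative amount (CHECK)": try_insert((104, 1, -5.0)),
}
contract_count = conn.execute("SELECT COUNT(*) FROM contracts").fetchone()[0]
print(results, contract_count)
```

Only the valid row is stored; the three invalid rows are rejected by the database itself, which is the point of declaring the rules as constraints instead of checking them in application code.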

IV. DATA WAREHOUSE DESIGN

As mentioned above, a warehouse home is installed on the same machine. The data warehouse is stored in separate Oracle tablespaces and configured to use the above relational online tables as source data, so the mentioned schemas are treated as data locations. Oracle Warehouse Builder, a Java program, is used for warehouse management. The locations of the data sources are:

1. Accounting schema.

2. Stores schema.

3. Contracting schema.

4. Showrooms schema.

For this study, we suppose a hypothetical company with many branches around the world; each branch has many stores and showrooms scattered within the branch location. Each branch has a database to manage branch information. Each branch database contains the following schemas, which work according to OLAP policies and maintain security and data integrity: the accounting schema, the contracting schema, the stores schema and the showrooms schema. All branch databases are connected to each other over a WAN.


Figure 1. Distributed database for hypothetical company.

Figure 1 shows the distributed database of the hypothetical overseas company, which serves as a base (or source) for the warehouse database. This paper supposes that each node belongs to one company branch, so each branch controls its own data. The main office of the company controls the whole data set through the central node. The warehouse database could be located at the central node, as the company needs. We suppose that all nodes use the same programs to operate on the database(s). Within each node, each activity is represented by a database schema, i.e. stores, showrooms, contracting, and other schemas. The core of all schemas is the accounting schema. Depending on the workload, each schema could be installed on a separate database or on the same database. All related databases across the company branches cooperate within the same WAN.

V. STUDY OF QUALITY CRITERIA FOR DW

In this study, we apply the following quality criteria:

5.1. Data warehouse using snowflake schema

Using Oracle warehouse policies, each database has the following snowflake modules:

1. Sales module.

2. Supplying module.

5.1.1. Sales module

It consists of the following relational tables.

Table 1. Relational tables of the sales module

| Table name | Table type | Oracle schema (owner) |
|---|---|---|
| Sales_sh | Fact table | Showrooms |
| showrooms | Dimensional table | Showrooms |
| Items | Dimensional table | Accounting |
| Currencies | Dimensional table | Accounting |
| Customers | Dimensional table | Showrooms |
| Locations | Dimensional table | Accounting |

The following diagram depicts the relations between the above dimensional and fact tables.

Figure 2. Sales module.


Figure 2 above represents all entities within the sales module. Every entity is designed according to the Third Normal Form (3NF) rule, so it has a primary key. The most important tools used to implement integrity and validation are Oracle constraints. After supplying data to the above module and transferring it to the Oracle Warehouse Design Center, data (557,441 rows) was retrieved from the sales fact table, as shown in Figure 3, which gives the detailed information for every single sale within each showroom and location. It consists of the voucher (doc) number and date, the sold item, the sold quantity and the price. This data is available at the corresponding node (branch) and at the center. Of course, the same data would be transferred to the warehouse database for historical purposes.

Figure 3. Sales data.

The customers dimensional table consists of some personal data about the customer, such as mobile number, email, and location, which are useful for contacting the customer. It also indicates the date of the last purchase and the total amount purchased during the last year. This data is available at the corresponding node and at the center, and it is also passed to the warehouse database. The customers dimensional table is shown in Figure 4.

Figure 4. Customers data.

5.1.2. Supplying module

This module manages the supply of materials to the company according to the company's usual contracts, following the snowflake design. It consists of the following relational tables.

Table 2. Tables within the supplying module

| Table name | Table type | Oracle schema (owner) |
|---|---|---|
| STR_RECIEVING | Fact table | Stores |
| Contracts | Dimensional table | Contracting |
| Items | Dimensional table | Accounting |
| Currencies | Dimensional table | Accounting |
| Stores | Dimensional table | Stores |
| Locations | Dimensional table | Accounting |
| Daily_headers | Dimensional table | Accounting |


Figure 5 depicts the relations between the above dimensional and fact tables. They obey the 3NF rule, so they have primary key constraints and are linked to each other by foreign key constraints. The fact table STR_RECIEVING holds information on all charges received at the company stores (contained in the stores table owned by the stores schema) according to the contracts (contained in the contracts table owned by the contracting schema). The Daily_headers dimensional table represents the accounting information for each contract. Using Oracle triggers, when a new record is inserted into the STR_RECIEVING fact table, further accounting data is created in a details table row related (through a foreign key) to the Daily_headers dimensional table. In addition, any charge value can be converted to the desired currency using the data maintained in the currencies dimensional table owned by the accounting schema.

Figure 5. Supplying module.

For security reasons, direct access to the fact table is not allowed. Instead, an imaginary (virtual) view named str_recieving_v is created, and all users are allowed to issue DML (data manipulation language) statements against this view. A piece of code (an Oracle trigger) is written to manipulate the data according to server policies (data integrity and consistency) as the user supplies data to the imaginary view. After supplying data to the above module and transferring it to the Oracle Warehouse Design Center, data (415,511 rows) was retrieved from the fact table str_recieving, as shown in the following figure.

Figure 6. Received charges on str_recieving fact table.
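The view-plus-trigger arrangement described for str_recieving_v can be sketched as follows. This is an illustration in Python with the standard `sqlite3` module, not the Oracle code used in the study: the column list of `str_recieving` and the validation rule are assumptions, and SQLite has no user privileges, so the access-control part (denying direct DML on the fact table) is not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical column list; the paper does not give the real definition.
CREATE TABLE str_recieving (
    voucher_no INTEGER PRIMARY KEY,
    item_id    INTEGER NOT NULL,
    quantity   INTEGER NOT NULL
);

-- Users write to the view, never to the fact table directly.
CREATE VIEW str_recieving_v AS
    SELECT voucher_no, item_id, quantity FROM str_recieving;

-- The trigger validates the supplied row and then stores it in the fact table.
CREATE TRIGGER str_recieving_v_insert
INSTEAD OF INSERT ON str_recieving_v
BEGIN
    SELECT RAISE(ABORT, 'quantity must be positive')
    WHERE NEW.quantity IS NULL OR NEW.quantity <= 0;
    INSERT INTO str_recieving (voucher_no, item_id, quantity)
    VALUES (NEW.voucher_no, NEW.item_id, NEW.quantity);
END;
""")

def receive(voucher_no, item_id, quantity):
    """Insert through the view; return True if the trigger accepted the row."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO str_recieving_v (voucher_no, item_id, quantity) "
                "VALUES (?, ?, ?)",
                (voucher_no, item_id, quantity),
            )
        return True
    except sqlite3.DatabaseError:
        return False

accepted = receive(1, 7, 10)   # valid charge
rejected = receive(2, 7, -3)   # refused by the trigger
rows = conn.execute("SELECT * FROM str_recieving ORDER BY voucher_no").fetchall()
print(accepted, rejected, rows)
```

Routing every write through one trigger keeps the integrity rules in a single place on the server, whichever client program supplies the data.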

During the insertion of charges, a background process (an Oracle trigger) updates the stock dimension data to reflect the latest information about the quantities in stock at each node and at the center. The stock data contains the quantity balance and the quantities in and out for the current year. It is available at the corresponding node and at the center in the online database, and in the warehouse database for previous years. Figure 7 shows sample stock data as viewed in Oracle SQL Developer.

Figure 7. Stock dimensional table data.
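A trigger that keeps stock figures up to date as charges are inserted might look like the sketch below. It again uses Python's `sqlite3` module in place of Oracle, and the column names (`store_id`, `item_id`, `qty_in`, `qty_out`, `balance`) are hypothetical; the real stock table in the study may be structured differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical layouts for the stock table and the receiving fact table.
CREATE TABLE stock (
    store_id INTEGER NOT NULL,
    item_id  INTEGER NOT NULL,
    qty_in   INTEGER NOT NULL DEFAULT 0,
    qty_out  INTEGER NOT NULL DEFAULT 0,
    balance  INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (store_id, item_id)
);
CREATE TABLE str_recieving (
    voucher_no INTEGER PRIMARY KEY,
    store_id   INTEGER NOT NULL,
    item_id    INTEGER NOT NULL,
    quantity   INTEGER NOT NULL
);

-- After each received charge, create the stock row if needed and update it.
CREATE TRIGGER str_recieving_after_insert
AFTER INSERT ON str_recieving
BEGIN
    INSERT INTO stock (store_id, item_id)
    SELECT NEW.store_id, NEW.item_id
    WHERE NOT EXISTS (
        SELECT 1 FROM stock
        WHERE store_id = NEW.store_id AND item_id = NEW.item_id
    );
    UPDATE stock
    SET qty_in  = qty_in  + NEW.quantity,
        balance = balance + NEW.quantity
    WHERE store_id = NEW.store_id AND item_id = NEW.item_id;
END;
""")

with conn:
    conn.executemany(
        "INSERT INTO str_recieving VALUES (?, ?, ?, ?)",
        [(1, 1, 7, 10), (2, 1, 7, 5), (3, 1, 8, 4)],
    )

stock_rows = conn.execute(
    "SELECT store_id, item_id, qty_in, qty_out, balance "
    "FROM stock ORDER BY store_id, item_id"
).fetchall()
print(stock_rows)
```

The client only inserts receipts; the stock balances follow automatically, so they cannot drift out of step with the fact table.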

5.2. Data warehouse using star schema

As a case study using the warehouse star schema, we have:

1. Stocktaking module.

2. Accounting module.

5.2.1. Stocktaking module

It manages the current stock within any store of the company; its data is held in the following tables.

Table 3. Tables cooperating within the stocktaking module

| Table name | Table type | Oracle schema (owner) |
|---|---|---|
| Stock | Fact table | Stores |
| Items | Dimensional table | Accounting |
| Stores | Dimensional table | Stores |
| Currencies | Dimensional table | Accounting |
| showrooms | Dimensional table | Showrooms |
| Locations | Dimensional table | Accounting |
| contracts | Dimensional table | Contracting |
| Str_recieving | Fact table | Stores |
| To_show_t | Fact table | Stores |

The stock fact table holds the actual stock balances within each store belonging to each branch, and for the whole company at the center. The following diagram depicts the relations between these tables.

Figure 8. Stocktaking module as a warehouse star schema.


DML (data manipulation language) on the stock fact table is performed through Oracle triggers, which are the most trusted programs for maintaining the highest level of integrity and security. To this end, an imaginary view (named stock_v) was created; users are allowed to supply data to that view, and the server then processes the supplied data using an Oracle trigger. Querying the denormalized stock fact table within the star schema module using the Oracle Design Center is depicted in Figure 9 (the stock table in our case study has 15,150 rows). Execution of this query is allowed for all users (public).

Figure 9. Stocktaking on oracle warehouse design center.

5.2.2. Accounting module

One of the most important aspects of the accounting functions is the calculation of the daily cash within each showroom belonging to the company. The daily totals for each branch and the grand total can be calculated. Time-based cash figures can be accumulated later on demand.

Table 4. The tables needed for this activity

| Table name | Type | Oracle schema (owner) |
|---|---|---|
| Daily cash | Fact table | Accounting |
| Show_sales | Fact table | Accounting |
| Showrooms | Dimensional table | Showroom |
| Currencies_tab | Dimensional table | Accounting |
| Locations | Dimensional table | Accounting |
| Customers | Dimensional table | showrooms |

Daily cash is a view that reflects the actual cash in each showroom on a daily basis.

Figure 10. Daily cash using warehouse star schema.


Using SQL inner joins, one can retrieve data about the daily cash as shown in Figure 11.

Figure 11. Grand daily cash as depicted by Oracle warehouse design center.
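The kind of inner-join query behind a daily cash view can be sketched as follows, using Python's standard `sqlite3` module. The column names and sample rows are hypothetical, and the view definition is an assumption about how such a view could be written; it is not the Oracle definition used in the study.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical columns for the tables named in Table 4.
CREATE TABLE locations (loc_id INTEGER PRIMARY KEY, city TEXT NOT NULL);
CREATE TABLE showrooms (
    showroom_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    loc_id      INTEGER NOT NULL REFERENCES locations(loc_id)
);
CREATE TABLE show_sales (
    sale_id     INTEGER PRIMARY KEY,
    showroom_id INTEGER NOT NULL REFERENCES showrooms(showroom_id),
    sale_date   TEXT NOT NULL,
    amount      REAL NOT NULL
);

-- Daily cash per showroom, built with inner joins from fact to dimensions.
CREATE VIEW daily_cash AS
    SELECT s.sale_date, l.city, r.name AS showroom, SUM(s.amount) AS cash
    FROM show_sales s
    INNER JOIN showrooms r ON r.showroom_id = s.showroom_id
    INNER JOIN locations l ON l.loc_id = r.loc_id
    GROUP BY s.sale_date, l.city, r.name;

INSERT INTO locations VALUES (1, 'City A'), (2, 'City B');
INSERT INTO showrooms VALUES (1, 'Showroom 1', 1), (2, 'Showroom 2', 2);
INSERT INTO show_sales VALUES
    (1, 1, '2014-07-01', 100.0),
    (2, 1, '2014-07-01', 50.0),
    (3, 2, '2014-07-01', 70.0),
    (4, 1, '2014-07-02', 30.0);
""")

# Cash per showroom per day.
per_showroom = conn.execute(
    "SELECT sale_date, city, showroom, cash FROM daily_cash "
    "ORDER BY sale_date, showroom"
).fetchall()

# Grand total per day across all showrooms.
grand_daily = conn.execute(
    "SELECT sale_date, SUM(cash) FROM daily_cash "
    "GROUP BY sale_date ORDER BY sale_date"
).fetchall()

print(per_showroom)
print(grand_daily)
```

The grand total is computed from the view rather than from the base tables, which mirrors the description of daily totals per showroom being accumulated into branch and company totals on demand.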

VI. CONCLUSIONS

The following conclusions have been drawn:

1. The response time of queries and of Data Manipulation Language statements is reduced by creating many indexes for use by the Oracle optimizer.

2. Star Schema is better than Snowflake Schema on the following points:

- Query complexity: in Star Schema the query is simple and easy to understand, while in Snowflake Schema the query is more complex because of the multiple foreign key joins between dimension tables.
- Query performance: Star Schema gives high performance, since the database engine can optimize and boost query performance based on a predictable framework, while Snowflake Schema has more foreign key joins and therefore a longer query execution time than Star Schema.
- Foreign key joins: Star Schema has fewer joins, while Snowflake Schema has a higher number of joins.

Finally, it has been concluded that the Star Schema is centered on the fact table and changes, while the Snowflake Schema is centered on the fact table and does not change.
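The difference in join count between the two schemas can be seen in a small sketch. The example below, written in Python with the standard `sqlite3` module, models one showroom dimension twice: once denormalized (star) and once normalized into three tables (snowflake). All table names, columns and rows are hypothetical. The sketch compares only query shape and result; it does not measure execution time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Shared fact table (hypothetical columns).
CREATE TABLE fact_sales (showroom_id INTEGER NOT NULL, amount REAL NOT NULL);

-- Star: one denormalized dimension table carries city and country directly.
CREATE TABLE star_showroom (
    showroom_id INTEGER PRIMARY KEY, name TEXT, city TEXT, country TEXT
);

-- Snowflake: the same dimension normalized into three linked tables.
CREATE TABLE sf_country  (country_id INTEGER PRIMARY KEY, country TEXT);
CREATE TABLE sf_city     (city_id INTEGER PRIMARY KEY, city TEXT,
                          country_id INTEGER REFERENCES sf_country(country_id));
CREATE TABLE sf_showroom (showroom_id INTEGER PRIMARY KEY, name TEXT,
                          city_id INTEGER REFERENCES sf_city(city_id));

INSERT INTO fact_sales VALUES (1, 100.0), (2, 50.0), (3, 25.0), (1, 10.0);
INSERT INTO star_showroom VALUES
    (1, 'S1', 'City A', 'Country X'),
    (2, 'S2', 'City B', 'Country X'),
    (3, 'S3', 'City C', 'Country Y');
INSERT INTO sf_country VALUES (1, 'Country X'), (2, 'Country Y');
INSERT INTO sf_city VALUES (1, 'City A', 1), (2, 'City B', 1), (3, 'City C', 2);
INSERT INTO sf_showroom VALUES (1, 'S1', 1), (2, 'S2', 2), (3, 'S3', 3);
""")

# Total sales per country in the star schema: one join.
STAR_QUERY = """
SELECT d.country, SUM(f.amount)
FROM fact_sales f
JOIN star_showroom d ON d.showroom_id = f.showroom_id
GROUP BY d.country ORDER BY d.country
"""

# The same question in the snowflake schema: three joins.
SNOWFLAKE_QUERY = """
SELECT co.country, SUM(f.amount)
FROM fact_sales f
JOIN sf_showroom s ON s.showroom_id = f.showroom_id
JOIN sf_city ci    ON ci.city_id = s.city_id
JOIN sf_country co ON co.country_id = ci.country_id
GROUP BY co.country ORDER BY co.country
"""

star_rows = conn.execute(STAR_QUERY).fetchall()
snowflake_rows = conn.execute(SNOWFLAKE_QUERY).fetchall()
star_joins = STAR_QUERY.count("JOIN")
snowflake_joins = SNOWFLAKE_QUERY.count("JOIN")

print(star_rows == snowflake_rows, star_joins, snowflake_joins)
```

Both queries return the same totals, but the snowflake version has to walk the chain of normalized dimension tables, which is the extra query complexity and join count referred to in the points above.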

VII. FUTURE WORKS

1. Using other criteria in the development and implementation of the proposed system.

2. Using statistical methods to implement other criteria of the data warehouse.

3. Applying metadata algorithms and comparing the bitmap index with the B-tree index.

4. Applying this work to a real organization rather than a prototype warehouse.

5. Taking advantage of the above standards to improve the performance and use of the data warehouse in institutions according to their environment.

ACKNOWLEDGEMENTS

I would like to thank my supervisor Assist. Prof. Dr. Murtadha Mohammed Hamad for his

guidance, encouragement and assistance throughout the preparation of this study. I would also like to

extend thanks and gratitude to all teaching and administrative staff in the College of Computer,

University of Anbar, and all those who lent me a helping hand and assistance. Finally, special thanks

are due to members of my family for their patience, sacrifice and encouragement.


REFERENCES

[1] H. William Inmon, Building the Data Warehouse. Fourth Edition, Wiley Publishing, Inc., Indianapolis, Indiana, 2005.

[2] R. Kimball, The Data Warehouse Lifecycle Toolkit. 1st Edition, New York: John Wiley and Sons, 1998.

[3] K.W. Chau, Y. Cao, M. Anson and J. Zhang, Application of Data Warehouse and Decision Support System in Construction Management. Automation in Construction 12 (2) (2002) 213-224.

[4] Nicolas Prat, Isabelle Comyn-Wattiau and Jacky Akoka, Combining objects with rules to represent aggregation knowledge in data warehouse and OLAP systems. Data & Knowledge Engineering 70 (2011) 732-752.

[5] Singhal, Anoop, Data Warehousing and Data Mining Techniques for Cyber Security. Springer, United States of America, 2007.

[6] Bhansali, Neera, Strategic Data Warehousing: Achieving Alignment with Business. CRC Press, United States of America, 2010.

[7] Wang, John, Encyclopedia of Data Warehousing and Mining. Second Edition, Information Science Reference, United States of America, 2009.

[8] Yu. Huang, Xiao-yi. Zhang, Yuan Zhen, Guo-quan. Jiang, A Universal Data Cleaning Framework Based on User Model, IEEE, ISECS International Computing, Communication, Control, and Management, Sanya, China, Aug 2009.

[9] T.N. Manjunath, S. Hegadi Ravindra, G.K. Ravikumar, Analysis of Data Quality Aspects in Data Warehouse Systems. International Journal of Computer Science and Information Technologies, Vol. 2 (1), 2011, 477-485.

[10] Paul Lane, Oracle Database Data Warehousing Guide. 11g Release 2 (11.2), September 2011.

[11] D.H. Besterfield, C. Besterfield-Michna, G. Besterfield, and M. Besterfield-Sacre, Total Quality

Management. Prentice Hall, 1995.

[12] G.K. Tayi, D.P. Ballou, Examining Data Quality. In Communications of the ACM, 41(2), 1998. pp.

54-57.

[13] K. Orr, Data quality and systems theory. Communications of the ACM, 41(2), 1998. pp. 66-71.

[14] R.Y. Wang, D. Strong, L.M. Guarascio, Beyond Accuracy: What Data Quality Means to Data

Consumers. Technical Report TDQM-94-10, Total Data Quality Management Research Program, MIT Sloan

School of Management, Cambridge, Mass., 1994.

[15] Panos vassiladis, Mokrane Bouzegeghoub and Christoph Quix. Towards quality-oriented data

warehouse usage and evolution. Information Systems Vol. 25, No. 2, pp. 89-l 15, 2000.

[16] Leo Willyanto Santoso and Kartika Gunadi. A proposal of data quality for data warehouses

environment. Journal Information VOL. 7, NO. 2, November 2006: 143 148

[17] Amer Nizar AbuAli and Haifa Yousef Abu-Addose. Data Warehouse Critical Success Factors.

European Journal of Scientific Research ISSN 1450-216X Vol.42 No.2 (2010), pp.326-335.

[18] T.N. Manjunath, S. Hegadi Ravindra, Data Quality Assessment Model for Data Migration Business

Enterprise. International Journal of Engineering and Technology (IJET) ISSN: 0975-4024, Vol 5 No 1 Feb-

Mar 2013.

AUTHOR PROFILE

Khalid Ibrahim Mohammed was born in Baghdad, Iraq, in 1981. He received his Bachelor's degree in Computer Science from Al-Mamon University College, Baghdad, Iraq, in 2006 and his M.Sc. in Computer Science from the College of Computer, Anbar University, Anbar, Iraq, in 2013. He has a total of 8 years of industry and teaching experience. His areas of interest are Data Warehouse & Business Intelligence, multimedia and databases.

Vol. 7, Issue 3, pp. 652-665

A NEW MECHANISM IN HMIPV6 TO IMPROVE MICRO AND

MACRO MOBILITY

Sahar Abdul Aziz Al-Talib

Computer and Information Engineering

Electronics Engineering, University of Mosul, Iraq

ABSTRACT

This paper proposes a new mechanism for Hierarchical Mobile IPv6 (HMIPv6) implementation, intended for use in an IPv6 Enterprise Gateway. The mechanism provides seamless mobility and fast handover of a mobile node in HMIPv6 networks, and it maintains continuous communication among the HMIPv6 components while the node roams. The mechanism anticipates the future movement of the mobile node and provides an effective updating mechanism accordingly. A bitmap is proposed to support the new mechanism, as explained throughout this paper. Instead of scanning the prefix bits, which may be up to 64 bits long, for subnet discovery, about 10% of this length is sufficient to determine the hierarchical topology. This enables fast micro- and macro-mobility for HMIPv6 in an Enterprise Gateway.

KEYWORDS: Hierarchical Mobile IPv6, bitmap, Enterprise Gateway, Mobility Anchor Point.

I. INTRODUCTION

An IPv6 Enterprise Gateway is a device that interconnects the public network with an enterprise network. The enterprise network includes many sub-networks with different access routers or home anchors. For flexibility, Hierarchical Mobile Internet Protocol Version 6 (HMIPv6) is used to organize these enterprise networks hierarchically. HMIPv6 provisions the different access areas by assigning a mobile anchor point (MAP) to each area. The MAP acts on behalf of the enterprise gateway (home agent) in its particular coverage area. It also reduces the number of control messages exchanged and shortens the handover time when the mobile node roams to another network. The mobile node can use the local MAP to keep communication going without needing to communicate with the enterprise gateway.

Hierarchical Mobile IPv6 (HMIPv6) is known to provide a flexible mechanism for local mobility management within visited networks. The main problem in hierarchical mobility is that communication between the correspondent node (CN) and the mobile node (MN) suffers significant delay when the mobile node roams between different MAPs or between different access routers (ARs) in the same MAP coverage area. This happens because the MN must acquire a local care-of address (LCoA) in the visited network: it receives the router advertisement (RA) and uses its prefix to build its new LCoA. This process delays communication between the CN and the MN for a while, especially when roaming occurs between different MAPs. It also requires more binding update messages, sent not just to the local MAP but also to the enterprise gateway, as shown in Figure 1; the message flow to acquire a new CoA starts after the MN has reached the foreign network.

The patent in [1] anticipates the probable CoAs based on the number of neighbouring access routers, which determines the number of probable CoAs for each single movement, and the MAP must then multicast the traffic to all of these addresses. In this paper, the Home Anchor creates the anticipated CoA based on the bitmap received from the MN. This bitmap refers to the foreign router whose signal the MN received most strongly, which means the Home Anchor has a single probable address to tunnel packets to, besides the current CoA.

RFC4260 [2] proposed the idea of anticipating the new CoA and tunneling the packets between the previous access router and the new access router. The difference between the work in this paper and [2] is that, in [2], packet loss is likely to occur if the process is performed too late or too early relative to the time at which the mobile node detaches from the previous access router and attaches to the new one, or if the BU cache in the home anchor is updated before the layer 2 handover has completed. The work in [3] proposed a scheme that reduces the total cost of the macro-mobility handover of the original HMIPv6 by 82%.

Kumar et al. in [4] proposed an analytical model which shows the performance and applicability of

MIPv6 and HMIPv6 against some key parameters in terms of cost.

An adaptive MAP selection scheme based on active overload prevention (MAP-AOP) is proposed in [5]. The MAP periodically evaluates its load status using a dynamic weighted load evaluation algorithm, and then dynamically sends the load information to the access routers (ARs) it covers using an expanded routing advertisement message.

In this paper, a solution to the problem explained above is proposed, in which the mobile node sends a binding update request at the moment it initiates movement to the foreign network, and a bitmap field is added to the binding update request to identify the different components of the HMIPv6 network.

The paper is organized as follows. Section II describes the methodology of the proposed mechanism, supported by figures, including the new operation of micro- and macro-mobility and the binding update message format from which the bitmap idea derives. Section III concludes the work and presents future work.

II. METHODOLOGY

The objective of this paper is achieved by a method for multi-cell cooperative communication comprising the following steps: detecting a beacon from a new access router; translating the beacon received from the new access router into a bitmap; sending a binding update request message that contains the bitmap to a current mobile access point; assessing the bitmap; tunneling data packets from a correspondent node through the enterprise gateway to the current destination and to the new destination simultaneously; sending an update message once the mobile node reaches the new destination; and refreshing the binding cache tables and tunneling the data packets only to the new destination, according to the new address of the mobile node.


Figure 1 (a): Flow Control of Micro and Macro Mobility Process (part 1)


Figure 1 (b): Flow Control of Micro and Macro Mobility Process (part 2)

Some of the most important key points in this paper are:

A bitmap, which is a small number of binary bits, is proposed for use instead of the subnet prefix to specify the subnet.

The binding update message is extended to include a bitmap field. The lowest part of this field refers to the router's prefix and the highest part refers to the home anchor's address. The proposed message can be called a binding update request, and it should be sent as soon as the MN initiates movement to the anticipated foreign network.

The mobile node is able to measure beacon strength in order to decide which anchor point to join as its future home agent.

Binding Update message: this message is defined in the HMIPv6 standard [6, 7]. It is sent by the mobile node to the home anchor or the home agent (in some cases it is sent to the correspondent node) to register the MN's care-of address with its home anchor or home agent. In the standard, this message is sent only after the MN acquires a CoA in the foreign network.

Binding cache table: a mapping table that resides in the Enterprise Gateway (home agent) and in the home anchor points, used to translate the value of the bitmap to its equivalent prefix. It is also used to track the MN's movement.

The proposed solution reduces handover delay for both inter-domain (macro-mobility) and intra-domain (micro-mobility) movement, which will improve multimedia applications.

1. The New Operation of Micro and Macro-mobility:

a) Operation of Micro Mobility: As shown in Figure 1, the proposed solution sends a binding update request (BU req.) when the MN initiates its movement to the foreign network. The MN senses the strong signal from the foreign network router and translates it into the bitmap field by setting the corresponding bits. The proposed bitmap requires updating the present binding update message by using the reserved bits that already exist for future use.

The MN then sends the updated BU req. message to the upstream Home Anchor. When the Home Anchor receives the updated BU req., it checks the highest bits in the bitmap, as shown in the decision box in Figure 1. If these bits refer to the Home Anchor itself, the Home Anchor learns that this is a micro-mobility case and creates a new address (CoA) for the MN according to the lowest bits of the bitmap.

Now the Home Anchor cache table holds two CoAs for that MN, one for the MN's previous location and one for the new location. The Home Anchor tunnels packets to both CoAs until the handover preparation is finalized. The MN therefore has seamless mobility and continuous communication, even before it obtains the new CoA from the future foreign router.

When the MN reaches the foreign router's area and obtains the CoA, it sends a Binding Update message that includes the HoA and the CoA to the upstream Home Anchor. The Home Anchor then refreshes its binding cache and deletes the old CoA.

Finally, the Home Anchor tunnels packets only to the new CoA.
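The micro-mobility steps above can be sketched as a small state holder for the Home Anchor. This is only an illustration under stated assumptions: the class and method names are hypothetical, the bitmap is taken to be 6 bits wide (2 high bits for the Home Anchor, 4 low bits for the router), and a CoA is formed by simple string concatenation of a prefix and an interface identifier.

```python
ROUTER_BITS = 4  # assumption: the lowest 4 bits of the bitmap select the access router


class HomeAnchor:
    """Hypothetical Home Anchor that keeps a binding cache of HoA -> active CoAs."""

    def __init__(self, anchor_id, router_prefixes):
        self.anchor_id = anchor_id              # value of the highest bitmap bits for this anchor
        self.router_prefixes = router_prefixes  # lowest bitmap bits -> router prefix (text form)
        self.cache = {}                         # HoA -> list of CoAs currently tunneled to

    def on_bu_request(self, hoa, bitmap, interface_id):
        """Handle a BU req. sent when the MN starts moving toward a new router."""
        anchor_bits = bitmap >> ROUTER_BITS
        router_bits = bitmap & ((1 << ROUTER_BITS) - 1)
        if anchor_bits != self.anchor_id:
            return "macro"  # another anchor is addressed; that case is not covered here
        new_coa = self.router_prefixes[router_bits] + interface_id
        coas = self.cache.setdefault(hoa, [])
        if new_coa not in coas:
            coas.append(new_coa)  # keep the old CoA: packets go to both during handover
        return "micro"

    def on_binding_update(self, hoa, coa):
        """Handle the BU sent after the MN has obtained its CoA: drop older entries."""
        self.cache[hoa] = [coa]

    def tunnel_targets(self, hoa):
        return list(self.cache.get(hoa, []))


HOA = "2407:3000:200d:312::b6:d2"
anchor1 = HomeAnchor(0b00, {0b0010: "2407:4020::", 0b0011: "2407:4030::"})
anchor1.on_binding_update(HOA, "2407:4020::b6:d2")       # MN currently attached via R2
kind = anchor1.on_bu_request(HOA, 0b000011, "b6:d2")     # MN starts moving toward R3
during_handover = anchor1.tunnel_targets(HOA)
anchor1.on_binding_update(HOA, "2407:4030::b6:d2")       # MN has obtained CoA3
after_handover = anchor1.tunnel_targets(HOA)
```

The addresses in the usage lines follow the example values that appear in the paper's figures; the data structures themselves are a design choice made for the sketch.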

b) Operation of Macro Mobility

This section explains mobility between two routers that belong to different Home Anchors or different domains. The process flow is shown in Figure 1.

Assume the MN initiates movement to a router that is connected to a different Home Anchor. The MN receives the beacon from the foreign router and recognizes from the prefix that it differs from the old one. It reflects this in the higher bits of the bitmap, updates the BU request message with the new bitmap, and sends the message to the upstream Home Anchor. When the Home Anchor receives the BU req., it checks the highest bits in the bitmap. In this case it recognizes that these bits refer to another Anchor, so it forwards the BU req. to the Enterprise Gateway (home agent). At the same time, the Home Anchor translates the lowest part of the bitmap to its prefix and combines it with the MAC address to form the new CoA. The Home Anchor tunnels packets to the current CoA and the new CoA at the same time. Meanwhile, the Enterprise Gateway receives the BU req. and translates the highest bits of the bitmap to the equivalent Home Anchor address. It also refreshes the cache table with this new value and starts to tunnel packets to both the current Home Anchor and the new Home Anchor. However, the traffic destined to the new Anchor is not forwarded to the MN until the MN reaches the particular foreign router and obtains a new CoA. At that point the MN sends a BU message to the Enterprise Gateway. As a result, the Home Agent refreshes its cache table, deleting the previous Anchor and adding the updated one, and then tunnels the traffic to the new Home Anchor. The new Home Anchor in turn tunnels the packets to the new CoA of the mobile node in its new location.
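The Enterprise Gateway side of the macro-mobility procedure can be sketched in the same spirit. The class and method names are hypothetical, the 2-bit/4-bit split of the bitmap is an assumption, and the final binding update is assumed to identify the new Home Anchor directly, since the text does not say how the gateway derives it from the CoA.

```python
ROUTER_BITS = 4  # assumption: the highest bits above these 4 select the Home Anchor


class EnterpriseGateway:
    """Hypothetical Home Agent cache mapping each HoA to the anchors it tunnels to."""

    def __init__(self, anchor_addresses):
        self.anchor_addresses = anchor_addresses  # highest bitmap bits -> anchor address
        self.cache = {}                           # HoA -> list of Home Anchor addresses

    def register(self, hoa, anchor_address):
        self.cache[hoa] = [anchor_address]

    def on_forwarded_bu_request(self, hoa, bitmap):
        """BU req. forwarded by the current anchor: start tunneling to the new anchor too."""
        new_anchor = self.anchor_addresses[bitmap >> ROUTER_BITS]
        anchors = self.cache.setdefault(hoa, [])
        if new_anchor not in anchors:
            anchors.append(new_anchor)
        return new_anchor

    def on_binding_update(self, hoa, new_anchor):
        """BU sent after the MN has its new CoA: keep only the new anchor."""
        self.cache[hoa] = [new_anchor]


HOA = "2407:3000:200d:312::b6:d2"
gateway = EnterpriseGateway({0b00: "2407:4000::2", 0b10: "2407:5000::2"})
gateway.register(HOA, "2407:4000::2")                        # MN currently under HA1
anticipated = gateway.on_forwarded_bu_request(HOA, 0b100110)  # MN heads toward HA2
during_handover = list(gateway.cache[HOA])
gateway.on_binding_update(HOA, anticipated)                   # MN confirmed under HA2
after_handover = list(gateway.cache[HOA])
```

The mapping of the high bits `10` to Home Anchor-2 follows the example bitmap `100110` used in the paper's figures.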

2. Binding update message format

The main contribution of this work is based on the idea of using the reserved bits in the BU message shown in Figure 2.

As can be seen in Figure 2, the binding update message has reserved bits from bit 5 up to bit 15, so some or all of these bits can be used to implement the mechanism proposed in this paper. For example, if 6 bits are used, the highest 2 bits can be assigned to the Home Anchors, with the possible values (00, 01, 10, 11), and the lowest 4 bits can be assigned to the access routers (ARs), which can address 2^4 = 16 routers, from 0000 to 1111.
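The 6-bit example above can be written out as a short sketch. The function names are hypothetical; the layout (2 high bits for the Home Anchor, 4 low bits for the access router) is the one described in the example.

```python
from typing import Tuple

ANCHOR_BITS = 2  # highest bits: up to 2**2 = 4 Home Anchors
ROUTER_BITS = 4  # lowest bits: up to 2**4 = 16 access routers


def make_bitmap(anchor_id: int, router_id: int) -> int:
    """Combine a Home Anchor index and a router index into one 6-bit value."""
    if not 0 <= anchor_id < (1 << ANCHOR_BITS):
        raise ValueError("anchor_id does not fit in the highest bits")
    if not 0 <= router_id < (1 << ROUTER_BITS):
        raise ValueError("router_id does not fit in the lowest bits")
    return (anchor_id << ROUTER_BITS) | router_id


def split_bitmap(bitmap: int) -> Tuple[int, int]:
    """Return (anchor_id, router_id) for a 6-bit bitmap."""
    return bitmap >> ROUTER_BITS, bitmap & ((1 << ROUTER_BITS) - 1)


def format_bitmap(bitmap: int) -> str:
    """Render the bitmap as a fixed-width binary string."""
    return format(bitmap, "0{}b".format(ANCHOR_BITS + ROUTER_BITS))


# Share of a 64-bit prefix that a 6-bit bitmap represents (roughly 10%).
bitmap_share = (ANCHOR_BITS + ROUTER_BITS) / 64
```

For instance, `format_bitmap(make_bitmap(0b10, 0b0110))` gives `100110`, and 6 bits out of 64 is about 9.4% of the prefix length.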

 0                   1                   2                   3
 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
                                +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
                                |          Sequence #           |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|A|H|L|K|M|      Reserved       |           Lifetime            |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                                                               |
|                                                               |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Figure 2: Binding update message format [3]
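As a rough illustration of how a bitmap could ride in the reserved bits of Figure 2, the sketch below packs the three 16-bit fields with Python's `struct` module. The flag bit positions follow the layout in the figure (A is the most significant bit of the flags word). Placing the 6-bit bitmap in the lowest 6 reserved bits is an assumption made for this example, since the paper leaves the exact position open, and the function names are hypothetical.

```python
import struct

# Flag bits in the 16-bit word that follows the sequence number (Figure 2 layout).
FLAG_A, FLAG_H, FLAG_L, FLAG_K, FLAG_M = 0x8000, 0x4000, 0x2000, 0x1000, 0x0800
RESERVED_MASK = 0x07FF  # bits 5..15 of the word
BITMAP_MASK = 0x003F    # assumption: the bitmap uses the lowest 6 reserved bits


def pack_bu_request(sequence: int, flags: int, bitmap: int, lifetime: int) -> bytes:
    """Pack sequence number, flags plus bitmap, and lifetime in network byte order."""
    if flags & RESERVED_MASK:
        raise ValueError("flags must not use the reserved bits")
    if bitmap & ~BITMAP_MASK:
        raise ValueError("bitmap must fit in 6 bits")
    return struct.pack("!HHH", sequence, flags | bitmap, lifetime)


def unpack_bu_request(data: bytes):
    """Return (sequence, flags, bitmap, lifetime) from the 6-byte header."""
    sequence, word, lifetime = struct.unpack("!HHH", data)
    return sequence, word & ~RESERVED_MASK & 0xFFFF, word & BITMAP_MASK, lifetime


header = pack_bu_request(sequence=1, flags=FLAG_A | FLAG_M, bitmap=0b100110, lifetime=60)
decoded = unpack_bu_request(header)
```

A receiver that does not understand the bitmap would see non-zero reserved bits, so a real deployment would need both ends to agree on this use of the field.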

Figure 3 shows the system architecture for the proposed mechanism. The following abbreviations are used:

CN: Correspondent Node.

MN: Mobile Node.

BU req.: Binding Update request message.

ACK: Acknowledgement message.

HoA: MN's Home Address.

CoA: MN's Care-of Address in the foreign network.

Based on the architecture, the MN initiates its movement from the home network toward Home Anchor-1. As shown in Figure 3, the MN receives the beacon from R2 in Home Anchor-1's coverage area and translates this signal into the bitmap by setting the corresponding bits. The MN sends a BU request message containing the bitmap to Home Anchor-1. Home Anchor-1 builds its bitmap mapping table by translating the received bitmap to CoA2, then replies to the MN with an acknowledgment message. The MN receives the acknowledgment and sends another BU request message to the Enterprise Gateway (Home Agent). The Enterprise Gateway receives the BU req., checks the highest bits in the bitmap field, and translates them to the equivalent Home Anchor's address. In this architecture the highest bits refer to Home Anchor-1, so the Home Agent refreshes its bitmap mapping table to add that Home Anchor's address.
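The translation of a router prefix into a CoA can be sketched with Python's standard `ipaddress` module. The function name is hypothetical, and reusing the lower 64 bits of the HoA as the interface identifier is an assumption suggested by the example addresses in the figures (HoA and CoA2 both end in `b6:d2`).

```python
import ipaddress


def form_coa(router_prefix: str, hoa: str) -> ipaddress.IPv6Address:
    """Combine a /64 router prefix with the interface identifier of the HoA."""
    network = ipaddress.IPv6Network(router_prefix)
    if network.prefixlen != 64:
        raise ValueError("this sketch only handles /64 prefixes")
    # Assumption: the interface identifier is the lower 64 bits of the home address.
    interface_id = int(ipaddress.IPv6Address(hoa)) & ((1 << 64) - 1)
    return ipaddress.IPv6Address(int(network.network_address) | interface_id)


coa2 = form_coa("2407:4020::/64", "2407:3000:200d:312::b6:d2")
```

With the example values above, `coa2` is the address `2407:4020::b6:d2`.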


[Figure 3 diagram. Topology: Enterprise Gateway/Home Agent 2407:3000:200d:312::2/64; Home Anchor1 2407:4000::2/64; Home Anchor2 2407:5000::2/64; routers R1 to R6 with prefixes 2407:4010::/64, 2407:4020::/64, 2407:4030::/64, 2407:4040::/64, 2407:4050::/64 and 2407:4060::/64; MN with HoA 2407:3000:200d:312::b6:d2 and CoA2 2407:4020::b6:d2. Message flow: 1. Movement. 2. BU req. (HoA: 2407:3000:200d:312::b6:d2, Bitmap: 000010). 3. Home Anchor1 binding cache table: Home Address 2407:3000:200d:312::b6:d2, Bitmap 000010, Care of Address 2407:4020::b6:d2 (the lowest bits represent the router). 4. Ack. 5. BU (HoA: 2407:3000:200d:312::b6:d2, Bitmap: 000010). 6. Home Agent binding cache table: Home Address 2407:3000:200d:312::b6:d2, Home Anchor 00, 2407:4000::2 (HA1) (the highest bits represent the Home Anchor's address).]

Figure 3: MN moves from Home Network to R2's Network

Assume there is a correspondent node (CN) in the vicinity of the Home Network, as shown in Figure 4. The CN sends packets to the MN's home address (HoA), and the Home Agent receives the traffic and checks its binding cache table. It finds that the HoA refers to Home Anchor-1, so the Home Agent tunnels the packets to Home Anchor-1, and Home Anchor-1 in turn tunnels the packets to CoA2 according to its cache table. Finally, the MN receives the packets from R2, as shown in Figure 4.
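The two-level lookup described above can be illustrated with plain dictionaries standing in for the two binding cache tables. The function name and the table layout are hypothetical; the addresses are the example values from the figures.

```python
def resolve_tunnel_path(hoa, home_agent_table, anchor_tables):
    """Return the tunnel hops for a packet addressed to the given home address."""
    anchor = home_agent_table[hoa]   # Home Agent: HoA -> Home Anchor address
    coa = anchor_tables[anchor][hoa]  # Home Anchor: HoA -> care-of address
    return [anchor, coa]


HOA = "2407:3000:200d:312::b6:d2"
home_agent_table = {HOA: "2407:4000::2"}                      # HoA is behind HA1
anchor_tables = {"2407:4000::2": {HOA: "2407:4020::b6:d2"}}   # HA1 knows CoA2
path = resolve_tunnel_path(HOA, home_agent_table, anchor_tables)
```

Here `path` lists Home Anchor-1 first and CoA2 second, matching the order in which the packet is tunneled.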

Figure 5 shows the micro-mobility scenario in the proposed mechanism. The MN detects the increase in the strength of the signal received from R3 as it initiates its movement towards R3, and it translates the beacon received from R3 to the equivalent bitmap. The MN then sends a BU req. message including the bitmap field to Home Anchor-1, which checks the highest bits of the received bitmap. The highest bits refer to the same Home Anchor-1. Therefore Home Anchor-1 translates the received bitmap to the equivalent care-of address, which is CoA3 (the new CoA) in this case, and adds it to Home Anchor-1's binding cache table.


[Figure 4 diagram. Same topology and addresses as Figure 3, with the CN added. Home Anchor1 binding cache table: Home Address 2407:3000:200d:312::b6:d2, Bitmap 000010, Care of Address 2407:4020::b6:d2. Home Agent binding cache table: Home Address 2407:3000:200d:312::b6:d2, Home Anchor 000010, 2407:4000::2 (HA1). Packet flow: 1. Packet destined to HoA. 2. Packet tunneled to HA1. 3. Packet tunneled to CoA2. 4. MN receives and decapsulates the packet.]

Figure 4: CN sends Packets to the MN in its Foreign Network (R2)

At this point, Home Anchor-1 tunnels the packets to both CoA2 and CoA3 at the same time, which provides continuous communication between the CN and the MN. The MN can still receive the traffic even before it sends a BU message to Home Anchor-1 through R3.

Figure 6 shows that when the MN obtains CoA3 from R3, it sends a BU message to Home Anchor-1 through R3. Home Anchor-1 then checks the binding cache table again and refreshes it. It removes CoA2 from the table and tunnels the packets only to CoA3, according to the new address of the MN.


[Figure 5 diagram. Same topology and addresses as Figure 3; the MN holds HoA 2407:3000:200d:312::b6:d2 and CoA2 2407:4020::b6:d2. Message flow: 1. MN initiates the movement toward R3. 2. BU req. (HoA: 2407:3000:200d:312::b6:d2, Expected bitmap: 000011). 3. Home Anchor1 binding cache table for the Home Address: Bitmap 000010 with Care of Address 2407:4020::b6:d2, and Bitmap 000011 with Care of Address 2407:4030::b6:d2. 4. Tunneling the packets to CoA2 and probable CoA3. 5. MN receives and decapsulates the packet. Home Agent binding cache table: Home Address 2407:3000:200d:312::b6:d2, Home Anchor 000010, 2407:4000::2 (HA1). The CN's packets are destined to the HoA and tunneled to HA1.]

Figure 5: MN starts to move to R3

Figure 7 shows macro-mobility between two different home anchors. The MN initiates its movement toward R4, and the same procedure explained previously is followed: the MN detects the increase in the strength of R4's signal and translates it to the equivalent bitmap.

The MN sends a BU req. message to Home Anchor-1. The difference here is that, when the Home Anchor checks the received bitmap, it finds that the highest bits of the bitmap refer to another home anchor. Therefore, Home Anchor-1 forwards the BU req. to the upstream Enterprise Gateway (Home Agent) and at the same time translates the lowest bits to the equivalent CoA (in this case CoA4).

Home Anchor-1 now tunnels the packets to CoA3 and CoA4, as shown in Figure 7. The Enterprise Gateway receives the BU req. from Home Anchor-1 and translates the highest bits to the equivalent home anchor's address, as shown in Figure 7. The Enterprise Gateway adds the address of Home Anchor-2 and then starts to tunnel the packets to Home Anchor-2. Home Anchor-2 does not yet have a record in its binding cache table to track the MN's new CoA.


[Figure 6 diagram. Topology: Enterprise Gateway/Home Agent 2407:3000:200d:312::2/64; Home Anchor1 2407:4000::2/64; Home Anchor2 2407:5000::2/64; routers R1 to R6 with prefixes 2407:4010::/64, 2407:4020::/64, 2407:4030::/64, 2407:4060::/64, 2407:4070::/64 and 2407:4080::/64; MN with HoA 2407:3000:200d:312::b6:d2 and CoA3 2407:4030::b6:d2. Message flow: 1. BU (HoA: 2407:3000:200d:312::b6:d2, CoA3: 2407:4030::b6:d2). 2. Home Anchor1 refreshes its binding cache table, which held Bitmap 000010 with Care of Address 2407:4020::b6:d2 and Bitmap 000011 with Care of Address 2407:4030::b6:d2. Home Agent binding cache table: Home Address 2407:3000:200d:312::b6:d2, Home Anchor 000010, 2407:4000::2 (HA1). The CN's packets are destined to the HoA and tunneled to HA1; the MN receives and decapsulates the packet.]

Figure 6: MN obtained CoA3 from R3

As shown in Figure 8, the MN acquires CoA4 and sends a BU message to Home Anchor-2 and Home Anchor-1 simultaneously.

Home Anchor-1 updates its table and sends packets to CoA4 only, and at the same time Home Anchor-2 starts to forward the traffic to CoA4. The MN then sends a BU message to the Enterprise Gateway (Home Agent) to inform it of CoA4.

The Home Agent receives the BU message, checks the MN's CoA, removes Home Anchor-1 from the table, and sends the traffic only to Home Anchor-2, which is the next hop to reach CoA4.


[Figure 7 diagram. Same topology and addresses as Figure 6; the MN holds HoA 2407:3000:200d:312::b6:d2 and CoA3 2407:4030::b6:d2. Message flow: 1. MN initiates movement toward R4. 2. BU request (HoA: 2407:3000:200d:312::b6:d2, Bitmap: 100110). 3. Home Anchor1 binding cache table for the Home Address: Bitmap 000010 with Care of Address 2407:4020::b6:d2, Bitmap 000011 with 2407:4030::b6:d2, and Bitmap 100110 with 2407:4060::b6:d2. 4. Home Anchor1 checks the highest bits. 5. a) Home Anchor1 forwards the BU request (HoA: 2407:3000:200d:312::b6:d2, Bitmap: 100110); b) Home Anchor1 tunnels packets to CoA3 and CoA4. 6. Home Agent binding cache table for the Home Address: 2407:4000::2 (HA1) and, for Bitmap 100110, 2407:5000::2 (HA2). 7. The Home Agent tunnels the packets to HA1 and HA2.]

Figure 7: MN initiates movement to Home Anchor-2 (R4)


[Figure 8 diagram. Same topology and addresses as Figure 6; the MN holds HoA 2407:3000:200d:312::b6:d2 and CoA4 2407:4060::b6:d2. Message flow: 1. BU (HoA: 2407:3000:200d:312::b6:d2, CoA4: 2407:4060::b6:d2). 2. a) Home Anchor1 and Home Anchor2 refresh their tables; the Home Anchor1 table held Bitmap 000010 with 2407:4020::b6:d2, Bitmap 000011 with 2407:4030::b6:d2 and Bitmap 100110 with 2407:4060::b6:d2, and the Home Anchor2 table records the Home Address with Bitmap 0010 and address 2407:4060::b6:d2. 3. Home Anchor2 forwards the packets from the Home Agent to CoA4. The Home Agent table lists 2407:4000::2 (HA1) and, for Bitmap 100110, 2407:5000::2 (HA2), and the Home Agent tunnels the packets to HA1 and HA2.]

Figure 8: Home Anchor-2 forwards the traffic to CoA4


[Figure 9 diagram. Same topology and addresses as Figure 6; the MN holds HoA 2407:3000:200d:312::b6:d2 and CoA4 2407:4060::b6:d2. Message flow: 1. BU (HoA: 2407:3000:200d:312::b6:d2, CoA4: 2407:4060::b6:d2). 2. The Home Agent table is refreshed, along with the Home Anchor tables; the Home Anchor2 table records the Home Address with Bitmap 0010 and CoA 2407:4060::b6:d2. The CN's packets are destined to the HoA, tunneled to HA2 (2407:5000::2, Bitmap 100110), and forwarded from the Home Agent to CoA4.]

Figure 9: Updating the Home Agent Table

III. CONCLUSIONS AND FUTURE WORK

In the proposed scenarios, the MN achieves seamless mobility by anticipating its new location and new CoA and by taking action as soon as it initiates the movement. In addition, this mechanism saves network resources by updating the cache table of the server, which means that traffic is not multicast to multiple probable addresses but is sent to only one probable address for each single movement. As a result, real-time (multimedia) applications will experience better service flow.

Future work will study how multimedia applications are affected when the proposed mechanism is applied.

ACKNOWLEDGEMENT

The author would like to thank Mr. Aus S. Matooq for his help in reviewing this paper and for his comments and feedback.


REFERENCES

[1]. Omae K., Okajima I., Umeda N., Mobility Management System, and Mobile Node Used in the System, Mobility Management Method, Mobility Management Program, and Mobility Management Node, International Patent US 7,349,364 B2, published 25 March 2008.

[2]. McCann P., Mobile IPv6 Fast Handovers for 802.11 Networks, Lucent Technologies, RFC 4260, 2005.

[3]. Lee K. et al., A Macro Mobility Handover Performance Improvement Scheme for HMIPv6, Lecture Notes in Computer Science, Volume 3981, 2006, pp. 410-419.

[4]. Kumar V., Lall G. C. and Dahiya P., Performance Evaluation of MIPv6 and HMIPv6 in terms of Key Parameters, International Journal of Computer Applications 54(4):1-3, September 2012.

[5]. Tao M., Yuan H. and Wei W., Active overload prevention based adaptive MAP selection in HMIPv6 networks, Journal of Wireless Networks, Volume 20, Issue 2, February 2014, pp. 197-208.

[6]. Soliman H., Castelluccia C., El Malki K., Bellier L., Hierarchical Mobile IPv6 Mobility Management (HMIPv6), RFC 4140, 2005.

[7]. Soliman H., Castelluccia C., El Malki K., Bellier L., Hierarchical Mobile IPv6 (HMIPv6) Mobility Management, RFC 5380, 2008.

AUTHORS BIOGRAPHY

Sahar A. Al-Talib received her BSc in Electrical and Communication Engineering from the University of Mosul in 1976, and her MSc and PhD in Computer and Communications Engineering from University Putra Malaysia (UPM) in 2003 and 2006 respectively. She served at MIMOS Berhad, Technology Park Malaysia, for about 4 years as a Staff Researcher, where she worked on the infrastructure mesh network project (P000091) adopting IEEE 802.16j/e/d wireless technology. She became a lecturer at the Department of Computer and Information Engineering, Electronics Engineering, University of Mosul, Iraq, in 2011. She was the main inventor of 10 patents and has published more than 20 papers in local, regional, and international journals and conferences. Her certifications and training include CISCO CCNA, WiMAX RF Network Engineer, WiMAX CORE Network Engineer, the IBM Basic Hardware Training Course (UK), the Cii-Honeywell Bull Software Training Course on Time Sharing System and Communication Concept (France), Six Sigma White Belt, the Harvard Blended Leadership Program by Harvard Business School Publishing (USA), and Protocol Design and Development for Mesh Networks by ComNets (Germany).

Vol. 7, Issue 3, pp. 666-674

EFFICIENCY OPTIMIZATION OF VECTOR-CONTROLLED

INDUCTION MOTOR DRIVE

Hussein Sarhan

Department of Mechatronics Engineering,

Faculty of Engineering Technology, Amman, Jordan

ABSTRACT

This paper presents a new approach that minimizes total losses and optimizes the efficiency of a variable-speed, three-phase, squirrel-cage, vector-controlled induction motor drive through optimal control, based on combined loss-model control and search control. The motor power factor is used as the main control variable. Efficiency is improved by adjusting the stator current online according to the value of the power factor that corresponds to the minimum total losses at a given operating point. The drive system is simulated using Matlab SIMULINK models. A PIC microcontroller is used as the minimum-loss power factor controller. Simulation results show that the proposed approach significantly improves the efficiency and dynamic performance of the drive system under all the operating conditions considered.

KEYWORDS: Efficiency Optimization, Induction Motor Drive, Optimal Power Factor, Simulation, Vector

Control.

I. INTRODUCTION

Squirrel-cage three-phase induction motors IMs are the workhorse of industries for variable speed

applications in a wide power range. However, the torque and speed control of these motors is difficult

because of their nonlinear and complex structure. In general, there are two strategies to control these

drives: scalar control and vector control. Scalar control varies only the magnitude of the control

variable. The stator voltage can be used to control the flux, and frequency or slip can be adjusted

to control the torque. Different schemes for scalar control are used, such as: constant V/f ratio,

constant slip, and constant air-gap flux control. Scalar controlled drives have been widely used in

industry, but the inherent coupling effect (both torque and flux are functions of stator voltage or current

and frequency) gives a sluggish response and makes the system prone to instability [1-3]. To improve the

performance of scalar-controlled drives, feedback of the angular speed is used. However, it is

expensive and reduces the mechanical robustness of the drive system. Performance analysis of

scalar-controlled drives shows that scalar control can produce adequate performance in variable speed

drives where precise control is not required. These limitations of scalar control can be

overcome by implementing vector (field-oriented) control.

Vector control was introduced in 1972 to realize the characteristics of a separately-excited DC motor in

induction motor drives by decoupling the control of torque and flux in the motor. This type of control

is applicable to both induction and synchronous motors. Vector control is widely used in drive

systems requiring high dynamic and static performance. The principle of vector control is to control

independently the two Park components of the motor current, responsible for producing the torque

and flux respectively. In that way, the IM drive operates like a separately-excited DC motor drive

(where the torque and the flux are controlled by two independent orthogonal variables: the armature

and field currents, respectively) [4-8].

Vector control schemes are classified according to how the field angle is acquired. If the field angle is

calculated from stator voltages and currents, Hall sensors, or flux-sensing windings, the scheme is

known as direct vector control (DVC). The field angle can also be obtained by using rotor position

measurement and partial estimation with only machine parameters, but not any other variables, such

as voltages or currents. Using this field angle leads to a class of control schemes known as indirect

vector control (IVC) [1, 9].

From an energy point of view, it is well known that three-phase induction motors, especially the

squirrel-cage type, are responsible for most of the energy consumed by electric motors in industry.

Therefore, saving energy by increasing motor efficiency has received considerable

attention during the last few decades due to the increase in energy cost. Energy saving can be

achieved by proper motor selection and design, improvement of power supply parameters, and

use of a suitable optimal control technique [3-5, 10, 11].

Induction motor operation under rated conditions is highly efficient. However, in many applications,

when the motor works at variable speed, it operates far from the rated point, so it has higher losses and

lower efficiency. Under these circumstances, it is not possible to improve the motor efficiency by motor

design or by supply waveform shaping techniques. Therefore, a suitable control algorithm that

minimizes the motor losses is required instead.

Minimum-loss control schemes can be classified into three categories: search method, loss

model, and power factor control. The power factor control scheme has the advantage that the controller

can be stabilized easily and the motor parameter information is not required. However, analytical

generation of the optimal power factor commands remains tedious and restrictive because empirical,

trial-and-error methods are generally used [4, 5]. For this reason, search control using digital

controllers is preferable.

In this paper, a combined minimum-loss control and search control approach is used to find the power

factor, corresponding to the minimum losses in the drive system at a specified operating point. A PIC

microcontroller is used as an optimal power factor controller that adjusts the stator current

(voltage) online to achieve the maximum efficiency of the drive system.

II. EFFICIENCY OPTIMIZATION OF VECTOR-CONTROLLED DRIVE SYSTEM

The generalized block diagram of vector-controlled induction motor drive is shown in Figure 1.

Figure 1. Generalized block diagram of vector-controlled induction motor drive.

To perform vector control, the following steps are required [1]:

1. Measurement of motor phase voltages and currents.

2. Transformation of motor phase voltages and currents to the two-phase system $(\alpha, \beta)$ using the Clarke

transformation, according to the following equation:

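$$
i_{s\alpha} = k\left[i_{sa} - \tfrac{1}{2} i_{sb} - \tfrac{1}{2} i_{sc}\right], \qquad
i_{s\beta} = k\,\tfrac{\sqrt{3}}{2}\left(i_{sb} - i_{sc}\right) \tag{1}
$$

where:

$i_{sa}$ = actual stator current of the motor phase A

$i_{sb}$ = actual stator current of the motor phase B

$i_{sc}$ = actual stator current of the motor phase C

$k$ = const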

3. Calculation of rotor flux space vector magnitude and position angle through the components of

rotor flux.

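4. Transformation of stator currents to the d-q coordinate system using the Park transformation, according

to:

$$
i_{sd} = i_{s\alpha}\cos\theta_{\mathrm{field}} + i_{s\beta}\sin\theta_{\mathrm{field}}, \qquad
i_{sq} = -i_{s\alpha}\sin\theta_{\mathrm{field}} + i_{s\beta}\cos\theta_{\mathrm{field}} \tag{2}
$$

where $\theta_{\mathrm{field}}$ is the rotor flux position.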

The component $i_{sd}$ is called the direct axis component (the flux-producing component) and $i_{sq}$ is

called the quadrature axis component (the torque-producing component). They are time invariant, so flux

and torque control with them is easy.

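The values of $\sin\theta_{\mathrm{field}}$ and $\cos\theta_{\mathrm{field}}$ can be calculated by:

$$
\sin\theta_{\mathrm{field}} = \frac{\Psi_{r\beta}}{\Psi_{rd}}, \qquad
\cos\theta_{\mathrm{field}} = \frac{\Psi_{r\alpha}}{\Psi_{rd}} \tag{3}
$$

where:

$$
\Psi_{rd} = \sqrt{\Psi_{r\alpha}^{2} + \Psi_{r\beta}^{2}} \tag{4}
$$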

The rotor flux linkages can be expressed as:

$$
\Psi_{r\alpha} = L_r i_{r\alpha} + L_m i_{s\alpha}, \qquad
\Psi_{r\beta} = L_r i_{r\beta} + L_m i_{s\beta} \tag{5}
$$

5. The torque-producing ($i_{sq}$) and flux-producing ($i_{sd}$) components of the stator current are controlled separately.

6. Calculation of the output stator voltage space vector using decoupling block.

7. Transformation of the stator space vector back from the d-q coordinate system to the two-phase system fixed

to the stator using the inverse Park transformation:

$$
i_{s\alpha} = i_{sd}\cos\theta_{\mathrm{field}} - i_{sq}\sin\theta_{\mathrm{field}}, \qquad
i_{s\beta} = i_{sd}\sin\theta_{\mathrm{field}} + i_{sq}\cos\theta_{\mathrm{field}} \tag{6}
$$

8. Generation of the output 3-phase voltage using space vector modulation.
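The coordinate transformations in steps 2, 4 and 7 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the Simulink model used in the paper: the function names are hypothetical, and the scaling constant k = 2/3 (the amplitude-invariant form) is an assumption, since the text only states that k is a constant.

```python
import math

SQRT3_OVER_2 = math.sqrt(3.0) / 2.0


def clarke(i_sa, i_sb, i_sc, k=2.0 / 3.0):
    """Equation (1): phase currents -> stationary (alpha, beta) components.

    k = 2/3 is an assumed value (amplitude-invariant scaling).
    """
    i_alpha = k * (i_sa - 0.5 * i_sb - 0.5 * i_sc)
    i_beta = k * SQRT3_OVER_2 * (i_sb - i_sc)
    return i_alpha, i_beta


def flux_angle(psi_r_alpha, psi_r_beta):
    """Equations (3)-(4): rotor flux magnitude, sin and cos of the field angle."""
    psi_rd = math.hypot(psi_r_alpha, psi_r_beta)
    if psi_rd == 0.0:
        raise ValueError("rotor flux magnitude is zero; field angle is undefined")
    return psi_rd, psi_r_beta / psi_rd, psi_r_alpha / psi_rd


def park(i_alpha, i_beta, sin_t, cos_t):
    """Equation (2): (alpha, beta) -> rotating (d, q) components."""
    i_sd = i_alpha * cos_t + i_beta * sin_t
    i_sq = -i_alpha * sin_t + i_beta * cos_t
    return i_sd, i_sq


def inverse_park(i_sd, i_sq, sin_t, cos_t):
    """Equation (6): (d, q) -> stationary (alpha, beta) components."""
    i_alpha = i_sd * cos_t - i_sq * sin_t
    i_beta = i_sd * sin_t + i_sq * cos_t
    return i_alpha, i_beta
```

With k = 2/3, a balanced three-phase set of amplitude I maps to a vector of magnitude I in the (α, β) plane, and applying `park` followed by `inverse_park` with the same field angle returns the original (α, β) components.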

The developed electromagnetic torque of the motor T can be defined as:

$$
T = \frac{3}{4}\, P\, \frac{L_m}{L_r}\, \psi_{dr}\, i_{qs} \tag{7}
$$

where P is the number of poles of the motor.

From Equation (7) it is clear that the torque is proportional to the product of the rotor flux linkages

and the q-component of the stator current. This resembles the developed torque expression of the DC

motor, which is proportional to the product of the field flux linkages and the armature current. If the

rotor flux linkage is maintained constant, then the torque is simply proportional to the

torque-producing component of the stator current, as in the case of the separately excited DC machine with

armature current control, where the torque is proportional to the armature current when the field is

constant.
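As a small illustration of Equation (7), the sketch below evaluates the torque and shows the proportionality to the q-axis current at constant rotor flux. The function name and all numerical values are hypothetical and are not taken from the paper.

```python
def electromagnetic_torque(poles, l_m, l_r, psi_dr, i_qs):
    """Equation (7): T = (3/4) * P * (L_m / L_r) * psi_dr * i_qs.

    poles  : number of poles P
    l_m    : inductance L_m from Equation (5), in henries
    l_r    : inductance L_r from Equation (5), in henries
    psi_dr : rotor flux linkage, in webers
    i_qs   : q-axis (torque-producing) stator current, in amperes
    """
    if l_r <= 0.0:
        raise ValueError("l_r must be positive")
    return 0.75 * poles * (l_m / l_r) * psi_dr * i_qs


# Hypothetical operating point: flux held constant, q-axis current doubled.
t_1 = electromagnetic_torque(poles=4, l_m=0.20, l_r=0.21, psi_dr=0.9, i_qs=5.0)
t_2 = electromagnetic_torque(poles=4, l_m=0.20, l_r=0.21, psi_dr=0.9, i_qs=10.0)
```

Here `t_2` is twice `t_1`, which mirrors the DC machine analogy: with the flux fixed, torque scales linearly with the torque-producing current.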

The power factor p.f is also a function of developed torque, motor speed and rotor flux, and can be

calculated as the ratio of input power to apparent power [3-4]:

$$
p.f = \frac{v_{qs} i_{qs} + v_{ds} i_{ds}}{\sqrt{v_{qs}^{2} + v_{ds}^{2}}\,\sqrt{i_{qs}^{2} + i_{ds}^{2}}} \tag{8}
$$
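Equation (8) can be evaluated directly from the d-q voltage and current components. The following is a minimal sketch with a hypothetical function name; it is not the PIC firmware described in the paper. Any common scaling constant in the power expressions cancels in the ratio, so none is applied.

```python
import math


def power_factor(v_qs, v_ds, i_qs, i_ds):
    """Equation (8): ratio of input (active) power to apparent power in d-q terms."""
    active = v_qs * i_qs + v_ds * i_ds
    apparent = math.hypot(v_qs, v_ds) * math.hypot(i_qs, i_ds)
    if apparent == 0.0:
        raise ValueError("voltage or current vector has zero magnitude")
    return active / apparent
```

When the voltage and current vectors are aligned the function returns 1, and when they are orthogonal it returns 0, as expected for the cosine of the angle between them.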

The power factor can be used as a criterion for efficiency optimization. The optimal power factor

corresponding to minimum power losses in the drive system can be found analytically or by using