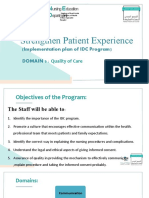

ACHIEVING EXCELLENCE

IN CLINICAL GOVERNANCE

Framework Document and Companion Guide

for the Integrated Management

of Quality, Safety and Risk In

The Malaysian Health Care System

Patient Safety Council of Malaysia & Quality in Medical Care Section

Medical Development Division Ministry of Health Malaysia

02.LayOut 12/3/10 4:55 PM Page 1

2 Achieving Excellence In Clinical Governance

This document consists of three parts:

1. Framework Document for the integrated management of quality, safety and risk in the Malaysian health care system

2. Companion Guide to support self-assessment against the Framework Document

3. Electronic Self-Assessment Tool in the form of a CD

Contents

Section A

1.0 Purpose

2.0 Background

2.1 The Objectives of the Clinical Governance Framework

2.2 Related Policy and Regulatory Considerations

3.0 A Framework for the Integrated Management of Quality, Safety & Risk

3.1 Introduction

3.2 Six Essential Underpinning Requirements

3.2.1 Communication and Consultation with Key Stakeholders

3.2.2 Clear Accountability Arrangements

3.2.3 Adequate Capacity and Capability

3.2.4 Standardized Policies, Procedures, Protocols and Guidelines

3.2.5 Monitoring and Review Arrangements

3.2.6 Assurance Arrangements

3.3 Check Questions

4.0 Six Core Processes and Programmes

4.1 Clinical Effectiveness and Audit

4.2 Involvement of Patients/Service Users and the Public/Community

4.3 Risk Management and Patient Safety

4.3.1 Risk Management Process

4.3.2 High-Priority Risk

4.3.3 Patient Safety

4.3.4 Occupational Health and Safety

4.3.5 Environmental Safety

4.3.6 Incident and Complaints Reporting/Recording, Analysis and Learning

4.4 Staffing and Staff Management

4.5 Service Improvement

4.6 Learning and Sharing Information

4.7 Check Questions

5.0 Outcomes

5.1 Key Performance Indicators (KPIs)

5.2 Check Questions

6.0 Conclusion

Section B

1. Introduction

1.1 Background

1.2 Performing a Self-Assessment Against the Framework

1.3 Electronic Self-Assessment Tool

1.3.1 Running the Tool

1.3.2 Entering Data

1.3.3 Recording Good Practice

1.3.4 Recording Actions or Quality Improvement Plans (QIPs)

1.3.5 Aggregating Information Across Departments, etc.

1.3.6 Analysing the Data

1.4 Beyond Self-Assessment I - Improving Quality, Safety and Risk Management Using the Plan-Do-Study-Act (PDCA) Improvement Model

1.5 Beyond Self-Assessment II - Improving Quality, Safety and Risk Management Using the HSE Change Model

2. Essential underpinning requirements

A Communication and Consultation with Key Stakeholders

B Clear Accountability Arrangements

C Adequate Capacity and Capability

D Standardised Policies, Procedures and Guidelines

E Monitoring and Review Arrangements

F Assurance Arrangements

3. Core processes and programmes

G Clinical Effectiveness and Audit

H Patient and Public Involvement

I Risk Management and Patient Safety

J Staffing and Staff Management

K Service Improvement

L Learning and Sharing Information

4. Outcomes

M Key Performance Indicators (KPIs)

5. Glossary of Terms

6. Frequently Asked Questions (FAQs)

Foreword

By The Director-General of Health Malaysia

and Chairman of the Patient Safety Council of Malaysia

Safety is an integral component of quality health care and one which

patients, their families as well as health care professionals value.

Patients and their families expect to receive health care that is safe as

well as effective. Thus, it is indeed apt that the safety of our healthcare

system be given paramount importance in line with the first principle of

medicine, "Primum non nocere" or "Above all, do no harm", which is an

espoused value that all health care professionals subscribe to.

Making the Malaysian health care system safer has always been one of the

key goals of the Ministry of Health and is a core element of its many Quality

improvement activities. Malaysia is also a strong supporter of the WHO's

World Alliance for Patient Safety, to which it became one of the earliest

signatories in the world, in May 2006.

The pursuit of quality and safety requires the concerted efforts of all the

major stakeholders and the building of partnerships. Developing a safe

Malaysian health care system necessitates the institutionalization of a

culture of safety within it and one of the key steps is to do away with the

prevailing blaming and finger-pointing culture, replacing it with a "just" or

non-punitive, learning culture. This culture is a necessary requirement for

the successful implementation of clinical governance.

I am pleased that the Patient Safety Council of Malaysia and the Quality in

Medical Care Section, Medical Development Division of the Ministry of

Health, through the development of smart partnerships with both the public

and private sectors as well as the WHO, have succeeded in producing this

Achieving Excellence in Clinical Governance: Framework Document and

Companion Guide for the Integrated Management of Quality, Safety and

Risk in the Malaysian Health Care System, which forms part of the formidable

arsenal needed to develop and attain good Clinical Governance.

To begin our journey towards a safer health care system, elements of the

Clinical Governance Framework should be studied, discussed and

implemented by all stakeholders. Thus, I would like to wish all of you every

success as you endeavour to develop a world class health care system that

is capable of providing safe, effective, equitably-accessed and patient-

centred services to all who require them.

Tan Sri Dato' Seri Dr. Hj. Mohd Ismail Merican

Director-General of Health Malaysia

Advisors

Tan Sri Dato' Seri Dr. Hj. Mohd Ismail Merican

Director-General of Health Malaysia and Chairman of the Patient Safety Council of Malaysia

Datuk Dr. Noor Hisham bin Abdullah

Deputy Director-General of Health (Medical), MOH

Dato' Dr. Hassan bin Abdul Rahman

Deputy Director-General of Health (Public Health), MOH

Dato' Dr. Maimunah bt. Abdul Hamid

Deputy Director-General of Health (Research and Technical Support), MOH

Dato' Dr. Hj. Azmi bin Shapie

Director of Medical Development, Medical Development Division, MOH

Members of the Patient Safety Council of Malaysia

Authors

Dr. Hjh Kalsom bt. Maskon

Public Health Physician, Quality In Medical Care Section, Medical Development Division, MOH

Dr. PAA Mohamed Nazir bin Abdul Rahman

Public Health Physician, Quality In Medical Care Section, Medical Development Division, MOH

Mr. Stuart Emslie

WHO and MOH Consultant on Patient Safety and Clinical Risk Management, United Kingdom

Dr. Nor Aishah bt. Abu Bakar

Public Health Physician (Occupational Health), Quality In Medical Care Section, Medical Development

Division, MOH

Dr. Amin Sah bin Hj. Ahmad

Public Health Physician (Hospital and Health Management), Quality in Medical Care Section, Medical

Development Division, MOH

Secretariat

Sister Rashidah Ngah

Quality In Medical Care Section, Medical Development Division, MOH

Mrs. Roshaidah Othman

Quality In Medical Care Section, Medical Development Division, MOH

1. Purpose

This document is intended to provide a broad policy direction for the adoption of Clinical Governance as

the over-arching framework for integrating all the Quality, Safety and Risk Management initiatives in the

health and health-related agencies in Malaysia, both public and private.

2. Background

The Ministry of Health Malaysia (MOH), as the lead agency for health in the country, is committed to

driving the health care sector in the provision of safe, high quality services through the implementation

of the Clinical Governance concept. Other health and health-related agencies in the public and private

sectors are actively implementing quality improvement efforts so as to improve the quality and safety of

patient care, in line with international efforts led by the World Alliance for Patient Safety, to which Malaysia

became a signatory in 2006. These activities are also ably supported by the Patient Safety Council of

Malaysia, set up by Cabinet decree in January 2003, and comprising key stakeholders of health in the

Malaysian health care system. The Patient Safety Council of Malaysia has been entrusted to lead national-

level efforts targeted at promoting and enhancing patient safety.

The Clinical Governance concept was first introduced to the National Health Service in England in the

1990s, in its attempt to improve the quality and safety of healthcare, in a systematic, integrated and

organized manner. Clinical Governance is a framework of accountability for quality and excellence in

health care in the National Health Service (NHS) of the United Kingdom. It is defined as "a framework

through which NHS organizations are accountable for continually improving the quality of their health

services and safeguarding high standards of care by creating an environment in which excellence in

clinical care will flourish". The NHS in Scotland defines Clinical Governance as "corporate

accountability for clinical performance".

Currently, the many quality improvement initiatives being implemented are conducted by separate groups

of professionals, using their preferred approaches and methods, and are not integrated.¹ The framework

described in this document provides a comprehensive and clear picture of the quality and safety

improvement programmes as well as their linkages. It also defines the roles and responsibilities of the

health care organizations involved.

The MOH's vision of a safe health care system is epitomised by the "1Care for 1Malaysia" concept, initiated

by the Malaysian Government, which focuses on people-centred health care services and gives priority to

performance, as embodied in the motto "People First, Performance Now".

"1Care is a structured national health system that is responsive and provides choice of quality health care, ensuring

universal coverage for the healthcare needs of the Malaysian population, based on solidarity and equity."

(Dato' Dr. Maimunah bt. Abd. Hamid, Deputy Director-General of Health (Research and Technical Support))

To realize this noble vision, there is thus the need for a framework for the improvement of governance in

health care as well as the health care delivery system. The role of the MOH is manifold, one of which is

the development, implementation, monitoring of performance and evaluation of the various Quality

Improvement (QI) activities (which target patient safety) in the Quality Assurance Programme (QAP) in

Government hospitals. The MOH is also actively engaging the private sector, as key stakeholders of

health care, to further strengthen their capacity and capability to improve the quality and safety of health care.

Raising and maintaining the quality and safety of health care requires commitment to continuous

improvement from everyone involved in the health care system, as epitomised by the motto "Quality and

Safety, it's Everybody's Business", and is expected to achieve the best possible health outcomes and

quality of life for the population within the resources available.

¹ Research on Evaluation of Quality Assurance Programme, Institute for Health Systems Research, 2009

The document is based on a framework that had been developed for, and is currently being implemented

in the public healthcare system in the Republic of Ireland, which is governed by the Health Service

Executive (HSE). It draws on the WHO and MOH Consultant's ten years of experience in implementing

clinical governance in public healthcare systems in the United Kingdom, Australia and Ireland. The WHO

consultant provided technical assistance in imparting practical knowledge and skills to implement an

integrated framework for the MOH Malaysia in 2009. Since 2006, the MOH has received technical support

from the WHO to develop and implement Clinical Risk Management, Incident Reporting and Root Cause

Analysis as a method of reporting, learning and improving the safety of health care delivered.

2.1 The Objectives of the Clinical Governance Framework are:

• To ensure that there is a systematic framework for the healthcare sector (public and private) for the integration of quality, safety and risk management programmes to support and drive the provision of safe, effective and high quality services

• To drive core programmes for quality, safety and risk management

• To ensure that appropriate accountability, leadership and oversight arrangements are in place to institutionalise and internalise quality and safety

It is hoped that greater synergy will be achieved through the harmonization of quality improvement

initiatives, which will contribute towards the institutionalisation of a culture of quality and professionalism

amongst health personnel at all levels.

Recognizing the multiple approaches and programmes already in place to improve quality, safety and risk

management within the Malaysian health sector, this document is not intended to be highly prescriptive.

Rather, the key requirements are set out as check questions which are provided for consideration by

service managers and clinicians in an attempt to identify any areas for improvement. A Self-Assessment

Tool is available to allow service providers to score themselves in relation to the check questions. The

Companion Guide is provided as additional guidance to help meet the requirements of the check

questions.

2.2 Related policy and regulatory considerations

The critical importance of patient safety, quality of care and management of risk generally in the

planning, provision and review of health services is increasingly being recognized. The commitment

of the MOH towards quality is emphatically stated in its vision, mission and the core elements of its

corporate culture.

Vision for Health: A nation working together for better health

The Mission of the Ministry of Health is to lead and work in partnership:

To facilitate and support the people to:

• attain fully their potential in health

• appreciate health as a valuable asset

• take individual responsibility and positive action for their health

To ensure a high quality health system that is:

• customer-centred

• equitable

• affordable

• efficient

• technologically appropriate

• environmentally adaptable

• innovative

with emphasis on:

• professionalism, caring and teamwork

• respect for human dignity

• community participation

With the launching of the MOH's National Quality Assurance Programme (QAP) in 1985, Quality in health care

became a system-wide issue, rather than solely the concern of individual professionals (through professional

excellence and self-regulation). Thus, Quality became everybody's business. Since then, all the Divisions of

the MOH have implemented Quality Improvement (QI) activities utilising the various relevant approaches, such

as the following:-

| Approach | Activities |
| --- | --- |
| Indicator approach | National Indicator Approach (NIA); Key Performance Indicators (KPI); Hospital Specific Approach (HSA) |
| Clinical Audit | Peri-operative Mortality Review (POMR); Adult Intensive Care Unit Audit, incorporating Care Bundles; Maternal and Peri-natal Mortality Review; Nursing Audit |
| External Organisation Audit | Hospital Accreditation Programme (MSQH); ISO |
| Clinical Risk Management | Incident Reporting and Learning Systems (including RCA and HFMEA); WHO Global Patient Safety Challenge; Hospital Infection Control; Occupational Safety and Health |
| Patient-Centred Services | Patient Satisfaction Surveys; Complaints Management |
| Statistical Process Control | Cumulative Summation (CUSUM) incorporated in Clinical Registries |

In May 1998, the Strategic Plan for Quality in Health (MOH/PPAK-15(QAP))², which outlined the goals,

policies and strategies for quality improvement efforts in the MOH, was developed and adopted by the

MOH. It provided:

• a framework within which decisions may be made regarding priorities and needs in addressing quality-related issues;

• the direction of the various quality improvement strategies and activities; and

• a strategic framework which was to be implemented at the various levels by all involved in pursuing quality in health care.

Thus, Quality Improvement (QI) has become official MOH policy, with the establishment of QA

committees at all levels of the organization, from the MOH level (QA Steering committee), Programme (or

Division) level, State level, to the hospital level. In addition, the Private Healthcare Facilities and Services

Act 1998 and Regulations 2006 mandate QI in private healthcare facilities for the following: National

Mortality Assessment, Incident Reporting and QI activities, thus making Quality and Safety a nation-wide

concern. This commitment was further reinforced in May 2006, when the Hon. Minister of Health ratified

Malaysia's participation in the World Alliance for Patient Safety, making Malaysia one of the earliest

countries in the world committed to supporting WHO's comprehensive programme for patient safety at

national level.

The Government of Malaysia is committed to the equitable provision of excellent services to the Malaysian

public by promoting and implementing national efforts in quality improvement (QI). Government Circulars

are regularly produced and disseminated for implementation to drive the public sector towards excellence

in service delivery as well as outcomes through the implementation of quality initiatives such as MS ISO,

Total Quality Management (TQM), Key Performance Indicators (KPI), Innovation, the Prime Minister's

Quality Awards, Star Rating, Accreditation and others. The latest drive is towards measuring performance

using Key Performance Indicators for the nation and the Ministries, in line with the achievement of set

targets in priority Key Result Areas (KRAs) for the health sector in this country.

3. A Framework for the Integrated Management of Quality, Safety and Risk

3.1 Introduction

Acknowledgements: This framework is adapted from the Health Service Executive (HSE), Ireland,

Framework Document Version 1, January 2009, "Towards Excellence in Clinical Governance: A

Framework for Integrated Quality, Safety and Risk Management Across HSE Service Providers", which is

based on the HSE Quality and Risk Standard. Questions in Section H are adapted from the Victorian

Health Safety and Quality Framework, Australia.

A training session and testing of the framework for the Malaysian context was conducted by the

WHO Consultant from 19th to 21st August 2009. Participants from various levels included the State

Health Deputy Directors (Medical), Hospital Directors, University Malaya, Universiti Kebangsaan

Malaysia, the Malaysian Society for Quality in Health (MSQH), KPJ group, MOH's Public Health

Division and the Secretariat of the Patient Safety Council of Malaysia. The assessments provided a

forum for introducing the framework and an initial assessment of the status of the health service

organisations in Malaysia, and the framework was well-received by all participants.

There are three key components to the framework³:

i. Essential underpinning requirements (equivalent to Donabedian's "structure")

ii. Core processes and programmes (equivalent to Donabedian's "process")

iii. Performance indicators (equivalent to Donabedian's "outcomes")

² The Strategic Plan for Quality in Health, MOH, 1998

³ An Introduction to Quality Assurance in Health Care. Oxford University Press. 2000. The three components are based on the three components of Avedis Donabedian's Structure, Process, Outcome Model of Quality

Figure 1 illustrates the framework for the integrated management of quality, safety and risk, adopted and

modified for use.

Figure 1 - Framework for Integrated Quality, Safety and Risk Management

These components form the basis for health care providers, through the process of self-assessment, to

determine the extent to which an integrated quality, safety and risk management system is in place in their

organizations. A total of 69 check questions relating to key aspects of the framework have been developed.

The supporting documents, the Companion Guide and the Self-Assessment Tool, modified for the Malaysian

context, can be used by health managers and clinicians to assess the extent to which an integrated framework

for quality, safety and risk management is in place within their hospital or health service. On completion of the

self-assessment process, where improvements are needed, an action plan should be developed.

Regular monitoring and review of the action plans will ensure that actions are being implemented, leading to

better outcomes.

3.2 Six Essential Underpinning Requirements (Donabedian's Structure)

Like the skeletal structure of a human being, the essential underpinning requirements must be in

place, in order to drive safe and effective service. Effective leadership and management are required

to lead the quality, safety and risk management agenda.

3.2.1 Communication and consultation with key stakeholders

Structures and strategies to facilitate effective communication and consultation with key

stakeholders must be in place within and outside the organization. In addition, stakeholder

analysis should be conducted. This is to ensure that all relevant stakeholders are

identified and that appropriate mechanisms for communicating and consulting with the

various stakeholders or stakeholder groups are put in place.

[Figure 1 labels. Essential underpinning requirements: Communication; Accountability; Capacity & Capability; Policies; Monitoring & Review; Assurance. Patient / Service Users. Core processes and programmes: Clinical Effectiveness and Audit (clinical guidelines, clinical audit); Patient/Service User and Public/Community Involvement (patient information, consumer panels, patient experience surveys); Risk Management & Patient Safety (complaints/claims/incidents, health and safety, risk management process); Staffing & Staff Management (workplace planning and recruitment, induction, continuous professional development); Service Improvement (identifying bottlenecks, reducing inefficiencies, reducing variation in key processes, e.g. discharge); Learning and Sharing Information (learning from incident reviews, learning from patient experience). Outcome (label repeated six times).]

3.2.2 Clear accountability arrangements

Accountability arrangements for quality and risk management should be clearly defined

and put in place at all levels, from front-line staff up to the organisation's most senior

accountable manager or governing body. Individual responsibilities will be set out in the

job descriptions. Accountability arrangements for committees and/or groups involved in

quality, safety and risk management with terms of reference and robust reporting

arrangements must be set. Independent groups that must work together effectively and

share resources should be linked by hierarchy, information systems, and where relevant,

common memberships.

The organization will establish a committee or group to oversee quality, safety and risk

management performance and report periodically to local senior management. To achieve

comprehensive quality, safety and risk management across all service providers, it is

recommended that, where relevant, the committee comprises members from many

disciplines.

3.2.3 Adequate capacity and capability

Capacity and capability imply adequate numbers of qualified people as well as adequate

physical and financial resources. The organisation should have the capacity and

capability to implement and monitor effective quality, safety and risk management

systems. Managers at all levels should fulfill their responsibility by demonstrating

commitment to the management of quality, safety and risk.

3.2.4 Standardised policies, procedures, protocols and guidelines

The organisation should have a system in place to facilitate the development of

standardized policies, procedures, protocols and guidelines. These guidelines should be

based on best available evidence and should be governed by a formal document control

process that includes processes to support the on-going review and change of policies

and guidelines. Staff should be provided with support and guidance on the sourcing,

appraising, and implementation of evidence-based practice and on implementing any

resulting changes in practice. Where new services are being established, the development

of policies, procedures, protocols and guidelines should be considered at the time of

commissioning.

3.2.5 Monitoring and review arrangements

Senior managers should ensure adequate monitoring and review of the systems that are

in place for quality, safety and risk management. All aspects of the framework should be

regularly monitored and reviewed in order that management can learn from any weakness

in the systems and make improvements where necessary. There is a need to develop

suitable KPIs for quality, safety and risk management and to report on selected KPIs.

3.2.6 Assurance arrangements

Hospital directors/board of directors/top level managers should ensure that they obtain

sufficient assurance on the effectiveness of the systems in place for quality, safety and

risk management to form part of their monitoring and review process. Assurances can

come from a variety of sources either within or outside the organisation. The most objective

assurances are derived from independent reviewers such as internal audit or external

accreditation bodies (e.g. MSQH/JCI). These are supplemented by non-independent

sources which include clinical audit, mortality and morbidity reviews and internal

management processes, to name a few.

3.3 Check questions

The table below contains check questions that organisations can use to gain an understanding of their

strengths and areas for improvement in relation to implementation of the underpinning requirements

outlined above. The responses to these questions can be either "yes", "no", "partial", "not applicable"

or "don't know". The "partial" responses are categorised as "low", "moderate" or "high". Where a "no"

or "partial" response is provided, an action plan or quality improvement plan (QIP) should be

developed to implement any requirements. Where the question number box is shaded, this denotes

that the response to the question may need input and aggregation of information from a number of

departments. Further information on the assessment process is provided in the Companion Guide

and also with the Self-Assessment Tool.
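The response rules described above can be sketched in code. The following Python fragment is only an illustration and is not taken from the electronic Self-Assessment Tool: the names `Response`, `Answer`, `NEEDS_QIP` and `questions_needing_qip` are hypothetical, as is the "section letter plus question number" identifier format. It records one response per check question and lists the questions for which the text calls for an action plan or QIP, namely those answered "no" or "partial". How "don't know" responses should be followed up is not stated in this section, so the sketch simply leaves them out of the list.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    """Permitted responses to a check question (labels taken from the text)."""
    YES = "yes"
    NO = "no"
    PARTIAL_LOW = "partial (low)"
    PARTIAL_MODERATE = "partial (moderate)"
    PARTIAL_HIGH = "partial (high)"
    NOT_APPLICABLE = "not applicable"
    DONT_KNOW = "don't know"


# Responses for which an action plan or QIP should be developed:
# a "no" or any of the three "partial" levels.
NEEDS_QIP = {
    Response.NO,
    Response.PARTIAL_LOW,
    Response.PARTIAL_MODERATE,
    Response.PARTIAL_HIGH,
}


@dataclass
class Answer:
    question_id: str          # hypothetical format, e.g. "A1" for section A, question 1
    response: Response
    aggregated: bool = False  # True where the question number box is shaded


def questions_needing_qip(answers):
    """Return the ids of questions whose response calls for a QIP, in input order."""
    return [a.question_id for a in answers if a.response in NEEDS_QIP]


if __name__ == "__main__":
    sample = [
        Answer("A1", Response.YES),
        Answer("A2", Response.PARTIAL_LOW),
        Answer("B1", Response.NO, aggregated=True),
    ]
    print(questions_needing_qip(sample))
```

The `aggregated` flag is carried along only so that questions needing input from several departments can be identified later; the sketch does not attempt the aggregation itself.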

Clinical Governance Self-Assessment Tool

ESSENTIAL UNDERPINNING REQUIREMENTS: CHECK QUESTIONS

A. Communication and consultation with key stakeholders with regard to Goals and Objectives

for safety

1. Has a stakeholder analysis been carried out to identify all internal and external

stakeholders relating to quality, safety and risk management?

2. Are arrangements in place to ensure that the stakeholder analysis is maintained up-to-date?

3. Is there effective communication and consultation with internal stakeholders in relation to the

purpose, objectives and working arrangements for quality, safety and risk management?

4. Are internal and, where appropriate, external stakeholders kept fully informed of progress in

achieving objectives for quality, safety and risk management?

5. Is there effective communication and consultation with external stakeholders in relation to

quality, safety and risk management?

B. Clear accountability arrangements (Roles and Responsibilities)

1. Are clearly documented accountability arrangements in place to support the hospital director to

discharge his/her responsibility for quality, safety and risk management?

2. Do the documented accountability arrangements ensure that the hospital director is fully

informed in relation to key areas of quality, safety and risk performance?

3. Within the accountability arrangements, are the roles and responsibilities played by any

committees or groups clearly described?

4. Do committee structures and reporting arrangements provide for co-ordination and integration

of quality, safety and risk activities and priorities?

C. Adequate capacity and capability

1. Do managers and clinicians at all levels demonstrate commitment to the management of

quality, safety and risk?

2. Do service planning and other business arrangements take into account the hospital's quality,

safety and risk management Goals and Priorities when developing budget and other financial

strategies?

3. Is a specified portion of the hospital's annual budget committed to achieving defined goals for

quality, safety and risk management?

4. Is there access to appropriate resources to implement effective quality, safety and risk

management systems, e.g. qualified people, physical and financial resources, access to

specialist expertise, etc.?

5. Information and training programmes: Are there structured training programmes to ensure that

all staff are provided with adequate quality, safety and risk management information, instruction

and training appropriate to their role?

D. Standardised policies, procedures, protocols and guidelines

1. Does the hospital operate a standardized document control process for all policies, procedures,

protocols and guidelines?

2. Are arrangements in place for training staff in appraising and developing policies, procedures,

protocols and guidelines and for identifying evidence-based best practice?

3. Are policies, procedures, protocols and guidelines standardized throughout the hospital and,

where appropriate, are they evidence-based?

4. Are arrangements in place to ensure that, where new services are being established, the

development of policies, procedures, protocols and guidelines is considered at the time of

commissioning?

E. Monitoring and review arrangements

1. Are all aspects of the framework described in this document regularly monitored and reviewed

in order that management can learn from any weaknesses in the systems and make

improvements, where necessary?

2. Are the results of independent and other audits used to improve the hospital's quality, safety

and risk management systems?

3. Are key performance indicators reviewed regularly to identify and correct shortfalls to drive

continuous improvement in quality, safety and risk management?

F. Assurance arrangements

1. Do the hospital director and senior management receive sufficient assurance on the systems in

place for quality, safety and risk management?

2. Do the assurances received by the hospital director and senior management form an integral

part of their on-going monitoring and review processes?

4. Six Core Processes and Programmes (Donabedian's Process)

Where appropriate, healthcare organisations should have in place the following core processes and

programmes:

4.1 Clinical effectiveness and audit

The term 'clinical effectiveness' is used in this document to encompass clinical audit, QA or HSA

studies and evidence-based practice (refer to the Companion Guide).

A structured programme, or programmes, should be in place to systematically monitor and improve

the quality of clinical care provided across all services. This should include systems to monitor

clinical effectiveness activity (including clinical audit); mechanisms to assess and implement relevant

clinical guidelines; systems to disseminate relevant information; and use of supporting information

systems.

The processes and outcomes of care should be regularly audited and should demonstrate that the

delivery of care reflects adopted guidelines and protocols. Audits should be based on agreed

selection criteria such as: high risk, cost, or volume; serious concerns arising from adverse events

or complaints; new guidelines; local or national priorities; or patient focus.

Where appropriate, and whenever possible, clinical effectiveness activities should be

patient-centred, i.e., they should take into account the whole patient journey. This requires

multi-professional working and may require collaboration across organizational boundaries.

Clinical effectiveness activities have a significant cost implication in terms of the resources required

to support projects and the opportunity cost of professionals examining and assessing their

practice. These costs need to be justified and hospitals should be able to demonstrate that the clinical

effectiveness activities that they support result in demonstrable improvements in the standards of

care and represent efficient use of resources.

4.2 Involvement of patients/service users and the public/community

Mechanisms should be in place to involve patients/service users and the public/communities in the

planning, development, delivery and evaluation of health services. These mechanisms should be

evaluated and the results of this involvement used to improve the manner in which services are

configured or delivered. This should include a systematic process to ensure that hospitals respond

to, and learn from all forms of feedback.

The involvement of service users and the community should be facilitated at all levels of the

hospital, including individual care episodes, information development, service planning, staff and

service user education and quality review and improvement.

4.3 Risk management and patient safety

4.3.1 The risk management process

Risks of all kinds should be systematically identified, assessed and managed in order of

priority, in accordance with international standard ISO 31000 Risk management:

Principles and guidelines on implementation. 'Risks of all kinds' means that risks need

to be managed across the board, including risk to the safety and quality of patient care;

occupational health, safety and welfare risk; environmental and fire safety risk; risks to

business continuity; and so on. The principal vehicle for managing and communicating risk

at all levels is the risk register, which allows a repository of risk information to be

maintained.
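
As a rough illustration of the risk register idea, the following Python sketch keeps a small repository of risks and lists them in order of priority. The 1 to 5 likelihood and impact scales, the likelihood-times-impact rating and all field names are assumptions made for this example; neither this framework nor ISO 31000 prescribes them.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical risk register."""
    description: str
    category: str      # e.g. "patient safety", "fire safety"
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (negligible) to 5 (extreme)

    @property
    def rating(self) -> int:
        # Assumed rating rule: likelihood multiplied by impact.
        return self.likelihood * self.impact


def by_priority(register: list[Risk]) -> list[Risk]:
    """Return the risks with the highest rating first."""
    return sorted(register, key=lambda r: r.rating, reverse=True)


# Illustrative entries only; not taken from any real register.
register = [
    Risk("Medication error on ward", "patient safety", 3, 4),
    Risk("Blocked fire exit", "fire safety", 2, 5),
    Risk("Manual handling injury", "occupational safety", 4, 2),
]

for risk in by_priority(register):
    print(risk.rating, risk.category, "-", risk.description)
```

A hospital would normally substitute its own rating scales and add fields such as risk owner, existing controls and review date.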

4.3.2 Knowing high-priority risks

Notwithstanding the need to systematically identify, assess and manage risks of all kinds,

service providers should be able to demonstrate that they have systems in place to manage

known high-priority risk issues such as:

- Medication management

- Slips, trips and falls

- Violence and aggression

- Infection control

- Haemovigilance

- Utility contingency

- Medical devices

- Waste management

- Moving and handling

- Suicide and deliberate self-harm

- Patient absconding

- Management of patient information

Pro-active methods such as Healthcare Failure Mode and Effects Analysis (HFMEA) can be

used to identify and control critical areas in the process of health care, to help ensure their

safety. Reactive methods such as Root Cause Analysis (or Systems analysis) can also be

utilized to ensure that future adverse events or near-misses are not repeated.

4.3.3 Patient safety

Internationally, patient safety is now recognized as a major concern which requires a specific

management focus. An on-going programme of patient safety improvement should

therefore be in operation. All risks to patient safety should be identified, assessed and

managed, in line with implementing the risk management process set out above.

4.3.4 Occupational safety, health and welfare

All staff-related occupational safety, health and welfare risks should be identified, assessed

and managed, in line with implementing the risk management process set out above. The

Occupational Safety and Health Act 1994 and its Regulations clearly state the responsibilities of

employers and employees with regard to the safety, health and welfare of workers and the

workplace. Health care facilities need to comply

with the Act and Regulations. This includes the establishment and function of a Safety and

Health Committee in an organisation which has forty (40) or more workers.

4.3.5 Environmental and fire safety

All environmental and fire safety risks should be identified, assessed and managed, in line

with implementing the risk management process set out above. Appropriate systems and

processes should be in place to ensure that environmental and fire risks are minimised

through meeting legislative and mandatory requirements.

4.3.6 Incidents and complaints reporting/recording, analysis and learning

Reporting, recording, analyzing and learning from incidents and complaints are key

components of a successful reactive approach to risk management. All incidents and

complaints should be properly recorded; reported to management; managed in accordance

with an agreed policy; rated according to impact; and reviewed (through RCA) where appropriate

to determine contributory factors, root causes and any actions required. Incidents/

complaints should be subjected to periodic aggregate reviews to identify trends and further

opportunities for learning, risk reduction and quality improvement.
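
A periodic aggregate review can be as simple as counting recorded incidents by category and impact rating. The Python sketch below is a minimal illustration; the record layout, the category names and the impact labels are all hypothetical.

```python
from collections import Counter

# Hypothetical incident records: (category, impact rating).
incidents = [
    ("medication", "moderate"),
    ("fall", "minor"),
    ("medication", "major"),
    ("fall", "minor"),
    ("medication", "minor"),
]


def aggregate(records):
    """Count incidents per category and per (category, impact) pair."""
    per_category = Counter(category for category, _ in records)
    per_pair = Counter(records)
    return per_category, per_pair


per_category, per_pair = aggregate(incidents)

# most_common() lists the most frequent category first.
for category, count in per_category.most_common():
    print(category, count)
```

Comparing these counts between review periods gives a first indication of trends that merit closer analysis.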

4.4 Staffing and staff management

Systems should be in place to ensure appropriate work-force planning, induction, and training and

development for staff appropriate to their roles and responsibilities. Continuing learning and

development programmes aimed at meeting the development needs of staff and the service needs

of the hospital should be in place.

4.5 Service improvement

Notwithstanding the core processes and programmes outlined above, hospitals should ensure that

there is a structured programme in place to support continuous quality improvement across all

services. This requires the identification of quality priorities for the hospital, e.g. through the

hospital-level QA committees; adopting relevant approaches to Quality Improvement; and utilizing

appropriate quality tools to secure demonstrable benefits for patients.

Hospitals should participate in relevant external Quality Assurance programmes, such as the MSQH

Hospital Accreditation Programme, Joint Commission International, ISO, NATA Australia etc., where

available. This will assist them in implementing a comprehensive quality improvement programme

incorporating externally-recognised standards as well as internally-led initiatives.

4.6 Learning and sharing information

It is essential that all hospitals develop a learning culture and that effective learning and sharing

processes are developed to spread good practice and generally educate/inform others. The pursuit

of continuous improvement in quality, safety and risk management is crucially dependent on

learning from experience and on sharing information about good practice. This requires the

establishment and maintenance of effective processes for learning and for sharing good practice in

relation to quality, safety and risk management.

Examples of good practice can be identified by front-line staff or by independent assessors. In some

healthcare organisations, a library of good practice can be found, for example, on the organisation's

intranet and this can be shared with other organizations. Some organisations establish regular

learning and sharing fora where staff can bring examples of good practice for discussion.

Newsletters are also a good means of disseminating information for learning and sharing. In

addition, good practice can be shared through third party organisations, e.g. quality accreditation

bodies such as the Malaysian Society for Quality in Health (MSQH).

4.7 Check questions

The table below contains check questions that can be utilized by hospitals to gain an understanding

of their strengths and areas for improvement in relation to the implementation of the core processes

and programmes outlined above. The responses to these questions can be either 'yes', 'no', 'partial',

'not applicable' or 'don't know'. The 'partial' responses are categorized as low, moderate or high.

Where a 'no' or 'partial' response is provided, an action plan or quality improvement plan (QIP)

should be developed to implement any requirements. Where the question number box is shaded,

this denotes that the response requires the combination of information from a number of

departments, service areas, etc. Further information on the assessment process is provided in the

Companion Guide and also with the Electronic Self Assessment Tool.

CORE PROCESSES AND PROGRAMMES : CHECK QUESTIONS

G Clinical effectiveness and audit

1. Is a structured programme in place to systematically monitor and improve the quality of clinical

care provided across all services?

2. Are arrangements in place to monitor clinical effectiveness activity, including clinical audit?

3. Is the implementation of evidence-based practice through use of recognized standards,

guidelines and protocols promoted?

4. Are information systems being properly exploited to support clinical effectiveness activity?

5. Are clinical audits based on agreed selection criteria (e.g. high risk, cost or volume; serious

concerns arising from adverse events or complaints; new guidelines; local or national priorities; or

patient focus)?

6. Is there evidence that clinical effectiveness activities result in changes in clinical practice and

improvements in the standards of care?

H Service user and Community Involvement

(Questions are adapted from the Victorian Health Safety and Quality Framework, Australia)

1. Is patient/ service user and public feedback (including feedback on actual patient experience)

regularly sought and integrated into quality, safety and risk management improvement activities?

2. Is sufficient information and opportunity provided for patients/service users to meaningfully

participate in their own care?

3. Are patients/service users and the public involved in the development of patient information?

4. Are arrangements in place to train and support patients/service users, staff and the public involved

in the patient and public involvement process?

5. Are patients/service users and the public invited to assist in planning new services?

I Risk management and patient safety

1. Are risks of all kinds systematically identified and assessed?

2. Are risks of all kinds managed in order of priority?

3. Are risk registers used for the purpose of managing and communicating risk at all levels?

4. Are arrangements in place to manage known high priority risk issues?

5. Are staff-related occupational safety, health and welfare risks identified, assessed and managed?

Are arrangements in place to ensure the management of occupational health, safety and welfare?

6. Are environmental and fire safety risks identified, assessed and managed?

Are arrangements in place to ensure that environmental and fire risks are minimized through

meeting legislative and mandatory requirements?

7. Is an on-going programme of patient safety improvement in operation?

8. Are arrangements in place to ensure that medical device alerts/safety notices are circulated to

all relevant staff and are acted on?

9. Are incidents properly recorded and reported to management?

10. Are incidents managed in accordance with an agreed policy?

11. Are incidents rated according to impact and reviewed, where appropriate, to determine

contributory factors, root causes and any actions required?

12. Are incidents subjected to periodic aggregate reviews to identify trends and further opportunities

for learning, quality and safety improvement, and risk reduction?

13. Are complaints, comments and appeals properly recorded and reported to management?

14. Are complaints managed in accordance with an agreed policy?

15. Are complaints rated according to impact and reviewed, where appropriate, to determine

contributory factors, root cause and any action required?

16. Are complaints and comments subjected to periodic aggregate reviews to identify trends and

further opportunities for learning, quality and safety improvement, and risk reduction?

17. Where appropriate, are all claims recorded and analysed to identify opportunities for learning,

quality and safety improvement, and risk reduction?

J Staffing and staff management

1. Are arrangements in place to ensure appropriate workforce planning?

2. Are arrangements in place to ensure appropriate recruitment, induction and training &

development for staff appropriate to their roles and responsibilities?

3. Do the arrangements set out in Questions 1 & 2 ensure compliance with related Malaysian

employment registration?

4. Are continuing learning and development programmes in place and aimed at meeting the

development needs of staff and services?

5. Are robust pre-employment checks carried out in line with the requirements set out in this framework?

6. Are arrangements in place to identify and deal with poor professional performance?

K Service Improvement

1. Are quality, safety and risk management goals clear, communicated effectively throughout the

hospital and reflected in relevant service planning processes?

2. Do local quality, safety and risk management plans take account of identified national /

international priorities?

3. Does the hospital participate in relevant external accreditation programmes?

4. Do quality improvement activities utilize a range of quality improvement tools to assist with

assessing and diagnosing issues, identifying remedies and measuring improvement?

L Learning and sharing information

1. Does the hospital routinely learn from patient experience?

2. Does the hospital routinely learn from incidents occurring within the hospital and elsewhere?

3. Does the hospital regularly communicate to patients, staff and other relevant stakeholders

improvements that have been made as a consequence of learning from patient experience and

incidents?

4. Does the hospital share information and learning about serious incidents with other hospitals, the

State Health Department or the Ministry of Health headquarters?

5. Are arrangements in place for learning and for sharing information on good practice in relation to

quality, safety and risk management?

5. Outcomes

5.1 Key Performance Indicators (KPIs)

The ultimate test of effective systems for quality, safety and risk management is the extent to which

they achieve improvements in outcomes or results for patients, service users and other

stakeholders. Demonstration of improvements in quality, safety and risk management requires the

definition of relevant key performance indicators (KPIs). Hospitals should take a systematic

approach to identifying a range of KPIs relevant to them.

In addition to locally-developed KPIs, all MOH hospitals should monitor their self-assessed

percentage compliance with the integrated quality, safety and risk management framework. This KPI

can be determined by using the electronic self-assessment tool accompanying this document.

Effective management of quality, safety and risk should result in demonstrable improvements in

KPIs. Further information on KPIs is contained in the Companion Guide.

5.2 Check Questions

The table below contains check questions that can be utilised by hospitals to gain an understanding

of their strengths and areas for improvement in relation to achieving the required outcomes from an

integrated quality, safety and risk management system. The responses to these questions can be

either 'yes', 'no', 'partial', 'not applicable', or 'don't know'. The 'partial' responses are categorized

as low, moderate or high. Where a 'no' or 'partial' response is provided, an action plan or quality

improvement plan (QIP) should be developed to implement any requirements. Where the question

number box is shaded, this denotes that the response to the question may need input from a number

of departments, service areas, etc. Further information on the assessment process is provided in the

Companion Guide and also with the Electronic Self Assessment Tool.

Outcomes: Check questions

M Key Performance Indicators (KPIs)

1. Have local KPIs been developed for quality, safety and risk management?

2. Are the KPIs monitored as part of on-going quality, safety and risk management

improvement activities?

3. Do the KPIs demonstrate that there is on-going improvement in quality, safety and

risk management?

6. Conclusion

Every health care organisation is responsible and accountable for improving the quality of care provided

and sustaining high standards of care. Adoption of the Clinical Governance Framework will be a useful

guide for healthcare providers to develop and implement the various quality, safety and risk management

systems in the health sector in Malaysia and, it is hoped, result in excellence in clinical performance as well

as a culture of safety, making the noble aim of 'People First, Performance Now' a

realisable goal in the near future.

1. Research on Evaluation of Quality Assurance Programme, Institute for Health Systems

Research, 2009

2. The Strategic Plan for Quality in Health, MOH, 1998

3. An Introduction to Quality Assurance in Health Care. Oxford University Press. 2000. The three

components are based on the three components of Avedis Donabedian's Structure, Process, Outcome

Model of Quality

Excellence In Clinical Governance

COMPANION GUIDE

To support self-assessment against the

Framework Document

1. Introduction

1.1 Background

This Companion Guide provides managers and health care professionals with additional information

to self-assess the compliance of their organisational units with the various check questions

contained in the Framework Document. There is information contained in the Guide for each check

question, including, where appropriate, brief additional guidance, examples of verification and

pointers to web-based and other resources. Service providers are strongly encouraged to submit

their own examples of guidance, verification and resources for sharing with other providers through

updated versions of this Companion Guide.

This Guide is based, in part, on practical insights gained, and feedback obtained whilst undertaking

2 pilots of the draft Framework document for the Health Service Executive (HSE) of Ireland as well

as the MOH workshops on Clinical Governance in 2008 and 2009. Based on the feedback obtained,

the following comprise potential benefits of implementing the quality, safety and risk management

framework:

- structure & standardisation nationally

- inefficiencies & adverse events identified & addressed

- provides a framework for planning services and prioritising resources

- enables clear understanding of accountability & responsibility

- provides a structure to share good practice

- can help improve patient safety

The feedback also provided a list of potential concerns that need to be addressed, which can

jeopardise the successful implementation of the framework, including:

- Will this be just a paper exercise?

- Visibility of risk but no resources to correct

- Increased workload, with no extra resources

- Finger-pointing: fear of the 'blame game'

- How to ensure buy-in at all levels?

- Current climate: poor staff morale

- How do we get senior medical staff involved?

In the current climate, it is fully appreciated that there will be challenges for some in fully

implementing this framework, a journey that may take 3-5 years. Consequently, a key concern

during self-assessment and implementation is that, wherever possible, providers who identify what

they believe to be examples of good practice in quality, safety and risk management within their own

organisations should share these with other provider units for learning and improvement purposes.

As Scally and Donaldson proposed for the National Health Service in England, it should be possible

to spread good practice in order to help others improve and, in so doing, shift the mean of quality

performance across all aspects of service provision (Figure 1).

Figure 1 Spreading good practice and shifting the mean quality performance

Note that the examples of verification provided in this document should not be considered as THE

check-list for compliance or as an exhaustive list of examples. It is recommended that you draw up

your own check-list (of verification criteria), based on this guidance, to suit your own local context.

It is hoped that verification criteria and other information can be shared, for the benefit of all service

providers. In the spirit of continuous improvement, verification criteria contained within this

Companion Guide will be updated based on feedback received.

It should also be noted that whilst this Guide aims to provide additional information to managers and

clinicians in support of their self-assessment exercise against the Framework Document, it cannot

replace obtaining access to expert advice and assistance on quality, safety and risk management

matters. Just as in medicine, there is much in the field of healthcare quality, safety and risk

management that is necessarily subjective and dependent on local factors. This Companion Guide

should be updated regularly in response to suggestions and identified good practices across

Malaysian providers after they have conducted self-assessment studies against the Framework

Document.

1.2 Performing a self-assessment against the Framework

The Framework Document and the Companion Guide, taken together, are tools to help promote

change and build a culture of quality, safety and risk management across Malaysian health care

providers. With reference to this document and the main Framework Document, managers and

clinicians should undertake a reasonable (but not complete) assessment of the extent to which a

suitable framework is in place within their hospital or service. A total of 69 check questions relating

to key aspects of the framework are contained in this document. They are not exhaustive, i.e. there

can be other questions that are relevant but have not been included in the list of 69 check questions.

Responses to these questions can either be:

YES, NO, PARTIAL (low, moderate or high), NOT APPLICABLE or DON'T KNOW.

Where a 'no', 'partial' or 'don't know' response is provided, either an action plan or Quality

Improvement Plan (QIP) should be developed to address these shortfalls. Proper monitoring and

review of the action plans and/or QIPs will ensure that actions are carried out, leading ultimately to

better outcomes for patients and their significant others.

The initial, or baseline, assessment should represent an honest and searching analysis of the provider

organisation's strengths and areas for improvement in relation to arrangements in place for quality,

safety and risk management. At all times, when considering the check questions, those doing the

assessment should consider carefully the extent to which arrangements are in place and working

effectively. This Companion Guide can assist in this regard. In addition, an electronic assessment

tool is provided to enable self-assessment to be carried out in relation to the check questions, and

this is outlined below. In preparing the baseline assessment, it is important to bring together all key

individuals who can contribute to the assessment process. They should be familiar with the

Framework Document and have an understanding of the kinds of information that will be required to

complete the assessment. Given the right people and suitable preparation, a reasonable baseline

assessment can be produced within a fairly short space of time.

During this time, the individuals participating in the process will, as a group:

1. Briefly review each check question and provide a consensus view of the level of compliance

across the organisation. Give a 'Don't know' response if they cannot answer the question.

2. Identify any particular strengths in relation to the question which could lead to examples of

good practice that could be shared with others. Detailed information on these can always be

gathered as part of a subsequent exercise.

3. Identify weaknesses that they may have in relation to the question that will lead to an action

plan or Quality Improvement Plan (QIP). Again, detailed information on these can always be

gathered as part of a subsequent exercise.

The assessment should draw, where appropriate, on the results of independent audits and the

perspectives of a range of stakeholders. It is recognised that there are aspects to the questions

contained in this document that are subjective and depend on managers' detailed knowledge of their

local context together with an understanding of quality, safety and risk management.

Note that the issue here is NOT about how much your organisation scores in terms of compliance

to the Framework. What is important is that action is taken to rectify weaknesses in quality, safety

and risk management and, over time, there is improvement in compliance against the framework as

demonstrated by improvements in compliance scores. Healthcare quality, safety and risk

management are in a constant state of flux and standards are improving all the time. Thus, even

when a 'Yes' response is given for any check question, which indicates, essentially, 100%

compliance in relation to the issue addressed by the question, this does not mean that we should be

complacent and not try to improve further. This is because this year's 100% compliance might, next

year, be rather less than 100%. The emphasis is on continual improvement.

1.3 Electronic self-assessment tool

An electronic self-assessment tool containing the check questions is available, which can be used

to determine compliance scores as key indicators of performance against the questions and the

overall framework for quality, safety and risk management.

1.3.1 Running the tool

Double-click on QSRMFrameworkScoring_V1.3_Feb_2009 to run the tool, which is an

Excel spreadsheet. You will see the following introductory screen (Figure 2), which

contains basic instructions on how to operate the tool. Note that there are several

worksheets listed at the bottom covering data entry, good practice, actions or QIPs,

aggregation and analysis. These are outlined in more detail below:

Figure 2 Introductory screen

1.3.2 Entering data

Click on the DATA ENTRY worksheet tab at the bottom of the screen. The following

screen appears:

Note: A demonstration version of the spreadsheet tool is also provided, which is pre-populated with responses to the various questions so that you can get a

feel for the analytical capabilities of the tool. The demonstration version is named QSRMFrameworkScoring_V1.3_Feb_2009_DEMO. Some of the

screenshots in this document are taken from the demonstration version of the electronic self-assessment tool.

Figure 3 Data entry screen

The check questions outlined in the quality, safety and risk management framework document have

been entered for scoring and analysis purposes. Each question is assigned a 'Level', which is:

1 (if the question relates to underpinning requirements), or

2 (if the question relates to core processes), or

3 (if the question relates to outcomes).

Run your mouse cursor over the Question boxes with a small red triangle in the top right corner to

reveal each question. You can enter a response, in the form of the number 1, against each question.

In terms of scoring, there are five possible question responses: yes, high partial (HP), moderate

partial (MP), low partial (LP) and no. The tool automatically assigns the following scores to your

response: Yes=100%, HP=80%, MP=50%, LP=20% and No=0%.
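
The score assignment can be pictured as a simple lookup. The Python sketch below uses the percentages quoted above with the abbreviations HP, MP and LP; returning no score for 'N/A' and 'D/K' is an assumption made for illustration, since the text does not say how the spreadsheet treats them here.

```python
# Percentages quoted in the text; the dictionary itself is illustrative.
SCORES = {"Yes": 100, "HP": 80, "MP": 50, "LP": 20, "No": 0}


def score(response: str):
    """Return the percentage score for a response code.

    Assumption: 'N/A' and 'D/K' carry no score and return None.
    """
    if response in ("N/A", "D/K"):
        return None
    return SCORES[response]


for code in ("Yes", "HP", "MP", "LP", "No", "N/A"):
    print(code, score(code))
```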

One way to think about a high partial response is to consider it a 'yes, but...', i.e. you meet many of

the requirements of the question, but are not quite there yet. Similarly, a low partial can be thought

of as a 'no, but...', i.e. there is little in place but you can point to evidence of some aspects of

compliance.

You must enter ONE response for each question. This can include a 'not applicable' (N/A) or a 'don't

know' (D/K) response.

If the CHECK box is green, then you HAVE entered a response.

If the CHECK box is white, you have NOT entered a response.

If the CHECK box is red, you have entered too many responses. Make sure you enter ONLY ONE

response!

If the ACTION box is coloured red, this flags up that you have not scored 100% on the question

and, therefore, action(s) or a Quality Improvement Plan (QIP) may be needed.
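
The CHECK and ACTION rules above can be sketched in Python as follows. This is not the spreadsheet's actual formula: the row layout (a dictionary of response codes to the entered value) is hypothetical, and treating 'D/K' as needing action while 'N/A' does not is an assumption.

```python
RESPONSES = ("Yes", "HP", "MP", "LP", "No", "N/A", "D/K")


def check_colour(row: dict) -> str:
    """Mimic the CHECK box by counting response cells that contain a 1."""
    entered = sum(1 for code in RESPONSES if row.get(code) == 1)
    if entered == 0:
        return "white"   # no response entered
    if entered == 1:
        return "green"   # exactly one response entered
    return "red"         # too many responses entered


def needs_action(row: dict) -> bool:
    """Mimic the ACTION box for a validly answered question."""
    if check_colour(row) != "green":
        return False
    # Assumption: only 'Yes' and 'N/A' need no action or QIP.
    return not (row.get("Yes") == 1 or row.get("N/A") == 1)


print(check_colour({"HP": 1}), needs_action({"HP": 1}))
```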

The COUNT line simply counts the number of each type of response and this is then converted to

a Percentage response immediately below. Thus you can immediately get a feel for the response

profile in relation to the questions comprising the element.
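
The COUNT and Percentage lines amount to a frequency table. A minimal Python sketch, assuming the responses for one element are held as a list of response codes (a hypothetical layout, not the spreadsheet's own):

```python
from collections import Counter


def response_profile(responses):
    """Return (counts, percentages) for a list of response codes."""
    counts = Counter(responses)
    total = len(responses)
    # Each percentage is the share of all responses with that code.
    percentages = {code: 100 * n / total for code, n in counts.items()}
    return counts, percentages


counts, percentages = response_profile(["Yes", "Yes", "HP", "No"])
print(counts["Yes"], percentages["Yes"])
```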

1.3.3 Recording good practice

Click on the GOOD PRACTICE worksheet tab. The following screen appears:

Figure 4 Good practice screen

The GOOD PRACTICE worksheet allows you to build a simple list of what you consider

to be good practices in your organisation. These will be determined from the strengths

you identify as part of your self-assessment against the framework. You can then share

this information, and your scoring information, with other organisations to build a

learning, sharing and benchmarking culture. Over time, this will help you improve quality

and safety and reduce risk.

1.3.4 Recording actions or Quality Improvement Plans (QIPs)

Click on the ACTIONS or QIPs worksheet tab. The following screen appears.

The ELEMENT SCORE (%) gives the overall score for the element, taking account of any not

applicable questions.

Note that scores are based on professional judgment made in relation to responding to the various

questions in the DATA ENTRY worksheet. Scoring is relative and not absolute. The objective is to

provide a profile, not to suggest precision.
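
The guide does not give the formula behind the ELEMENT SCORE (%). One plausible reading is a simple average of the question scores with not applicable questions left out. The Python sketch below works under that assumption; scoring a D/K response as 0 is a further assumption, and the function name is hypothetical.

```python
# Question scores in percent. Scoring D/K as 0 is an assumption: the guide
# only says that not applicable questions are taken into account.
SCORES = {"Yes": 100, "HP": 80, "MP": 50, "LP": 20, "No": 0, "D/K": 0}


def element_score(responses):
    """Average the scores for one element, leaving out N/A questions.

    Returns None when every question in the element is N/A.
    """
    scored = [SCORES[r] for r in responses if r != "N/A"]
    if not scored:
        return None
    return sum(scored) / len(scored)


# Four scored questions and one N/A: (100 + 80 + 50 + 0) / 4
print(element_score(["Yes", "HP", "N/A", "MP", "No"]))  # prints: 57.5
```

As the guide notes, such a score gives a profile rather than a precise measurement.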

Figure 5 Actions or quality improvement plans screen

02.LayOut 12/3/10 4:55 PM Page 28

Achieving Excellence In Clinical Governance 29

You can type in the relevant details under the various headings to build a comprehensive

action plan in relation to compliance with the quality, safety and risk management

framework. Alternatively, you can use your own local action planning approach.

1.3.5 Aggregating data across departments, service areas, etc.

Should you need to aggregate data for individual questions across departments, service

areas, etc. to establish an overall response to a question, click on the AGGREGATION

worksheet. You will see the following screen:

Figure 6 Aggregation matrix screen

The quality, safety and risk management framework set out in the framework document is applicable

at an organisational level. An organisation is defined as a collection of services, departments

and/or functions under the actual or assumed overall direction and control of a senior management

team or governing body. In practical terms, this definition is intended to cover public and private

hospitals and other health care organisations in Malaysia. There is a range of services, departments

and/or functions that aggregate up to provide a picture of the whole organisation. In a hospital you

would have various departments or services such as Accident and Emergency, Cardiology, General

Surgery, Paediatrics, Radiology, and so on. Similarly, in a Public Health Department of the MOH,

you would have various services such as primary care services, family health etc.

Many of the framework check questions may require aggregation across the organisation to

determine the overall question response (i.e. yes, high partial, moderate partial, low partial or no).

With reference to the questions contained within the Framework Document, and reiterated in this

Companion Guide, a SHADED QUESTION NUMBER BOX INDICATES THAT THE QUESTION

REQUIRES POSSIBLE AGGREGATION ACROSS THE ORGANISATION. It is up to senior

organisational managers to collect and collate, where appropriate, sufficient information at lower

levels within the organisation in order that a judgement can be made about the level of

organisational compliance with each framework check question.

As an example, consider question A.3 - "Is there effective communication and consultation with

internal stakeholders in relation to the purpose, objectives and working arrangements for quality,

safety and risk management?" Here you would ensure that all internal (i.e. within the organisation)

stakeholders had been identified (from question A.1) and that there was documented evidence of

communication and consultation on purpose, objectives and working arrangements for quality,

safety and risk management with each service and other stakeholder groups (e.g. finance

department, infection control, etc.). In looking at the evidence, ask yourself the question "Does

communication and consultation appear to be working effectively?" You might have to ask specific

questions of a number of people representing different internal stakeholder groups in order to gain

a better picture of communication and consultation effectiveness. In doing this work, you might

deduce that there appears to be evidence of compliance in around half of all services/ departments,

and limited or no compliance in the remainder. Given the compliance rating options of no, low

partial, moderate partial, high partial and full compliance, you would select moderate partial as your

level of compliance and produce an action plan accordingly.

It is helpful to produce a matrix of relevant questions against various services, departments, etc.

so that you can identify compliance, using the yes, high partial, moderate partial, low partial and

no response approach, for each relevant question against each service. Figure 7 shows a simple

illustrative example using the aggregation matrix contained in the Electronic Self-Assessment Tool.

It can be seen that for each of the departments listed a numerical response has been provided for

each question that identifies the degree of compliance with the question within the department. This

numerical response is based on the Yes=100%, H=80%, M=50%, L=20% and No=0% approach, i.e.

100 is entered for a Yes response, 80 for a high partial response, and so on. When the response data

has been entered for each department/question combination, the overall question response (Yes, HP,

MP, LP, or No) is presented at the bottom of the matrix. This is used to determine the overall

response to the question on the DATA ENTRY worksheet (see section 1.3.2, above).
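
The guide does not state the rule the tool uses to turn the department scores into an overall question response. One reading that is consistent with the worked example above (compliance in around half of departments gives moderate partial) is to average the department scores and pick the nearest response band. The Python sketch below works under that assumption; the function name is hypothetical.

```python
# Response bands in ascending order of score (percent).
SCORES = {"No": 0, "LP": 20, "MP": 50, "HP": 80, "Yes": 100}


def overall_response(department_scores):
    """Map the mean of the department scores to the nearest response band.

    Assumption: nearest-band rounding. When the mean is exactly halfway
    between two bands, the lower band is returned.
    """
    if not department_scores:
        raise ValueError("at least one department score is required")
    mean = sum(department_scores) / len(department_scores)
    # min() keeps the first band it meets on a tie, i.e. the lower one.
    return min(SCORES, key=lambda label: abs(SCORES[label] - mean))


# Half of the departments fully compliant, half not compliant at all.
print(overall_response([100, 100, 0, 0]))  # prints: MP
```

The result is a starting point for the judgement entered on the DATA ENTRY worksheet, not a substitute for it.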

Figure 7 Specimen aggregation matrix

1.3.6 Analysing the data

Click on the ANALYSIS worksheet tab. A screen similar to Figure 8, overleaf, appears (this

particular screen shows that some data has been entered). This shows a table containing a

summary of responses to the questions in each element of the framework, together with the

element scores.

If you scroll down the worksheet you will find three graphical analysis presentations. Figure 9

overleaf shows a bar chart containing element scores. Figure 10 shows a pie chart containing

a breakdown of responses to self-assessment questions. Figure 11 shows a bar chart

containing a Level analysis depicting summary scores for underpinning requirements (level

1), core processes and programmes (level 2) and outcomes (level 3).

You can highlight and copy any of the above analysis options using standard Windows (TM)

copy facilities and paste them into, for example, a WORD document for reporting purposes.

You can also print them directly from the Electronic Self-Assessment Tool to a printer.

Figure 8 Analysis: Table of element scores

Figure 9 Analysis: Bar chart showing element scores (%)

Figure 10 Analysis: Breakdown of responses to self-assessment questions

Figure 11 Analysis: Level analysis depicting score for underpinning requirements (level 1),

core processes and programmes (level 2) and outcomes (level 3)

1.4 Beyond self-assessment I - Improving quality, safety and risk management using the Plan-Do-Study-Act (PDSA) improvement model

The PDSA improvement model (Figure 12) is widely used in healthcare internationally and can be

usefully applied in the context of the Quality, Safety and Risk Management Framework to help

identify, implement and evaluate improvements. Further information on the practical application of

the PDSA model in healthcare can be found on the website of the Institute for Healthcare

Improvement (IHI) at www.ihi.org/IHI/Topics/ImprovementMethod/HowToImprove/

Figure 12 The PDSA model

1.5 Beyond self-assessment II - Improving quality, safety and risk management using the HSE Change Model

A very useful publication titled "Improving Our Services: A Users' Guide to Managing Change in the

Health Service Executive" was recently produced by the HSE of Ireland. It sets out a

comprehensive change model for improving services based on extensive research (Figure 13).

A summary of the guide can be downloaded at:

www.hse.ie/eng/Publications/Human_Resources/Improving_Our_Services_Summary.pdf

The full guide can be downloaded at:

www.hse.ie/eng/Publications/Human_Resources/Improving_Our_Services.pdf

Figure 13 The HSE Change Model

2. Essential underpinning requirements (Donabedian's Structure)

A COMMUNICATION AND CONSULTATION WITH KEY STAKEHOLDERS with regard to goals

and objectives for safety

NB Shaded number box indicates question requires possible aggregation across the

organisation.

1. Has a stakeholder analysis been carried out to identify all internal and external stakeholders

relating to quality, safety and risk management?

GUIDANCE

A stakeholder analysis should be conducted to ensure that:

all appropriate internal and external stakeholders have been identified and

appropriate mechanisms have been defined for communicating and consulting with the

various stakeholders or stakeholder groups (see questions A4 and A5).

A formal stakeholder analysis may not be necessary if there is sufficient evidence that there is a clear

understanding of who the key stakeholders are. Stakeholders are likely to have been identified in a

range of documentation (See below). However, it is considered good practice to undertake and

properly document a formal stakeholder analysis. A specimen stakeholder analysis (for illustration

only) is given below.

EXAMPLES OF VERIFICATION

Stakeholder analysis documentation

Strategic framework document

Risk management strategy

Public engagement strategy

HR strategy

Training needs analysis

Staff survey

Patient survey

Specimen Stakeholder Analysis (Illustrative only)

Stakeholder Internal/External Communication/Consultation Strategies Frequency

Staff INTERNAL Staff handbook Annually

Annual report Annually

Induction programme Monthly

Newsletter Quarterly

Communications boards Weekly

Staff survey Bi-annually

Internet-based podcast Quarterly

etc. etc.

Patients/ EXTERNAL Annual report Annually

Services Users Focus groups Ad-hoc

Patient/Service User survey Annually

Newspaper/magazine Quarterly

Conferences Annually

etc. Ad hoc

Consumer EXTERNAL Annual report Annually

Association / Focus groups Ad-hoc