Florida Institute of Technology

College of Computer and Electrical Engineering

ECE4551 Computer Architecture

Fall 2009

Semester Project Paper:

The Comparative “Anatomy” and “Physiology” of Embedded Systems.

By David Wurmfeld

ECE4551 Page 1 of 31 03/12/2009 03:10:58

Table of Contents

Florida Institute of Technology

Abstract

Fundamental Anatomy of an Embedded Processor

The programmable FSM: The idea that became a computer:

ROM and RAM: memory structures making up the skeleton of an embedded processor

The Guts of the Processor: the Program Counter, ALU and Control Unit

Putting it all together, the big picture:

Fundamental Physiology of an Embedded Processor

The computer program: a recipe for functionality

The Program Counter: more than meets the eye

The Control Unit: Conductor, logic wizard and traffic Cop:

“110001110011”: I dig Computer Baby Talk!

Making Bits Work:

Instruction Encoding; how many bits do we need?

Looking Forward: Tools for us Humans:

Temporary storage: Data Memory

The Arithmetic Logic Unit: Workhorse of the Embedded Processor

Memory Organization and Program Memory:

Memory Organization and Data Memory:

The other “fiddly bits”: Input – Output and additional processor functionality

Summary

Embedded Processor questions to test for understanding:

Answers

Abstract

An embedded system is a difficult animal to describe. In the general interpretation of

the term, an “Embedded System” refers to a dedicated computer used to accomplish a

pre-defined task. The term “embedded” usually relates to the encapsulated or contained

nature of the device. In the modern vernacular however its meaning is becoming less

sharply defined. The current idea of an embedded system is expanding to mean any

computer system dedicated to a specific purpose. The computer that is the autopilot on

a commercial airliner is considered an “Embedded System”, as is the Windows XP

powered console for a medical MRI imaging system.

Today, the domain of an embedded system is almost limitless, ranging from a full-blown Linux system deployed on a single VirtexIV FPGAⁱ chip with a PowerPC microprocessor core and custom integrated peripherals to a 4-bit data security chip glued onto the front of a smart bank card. Indeed, today’s embedded systems may not have any “pins” to speak of; they may be pre-compiled “cores” or “software templates” of hardware architectures designed to implement and complement common computer resources. These cores are purely software in nature, describing hardware architecture using a “Hardware Description Language” or HDL, and only take on a physical manifestation when implemented within a particular ASIC or FPGA scheme. These cores are often referred to as Intellectual Property or IP. The domain of the typical embedded system, however, is dominated by single-chip microcontrollers with fewer than a dozen Input-Output (I/O) pins.

To understand the scope of embedded systems then it becomes necessary to understand

the resources available (chip/core architecture) as well as the tool chain used to exploit

those resources. This paper will endeavour to describe, using a top-down approach, the

animal that is the Embedded System; its comparative “Anatomy and Physiology” or

how the architecture and behaviours differ between three different real architectures,

selected by their market share.

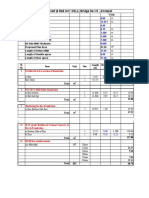

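[Figure: two charts of the microcontroller market split into 32-bit, 16-bit and 8-bit segments, one for 2006 and one for 2007. Values as extracted, in billions of US dollars with market share: 3.675 (33%), 3.42 (31%), 3.9 (36%); 3.8 (30%), 4.9 (39%), 3.9 (31%).]

Microcontroller Market (in Billions of US Dollars) 2006 vs. 2007¹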

According to WSTS², the lowly 8-bit microcontroller dominated the microcomputer chip market, with sales topping 250 million units per month in 2000, followed by the 4-bit microcontroller at 100 million per month. The so-called “hot” processors, 16-bit and 32-bit, barely pull in 50 million units per month.

1

www.emittsolutions.com Microcontroller market trend report by Emitt Solutions Inc.

2

ExtremeTech online, http://www.extremetech.com/article2/0,2845,1156706,00.asp, Embedded Processors Part one, September 2009, quoting WSTS, World Semiconductor Trade Statistics, http://www.wsts.org

This paper will confine the domain to 8-bit and 16-bit microcontrollers, concentrating on how they compare with one another, from the 30,000-foot view down to the register level³. In addition to the “anatomy” or architecture of the embedded systems, the “physiology” or behaviour, from high level constructs down to bits in silicon, will be outlined and compared. To synthesize the disparate facts and processes into meaningful information, the embedded systems outlined will be compared using a simple performance metric, with a “Gedanken experiment”⁴ to explore the performance of three hypothetical embedded systems.

Fundamental Anatomy of an Embedded Processor

The heart of any embedded system is the computer core driving it. It manages the data flow throughout the system, on chip⁵ and off chip. This construct constitutes a revolution in logic design. Historically, digital logic has been combined to form meaningful representations of the world. For example, an alarm system could be modelled by representing the doors and windows to be monitored as elements in the design, using registers and states to describe the behaviour when a door or window is opened at the wrong time. These so-called Finite State Machines (FSM) were used to create the original embedded systems, with dedicated chips implementing logic functionality (NAND, NOR…) all interconnected pin to pin to accommodate the data flowing into and out of the machine. This dedicated functionality proved cumbersome for anything but complex control systems, custom designed for a single, specific task. Because such machines were difficult to design, produce and maintain, a different solution was needed.
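As a minimal sketch of the alarm example above, a hard-wired finite state machine can be modelled as a fixed transition function. The state names, the `armed` input and the sensor bit layout are assumptions chosen for illustration; they are not taken from any particular alarm design.

```python
# Hypothetical alarm FSM: the states and inputs are illustrative assumptions.
DISARMED, ARMED, ALARM = "DISARMED", "ARMED", "ALARM"


def next_state(state, armed, sensors):
    """Return the next state of the alarm.

    armed   -- True when the system is switched on
    sensors -- integer whose bits represent doors/windows (1 = open)
    """
    if not armed:
        return DISARMED          # switching off always clears the alarm
    if state == ALARM:
        return ALARM             # the alarm latches until disarmed
    if sensors != 0:
        return ALARM             # any open door or window trips it
    return ARMED


def run(events, state=DISARMED):
    """Feed a sequence of (armed, sensors) inputs through the machine."""
    trace = []
    for armed, sensors in events:
        state = next_state(state, armed, sensors)
        trace.append(state)
    return trace
```

Changing the behaviour of such a machine means changing the wiring (here, the body of `next_state`), which is the limitation a programmable machine is meant to remove.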

The programmable FSM: The idea that became a computer:

Early in the history of electronic devices, the idea was developed of a re-configurable system that could use the same hardware to accomplish many different tasks. This so-called “compute-or” idea first took shape in 1936 as the Z1 computer designed by Konrad Zuse⁶. Doctor Zuse is credited with designing and building the first re-configurable or programmable machine to truly solve floating-point problems using binary representation⁷. With these initial architectures and the technological revolution of the transistor, the physical manifestation of a computer shrank from room-sized behemoths to refrigerator-sized boxes and down to a single board composed of only a few chips.

Like its predecessors, the computer needed all the elements of a traditional Finite State Machine with a new twist: the ability to change states by following bit patterns found in configurable structures, structures not hard wired into the design.

3

For the purposes of this paper, we are taking on faith that the silicon topologies and processes used to implement registers work and are well described in other tomes.

4

Thought Experiment: http://en.wikipedia.org/wiki/Thought_experiment

5

For the initial part of this discussion, we will refer to a chip as the fundamental embedded system

building block. Later we will expand that definition to include the concept of a microcontroller “core”.

6

About.com: Inventors - http://inventors.about.com/library/blcoindex.htm

7

Technical Institute of Berlin; http://user.cs.tu-berlin.de/~zuse/Konrad_Zuse/index.html

This created the possibility of a new state machine paradigm, one of an Infinite State

Machine.

ROM and RAM: memory structures making up the skeleton of an

embedded processor.

This new topology could “execute” a pre-configured – “programmed” sequence of

states based on the contents of two new hardware constructs: read-write volatile data

memory and read-mostly program memory. The term “volatile data memory” refers to

memory that will not retain its contents after the power is removed. The term “Read

mostly memory” refers to memory that can be pre-configured or programmed with a

sequence of bits (bits, that we will later see are the patterns that represent computer

instructions) that is persistent or non-volatile and will be available after the power has

been removed. For the sake of brevity and tradition, non-volatile memory is referred to as ROM⁸, whereas volatile memory is referred to as RAM⁹.

The Guts of the Processor: the Program Counter, ALU and Control

Unit.

Keeping with the comparative anatomy theme, every computer is built using these two memory structures in one form or another. They are used to provide long- and short-term storage for data and instructions. The third element necessary to the operation of the computer is the control unit. It is a multi-function module that controls and synchronizes the flow of data between the memory elements and between the computer and the outside world.

Like a policeman directing traffic, the control unit directs when and where data will move. It also records state information for use by other operations. The key to the control module is the program counter or PC. It is a special purpose register¹⁰ that holds the memory address of the next instruction to be executed. The width in bits of the PC determines the maximum number of instructions that can be addressed by the computer.

For a PC that is n bits wide, the number of instructions that can be addressed is $2^n$ locations. The control module “fetches” the instruction from ROM, “pointed” to by the PC. It then translates the instruction into a sequence of control signals that route the data from and to the appropriate location.
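The relationship between PC width and addressable program size can be checked with a few lines of arithmetic. This is only an illustrative sketch; the function names are hypothetical and the widths used in the usage note are arbitrary examples, not figures for any specific processor.

```python
def addressable_locations(pc_width_bits):
    """Number of instruction locations an n-bit program counter can address."""
    return 1 << pc_width_bits            # 2 ** n


def min_pc_width(num_instructions):
    """Smallest PC width (in bits) able to address num_instructions locations."""
    # Addresses run from 0 to num_instructions - 1, so size the PC
    # to hold the largest address.
    return max(1, (num_instructions - 1).bit_length())
```

For example, `addressable_locations(11)` gives 2048 locations, and `min_pc_width(2049)` shows that a single extra instruction beyond that forces a 12-bit PC.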

8

ROM is the acronym for Read Only Memory, meaning not writable but readable. In practice, these

memories are writable at least once, to configure the memory. Typically, they are implemented using

FLASH technology, allowing multiple write cycles using proper programming equipment.

9

RAM is the acronym for Random Access Memory, which is a misnomer as all addressable memory is random access by definition. It traditionally refers to memory that loses all data when the power is off, and is typically of a static or dynamic nature.

10

A “register” is a fixed width, volatile memory element, used to store intermediate information. This

“information” may be the next address to execute, or the flag bits used to configure the built in A/D

converter.

The next module important to the operation of the computer is known as the

Arithmetic-Logic Unit or ALU. It is responsible for performing various arithmetic

operations on the data, like addition or subtraction as well as various logic operations

like AND, OR, and NOT. In more sophisticated microcontrollers, the ALU may also

include a “perfect exchange register”, where word-oriented operations can be carried

out, like “swap bytes”, swapping the upper and lower bytes of the word, or “swap

nibbles”; single cycle operations¹¹ that make short work of bit intensive operations. The

ALU can also test data for various states for the control unit to record. All ALU

operations are directed by the control unit, which is in turn directed by the instructions

found in the program memory.
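The ALU operations described above can be sketched in software. This is a simplified model under stated assumptions: the operation names, the two flags and the treatment of borrow as "carry" after a subtraction are illustrative choices, and real processors differ in how they define these details.

```python
def alu(op, a, b=0, width=8):
    """Return (result, flags) for one operation on width-bit operands.

    Operation names and flag conventions are assumptions for illustration.
    """
    mask = (1 << width) - 1
    a &= mask
    b &= mask
    carry = 0
    if op == "ADD":
        full = a + b
        carry = full >> width            # bit that did not fit in the word
        result = full & mask
    elif op == "SUB":
        full = a - b
        carry = 1 if full < 0 else 0     # treated here as a borrow
        result = full & mask
    elif op == "AND":
        result = a & b
    elif op == "OR":
        result = a | b
    elif op == "NOT":
        result = ~a & mask
    elif op == "SWAP":
        # Exchange upper and lower halves: "swap nibbles" for a byte,
        # "swap bytes" for a 16-bit word.
        half = width // 2
        result = ((a << half) | (a >> half)) & mask
    else:
        raise ValueError("unknown operation: " + op)
    # Flags are the tested states the control unit can record.
    flags = {"zero": result == 0, "carry": bool(carry)}
    return result, flags
```

Calling `alu("SWAP", 0xA5)` exchanges the nibbles of a byte, while `alu("SWAP", 0x12AB, width=16)` exchanges the bytes of a word; the `op` argument stands in for the control signals the control unit would drive.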

The following structures are the building blocks of all embedded computer systems:

• Program memory – read mostly; stores instructions and constant data (data that does not change over time). Non-volatile: data is retained after the power is turned off. Typically it is organized as an addressable matrix of $2^\alpha$ locations, each β bytes wide, where α is the memory address width in bits and β is 1 or 2 bytes.

• Data memory – read/write; stores the results of instructions and state interactions. Volatile: loses all data when power is turned off. Typically it is organized as an addressable matrix of $2^\alpha$ locations, each β bytes wide, where α is the memory address width in bits and β is 1 or 2 bytes.

• Program counter – a special purpose volatile memory element (usually a dedicated register) that holds the address of where the processor is in its instruction sequence, usually the address of the next instruction to be “fetched”¹² and executed. The width of the PC determines the maximum number of instructions the computer can address.

• Control unit – a dedicated Finite State Machine (FSM) that takes as its inputs the instruction from the program memory, translating the bit pattern into actions manifested as synchronized control signals and states to the other modules in the computer.

• Arithmetic-Logic Unit – a dedicated FSM that takes as inputs control signals and “chunks” of data¹³ (usually whole bytes or words¹⁴) and gives as outputs the results of the operation, in similar chunks of data.

11

It will be seen that embedded processors are “theme” oriented, that is as a motor controller, or

communications controller or a sensor controller, and the architecture correspondingly includes “special

functionality” (read dedicated registers/operations) that make those features efficient compared to doing

it manually in software.

12

The term “fetch” normally associated with a ball and the family dog is a good analogy in this context

as the verb describing the action of retrieving the instruction from program memory. It involves looking

it up, getting it physically and bringing it back.

13

Historically, the term “data word” refers to the fundamental width of the registers and program memory native to the microprocessor. In the domain of processors we are outlining, it is dependent on the architecture. Wikipedia: http://en.wikipedia.org/wiki/Word_%28computing%29

14

The anatomy of any embedded processor is made up of these five modules. It is the way in which these elements are arranged that ultimately determines the behaviour, that is, how a specific controller actually executes a program of instructions. The next section explores the fundamental “physiology” of an embedded processor, or how it actually works.

Putting it all together, the big picture:

All embedded processors we encounter have various combinations of RAM, ROM and register resources. Typically, for the domain of embedded processors we are exploring, this memory will reside physically on the silicon from which the “chip”¹⁵ is constructed.

Figure 1: Photomicrograph of physical microcontroller elements on silicon die.¹⁶

Other types of embedded processors have enough pins to support accessing memory off chip. Starting out, we will address those topologies that have memory built into the chip. The following figures illustrate three different embedded processors: the ARM-7 core and the Atmel 89C2051 and Microchip PIC18F1330 8-bit microcontrollers. To begin, let’s look at the “anatomy” of these processors.

In Figure 4, the PIC18 processor, it is easy to identify the modules we have described so far: ALU, Program Counter, Control Unit (“Instruction Decode and Control”), Program Memory and Data Memory. You will also notice there are many other modules in the processor that we haven’t discussed yet, but whose functionality you may be able to guess. Suffice it to say they all support the movement and modification of data, which ultimately is the only purpose of any processor, embedded or not.

15

The word “chip” is loosely used to mean those devices built from “chips” of silicon wafer, mounted onto a leaded carrier, providing the pins that allow connection to the circuit.

16

Image copyright © 2009 Micro Control Journal. All rights reserved. (http://www.mcjournal.com/articles/arc105/arc105.htm)

The other processor illustrations are not so straightforward to interpret. In Figure 2, it is clear what the data and program memory are, and the ALU and program counter as well, but where is the Control Unit? It is there, just split into several blocks, each illustrating a function the control unit must accomplish, like “PC incrementer”, “program address register” and “stack pointer”. As long as you understand these functions are common to all embedded processors, it is not difficult to interpret the block diagram of any microcontroller.

Figure 2: Atmel 89C2051 Architecture¹⁷

The diagram in Figure 3 stretches the “simple block diagram” concept, but with some digging it is possible to pick out the “islands of functionality”¹⁸. The CPU diagram lays out a stylized arrangement of registers, implying interconnection and the existence of a control unit connecting them all. As this is a model of the CPU core, the memory is not illustrated. The memory is laid out a little differently: both the program and data memory share the same address space, but the program counter and flag register are clearly there, along with many other, yet to be understood, specialized registers. We will get to those later; for now the important idea to grasp is that although these three processors may seem dramatically different, they actually differ only in the specifics of how the various modules interact, and not so much in the modules they have.

17

Image © 2009, Atmel, http://www.atmel.com/dyn/products/product_card.asp?part_id=1938

18

“Islands of Functionality” refers to an isolated group of registers, FSMs and other structures that perform a single job, like the ALU, a timer, or an A/D controller module.

Figure 3: The ARM-7 core architecture

Figure 4: The Microchip PIC18F1330 Architecture¹⁹

To put the embedded processor anatomy tutorial in perspective, keep in mind all

embedded processors have one simple goal of existence:

In a deterministic manner (meaning repeatable) move and modify data

according to a list of instructions.

So far we have outlined where data exists and what is used to access and modify it.

Now that we can understand what these modules are, from a generic concept to actual

examples of real machines, the next big step is to understand how these modules

interact. The next question to answer is: Exactly how does an embedded processor

execute an instruction?

19

Image © 2009, Microchip, http://www.microchip.com/wwwproducts/Devices.aspx?dDocName=en022957

Fundamental Physiology of an Embedded Processor

Now that we have outlined the structure of the building blocks of an embedded

processor, (memory, ALU, Control Unit, PC…) and briefly illustrated some real

processors, it is time to describe their inner workings, i.e. how they behave with one

another. Once the general ideas of structure (anatomy) and function (physiology) are

understood we can proceed to take a comparative look at how examples of actual

embedded processors work performing similar tasks.

The computer program: a recipe for functionality.

Although trivial in concept, it bears repeating. A computer “follows” a list of

“instructions”, starting at the “beginning” and “executing” each instruction until the

“end” of the recipe or program. From our previous glimpse into the anatomy of an

embedded processor, we know the program memory (ROM) stores the program

instructions in an addressable matrix of bytes or words. The Program Counter (PC) has

the vague job of “knowing” which instruction to execute. It is the job of the control

unit to know where to start the program (the beginning), fetch, decode and execute the

instruction and as long as it isn’t the last instruction (the end of the program), advance

to the next instruction, execute it and so on…

The Program Counter: more than meets the eye.

In Figure 5 we see our first look at a generic computer, complete with program

memory, control unit and program counter. The program counter is connected to the

program memory via the program memory address bus. The output of the program

memory goes directly into the control unit. The program counter is also connected to

the control unit. At any one moment in time, any computer is in the middle of a finite

set of cycles, performing mundane tasks like:

• Calculate the address of the next instruction

• Load the address of the next instruction in the program counter

• Enable the program memory to use the address the PC is presenting

• Get the value of the memory location (Fetch the instruction)

For the time being we will focus on these simple but vital tasks. Somehow, the control

unit is “smart” enough to know what the next address is to fetch the next instruction.

The key to understanding how something works is to “walk a mile in its shoes” as it

were, to follow it step by step as it does its job. Let’s consider a simple scenario with

two questions. What actually happens when an embedded processor²⁰ is powered up

and how does the control unit orchestrate these events?

As we are dealing with events that take place in time, it is traditional to illustrate how these events relate to one another in time with a “waveform” chart. A well-organized chart can illustrate in a single picture what would take pages of text to describe.

20

From this point forward, the terms “processor”, “embedded processor” and “computer” will all refer to the same thing.

The following is a generic power up sequence that could apply to almost any embedded processor. It is organized as several rows, each representing a particular signal as it changes in the time domain. The “signal” may represent an actual voltage or a logical state, for example 0V to 3.3V, or “asserted” or “not asserted”²¹. The rows of the waveform are linked in time, that is, they all start at the same time, and important events are usually labelled. In this example, the first row represents the logic condition of power being applied (the lower line illustrates the zero or off condition, and the upper line represents the high or on condition) rather than its actual voltage value(s). The important idea here is that not all signals are valid at all times.

The first few hundred microseconds of life: the power up timing

waveform.

[Timing waveform. Signal rows and labelled events, as extracted: POWER (power stable), RESET (start reset), CLOCK (clock stable), ADDRESS, DATA, INST, Cont, FETCH (fetch 1st instruction).]

Power on sequence:

1. Power is applied to the chip (the beginning of time as the chip sees it).

2. The reset signal is asserted, holding the chip in a reset state.

3. In the reset state, nothing happens within the computer, but the computer cycle clocks start oscillating and everything is poised, just waiting for the reset to be released. This is one of the most important times for a computer; without it, the control unit and program counter would be in unknown states²², and could cause the computer to go haywire, not knowing what state it is in or where to go next. Putting the computer in the reset condition gives the processor the time it needs to turn on and set up the physical transistors that make up the hardware to known conditions, subsequently initializing the control unit to a known state.

4. Some time after the power is applied and stable (that is, within the operating range of the processor) and the system clock(s) have started and are stable, the reset signal is released and the control unit starts from its initial state. All this happens in a short period of time to us (2 – 5 milliseconds), but a lifetime to a processor that can execute half a million instructions in a second.

5. The control unit loads a pre-defined address (processor dependent) into the program counter and fetches the first instruction from program memory. The word “fetch”¹² is often used to describe this control unit cycle, which can be summarized with the following steps:

   a. Start the fetch cycle.

   b. Using a FSM, assemble the pieces that will make up the next instruction address. In the case of the first instruction location, no calculation is necessary; it is a fixed location, so just use that address.

   c. On the appropriate system clock edge²³, logic OR the address pieces²⁴ together and load the assembled new address into the program counter.

   d. Enable the new instruction address from the program counter onto the program memory address bus.

   e. Synchronized with the appropriate clock, and after there has been enough time for the address to be stable and valid (the last thing you want is the address changing while you are trying to read an instruction from program memory), enable the program memory output onto the instruction bus.

   f. Synchronized with the appropriate system clock, read the instruction into a holding register within the control unit.

   g. Set the next-instruction address increment, the value to be added to the current instruction address when the next fetch cycle starts.

   h. Fetch cycle complete; the control unit has now fetched the instruction from the program memory.

6. The control unit is ready to decode the instruction fetched, execute it and start the fetch cycle all over again.

21

It is more accurate to use the term “asserted/not asserted” to indicate the value of a particular state, because “1 or 0” and “true or false” can each imply a particular implementation of that state. For example, a logic 0 may be represented by anything less than 0.9 VDC in a 3.3V system, yet represent the asserted or enabled state of a processor reset signal, which would be logically “true” for its value.

22

By unknown state, consider what is physically happening in time when power is first applied to a transistor circuit. This all happens on the time scale of picoseconds and nanoseconds, but when dozens of transistors are linked together, it can take hundreds or thousands of nanoseconds to settle down into a known state.

23

For this overview, we are playing fast and loose with the necessity of system synchronism. Assume on faith that every processor cycle that is executed is done in time and in sync with a clock, or clocks, or portions of a clock, to ensure the data is taken or arrives where it belongs when it is valid to do so.

24

The “pieces” referred to will be described in detail later; suffice it to say for now that the pieces may be an offset from the current location and the previous location, along with any increment pending.

[Block diagram. Labels as extracted include: CU, PC, Program Memory, Data Memory, ALU, I/O Port, Buses.]

Figure 5: Control Interconnections

As you can see, there is more to the program counter than meets the eye; it is the

signpost the entire computer uses to keep track of where it is in the instruction

sequence. It is a lot more than just a simple placeholder; it is an integral player in the

instruction fetch cycle as well as an essential element for proper program execution.
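The fetch cycle summarized in step 5 of the power-on sequence can be sketched as a small simulation. This is a simplified model, not a description of any real processor: the reset address of 0x0000, the class and attribute names, and the fixed increment of one location per instruction are all assumptions, and clock synchronization is ignored entirely.

```python
RESET_VECTOR = 0x0000   # assumed value; the real address is processor dependent


class Processor:
    """Toy model of the program counter and the control unit's fetch cycle."""

    def __init__(self, program_memory):
        self.rom = list(program_memory)   # read-mostly program memory
        self.pc = None                    # unknown until reset completes
        self.instruction = None           # holding register in the control unit
        self.increment = 0                # pending next-address increment

    def reset(self):
        """Releasing reset leaves the control unit in a known state."""
        self.pc = RESET_VECTOR
        self.increment = 0                # first fetch uses the fixed address

    def fetch(self):
        """One fetch cycle: form the address, read the ROM, set the increment."""
        self.pc = self.pc + self.increment      # assemble and load the address
        self.instruction = self.rom[self.pc]    # address out, instruction in
        self.increment = 1                      # value added on the next fetch
        return self.instruction
```

After `reset()`, successive calls to `fetch()` walk the program counter through the program memory one location at a time; jumps and offsets (the other address "pieces") are left out of this sketch.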

The Control Unit: Conductor, logic wizard and traffic Cop:

Up to this point we have glossed over many inner workings of the computer, limiting our focus to just how the computer knows what instruction to do next. Do not lose sight of the big picture:

• There is a program stored in instruction memory (ROM).

• This ROM holds the instruction sequence that is the program to be “executed”, whatever that means.

• The control unit is stuffing the program counter with addresses, fetching instructions and doing something with them.

The next part of our discussion of embedded processor “physiology” is how the control

unit “knows” what to do with the instruction it fetched from the program memory.

The Control Unit is the very heart of any embedded processor. Ultimately it is responsible for knowing what instruction to fetch next and how to fetch it, setting up for the next instruction, and decoding and executing the instruction just fetched. It is a relatively complex FSM designed specifically to control the inner workings of the computer according to basic cycle specifications like the fetch cycle mentioned previously, or in real time by decoding the cycle information contained within the instruction.

“110001110011”: I dig Computer Baby Talk!

So far, this tutorial has been pretty fast with the information; it is kind of like trying to

drink water from a fire hose, possible, but a lot will spill out! So far, what do we know

about the inner workings of a computer?

• The computer works by executing instructions in sequence.

• The program memory stores the instructions to be executed.

• The control unit, in conjunction with the program counter can fetch instructions

from program memory, decode and execute them, whatever that means.

So far so good. Before we continue, let’s do a little binary number review. Recall that the number of distinct values a binary number can take is equal to $2^n$, where n is the number of bits in the binary number. For example, a 4-digit binary number has $2^4$ or 16 possible combinations. An 8-bit byte has $2^8$ or 256 possible combinations. This organization is used extensively in computers to allow us to select one from many, or address one memory location from the tens of thousands of memory locations available to us. Like the ubiquitous “Apartment Number” analogy, for every memory location there is a unique address, just as there is a unique physical address or number for every apartment.
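The "select one from many" idea can be illustrated with a software model of an address decoder: an n-bit address picks out exactly one of $2^n$ select lines. The function name and the list-of-bits representation are assumptions made for this sketch.

```python
def decode_address(address, width):
    """Return 2**width select lines with exactly one asserted (1).

    address -- the binary number presented on the address bus
    width   -- number of address bits (n)
    """
    lines = 1 << width                     # 2 ** n possible locations
    if not 0 <= address < lines:
        raise ValueError("address does not fit in %d bits" % width)
    return [1 if i == address else 0 for i in range(lines)]
```

With a 2-bit address, `decode_address(2, 2)` asserts only the third of four lines, just as one apartment number identifies one door.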

Making Bits Work:

Remember the alarm example, where the finite state machine modelled the windows

and doors of the house as bit locations to encode the physical world into a digital

representation of that world? The dedicated finite state machine circuits decode the

binary bit locations to determine what door or window was opened.

This is what is being done with the so-called “instructions”: bit patterns are being used

to represent places and actions we wish the computer to access or execute. Now we can

say it: when a computer “executes” an instruction, it means that particular instruction

has a physical meaning associated with its unique bit pattern. That meaning is used to

enable the sequence of events that is required to “execute” the meaning or command.
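
As a rough sketch of bits carrying physical meaning, the snippet below decodes a status word in the spirit of the alarm example. The bit assignments and sensor names are hypothetical, chosen only for illustration; the alarm example does not fix them.

```python
# Hypothetical mapping of bit positions to sensors (an assumption for this sketch).
SENSORS = {
    0: "front door",
    1: "back door",
    2: "kitchen window",
    3: "bedroom window",
}


def opened(status):
    """Return the name of every sensor whose bit is set in the status word."""
    return [name for bit, name in SENSORS.items() if status & (1 << bit)]


if __name__ == "__main__":
    # Bits 0 and 2 are set, so the front door and kitchen window are open.
    print(opened(0b0101))
```

An instruction is decoded in the same way, except that the bits select an action inside the processor instead of naming a door or window.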

Instruction Encoding; how many bits do we need?

This is exactly how the control unit “knows” what to do with the instruction it fetched

previously. Each instruction contains an encoded portion indicating what to do, who to

do it to and what to do it with. This is a lot to ask a few bits to do. As we will see later

when we compare processors, some have only 6 bits of opcode (what to do), enough to

encode 64 instructions, and 8 bits of location or actual data information (what/who to do it

with/to). That adds up to an instruction word that is 14 bits wide, implying the program

memory had better be at least 14 bits wide.

Time for a real example; let’s say our embedded processor has an instruction called

“Add”. Its function is to cause the contents of some register (let’s call it “a”) to be

added to the fixed value 0x14 and the result stored back in register a, wherever

that is. The operation code (opcode[25]) for this instruction could be “110001” in

binary, and the fixed (immediate) value might be 0x14[26], “00010100”. The entire

[25] See definition: http://en.wikipedia.org/wiki/Opcode

[26] The traditional prefix for a hexadecimal number is the two character pair “0x”. Each hexadecimal digit

is four bits wide, thus having 16 values, from 0 (0000) to F (1111).

http://en.wikipedia.org/wiki/Hexadecimal

instruction data would be the concatenation (joining together) of the opcode and the

immediate data, for a complete instruction “word” of: “11000100010100”. The

control unit is expert at this kind of binary “computer baby talk” and decodes the first

six digits as the action to add the number represented by the last 8 digits to some

register called ‘a’, wherever that is. Unfortunately, for most of us humans, it is tedious

to impossible to manage lists of binary strings representing instructions and data. Some

sort of help was needed for our simple minds to handle binary instructions.
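
The packing and unpacking the control unit performs can be sketched in Python. The 6-bit opcode and 8-bit immediate widths follow the example in the text; the function names encode and decode are assumptions made for this illustration, not part of any real instruction set.

```python
OPCODE_BITS = 6   # "what to do"
OPERAND_BITS = 8  # "what/who to do it with/to"


def encode(opcode, operand):
    """Concatenate opcode and operand into one 14-bit instruction word."""
    if not 0 <= opcode < (1 << OPCODE_BITS):
        raise ValueError("opcode does not fit in 6 bits")
    if not 0 <= operand < (1 << OPERAND_BITS):
        raise ValueError("operand does not fit in 8 bits")
    return (opcode << OPERAND_BITS) | operand


def decode(word):
    """Split a 14-bit instruction word back into (opcode, operand)."""
    opcode = word >> OPERAND_BITS
    operand = word & ((1 << OPERAND_BITS) - 1)
    return opcode, operand


if __name__ == "__main__":
    word = encode(0b110001, 0x14)
    print(format(word, "014b"))  # 11000100010100
    print(decode(word))          # (49, 20), i.e. opcode 0b110001 and 0x14
```

The shift and mask in decode are the software equivalent of routing the top six wires of the instruction word to the decoder and the bottom eight to the data path.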

Looking Forward: Tools for us Humans:

The time has come to peek ahead and introduce the concept of mnemonics, the

mapping of a human-understandable memory aid onto literal computer constructs[27].

The trick is to come up with a pseudo language of sorts, with descriptive verbs and

nouns representing the operations we would like to perform. Which of the following

identical statements is easier to understand?

“11000100010100” - or - “add a,#0x14”

The first string[28] of binary digits is traditionally called “machine language” (computer

baby talk) and the second statement is called “assembly language”, a pseudo English

patois of suggestive verbs and nouns loosely cobbled together to garner meaning.

Here is the beginning of what is called the “Tool Chain”, a very important concept in

understanding how computers work. There are tools (actually applications that run on a

separate development computer system) that help us translate language a human can

understand into machine language a computer can execute; the actual, physical binary

pattern stored in program memory.

In this simple example, we would use a stand-alone text editor, or an editor within an

IDE[29], to create a program containing, among other things, the “add” statement above, and

use that human-readable text file as the input to an application called an “assembler”.

The assembler translates the assembly language “source code” into the appropriate bit

pattern. To complete the chain, that bit pattern is then combined with other bit patterns

to form an executable bit image. This bit image is then “programmed” or “burned”[30]

into the computer program memory ROM. We will be discussing the tool chain

concept in more detail later. At this point in our tutorial, accept it on faith that there is

indeed a way that humans can create programs that are ultimately manifested

physically as bit patterns or instructions inside the processor, ready to execute when

the power is turned on.
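
To make the assembler step less abstract, here is a toy sketch that translates the single statement used in this tutorial into its bit pattern. It is not how any real assembler is written: the one-entry opcode table, the fixed register “a” and the function name assemble are all assumptions for illustration.

```python
# Only the one opcode given in the text; a real table would have many entries.
OPCODES = {"add": 0b110001}


def assemble(line):
    """Translate e.g. 'add a,#0x14' into a 14-character string of bits."""
    mnemonic, operands = line.strip().split(None, 1)
    register, immediate = [part.strip() for part in operands.split(",")]
    if register != "a" or not immediate.startswith("#"):
        raise ValueError("this sketch only understands 'add a,#value'")
    value = int(immediate[1:], 0)  # base 0 accepts 0x14 as well as 20
    if not 0 <= value <= 0xFF:
        raise ValueError("immediate value must fit in 8 bits")
    word = (OPCODES[mnemonic.lower()] << 8) | value
    return format(word, "014b")


if __name__ == "__main__":
    print(assemble("add a,#0x14"))  # 11000100010100
```

A production assembler also resolves labels, handles many addressing modes and emits a file format the chip programmer understands, but the core job is this lookup and concatenation.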

[27] Derived from Wikipedia definition: http://en.wikipedia.org/wiki/Mnemonic

[28] Be careful, this is not a binary number; it is a composite representation of opcode and data.

[29] “Integrated Development Environment”, a computer application that streamlines the creation of

computer programs by integrating the editor, compiler, assembler and linker into a single user interface.

[30] “Burning” a ROM is a throwback to when physical metal fuses integral to the memory were burned

away using a high current pulse, permanently setting the state for that memory location. The specific

mechanisms for memory are beyond the scope of this paper; see

http://www.howstuffworks.com/rom.htm/printable for more details.

Figure 6 Simple Tool Chain, Assembly to Bits in Computer

Temporary storage: Data Memory.

So far we have explored how the control unit fetches an instruction from program

memory and decodes it to perform some task. What is missing is, where does the

control unit store temporary data? (Remember register ‘a’?) Not everything is known

when the program is compiled into machine language. For example, if the embedded

system were a thermostat or an alarm controller, where would the computer store the

current temperature? Where would it store which doors are closed? This is the

job for Data Memory, a read/write volatile memory that the control unit can use to save

intermediate results, or just about anything that can and does change with time. In the

strictest sense, the program counter is an example of this type of memory; when the

power goes away, the data is lost. Other modules rely on this kind of memory; the

ALU uses temporary storage as scratch pad memory to hold intermediate results. The

control unit relies on temporary data storage to keep track of the current state of the

computer. Data memory is an integral part of the computer architecture, and it is

necessary to understand how it interacts with the other modules we have seen so far.

[Figure: block diagram of a generic computer showing the CU, PC, ALU, Program Memory, Data Memory and I/O Port, connected by buses]

As you can see from the illustration above, most computer architectures have at least

some sort of program memory, data memory, program counter and control unit. We are

almost ready to start looking at particular embedded processors. It is first necessary to

understand the relationship between the program memory, data memory and dedicated

volatile memory elements or registers.

Before continuing, let’s review the concept of a “register”. It is nothing more or less

than an ordered set of bit(s), not unlike a memory location, that can “hold” a bit

pattern. A register may be as narrow as 1 bit or as wide as 256 bits. It might hold a single bit from the

overflow of a binary addition, or the four bit value that points to a portion of memory

called a “file”. Whatever it holds, and however wide it is, it is a volatile memory

element, usually “controlled” (set, reset, read…) by the control unit.

Some embedded processors, for example, have a special dedicated register for

everything. This is where the uniqueness of a processor manifests itself: in how the

functions and data are organized physically on the processor. A register may be a

general-purpose scratch pad to hold any value (say the intermediate result of a logic operation)

or a special function register to hold a binary value that corresponds to the artefacts of

the last instruction[31].

As embedded processors contain more and more functionality (timers, serial ports, A/D

converters…) it is necessary to have volatile memory elements to keep track of all their

settings and status. In some processors, there are over two-dozen separate special

function registers just for this purpose. Here is where the similarities end and

individual architectures begin to diverge from the generic model. How does the

computer organize the needs of program memory, data memory and special function

registers? Keep that question close to mind as we continue our exploration of the

computer’s last generic element, the ALU.

The Arithmetic Logic Unit: Workhorse of the Embedded Processor.

Up to this point we have hinted that there is a module that is used to do math and logic

operations managed by the control unit. Indeed, inside every embedded processor is an

ALU that can take two or more operands, perform an operation on them (addition,

subtraction, multiplication, AND/OR/NOT…), store the result in data memory or a

purpose-built register, and update the artefacts associated with that operation (negative,

zero, overflow, underflow, divide by zero…).
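
The idea of an operation that produces both a result and a set of artefacts can be sketched as follows. This models a generic 8-bit addition only; the flag names and the function alu_add8 are assumptions for illustration and do not describe the status register of any particular chip.

```python
def alu_add8(a, b):
    """Add two 8-bit operands; return the 8-bit result and its flags."""
    raw = (a & 0xFF) + (b & 0xFF)   # may need 9 bits
    result = raw & 0xFF             # what actually fits in the register
    flags = {
        "carry": raw > 0xFF,               # the sum overflowed 8 bits
        "zero": result == 0,               # every result bit is 0
        "negative": bool(result & 0x80),   # top bit set (two's complement sign)
    }
    return result, flags


if __name__ == "__main__":
    result, flags = alu_add8(0xF0, 0x14)
    print(hex(result), flags)
```

Here 0xF0 plus 0x14 is 0x104, which does not fit in 8 bits, so the stored result is 0x04 and the carry flag records what was lost.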

Figure 7 Simple ALU

[31] An “artefact” is a processor state that may change when an operation is performed. For example, if the

result of a math operation is negative, a bit or “flag” could be set as an artefact of that operation. There

are various flags or artefacts that are updated after each instruction by the control unit.

The ALU itself is a special purpose finite state machine designed to take control signals

and operands and perform the function called out by the control unit. Its features

include the operations it can perform, the size of the operations it can handle and the

size of the results it can produce.

Consider the addition of two 8-bit numbers. The sum could be larger than an 8-bit

number can handle so the ALU must be able to accommodate that possibility. The

ALU may also need to provide some sort of floating point functionality (or at least

mechanisms to support such operations), usually implemented partly in hardware and

partly in custom math libraries for that processor.[32] The full complement of math

operations takes up a lot of processor real estate, and compromises need to be made to

get the maximum functionality in the minimum space with the best performance

possible. It is possible to multiply or divide any two numbers using successive

additions (or subtractions), but that would take a long time. Time, then, would be the

compromise made to save the real estate[33] needed to have a hardware multiplier integral to the

ALU.
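
A short sketch shows why multiplication by successive addition costs time. The function name multiply_by_addition is hypothetical, and the addition count is only a stand-in for the real cycle cost, which depends on the processor.

```python
def multiply_by_addition(a, b):
    """Multiply two non-negative integers using only repeated addition.

    Returns the product and the number of additions it took.
    """
    product = 0
    additions = 0
    for _ in range(b):
        product += a
        additions += 1
    return product, additions


if __name__ == "__main__":
    print(multiply_by_addition(200, 150))  # (30000, 150)
```

The work grows with the size of the multiplier, whereas a hardware multiplier spends silicon area to get the same answer in a small, fixed number of cycles.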

Memory Organization and Program Memory:

However you slice it[34], every embedded controller operates on two types of memory:

the memory that stores instructions (program memory) and the memory that

temporarily holds values (data memory). In the previous real chip examples, we can see

the PIC18 and AT89 both have separate, distinct memory areas, with separate data and

address lines, as opposed to the R8C architecture, where the program and data memory

are logically one monolithic block, with one common address bus and one common data bus.

It is not the intention of this paper to compare and contrast the ramifications of this

level of architectural choice[35]; we will, however, explore what the choices are and how they

are used.

Recall that it is the control unit that calculates the address for the next instruction to

fetch. In the case of the PIC18, this is an address that can accommodate a maximum of

8192 memory locations. As a review, how many bits are needed (the minimum

[32] It is mathematically possible to do any math operation just using two bits and a lot of RAM; it would

just take a lot of instructions to orchestrate even a simple 16 bit integer addition. On the other hand, you

could dedicate three separate registers, two 16 bit and one 32 bit register, to hold the operands and the

sum respectively. Controller architecture is a balance of what space you physically have and what

operations can be done in software.

[33] It physically takes space in silicon to do anything, store a bit or make a control unit. Each processor

designer is faced with the problem of trying to find space for everything the marketing people want in

the new version. Compromises are made in performance or size (and power) when design decisions

(architectures) are made.

[34] Off-hand homage to the so-called bit-slicers of old.

[35] As is often the case, the choice of one particular architecture over another has “religious” implications,

with each ideology having its priests, each believing in their brand of “the truth”. More often than not,

the choice of processor is cost, or number of pins, or “what chip did we use last?” or “how much do the

tools cost?” and not some idealized architecture philosophy.

number) to uniquely address every location in the program memory?[36] As it turns out,

the internal modules in the PIC18 family of processors are almost identical, and while

the PIC18F1330 may have a program memory depth of 8192 locations, each 16 bits

wide, the “flagship” of the PIC18 family, the PIC18F8722 has up to 128 kBytes (64k

words) of program memory.

This common control structure is no accident. It is to ensure instructions written for

the least capable member of the family will work on the most capable member. In fact,

the address latch for the program memory is a full 20 bits wide, allowing up to 2^20 or about

one million memory locations (can we expect future versions of the PIC18 family with

more program memory?). For our PIC18, however, we have more than enough address

bits to accommodate the 8192 locations (16 kBytes) of instructions and constants[37].
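
The memory sizes quoted above follow from simple arithmetic, sketched below. The helper name capacity_bytes is made up for this example; the location counts and the 16-bit width are the figures given in the text.

```python
def capacity_bytes(locations, width_bits):
    """Total storage, in bytes, of a memory with the given depth and width."""
    return locations * width_bits // 8


if __name__ == "__main__":
    print(capacity_bytes(8192, 16))       # 16384 bytes, i.e. 16 kBytes
    print(capacity_bytes(64 * 1024, 16))  # 131072 bytes, i.e. 128 kBytes
    print(2 ** 20)                        # 1048576 locations from a 20-bit address
```

Depth (how many locations) is set by the number of address bits, while width (bits per location) is set by the data bus, and the two are chosen independently.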

As we mentioned earlier, the Program counter is much more than a simple register, in

this example it is almost a mini ALU in the operations it can perform to assemble the

correct address for the next instruction. Keeping with the program memory theme, look

at the Atmel 89C2051 memory. Although difficult to read from the simple block

diagram, the literature specifies the program memory to be byte wide, with 16 address

bits for a maximum of 65536 locations (64 k, where 1 k = 1024 locations). Our variant,

the 89C2051, has 2 k of program memory, or 2048 locations, each one byte wide.

Consider this simple fact for a moment. Each program memory access of a

PIC18F1330 processor returns 16 bits. Each program memory access of the 89C2051

returns half as much data. If both processors are running at the same speed, which one

moves more data per unit time? We can’t answer that right now, but keep it in mind

when we compare performance between our three embedded processors.

To complete the program memory tour, the ARM-7 processor addresses 32-bit wide

program memory, unlike the PIC18, whose program memory is 16 bits wide. Very similar

processors, but different approaches to how the program instructions are addressed.

[36] 2^n = 8192, so n = log2(8192) = 13 bits

[37] We include “and constants” on purpose when describing “program memory” as it is the ideal place to

store values that are known when the code is assembled, and would overwhelm the limited data memory

space. This convenience however comes at a cost, as we will soon see.

Figure 8: Microchip PIC18F8722

Memory Organization and Data Memory:

Previously we explored the anatomy of controllers, describing the blocks or modules

that made up the controller. These blocks are indeed islands of functionality, for the

most part independent of each other. That means while the control unit is “fetching”

the next instruction, the ALU can be logically OR’ing two values, sending the results

into a register, and the timer module (described later) can be counting down; all at the

same time, all on the same chip of silicon.

This ability begs to be used in a parallel fashion, and not simply in the serial “follow

the recipe” concept of a computer program. The tricky part is, how does the computer

keep track of all these independent operations? More on that later.

Going back to our real life examples, consider the monolithic memory architecture of

the ARM-7. To fetch an instruction, the control unit updates the program counter, then

places that address on the address bus. Some time later it reads the instruction from the

ROM then executes it. If there are data values to be stored, it places the destination

address on the same memory bus, and some time later writes it into the RAM.

In this simple example, there is some “dead time”, that time between subsequent

operations that could have been used doing things in parallel.

Figure 9 "Princeton" (Von Neumann's) Architecture

Figure 10 "Harvard" Architecture

Look at Figure 4, the PIC18 architecture: the program memory has its own

address and data busses, distinct and separate from the data memory. This means the

control unit can be building the address of the next instruction to be fetched while it is decoding the

current instruction, and at the same time writing the results of the last ALU operation

into the data memory. This level of parallelism is common to architectures that have

separate program and data memory spaces. The figures above illustrate the basic

memory architecture differences. The main idea to keep in mind is that in the “Princeton”

architecture, there is one Program/Data memory address and data bus, tying the

program and data memory logically together. In the “Harvard” architecture (hmmm, I

wonder where they were invented…) the program and data memory are separate and

distinct, each with its own address and data busses.
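
The “dead time” argument can be illustrated with a deliberately idealized toy model. It assumes every instruction fetch and every data access takes exactly one bus cycle, and that with separate busses a data access overlaps perfectly with the next fetch. Real processors have pipelines, wait states and hazards that this sketch ignores, and the function name bus_cycles is hypothetical.

```python
def bus_cycles(needs_data, separate_buses):
    """Count bus cycles for a run of instructions.

    needs_data is a list of booleans, one per instruction, saying whether
    that instruction also reads or writes data memory.
    """
    cycles = 0
    pending_data = False  # data access left over from the previous instruction
    for data in needs_data:
        if separate_buses:
            # The fetch uses the program bus; any pending data access
            # proceeds on the data bus during the same cycle.
            cycles += 1
        else:
            # One shared bus: the pending data access must finish first.
            cycles += 1 + (1 if pending_data else 0)
        pending_data = data
    if pending_data:
        cycles += 1  # the final data access has no fetch to overlap with
    return cycles


if __name__ == "__main__":
    program = [True, False, True, True]
    print(bus_cycles(program, separate_buses=False))  # 7 cycles on one bus
    print(bus_cycles(program, separate_buses=True))   # 5 cycles on two busses
```

Under these assumptions the single-bus machine needs one cycle per fetch plus one per data access, while the two-bus machine hides almost all of the data accesses behind fetches.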

Is one architecture better than another? It is a difficult question to answer without

knowing the actual application. In a simple case when the computer is reading a

thermostat, checking it against a pre-set temperature and deciding if the heat should be

turned on, it doesn’t take many cycles to accomplish, and a monolithic memory may be

just fine. If more performance were required, a faster processor could be used. If

however, many functions were being handled at the same time, like reading the buttons

on a USB Nintendo™ controller, processing 8 buttons as well as the angles of the

left/right joysticks and sending the information back to the console in time to keep

from being sliced in half by the Orc you are battling, it may be better to have a

microcontroller that can do as much as possible at the same time.

The organization or architecture of the computer includes how the various volatile

memory elements (registers) are organized and controlled. Some architectures use

individual, separate registers for everything; see Figure 11. An alternative to having

separate physical registers is the model used by the R8C and PIC18: the registers for

the whole computer are contained in data memory as a set of registers, addressed like

any other memory element and often organized as “files” or “blocks” of memory.

This significantly reduces the complexity of the control unit while maintaining the

flexibility of added functionality. For example, consider two processors from the same

family: the PIC18F8722 and the PIC18F1330. Using the same register file

architecture, it is possible to accommodate the five timers and 12 A/D modules of the

PIC18F8722 using the same control unit that the PIC18F1330 uses to maintain 2 timers and 5 A/D

modules.
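
The register file idea can be sketched as a block of data memory in which some addresses happen to be special function registers. Everything specific here is an assumption for illustration: the class name DataMemory, the register names and the addresses are invented and are not taken from any PIC18 data sheet.

```python
class DataMemory:
    """Data memory in which special function registers are ordinary addresses."""

    def __init__(self, size, sfr_map):
        self.cells = bytearray(size)
        self.sfr_map = dict(sfr_map)  # register name -> address

    def write(self, address, value):
        self.cells[address] = value & 0xFF

    def read(self, address):
        return self.cells[address]

    def write_sfr(self, name, value):
        # Same access path as any other data memory location.
        self.write(self.sfr_map[name], value)

    def read_sfr(self, name):
        return self.read(self.sfr_map[name])


# Two hypothetical family members: same access mechanism, different maps.
SMALL_CHIP = {"TIMER0": 0xF0, "TIMER1": 0xF1}
LARGE_CHIP = {"TIMER0": 0xF0, "TIMER1": 0xF1, "TIMER2": 0xF2, "TIMER3": 0xF3}

if __name__ == "__main__":
    mem = DataMemory(256, LARGE_CHIP)
    mem.write_sfr("TIMER3", 0x2A)
    print(mem.read(0xF3))  # 42: the register is just another memory cell
```

Adding a peripheral to a larger family member then means adding entries to the map, not adding new kinds of access to the control unit.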

Figure 11 PPC 405 Core Programmers model copyright 2006, IBM

The other “fiddly bits”: Input – Output and additional processor functionality.

To round out the inner workings of the data memory, it is important to consider how

our computer actually moves data into and out of the processor chip. In most

architectures, an I/O pin is a simple RAM element, usually organized as a register of

individually accessible bits that is on the data memory bus. To perform an output

operation, the control unit asserts the direction control so the pin will be electrically an

output pin. Figure 12 illustrates one bit of the I/O port for the PIC18. The simple port

picture illustrated in Figure 4 glosses over the real work the control unit does in

orchestrating an I/O operation. This is further complicated when the I/O pin can be an

analogue input pin as well as a digital I/O pin.
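
A minimal software model of a digital I/O port is sketched below. It assumes a direction register in which a 1 bit means output and a 0 bit means input; that convention, the class name Port and its attribute names are chosen for this illustration only, and real parts differ (check the data sheet of the chip in question).

```python
class Port:
    """Toy model of an 8-bit digital I/O port with a direction register."""

    def __init__(self):
        self.direction = 0x00  # assumed convention: 1 = output, 0 = input
        self.latch = 0x00      # values the program has written
        self.external = 0x00   # levels driven onto the pins from outside

    def set_output(self, bit):
        self.direction |= 1 << bit

    def write(self, bit, level):
        if level:
            self.latch |= 1 << bit
        else:
            self.latch &= ~(1 << bit) & 0xFF

    def pins(self):
        """Level seen on each pin: latch for outputs, outside world for inputs."""
        outputs = self.latch & self.direction
        inputs = self.external & ~self.direction & 0xFF
        return outputs | inputs


if __name__ == "__main__":
    port = Port()
    port.set_output(0)      # pin 0 drives, pins 1 to 7 listen
    port.write(0, 1)
    port.external = 0b10    # something outside pulls pin 1 high
    print(bin(port.pins())) # 0b11
```

Writing to a latch bit whose pin is configured as an input changes the stored value but not the pin, which is one reason the direction must be set before an output operation.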

Figure 12 Generic PIC18 I/O pin

Figure 13 Digital & Analogue I/O pin

In addition to input and output functionality, most microcontrollers these days have

some sort of built in timer capability. These timer modules operate independently once

set and started, and provide a much-needed function to count events or time to be used

in an embedded application. Again, there is a trade off between chip real-estate and

software overhead. Any timer function can be implemented in software using loops and

tests, at the cost of having to execute in linear time with the program. No matter how

fast a program is, it can only be doing one thing at a time. Implementing a timer in

hardware, however, relieves the program of the burden of maintaining a count.

Real world timers do more than just count; here the ARM-7 has two 32-bit timers[38],

with selectable input count frequencies (multiples of the master clock) as well as input

from I/O pins (to count events happening on a pin). It is a simple matter to preload a

16-bit timer[39] and count from 0x0000 to 0xffff. If the master 32 MHz clock were used as

the count input, the timer would roll over or overflow[40] every 2 or so milliseconds

(65,536 counts at 32 MHz). If the fc32 divider is used, it would overflow roughly every 65.5 milliseconds.
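
The overflow arithmetic can be written out as a small helper. This is a sketch under the assumption that the timer counts from zero through its full range at the (optionally divided) clock rate; the function name overflow_period_ms and its parameters are made up for this example.

```python
def overflow_period_ms(timer_bits, clock_hz, divider=1):
    """Time in milliseconds for a free-running timer to count through its range."""
    counts = 2 ** timer_bits             # e.g. 65536 for a 16-bit timer
    seconds = counts * divider / clock_hz
    return seconds * 1000.0


if __name__ == "__main__":
    # 16-bit timer clocked directly from a 32 MHz master clock.
    print(round(overflow_period_ms(16, 32_000_000), 3))  # 2.048
```

Passing divider=32 models a clock that has been divided by 32 before it reaches the timer, which stretches the overflow period by the same factor.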

Timers, I/O ports, A/D modules, indeed most if not all computer special function

modules need registers to hold configuration parameters (in the case of the timer it may

be the flag bit that controls if the timer restarts after an overflow). As we saw in the

PIC18, these registers are part of the computer data memory area. In the case of the

PPC405, they are individual registers, peppered all over the die. Figure 15 illustrates

what is arguably the quintessence of microcontroller technology to date, the Philips

LPC2114.

Using what we have learned, let’s examine this “animal” closely. The key to evaluating

a microcontroller is in answering fundamental structure (anatomy) questions first, and

then if the structure is appropriate to the task, then take a closer look at the

functionality (physiology) of the beast.

First the “bones”; how are the Program Counter, Control Unit and ALU arranged? How

does the chip get/put data to the outside world? What other goodies are available

(timers, USARTS, A/D…)?

At first blush, the block diagram of the LPC2114 seems to be missing many essential

elements. They are there; it just takes a little digging. This chip represents a new trend

in embedded processors: a common core of functionality, surrounded by the I/O

that makes that particular processor special. Here the core is illustrated in the block

named “ARM7TDMI”. The actual “guts” of the core are illustrated in Figure 14. The

Program Counter is implemented by two elements, the “Address Incrementer” and the

“Address Register”, which makes sense considering how the program counter normally

functions. The other basic processor elements are present: the ALU, the Control Unit,

and a data register. Some interesting additions are a 32 x 8 hardware multiplier, and a

barrel shifter interfaced with the ALU. These could help floating point operations by

speeding up in hardware common math tasks. (Remember the real-estate –

performance trade-off discussion? Clearly, this chip is built with the building blocks of

speed.) Another interesting element is the 31 x 32 bit register bank, a good place to

hold intermediate results, or condition flags; at this point in the investigation it is not

clear, but should not come as a surprise when looking at the “physiology” of this beast

to discover there are such register locations in the bank.

[38] Philips LPC2124 Datasheet.

[39] Timers are characterized by how many bits are used to “count” with.

[40] A timer typically starts at some preloaded value and counts up, one count at a time, until the maximum

value is reached. Then, depending on the mode used, an “overflow” flag is set, the initial value reloaded

and the cycle started all over again.

This cursory look at the ARM-7 core should convince you that it has the infrastructure

to be a hot, 32 bit processor. Now, let’s have a look at the I/O.

Figure 14 ARM-7 Core

Looking at Figure 15, we can now see where the program and data memory live: they form a

monolithic block, with a single address and data bus. The I/O, however, has its own

address/data bus, an interesting combination that should make the I/O modules

somewhat independent of the ARM-7 core.

In this initial look, it is clear this chip has been designed to accommodate almost any

I/O scenario you would encounter with an embedded processor.

Figure 15 ARM-7 (Philips LPC2114) Block Diagram

Summary.

In this paper we have explored how any embedded processor is made up of the same

elements, and each individual element has basically the same behaviour. These

elements are mixed and matched to create the animal known as an embedded

processor, and along with the software applications in the tool chain, make up a

development environment the designer can use to solve real world problems using

embedded processors.

The heart of the embedded processor is the concept of an “infinite state machine”, that

is, dedicated hardware that can reconfigure its functionality by following a set of

instructions. This innovation has enabled designers to move away from directly

manipulating bits in hardware to looking at problems from a modular perspective.

As important as the hardware advances is the tool chain used to design the control

software, the ultimate arbiter of any embedded system.

Embedded Processor questions to test for understanding:

1. What is the difference between a Finite State Machine and an embedded

processor doing the same task?

2. How do you physically get the program into the embedded processor?

3. What is the first thing the embedded processor does when the power is turned

on?

4. What is the difference between an 8-bit processor and a 32-bit processor?

5. What are the steps needed to translate the following text into machine code?

int a = 5;

int b = 3;

if(a > b)

a = a+b;

else

a = b;

Answers

1. The FSM has one task it is designed to perform; the embedded processor can be

programmed to do an infinite number of tasks.

2. The embedded processor is connected to a chip programmer, either in the

circuit (so called in circuit programming) or as individual chips. The machine

code file is used by the programmer to change the instruction memory on the

chip.

3. At the beginning of time, all embedded processors are held in a reset state,

allowing all the internal circuitry a chance to start in a known state.

4. Generally, the difference is in the internal representation of data. In an 8-bit

machine, data is moved one byte at a time. In a 32-bit machine, data is moved

(in the same time frame) 4 bytes at a time.

5. The text is input to a compiler that translates the human-readable code (in this

case “C”). The output of the compiler is assembly code, the processor-specific

human readable version. Finally, that assembly code is fed into an assembler –

linker tool that produces machine readable code, specific to that individual

processor.

i

Fundamentals of FPGAs: http://www.techonline.com/learning/course/210605004


- Expert System BookDocument310 paginiExpert System BookRadha GuhaÎncă nu există evaluări

- Sms Based Digital Notice BoardDocument107 paginiSms Based Digital Notice BoardSaara KhanÎncă nu există evaluări

- Fusion Technology: White PaperDocument11 paginiFusion Technology: White PapersamdakÎncă nu există evaluări

- 8-Bit ComputerDocument55 pagini8-Bit Computerxowodi9273Încă nu există evaluări

- What Is A Microcontroller?: MicrocontrollersDocument16 paginiWhat Is A Microcontroller?: MicrocontrollersJeanÎncă nu există evaluări

- PIC MicrocontrollersDocument188 paginiPIC MicrocontrollersMuhammad Auwal HajaraÎncă nu există evaluări

- Computer Organization Computer ArchitectureDocument23 paginiComputer Organization Computer Architectureyashmit maviÎncă nu există evaluări

- Understanding Microcontrollers To Choose HardwareDocument8 paginiUnderstanding Microcontrollers To Choose HardwareMoog AlexÎncă nu există evaluări

- ES QB With AnswersDocument41 paginiES QB With AnswersvamsiÎncă nu există evaluări

- Advanced Electronic Protection System To Prevent Exam Paper LeakagesDocument135 paginiAdvanced Electronic Protection System To Prevent Exam Paper LeakagesSyed Viquar AhmedÎncă nu există evaluări

- Course Title Microprocessor SystemDocument20 paginiCourse Title Microprocessor SystemElmustafa Sayed Ali AhmedÎncă nu există evaluări

- Computer Architecture: Dept. of Computer Science (UOG) University of GujratDocument20 paginiComputer Architecture: Dept. of Computer Science (UOG) University of GujratRabia ChaudharyÎncă nu există evaluări

- ZRNGDocument12 paginiZRNGzrng zrngÎncă nu există evaluări

- Department of Information and Communication Technology Course: ICT-3205: Computer Architecture and MicroprocessorDocument11 paginiDepartment of Information and Communication Technology Course: ICT-3205: Computer Architecture and MicroprocessorBashar AhmedÎncă nu există evaluări

- The Brain Behind The Computing: TechnologyDocument3 paginiThe Brain Behind The Computing: TechnologykarmaÎncă nu există evaluări

- Computer-Architecture 1 PDFDocument0 paginiComputer-Architecture 1 PDFwww.bhawesh.com.npÎncă nu există evaluări

- The Internet of Things (Iot) : International University of Sarajevo Fens Electrical and ElectronicsDocument8 paginiThe Internet of Things (Iot) : International University of Sarajevo Fens Electrical and ElectronicsSalahuddin HusseinÎncă nu există evaluări

- Application of FPGA to Real‐Time Machine Learning: Hardware Reservoir Computers and Software Image ProcessingDe la EverandApplication of FPGA to Real‐Time Machine Learning: Hardware Reservoir Computers and Software Image ProcessingÎncă nu există evaluări
