What Is Econometrics?: (Ref: Wooldridge, Chapter 1)

QUAN201 Introductory Econometrics Dean Hyslop

Lecture 1 (Ref: Wooldridge, Chapter 1)

1. What is Econometrics?

It is the statistical or empirical analysis of economic relationships.

This may involve:

Using economic theory to guide statistical modelling

Using statistical methods to:

1. Estimate economic relationships
   E.g. the effect of education on wages

2. Test economic theories
   E.g. does raising the minimum wage reduce employment?

3. Evaluate / implement government / business policy
   E.g. how effective are job training programmes at improving low-skilled employment & wages?

4. Forecast / predict economic variables
   E.g. GDP growth, inflation, interest rates, etc.


2. How does Econometrics differ from (Classical) Statistics?

Classical statistics generally deals with experimental data collected in laboratory environments in the natural sciences:

So the effects of control/treatment variables on outcome/response variables can be examined directly by changing the level of the control variable(s), holding all else equal, and measuring the effect on the outcome variable(s).

In contrast, most economic (and other social science) data are non-experimental in nature:

So if two observations of a control variable differ, there is often no reason to believe that all else is the same between these observations.

E.g. can we assume that 2 randomly selected people with different education levels are the same in other respects?

Therefore, many non-experimental issues need to be addressed in econometrics in order to confidently infer causality between a control variable and an outcome variable.

This makes econometrics both difficult and interesting!
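The education example can be made concrete with a small simulation. This is only a sketch under invented assumptions: the variable `ability`, the sample size, and every coefficient below are hypothetical and are not taken from these notes or from real data. It fits a line of log wages on education (using the sample covariance divided by the sample variance of education as the slope) and compares that slope with the effect built into the simulation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000

# Hypothetical data-generating process (all numbers are made up).
ability = rng.normal(size=n)                    # unobserved by the analyst
educ = 12 + 2 * ability + rng.normal(size=n)    # more able people study longer
true_effect = 0.08                              # effect of one extra year of education
log_wage = (1.0 + true_effect * educ + 0.10 * ability
            + rng.normal(scale=0.1, size=n))

# Slope of the line fitted to (educ, log_wage), ignoring ability.
naive_slope = np.cov(educ, log_wage)[0, 1] / np.var(educ, ddof=1)

print(f"effect built into the simulation: {true_effect:.3f}")
print(f"slope from comparing people:      {naive_slope:.3f}")
```

Under these assumptions the fitted slope comes out close to 0.12 rather than 0.08, because people with more education also differ in ability, so "all else" is not equal.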


3. Stages of Econometric Analysis

1. Careful formulation of the question of interest

E.g. what is the effect of an additional year of education on wages?

2. Either use economic theory or an informal/intuitive approach to develop an economic framework for estimation & testing

E.g. Mincer's (1962) Human Capital Theory

3. Translate the economic model into an econometric model to be estimated statistically. This requires:

the functional form of the relationship(s) to be estimated between the observed variables of interest;

assumptions on the effects of unobserved factors

E.g. a linear relationship between log(wages) and years of education, and assume other factors that affect wages are uncorrelated with education

4. Formulate hypotheses of interest in terms of the (unknown) model parameters.

E.g. $H_0$: returns to education = 0 vs $H_1$: not so

Empirical analysis requires data!

5. Given data, proceed to estimation, hypothesis testing and general model-specification evaluation

Note: Generally econometrics begins at stage 3, the econometric specification of the model.
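As a compact restatement of the running education example in stages 3 and 4, the econometric model and hypotheses might be written as below. The symbols $a$, $b$, $u_i$, $\text{wage}_i$ and $\text{educ}_i$ are notation assumed here for illustration; the notes state the example only in words, and "not so" is read here as a two-sided alternative.

```latex
\log(\text{wage}_i) = a + b\,\text{educ}_i + u_i,
\qquad \operatorname{Cov}(\text{educ}_i, u_i) = 0
\\
H_0 : b = 0 \quad \text{vs} \quad H_1 : b \neq 0
```

Here $b$ plays the role of the return to education and $u_i$ collects the other, unobserved factors that affect wages.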


Lecture 2 (Ref: Wooldridge, Chapter 2)

The starting point for any statistical analysis should be a

description of the data to:

i. understand what the data are supposed to measure

ii. check whether there are values that look like they were

mis-measured e.g. outliers

This can be done using:

i. simple graphical methods e.g. histograms or

scatterplots, etc; and/or

ii. common descriptive statistics e.g. mean, median,

standard deviation, etc.
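A minimal sketch of such a descriptive check is shown below. The wage figures are invented for illustration, and the rule used to flag suspect values (more than 5 median absolute deviations from the median) is just one common rule of thumb assumed here, not something prescribed by these notes.

```python
import numpy as np

# Hypothetical hourly wages; the last value is a deliberate data-entry error.
wages = np.array([12.5, 14.0, 15.5, 13.0, 16.0, 14.5, 150.0])

mean = wages.mean()
median = np.median(wages)
std = wages.std(ddof=1)  # sample standard deviation

# Flag values far from the median, scaled by the median absolute deviation.
mad = np.median(np.abs(wages - median))
suspect = wages[np.abs(wages - median) > 5 * mad]

print(f"mean={mean:.2f} median={median:.2f} std={std:.2f}")
print("suspect values:", suspect)
```

The single extreme value pulls the mean well above the median, which is the kind of discrepancy a histogram or scatterplot would also reveal.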

After such descriptive analysis, we turn to regression, which is the most important building block in econometrics.

1. What is Regression?

possible answers:

i. Fitting a line through data e.g. scatterplot of two

variables X and Y.

ii. Estimating the relationship between two or more

variables e.g. how are variables X and Y related?

iii. Ultimately, used as a basis for causal interpretation in

econometrics e.g. to answer the question: what is the

effect of a change in variable-X on outcome-Y?


2. Regression as line fitting

Consider the following scatterplot of data for two

variables X and Y :

Our interest is to fit a (simple) linear regression, represented by

$$Y_i = a + bX_i + e_i,$$

to the $(X_i, Y_i)$ scatterplot of data, where:

$Y_i$ is called the outcome or dependent variable,

$X_i$ is the explanatory or independent variable,

$e_i$ is the residual (or error),

$(a, b)$ are regression parameters or coefficients: $a$ is called the intercept, and $b$ is called the slope, and

the subscript $i$ denotes observation $i$.


Three classic examples:

1. Galton (1886, "Regression towards Mediocrity"):

$X_i$ = father $i$'s height (in metres),

$Y_i$ = son $i$'s height (in metres)

What is the intergenerational relationship within families, as measured by fathers' and sons' heights?

2. Returns to education (e.g. Mincer, 1962):

$X_i$ = person $i$'s schooling level (years of education),

$Y_i$ = person $i$'s wages (dollars per hour worked)

What is the average effect of an additional year of education on a worker's wage?

3. Phillips curve (Phillips, 1958, Economica):

$X_t$ = year-$t$ unemployment rate (percent unemployed),

$Y_t$ = year-$t$ wage inflation rate (percent)

What is the relationship between unemployment and wage inflation?


Consider three possible lines fit to these data, labelled A, B,

and C as follows.

First, consider line-A

Let $\hat{Y}_i = a + bX_i$ be the fitted (or predicted) value of Y, given the value $X_i$, i.e. the point on the fitted line corresponding to $X_i$.

Then the residual is the difference between the actual and predicted Y-values: i.e.

$$e_i = Y_i - \hat{Y}_i = Y_i - (a + bX_i).$$

Intuitively, line-A doesn't fit the data, i.e. it doesn't go through the scatterplot!

More formally, the residuals ($e_i$) are all negative, so the average residual is negative:

$$\bar{e} = \frac{1}{N}\sum_{i=1}^{N} e_i < 0.$$

This suggests:

Property 1: one desirable property of a regression line fit is that the average residual is 0: i.e.

$$\bar{e} = \frac{1}{N}\sum_{i=1}^{N} e_i = 0.$$
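The residual calculation and the check of Property 1 can be sketched in a few lines. The data points and the candidate line below are invented for illustration (they are not the data behind the scatterplot in these notes); the line is chosen, in the spirit of line-A, to lie above every point.

```python
import numpy as np

# Hypothetical (X, Y) data, invented for illustration.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.0])


def residuals(a, b, x, y):
    """Return e_i = Y_i - (a + b * X_i) for a candidate line."""
    return y - (a + b * x)


# A candidate line that lies above every data point.
e_A = residuals(a=5.0, b=2.0, x=x, y=y)

print("residuals:", e_A)
print("average residual:", e_A.mean())
```

Every residual is negative, so their average is negative too and the line fails Property 1.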


Next, consider line-B

Line-B satisfies the zero average residual condition but still doesn't look like a good fit, because there are mostly negative residuals for low Xs and positive residuals for high Xs.

More formally, the problem is that the residuals are correlated with the $X_i$'s, whereas their covariance should be zero:

$$\operatorname{cov}(e_i, X_i) = \frac{1}{N}\sum_{i=1}^{N}(e_i - \bar{e})(X_i - \bar{X}) = 0.$$

This suggests:

Property 2: a second desirable property of a regression line fit is that the residuals are uncorrelated with the Xs.

Note: if $\bar{e} = 0$, then this implies:

$$\operatorname{cov}(e_i, X_i) = \frac{1}{N}\sum_{i=1}^{N} e_i X_i = 0.$$
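A line can pass Property 1 and still fail Property 2. The sketch below uses invented data and a flat line through the average of Y as a stand-in for line-B; both are assumptions made for illustration, not the line drawn in these notes.

```python
import numpy as np

# Hypothetical (X, Y) data, invented for illustration.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.0])

# A flat line at the average of Y: zero average residual by construction.
a, b = y.mean(), 0.0
e_B = y - (a + b * x)

mean_resid = e_B.mean()
# Sample covariance between residuals and X (divisor N, as in the text).
cov_eX = np.mean((e_B - e_B.mean()) * (x - x.mean()))

print("residuals:", e_B)
print("average residual:", mean_resid)
print("cov(e, X):", cov_eX)
```

The residuals average to zero but run from negative at low X to positive at high X, so their covariance with X is clearly positive.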


Finally, consider line-C

Line-C looks like a good fitting line, and satisfies both properties 1 and 2.

This very intuitive sense of a good fitting regression line is based on a "method of moments" approach.

It turns out that this approach leads to (essentially) the most commonly used estimators in regression analysis. These are generally referred to as the Ordinary Least Squares (OLS) estimators; the OLS name comes from an alternative approach to the one intuited here.

But let's see what this method of moments approach implies about the regression coefficient estimates.


3. Summary / Implications

This very intuitive discussion of fitting a good line to a scatterplot relied on three aspects:

1. The assumed functional form of the relationship between Y and X, i.e. that it is linear;

2. The resulting residuals should have zero average; and

3. The residuals should be uncorrelated with the $X_i$'s.

To estimate the coefficients (a & b) of the good-fitting line, we use these three points.

Property 1: Zero average residual

$$\bar{e} = \frac{1}{N}\sum_{i=1}^{N}(Y_i - \hat{Y}_i) = 0 \;\Rightarrow\; \bar{Y} = \bar{\hat{Y}},$$

i.e. avg actual-Y = avg predicted-Y.

And, using the linear functional form assumption,

$$\frac{1}{N}\sum_{i=1}^{N}\bigl(Y_i - (a + bX_i)\bigr) = 0,$$

which implies

$$\bar{Y} = a + b\bar{X},$$

and solving for $a$ gives:

$$a = \bar{Y} - b\bar{X},$$

i.e. the intercept = avg-Y − b*avg-X.
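The intercept formula can be checked numerically: whatever slope is chosen, setting the intercept to avg-Y minus b times avg-X forces the average residual to zero. The data and the trial slopes below are invented for illustration.

```python
import numpy as np

# Hypothetical (X, Y) data, invented for illustration.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.0])

mean_residuals = []
for b in (0.0, 1.0, 3.0):            # arbitrary trial slopes
    a = y.mean() - b * x.mean()      # intercept implied by Property 1
    e = y - (a + b * x)
    mean_residuals.append(e.mean())
    print(f"b={b:.1f}  a={a:.2f}  average residual={e.mean():.2e}")
```

Since every trial slope passes, Property 1 alone cannot pin down b; a second condition is needed to determine the slope.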


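Property 2: zero correlation between residuals and Xs

$$\operatorname{cov}(e_i, X_i) = \frac{1}{N-1}\sum_{i=1}^{N} e_i X_i = 0$$

(The divisor, $N$ or $N-1$, is immaterial here because the expression is set to zero.)

Substituting $e_i = Y_i - a - bX_i$ with $a = \bar{Y} - b\bar{X}$:

$$\sum_{i=1}^{N}\bigl(Y_i - \bar{Y} + b\bar{X} - bX_i\bigr)X_i = 0$$

$$\sum_{i=1}^{N}(Y_i - \bar{Y})X_i - b\sum_{i=1}^{N}(X_i - \bar{X})X_i = 0$$

Solving for $b$ gives

$$b = \frac{\sum_{i=1}^{N}(Y_i - \bar{Y})X_i}{\sum_{i=1}^{N}(X_i - \bar{X})X_i}.$$

Since $\sum_{i=1}^{N}(Y_i - \bar{Y})\bar{X} = 0 = \sum_{i=1}^{N}(X_i - \bar{X})\bar{X}$, we can rewrite this to solve for $b$,

$$b = \frac{\dfrac{1}{N-1}\sum_{i=1}^{N}(Y_i - \bar{Y})(X_i - \bar{X})}{\dfrac{1}{N-1}\sum_{i=1}^{N}(X_i - \bar{X})(X_i - \bar{X})},$$

which is simply

$$b = \frac{\operatorname{Cov}(X_i, Y_i)}{\operatorname{Var}(X_i)}$$

i.e. the slope parameter is the covariance between $X_i$ and $Y_i$ divided (i.e. normalised) by the variance of $X_i$.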
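The two formulas can be put together in a short sketch. The data are invented for illustration, and NumPy's `np.polyfit` (a least-squares line fit) is used only as an independent cross-check; it is not part of these notes.

```python
import numpy as np

# Hypothetical (X, Y) data, invented for illustration.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.0])

x_bar, y_bar = x.mean(), y.mean()

# Slope: covariance of X and Y divided by the variance of X.
b = np.sum((y - y_bar) * (x - x_bar)) / np.sum((x - x_bar) ** 2)
# Intercept: avg-Y minus b times avg-X.
a = y_bar - b * x_bar

e = y - (a + b * x)

# The same slope via NumPy's sample covariance and variance (divisor N-1).
b_cov = np.cov(x, y)[0, 1] / np.var(x, ddof=1)

# Cross-check against a least-squares fit of a degree-1 polynomial.
slope_np, intercept_np = np.polyfit(x, y, 1)

print(f"a={a:.4f}  b={b:.4f}")
print(f"average residual={e.mean():.2e}  sum of e*X={np.sum(e * x):.2e}")
print(f"polyfit: intercept={intercept_np:.4f}  slope={slope_np:.4f}")
```

For these numbers the fitted line satisfies both properties up to rounding error, and the least-squares fit returns the same coefficients.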

S-ar putea să vă placă și

- Wells Fargo Everyday CheckingDocument6 paginiWells Fargo Everyday CheckingDavid Dali Rojas Huamanchau100% (1)

- BLP Assignment 2014Document3 paginiBLP Assignment 2014universedrillÎncă nu există evaluări

- Statistical Inference in Financial and Insurance Mathematics with RDe la EverandStatistical Inference in Financial and Insurance Mathematics with RÎncă nu există evaluări

- Money, Banking and Finance Je Rey Rogers Hummel Warren C. GibsonDocument291 paginiMoney, Banking and Finance Je Rey Rogers Hummel Warren C. GibsonrickloriÎncă nu există evaluări

- Simple Regression Model: Erbil Technology InstituteDocument9 paginiSimple Regression Model: Erbil Technology Institutemhamadhawlery16Încă nu există evaluări

- Econometrics Chapter 1& 2Document35 paginiEconometrics Chapter 1& 2Dagi AdanewÎncă nu există evaluări

- Introductory Econometrics: Wang WeiqiangDocument57 paginiIntroductory Econometrics: Wang WeiqiangChadi SakhyÎncă nu există evaluări

- Econometrics Hw-1Document31 paginiEconometrics Hw-1saimaÎncă nu există evaluări

- Chapter Two: Bivariate Regression ModeDocument54 paginiChapter Two: Bivariate Regression ModenahomÎncă nu există evaluări

- UC Berkeley Econ 140 Section 10Document8 paginiUC Berkeley Econ 140 Section 10AkhilÎncă nu există evaluări

- Econometrics Assig 1Document13 paginiEconometrics Assig 1ahmed0% (1)

- Tema 0 EconometricsDocument6 paginiTema 0 EconometricsJacobo Sebastia MusolesÎncă nu există evaluări

- Lecture 1Document37 paginiLecture 1Eda UstaogluÎncă nu există evaluări

- Chen - Pearl Regression and Causation A Critical Examination of Six Econometrics Textbooks (2013)Document25 paginiChen - Pearl Regression and Causation A Critical Examination of Six Econometrics Textbooks (2013)Juan Manuel Cisneros GarcíaÎncă nu există evaluări

- Regression Analysis (Simple)Document8 paginiRegression Analysis (Simple)MUHAMMAD HASAN NAGRA100% (1)

- Regression Analysis With Cross-Sectional DataDocument0 paginiRegression Analysis With Cross-Sectional DataGabriel SimÎncă nu există evaluări

- Economics 717 Fall 2019 Lecture - HeckmanDocument16 paginiEconomics 717 Fall 2019 Lecture - HeckmanHarrison NgÎncă nu există evaluări

- Unit 1: Introduction To Econometric Analysis OUTLINE: A. Economic Questions and Data: The Role of EconometricsDocument23 paginiUnit 1: Introduction To Econometric Analysis OUTLINE: A. Economic Questions and Data: The Role of EconometricsMike WazowskiÎncă nu există evaluări

- ArunRangrejDocument5 paginiArunRangrejArun RangrejÎncă nu există evaluări

- Econometric SDocument26 paginiEconometric SMunnah BhaiÎncă nu există evaluări

- Eco Theory PDFDocument42 paginiEco Theory PDFRamiz AhmedÎncă nu există evaluări

- Chapter - Two - Simple Linear Regression - Final EditedDocument28 paginiChapter - Two - Simple Linear Regression - Final Editedsuleymantesfaye10Încă nu există evaluări

- Econometrics - SlidesDocument264 paginiEconometrics - SlidesSamuel ObengÎncă nu există evaluări

- Econometric Model With Cross-Sectional, Time Series, and Panel DataDocument4 paginiEconometric Model With Cross-Sectional, Time Series, and Panel DataDian KusumaÎncă nu există evaluări

- 2013 Trends in Global Employee Engagement ReportDocument8 pagini2013 Trends in Global Employee Engagement ReportAnupam BhattacharyaÎncă nu există evaluări

- 1 205Document205 pagini1 205bicameloÎncă nu există evaluări

- Bus 173 - Lecture 5Document38 paginiBus 173 - Lecture 5Sabab MunifÎncă nu există evaluări

- Studenmund Top1.107Document10 paginiStudenmund Top1.107Ramsha SohailÎncă nu există evaluări

- Linear Regression ModelDocument3 paginiLinear Regression ModelmarkkkkkkkheeessÎncă nu există evaluări

- Econometrics Lecture Notes BookletDocument81 paginiEconometrics Lecture Notes BookletilmaÎncă nu există evaluări

- Ordinary Least SquaresDocument21 paginiOrdinary Least SquaresRahulsinghooooÎncă nu există evaluări

- Econometrics Whole Course PDFDocument50 paginiEconometrics Whole Course PDFshubhÎncă nu există evaluări

- LecturesDocument766 paginiLecturesHe HÎncă nu există evaluări

- Econometrics PperDocument26 paginiEconometrics PperMunnah BhaiÎncă nu există evaluări

- Assignment 1 StatisticsDocument6 paginiAssignment 1 Statisticsjaspreet46Încă nu există evaluări

- Iskak, Stats 2Document5 paginiIskak, Stats 2Iskak MohaiminÎncă nu există evaluări

- Quantile RegressionDocument122 paginiQuantile RegressionXurumela AtomicaÎncă nu există evaluări

- Jep 15 4 143Document125 paginiJep 15 4 143Sangat BaikÎncă nu există evaluări

- Chapter 1 AcctDocument8 paginiChapter 1 Accthaile ethioÎncă nu există evaluări

- Chapter 2 AcctDocument18 paginiChapter 2 Accthaile ethioÎncă nu există evaluări

- Lecture 3Document31 paginiLecture 3Shahmir BukhariÎncă nu există evaluări

- Lesson1 - Simple Linier RegressionDocument40 paginiLesson1 - Simple Linier Regressioniin_sajaÎncă nu există evaluări

- MIT Graduate Labor Economics 14.662 Spring 2015 Note 1: Wage Density DecompositionsDocument39 paginiMIT Graduate Labor Economics 14.662 Spring 2015 Note 1: Wage Density Decompositionsarati.kundu.1943Încă nu există evaluări

- ECON3049 Lecture Notes 1Document32 paginiECON3049 Lecture Notes 1Kimona PrescottÎncă nu există evaluări

- Econometrics Notes PDFDocument8 paginiEconometrics Notes PDFumamaheswariÎncă nu există evaluări

- University of Caloocan City: Managerial Economics Eco 3Document34 paginiUniversity of Caloocan City: Managerial Economics Eco 3Tutorial VlogÎncă nu există evaluări

- Basic Econometrics 1 (Password - BE)Document30 paginiBasic Econometrics 1 (Password - BE)SULEMAN HUSSEINIÎncă nu există evaluări

- 03 ES Regression CorrelationDocument14 pagini03 ES Regression CorrelationMuhammad AbdullahÎncă nu există evaluări

- RegressionDocument14 paginiRegressionAndleeb RazzaqÎncă nu există evaluări

- ThesisDocument8 paginiThesisdereckg_2Încă nu există evaluări

- Handout 05 Regression and Correlation PDFDocument17 paginiHandout 05 Regression and Correlation PDFmuhammad aliÎncă nu există evaluări

- Nonparametric Analysis of Factorial Designs With Random Missingness: Bivariate DataDocument38 paginiNonparametric Analysis of Factorial Designs With Random Missingness: Bivariate DataHéctor YendisÎncă nu există evaluări

- Regression and CorrelationDocument13 paginiRegression and CorrelationzÎncă nu există evaluări

- RESEARCH METHODS LESSON 18 - Multiple RegressionDocument6 paginiRESEARCH METHODS LESSON 18 - Multiple RegressionAlthon JayÎncă nu există evaluări

- Assignment Linear RegressionDocument10 paginiAssignment Linear RegressionBenita NasncyÎncă nu există evaluări

- Stat 473-573 NotesDocument139 paginiStat 473-573 NotesArkadiusz Michael BarÎncă nu există evaluări

- Unit Regression Analysis: ObjectivesDocument18 paginiUnit Regression Analysis: ObjectivesSelvanceÎncă nu există evaluări

- (Bruderl) Applied Regression Analysis Using StataDocument73 pagini(Bruderl) Applied Regression Analysis Using StataSanjiv DesaiÎncă nu există evaluări

- Statistic SimpleLinearRegressionDocument7 paginiStatistic SimpleLinearRegressionmfah00Încă nu există evaluări

- Quantile Regression: Roger Koenker and Kevin F. HallockDocument14 paginiQuantile Regression: Roger Koenker and Kevin F. HallockveekenÎncă nu există evaluări

- Reading 11: Correlation and Regression: S S Y X Cov RDocument24 paginiReading 11: Correlation and Regression: S S Y X Cov Rd ddÎncă nu există evaluări

- Digital Signal Processing (DSP) with Python ProgrammingDe la EverandDigital Signal Processing (DSP) with Python ProgrammingÎncă nu există evaluări

- U 24Document8 paginiU 24Aron Santa CruzÎncă nu există evaluări

- Ene 12072501eDocument16 paginiEne 12072501euniversedrillÎncă nu există evaluări

- U 24Document8 paginiU 24Aron Santa CruzÎncă nu există evaluări

- ThesisDocument148 paginiThesisuniversedrillÎncă nu există evaluări

- Cannibalization and Preemptive Entry in Heterogeneous MarketsDocument38 paginiCannibalization and Preemptive Entry in Heterogeneous MarketsuniversedrillÎncă nu există evaluări

- UseR SC 10 B Part2 PDFDocument80 paginiUseR SC 10 B Part2 PDFuniversedrillÎncă nu există evaluări

- ABCDocument17 paginiABCuniversedrillÎncă nu există evaluări

- Lancaster 2Document27 paginiLancaster 2universedrillÎncă nu există evaluări

- Midterm PDFDocument9 paginiMidterm PDFuniversedrillÎncă nu există evaluări

- 04 Jackknife PDFDocument22 pagini04 Jackknife PDFuniversedrillÎncă nu există evaluări

- Lack of Competition Stifles European AuctionsDocument22 paginiLack of Competition Stifles European AuctionsuniversedrillÎncă nu există evaluări

- SEF - Honours - Symposium - PROGRAMME - 15 & 16 Apr 2013 PDFDocument4 paginiSEF - Honours - Symposium - PROGRAMME - 15 & 16 Apr 2013 PDFuniversedrillÎncă nu există evaluări

- Company Profile ManmulDocument3 paginiCompany Profile ManmulAston Rahul Pinto50% (2)

- Liebherr Annual-Report 2017 en Klein PDFDocument82 paginiLiebherr Annual-Report 2017 en Klein PDFPradeep AdsareÎncă nu există evaluări

- Job Order Costing Seatwork - 2Document2 paginiJob Order Costing Seatwork - 2Akira Marantal ValdezÎncă nu există evaluări

- EcoTourism Unit 8Document20 paginiEcoTourism Unit 8Mark Angelo PanisÎncă nu există evaluări

- Holly Tree 2015 990taxDocument21 paginiHolly Tree 2015 990taxstan rawlÎncă nu există evaluări

- Economic Impact of Beef Ban - FinalDocument18 paginiEconomic Impact of Beef Ban - Finaldynamo vjÎncă nu există evaluări

- H1 / H2 Model Economics Essay (SAMPLE MICROECONOMICS QUESTION)Document2 paginiH1 / H2 Model Economics Essay (SAMPLE MICROECONOMICS QUESTION)ohyeajcrocksÎncă nu există evaluări

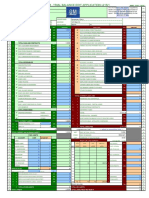

- GM OEM Financials Dgi9ja-2Document1 paginăGM OEM Financials Dgi9ja-2Dananjaya GokhaleÎncă nu există evaluări

- PHM PCH EnglishDocument9 paginiPHM PCH Englishlalit823187Încă nu există evaluări

- Indraneel N M.com SBKDocument3 paginiIndraneel N M.com SBKJared MartinÎncă nu există evaluări

- Price and Output Determination Under Monopoly MarketDocument16 paginiPrice and Output Determination Under Monopoly MarketManak Ram SingariyaÎncă nu există evaluări

- Indian Real Estate SectorDocument8 paginiIndian Real Estate SectorSumit VrmaÎncă nu există evaluări

- Gender Wise Classification of RespondentsDocument34 paginiGender Wise Classification of Respondentspavith ranÎncă nu există evaluări

- The Most Important Events in The History of The United StatesDocument20 paginiThe Most Important Events in The History of The United StatesJuan Carlos Ponce ReyesÎncă nu există evaluări

- Tax Reviewer For MidtermDocument4 paginiTax Reviewer For Midtermjury jasonÎncă nu există evaluări

- Lab Project On Procedure For Fixing and Revising Minimum Wages Our LawDocument13 paginiLab Project On Procedure For Fixing and Revising Minimum Wages Our LawSnigdha SharmaÎncă nu există evaluări

- Business Economics - Neil Harris - Summary Chapter 3Document2 paginiBusiness Economics - Neil Harris - Summary Chapter 3Nabila HuwaidaÎncă nu există evaluări

- Chapter 6 MoodleDocument36 paginiChapter 6 MoodleMichael TheodricÎncă nu există evaluări

- IpolDocument3 paginiIpolamelÎncă nu există evaluări

- Paper 3 of JAIIB Is Legal and Regulatory Aspects of BankingDocument3 paginiPaper 3 of JAIIB Is Legal and Regulatory Aspects of BankingDhiraj PatreÎncă nu există evaluări

- Account Statement: Date Value Date Description Cheque Deposit Withdrawal BalanceDocument2 paginiAccount Statement: Date Value Date Description Cheque Deposit Withdrawal BalancesadhanaÎncă nu există evaluări

- Yong Le: Beijing Huaxia Yongleadhesive Tape Co., LTDDocument9 paginiYong Le: Beijing Huaxia Yongleadhesive Tape Co., LTDColors Little ParkÎncă nu există evaluări

- Pipistrel Alpha Trainer e Learning 2020Document185 paginiPipistrel Alpha Trainer e Learning 2020Kostas RossidisÎncă nu există evaluări

- Resolution On Road and Brgy Hall Financial AssistanceDocument6 paginiResolution On Road and Brgy Hall Financial AssistanceEvelyn Agustin Blones100% (2)

- Katalog Akitaka Opory Dvigatelya 2017-2018Document223 paginiKatalog Akitaka Opory Dvigatelya 2017-2018Alexey KolmakovÎncă nu există evaluări

- Invitation To Bid: Dvertisement ArticularsDocument2 paginiInvitation To Bid: Dvertisement ArticularsBDO3 3J SolutionsÎncă nu există evaluări

- Indias Top 50 Best ItDocument14 paginiIndias Top 50 Best ItAnonymous Nl41INVÎncă nu există evaluări

- Baggage Provisions 7242128393292Document2 paginiBaggage Provisions 7242128393292alexpepe1234345Încă nu există evaluări