A Technical 3PAR Presentation v9 4nov11 PDF

Copyright 2011 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice.

Peter Mattei, Senior Storage Consultant

November 2011

A technical overview of

HP 3PAR Utility Storage

The world's most agile and efficient storage array

HP Copyright 2011 Peter Mattei

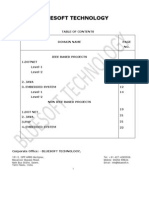

Table of contents

The IT Sprawl and how 3PAR can help

HP Storage & SAN Portfolio

Introducing the HP 3PAR Storage Servers

F-Class

T-Class

V-Class

HP 3PAR InForm OS Virtualization Concepts

HP 3PAR InForm Software and Features

Thin Technologies

Full and Virtual Copy

Remote Copy

Dynamic and Adaptive Optimization

Peer Motion

Virtual Domain

Virtual Lock

System Reporter

VMware Integration

Recovery Manager

The IT Sprawl
Creates challenges for mission-critical infrastructure
70% of resources captive in operations and maintenance
Business innovation throttled to 30%
Increased Risk
Inefficient and Siloed
Complicated and Inflexible
Source: HP research

The world has changed
And storage must change with it
Explosive growth & new workloads; Virtualization & automation; Cloud & utility computing; Infrastructure & technology shifts
Customers tell us storage is: Too complicated to manage; Expensive & hard to scale; Isolated & disconnected; Inefficient & inflexible
Storage needs to be: Simple; Scalable; Smart; Self-Optimized

HP 3PAR Industry Leadership
Best new technology in the market
3PAR Thin Provisioning: Industry-leading technology to maximize storage utilization
3PAR Autonomic Storage Tiering: Automatically optimizes using multiple classes of storage
3PAR Dynamic Optimization: Workload management and load balancing
3PAR Full Mesh Architecture: Advanced shared memory architecture
3PAR Virtual Domains: Multi-tenancy for service providers and private clouds

HP 3PAR History
Constant evolution
Timeline, 1999 to 2011:
  May 1999: 3PAR founded with 5 employees
  December 2000: Bring-up of the Gen 1 3PAR ASIC
  July 2001: 3PAR secures $100 million in third-round financing
  June: 3PAR Utility Storage and Thin Provisioning launch in the US and Japan
  September: General Availability of the InServ S-Class Storage Server
  May: 3PAR introduces Dynamic Optimization and Recovery Manager
  August: Introduction of the E-Class midrange Storage Server
  November: 3PAR IPO; introduction of Virtual Domains and iSCSI support
  September: Introduction of the T-Class with Gen 3 ASIC, the first Thin Built In storage array
  April: Introduction of the F-Class, the first quad-controller midrange array
  November: InForm OS v2.3.1 released with many new features
  March: Introduction of Adaptive Optimization and Recovery Manager for VMware
  September: 3PAR acquired by HP
  August: Introduction of V-Class with Gen 4 ASIC, InForm v3.1.1 and Peer Motion

HP 3PAR Leadership: Efficient
HP 3PAR customers reduce TCO by 50%
Thin: Reduce capacity requirements by at least 50%
Optimized: Tiering balances $/GB and $/IOP (Tier 0: SSD; Tier 1: FC; Tier 2: Nearline)
Green: Reduce power and cooling costs by at least 50%

HP 3PAR Leadership: Autonomic
HP 3PAR customers reduce storage management burden by 90% compared to competitors' arrays
Up Fast: 15 seconds to provision a LUN
Maintain Service Levels: Deliver high performance to all applications, even under failure scenarios
Respond to Change Quickly: Quickly adapt to the unpredictable

HP 3PAR Leadership: Multi-Tenant
The Tier-1 Storage for Utility Computing
Shared: Massive Consolidation. Storage can be used across many different applications and lines of business
Secure: Virtual Private Array. Secure segregation of storage while preserving the benefits of massive parallelism
Resilient: Ready for Change. Sustain and consolidate diverse or changing service levels without compromise

The HP Storage Portfolio
Online: P2000; X1000/X3000; X5000; E5000 for Exchange; X9000; P4000; P6000 EVA; 3PAR; P9500 XP
Nearline: D2D Backup Systems; VLS virtual library systems; ESL tape libraries; EML tape libraries; MSL tape libraries; RDX, tape drives & tape autoloaders
Software: Data Protector Express; Storage Essentials; Storage Array Software; Storage Mirroring; Data Protector; Business Copy; Continuous Access; Cluster Extension
Services: SAN Implementation; Storage Performance Analysis; Entry Data Migration; Data Migration; Installation & Start-up; Proactive 24; Critical Service; Proactive Select; Backup & Recovery; SupportPlus 24; SAN Assessment; Consulting services (Consolidation, Virtualization, SAN Design); Data Protection; Remote Support
Infrastructure: HP Networking (Wired, Wireless, Data Center, Security & Management); HP Networking Enterprise Switches; B, C & H Series FC Switches & Directors; SAN Connection Portfolio

HP Storage Array Positioning

P2000 MSA (Storage Consolidation)
  Architecture: Dual Controller
  Connectivity: SAS, iSCSI, FC
  Performance: 30K random read IOPS; 1.5 GB/s sequential reads
  Application sweet spot: SMB, enterprise ROBO, consolidation/virtualization, server attach, video surveillance
  Capacity: 600GB to 192TB; 6TB average
  Key features: Price/performance; Controller Choice; Replication; Server Attach
  OS support: Windows, vSphere, HP-UX, Linux, OVMS, Mac OS X, Solaris, Hyper-V

P4000 LeftHand (Virtual IT)
  Architecture: Scale-out Cluster
  Connectivity: iSCSI
  Performance: 35K random read IOPS; 2.6 GB/s sequential reads
  Application sweet spot: SMB, ROBO and Enterprise; virtualized incl. VDI, Microsoft apps; BladeSystem SAN (P4800)
  Capacity: 7TB to 768TB; 72TB average
  Key features: All-inclusive SW; Multi-Site DR included; Virtualization; VM Integration; Virtual SAN Appliance
  OS support: vSphere, Windows, Linux, HP-UX, Mac OS X, AIX, Solaris, XenServer

P6000 EVA (Application Consolidation)
  Architecture: Dual Controller
  Connectivity: FC, iSCSI, FCoE
  Performance: 55K random read IOPS; 1.7 GB/s sequential reads
  Application sweet spot: Enterprise; Microsoft, virtualized, OLTP
  Capacity: 2TB to 480TB; 36TB average
  Key features: Ease of use and Simplicity; Integration/Compatibility; Multi-Site Failover
  OS support: Windows, VMware, HP-UX, Linux, OVMS, Mac OS X, Solaris, AIX

3PAR (Utility Storage)
  Architecture: Mesh-Active Cluster
  Connectivity: iSCSI, FC, (FCoE)
  Performance: > 400K random IOPS; > 10 GB/s sequential reads
  Application sweet spot: Enterprise and Service Provider; utilities, cloud, virtualized environments, OLTP, mixed workloads
  Capacity: 5TB to 1600TB; 120TB average
  Key features: Multi-tenancy; Efficiency (Thin Provisioning); Performance; Autonomic Tiering and Management
  OS support: vSphere, Windows, Linux, HP-UX, AIX, Solaris

P9500 (Mission Critical Consolidation)
  Architecture: Fully Redundant
  Connectivity: FC, FCoE
  Performance: > 300K random IOPS; > 10 GB/s sequential reads
  Application sweet spot: Large Enterprise; mission critical with extreme availability, virtualized environments, multi-site DR
  Capacity: 10TB to 2000TB; 150TB average
  Key features: Constant Data Availability; Heterogeneous Virtualization; Multi-site Disaster Recovery; Application QOS (APEX); Smart Tiers
  OS support: All major OSs including Mainframe and NonStop

B-Series SAN Portfolio
Brocade switch, director, HBA and software family
Switches:
  8/8 & 8/24 SAN Switch: 8-24 8Gb ports
  8/40 SAN Switch: 24-40 8Gb ports
  8/80 SAN Switch: 48-80 8Gb ports
  SN6000B FC Switch: 24-48 16Gb ports
  Encryption Switch (32x 8Gb FC ports)
  HP 2408 CEE ToR Switch (24x 10Gb CEE + 8 8Gb FC ports)
  HP 400 MP-Router (16x 4Gb FC + 2GbE IP ports)
  1606 Extension SAN Switch: FC & GbE
  Integrated 8Gb SAN Switch for HP EVA4400
  8Gb SAN Switch for HP c-Class BladeSystem
Directors:
  DC04 SAN Director: 32 - 256 8Gb FC ports + 4x 64Gb ICL
  DC SAN Backbone Director: 32 - 512 8Gb FC ports + 4x 128Gb ICL
  SN8000B 4-slot: 32 - 192 16Gb FC ports, 1Tb ICL bandwidth
  SN8000B 8-slot: 32 - 384 16Gb FC ports, 2.11Tb ICL bandwidth
Director Blades:
  16Gb FC: 32 & 48 port
  8Gb FC & FICON: 16, 32, 48 & 64 port
  MP Router: 16 FC + 2 IP port
  MP Extension
  10/24 FCoE
  DC Encryption
Host Bus Adapters: 4Gbps single and dual port HBA; 8Gbps single and dual port HBA
Software: Data Center Fabric Manager (enhanced capabilities)

C-Series SAN Portfolio
Cisco MDS9000 and Nexus 5000 family
FC switches: MDS 9124; MDS 9134; MDS 9124e c-class switch; SN6000C (MDS 9148)
MDS9000 multiprotocol switches and directors: MDS 9222i; MDS 9506; MDS 9509; MDS 9513
MDS9000 blades: Supervisor 2; 1 - 4Gb FC (12, 24, 48-Port); 1 - 8Gb FC (24 & 48-Port); 10Gb FC (4-Port); 18/4; IP Storage Services blade; SSM Virtualization blade
Nexus DCE/CEE ToR switches: Nexus 5010 (20 - 28 ports); Nexus 5020 (40 - 56 ports)
Nexus Expansion Modules: FC; FC/4 10Gb Eth; 10Gb Eth
Embedded OS: Cisco NX-OS
Management: Cisco Fabric Manager and Fabric Manager Server Package (enhanced capabilities)

New Hardware
Announcement of 23rd August 2011
New HP 3PAR top models P10000 V400 and V800
Higher performance: 1.5 to 2 times T-Class
  New SPC-1 performance world record of 450,213 IOPS
Higher capacities: 2 times T-Class
  V400: 800TB
  V800: 1600TB
Higher number of drives: 1.5 times T-Class
  V400: 960 disks
  V800: 1920 disks
New faster Gen4 ASIC, now 2 per node
PCI-e bus architecture provides higher bandwidth and resilience
8Gb FC ports: higher I/O performance
Chunklet size increased to 1GB to address future higher capacities
T10 DIF: increased data resilience
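The "times T-Class" claims can be checked against figures quoted elsewhere in this deck for the largest models. The sketch below is only illustrative arithmetic: the dictionary keys and the `ratio` helper are hypothetical names, and the T800 and V800 numbers are the drive counts, maximum raw capacities and SPC-1 results listed in the model overview.

```python
# Figures quoted in the deck for the largest T-Class and V-Class models.
T800 = {"drives": 1280, "raw_tb": 800, "spc1_iops": 224_990}
V800 = {"drives": 1920, "raw_tb": 1600, "spc1_iops": 450_213}


def ratio(metric: str) -> float:
    """Return the V800 / T800 ratio for one metric, rounded to two decimals."""
    return round(V800[metric] / T800[metric], 2)


ratios = {metric: ratio(metric) for metric in T800}
for metric, value in ratios.items():
    print(f"{metric}: {value}x")
```

Under these inputs the drive count ratio matches the "1.5 times" bullet, while capacity and SPC-1 IOPS both come out at about two times.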

New InForm OS and Features
Announcement of 23rd August 2011
New InForm OS 3.1.1 for F-, T- and V-Class
  64-bit architecture
Remote Copy enhancements
  Thin Remote Copy reduces initial copy size
  More FC RC links: up to 4, from 2
Firmware upgrade enhancements
  All upgrades are now node by node
  RC copy groups can now stay online during FW upgrades
New additional Virtual Domain user roles
More granular 16kB Thin Provisioning space reclamation
VMware enhancements
  Automated VM space reclamation (T10 compliant)
  VASA support
Peer Motion for F-, T- and V-Class
  Allows transparent tiering and data migration between F-, T- and V-Class systems
New license bundles
  Thin Suite for F-, T- and V-Class
  Optimization Suite for V-Class

HP 3PAR InServ Storage Servers
Same OS, Same Management Console, Same Replication Software

                                F200        F400        T400        T800        V400        V800
Controller Nodes                2           2 - 4       2 - 4       2 - 8       2 - 4       2 - 8
Fibre Channel Host Ports        0 - 12      0 - 24      0 - 48      0 - 96      0 - 96      0 - 192
Optional 1Gb iSCSI Ports        0 - 8       0 - 16      0 - 16      0 - 32      NA          NA
Optional 10Gb iSCSI Ports 3)    NA          NA          NA          NA          0 - 32      0 - 32
Optional 10Gb FCoE Ports 3)     NA          NA          NA          NA          0 - 32      0 - 32
Built-in IP Remote Copy Ports   2           2 - 4       2 - 4       2 - 4       2 - 4       2 - 4
Control Cache (GB)              8           8 - 16      8 - 16      8 - 32      32 - 64     64 - 256
Data Cache (GB)                 12          12 - 24     24 - 48     24 - 96     64 - 128    128 - 512
Disk Drives                     16 - 192    16 - 384    16 - 640    16 - 1,280  16 - 960    16 - 1,920
Max Capacity                    128TB       384TB       400TB       800TB       800TB       1600TB
Read throughput (MB/s)          1,300       2,600       3,800       5,600       6,500       13,000
IOPS (true backend IOs)         34,400      76,800      120,000     240,000     180,000     360,000
SPC-1 Benchmark IOPS            -           93,050      -           224,990     -           450,213

Drive types (all models): SSD 1) 100, 200GB; FC 15krpm 300, 600GB; NL 7.2krpm 2) 2TB

1) max. 32 SSD per Node Pair
2) NL = Nearline = Enterprise SATA
3) Planned 1H2012

Comparison between T- and V-Class

                    HP 3PAR T-Class          HP P10000 3PAR
Bus Architecture    PCI-X                    PCIe
CPUs                2 x dual-core per node   2 x quad-core per node
ASIC                1 per node               2 per node
Control cache       4GB per node             V400: 16GB per node; V800: 32GB per node
Data cache          12GB per node            V400: 32GB per node; V800: 64GB per node
I/O slots           6                        9
FC host ports       0 - 128, 4Gb/s           0 - 192, 8Gb/s
iSCSI host ports    0 - 32, 1Gb/s            0 - 32, 10Gb/s*
FCoE host ports     N/A                      0 - 32, 10Gb/s*
Rack options        2M HP 3PAR rack          2M HP 3PAR rack, or 3rd party rack for V400*
Drives              16 - 1280                16 - 1920
Max capacity        800TB                    1.6PB
T10 DIF             N/A                      Supported

*planned

P10000 3PAR: Bigger, Faster, Better! ...all round
[Figure: bar charts comparing the HP 3PAR models by Disk Drives, Raw Capacity (TB), Total Cache (GB), Host Ports, Disk IOPS (,000) and Throughput (MB/s), annotated with improvement factors between 1.5x and 6x]

SPC-1 IOPS: HP 3PAR P10000 World Record
Scalable performance
For details see: http://www.storageperformance.org/results/benchmark_results_spc1

SPC-1 $/IOPS
Scalable performance without high cost
For details see: http://www.storageperformance.org/results/benchmark_results_spc1

HP 3PAR Four Simple Building Blocks (F-Class, T-Class, V-Class)
Controller Nodes
  Performance/connectivity building block
  CPU, Cache and 3PAR ASIC
  System Management
  RAID and Thin Calculations
Node Mid-Plane
  Cache Coherent Interconnect
  Completely passive, encased in steel
  Defines Scalability
Drive Chassis
  Capacity Building Block
  F Chassis: 3U, 16 Disks
  T & V Chassis: 4U, 40 Disks
Service Processor
  One 1U SVP per system
  Only for service and monitoring

HP 3PAR Architectural differentiation
Purpose built on native virtualization
HP 3PAR Utility Storage (F-, T- & V-Class): ASIC; Active Mesh; Fast RAID 5 / 6; Mixed Workload; Zero Detection
3PAR InForm Operating System Software: InForm fine-grained OS; Self-Configuring; Self-Healing; Self-Monitoring; Self-Optimizing; Autonomic Policy Management; Performance Instrumentation; Utilization; Manageability; Full Copy; LDAP; Rapid Provisioning; Access Guard
Additional HP 3PAR Software:
  Thin Suite (F-, T-, V-Class): Thin Provisioning; Thin Conversion; Thin Persistence
  Optimization Suite (V-Class): Dynamic Optimization; Adaptive Optimization; System Tuner
  Virtual Domains; Virtual Lock; System Reporter; Virtual Copy; Remote Copy; Peer Motion; Recovery Managers; Cluster Extension

HP 3PAR ASIC
Hardware Based for Performance
Fast RAID 10, 50 & 60: Rapid RAID Rebuild; Integrated XOR Engine
Tightly-Coupled Cluster: High Bandwidth, Low Latency Interconnect
Mixed Workload: Independent Metadata and Data Processing
Thin Built In: Zero Detect
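The two ASIC functions named on this slide, XOR-based RAID processing and zero detection, rest on simple principles that can be shown in software. The sketch below is not the ASIC's implementation, which the deck does not describe; `xor_parity` and `is_zero_block` are hypothetical helper names used only to illustrate why parity allows a lost block to be rebuilt and what "detecting zeros" means for a block of data.

```python
from functools import reduce


def xor_parity(blocks: list[bytes]) -> bytes:
    """XOR equally sized blocks byte by byte; the same operation computes parity and rebuilds."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def is_zero_block(block: bytes) -> bool:
    """Zero detection: True when the block contains only zero bytes."""
    return not any(block)


data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_parity(data)

# Simulate losing the second data block and rebuilding it from the survivors plus parity.
rebuilt = xor_parity([data[0], data[2], parity])
print(rebuilt == data[1])
```

A block recognized as all zeros does not need to be stored, which is the idea behind building thin provisioning support into the data path.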

Legacy vs. HP 3PAR Hardware Architecture
Traditional Tradeoffs
Traditional Modular Storage: Cost-efficient, but scalability and resiliency limited by dual-controller design
Traditional Monolithic Storage: Scalable and resilient but costly. Does not meet multi-tenant requirements efficiently
HP 3PAR meshed and active: Cost-effective, scalable and resilient architecture. Meets cloud-computing requirements for efficiency, multi-tenancy and autonomic management
Diagram labels: Host Connectivity; Distributed Controller Functions; Disk Connectivity

HP 3PAR Hardware Architecture
Scale without Tradeoffs
A finely, massively, and automatically load balanced cluster
[Figure: 3PAR InSpire F-Class architecture and 3PAR InSpire T- and V-Class architecture; legend: Host Connectivity, Data Cache, Disk Connectivity, Passive Backplane, 3PAR ASIC]

3PAR Mixed workload support
Multi-tenant performance
I/O Processing: Traditional Storage
  Unified processor and/or memory between host interface and disk interface
  Heavy throughput workload applied together with heavy transaction workload
  Small I/Os wait for large I/Os to be processed
I/O Processing: 3PAR Controller Node
  Control Processor & Memory for control information (metadata); 3PAR ASIC & Memory for data
  Control information and data are pathed and processed separately
  Heavy throughput workload sustained; heavy transaction workload sustained

3PAR Adaptive Cache
Self-adapting cache: 50 to 100% for reads / 50 to 0% for writes
[Figure: MB of cache dedicated to writes per node (0 to 3000) plotted against % read IOPS from host (0 to 100), for host loads of 20K, 30K and 40K IOPS]
Measured system: 2-node T800 with 320 15K FC disks and 12 GB data cache per node

HP 3PAR High Availability
Spare Disk Drives vs. Distributed Sparing
Traditional Arrays: spare drive. Few-to-one rebuild: hotspots & long rebuild exposure
3PAR InServ: spare chunklets. Many-to-many rebuild: parallel rebuilds in less time
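Why a many-to-many rebuild finishes sooner can be sketched with a deliberately simple model: the rebuilt data has to be written somewhere, and the time shrinks as more disks share that write load. Everything numeric below is a hypothetical assumption for illustration (the 50 MB/s per-disk rebuild rate and the 40 target disks are not from the deck), and the model ignores read contention and host I/O.

```python
def rebuild_hours(failed_disk_gb: float, writers: int, mb_per_s_per_disk: float) -> float:
    """Estimate rebuild time when `writers` disks absorb the rebuilt data in parallel."""
    seconds = (failed_disk_gb * 1000) / (writers * mb_per_s_per_disk)
    return seconds / 3600


# Hypothetical figures: a 600 GB drive and 50 MB/s of rebuild bandwidth per disk.
spare_drive = rebuild_hours(600, writers=1, mb_per_s_per_disk=50)
spare_chunklets = rebuild_hours(600, writers=40, mb_per_s_per_disk=50)

print(f"dedicated spare drive: {spare_drive:.2f} h")
print(f"spare chunklets on 40 disks: {spare_chunklets:.2f} h")
```

In this model the speed-up is simply the number of disks receiving rebuilt chunklets; a real system would see less than that because the surviving disks also serve host I/O.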

HP 3PAR High Availability
Guaranteed Drive Shelf Availability
Traditional Arrays: Shelf-dependent RAID. Shelf failure might mean no access to data
  [Figure: RAID groups (RG) A to H laid out on drive shelves]
3PAR InServ: Shelf-independent RAID. Despite shelf failure, data access is preserved
  [Figure: raidlet groups A to D, each with members 1 to 6 (A1 to A6, B1 to B6, C1 to C6, D1 to D6) distributed across drive shelves]

HP 3PAR High Availability
Write Cache Re-Mirroring
Traditional Arrays: Traditional Write-Cache Mirroring
  Either poor performance due to write-thru mode or risk of write data loss
  Write cache off for data security
3PAR InServ: Persistent Write-Cache Mirroring
  No write-thru mode: consistent performance
  Works with 4 and more nodes (F400; T400, T800; V400, V800)
  Write cache stays on thanks to redistribution

HP 3PAR virtualization advantage
Traditional Array (Traditional Controllers)
  Each RAID level requires dedicated disks
  Dedicated spare disk required
  Limited single LUN performance
  [Figure: LUNs 0 to 7 (R1, R1, R5, R1, R5, R5, R6, R6) placed on separate RAID1, RAID5 and RAID6 sets, plus dedicated spare disks]
HP 3PAR (3PAR InServ Controllers)
  All RAID levels can reside on same disks
  Distributed sparing, no dedicated spare disks
  Built-in wide-striping based on Chunklets
  [Figure: the same LUNs 0 to 7 spread across all physical disks]
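Wide striping based on chunklets can be pictured as cutting a volume into fixed-size pieces and spreading them over every disk rather than over a dedicated RAID set. The sketch below assumes a plain round-robin placement, which is an illustrative simplification and not the InForm OS allocation algorithm; `place_chunklets` is a hypothetical name, and the 1 GB chunklet size is the V-Class figure quoted elsewhere in the deck.

```python
from collections import Counter

CHUNKLET_GB = 1  # V-Class chunklet size quoted in the deck


def place_chunklets(volume_gb: int, disks: int) -> list[int]:
    """Return the disk index chosen for each chunklet, cycling over all disks."""
    chunklets = -(-volume_gb // CHUNKLET_GB)  # ceiling division
    return [i % disks for i in range(chunklets)]


placement = place_chunklets(volume_gb=64, disks=16)
per_disk = Counter(placement)
print(dict(per_disk))
```

Because every disk ends up holding a share of the volume, a single LUN can draw on the IOPS of all spindles, which is the contrast the slide draws with "limited single LUN performance" on dedicated RAID sets.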

HP 3PAR F-Class InServ Components
Cabinet: 3PAR 40U, 19" Cabinet or Customer Provided
Controller Nodes (4U)
  Performance and connectivity building block
  Adapter cards
  Add non-disruptively
  Runs independent OS instance
Drive Chassis (3U)
  Capacity building block
  Drive Magazines
  Add non-disruptively
  Industry leading density
Full-mesh Back-plane
  Post-switch architecture
  High performance, tightly coupled
  Completely passive
Service Processor (1U)
  Remote error detection
  Supports diagnostics and maintenance
  Reporting to HP 3PAR Central

HP 3PAR F-Class Node
Configuration Options
One Xeon Quad-Core 2.33GHz CPU
One 3PAR Gen3 ASIC per node
4GB Control & 6GB Data Cache per node
Built-in I/O ports per node
  10/100/1000 Ethernet port & RS-232
  Gigabit Ethernet port for Remote Copy
  4 x 4Gb/s FC ports
Optional I/O per node
  Up to 4 more FC or iSCSI ports (mixable)
Preferred slot usage (in order), depending on customer requirements
  Disk Connections: Slot 2 (ports 1,2), 0, 1 (higher backend connectivity and performance)
  Host Connections: Slot 2 (ports 3,4), 1, 0 (higher front-end connectivity and performance)
  RCFC Connections: Slot 1 or 0 (enables FC based Remote Copy; first node pair only)
  iSCSI Connections: Slot 1, 0 (adds iSCSI connectivity)
Port labels:
  2 built-in FC Disk Ports
  2 built-in FC Disk or Host Ports
  Slot 1: optional 2 FC Ports for Host, Disk or FC Replication, or 2 GbE iSCSI Ports
  Slot 0: optional 2 FC Ports for Host, Disk or FC Replication, or 2 GbE iSCSI Ports
  GigE Management Port
  GigE IP Replication Port

HP 3PAR InSpire Architecture
F-Class Controller Node
Quad-Core Xeon 2.33 GHz
Cache per node
  Control Cache: 4GB (2 x 2048MB DIMMs)
  Data Cache: 6GB (3 x 2048MB DIMMs)
SATA: Local boot disk
Gen3 ASIC
  Data Movement
  XOR RAID Processing
  Built-in Thin Provisioning
I/O per node
  3 PCI-X buses / 2 PCI-X slots and one onboard 4-port FC HBA
[Figure: controller node block diagram with Multifunction Controller, SERIAL, LAN and SATA interfaces, 4GB control cache, 6GB data cache, PCI slots 0 and 1, onboard 4-port FC and High Speed Data Links]

F-Class DC3 Drive Chassis Configurations
Minimum F-Class configurations
  Minimum configuration: 2 Drive Chassis with 16 drives of the same type
  Minimum upgrade: 8 drives
  [Figure: non-daisy-chained and daisy-chained cabling]
Maximum 2-node F-Class configurations
  Non-daisy-chained: 96 drives
  Daisy-chained: 192 drives

Connectivity Options: Per F-Class Node Pair

Ports 0-1  Ports 2-3  PCI Slot 1  PCI Slot 2  # of FC Host Ports  # of iSCSI Ports  # of Remote Copy FC Ports  # of Drive Chassis  Max # of Disks
Disk       Host       -           -           4                   -                 -                          4                   64
Disk       Host       Host        -           8                   -                 -                          4                   64
Disk       Host       Host        Host        12                  -                 -                          4                   64
Disk       Host       Host        iSCSI       8                   4                 -                          4                   64
Disk       Host       iSCSI       RCFC        4                   4                 2                          4                   64
Disk       Host       Disk        -           4                   -                 -                          8                   128
Disk       Host       Disk        Host        8                   -                 -                          8                   128
Disk       Host       Disk        iSCSI       4                   4                 -                          8                   128
Disk       Host       Disk        RCFC        4                   -                 2                          8                   128
Disk       Host       Disk        Disk        4                   -                 -                          12                  192
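The count columns of the F-Class connectivity table follow a regular pattern that can be reproduced from the four role assignments in each row. The per-role yields below are read off the table itself and are therefore an assumption about how it was built, not a documented rule; `summarize` and the constant names are hypothetical. The 16 disks per chassis figure is the F chassis size given in the building-blocks slide.

```python
DISKS_PER_F_CHASSIS = 16  # "F Chassis: 3U, 16 Disks"

# Yield per node pair for one port group or slot in a given role (inferred from the table).
FC_HOST_PORTS = 4
ISCSI_PORTS = 4
RCFC_PORTS = 2
CHASSIS_PER_DISK_GROUP = 4


def summarize(roles: list[str]) -> dict[str, int]:
    """Derive the table's count columns from the four role assignments of a row."""
    chassis = roles.count("Disk") * CHASSIS_PER_DISK_GROUP
    return {
        "fc_host": roles.count("Host") * FC_HOST_PORTS,
        "iscsi": roles.count("iSCSI") * ISCSI_PORTS,
        "rcfc": roles.count("RCFC") * RCFC_PORTS,
        "chassis": chassis,
        "max_disks": chassis * DISKS_PER_F_CHASSIS,
    }


print(summarize(["Disk", "Host", "iSCSI", "RCFC"]))
print(summarize(["Disk", "Host", "Disk", "Disk"]))
```

Each additional group of ports assigned to "Disk" adds four drive chassis, which is why the maximum disk count steps from 64 to 128 to 192.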

HP 3PAR T-Class InServ Components
Cabinet: 3PAR 40U, 19" Cabinet with Built-In Cable Management
Controller Nodes (4U)
  Performance and connectivity building block
  Adapter cards
  Add non-disruptively
  Runs independent OS instance
Drive Chassis (4U)
  Capacity building block
  Drive Magazines
  Add non-disruptively
  Industry leading density
Full-mesh Back-plane
  Post-switch architecture
  High performance, tightly coupled
  Completely passive
Service Processor (1U)
  Remote error detection
  Supports diagnostics and maintenance
  Reporting to HP 3PAR Central

The 3PAR Evolution
Bus to Switch to Full Mesh Progression
3PAR InServ Full Mesh Backplane
  High Performance / Low Latency
  Passive Circuit Board
  Slots for Controller Nodes
  Links every controller (Full Mesh)
  1.6 GB/s (4 times 4Gb FC)
  28 links (T800)
  Single hop
3PAR InServ T800 with 8 Nodes
  8 ASICs with 44.8 GB/s bandwidth
  16 Intel Dual-Core processors
  32 GB of control cache
  96 GB total data cache
  24 I/O buses, totaling 19.2 GB/s of peak I/O bandwidth
  123 GB/s peak memory bandwidth
[Figure: T800 with 8 Nodes and 640 Disks of 1280 max]
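The "28 links" figure is what a full mesh of eight nodes requires, since every pair of nodes needs one direct link: n(n-1)/2. Multiplying by the 1.6 GB/s link speed also reproduces the 44.8 GB/s quoted for the eight ASICs, although reading that number as links times link speed is an inference from the slide, not something it states. The function names below are hypothetical.

```python
def mesh_links(nodes: int) -> int:
    """Number of point-to-point links in a full mesh of `nodes` controllers."""
    return nodes * (nodes - 1) // 2


def mesh_bandwidth_gb_s(nodes: int, link_gb_s: float) -> float:
    """Aggregate backplane bandwidth if every link runs at `link_gb_s`."""
    return round(mesh_links(nodes) * link_gb_s, 1)


for n in (2, 4, 8):
    print(n, "nodes:", mesh_links(n), "links,", mesh_bandwidth_gb_s(n, 1.6), "GB/s")
```

The quadratic growth in links is also why the node count is fixed by the passive backplane: the mid-plane has to provide a slot and a link for every possible pair.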

HP 3PAR T-Class Controller Node
Controller Node(s): 2 to 8 per system, installed in pairs
2 Intel Dual-Core 2.33 GHz
16GB Cache
  4GB Control / 12GB Data
Gen3 ASIC
  Data Movement, ThP & XOR RAID Processing
Scalable Connectivity per Node
  3 PCI-X buses / 6 PCI-X slots
Preferred slot usage (in order)
  2 slots: 8 FC disk ports
  Up to 3 slots: 24 FC Host ports
  1 slot: 1 FC port used for Remote Copy (first node pair only)
  Up to 2 slots: 8 1GbE iSCSI Host ports
[Figure: T-Class node pair, PCI slots 0 to 5 per node, showing Disk FC ports, Host FC/iSCSI/RC FC ports, Console port C0, Mgmt Eth port E0 and Remote Copy Eth port E1]

HP 3PAR InSpire architecture
T-Class Controller Node
Controller Node(s): scalable performance per node, 2 to 8 nodes per system
Gen3 ASIC
  Data Movement
  XOR RAID Processing
  Built-in Thin Provisioning
2 Intel Dual-Core 2.33 GHz
  Control Processing
  SATA: Local boot disk
  Max host-facing adapters: up to 3 (3 FC / 2 iSCSI)
Scalable Connectivity per Node
  3 PCI-X buses / 6 PCI-X slots

T-Class DC04 Drive Chassis
From 2 to 10 Drive Magazines
(1+1) redundant power supplies
Redundant dual FC paths
Redundant dual switches
Each Magazine always holds 4 disks of the same drive type
Each Magazine in a Chassis can have a different drive type. For example:
  3 magazines of FC
  1 magazine of SSD
  6 magazines of SATA

T400 Configuration examples
Minimum configuration is 2 nodes and 4 drive chassis with 2 magazines per chassis. That means a starting configuration with 600GB drives is 19.2 TB of raw storage.
Upgrades are done as columns of magazines down the drive chassis. In this example we added four 600GB magazines, or 16 drives.
Once we fill up the original 4 drive chassis we have a choice: add 2 more nodes, drive chassis and disks, or just add 4 more drive chassis and some disks.
Considerations:
  Do I need more IOPS performance? (A node pair can drive 320 15K disks, or 8 fully loaded chassis.) It is virtually impossible to run out of CPU power with so few drives. Only SSD drives may hit node IOPS and CPU limits.
  Do I need more bandwidth? A node's bandwidth can be reached with far fewer resources. Adding nodes increases overall bandwidth.
* Diagram is not intended to show all components in the 2M cabinet, but rather to show how controllers and drive chassis scale. Controllers and drive chassis are populated from bottom to top.
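The 19.2 TB starting capacity is straightforward arithmetic on chassis, magazines and drives, using the four disks per magazine stated for the T-Class drive chassis. The sketch below reproduces it and, as a second check, the 768 TB quoted elsewhere in the deck for a fully configured T800 (32 chassis with 10 magazines each). `raw_tb` is a hypothetical helper and capacities are in decimal TB.

```python
DISKS_PER_MAGAZINE = 4  # "Each Magazine always holds 4 disks"


def raw_tb(chassis: int, magazines_per_chassis: int, drive_gb: int) -> float:
    """Raw capacity in decimal TB for evenly populated drive chassis."""
    disks = chassis * magazines_per_chassis * DISKS_PER_MAGAZINE
    return disks * drive_gb / 1000


minimum_t400 = raw_tb(chassis=4, magazines_per_chassis=2, drive_gb=600)
full_t800 = raw_tb(chassis=32, magazines_per_chassis=10, drive_gb=600)

print(f"minimum T400: {minimum_t400} TB raw")
print(f"fully configured T800: {full_t800} TB raw")
```

The same function gives the size of an upgrade step: one extra magazine in each of four chassis is 16 drives, or 9.6 TB raw with 600GB drives.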

T400 Configuration examples
How do we grow? After looking at the performance requirements, it is decided that adding capacity to the existing nodes is the best option. This offers a good balance of capacity and performance.
The next upgrade is going to require additional controller nodes, drive chassis and drive magazines. The minimum upgrade allowed is:
  2 Controller Nodes
  4 Drive Chassis
  8 Drive Magazines
Just because you can do something doesn't mean it is a good idea. This upgrade makes the node pairs very unbalanced:
  Over 50,000 IOPS on 2 nodes and 6,400 on the other 2
  Over 320 TB on one node pair and 19 TB on the other
A much cleaner upgrade would be to add a lot more FC capacity. This brings the node IOPS balance much closer: 44,800 to 32,000 FC IOPS. There will still be a lot more capacity behind 2 nodes, but the volumes that need more IOPS can be balanced across all FC disks.
Due to power distribution limits in a 3PAR rack you can only have 8 chassis per rack. A T400 with 8 chassis requires 2 full racks and a mostly unfilled 3rd rack.
We decide that the next upgrade should be filling out the first two nodes.

T400 Configuration examples
You'll notice that the T400 has space for 6 drive chassis, but the normal building block is 4 chassis. With a T400 you are allowed to deploy 6 drive chassis on the initial deployment, but this has some important caveats:
  The minimum upgrade increment is 6 magazines (24 drives). In this example with 600GB drives, that is a minimum upgrade of about 14TB.
  This is the maximum configuration in one rack: 2 nodes, 6 chassis, 60 magazines, 240 drives.
  The next minimum upgrade requires 2 nodes and 6 chassis with 12 magazines (48 drives).
  You can finally fill out the configuration by adding 4 more drive chassis (2 per node).
Important note: to rebalance you will probably need a TS engagement.

T800 Fully Configured: 224,000 SPC IOPS
8 Nodes
32 Drive Chassis
1280 Drives
768TB raw capacity with 600GB drives
Disk Chassis/Frames may be up to 100m apart from the Controllers (1st Frame)

T-Class redundant power
Controller Nodes and Disk Chassis (shelves) are powered by (1+1) redundant power supplies.
The Controller Nodes are backed up by a string of two batteries.

HP P10000 3PAR V400 Components
First rack with controllers and disks; expansion rack(s) with disks only
Controller Nodes
  Performance and connectivity building block
  Adapter cards
  Add non-disruptively
  Runs independent OS instance
Drive Chassis (4U)
  Up to 6 in first, 8 in expansion racks
  Capacity building block
  2 to 10 Drive Magazines
  Add non-disruptively
  Industry leading density
Full-mesh Back-plane
  Post-switch architecture
  High performance, tightly coupled
  Completely passive
Service Processor (1U)
  Remote error detection
  Supports diagnostics and maintenance
  Reporting to HP 3PAR Central

HP P10000 3PAR V800 Components
First rack with controllers and disks; expansion rack(s) with disks only
Controller Nodes
  Performance and connectivity building block
  Adapter cards
  Add non-disruptively
  Runs independent OS instance
Drive Chassis (4U)
  2 in first, 8 in expansion racks
  Capacity building block
  2 to 10 Drive Magazines
  Add non-disruptively
  Industry leading density
Full-mesh Back-plane
  Post-switch architecture
  High performance, tightly coupled
  Completely passive
Service Processor (1U)
  Remote error detection
  Supports diagnostics and maintenance
  Reporting to HP 3PAR Central

The 3PAR V-Class Evolution
Bus to Switch to Full Mesh Progression
V-Class Full Mesh Backplane
  High Performance / Low Latency
  112 GB/s Backplane bandwidth
  Passive Circuit Board
  Slots for Controller Nodes
  Links every controller (Full Mesh)
  2.0 GB/s ASIC to ASIC
  Single hop
Fully configured P10000 3PAR V800
  8 Controller Nodes
  16 Gen4 ASICs (2 per node)
  16 Intel Quad-Core processors
  256 GB of control cache
  512 GB total data cache
  136 GB/s peak memory bandwidth
[Figure: Max V800 configuration with 8 Nodes and 1920 Disks]
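The system totals for a fully configured V800 are the per-node resources multiplied by eight. The sketch below uses the per-node values given in the T-Class versus V-Class comparison (two ASICs, two quad-core CPUs, 32GB control cache and 64GB data cache per V800 node); `NodeSpec` and `system_totals` are hypothetical names chosen for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node resources as listed for a V800 controller node."""
    asics: int
    cpus: int
    control_cache_gb: int
    data_cache_gb: int


def system_totals(node: NodeSpec, nodes: int) -> dict[str, int]:
    """Multiply every per-node resource by the number of controller nodes."""
    return {
        "asics": node.asics * nodes,
        "cpus": node.cpus * nodes,
        "control_cache_gb": node.control_cache_gb * nodes,
        "data_cache_gb": node.data_cache_gb * nodes,
    }


V800_NODE = NodeSpec(asics=2, cpus=2, control_cache_gb=32, data_cache_gb=64)
totals = system_totals(V800_NODE, nodes=8)
print(totals)
```

Because resources scale with the node count, a two-node V800 starts at a quarter of these totals and grows as node pairs are added.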

HP 3PAR V-Class Controller Node
P10000 3PAR Controllers
Controller Node(s): 2 to 8 per system, installed in pairs
2 Intel Quad-Core processors per node
48GB or 96GB cache per node
  V400: 16GB Control / 32GB Data
  V800: 32GB Control / 64GB Data
2 Gen4 ASICs per node
  Data Movement, ThP & XOR RAID Processing
Scalable Connectivity per Node
  3 PCI-e buses / 9 PCI-e slots
  4-port 8Gb/s FC Adapter
  10Gb/s FCoE ready (post GA)
  10Gb/s iSCSI ready (post GA)
Internal SSD drive for
  InForm OS
  Cache destaging in case of power failure
Ports: Management Eth port E0; Remote Copy Ethernet port RCIP E1; Serial ports
PCI slots: 0 1 2 / 3 4 5 / 6 7 8
PCI-e card installation order
  Drive Chassis Connections: 6, 3, 0
  Host Connections: 2, 5, 8, 1, 4, 7
  Remote Copy FC Connections: 1, 4, 2, 3

V-Class Controller Node

HP 3PAR InSpire architecture

Scalable Performance per Node

2 to 8 Nodes per System

Thin Built In Gen4 ASIC

2.0 GB/s dedicated ASIC-to-ASIC bandwidth

112 GB/s total backplane bandwidth

Inline Fat-to-Thin processing in DMA engine2

2 x Intel Quad-Core Processors

V400: 48GB Cache

V800: 96GB Maximum Cache

8Gb/s FC Host/Drive Adapter

10Gb/s FCoE/iSCSI Host Adapter (planned)

Warm-plug Adapters

Controller Node(s)

52

Intel

Multi-Core

Processor

3PAR Gen4

ASIC

3PAR Gen4

ASIC

Control Cache

16 or 32GB

Data Cache

32 or 64GB

C

o

n

t

r

o

l

(

S

C

S

I

C

o

m

m

a

n

d

P

a

t

h

)

Data Paths

Intel

Multi-Core

Processor

Multifunction

Controller

PCIe

Switch

PCIe

Switch

PCIe

Switch

PCI-e

Slots

V-Class P10000 Drive Chassis

From 2 to 10 Drive Magazines

(1+1) redundant power supplies

Redundant dual FC paths

Redundant dual switches

Each Magazine always holds

4 disks of the same drive type

Magazines in a Chassis

can have different Drive types.

For example:

3 magazines of FC

1 magazine of SSD

6 magazines of SATA.
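The chassis figures above fix the disk counts quoted in the configuration examples of this deck. A minimal Python sketch of that arithmetic follows; the function name is illustrative only.

```python
DISKS_PER_MAGAZINE = 4          # each magazine always holds 4 disks
MIN_MAGAZINES_PER_CHASSIS = 2
MAX_MAGAZINES_PER_CHASSIS = 10


def disks(chassis: int, magazines_per_chassis: int = MAX_MAGAZINES_PER_CHASSIS) -> int:
    """Number of disks for a given chassis count and magazine population."""
    if not MIN_MAGAZINES_PER_CHASSIS <= magazines_per_chassis <= MAX_MAGAZINES_PER_CHASSIS:
        raise ValueError("a chassis holds from 2 to 10 magazines")
    return chassis * magazines_per_chassis * DISKS_PER_MAGAZINE


print(disks(48))    # fully populated V800: 48 chassis
print(disks(4, 2))  # minimum initial configuration: 4 chassis, 2 magazines each
```

A fully populated chassis therefore holds 40 disks, which is consistent with 1920 disks in 48 chassis and 960 disks in 24 chassis.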

With 2 Controllers and 4 Drive Chassis Increments

V400 Configuration Examples

Minimum initial Configuration

1 Rack

2 Controller Nodes

4 Drive Chassis

8 Drive Magazines (32 Disks)

Minimum upgrade

4 Drive Magazines (16 Disks)

Maximum 2-node Configuration

2 Racks

12 Drive Chassis

480 Disks

With 4 Controllers and 4 Drive Chassis Increments

V400 Configuration Examples

Minimum initial Configuration

2 Racks

4 Controller Nodes

8 Drive Chassis

16 Drive Magazines (64 Disks)

Minimum upgrade

4 Drive Magazines (16 Disks)

Up to 320 Disks in 8 Chassis

With 4 Controllers and 4 Drive Chassis Increments

V400 Configuration Examples

Maximum Configuration

4 Racks

4 Controller Nodes

24 Drive Chassis

960 Disks

With 2 Controllers and 4 Drive Chassis Increments

V800 Configuration Examples

Minimum initial Configuration

2 Racks

2 Controller Nodes

4 Drive Chassis

8 Drive Magazines (32 Disks)

Minimum upgrade

4 Drive Magazines (16 Disks)

Up to 160 Disks in 4 Chassis

With 4 Controllers and 4 Drive Chassis Increments

V800 Configuration Examples

Minimum initial Configuration

2 Racks

4 Controller Nodes

8 Drive Chassis

16 Drive Magazines (64 Disks)

Minimum upgrade

4 Drive Magazines (16 Disks)

Up to 320 Disks in 8 Chassis

With 8 Controllers and 4 Drive Chassis Increments

V800 Configuration Examples

Minimum initial Configuration

3 Racks

8 Controller Nodes

16 Drive Chassis

32 Drive Magazines (128 Disks)

Minimum upgrade

4 Drive Magazines (16 Disks)

Up to 640 Disks in 16 Chassis

Maximum 8-Controller configuration: 450,213 SPC-1 IOPS

V800 Configuration Examples

7 Racks

8 Controller Nodes

192 Host Ports

768GB Cache (256GB Control / 512GB Data)

48 Drive Chassis

1920 Disks

Disk Chassis/Frames may be up to 100m apart from the Controllers (1st Frame)

HP 3PAR InForm OS

Virtualization Concepts

3PAR Mid-Plane

Example: 4-Node T400 with 8 Drive Chassis

HP 3PAR Virtualization Concept

Drive Chassis are point-to-point

connected to controller nodes in

the T-Class to provide cage

level availability to withstand

the loss of an entire drive

enclosure without losing access to

your data.

Nodes are added in pairs for

cache redundancy

An InServ with 4 or more nodes

supports Cache Persistence

which enables maintenance

windows and upgrades without

performance penalties.

Example: 4-Node T400 with 8 Drive Chassis

HP 3PAR Virtualization Concept

T-Class Drive Magazines hold

4 of the very same drives

SSD, FC or SATA

Size

Speed

SSD, FC, SATA drive

magazines can be mixed

A minimum configuration has 2

magazines per enclosure

Each Physical Drive is divided

into Chunklets of

- 256MB on F- and T-Class

- 1GB on V-Class
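To get a feel for the chunklet sizes, the Python sketch below counts how many whole chunklets fit on one drive. It assumes binary chunklet sizes (256 MiB and 1 GiB) and a hypothetical drive of exactly 600 x 10^9 bytes, and it ignores any space the array reserves for itself, so real counts will differ.

```python
# Chunklet size per array class, in MiB (assumed to be binary units).
CHUNKLET_MIB = {"F-Class": 256, "T-Class": 256, "V-Class": 1024}


def chunklets_per_drive(capacity_bytes: int, array_class: str) -> int:
    """Whole chunklets that fit into a drive of the given raw capacity."""
    chunklet_bytes = CHUNKLET_MIB[array_class] * 1024 * 1024
    return capacity_bytes // chunklet_bytes


drive_bytes = 600 * 10**9  # hypothetical 600 GB (decimal) drive
for array_class in CHUNKLET_MIB:
    print(array_class, chunklets_per_drive(drive_bytes, array_class))
```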

Virtual

Volume

Virtual

Volume

Example: 4-Node T400 with 8 Drive Chassis

HP 3PAR Virtualization Concept

RAID sets will be built across

enclosures and massively striped

to form Logical Disks (LD)

Logical Disks are bound together

to build Virtual Volumes

Each Virtual Volume is

automatically wide-striped across

Chunklets on all disk spindles

of the same type creating a

massively parallel system

Virtual

Volume

Exported

LUN

Virtual Volumes can now be

exported as LUNs to servers

[Diagram: Logical Disks (LD) built from RAID 5 (3+1) sets across the drive chassis]

LDs are equally allocated to

controller nodes

Why are Chunklets so Important?

Ease of use and Drive Utilization

Same drive spindle can service many different LUNs

and different RAID types at the same time

Allows the array to be managed by policy, not by

administrative planning

Enables easy mobility between physical disks, RAID

types and service levels by using Dynamic or Adaptive

Optimization

Performance

Enables wide-striping across hundreds of disks

Avoids hot-spots

Allows Data restriping after disk installations

High Availability

HA Cage - Protect against a cage (disk tray) failure.

HA Magazine - Protect against magazine failure

3PAR InServ Controllers

0 1 2 3 4 5 6 7

R1 R1 R5 R1 R5 R5 R6 R6

Physical Disks

Common Provisioning Groups (CPG)

CPGs are Policies that define Service and Availability level by

Drive type (SSD, FC, SATA)

Number of Drives (striping width)

RAID level (R10, R50 2D1P to 8D1P, R60 6D2P or 14D2P)

Multiple CPGs can be configured and optionally overlap the same drives

i.e. a System with 200 drives can have one CPG containing all 200 drives and

other CPGs with overlapping subsets of these 200 drives.

CPGs have many functions:

They are the policies by which free Chunklets are assembled into logical disks

They are a container for existing volumes and used for reporting

They are the basis for service levels and our optimization products.
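One way to picture a CPG is as a small policy record. The Python sketch below models the attributes listed above and derives the usable capacity fraction for each RAID layout; the class and field names are hypothetical and are not InForm CLI or API names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CPG:
    name: str          # hypothetical label
    drive_type: str    # "SSD", "FC" or "SATA"
    data: int          # data chunklets per RAID set
    redundancy: int    # parity (or mirror) chunklets per RAID set

    def usable_fraction(self) -> float:
        """Share of raw capacity that remains after RAID overhead."""
        return self.data / (self.data + self.redundancy)


cpgs = [
    CPG("fc_r10", "FC", data=1, redundancy=1),         # R10 mirror
    CPG("fc_r50_3d1p", "FC", data=3, redundancy=1),    # R50 3D1P
    CPG("nl_r60_6d2p", "SATA", data=6, redundancy=2),  # R60 6D2P
    CPG("nl_r60_14d2p", "SATA", data=14, redundancy=2),
]
for cpg in cpgs:
    print(cpg.name, cpg.usable_fraction())
```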

The base for autonomic utility storage

HP 3PAR Virtualization the Logical View

Physical Disks Logical Disks

Virtual

Volumes

Chunklets

Exported

LUNs

Physical Disks are divided into Chunklets (256MB or 1GB)

The majority is used to build Logical Disks (LD)

Some are used for distributed sparing

Logical Disks (LD)

Are collections of Raidlets - Chunklets arranged as rows of RAID sets (Raid 0, 10, 50, 60)

Provide the space for Virtual Volumes, Snapshot and Logging Disks

Are automatically created when required

Virtual Volumes (VV) Exported LUNs

User created volumes composed of LDs according to the corresponding CPG policies

Can be fat or thin provisioned

User exports VV as LUN

CPGs

Common Provisioning Groups (CPG)

User created virtual pools of Logical Disks that allocate space to virtual volumes on demand

The CPG defines RAID level, disk type and number, striping pattern etc.

3PAR autonomy User initiated

[Diagram labels: Virtual Volumes marked ThP, Fat and AO; Logical Disks marked R1, R5 and R6]

HP 3PAR Virtualization the Logical View

Physical Disks

Autonomically built

Logical Disks

User created

CPGs

R5

R5

User created

Virtual

Volumes

ThP

Chunklets

User

exported

LUNs

FC

Nearline

SSD

Easy and straightforward

Create CPG(s)

In the Create CPG Wizard select

and define

3PAR System

Residing Domain (if any)

Disk Type

SSD Solid State Disk

FC Fibre Channel Disk

NL Near-Line SATA Disks

Disk Speed

RAID Type

By selecting advanced options more

granular options can be defined

Availability level

Step size

Preferred Chunklets

Dedicated disks

Easy and straightforward

Create Virtual Volume(s)

In the Create Virtual Volume

Wizard define

Virtual Volume Name

Size

Provisioning Type: Fat or Thin

CPG to be used

Allocation Warning

Number of Virtual Volumes

By selecting advanced options more

options can be defined

Copy Space Settings

Virtual Volume Geometry

Easy and straightforward

Export Virtual Volume(s)

In the Export Virtual Volume

Wizard define

Host or Host Set to be presented to

Optionally

Select specific Array Host Ports

Specify LUN ID

Simplify Provisioning

HP 3PAR Autonomic Groups

Traditional Storage

V1 V2 V3 V4 V5 V6 V7 V10 V8 V9

Individual Volumes

Cluster of VMware ESX Servers Autonomic Host Group

Autonomic Volume Group

Initial provisioning of the Cluster

Add hosts to the Host Group

Add volumes to the Volume Group

Export Volume Group to the Host Group

Add another host

Just add host to the host group

Add another volume

Just add the volume to the Volume Group

Volumes are exported automatically

V1 V2 V3 V4 V5 V6 V7 V10 V8 V9

Autonomic HP 3PAR Storage

Initial provisioning of the Cluster

Requires 50 provisioning actions

(1 per host volume relationship)

Add another host

Requires 10 provisioning actions

(1 per volume)

Add another volume

Requires 5 provisioning actions

(1 per host)
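The action counts for traditional storage follow directly from the number of host-volume relationships. The Python sketch below reproduces them for the pictured cluster, assuming 5 hosts and 10 volumes as implied by the counts on the slide.

```python
def traditional_actions(hosts: int, volumes: int) -> dict:
    """Provisioning actions when every host-volume pair is exported by hand."""
    return {
        "initial": hosts * volumes,  # 1 per host-volume relationship
        "add_host": volumes,         # 1 per volume
        "add_volume": hosts,         # 1 per host
    }


# With Autonomic Groups, adding a host or a volume is one group-membership
# change; the exports follow automatically.
AUTONOMIC_INCREMENTAL_ACTIONS = {"add_host": 1, "add_volume": 1}

counts = traditional_actions(hosts=5, volumes=10)
print(counts)
```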

HP 3PAR InForm

Software and Features

Optimization Suite

Thin Suite

HP 3PAR Software and Licensing

Remote Copy

InForm Operating System

InForm Additional Software

Virtual Copy

Thin Persistence

Thin Conversion

Thin Provisioning

Virtual Domains

Dynamic Optimization

LDAP

Adaptive Optimization

Scheduler Host Personas

InForm

Administration Tools

InForm Host Software

Recovery Manager for

Oracle

Host Explorer

Recovery Manager for

VMware

Multi Path IO IBM AIX

Recovery Manager for

Exchange

Multi Path IO

Windows 2003

Recovery Manager for

SQL

System Reporter

3PAR Manager for

VMware vCenter

3PAR InForm Software

Thin Copy

Reclamation

RAID MP

(Multi-Parity)

Autonomic Groups

Rapid Provisioning

Access Guard

System Tuner

Full Copy

Virtual Lock

Four License Models:

Consumption Based

Spindle/Magazine Based

Frame Based

Free*

* Support fee associated

Peer Motion

HP 3PAR Software Support Cost & Capping

Care Pack Support Services for spindle-based licenses are charged by the number of

magazines

Support Services costs increase incrementally until they reach a predefined threshold (cap)

and then stay flat.

Capping threshold by array

F200 11 Magazines

F400 13 Magazines

T400 / V400 33 Magazines

T800 / V800 41 Magazines

Capping occurs for each software title per magazine type

Example for InForm OS on V800 with 3 Years Critical Service:

50 x 600GB Disk Magazine ---- 41 x HA112A3 - QQ6 - 3PAR InForm V800/4x600GB Mag LTU Support

24 x 2TB Disk Magazine ---- 24 x HA112A3 - QQ6 - 3PAR InForm V800/4x2TB Mag LTU Support

24 x 200GB SSD Magazine ---- 24 x HA112A3 - QQ6 - 3PAR InFrm V800/4x200GB SSD Mag LTU Support

The Thin Suite (Thin Provisioning, Thin Conversion and Thin Persistence) does not have any

associated support cost
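The capping rule can be written as a one-line minimum per magazine type. The Python sketch below applies it to the V800 example above; the dictionary keys are illustrative labels, not product numbers.

```python
# Capping threshold (magazines) per array model, from the slide.
SUPPORT_CAP = {"F200": 11, "F400": 13, "T400": 33, "V400": 33, "T800": 41, "V800": 41}


def billable_magazines(array: str, magazines_by_type: dict) -> dict:
    """Support units charged per magazine type, capped per software title."""
    cap = SUPPORT_CAP[array]
    return {mag_type: min(count, cap) for mag_type, count in magazines_by_type.items()}


installed = {"4x600GB": 50, "4x2TB": 24, "4x200GB SSD": 24}
billable = billable_magazines("V800", installed)
print(billable)
```

Only the 600GB magazine type exceeds the V800 cap of 41, so it is the only line that is charged for fewer units than are installed.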

HP 3PAR

Thin Technologies

HP 3PAR Thin Technologies Leadership Overview

Thin Provisioning

No pool management or

reservations

No professional services

Fine capacity allocation units

Variable QoS for snapshots

Thin Deployments

Stay Thin Over time

Reduce Tech Refresh

Costs by up to 60%

Buy up to 75% less

storage capacity

Start Thin Get Thin Stay Thin

Thin Conversion

Eliminate the time & complexity of

getting thin

Open, heterogeneous migrations for

any array to 3PAR

Service levels preserved during inline

conversion

Thin Persistence

Free stranded capacity

Automated reclamation for 3PAR

offered by Symantec, Oracle

Snapshots and Remote Copies stay

thin

HP 3PAR Thin Technologies Leadership Overview

Built-in

HP 3PAR Utility Storage is built from the ground up to support Thin

Provisioning (ThP) by eliminating the diminished performance and

functional limitations that plague bolt-on thin solutions.

In-band

Sequences of zeroes are detected by the 3PAR ASIC and not

written to disks. Most other vendors' ThP implementations write

zeroes to disks; some can reclaim space as a post-process.

Reservation-less

HP 3PAR ThP draws fine-grained increments from a single free

space reservoir without pre-dedication of any kind. Other vendors'

ThP implementations require a separate, pre-dedicated pool for

each data service level.

Integrated

API for direct ThP integration in Symantec File System, VMware,

Oracle ASM and others
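The in-band zero detection described above can be modelled in a few lines of software. The Python sketch below is a toy model only: the array performs this in the ASIC, the `ThinVolume` class is hypothetical, and the 16 KB page size is taken from the allocation unit quoted elsewhere in this deck.

```python
PAGE = 16 * 1024  # allocation unit in bytes (16 KB)


class ThinVolume:
    """Toy thin volume: only non-zero pages consume backing space."""

    def __init__(self) -> None:
        self.pages: dict[int, bytes] = {}

    def write(self, offset: int, data: bytes) -> None:
        if offset % PAGE or len(data) % PAGE:
            raise ValueError("this sketch only handles page-aligned writes")
        for i in range(0, len(data), PAGE):
            page_no = (offset + i) // PAGE
            chunk = data[i:i + PAGE]
            if not any(chunk):
                # All-zero page: store nothing and release any old mapping.
                self.pages.pop(page_no, None)
            else:
                self.pages[page_no] = bytes(chunk)

    def read(self, page_no: int) -> bytes:
        # Unmapped pages read back as zeroes.
        return self.pages.get(page_no, bytes(PAGE))

    def allocated_bytes(self) -> int:
        return len(self.pages) * PAGE


vol = ThinVolume()
vol.write(0, b"\x01" * PAGE + bytes(PAGE) + b"\x02" * PAGE + bytes(PAGE))
print(vol.allocated_bytes())  # two of the four pages hold data
vol.write(0, bytes(4 * PAGE))  # host zeroes the range
print(vol.allocated_bytes())  # space is released again
```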

Dedicate on write only

HP 3PAR Thin Provisioning Start Thin

Physically installed Disks

Required

net Array

Capacities

Server

presented

Capacities

/ LUNs

Physical

Disks

Physically installed Disks

Free

Chunklets

Traditional Array

Dedicate on allocation

HP 3PAR Array

Dedicate on write only

Actually written data

HP 3PAR Thin Conversion Get Thin

Thin your online SAN storage up to 75%

A practical and effective solution to

eliminate costs associated with:

Storage arrays and capacity

Software licensing and support

Power, cooling, and floor space

Unique 3PAR Gen3 ASIC with built-in

zero detection delivers:

Simplicity and speed - eliminate the time &

complexity of getting thin

Choice - open and heterogeneous migrations for

any-to-3PAR migrations

Preserved service levels - high performance during

migrations

Before After

0000

0000

0000

Gen3 ASIC

Fast

How to get there

HP 3PAR Thin Conversion Get Thin

1. Defragment source Data

a) If you are going to do a block level migration via an appliance or host volume

manager (mirroring) you should defragment the filesystem prior to zeroing the free

space

b) If you are using filesystem copies to do the migration the copy will defragment the

files as it copies eliminating the need to defragment the source filesystem

2. Zero existing volumes via host tools

a) On Windows use sdelete (free utility available from Microsoft)

sdelete -c <drive letter>

b) On UNIX/Linux use dd to create files containing zeros like

dd if=/dev/zero of=/path/10GB_zerofile bs=128K count=81920

or zero and delete a file directly with shred

shred -n 0 -z -u /path/file
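The dd parameters above determine the size of the zero file. The short Python check below confirms that bs=128K with count=81920 gives 10 GiB and shows how to derive the count for another size; the helper name is illustrative.

```python
BLOCK_SIZE = 128 * 1024  # bs=128K (dd reads K as 1024 bytes)
COUNT = 81920            # count=81920

total_bytes = BLOCK_SIZE * COUNT
print(total_bytes / 2**30, "GiB")


def count_for(size_gib: int, block_size: int = BLOCK_SIZE) -> int:
    """dd count needed to write `size_gib` GiB with the given block size."""
    return size_gib * 2**30 // block_size


print(count_for(50))  # count for a 50 GiB zero file
```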

HP 3PAR Thin Conversion at a Global Bank

No budget for additional storage

Recently had huge layoffs

Moved 271 TBs, DMX to 3PAR

Online/non-disruptive

No Professional Services

Large capacity savings

The results shown within this

document demonstrate a highly

efficient migration process which

removes the unused storage

No special host software

components or professional services

are required to utilise this

functionality

[Chart: sample volume migrations on different OSs, capacity in GBs (axis 0 to 200) on EMC versus 3PAR, for Unix (VxVM), ESX (VMotion) and Win (SmartMove)]

Reduced power & cooling costs

Capacity requirements reduced by >50%

$3 million

savings

in upfront

capacity

purchases

Keep your array thin over time

HP 3PAR Thin Persistence Stay Thin

Before After

Gen3 ASIC

0000

0000

Fast

Non-disruptive and application-

transparent re-thinning of thin

provisioned volumes

Returns space to thin provisioned

volumes and to free pool for reuse

New with InForm 3.1.1:

intelligently reclaims 16KB pages

Unique 3PAR Gen3 ASIC with

built-in zero detection delivers:

Simplicity - No special host software required.

Leverage standard file system tools/scripts to

write zero blocks.

Preserved service levels - zeroes detected and

unmapped at line speeds

Integrated - automated reclamation with

Symantec and Oracle

Remember: Deleted files still occupy disk space

HP 3PAR Thin Persistence manual thin reclaim

LUN 1

Data 1

LUN 2

Data 2

Free

Chunklets

LUN 1

Data 1

LUN 2

Data 2

Free

Chunklets

Initial state:

LUN 1 and LUN 2 are ThP Virtual Volumes

Data 1 and Data 2 are actually written data

LUN 1

Data1

LUN 2

Data 2

Free

Chunklets

Unused

Unused

After a while:

Files deleted by the servers/file system

still occupy space on storage

LUN 1

Data1

LUN 2

Data 2

Free

Chunklets

00000000

00000000

00000000

00000000

Zero-out unused space:

Windows: sdelete *

Unix/Linux: dd script

Run Thin Reclamation:

Compact CPG and Logical Disks

Freed-up space is returned to the free Chunklets

* sdelete is a free utility available from Microsoft

HP 3PAR Thin Persistence Thin API

Partnered with Symantec

Jointly developed a Thin API - an industry first!

File system / array communication API (write same)

Most elements now captured as part of emerging T10 SCSI standard

HP has introduced the API to other operating system

vendors and offered development support

VMware

Microsoft

ASM with ASRU

HP 3PAR Thin Persistence Oracle Integration

Oracle auto-extend allows customers to save on

database capacity with Thin Provisioning

Database Capacity can get stranded after writes

and deletes

3PAR Thin Persistence and Oracle ASM Storage

Reclamation Utility (ASRU) can reclaim 25% or

more stranded capacity

After Tablefile shrink/drop or Database drop

After a new LUN is added to ASM Disk Group

Oracle ASM Utility compacts files and writes zeroes to free space

3PAR Thin Built-In ASIC-based, zero-detection eliminates free

space

From a DBA perspective:

Non-disruptive - does not impact storage performance. The ASIC

is a huge advantage

Increase DB Miles Per Gallon

Traditional Array

Unused space remains

Zeroes are written

0000000000

Disk Group

Tablespace

Tables

00000000

Oracle

3PAR Array with

Thin Persistence

Files compacted by ASRU

Zeroes removed

Space reclaimed

HP 3PAR Thin Persistence in VMware Environments

Introduced with vSphere 4.0

VMware VMFS supports three formats for VM disk images

o Thin

o Thick - ZeroedThick (ZT)

o EagerZeroedThick (EZT)

VMware recommends EZT for highest performance

More info http://www.vmware.com/pdf/vsp_4_thinprov_perf.pdf

3PAR Thin Technologies work with and optimize all three formats

Introduced with vSphere 4.1

vStorage API for Array Integration (VAAI)

Thin Technologies enabled by the 3PAR Plug-in for VAAI

Thin VMotion - Uses XCOPY via the plug-in

Active Thin Reclamation - Using Write-Same to offload zeroing to array

Introduced with vSphere 5.0

Automated VM Space Reclamation

Leverages industry standard T10 UNMAP

Supported with VMware vSphere 5.0 and InForm OS 3.1.1

Autonomic VMware Space Reclamation

Fine-grained Reclaim, Fast

20GB VMDKs post deletions consume ~20GB

40+ GB

X

X

100GB

X X

00000000

00000000

00000000

00000000

25GB 25GB 25GB 25GB

10GB 10GB

DATASTORE

TIME

Coarse Reclaim, Slow

20GB VMDKs post deletions consume 40+ GB

0

0

0

0

T10 UNMAP

(768kB to 42MB, coarse)

Standard Thin Provisioning

Slow, Post-process

Overhead

HP 3PAR with Thin Persistence Traditional Storage with Space Reclaim

20 GB

X

X

100GB

X X

00000000

00000000

00000000

00000000

25GB 25GB 25GB 25GB

10GB 10GB

DATASTORE

0

0

0

0

T10 UNMAP

(16kB granularity)

Rapid, Inline

ASIC Zero Detect

3PAR Scalable Thin Provisioning

Built-in not bolt on

HP 3PAR Thin Provisioning positioning

No upfront allocation of storage for Thin Volumes

No performance impact when using Thin Volumes unlike competing

storage products

No restrictions on where 3PAR Thin Volumes should be used unlike

many other storage arrays

Allocation size of 16k which is much smaller than most ThP

implementations

Thin provisioned volumes can be created in under 30 seconds

without any disk layout or configuration planning required

Thin Volumes are autonomically wide striped over all drives within

that tier of storage

HP 3PAR

Full and Virtual Copy

Part of the base InForm OS

HP 3PAR Full Copy Flexible point-in-time copies

3PAR Full Copy

Share data quickly and easily

Full physical point-in-time copy of

base volume

Independent of the base volume's RAID

and physical layout properties for

maximum flexibility

Fast resynchronization capability

Thin Provisioning-aware

Full copies can consume same physical

capacity as Thin Provisioned base volume

Base Volume

Full Copy

Full Copy

HP 3PAR Virtual Copy Snapshot at its best

Integration with

Oracle, SQL, Exchange, VMware

3PAR Virtual Copy

Base Volume 100s of Snaps

Smart

Promotable snapshots

Individually deletable snapshots

Scheduled creation/deletion

Consistency groups

Thin

No reservations needed

Non-duplicative snapshots

Thin Provisioning aware

Variable QoS

Ready

Instant readable or writeable snapshots

Snapshots of snapshots

Control given to end user for snapshot

management

Virtual Lock for retention of read-only snaps

but just

one CoW

Up to 8192 Snaps per array

HP 3PAR Virtual Copy Snapshot at its best

Base volume and virtual copies can be mapped to different CPGs

This means that they can have different quality of service

characteristics. For example, the base volume space can be derived

from a RAID 1 CPG on FC disks and the virtual copy space from a

RAID 5 CPG on Nearline disks.

The base volume space and the virtual copy space can grow

independently without impacting each other (each space has its own

allocation warning and limit).

Dynamic optimization can tune the base volume space and the virtual

copy space independently.

HP 3PAR Virtual Copy Relationships

The following shows a complex relationship scenario

Creating a Virtual Copy Using The GUI

Right Click and select Create Virtual Copy

InForm GUI View of Virtual Copies

The GUI gives a very easy to read graphical view of VCs:

HP 3PAR

Remote Copy

3PAR Remote Copy

HP 3PAR Remote Copy Protect and share data

Smart

Initial setup in minutes

Simple and intuitive commands

No consulting services

VMware SRM integration

Complete

Native IP-based, or FC

No extra copies or infrastructure needed

Thin provisioning aware

Thin conversion

Synchronous, Asynchronous Periodic or

Synchronous Long Distance (SLD)

Mirror between any InServ size or model

Many to one, one to many

Sync or

Async Periodic

Primary Secondary

P

S

S

P

Primary

Secondary

P

S

2

Tertiary

S

1

Async Periodic

Standby

Sync

Synchronous Long Distance

1:2 Configuration

1:1 Configuration

HP 3PAR Remote Copy N:1 Configuration

You can use Remote Copy over IP

(RCIP) and/or Fibre Channel (RCFC)

connections

InServ Requirements

Max support is 4 to 1.

One of the 4 can mirror bi-directionally

Each RC relationship requires a dedicated

node-pair. In a 4:1 setup the target site

requires 8 nodes (4 node-pairs).

Primary Site A

Primary Site B

Primary Site C

Primary / Target

Site D

Target Site

P

P

P

P

RC

RC P

RC RC

RC

HP 3PAR Remote Copy 1:N Configuration

You can use Remote Copy over IP

(RCIP) and/or Fibre Channel (RCFC)

connections

InServ Requirements

Max support is 1 to 2.

One mirror can be bi-directional

Each RC relationship requires a dedicated

node-pair. The primary site requires 4

nodes (2 node-pairs).

Target Site A

Target Site B

Primary Site

RC

RC

P

P

Step 2: InServ writes I/Os to secondary cache

HP 3PAR Remote Copy Synchronous

Real-time Mirror

Highest I/O currency

Lock-step data consistency

Space Efficient

Thin provisioning aware

Targeted Use

Campus-wide business continuity

P

Primary

Volume

Secondary

Volume

S

Step 1: Host server writes I/Os to primary cache

Step 3: Remote system acknowledges the receipt of the I/O

Step 4: I/O complete signal communicated back to primary host

Data integrity

HP 3PAR Remote Copy

Assured Data Integrity

Single Volume

All writes to the secondary volume are completed in the

same order as they were written on the primary volume

Multi-Volume Consistency Group

Volumes can be grouped together to maintain write

ordering across the set of volumes

Useful for databases or other applications that make

dependent writes to more than one volume

The Replication Solution for long-distance implementations

HP 3PAR Remote Copy Asynchronous Periodic

Efficient even with high latency replication links

Host writes are acknowledged as soon as the data is written into cache of the primary array

Bandwidth-friendly

The primary and secondary Volumes are resynchronized periodically, either on a schedule or manually

If data is written to the same area of a volume in between resyncs only the last update needs to be

resynced

Space efficient

Copy-on-write Snapshot versus full PIT copy

Thin Provisioning-aware

Guaranteed Consistency

Enabled by Volume Groups

Before a resync starts a snapshot of the Secondary Volume or Volume Group is created
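The bandwidth-friendly behaviour described above (only the last update to a region is sent) can be sketched with a dirty-region set. The Python model below is a simplified illustration with hypothetical names; it keeps a plain copy of the secondary where the array would take a snapshot, and it is not the Remote Copy implementation.

```python
class PeriodicReplica:
    """Toy model of asynchronous periodic replication with delta resync."""

    def __init__(self) -> None:
        self.primary: dict[int, bytes] = {}
        self.secondary: dict[int, bytes] = {}
        self.dirty: set[int] = set()                    # regions changed since last resync
        self.secondary_snapshot: dict[int, bytes] = {}

    def write(self, region: int, data: bytes) -> None:
        # The host write completes against the primary only.
        self.primary[region] = data
        self.dirty.add(region)

    def resync(self) -> int:
        """Send the delta to the secondary; return the number of regions sent."""
        # Keep a consistent copy of the secondary before it is modified.
        self.secondary_snapshot = dict(self.secondary)
        delta = {region: self.primary[region] for region in self.dirty}
        self.dirty.clear()
        self.secondary.update(delta)
        return len(delta)


rc = PeriodicReplica()
for payload in (b"v1", b"v2", b"v3"):
    rc.write(7, payload)  # same region overwritten three times
rc.write(9, b"x")
sent = rc.resync()
print(sent, rc.secondary)
```

Four host writes result in two regions being transferred, because region 7 is sent once with its latest content.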

Remote Copy Asynchronous Periodic

[Diagram: sequence between Primary Site (P) and Remote Site (S), each with a Base Volume and Snapshots]

1 Initial Copy (snapshot A)

2 Resynchronization. Starts with snapshots (B). Delta Copy of the B-A delta

3 Upon Completion. Delete old snapshot. Ready for next resynchronization

Supported Distances and Latencies

HP 3PAR Remote Copy

Remote Copy Type             Max Supported Distance   Max Supported Latency
Synchronous IP               210 km / 130 miles       1.3 ms
Synchronous FC               210 km / 130 miles       1.3 ms
Asynchronous Periodic IP     N/A                      150 ms round trip
Asynchronous Periodic FC     210 km / 130 miles       1.3 ms
Asynchronous Periodic FCIP   N/A                      60 ms round trip

Clustering solution protecting against server and storage failure

Cluster Extension for Windows

Microsoft

Cluster

Data Center 1 Data Center 2

Up to 210km

Cluster Extension

What does it do?

Manual or automated site-failover for

Server and Storage resources

Transparent Hyper-V Live Migration

between sites

Supported environments:

Microsoft Windows Server 2003

Microsoft Windows Server 2008

HP ProLiant Storage Server

Up to 210km (RC supported max)

Requirements:

3PAR Disk Arrays

Remote Copy

Microsoft Cluster

Cluster Extension

Max 20ms network round-trip delay

A A

B

File share Witness

Data Center 3

Remote Copy

See also http://h18006.www1.hp.com/storage/software/ces/index.html

End-to-end clustering solution to protect against server and storage failure

Metrocluster for HP-UX

Serviceguard

for HP-UX

HP Metrocluster

What does it do?

Provides manual or automated site-

failover for Server and Storage resources

Supported environments:

HP-UX 11i v2 & v3 with Serviceguard

Up to 210km (RC supported max)

Requirements:

HP 3PAR Disk Arrays

3PAR Remote Copy

HP Serviceguard Metrocluster

Max 200ms network round-trip delay

A A

B

A

Quorum

Data Center 3

Data Center 1 Data Center 2

Up to 210km

Remote Copy

See also: http://h20000.www2.hp.com/bc/docs/support/SupportManual/c02967683/c02967683.pdf

Automated ESX Disaster Recovery

VMware ESX DR with SRM

HP 3PAR

Servers

VMware Infrastructure

Virtual Machines

VirtualCenter

Site

Recovery

Manager

HP 3PAR

Servers

VMware Infrastructure

Virtual Machines

VirtualCenter

Site

Recovery

Manager

Production Site

Recovery Site

What does it do?

Simplifies DR and increases reliability

Integrates VMware Infrastructure with HP

3PAR Remote Copy and Virtual Copy

Makes DR protection a property of the VM

Allowing you to pre-program your disaster

response

Enables non-disruptive DR testing

Requirements:

VMware vSphere

VMware vCenter

VMware vCenter Site Recovery Manager

HP 3PAR Replication Adapter for VMware

vCenter Site Recovery Manager

HP 3PAR Remote Copy Software

HP 3PAR Virtual Copy Software (for DR

failover testing)

Production LUNs

Remote Copy DR LUNs

Virtual Copy Test LUNs

HP 3PAR

Dynamic and Adaptive

Optimization

A New Optimization Strategy for SSDs

Flash Price decline has enabled

SSD as a viable storage tier but

data placement is difficult on a

per LUN basis

Non-optimized

approach

Non-Tiered Volume/LUN

SSD only

Tier 2 NL

Tier 1 FC Optimized

approach for

leveraging SSDs

Multi-Tiered Volume/LUN

Tier 0 SSD

A new way of autonomic data

placement and cost/performance

optimization is required:

HP 3PAR Adaptive Optimization

Tier 0 SSD

Tier 1 FC

Tier 2 SATA

3PAR Dynamic

Optimization

3PAR Adaptive

Optimization

- Region

Autonomic

Tiering and

Data Movement

Autonomic

Data

Movement

Manual or Automatic Tiering

HP 3PAR Dynamic and Adaptive Optimization

Storage Tiers HP 3PAR Dynamic Optimization

Performance

Cost per Useable TB

FC

Nearline

RAID 1

RAID 5

(2+1)

RAID 5

(3+1)

RAID 5

(7+1)

RAID 1

RAID 5

(2+1)

RAID 5

(3+1)

RAID 5

(7+1)

RAID 6 (6+2)

RAID 6 (14+2)

RAID 6 (6+2)

RAID 6 (14+2)

RAID 1

RAID 5

(2+1)

RAID 5

(3+1)

RAID 5

(7+1)

RAID 6 (6+2)

RAID 6 (14+2)

SSD

In a single command

non-disruptively optimize and

adapt cost, performance,

efficiency and resiliency

HP 3PAR Dynamic Optimization Use Cases

Deliver the required service levels for the lowest possible cost throughout the data lifecycle

10TB net 10TB net 10TB net

~50%

Savings

~80%

Savings

RAID 10

300GB FC Drives

RAID 50 (3+1)

600GB FC Drives

RAID 50 (7+1)

2TB SATA-Class Drives

Free 7.5 TBs of net

capacity on demand !

10 TB net

7.5TB net free

20 TB raw RAID 10 20 TB raw RAID 50

10 TB net

Accommodate rapid or unexpected, application growth on demand by freeing raw capacity
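The freed-capacity figure can be checked with simple RAID arithmetic. The slide does not say which RAID 50 set size produces the 7.5 TB; the Python sketch below assumes 7+1, which reproduces that number from the 20 TB raw and 10 TB net shown.

```python
def net_from_raw(raw_tb: float, data: int, parity: int) -> float:
    """Usable capacity for a given raw capacity and RAID set geometry."""
    return raw_tb * data / (data + parity)


def raw_for_net(net_tb: float, data: int, parity: int) -> float:
    """Raw capacity needed to hold a given usable capacity."""
    return net_tb * (data + parity) / data


raw_tb = 20.0
net_tb = net_from_raw(raw_tb, data=1, parity=1)  # RAID 10: 20 TB raw holds 10 TB net

# Assumption: the volumes are converted to RAID 50 with a 7+1 set size.
raw_after = raw_for_net(net_tb, data=7, parity=1)
freed_raw = raw_tb - raw_after
freed_net = net_from_raw(freed_raw, data=7, parity=1)
print(round(raw_after, 2), round(freed_raw, 2), round(freed_net, 2))
```

With a 3+1 set size the same calculation would free 5 TB net, so the set size matters for the claimed saving.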

Optimize QoS levels with autonomic rebalancing without pre-planning

HP 3PAR Dynamic Optimization at a Customer

Before Dynamic Optimization

0

100

200

300