DreamCAM: a FPGA-based Platform for Smart Camera Networks

Cédric Bourrasset, Luca Maggiani, Jocelyn Sérot, François Berry, Paolo Pagano

Institut Pascal, UMR 6602 CNRS, Université Blaise Pascal, Clermont-Fd, France

TeCIP Institute, Scuola Superiore Sant'Anna, Pisa, Italy

National Laboratory of Photonic Networks, CNIT, Pisa, Italy

Abstract: The main challenges in smart camera networks come from the limited capacity of network communications. Indeed, wireless protocols such as IEEE 802.15.4 target low data rate, low power consumption and low cost wireless networking in order to fit the requirements of sensor networks. Since nodes increasingly integrate image sensors, network bandwidth has become a strong limiting factor in application deployment. This means that data must be processed at the node level before being sent on the network.

In this context, FPGA-based platforms, which support massive data parallelism, offer large opportunities for on-board processing. In this paper we present our FPGA-based smart camera platform, called DreamCam, which is able to autonomously exchange processed information on an Ethernet network.

I. INTRODUCTION

On-board processing is a key issue for smart camera platforms. Most existing platforms are based on general-purpose processor (GPP) units [1], [2]. This kind of architecture offers high-level programming, and modern embedded processors provide good performance, but they cannot meet real-time processing constraints when image resolution increases. On the other hand, FPGA-based architectures, which offer more massive computing capability, have been the subject of tremendous attention for implementing image processing applications on smart camera nodes [3], [4]. However, while the introduction of FPGAs can address some performance issues in smart camera networks, it introduces new challenges concerning node programmability, hardware abstraction, dynamic configuration and network management.

In [5], we proposed a hardware and software architecture for smart cameras addressing the aforementioned issues. This paper details the first development of this ambitious proposal, giving insights into the final hardware. We implement a simplified version of the hardware architecture and do not use the full communication stack (the application layer is not integrated). Nonetheless, we show how processed information (histograms here) can be exchanged between two camera nodes using an Ethernet connection.

II. PLATFORM

The platform used is an FPGA-based smart camera developed at our institute and called DreamCam [6] (Fig. 1). The DreamCam platform is equipped with a 1.3-megapixel active-pixel digital image sensor from E2V, supporting sub-sampling/binning and multiple regions of interest (ROIs). The FPGA is a Cyclone-III EP3C120 manufactured by Altera. This FPGA is connected to 6 × 1 MB of SRAM, where each 1 MB memory block has private data and address buses (hence the programmer may address the six memories in parallel). The FPGA also embeds a CMOS driver to control the image sensor and a communication controller (USB or Gigabit Ethernet).

Fig. 1: Our smart camera system

III. EXPERIMENT

The proposed demonstration features two smart cameras exchanging information on an Ethernet network (see the screenshot in Fig. 2).

Fig. 2: Screenshot of the demonstration

The global system architecture is depicted in Figure 3. It includes two smart cameras and a computer. The computer is used to sniff Ethernet packets and check inter-node communication. In this application, each camera processes the input video stream on the fly (without frame buffering), calculates the corresponding image histograms and sends them to the other cameras at the frame rate. This first simple application is the basis of color-based object tracking in a smart camera network [7].
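The on-the-fly processing described above can be illustrated in software. The following Python sketch is not the FPGA implementation; it only mirrors the idea of updating a histogram pixel by pixel as the stream arrives, without storing the frame, and then serializing the result as a compact payload that a node could send to its peers. The 8-bit pixel depth, the 256 bins, the 32-bit big-endian counters, the synthetic pixel source and all function names are assumptions made for illustration; the paper does not specify them.

```python
import struct
from typing import Iterable, Iterator, List

NUM_BINS = 256  # assumption: 8-bit grayscale pixels, one bin per intensity


def streaming_histogram(pixels: Iterable[int], num_bins: int = NUM_BINS) -> List[int]:
    """Accumulate an intensity histogram one pixel at a time.

    Only the bin counters are kept in memory; the frame itself is never stored.
    """
    hist = [0] * num_bins
    for p in pixels:
        hist[p] += 1
    return hist


def pack_histogram(hist: List[int]) -> bytes:
    """Serialize bin counts as unsigned 32-bit big-endian integers (assumed format)."""
    return struct.pack(f">{len(hist)}I", *hist)


def unpack_histogram(payload: bytes) -> List[int]:
    """Inverse of pack_histogram: recover the list of bin counts."""
    count = len(payload) // 4
    return list(struct.unpack(f">{count}I", payload))


def pixel_stream(width: int, height: int) -> Iterator[int]:
    """Hypothetical stand-in for the image sensor: yields synthetic 8-bit pixels."""
    for y in range(height):
        for x in range(width):
            yield (x + y) % 256


if __name__ == "__main__":
    hist = streaming_histogram(pixel_stream(64, 48))
    payload = pack_histogram(hist)
    # The receiving node recovers exactly the histogram that was sent.
    assert unpack_histogram(payload) == hist
    print(sum(hist), len(payload))
```

Under these assumptions the payload is 256 × 4 = 1024 bytes whatever the frame size, which fits in a single UDP datagram on a standard 1500-byte Ethernet MTU and is far smaller than a raw frame.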

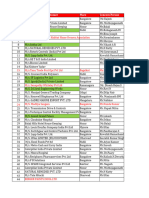

Fig. 3: Demonstration system architecture

Figure 4 shows the FPGA logical architecture within each camera. The first component is an image sensor IP block which controls the E2V image sensor. Then, the flow of pixels is processed by a histogram hardware module which computes the image intensity distribution. The next component is a softcore which controls the image sensor configuration (resolution, frame rate, ROI). It is also in charge of network management. In the proposed application, each softcore has to manage a very simple kind of communication: sending and receiving image histogram data at the image sensor frame rate. The last component is an Ethernet controller IP which manages transport down to the physical layer, from UDP packet encapsulation to physical chip configuration (Marvell 88E1111). The Ethernet IP is addressed by the softcore through registers to configure the port, IP address and MAC address.

Fig. 4: Smart Camera implementation

The total resource allocation of this application uses only 5% of the logic elements but 45% of the FPGA memory for a 1240 × 1080 resolution frame. The largest memory consumer is the softcore component, which requires instruction and data memory space. If a software application exceeds the FPGA internal memory, we will shift the softcore memory space to the external memory blocks available in the camera. Table I shows detailed implementation results.

TABLE I: Hardware resource usage level

| Operation | Logic Elements (%) | Memory bits (%) |
|---|---|---|
| Image sensor IP | 271 (0.2%) | |
| Softcore | 2040 (1.7%) | 1611264 (40.4%) |
| Processing | 1250 (1.5%) | 131072 (3.2%) |
| Ethernet IP | 2643 (2.2%) | 34944 (1%) |
| Total (usage level) | 6204 (5.2%) | 1777280 (44.6%) |

IV. CONCLUSION

The objectives of this demonstration are twofold. First, it shows our theoretical architecture implemented on a real hardware platform and supporting a simple application. Second, we show the good integration of our smart camera platform in a collaborative context through Ethernet communication. Future development will focus on the integration of a wireless transceiver and on a real distributed smart camera application such as multi-camera tracking.

ACKNOWLEDGMENT

This work has been sponsored by the French government research program "Investissements d'avenir" through the IMobS3 Laboratory of Excellence (ANR-10-LABX-16-01), by the European Union through the program "Regional competitiveness and employment 2007-2013" (ERDF Auvergne region), and by the Auvergne region. This work was carried out in collaboration with the Networks of Embedded Systems team at CNIT (Consorzio Nazionale Interuniversitario per le Telecomunicazioni).

REFERENCES

[1] P. Chen, P. Ahammad, C. Boyer, S.-I. Huang, L. Lin, E. Lobaton, M. Meingast, S. Oh, S. Wang, P. Yan, A. Yang, C. Yeo, L.-C. Chang, J. D. Tygar, and S. Sastry, "Citric: A low-bandwidth wireless camera network platform," in Distributed Smart Cameras, 2008. Second ACM/IEEE International Conference on, 2008.

[2] W.-C. Feng, E. Kaiser, W. C. Feng, and M. L. Baillif, "Panoptes: scalable low-power video sensor networking technologies," ACM Trans. Multimedia Comput. Commun. Appl., vol. 1, no. 2, pp. 151-167, May 2005. [Online]. Available: http://doi.acm.org/10.1145/1062253.1062256

[3] I. Bravo, J. Baliñas, A. Gardel, J. L. Lázaro, F. Espinosa, and J. García, "Efficient smart CMOS camera based on FPGAs oriented to embedded image processing," Sensors, vol. 11, no. 3, pp. 2282-2303, 2011.

[4] E. Norouznezhad, A. Bigdeli, A. Postula, and B. Lovell, "A high resolution smart camera with GigE Vision extension for surveillance applications," in Distributed Smart Cameras, 2008. ICES 2008. Second ACM/IEEE International Conference on, 2008, pp. 1-8.

[5] C. Bourrasset, J. Serot, and F. Berry, "FPGA-based smart camera mote for pervasive wireless network," in Distributed Smart Cameras, 2013. ICDSC '13. Seventh ACM/IEEE International Conference on, 2013.

[6] M. Birem and F. Berry, "DreamCam: A modular FPGA-based smart camera architecture," in Journal of System Architecture, Elsevier, to appear, 2013.

[7] K. Nummiaro, E. Koller-Meier, T. Svoboda, D. Roth, and L. V. Gool, "Color-based object tracking in multi-camera environments," in 25th Pattern Recognition Symposium, DAGM, 2003, pp. 591-599.
