Introduction To Computation and Programming Using Python, Revised - Guttag, John v..233


Chapter 15. Understanding Experimental Data

15.2.1 Coefficient of Determination

When we fit a curve to a set of data, we are finding a function that relates an independent variable (inches horizontally from the launch point in this example) to a predicted value of a dependent variable (inches above the launch point in this example). Asking about the goodness of a fit is equivalent to asking about the accuracy of these predictions. Recall that the fits were found by minimizing the mean square error. This suggests that one could evaluate the goodness of a fit by looking at the mean square error. The problem with that approach is that while there is a lower bound for the mean square error (zero), there is no upper bound. This means that while the mean square error is useful for comparing the relative goodness of two fits to the same data, it is not particularly useful for getting a sense of the absolute goodness of a fit.
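One way to see why the mean square error gives no absolute sense of quality is that its value depends on the units of the data. The sketch below assumes NumPy arrays; the helper `mean_square_error` and the sample values are hypothetical and are not taken from the text.

```python
import numpy as np

def mean_square_error(measured, predicted):
    """Mean of the squared differences between two equal-length arrays."""
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return ((predicted - measured)**2).mean()

# Hypothetical heights above the launch point, in inches.
measured_in = np.array([10.0, 20.0, 30.0, 40.0])
predicted_in = np.array([12.0, 18.0, 33.0, 39.0])

mse_inches = mean_square_error(measured_in, predicted_in)

# The same measurements and the same predictions, expressed in feet.
mse_feet = mean_square_error(measured_in / 12.0, predicted_in / 12.0)

print(mse_inches, mse_feet)
```

The fit is identical in both cases, yet the error in inches is 144 times the error in feet, so neither number alone says whether the fit is good.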

We can calculate the absolute goodness of a fit using the coefficient of determination, often written as $R^2$.⁹⁷ Let $y_i$ be the $i^{th}$ observed value, $p_i$ be the corresponding value predicted by the model, and $\mu$ be the mean of the observed values.

$$R^2 = 1 - \frac{\sum_i (y_i - p_i)^2}{\sum_i (y_i - \mu)^2}$$

By comparing the estimation errors (the numerator) with the variability of the original values (the denominator), $R^2$ is intended to capture the proportion of variability in a data set that is accounted for by the statistical model provided by the fit. When the model being evaluated is produced by a linear regression, the value of $R^2$ always lies between 0 and 1. If $R^2 = 1$, the model explains all of the variability in the data. If $R^2 = 0$, there is no relationship between the values predicted by the model and the actual data.
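The two boundary cases can be checked by hand. As a small worked example with hypothetical observations $y = (1, 2, 3)$, the mean is $\mu = 2$ and the variability of the original values is $\sum_i (y_i - \mu)^2 = 1 + 0 + 1 = 2$. A model that reproduces every observation has zero estimation error, while a model that always predicts the mean has an estimation error equal to the variability:

```latex
\begin{align*}
p = (1, 2, 3):&\quad R^2 = 1 - \frac{0 + 0 + 0}{2} = 1 \\
p = (2, 2, 2):&\quad R^2 = 1 - \frac{1 + 0 + 1}{2} = 0
\end{align*}
```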

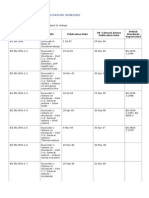

The code in Figure 15.5 provides a straightforward implementation of this statistical measure. Its compactness stems from the expressiveness of the operations on arrays. The expression (predicted - measured)**2 subtracts the elements of one array from the elements of another, and then squares each element in the result. The expression (measured - meanOfMeasured)**2 subtracts the scalar value meanOfMeasured from each element of the array measured, and then squares each element of the result.
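The two array expressions can be tried in isolation. This is a minimal sketch that assumes the arrays are NumPy arrays; the variable names and sample values are hypothetical.

```python
import numpy as np

measured = np.array([1.0, 2.0, 3.0])
predicted = np.array([1.5, 2.0, 2.0])

# Array minus array: subtraction is element by element, then each
# difference is squared.
squared_errors = (predicted - measured)**2

# Array minus scalar: the scalar is subtracted from every element,
# then each difference is squared.
mean_of_measured = measured.sum() / float(len(measured))
squared_deviations = (measured - mean_of_measured)**2

print(squared_errors)
print(squared_deviations)
```

Neither expression needs an explicit loop, which is what keeps the function in Figure 15.5 to a handful of lines.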

def rSquared(measured, predicted):
    """Assumes measured a one-dimensional array of measured values
               predicted a one-dimensional array of predicted values
       Returns coefficient of determination"""
    # Sum of squared differences between predictions and measurements
    estimateError = ((predicted - measured)**2).sum()
    meanOfMeasured = measured.sum()/float(len(measured))
    # Sum of squared deviations of the measurements from their mean
    variability = ((measured - meanOfMeasured)**2).sum()
    return 1 - estimateError/variability

Figure 15.5 Computing $R^2$
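As a usage sketch, the function can be applied to a straight-line fit. The block below repeats rSquared from Figure 15.5 so that it runs on its own. The data points are hypothetical, and the use of `numpy.polyfit` and `numpy.polyval` to produce the fit is an assumption made for this example rather than something this section specifies.

```python
import numpy as np

def rSquared(measured, predicted):
    """Coefficient of determination, as in Figure 15.5."""
    estimateError = ((predicted - measured)**2).sum()
    meanOfMeasured = measured.sum()/float(len(measured))
    variability = ((measured - meanOfMeasured)**2).sum()
    return 1 - estimateError/variability

# Hypothetical observations that lie close to a straight line.
xVals = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
yVals = np.array([0.1, 0.9, 2.2, 2.8, 4.1])

# Least-squares fit of a degree-1 polynomial (slope and intercept).
coefficients = np.polyfit(xVals, yVals, 1)
predicted = np.polyval(coefficients, xVals)

r2 = rSquared(yVals, predicted)
print('R-squared of the linear fit:', r2)
```

Because the points are nearly collinear, the result is close to 1. Scattering the points further from the line would move it toward 0.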

97 There are several different definitions of the coefficient of determination. The definition supplied here is used to evaluate the quality of a fit produced by a linear regression.
