International Journal on Recent and Innovation Trends in Computing and Communication

Volume: 2 Issue: 11

ISSN: 2321-8169

3692 - 3696

_______________________________________________________________________________________________

Weblog Analysis with Map-Reduce and Performance Comparison of Single v/s Multinode Hadoop Cluster

Dhara Kalola
PG Student, Computer Engineering Department
Shah & Anchor Kutchhi Engineering College
Chembur, Mumbai, India
dharapatel23@gmail.com

Prof. Uday Bhave
I/C HOD, Computer Engineering Department
Shah & Anchor Kutchhi Engineering College
Chembur, Mumbai, India
uday_b1000@yahoo.com

Abstract: In the Internet era, websites are a useful source of information. Because of the growing popularity of the World Wide Web, a website can receive thousands to millions of requests per day, so the log files of such websites grow in size day by day. These log files are a useful source of information for identifying user behavior. This paper is an attempt to analyze weblogs using Hadoop Map-Reduce. Hadoop is an open-source framework that provides parallel storage and processing of large datasets, and this paper uses that feature of Hadoop to analyze the large, semi-structured dataset of a website's logs. The performance of the algorithm is compared on pseudo-distributed and fully distributed mode Hadoop clusters.

Keywords: Hadoop; Map-Reduce; Weblog Analysis

__________________________________________________*****_________________________________________________

I. INTRODUCTION

When an Internet user visits a website or follows a link, the web server records the details of the request in the form of web access logs. A web access log shows the kinds of files users are accessing, the location from which each request was made, and other information such as the web browsers and devices visitors are using. An access log is a list of all the requests that users have made to a website. Nowadays people use the Internet for day-to-day activities such as social networking, news and education, so websites receive thousands of visitors every day. Because the web server records every user action, web server log files keep growing in size. Such log files are semi-structured, and they are very difficult to store, process and analyze with traditional database or data warehouse systems within a tolerable elapsed time.

Hadoop is an open-source Apache framework for processing large, unstructured datasets on multinode clusters made of commodity hardware. It divides large datasets into chunks and distributes them across the machines in the cluster. Map-Reduce, Hadoop's programming paradigm, then processes these chunks in parallel and produces the result in less time than traditional systems.

This paper uses Hadoop Map-Reduce to process the large, semi-structured dataset of web access logs and extract useful information from it. This can help website administrators track the usage of their websites and the behavior of their users.

II. HADOOP OVERVIEW

Apache Hadoop is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage [4]. Hadoop consists of two parts: a distributed file system (HDFS) and a method for distributed computing (Map-Reduce). It has a master/slave architecture: a Hadoop cluster consists of one master node and one or more slave nodes. Hadoop has five daemons: NameNode, Secondary NameNode, DataNode, JobTracker and TaskTracker.

A. HDFS

The Hadoop Distributed File System (HDFS) is a distributed file system designed to be deployed on low-cost commodity hardware. Three Hadoop daemons are associated with HDFS: NameNode, DataNode and Secondary NameNode. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files by clients. There are a number of DataNodes, usually one per node in the cluster, which store the actual data blocks and manage the storage attached to the nodes that they run on.

IJRITCC | November 2014, Available @ http://www.ijritcc.org

When a file is uploaded to HDFS from the master, it is divided into blocks that are distributed among the DataNodes running on the slave machines of the same cluster. The NameNode is responsible for the splitting of files into blocks, the replication of blocks for fault tolerance, and keeping track of the blocks for integrity. DataNodes send periodic heartbeat messages and block reports to the NameNode. The Secondary NameNode periodically merges the log of file system changes into the namespace image, a housekeeping function for the NameNode that allows it to start faster next time [2][9].
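The splitting and replication described above can be pictured with a small in-memory model. The Python sketch below is an illustration under stated assumptions, not HDFS code: the 64 MB block size and replication factor of 3 are assumed from the usual defaults of the dfs.block.size and dfs.replication settings in Hadoop releases of this period, and split_into_blocks and place_replicas are hypothetical names. Real HDFS uses a rack-aware placement policy chosen by the NameNode; the round-robin assignment here is a deliberate simplification.

```python
"""Toy model of HDFS block splitting and replica placement (not the real HDFS API)."""
from typing import Dict, List, Tuple

BLOCK_SIZE = 64 * 1024 * 1024  # assumption: 64 MB, the classic default block size
REPLICATION = 3                # assumption: default replication factor


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs; the last block may be shorter than block_size."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def place_replicas(num_blocks: int, datanodes: List[str],
                   replication: int = REPLICATION) -> Dict[int, List[str]]:
    """Assign each block to distinct DataNodes, round-robin (simplified placement).

    The number of copies is capped at the number of DataNodes, because two
    replicas of the same block are never stored on the same node.
    """
    copies = min(replication, len(datanodes))
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [datanodes[(block_id + i) % len(datanodes)]
                               for i in range(copies)]
    return placement


if __name__ == "__main__":
    blocks = split_into_blocks(200 * 1024 * 1024)  # a hypothetical 200 MB log file
    print(len(blocks))                             # 4 blocks: 64 + 64 + 64 + 8 MB
    print(place_replicas(len(blocks), ["master", "slave"]))
```

With a two-node cluster like the one in Section III, each block can hold at most two replicas, one per node, which is why the sketch caps the copy count at the number of DataNodes.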

Figure 1. Hadoop Architecture [6]

B. Map-Reduce

Hadoop MapReduce is a software framework for writing applications that process large datasets in parallel on large clusters of commodity hardware in a reliable, fault-tolerant manner. Two Hadoop daemons are associated with MapReduce: the JobTracker and the TaskTracker. Only one JobTracker runs, on the master. It divides a job into a number of tasks and assigns them to the TaskTrackers running on the slaves of the same cluster. Each TaskTracker is responsible for executing its assigned tasks and reporting their progress back to the JobTracker, and the final output of the job is written to HDFS. Thus, the tasks of a MapReduce job run in parallel across the Hadoop cluster.

The MapReduce framework operates exclusively on <key, value> pairs; that is, the framework views the input to the job as a set of <key, value> pairs and produces a set of <key, value> pairs as the output of the job. The input is divided into smaller units of work, and each unit, typically one block of data, is processed by a Mapper. The output of the Mappers is a sorted list of <key, value> pairs, which is then passed to the Reduce phase. The Reduce task merges its input to produce the final output as <key, value> pairs, which are then written to HDFS [7][9][10].

Figure 2. Map-Reduce flow [11]

C. Hadoop Configuration

Hadoop runs in one of three modes [3]:

Standalone: All Hadoop functionality runs in one Java process.

Pseudo-Distributed: All the Hadoop daemons are started on the local machine. This mode provides a view of a real Hadoop setup but does not permit distributed storage or processing across multiple machines.

Fully Distributed: Hadoop functionality is distributed across a cluster of machines, each of which plays a different role. This allows multiple machines to contribute processing power and storage to the cluster.
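The <key, value> flow of subsection B, and the way the modes above differ in how many workers share the map work, can be illustrated with an in-process Python simulation. This is a sketch, not Hadoop's Java API: run_job, map_fn and reduce_fn are hypothetical names, and a thread pool stands in for the TaskTrackers. Setting num_workers=1 loosely mirrors a single node and num_workers=2 the two-node cluster used later; because of Python's global interpreter lock, the sketch shows the data flow, not the speed-up.

```python
"""In-process simulation of the Map-Reduce flow: map -> shuffle/sort -> reduce."""
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List, Tuple

Pair = Tuple[str, int]


def run_job(splits: List[List[str]],
            map_fn: Callable[[str], Iterable[Pair]],
            reduce_fn: Callable[[str, List[int]], int],
            num_workers: int = 1) -> List[Pair]:
    """Run map_fn over every record of every split, group by key, then reduce."""

    def map_split(split: List[str]) -> List[Pair]:
        # One "map task" per input split, as a TaskTracker would run it.
        out: List[Pair] = []
        for record in split:
            out.extend(map_fn(record))
        return out

    # Map phase: splits are processed concurrently by num_workers workers.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        mapped = list(pool.map(map_split, splits))

    # Shuffle phase: group all intermediate values by key.
    groups: Dict[str, List[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)

    # Reduce phase: keys are handled in sorted order, as map output is sorted by key.
    return [(key, reduce_fn(key, groups[key])) for key in sorted(groups)]


def map_fn(line: str) -> Iterable[Pair]:
    # Emit <token, 1> for every whitespace-separated token.
    for token in line.split():
        yield (token, 1)


def reduce_fn(key: str, values: List[int]) -> int:
    # Sum all counts for the same key.
    return sum(values)


if __name__ == "__main__":
    splits = [["GET /a", "GET /b"], ["GET /a"]]  # two hypothetical input splits
    print(run_job(splits, map_fn, reduce_fn, num_workers=2))
    # [('/a', 2), ('/b', 1), ('GET', 3)]
```

The result does not depend on num_workers: parallelism changes where and when map tasks run, not what the job computes.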

III. EXPERIMENTAL SETUP

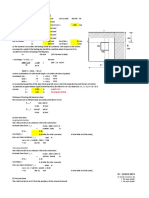

Both the pseudo-distributed and the fully distributed setups were implemented successfully. The clusters are built from machines with 2 GB RAM, an Intel Atom 330 CPU and Windows 7 Ultimate.

In pseudo-distributed mode, the cluster consists of a single machine on which all the Hadoop daemons run. The setup requires Hadoop 0.19.1, Eclipse Europa 3.3.2, Java 1.6 and Cygwin. Figure 3 shows the cluster summary for the single-node cluster with one machine.

Figure 3. NameNode Configuration for Single Node Cluster

In fully distributed mode, a two-node cluster is created in which one machine acts as a slave and the other acts as both master and slave. The master node runs the NameNode, DataNode, JobTracker and TaskTracker daemons, and the slave node runs a TaskTracker and a DataNode. Hadoop clusters can be built from inexpensive computers. If a slave node fails, its work is redistributed to the remaining nodes and the cluster continues to operate; no data is lost as long as the blocks stored on that node are replicated elsewhere.

The master node is configured with the IP address of the slave, and the NameNode holds all the file system metadata of the cluster. The master identifies the slave by its IP address. The log data is split into blocks, which are processed on the two machines in parallel: the job code is transmitted from the master to the slave, which processes the blocks assigned to it. The setup requires Hadoop 0.19.1, Eclipse Europa 3.3.2, Java 1.6, Cygwin and more than one Windows PC on a LAN. Figure 4 shows the cluster summary of the multinode cluster with two machines.

Figure 4. NameNode Configuration for Multi Node Cluster

IV. WEBLOG ANALYSIS AND RESULTS

In this experiment, we analyzed the weblog data published by 1.USA.GOV. The dataset consists of web logs of the websites accessed by users. It provides a raw feed of data created whenever anyone clicks on a URL. The endpoint responds to HTTP requests for any URL and returns a stream of JSON (JavaScript Object Notation) entries, one per line, that represent real-time clicks. The files hold a 12.5 GB dataset collected from various periods of the years 2011, 2012 and 2013 [5]. A sample log file is shown in Figure 5.

Figure 5. Sample Weblog File

Log files can supply a lot of useful information to the webmaster, such as the number of hits the website has received within a given period of time or the type of browser visitors are using to view the site. To analyze the weblog data, the files are uploaded to HDFS. The following functions are performed on this set of log files to obtain useful information.

A. User Agent Analysis

When users visit a website, a text string is sent to the server of that website to identify the user agent. The user-agent identification contains specific information, including the web browser accessing the website, the application name and version, the host operating system and the language. This function uses the a: USER_AGENT attribute of the dataset. It breaks down the user-agent string and identifies the browser from which each request was made. The user can apply country and city filters. A sample execution for Country = IN and City = Mumbai is given below.

Input filter: (Country=IN, City=Mumbai, User Agent)
Map1 (Key, Value): (Offset, (Country, City, User Agent))
Map2 (Key, Value), first branch: (IN, (Mumbai, Mozilla)), (IN, (Mumbai, IE))
Map2 (Key, Value), second branch: (IN, (Mumbai, Mozilla)), (IN, (Mumbai, Safari))
Map3 (Key, Value), first branch: (Mumbai, (Mozilla)), (Mumbai, (IE))
Map3 (Key, Value), second branch: (Mumbai, (Mozilla)), (Mumbai, (Safari))
Reduce (Key, Value), first branch: (Mozilla, 1), (IE, 1)
Reduce (Key, Value), second branch: (Mozilla, 1), (Safari, 1)
Output:
Mozilla,2
IE,1
Safari,1

Figure 6. Sample Execution of Map-Reduce for Weblog Analysis


The function reads the files from the input directory in HDFS and applies Map-Reduce chaining according to the filters specified by the user; a sample execution is shown in Figure 6. After execution, the result is stored in a file in the output directory in HDFS. This result is also in the form of <key, value> pairs. The function reads this file and displays the result graphically, as shown in Figure 7, which gives the number of hits from each type of browser in the country and city specified by the user.
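The chained filter-and-count of Figure 6 can be condensed into a short Python sketch. It is not the authors' implementation and runs in memory rather than on Hadoop. It assumes one JSON object per line, with a holding the user-agent string as stated above; the c (country code) and cy (city) keys are an assumption about the 1.USA.GOV feed. The browser_of helper is a hypothetical, deliberately crude classifier, and for brevity the three map stages of Figure 6 are folded into one function.

```python
"""Sketch of user-agent analysis: filter by country and city, classify the browser, count hits."""
import json
from collections import Counter
from typing import Iterable, Optional


def browser_of(user_agent: str) -> str:
    """Hypothetical, simplified browser classifier (not the paper's rules)."""
    # Order matters: Chrome strings also contain "Safari", and most strings start with "Mozilla/".
    if "MSIE" in user_agent or "Trident" in user_agent:
        return "IE"
    if "Chrome" in user_agent:
        return "Chrome"
    if "Firefox" in user_agent:
        return "Firefox"
    if "Safari" in user_agent:
        return "Safari"
    # Fall back to the product token before the first "/", e.g. "Mozilla" or "Opera".
    return user_agent.split("/", 1)[0] or "Unknown"


def map_record(line: str, country: str, city: Optional[str]) -> Optional[str]:
    """Map step: parse one JSON line and return a browser name if it passes the filters."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None  # skip malformed lines instead of failing the whole job
    if not isinstance(record, dict):
        return None
    # Assumption: "c" is the country code, "cy" the city and "a" the user-agent string.
    if record.get("c") != country:
        return None
    if city is not None and record.get("cy") != city:
        return None
    return browser_of(record.get("a") or "")


def user_agent_hits(lines: Iterable[str], country: str, city: Optional[str] = None) -> Counter:
    """Reduce step: sum the implicit (browser, 1) pairs emitted by the map step."""
    browsers = (map_record(line, country, city) for line in lines)
    return Counter(b for b in browsers if b is not None)


if __name__ == "__main__":
    logs = [
        '{"a": "Mozilla/5.0 (Windows NT 6.1; rv:10.0) Gecko/20100101 Firefox/10.0", "c": "IN", "cy": "Mumbai"}',
        '{"a": "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1)", "c": "IN", "cy": "Mumbai"}',
        '{"a": "Mozilla/5.0 (Windows NT 6.1; rv:10.0) Gecko/20100101 Firefox/10.0", "c": "IN", "cy": "Mumbai"}',
        '{"a": "Mozilla/5.0 (Macintosh) AppleWebKit/534 (KHTML, like Gecko) Safari/534", "c": "US", "cy": "Boston"}',
        "not json",
    ]
    print(user_agent_hits(logs, "IN", "Mumbai"))  # Counter({'Firefox': 2, 'IE': 1})
```

Passing city=None drops the city filter, which corresponds to filtering by country only.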

Figure 7. User Agent v/s Number of Hits for Country = IN, City = Mumbai

B. Popular Links by Country

This function can be used to find the most popular links in a country. It generates a list of URLs along with the number of times each URL has been accessed from that country, sorted in descending order of the number of accesses. The function uses the hh: SHORT_URL attribute of the dataset. It takes a country name as user input and applies the Map function only to the log entries from that country, generating a <key, value> pair such as <hh, 1> for every URL. The Reduce function combines all values with the same key and sums them. The final result is written to HDFS; the function then reads it from HDFS and displays it as a table, as shown in Table I.

TABLE I. POPULAR LINKS IN COUNTRY = JAPAN

URL            No. of Hits
1.usa.gov      58
go.nasa.gov    18
bit.ly         10
dcft.co        1
j.mp           1
usat.ly        1

V. PERFORMANCE ANALYSIS

The above functions are executed on the single-node and multinode clusters, and the execution time is measured for different dataset sizes. Figure 8 shows the execution time with respect to the size of the dataset in HDFS. In pseudo-distributed mode, a single machine works as both master and slave: all files are stored in HDFS on that node, and all computation is local. In fully distributed mode there is one master and one slave, each with its own local storage. The job is divided into two tasks that are executed in parallel on the two machines, so the fully distributed cluster takes less time to execute. The experimental results indicate that the Hadoop cluster scales to support larger datasets and that job execution time decreases as the number of nodes increases from one to two.

Figure 8. Performance Analysis: Size of Data v/s Execution Time
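The popular-links function of Section IV-B follows the same filter, emit and sum pattern, with a final sort by hit count. The Python sketch below is an in-memory illustration, not the authors' code: it assumes one JSON object per line, hh as the short-URL attribute (as stated in Section IV-B) and c as the country code (an assumption about the feed, with Japan written as "JP"). The name popular_links is hypothetical, the map and reduce steps are fused into a single loop for brevity, and ties are broken alphabetically because the paper does not state a tie order.

```python
"""Sketch of 'popular links by country': emit <hh, 1>, sum by key, sort descending."""
import json
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def popular_links(lines: Iterable[str], country: str) -> List[Tuple[str, int]]:
    counts: Dict[str, int] = defaultdict(int)
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed log lines
        if not isinstance(record, dict):
            continue
        # Assumption: "c" is the country code; "hh" is the short-URL host.
        if record.get("c") != country or not record.get("hh"):
            continue
        # Map emits <hh, 1>; the reduce step sums the values per key.
        counts[record["hh"]] += 1
    # Sort by hit count (descending), breaking ties alphabetically by URL.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    sample = [
        '{"hh": "1.usa.gov", "c": "JP"}',
        '{"hh": "go.nasa.gov", "c": "JP"}',
        '{"hh": "1.usa.gov", "c": "JP"}',
        '{"hh": "bit.ly", "c": "US"}',
    ]
    for url, hits in popular_links(sample, "JP"):
        print(url, hits)
    # 1.usa.gov 2
    # go.nasa.gov 1
```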

VI. CONCLUSION

In this work, we explored the weblog analysis problem using a Hadoop cluster, HDFS and Map-Reduce, and found that the fully distributed Hadoop cluster performs significantly better as the size of the dataset increases. Analyzing web access logs in this way can help advertisers and website administrators find the websites that are most requested from a particular region, and can help website owners track the number of users visiting per day.

VII. FUTURE WORK

Currently the multinode Hadoop cluster is made up of two nodes; it can be expanded by adding more slave nodes to achieve higher performance. The NameNode single point of failure can be addressed by configuring two nodes as masters in the Hadoop cluster and regularly backing up the NameNode logs of the main master node to a backup node. Whenever the main master node fails, the backup node can take its place, and another node from the cluster will be chosen as the new backup node.

REFERENCES

[1] http://www.rackspace.com/knowledge_center/article/readingapache-web-logs

[2] The Hadoop Distributed File System: Architecture and Design. Website: hadoop.apache.org/docs/r0.18.0/hdfs_design.pdf

[3] http://hayesdavis.net/2008/06/14/running-hadoop-on-windows/

[4] Welcome to Apache Hadoop, website: http://hadoop.apache.org/

[5] http://www.usa.gov/About/developer-resources/1usagov.shtml

[6] Aditya Patel, Manashvi Birla, Ushma Nair, "Addressing Big Data Problem Using Hadoop and Mapreduce," 2012 Nirma University International Conference on Engineering, NUiCONE-2012, 06-08 December, 2012.

[7] Tom White, Hadoop: The Definitive Guide, 2nd Edition.

[8] http://ebiquity.umbc.edu/Tutorials/Hadoop/00%20%20Intro.html

[9] http://www.fromdev.com/2010/12/interview-questions-hadoopmapreduce.html

[10] http://hadoop.apache.org/docs/r1.2.1/mapred_tutorial.html

[11] Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data.

[12] Savita K., Vijaya MS, "Mining of Web Server Logs in a Distributed Cluster Using Big Data Technologies," (IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 5, No. 1, 2014.
