01 01 CT53331EN53GLA0 RNC Architecture and Functionalities

The picture shows an overview of a mobile network supporting both 2G and 3G. The
core network (CN) is divided into Circuit Switched and Packet Switched domains.
The 3G radio access network, or UTRAN (UMTS Terrestrial Radio Access Network),
consists of Node Bs and RNCs. One RNC together with all the Node Bs it controls
forms an RNS (Radio Network Subsystem).

The IPA2800 platform is used in the RNC and the MGW.

The general functional architecture of network elements based on the IPA2800 Packet Platform is
shown above. At a high level, a network element consists of switching functions, interface functions,
control functions, signal processing functions, and system functions (such as timing and power
feed).

Functionality is distributed to a set of functional units, each capable of accomplishing a special
purpose. These are entities of hardware and software, or of hardware only:

Operation and Maintenance Unit (OMU) for performing the centralized parts of the system maintenance
functions. Peripherals such as the Winchester Disk Drive (WDU) and the Floppy Disk Drive (FDU; that
is, a magneto-optical disk in the ATM Platform) are connected via a SCSI interface.

Distributed Control Computers (signaling and resource management computers), which consist of
common hardware and system software supplemented with function-specific software for control,
protocol processing, management, and maintenance tasks.

Network Interface Units (NIU) for connecting the network element to various types of transmission
systems (for example, E1 or STM-1). Note that the actual names of the functional units are
different, for example NIS1 and NIP1 instead of NIU.

Network Interworking Units (NIWU, IWS1) for connecting the network element to non-ATM
transmission systems (for example, TDM E1).

ATM Multiplexer (MXU) and ATM Switching Fabric Unit (SFU) for switching both circuit-switched and
packet-switched data channels, for connecting signaling channels, and for system internal
communications.

AAL2 Switching Unit (A2SU) for switching AAL type 2 packets.

Timing and Hardware Management Bus Unit (TBU) for timing, synchronization and system
maintenance purposes.

Distributed Signal Processing units (DMCU/TCU), which provide support for, for example,
transcoding, macro diversity combining, data compression, and ciphering.

Units are connected to the SFU either directly (in the case of units with high traffic capacity) or via
the MXU (in the case of units with lower traffic capacity). The order of magnitude of the
interconnection capacity for both cases is shown in the figure.

A more formal way to view the generic functional architecture is the generic block diagram. Note
that functional units are named differently in the actual network elements based on the platform.
Here, more generic terms are used to describe the concepts (for example, NIU, SPU and CU).
Such generic terms are marked with an asterisk (*).

To achieve higher reliability, many functional units are redundant: there is a spare unit designated

for one or more active units. There are several ways to manage these spare units. All the

centralized functions of the system are protected in order to guarantee high availability of the

system.

To guarantee high availability, the ATM Switching Fabric and ATM Multiplexer as core functions

of the system are redundant. Power feed, hardware management bus, and timing supply are also

duplicated functions. Hot standby protected units and units that have management or mass

memory interfaces are always duplicated. Hard discs and buses connecting them to control units

are always duplicated.

The computing platform provides support for the redundancy. Hardware and software of the system
are constantly supervised. When a defect is detected in an active functional unit, a spare unit is
set active by an automatic recovery function. The number of spare units and the method of
synchronization vary, but redundancy always operates at the software level.

If the spare unit is designated for only one active unit, the software in the unit pair is kept
synchronized so that taking the spare into use in fault situations (switchover) is very fast. This is
called the 2N redundancy principle, or duplication.

For less strict reliability requirements, the spare unit may also be designated to a group of
functional units. The spare unit can replace any unit in the group. In this case the switchover is a
bit slower to execute, because the spare unit synchronization (warming) is performed as a part of
the switchover procedure. This redundancy principle is called replaceable N+1.

A unit group may be allocated no spare unit at all, if the group acts as a resource pool. The
number of units in the pool is selected so that there is some extra capacity available. If a few units
of the pool are disabled because of faults, the rest of the group can still perform its designated
functions. This redundancy principle is called complementary N+1, or load sharing.

The IPA2800 Packet Platform consists of the Switching Platform Software, the Fault

Tolerant Computing Platform Software, Signal Processing Platform Software, and the

Hardware Platform. In addition, adjunct platforms can be used if needed in an application.

The Switching Platform Software provides common telecom functions (for example,

statistics, routing, and address analysis) as well as generic packet switching/routing

functionality common for several application areas (for example, connection control, traffic

management, ATM network operations and maintenance, and resource management).

The Fault Tolerant Computing Platform Software provides a distributed and fault tolerant

computing environment for the upper platform levels and the applications. It is ideal for use

in implementing flexible, efficient and fault tolerant computing systems. The Computing

Platform Software includes basic computer services as well as system maintenance

services, and provides DX Light and POSIX application interfaces.

The Computing Platform Software is based upon general purpose computer units with interprocessor communications implemented using ATM virtual connections. The number of

computer units can be scaled according to application and network element specific

processing capacity requirements.

The Hardware Platform based on standard mechanics provides cost-efficiency through the

use of modular, optimized and standardized solutions that are largely based on

commercially available chipsets.

The Signal Processing Platform Software provides generic services for all signal processing

applications. Digital signal processing (DSP) is needed in providing computation intensive

end-user services, such as speech transcoding, echo cancellation, or macrodiversity

combining.

The Adjunct Platform (NEMU) provides a generic platform for O&M application services and

different NE management applications and tools.

The platform concept and its layer structure should in this context be seen as a modular set of
closely related building blocks which provide well-defined services. The structure must not be
seen as static and monolithic, as the subset of services needed for an application (a specific
network element) can be selected.

The IPA2800 platform introduces a new mechanics concept, with new cabinet, new

sub rack (EMC shielded), and new plug-in unit dimensions. Fan units are needed

inside the cabinet for forced cooling.

The M2000 mechanics comprises the basic mechanics concept based on ETSI 300

119-4 standard and IEC 917 series standards for metric dimensioning of electronic

equipment.

The concept supports the platform architecture which allows modular scalability of

configurations varying from modest to very large capacity. It also allows the

performance to be configured using only a few hardware component types.

The mechanics consists of the following equipment:

cabinet mechanics

19-slot subrack, its backplane and front plate mechanics

connector and cabling system

cooling equipment.

Dimensions of the cabinet are: width 600 mm, depth 600 mm, and height 1800/2100

mm (based on standard ETS 300 119-2 and IEC 917-2).

The subrack has a height of 300 mm, a depth of 300 mm, and a width of 500 mm. The
nominal plug-in unit slot in the subrack is 25 mm wide, which results in 19 slots per
subrack. The basic construction allows dividing a part of a subrack vertically into
two slots, with optional guiding mechanics for the use of half-height plug-in units.

The backplane and cabling system provides reliable interconnections between plug-in units. In addition to this, the backplane provides an EMC shield to the rear side of the

sub rack. Common signals are delivered via the backplane and all other

interconnection signals are connected via cabling. This allows backplane modularity

and flexibility in different configurations. Because of flexible cabling and redundancy

it is possible to scale the system to a larger capacity in an active system without

shutting down the whole system.

Cabinet power distribution equipment and four sub racks with cooling equipment can

be installed in one cabinet. Openings in the sides of the cabinet behind the subrack

backplanes allow direct horizontal cabling between cabinets.

2N Redundancy (duplication) is used when two units are dedicated to a task for

which one is enough at any given time. One of the units is always active, that is in

the working state. The other unit is kept in the hot standby state, the spare state.

For example:

2N in RNC: OMU, SFU, MXU, RSMU

2N in BSC: OMU, GSW, MCMU

When a unit is detected faulty, it is taken into the testing state, and the fault location

and testing programs are activated. On the basis of the diagnosis, the unit is taken to

the separated state, if a fault is detected, or into use automatically, if no fault is

detected.
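
The fault handling described above can be sketched as a small state model. This is only an illustration of the text: the state names follow the notes, while the function names, the unit labels, and the choice to return a healthy unit to the pair as the spare are assumptions, not documented platform behavior.

```python
from enum import Enum


class UnitState(Enum):
    WORKING = "working"
    SPARE = "spare (hot standby)"
    TESTING = "testing"
    SEPARATED = "separated"


def on_fault_detected(suspect: str, partner: str) -> dict:
    """Suspect unit goes to testing; its hot-standby partner takes over."""
    return {suspect: UnitState.TESTING, partner: UnitState.WORKING}


def after_diagnosis(fault_found: bool) -> UnitState:
    """State of the tested unit once the fault location programs have run."""
    # Assumption: a unit that passes diagnosis rejoins the pair as the spare.
    return UnitState.SEPARATED if fault_found else UnitState.SPARE


# Hypothetical unit labels for a duplicated OMU pair.
states = on_fault_detected("OMU-0", "OMU-1")
states["OMU-0"] = after_diagnosis(fault_found=True)
```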

If the spare unit is designated for only one active unit, the software in the spare unit

is kept synchronized so that taking it in use in fault situations (switchover) is very

fast. The spare unit can be said to be in hot standby. This redundancy principle is

called duplication, abbreviated "2N".

Replaceable N+1 / N+m redundancy is used when there is just one or a few spare
units for a set of N units of a given type. The spare unit is not used by the
applications and is not permanently bound to one of the N active units, but can take
over the load of any one of them. When a command-initiated changeover for a
replaceable N+1 unit is performed, a pair is made up, the spare unit is warmed up to
the hot standby state, and changeover takes place without major interruptions.

When a unit is detected faulty, it is automatically replaced without interruptions to
other parts of the system.

For example:

N+1 in RNC: ICSU

N+1 in BSC: BCSU

Load sharing (SN+) or Complementary N+1 Redundancy

A unit group can be allocated no spare unit at all if the group acts as a resource pool.

The number of units in the pool is selected so that there is a certain amount of extra

capacity. If a few units of the pool are disabled because of faults, the whole group

can still perform its designated functions. This redundancy principle is called load

sharing and abbreviated as 'SN+'.

For example:

SN+ in RNC: GTPU, A2SU, DMCU

SN+ in BSC: -
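
As a rough illustration of the "extra capacity" idea behind load sharing, the sketch below computes how many units of a pool may fail before the pool can no longer carry the required load. The function name and the example figures are hypothetical; real dimensioning rules for GTPU, A2SU or DMCU pools are not given in these notes.

```python
import math


def tolerable_failures(pool_size: int, unit_capacity: float,
                       required_capacity: float) -> int:
    """Number of pool units that can fail while the rest still carry the load."""
    units_needed = math.ceil(required_capacity / unit_capacity)
    if units_needed > pool_size:
        raise ValueError("pool is too small even with all units working")
    return pool_size - units_needed


# Hypothetical pool: 6 units of 100 capacity units each, 450 required.
spare_margin = tolerable_failures(pool_size=6, unit_capacity=100.0,
                                  required_capacity=450.0)
```

With these made-up numbers, five units are needed, so one unit may fail without loss of function.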

No redundancy

For example:

RNC: OMS

BSC: ET

MSP is the SDH name for the Multiplex Section Protection scheme, as defined in
ITU-T recommendation G.783. In SONET, the equivalent term APS (Automatic Protection
Switching) is used instead. Throughout the rest of the document, the term MSP is
used for both SDH and SONET. In the basic MSP functionality, the service line is
protected using another line, which is called the protection line: if an error occurs,
for instance a loss of signal (LOS), the protection mechanism switches over to the
protection line.
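
The basic switch-over rule can be sketched as follows. This is a deliberately minimal model: the class and method names are hypothetical, only the LOS trigger named in the text is included, and aspects such as revertive operation or other defect types are left out.

```python
from dataclasses import dataclass

# Only the defect named in the text; real equipment reacts to more conditions.
SWITCH_TRIGGERS = {"LOS"}


@dataclass
class MspGroup:
    active_line: str = "service"

    def report_defect(self, line: str, defect: str) -> str:
        """Switch to the protection line if the active service line fails."""
        if line == self.active_line == "service" and defect in SWITCH_TRIGGERS:
            self.active_line = "protection"
        return self.active_line


group = MspGroup()
group.report_defect("service", "LOS")  # traffic now uses the protection line
```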

Sub racks

The sub rack mechanics consist of a sub rack frame, backplane, and front plate

forming electromagnetic shielding for electronics to fulfill EMC requirements.

The basic construction allows dividing a part of a subrack vertically into two slots with

optional guiding mechanics for the use of half-height plug-in units.

Plug-in unit

The RNC is constructed by using a total of approximately 11 plug-in unit types. The

basic mechanical elements of the plug-in units are PCB, connectors and front plate

mechanics. Front plate mechanics include insertion/extraction levers, fixing screws

and EMC gasket.

External PDH lines are connected to the RNC cabinet using a back interface plug-in

unit which allows modular backplane connections. One back interface plug-in unit

supports one E1 plug-in unit. The back interface plug-in unit is installed in the same

row as the plug-in unit, but at the rear of the cabinet. There are two kinds of connector

panels available:

connector panel with RJ45 connectors for balanced E1/T1 line connection to/from the

cabinet

connector panel with SMB connectors for coaxial E1 line connection to/from the

cabinet

External timing requires a specific connector panel. PANEL 1 in the RNAC cabinet

provides the physical interface connectors.

Picture on top:

Cabling cabinet IC183 installed next to IC186. Notice the balanced cabling between

rear transition cards and cabling cabinet patch panels.

Topmost patch panel in IC186 is CPSAL.

Picture on bottom:

BIE1C (SMB connectors) and BIE1T (RJ45 connectors) rear transition cards

installed in the SRBI at the rear side of the cabinet.

Acoustic noise emitted by one fully equipped IPA2800 cabinet is 67 dBA (power level) or 61 dBA
(pressure level) in normal conditions (4 FTR1 fan trays containing 32 fans). Acoustic noise
increases by 3 dB per new cabinet. The FTR1 meets the ETS 300-753 requirements.

Expected lifetime L10 (the time by which 10% of the fans have failed) is about 8 years (at +40 degrees Celsius).

Fan tray replacement is possible in a live system. Without the fan tray, a live system will overheat
in approximately 5 minutes.
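
For orientation, the noise figure can be related to the standard rule for adding sound levels. The sketch below assumes that the cabinets are identical, independent (incoherent) noise sources, which is a textbook simplification and not a statement from the vendor material. Under that assumption, the 3 dB step matches going from one cabinet to two, and further cabinets add progressively less.

```python
import math

SINGLE_CABINET_POWER_DBA = 67.0  # power level of one cabinet, from the text


def combined_level_db(single_db: float, cabinets: int) -> float:
    """Level of N identical incoherent sources: L1 + 10*log10(N)."""
    if cabinets < 1:
        raise ValueError("at least one cabinet is required")
    return single_db + 10.0 * math.log10(cabinets)


two_cabinets = combined_level_db(SINGLE_CABINET_POWER_DBA, 2)  # roughly 70 dBA
```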

Faulty FTRA fan tray replacement procedure:

-Remove front cable conduit if present (move cables carefully away)

-Unscrew the fan tray from mounting flanges

-Unplug the control cable first from sub rack side and secondly from fan tray side.

-Extract the faulty fan tray from cabinet and insert the spare fan tray unit

-Plug the control cable first in fan tray and secondly to the sub rack side

-Screw the fan tray to the cabinet flanges

-Install cable conduit and cables (if present)

Faulty FTRA-A and FTRA-B replacement procedure:

-Remove fan tray front grill and extract air filter

-Unplug the control cable from fan tray side (rear side of cabinet)

-Open two thumb-screws behind the grill

-Lower and extract the fan assembly by opening the locking latches (drawer assembly and

cable conduit is still mounted to cabinet)

-Insert spare fan assembly and secure latches and thumb-screws

-Plug the control cable

-Insert new air filter and close the fan tray front grill.

The functions are distributed to a set of functional units capable of accomplishing a special

purpose. These are entities of hardware and software. The main functional units of the RNC

are listed below:

The control computers (ICSU and RSMU) consist of common hardware and system software

supplemented with function-specific software.

The AAL2 switching units (A2SU) perform AAL2 switching.

The Data and Macro Diversity Unit (DMCU) performs RNC-related user and control plane L1

and L2 functions.

The Operation and Maintenance Unit (OMU) performs basic system maintenance functions.

The O&M Server (OMS) is responsible for RNC element management tasks. The OMS has

hard disk units for program code and data.

The Magneto-Optical Disk Drive (FDU) is used for loading software locally to the RNC.

The Winchester Disk Unit (WDU) serves as a non-volatile memory for program code and data

for the OMU.

The Timing and Hardware Management Bus Unit (TBU) takes care of timing, synchronization

and system maintenance functions.

The Network Interface Unit (NIU) STM-1/OC-3 (NIS1/NIS1P) provides STM-1 external

interfaces and the means to execute physical layer and ATM layer functionality.

Network interface and processing unit 2x1000Base-T/LX provides Ethernet external interfaces

and the means to execute physical layer and IP layer functionality.

The NIU PDH (NIP1) provides 2 Mbit/s / 1.5 Mbit/s (E1/T1) PDH external interfaces and the

means to execute physical layer and ATM layer functionality.

The GPRS Tunneling Protocol Unit (GTPU) performs RNC-related Iu user plane functions

towards the SGSN.

The External Hardware Alarm Unit (EHU) receives external alarms and sends indications of

them as messages to the OMU-located external alarm handler through HMS. Its second

function is to drive the Lamp Panel (EXAU), the cabinet-integrated lamp and other possible

external equipment.

The Multiplexer Unit (MXU) and the Switching Fabric Unit (SFU) are required for switching

both circuit- and packet-switched data channels, for connecting signaling channels and for the

system's internal communication.

The functions are distributed to a set of functional units capable of accomplishing a special

purpose.

These are entities of hardware and software. The main functional units of the RNC are listed

below.

The control computers (ICSU and RSMU) consist of common hardware and system software

supplemented with function-specific software.

The Data and Macro Diversity Unit (DMCU) performs RNC-related user and control plane L1

and L2 functions.

The Operation and Maintenance Unit (OMU) performs basic system maintenance functions.

The Operation and Maintenance Server (OMS) is responsible for RNC element management

tasks.

The OMS has hard disk units for program code and data.

From RU20/RN5.0, standalone OMS is recommended for new RNC2600 deliveries.

Both standalone and integrated OMS are supported in RU20/RN5.0 release.

The Winchester Disk Unit (WDU) serves as a non-volatile memory for program code and data.

The Timing and hardware management Bus Unit (TBU) takes care of timing, synchronization

and system maintenance functions.

The Network interface and processing unit 8xSTM-1/OC-3 (NPS1/NPS1P) provides STM-1

external interfaces and the means to execute physical layer and ATM/AAL2 layer functionality.

It also terminates the GTP protocol layer in Iu-ps interface.

Network interface and processing unit 2x1000Base-T/LX (NPGE/NPGEP) provides Ethernet

external interfaces and the means to execute physical layer and IP layer functionality.

The External Hardware alarm Unit (EHU) receives external alarms and sends indications of

them as messages to the OMU located external alarm handler via HMS. Its second function is

to drive the lamp panel (EXAU), the cabinet-integrated lamp and possible other external

equipment.

The Multiplexer Unit (MXU) and the Switching Fabric Unit (SFU) are required for switching

both circuit and packet-switched data channels, for connecting signaling channels and for the

system's internal communication.

RNC196/48M

The smallest capacity step, RNC196/48M, includes the first cabinet and the plug-in units.

NIS1 and NIS1P share the same unit locations and are mutually exclusive. If redundancy
is to be used, RNC196 can be configured to use NIS1 or NIS1P in the case of STM-1
ATM transport, and NPGE or NPGEP in the case of IP transport.

RNC196/85M to 196M

In capacity steps 2 to 5, the capacity is expanded by taking additional sub racks 1 to

4 into use from the second cabinet.

RNC196/300M

The capacity of RNC196/196M is increased to 300Mbit/s (Iub) by removing some

units and replacing them with other functional units.

NIP1 and FDU are removed. Optionally, one NIP1 can be left in the configuration.
The FDU (that is, the magneto-optical disk drive functionality) is replaced by an
external USB memory stick supported by the OMU. The external USB memory stick can be
used for transferring data to or from the RNC. The OMU unit must be upgraded to
another hardware variant (CCP18-A) that supports the USB interface.

There are additional units for A2SU, ICSU, MXU, and GTPU.

The number of NIS1/NIS1P units can be increased.

The HDS-A plug-in-unit is replaced by another variant (HDS-B) that supports two

hard disk units in one card.

If RAN1754: HSPA optimized configuration is used, the maximum possible R99
data capacity is 67% of the maximum throughput of the configuration defined in
Table Capacity and reference call mix model.

RNC450/150

The smallest capacity step, RNC450/150, includes the first cabinet and the plug-in units.

RNC450/300

The capacity is expanded to 300 Mbit/s by adding another cabinet and the necessary
plug-in units, and by connecting the internal cabling between the cabinets.

RNC450/450

The capacity is expanded to 450 Mbit/s by adding the necessary plug-in units into
two subracks.

Note: NIS1 and NIS1P share the same unit locations and are mutually exclusive.

If redundancy is to be used, RNC196 can be configured to use NIS1 or NIS1P in

case of STM1 ATM transport, and to NPGE or NPGEP in case of IP transport.

Reference: DN0628405 : RNC capacity extensions and upgrade

RNC450 carrier-optimized configurations

RNC450 supports the carrier connectivity optimization functionality that can be used

to increase the number of carriers by decreasing the Iub throughput at the same

time. Also the AMR capacity is increased in some of the carrier-optimized

configurations.

The carrier-optimized configuration is activated by altering the HSDPA configuration

values. For detailed information, see Activating Basic HSDPA with QPSK and 5

codes.

RAN1754: HSPA optimized configuration is not supported in carrier optimized

configurations.

RNC2600/step 1

The smallest configuration step, RNC2600/step 1, includes the first cabinet and the
plug-in units.

Note that NPS1 and NPS1P / NPGE and NPGEP are mutually exclusive.

RNC2600/step 2

Configuration extension to RNC2600/step 2 is obtained by adding the new
cabinet and the necessary plug-in units.

There are more reserved slots for NPGE(P) and NPS1 units than can be installed at
the same time: the combined maximum is 14.

RNC2600/step 3

Configuration extension to RNC2600/step 3 is obtained by adding the
necessary plug-in units into two subracks.

The number of NPS1 and NPGE units is restricted.

There is a total of 28 slots and 16 SFU ports available:

1 NPS1 occupies 2 slots and 1 SFU port

1 NPGE occupies 1 slot and 1 SFU port

As a result, a configuration can exceed neither the available slots nor the available SFU ports.
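
The slot and port restriction above amounts to two inequalities that must hold at the same time. The following sketch checks a candidate mix of units; the function name is hypothetical, and it is assumed here that the protected variants (NPS1P, NPGEP) consume the same resources as NPS1 and NPGE, which the text does not state explicitly.

```python
TOTAL_SLOTS = 28      # from the text
TOTAL_SFU_PORTS = 16  # from the text


def configuration_fits(nps1_units: int, npge_units: int) -> bool:
    """Check a unit mix against both the slot limit and the SFU port limit."""
    slots_used = 2 * nps1_units + 1 * npge_units  # NPS1: 2 slots, NPGE: 1 slot
    ports_used = nps1_units + npge_units          # one SFU port per unit
    return slots_used <= TOTAL_SLOTS and ports_used <= TOTAL_SFU_PORTS


configuration_fits(12, 4)  # True: exactly 28 slots and 16 ports
configuration_fits(13, 3)  # False: 29 slots would be needed
configuration_fits(0, 17)  # False: 17 SFU ports would be needed
```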

For PIU details, see DN70474741: RNC Capacity extension and upgrade.

Recommended up to 1600 BTSs

Iub throughput is the traffic in the downlink direction defined at the FP level. Additionally,
30% PS traffic in the uplink direction is supported. For Rel99, throughput is calculated
in the Iub interface and the Soft Handover (SHO) overhead (40%) is included. For High-Speed
Uplink Packet Access (HSUPA), throughput is calculated in the Iu-PS interface from
the effective High-Speed Downlink Packet Access (HSDPA) throughput, where the
SHO is excluded. This means that in the case of HSUPA, the SHO is added on top
of the 30%, so the actual HSUPA throughput in the Iub including the SHO is more
than 30% (= 30% * (1 + 40%)).
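
The arithmetic in the parenthesis can be worked through as a quick check. The function and parameter names below are illustrative only; the 30% and 40% figures are the ones quoted in the text.

```python
def iub_uplink_share(iu_ps_share: float = 0.30,
                     sho_overhead: float = 0.40) -> float:
    """HSUPA uplink share seen on Iub once SHO overhead is added on top."""
    return iu_ps_share * (1.0 + sho_overhead)


share = iub_uplink_share()  # about 0.42, that is, roughly 42% on Iub
```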

Maximum number of simultaneous HSDPA users in Cell_DCH state

Availability performance calculations describe the system from the availability point

of view presenting availability

Availability performance values are calculated for the complete system, that is,

redundancy principles are taken into account

In reference to ITU-T Recommendation Q.541, intrinsic unavailability is the

unavailability of an exchange (or part of it) due to exchange (or unit) failure itself,

excluding the logistic delay time (for example, travel times, unavailability of spare

units, and so on) and planned outages

The results of the availability performance calculations for the complete system

are presented in the Predicted availability performance values.
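
To give a feel for why redundancy principles matter in such calculations, the sketch below uses the textbook formulas for independent failures: a duplicated (2N) pair is unavailable only when both units are down, and a chain of functions is available only when every function is. This is a generic simplification with made-up input values. It ignores switchover time and common-cause failures, and it is not the method behind the predicted values referred to above.

```python
import math


def duplicated_pair_unavailability(unit_unavailability: float) -> float:
    """2N pair with independent failures: both units must be down."""
    return unit_unavailability ** 2


def series_unavailability(unavailabilities) -> float:
    """Functions in series: the system is up only if every function is up."""
    return 1.0 - math.prod(1.0 - u for u in unavailabilities)


# Hypothetical figures: a unit that is unavailable 0.1% of the time.
pair = duplicated_pair_unavailability(1e-3)
system = series_unavailability([pair, pair])
```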

Some units from earlier releases no longer exist, because either their functionalities
are embedded in other units or the unit is no longer supported.

The units are:

GTPU: functionalities are embedded in NPS1(P) and/or NPGE(P)

A2SU: functionalities are embedded in NPS1(P)

RRMU: functionalities are distributed to ICSU and OMU/RSMU

NIS1(P): replaced with NPS1(P)

NIP1: PDH interfaces are no longer supported

Duplication (2N)

If the spare unit is designated for only one active unit, the software in the spare unit

is kept synchronized so that taking it in use in fault situations (switchover) is very fast.

The spare unit can be said to be in hot stand-by. This redundancy principle is called

duplication, abbreviated "2N".

Replacement (N+1)

For less strict reliability requirements, one or more spare units may also be

designated to a group of functional units. One spare unit can replace any unit in the

group. In this case, the execution of the switchover is a bit slower, because the

spare unit synchronization (warming) is performed as a part of the switchover

procedure. The spare unit is in cold stand-by. This redundancy principle is called

replacement, abbreviated "N+1".

Load sharing (SN+)

A unit group may be allocated no spare unit at all, if the group acts as a resource

pool. The number of units in the pool is selected so that there is a certain amount of

extra capacity. If a few units of the pool are disabled because of faults, the whole

group can still perform its designated functions. This redundancy principle is called

load sharing, abbreviated "SN+".

None

Some functional units have no redundancy at all. This is because a failure in them

does not prevent the function or cause any drop in the capacity.
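
The four principles can be summarized as a small lookup. The unit-to-principle mapping uses only the RNC examples given earlier in these notes; the enum, the dictionary and the function name are illustrative constructs, not platform identifiers.

```python
from enum import Enum


class Redundancy(Enum):
    DUPLICATION = "2N"
    REPLACEMENT = "N+1"
    LOAD_SHARING = "SN+"
    NONE = "none"


# RNC examples as listed in this material.
RNC_UNIT_REDUNDANCY = {
    "OMU": Redundancy.DUPLICATION,
    "SFU": Redundancy.DUPLICATION,
    "MXU": Redundancy.DUPLICATION,
    "RSMU": Redundancy.DUPLICATION,
    "ICSU": Redundancy.REPLACEMENT,
    "GTPU": Redundancy.LOAD_SHARING,
    "A2SU": Redundancy.LOAD_SHARING,
    "DMCU": Redundancy.LOAD_SHARING,
}


def spare_state(principle: Redundancy) -> str:
    """Stand-by state of the spare unit under each principle."""
    return {
        Redundancy.DUPLICATION: "hot stand-by",
        Redundancy.REPLACEMENT: "cold stand-by",
        Redundancy.LOAD_SHARING: "no spare (resource pool)",
        Redundancy.NONE: "no spare",
    }[principle]
```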

Switching Fabric Unit (SFU)

The Switching Fabric Unit (SFU) provides a part of the ATM cell switching function.

It provides redundancy, full accessibility and is non-blocking at ATM connection level

(that is, if input and output capacity is available, the connection can be established).

SFU supports point-to-point and point-to-multipoint connection topologies, as well as

differentiated handling of various ATM service categories.

High capacity network interface units and multiplexer units are connected to the 2N

redundant SFU.

SF10

The main function of the SF10 plug-in unit is to switch ATM cells from 16 input ports

to 16 output ports. The cell switching uses self-routing where the cell is forwarded by

hardware to the target output port based on the given output port address. The

correct cell sequence at the output port is guaranteed. The switching fabric supports

spatial multicasting.

The total switching capacity of the SF10 is 10 Gbit/s, with 16x16 switching fabric port
interfaces, each with a capacity of 622 Mbit/s. Port interfaces are duplicated for redundant
multiplexer units and redundant network interface units. The active input is selected
inside the SF10.
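
The quoted total follows from the port count and the per-port rate, as this short check shows. The helper name is illustrative, and the nominal 622 Mbit/s figure is used as given in the text.

```python
SF10_PORTS = 16           # from the text
SF10_PORT_RATE_MBIT = 622  # nominal per-port rate, from the text


def aggregate_capacity_gbit(ports: int, rate_mbit: float) -> float:
    """Sum of the port rates, expressed in Gbit/s."""
    return ports * rate_mbit / 1000.0


sf10_total = aggregate_capacity_gbit(SF10_PORTS, SF10_PORT_RATE_MBIT)
# 16 x 622 Mbit/s = 9952 Mbit/s, which is rounded to 10 Gbit/s in the text
```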

SF10E

The main function of the SF10E (C110899) plug-in unit is to switch cells from input to

output ports. Within the SF10E switching is protocol independent, meaning that

before the cells are sent to the fabric they are encapsulated inside a special fabric

frame. In the case of APC based legacy port cards, the cells are always ATM cells,

but network processor based units (such as MX1G6) are able to process any

protocol.

SF20H

The main function of the SF20H plug-in unit is to switch cells from input to output

ports. Within the SF20H, switching is protocol independent. This means that before

the cells are sent to the fabric, they are encapsulated inside a special fabric frame.

With a total of 32 ports, the SF20H provides a 2.5 Gbit/s serial switching fabric

interface (SFPIF2G5). Several SFPIF2G5 ports can be combined for higher capacity

ports.

Multiplexer Unit (MXU)

The Multiplexer Unit (MXU) multiplexes traffic from tributary units to the ATM

switching fabric. Therefore, it allows the efficient use of switching resources for low

bit rate network interface units and computer units with small to moderate bandwidth

requirements. The MXU also includes part of the ATM layer processing functions,

such as policing, statistics, OAM, buffer management and scheduling.

Control computers, signal processing units, and low bit rate network interface units

are connected to the switching fabric via the MXU, which is a 2N redundant unit. The

RNC has several pairs of MXUs, depending on the configured capacity. For more

information, see RNC2600 capacity.

MX622

The ATM Multiplexer Plug-in Unit 622 Mbit/s MX622 multiplexes and demultiplexes

ATM cells and performs ATM Layer functions and Traffic Management functions.

MX1G6 and MX1G6-A

The MX1G6 and MX1G6-A are 1.6 Gbit/s ATM multiplexer plug-in units. They

multiplex and demultiplex ATM cells and perform ATM layer and traffic management

functions. The MX1G6 and MX1G6-A enable connecting low speed units to the

switching fabric and improve the use of switching fabric port capacity by multiplexing

traffic from up to twenty tributary units to a single fabric port.

A2SU

AAL2 Switching Unit (A2SU) performs switching of AAL Type 2 CPS packets

between external interfaces and signal processing units. A2SU operates in the load-sharing redundancy configuration (SN+).

The AAL Type 2 guarantees bandwidth-efficient transport of information with limited

transfer delay in the RAN transmission network.

If Iub, Iu-CS, and Iur have been IP upgraded, A2SU units are not used.

AL2S, AL2S-A, AL2S-B

The AAL2 Switching unit (AL2S, AL2S-A or AL2S-B plug-in unit) demultiplexes

AAL2 channels from the AAL2 VC, maps the AAL2 payload to AAL5 or AAL0,

terminates VCs containing AAL2, AAL5 or AAL0, and performs traffic and performance

management and statistics collection for AAL2.

AL2S-D

The AAL2 Switching unit (AL2S-D plug-in unit) demultiplexes AAL2 channels

from the AAL2 VC, maps the AAL2 payload to AAL5 or AAL0, terminates VCs containing

AAL2, AAL5 or AAL0, and performs traffic and performance management and

statistics collection for AAL2.
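
The demultiplexing of AAL2 channels can be illustrated with a minimal sketch. It assumes the 3-octet CPS packet header layout of ITU-T I.363.2 (8-bit CID, 6-bit length indicator, 5-bit UUI, 5-bit HEC) and a byte string that already contains back-to-back CPS packets. The function names are hypothetical, and the HEC check and the CPS-PDU start field are deliberately left out.

```python
def parse_cps_header(header: bytes) -> dict:
    """Split a 3-octet AAL2 CPS packet header into its fields."""
    if len(header) != 3:
        raise ValueError("CPS packet header is exactly 3 octets")
    word = int.from_bytes(header, "big")      # 24 bits in total
    return {
        "cid": (word >> 16) & 0xFF,           # channel identifier
        "payload_len": ((word >> 10) & 0x3F) + 1,  # LI encodes length - 1
        "uui": (word >> 5) & 0x1F,            # user-to-user indication
        "hec": word & 0x1F,                   # header error control (not verified)
    }


def demultiplex(cps_packets: bytes) -> dict:
    """Group CPS packet payloads by CID (one entry per AAL2 channel)."""
    channels = {}
    offset = 0
    while offset + 3 <= len(cps_packets):
        if cps_packets[offset] == 0:          # CID 0 is treated as padding
            break
        hdr = parse_cps_header(cps_packets[offset:offset + 3])
        start = offset + 3
        end = start + hdr["payload_len"]
        if end > len(cps_packets):
            raise ValueError("truncated CPS packet")
        channels.setdefault(hdr["cid"], []).append(cps_packets[start:end])
        offset = end
    return channels
```

Each key of the returned dictionary corresponds to one AAL2 channel within the VC; a real unit would then map each payload onwards, for example to AAL5 or AAL0 as described above.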

Operation and Maintenance Unit (OMU)

The RNC always includes a duplicated (2N) OMU to provide high availability and

minimized interruptions in usage (see Redundancy principles). Duplicated system

disk units are connected to and controlled by the OMU. The system disk units

contain the operative software and the fallback software of the RNC.

The cellular management functions of the OMU are responsible for maintaining the

radio network configuration and recovery. The OMU monitors the status of the

network and blocks the faulty units if necessary. The OMU contains the radio

network database, ATM/IP configuration database, RNC equipment database and

alarm history database.

The OMU unit further contains basic system maintenance functions and serves as an

interface between the RNC and the OMS unit. In the event of a fault, the unit

automatically activates appropriate recovery and diagnostics procedures within the

RNC. The unit has the following interfaces:

a duplicated SCSI interface that connects mass memory devices

an Ethernet interface: an auto-sensing 10Base-T/100Base-TX interface, which can

be used, for example, as a management interface of the network element

a service terminal interface which provides support for debugger terminals

a multiplexer interface that allows termination of ATM virtual connections to the

computer unit, thus supporting both inter-processor communication and termination

of external connections in the network element (used, for example, for signaling or

network management purposes).

a duplicated hardware management system interface (see RNC hardware

management and supervision)

a USB 1.1 port and drivers for loading software or making backups locally to the

RNC

CCP10

The Control Computer with 800 MHz Pentium III-M processor (CCP10) acts as the

central processing resource in the IPA2800 system computer units.

The CCP10 incorporates an Intel Mobile Pentium III-M Microprocessor with 133 MHz

SDRAM memory on DIMM modules.

CCP10 has ATM connections to other plug-in units. This is done by an interface to

ATM multiplexer (MX622-B /-C).

CCP10 has an interface to the Hardware Management System (HMS) which is

implemented in CCP10 as two Hardware Management Nodes (HMN): the HMS

Master Node (HMSM) and HMS Slave Node (HMSS).

CCP10 has a 16 bit wide Ultra3 SCSI bus. It is possible to connect up to 16 devices

into the SCSI bus (including CCP10). CCP10 has two SCSI interfaces because the

mass memory system is 2N redundant. Current Ultra2 SCSI is also supported.

The timing and synchronization of CCP10 is provided by Timing and Synchronization

plug-in unit (TSS3). TSS3 provides 19.44 MHz clock signal for real time clock and

UX ASIC.

There are two V.24/V.28 based serial interfaces for service terminals to provide an

interface for controlling and monitoring CCP10.

CCP10 has two 10 Base-T /100 Base-TX /1000 Base-T Ethernet interfaces to

connect to LAN.

In addition to the interfaces described above, CCP10 gets the -48 V DC supply and

HMNs power feed through back plane connectors.

CCP10 is assembled into sub rack SRA1 and SRA2. There can be more than two

CCP10 units in the sub rack.

OMU has two dedicated hard disk units, which serve as a redundant storage for the

entire system software, the event buffer for intermediate storing of alarms, and the

radio network configuration files.

Backup copies are made onto a USB memory stick that is connected to the CCP18-A front plate. Only memory sticks can be used.

FDU is the functional unit when using the USB memory stick. No separate

configuration in the HW database is needed, because the USB memory stick is an

external device. When removing the USB memory stick, set the state to blocked,

because the system does not do it automatically.

In previous deliveries, the MDS-(A/B) magneto optical drive with a SCSI interface is

used. FDU is the functional unit. No separate configuration is needed.

The USB stick is an optional external device that is not automatically delivered. The

operator can choose to use the USB memory stick for backup purposes in RN2.2

new deliveries. When USB memory stick is used (the functional unit is FDU), it is

plugged in one CPU card. There is no direct connection to the other CPUs. Only the

USB memory stick that is connected to the active OMU can be used. For OMU

switchover, two USB memory sticks are needed: one for each OMU.

In previous deliveries, the MDS-A plug-in unit is used (the functional unit is FDU).

When MDS-A is used, FDU connects to the SCSI 0 bus. It has been left without

backup since it is primarily used for facilitating temporary service operations.

Interface Control and Signalling Unit (ICSU)

The Interface Control and Signalling Unit (ICSU) performs those RNC functions that

are highly dependent on the signaling to other network elements. The unit also

handles distributed radio resource management related tasks of the RNC.

The unit is responsible for the following tasks:

Layer 3 signaling protocols RANAP, NBAP, RNSAP, RRC, and SABP

Transport network level signaling protocol ALCAP

Handover control

Admission control

Load control

Power control

Packet scheduler control

Location calculations for location based services

According to the N+1 redundancy principle (for more information, see Redundancy

principles), there is one extra ICSU in addition to the number set by the dimensioning

rules. The additional unit is used only if one of the active units fails.
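
The N+1 rule can be written as a small worked calculation. The actual dimensioning rules are not given in this material, so the per-unit capacity and the load figures below are hypothetical placeholders; only the "+1" spare comes from the text.

```python
import math


def icsu_units_to_equip(offered_load, capacity_per_unit):
    """Number of ICSUs to equip under N+1 redundancy.

    offered_load and capacity_per_unit are in the same (arbitrary) unit;
    both are assumptions standing in for the real dimensioning rules.
    """
    if capacity_per_unit <= 0:
        raise ValueError("capacity_per_unit must be positive")
    working_units = math.ceil(offered_load / capacity_per_unit)  # N
    return working_units + 1                                     # N + 1 spare


if __name__ == "__main__":
    # A load of 1000 with 300 per unit needs 4 working units plus 1 spare.
    print(icsu_units_to_equip(1000, 300))
```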

CCP18-A, CCP18-C

The Control Computer with Pentium M 745 processor (CCP18-A and CCP18-C) acts

as the central processing resource in the IPA2800 system computer units.

The CCP18-A/-C incorporate an Intel Pentium M 745 Microprocessor with DDR200

SDRAM memory on board.

The CCP18-A and CCP18-C have ATM connections to other plug-in units. This is

done by an interface to the ATM multiplexer (MXU).

The CCP18-A and CCP18-C have an interface to the Hardware Management

System (HMS). CCP18-A has two Hardware Management Nodes (HMN): the HMS

Master Node (HMSM) and HMS Slave Node (HMSS-B). CCP18-C has only the HMS

Slave Node (HMSS-B).

The CCP18-A has a 16 bit wide Ultra3 SCSI bus. It is possible to connect up to 16

devices into the SCSI bus (including CCP18-A). The CCP18-A has two SCSI

interfaces because the mass memory system is 2N redundant. Current Ultra2 SCSI

is also supported. CCP18-C does not have a SCSI bus.

The timing and synchronization of the CCP18-A and CCP18-C is provided by the

Timing and Synchronization plug-in unit (TSS3). TSS3 provides 19.44 MHz clock

signal for real time clock and UX2 FPGA.

There are two V.24/V.28 based serial interfaces for service terminals to provide an

interface for controlling and monitoring the CCP18-A and the CCP18-C.

The CCP18-A and CCP18-C have two 10 Base-T /100 Base-TX /1000 Base-T

Ethernet interfaces to connect to LAN.

In addition to the interfaces described above, CCP18-A and CCP18-C get the -48 V

DC supply and HMNs power feed through back plane connectors.

GTPU

The GTPU performs the RNC-related Iu user plane functions towards the SGSN.

The unit is SN+ redundant.

The unit is responsible for the following tasks:

Iu-PS transport level IP protocol processing and termination

GPRS Tunnelling Protocol User Plane (GTP-U) protocol processing
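
As an illustration of GTP-U processing, the sketch below builds and strips the mandatory 8-octet GTPv1-U header around a user packet. The header layout (flags 0x30 for version 1 with no optional fields, message type 255 for a G-PDU, 16-bit length, 32-bit TEID) follows the 3GPP GTPv1-U specification; the function names are hypothetical and optional header fields are not handled.

```python
import struct

GTPU_FLAGS = 0x30      # version 1, protocol type GTP, no optional fields
GTPU_MSG_G_PDU = 0xFF  # message type 255: encapsulated user data


def encapsulate(teid: int, user_packet: bytes) -> bytes:
    """Prepend the mandatory GTP-U header to a user-plane packet."""
    header = struct.pack("!BBHI", GTPU_FLAGS, GTPU_MSG_G_PDU,
                         len(user_packet), teid)
    return header + user_packet


def decapsulate(frame: bytes):
    """Return (teid, user_packet) from a G-PDU without optional fields."""
    if len(frame) < 8:
        raise ValueError("frame shorter than the GTP-U header")
    flags, msg_type, length, teid = struct.unpack("!BBHI", frame[:8])
    if flags >> 5 != 1:
        raise ValueError("not GTP version 1")
    if flags & 0x07:
        raise ValueError("optional header fields are not handled here")
    if msg_type != GTPU_MSG_G_PDU:
        raise ValueError("not a G-PDU")
    return teid, frame[8:8 + length]
```

The tunnel endpoint identifier (TEID) is what lets the receiving side associate the packet with a particular user-plane tunnel.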

CCP18-A, CCP18-C

The Control Computer with Pentium M 745 processor (CCP18-A and CCP18-C) acts

as the central processing resource in the IPA2800 system computer units.

The CCP18-A/-C incorporate an Intel Pentium M 745 Microprocessor with DDR200

SDRAM memory on board.

The CCP18-A and CCP18-C have ATM connections to other plug-in units. This is

done by an interface to the ATM multiplexer (MXU).

The CCP18-A and CCP18-C have an interface to the Hardware Management

System (HMS). CCP18-A has two Hardware Management Nodes (HMN): the HMS

Master Node (HMSM) and HMS Slave Node (HMSS-B). CCP18-C has only the HMS

Slave Node (HMSS-B).

The CCP18-A has a 16 bit wide Ultra3 SCSI bus. It is possible to connect up to 16

devices into the SCSI bus (including CCP18-A). The CCP18-A has two SCSI

interfaces because the mass memory system is 2N redundant. Current Ultra2 SCSI

is also supported. CCP18-C does not have a SCSI bus.

The timing and synchronization of the CCP18-A and CCP18-C is provided by the

Timing and Synchronization plug-in unit (TSS3). TSS3 provides 19.44 MHz clock

signal for real time clock and UX2 FPGA.

There are two V.24/V.28 based serial interfaces for service terminals to provide an

interface for controlling and monitoring the CCP18-A and the CCP18-C.

The CCP18-A and CCP18-C have two 10 Base-T /100 Base-TX /1000 Base-T

Ethernet interfaces to connect to LAN.

In addition to the interfaces described above, CCP18-A and CCP18-C get the -48 V

DC supply and HMNs power feed through back plane connectors.

Data and Macro Diversity Combining Unit (DMCU)

The Data and Macro Diversity Combining Unit (DMCU) performs RNC-related user

and control plane functions. Each of these units has several state-of-the-art digital

signal processors (DSPs) and general purpose RISC processors. The signal

processing tasks can be configured and altered dynamically for each DSP. The unit

is SN+ redundant. The unit is responsible for the following tasks:

UE and L2 related protocols

Frame Protocol (FP)

Radio Link Control (RLC)

Medium Access Control (MAC)

The following functions are within protocols:

macro diversity combining and outer loop PC: FP

ciphering: FP and RLC/MAC

Packet Data Convergence Protocol (PDCP): header compression

High-Speed Packet Access (HSPA) processing: MAC-shared (MAC-SH) and

Enhanced Dedicated Transport Channel (EDCH)

CDSP, CDSP-B or CDSP-C

The Configurable Dynamic Signal Processing Platform (CDSP, CDSP-B or CDSP-C

plug-in unit) functions as a CDSP pool. Each CDSP (-B/-C) has 32 Digital Signal

Processors (DSPs) on four daughter boards, either type D5510 (CDSP), CIP (CDSP-B), or CIP-A (CDSP-B version 4 and CDSP-C). The daughter boards are used for

transcoding, echo cancelling and other applications which need digital signal

processing. Four MPC 8260 processors control the DSPs.

CDSP-DT, CDSP-DH and CDSP-D

The Configurable Dynamic Signal Processing Platform (CDSP-DT, CDSP-DH and

CDSP-D plug-in units) functions as a CDSP pool. Each CDSP-D (C109045) plug-in unit

has 16 multicore digital signal processors (DSP) and a total of 96 DSP cores. Each

CDSP-DH (C110830) and CDSP-DT (C111195) has 8 DSPs. The DSP cores are

used in transcoding and echo cancelling as well as other applications that need

digital signal processing. The DSPs are controlled by four MPC 8280 processors.

CDSP-D and CDSP-DT plug-in units are used in MGW and CDSP-DH in RNC.

OMS

The Operation and Maintenance Server (OMS) is a computer unit which provides an

open and standard computing platform for applications which do not have strict real-time requirements. The OMS provides functions related to external O&M interfaces.

For example:

Post-processing of fault management data

Post-processing of performance data

Software upgrade support

These functions include both generic interfacing to the data communication network

(DCN) and application specific functions such as processing of fault and

performance management data, implementation of the network element user

interface and support for configuration management of the network element. This

way the OMS provides easy and flexible interfacing to the network element.

The OMS is implemented with Red Hat Enterprise Linux 4. It contains its own

disk devices, interfaces for keyboard, mouse and display for debugging purposes,

and a LAN (10/100/1000 Mbit Ethernet) interface. Communication between the OMS and

the rest of the network element uses Ethernet.

The basic services of the OMS are:

MMI interface implemented as a telnet protocol through which the user can execute

the existing MML commands.

Alarm transfer from network element to network management system (NMS)

Provides the statistical interface for NMS

MCP18-B

The MCP18-B plug-in unit is used as the management computer unit in network

elements. For OMS, MCP18-B B01 or later must be used.

The MCP18-B is a Pentium M based, PC compatible, single slot computer designed

to interface to the internal standard PCI bus. The Pentium M 745 central processing

unit (CPU) comes in an Intel 479 ball micro-FCBGA form factor. The Intel

Pentium M chipset (E7501 MCH & P64H2) provides the PCI and PCI-X interfaces.

Integrated PCI peripherals provide dual Ethernet, dual SCSI, SVGA and USB

interfaces.

Scalability

RNC OMS is capable of handling capacity of RNC2600, 2 800 WCDMA BTSs and 4

800 cells.

RNC OMS is capable of handling different types of mass management operations

under the control of NetAct, so that management operations can run in

parallel towards several elements. Mass operations are used when certain

management operations need to be done to a certain group of network elements.

Examples are configuration data and software downloads to new base stations.

HDS-B capacity and performance

Hard disks

73 GB formatted storage capacity/disk

Average seek time: read 4.5 ms/ write 5.0 ms

Data transfer rate of disk drive: 132.4 MB/s

SCSI buses

Data transfer rate 160 MB/s (80 MHz) in synchronous mode

SCSI bus is 16 bits wide

Maximum 16 devices on bus

SCSI bus can work in either LVD or SE mode
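
The SCSI figures above are consistent with each other, as the following worked arithmetic shows. It treats the quoted 80 MHz as 80 million transfers per second and assumes two computer units per bus; the helper names are illustrative only.

```python
def scsi_bus_rate_mb_s(width_bits, mega_transfers_per_s):
    """Peak synchronous bus rate: bytes per transfer x transfers per second."""
    return (width_bits // 8) * mega_transfers_per_s


def max_peripherals(bus_ids, computer_units):
    """Bus addresses left for other devices once the computer units are counted."""
    return bus_ids - computer_units


if __name__ == "__main__":
    rate = scsi_bus_rate_mb_s(16, 80)   # 2 bytes x 80 M transfers/s
    print(rate)                         # 160 (MB/s), as quoted for the bus
    print(132.4 < rate)                 # the disk rate stays below the bus rate
    print(max_peripherals(16, 2))       # 14 devices besides two computer units
```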

HDS-B

The HDS-B serves as a non-volatile memory for program code and data in the MGW

and RNC. It connects via the SCSI bus to the OMU and NEMU units.

Operating environment of HDS-B

The HDS-B plug-in unit is used with the OMU and NEMU units. The computer units

serve two 16-bit wide Ultra SCSI buses which connect to the HDS-B through external

shielded back-cables. The HDS-B has two independent SCSI buses for two

computer units. In the case of OMU the SCSI buses pass through the HDS-B and

continue to the other unit of the duplicated pair (OMU only). In the case of NEMU the

SCSI buses pass into the HDS-B and end there. It is possible to connect other SCSI

devices on the same bus. The maximum number of installed SCSI devices, not

counting computer units, is 14.

The HDS-B plug-in unit is connected to the hardware management bus via the

bus interface of the HMSS. HDS-B has an interface to two HMS transmission lines

via back connectors.

The HDS-B has automatically functioning SCSI bus terminators.

The HMSS has a separate 2N redundant power feed.

The HDS-B also gets the 48 V DC supply and power feed from the HMSS through back

connectors.

Ethernet Switch for ATM with 24 Ports (ESA24)

The ESA24 plug-in unit provides the Ethernet switch functionality for OMS.

2N redundant ESA24 provides duplicated IP connections towards the A-GPS server.

There are two Ethernet ports in the front panel.

ESA24

The 10/100 Mbps LAN switch plug-in unit ESA24 functions as the LAN switch unit of

the IPA2800 ATM platform.

The ESA24 features:

Complies with IEEE802.1d Spanning Tree protocol.

Store and Forward operation

Half and full duplex on all ports

IEEE802.3x Full Duplex flow control on all ports

Back pressure in Half Duplex mode on all ports

Priority queuing based on Port or 802.1p

Non-blocking operation

VLAN per 802.1q

Address table with 8000 entries

Port trunking

Differences between ESA12 and ESA24

Increase in FLASH memory from 2 to 8 MB

RAM memory 64 MB

New operating system (BiNOS)

Complies with IEEE802.1w Rapid Spanning Tree protocol

Complies with IEEE802.1s Multiple Spanning Tree protocol

Operation and Maintenance Server (OMS)

The Operation and Maintenance Server (OMS) unit is responsible for RNC element

management tasks. It provides an interface to the higher-level network management

functions and to local user interface functions.

These functions include both generic interfacing to the data communication network

(DCN) and application-specific functions like processing of fault and performance

management data, implementation of the RNC user interface and support for

configuration management of the RNC. This way the OMS provides easy and flexible

interfacing to the RNC.

In previous releases, OMS is integrated in RNC2600. It is implemented with an Intel-based industry standard PC core. It contains its own disk devices, interfaces for

keyboard and a display for debugging purposes, a serial interface, a USB interface,

and a LAN (100 Mbit/s Ethernet) interface.

In RN5.0, standalone OMS is introduced. It uses commercial HW: HP ProLiant

DL360. This offers better scalability for OMS performance and allows the use of

up-to-date commercial HW technology. This is the first evolution step towards a

common OMS platform across different technologies. The same OMS

applications and functions are available on both platforms, and the existing

OMS interfaces are unchanged.

HP ProLiant DL360 Generation 6:

Quad core Nehalem Intel processors

12 GB memory (scalable up to 128 GB)

4 x Mirrored hard discs (4 x 146GB)

Dual port network card

SCSI card for external devices like data tapes and magneto-optical drives

USB 2.0 ports

Height 1U

Benefits of standalone RNC OMS

The main benefits of Nokia Siemens Networks RNC OMS are outlined below.

State-of-the-art feature set

Nokia Siemens Networks' long experience of radio access and mobile data network

management, together with input based on operator requirements, ensures that RNC OMS

functionality and feature set are well considered and provide maximum benefit to

operators.

High quality, proven software platform

RNC OMS software is running on top of a carrier grade Flexi Platform (SW platform).

The Flexi Platform design ensures high availability, reliability, scalability and high

performance incorporating innovations from open standards such as Linux and

J2EE. RNC OMS is based on the field-proven NEMU unit used in Nokia Siemens

Networks 3G networks. Thus a high quality platform and increased benefits of

economies of scale are ensured.

Scalable, efficient architecture

RNC OMS provides scalability of the operability architecture by aggregating, parsing

and intermediating the operation and management traffic flow between NetAct and

access network elements. RNC OMS performs individual management operations to

network elements under the control of NetAct. RNC OMS is able to perform efficient

parallel mass operations towards several network elements and handle different

operation and management operations simultaneously to the same network element.

These capabilities reduce both the processing and database access load in the NetAct

management system and overall management data transmission needs.

Benefits of standalone RNC OMS (Cont)

Accurate status of the network

RNC OMS provides synchronized measurement data from the WCDMA Access

Network elements and real-time WCDMA BTS and RNC state supervision and

management, thus giving an accurate picture of network status. Reliable, correct

information helps operators to make the right daily operation and management

decisions. It also gives good input to longer-term network planning, enabling

operators to plan network investments in a cost-efficient manner.

Local operation interface

RNC OMS offers a local operation interface towards RNC. The interface makes it

possible to monitor access networks locally via RNC OMS during network roll-out,

upgrade and expansion phases and during regular daily operation, when reasonable.

Secure software platform

RNC OMS runs on top of Flexi Platform/Red Hat, thus gaining the security

benefits of the Red Hat Linux Security Framework. Industry experts consider the security

risk of Linux to be low, which eases platform software security concerns.

Easy to place and install

RNC OMS has a compact size and fits into a standard 19-inch rack.

NIP1 contains PDH E1/T1/JT1 interfaces with Inverse Multiplexing for ATM (IMA)

function, which allows for flexible grouping of physical links to logical IMA groups.

Normally, the PDH lines are used for connections between RNC and the BTSs.

NI16P1A

The NI16P1A plug-in unit implements sixteen PDH E1/T1/JT1 based ATM interfaces.

The NI16P1A supports IMA, that is, several E1/T1/JT1 interfaces can be grouped

into one group that appears as one interface to the upper protocol layers. The

NI16P1A performs ATM layer processing related to traffic management and Utopia

address embedding. The NI16P1A also provides a reference clock (recovered

from the incoming E1/T1/JT1 lines) for the TSS3 plug-in unit.
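
The effect of IMA grouping on capacity can be sketched with a rough estimate. Everything numeric here is an assumption rather than a figure from this material: an E1 link is taken to carry 30 x 64 kbit/s of ATM payload, one ICP control cell is assumed per 128-cell IMA frame, and a 2048/2049 factor is assumed for stuff cells. The function name is hypothetical.

```python
E1_ATM_PAYLOAD_KBIT = 1920  # assumed: 30 timeslots x 64 kbit/s per E1 link


def ima_group_rate_kbit(n_links, link_rate_kbit=E1_ATM_PAYLOAD_KBIT,
                        frame_len=128):
    """Approximate usable cell rate of an IMA group, in kbit/s.

    (frame_len - 1) / frame_len : one ICP control cell per IMA frame (assumed)
    2048 / 2049                 : allowance for stuff cells (assumed)
    """
    if n_links < 1:
        raise ValueError("an IMA group needs at least one link")
    return n_links * link_rate_kbit * (frame_len - 1) / frame_len * 2048 / 2049


if __name__ == "__main__":
    # Eight E1 links presented to the upper layers as one logical interface.
    print(round(ima_group_rate_kbit(8)))
```

Under these assumptions, a group of eight E1 links behaves like a single interface of roughly 15 Mbit/s.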

NI4S1-B

The main functions of the NI4S1-B plug-in unit are the following:

implementing adaptation between SDH transport technology and ATM

performing ATM layer functions

implementing interface to ATM Switch Fabric.

The NI4S1-B can also be used to implement four SONET OC-3 interfaces.

Operating environment of NI4S1-B

NI4S1-B has the following interfaces with its environment:

four interfaces with physical medium

interface with ATM Switch Fabric (SF10)

interface with the Hardware Management System (HMS)

interface with TSS3 or TBUF plug-in unit.

NP8S1-B, NP8S1-A and NP8S1

The NP8S1-B, NP8S1-A and NP8S1 plug-in units are interface units for IPA2800

network elements that are specifically designed for the optimized use of the Internet

Protocol (IP) and the packet environment. NP8S1-B, NP8S1-A and NP8S1 are

targeted for the multiprotocol transport interfaces Iu-PS and Iu-CS. The primary

transport methods used are Packet over SONET (POS) and IP over ATM (IPoA).

NP8S1-B, NP8S1-A and NP8S1 provide multiprotocol packet processing at wire

speed and network connectivity with eight optical synchronous digital hierarchy

(SDH) STM-1 or synchronous optical network (SONET) OC-3 interfaces. The high

processing power of the network processor and the unit computer enable the

NP8S1-B, NP8S1-A and NP8S1 plug-in units to process protocol and data at the line

interface unit (LIU) instead of the dedicated processing units.

The unit NP8S1 also has capacity for two SDH STM-4 or SONET OC-12 interfaces,

but they are not supported and cannot be used.

NP8S1 and NP8S1-A are only used in MGW; NP8S1-B is only used in RNC.

NP2GE-B, NP2GE-A and NP2GE

The NP2GE-B, NP2GE-A and NP2GE plug-in units are interface units that are

specifically designed for the optimized use of the Internet Protocol (IP) and the

packet environment. NP2GE-B, NP2GE-A and NP2GE are targeted for the

multiprotocol transport interfaces Iu-PS and Iu-CS. The primary transport method

type used is IP over Ethernet.

NP2GE-B, NP2GE-A and NP2GE provide multiprotocol packet processing at wire

speed and also offer the possibility of using both electrical (copper) and optical (fibre)

based Ethernet. The high processing power of the network processor and the unit

computer enable the NP2GE-B, NP2GE-A and NP2GE plug-in units to process

protocol and data at the line interface unit (LIU) instead of the dedicated processing

units.

NP2GE and NP2GE-A are only used in MGW; NP2GE-B is only used in RNC.

The filter is closed in an EMC tight enclosure. Both supply lines go through the filter.

The supply lines share a common choke but have X- and Y-capacitors of their own.

In both supply branches, large electrolytic capacitors are located on two dedicated

capacitor boards. After the electrolytic capacitors, supply branches are branched

further to five sub branches which are protected by fast glass tube fuses and then

supplied to the backplane. One of the sub branches in the supply branches is for fan

tray.

PD20

The Power Distribution Unit 20 A (PD20 plug-in unit) is a sub rack level power

distribution unit in the IPA2800 network element power feed system. The PD20

provides filtering, power distribution and fan control functions.

PD30

The Power Distribution Unit 30 (PD30 plug-in unit) is a sub rack level power

distribution unit in the Nokia IPA2800 Network Elements power feed system. The

PD30 provides filtering, power distribution, and fan control functions. In addition, the

PD30 also provides over-current and overvoltage protection, and power dropout

stretching.

The PD30 incorporates reverse battery voltage protection for accidental installation

errors. It continues to operate after correct battery voltage polarity and voltage level

have been applied to it.

The use of the older fan tray models FTR1 and FTRA damages the equipment. Only

use the fan trays FTRA-A and FTRA-B with PD30.

The CPD120-A allows for either grounding the 0 V lead from the battery or using

a separate grounding cable to achieve floating battery voltage. From the CPD120-A unit, the voltage is fed through the sub rack-specific PD30 power distribution plug-in units, which have individual 10-A fuses for each outgoing distribution line, to the

other plug-in units in the same manner as to the cabinets, that is, through two

mutually redundant supply lines. The two distribution lines are finally combined in the

power converter blocks of individual plug-in units, which adapt the voltage so that it is

appropriate for the plug-in unit components.

The new clock plug-in unit variant TSS3-A is implemented in RN5.0 based RNC2600

deliveries. However, TSS3-A can be used with RN4.0 software if the Bridge HMX1BNGX

version inside the plug-in unit is newer than in the RN4.0 release package.

Due to 2N redundancy, a mixed configuration of TSS3 and TSS3-A is not allowed.

The same variant must be used for both clock units in each RNC.

TSS3/-As generate the clock signals necessary for synchronizing the functions of

RNC. Normally, TSS3/-A operates in a synchronous mode, that is, it receives an

input timing reference signal from an upper level of the network and adjusts its local

oscillator to the long time mean value by filtering jitter and wander from the timing

signal. It transmits the reference to the plug-in units in the same sub rack (all plug-in

units are equipped with onboard PLL blocks), as well as to the TBUF units, which

distribute the signals to units not directly fed by TSS3/-As.

TSS3/-A has inputs for both synchronization references from other network elements

(via the network interfaces) and for those from external sources (options are 2048

kbit/s, 2048 kHz, 64+8 kHz, 1544 kHz, or 1544 kbit/s (TSS3-A)). TSS3-A input is 5 V

tolerant.

If all synchronization references are lost, TSS3/-A can operate in plesiochronous

mode, that is, by generating independently the synchronization reference for the

units in the network element.
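
The choice between synchronous and plesiochronous operation can be sketched as a simple selection function. The text does not say how the unit ranks its references, so the numeric priority used here, the reference names and the function itself are assumptions for illustration only.

```python
def select_timing_mode(references):
    """Pick the operating mode from the available timing references.

    references: list of (name, priority, is_valid) tuples, where a lower
    priority number is preferred (a hypothetical ranking scheme).
    Returns (mode, reference_name_or_None).
    """
    valid = [ref for ref in references if ref[2]]
    if not valid:
        # All references lost: free-run on the local oscillator.
        return ("plesiochronous", None)
    best = min(valid, key=lambda ref: ref[1])
    return ("synchronous", best[0])


if __name__ == "__main__":
    refs = [("line interface", 1, False), ("external 2048 kHz", 2, True)]
    print(select_timing_mode(refs))   # ('synchronous', 'external 2048 kHz')
    print(select_timing_mode([]))     # ('plesiochronous', None)
```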

TSS3/-As are also involved in the functioning of the HMS bus. They convey HMS

messages through the HMS bridge node to the HMS master node. Each OMU has

one master node.

TSS3-A is designed to conform to the ITU-T G.813, G.703 and Bellcore GR-1244

recommendations.

TSS3 and TSS3-A

The Timing and Synchronization, SDH, Stratum 3 (TSS3 plug-in unit) or the Timing

and Synchronization, SDH, Stratum 3, Variant A (TSS3-A plug-in unit) and TBUF

plug-in units provide the functionality of the Timing and Hardware Management Bus

Unit (TBU) functional unit. This functional unit is responsible for synchronization,

timing signal distribution and message transfer in the Hardware Management

System of a network element.

The RNC and the MGW configurations always have one duplicated synchronization

unit implemented as two TSS3 or TSS3-A plug-in units. The TSS3s or TSS3-As are

located in either of the two half-size slots in sub racks 1 and 2 of rack 1. The

remaining two half-size slots in these sub racks are equipped with TBUFs, and so are

all other such slots in other sub racks of the network element. Both TSS3s or TSS3-As form a subsystem which is 2N redundant, so there are always two TSS3 or

TSS3-A plug-in units working in active/cold standby fashion.

TBUF

The Timing Buffer (TBUF plug-in unit) and the TSS3 plug-in units provide the

functionality of the Timing and Hardware Management Bus Unit (TBU) functional

unit. This functional unit is responsible for synchronization, timing signal distribution

and message transfer in the Hardware Management System of a network element.

The RNC and the MGW configurations always have one duplicated synchronization

unit implemented as two TSS3 plug-in units. The TSS3s are located in either of the

two half-size slots in subracks 1 and 2 of rack 1. The remaining two half-size slots in

these subracks are equipped with TBUFs, and so are all other such slots in other

subracks of the network element. The redundancy method is 2N.

Synchronization

Usually the distribution of synchronization references for RAN NEs (BTS, RNC) is

based on a master-slave architecture, where the transport network is used for

carrying the synchronization references. In particular, this is the case for base

stations.

In a master-slave synchronization architecture, a synchronization reference traceable

to the Primary Reference Clock (PRC) is carried via the transport network to RAN

NEs. Traceability to the PRC means that the synchronization reference originates

from a timing source of PRC quality. The characteristics of primary reference clocks

are specified in ITU-T Recommendation G.811 [8].

The hierarchical master-slave principle is generally used in traditional TDM-based synchronization, where a PRC-traceable reference is carried through a synchronization distribution chain via intermediate nodes to RAN NEs. In RAN NEs (BTSs shown in the following figure) the timing reference is recovered from the incoming transport interface (e.g. E1, T1, STM-1). The recovered reference is frequency-locked to the original PRC signal, but impairments in the transport network introduce some jitter and wander into the recovered synchronization reference.
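The idea of traceability can be sketched as a walk up the distribution chain: a node is PRC traceable if following its upstream references eventually reaches a clock of PRC quality. The Python sketch below is illustrative only; the `Node` type, the `trace_to_prc` function, and the node names are assumptions made for the example and do not come from the platform.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    is_prc: bool = False                # True only for the primary reference clock
    reference: Optional["Node"] = None  # upstream node the timing is recovered from


def trace_to_prc(node: Node) -> List[str]:
    """Return the chain of node names from `node` up to the PRC.

    Raises ValueError if the chain ends without reaching a PRC
    or loops back on itself (a timing loop).
    """
    chain: List[str] = []
    seen = set()
    current: Optional[Node] = node
    while current is not None:
        if current.name in seen:
            raise ValueError(f"timing loop at {current.name}")
        seen.add(current.name)
        chain.append(current.name)
        if current.is_prc:
            return chain
        current = current.reference
    raise ValueError(f"{node.name} is not traceable to a PRC")


prc = Node("PRC", is_prc=True)
intermediate = Node("intermediate-node", reference=prc)
bts = Node("BTS", reference=intermediate)
print(trace_to_prc(bts))  # ['BTS', 'intermediate-node', 'PRC']
```

The sketch covers only the logical chain; it does not model the jitter and wander that accumulate along it.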


The RNC has two separate timing and synchronization distribution buses to ensure 2N redundancy for the internal timing signal distribution. Each bus has its own system clock (a TSS3/-A plug-in unit), distribution cabling, and timing buffers (TBUF plug-in units).

The two TSS3/-A units backing each other up are placed in different subracks (subracks 1 and 2), each of which is powered by its own power supply plug-in unit to ensure redundancy for the power supply. Each of these subracks is also equipped with a TBUF plug-in unit, which connects the equipment in the subrack to the other clock distribution bus. The RNAC subracks 3 and 4 and all RNBC subracks have two separate TBUF units, which connect to different clock distribution buses by means of their own cables.
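The arrangement above implies that every subrack is attached to both clock distribution buses, either through a TSS3/-A plus a TBUF or through two TBUFs. A minimal Python sketch of that check follows; the bus labels "A" and "B", the subrack names, and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical layout: each subrack lists (plug-in unit, bus) pairs.
# Bus labels "A" and "B" are illustrative names for the two distribution buses.
SUBRACKS = {
    "RNAC-1": [("TSS3", "A"), ("TBUF", "B")],
    "RNAC-2": [("TSS3", "B"), ("TBUF", "A")],
    "RNAC-3": [("TBUF", "A"), ("TBUF", "B")],
    "RNAC-4": [("TBUF", "A"), ("TBUF", "B")],
}


def buses_reaching(units):
    """Return the set of buses a subrack is attached to."""
    return {bus for _unit, bus in units}


def subracks_missing_a_bus(subracks, required=frozenset({"A", "B"})):
    """List subracks that are not attached to every required bus."""
    return [name for name, units in subracks.items()
            if not required <= buses_reaching(units)]


print(subracks_missing_a_bus(SUBRACKS))  # []
```

An empty result means each subrack still receives timing if either bus, or the clock unit feeding it, is lost.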

To function correctly, the differential buses must be terminated at the ends of the bus by means of a termination cable. As the network element is expanded through the capacity steps, the end of the bus, and with it the termination point, changes. When a new subrack is taken into use in a capacity step, the cabling must always be moved to the new subrack.

Duplicated buses need two terminations, which means that four terminators altogether are required in each cabinet for the HMS and the timing and synchronization distribution buses.


The optional peripheral EXAU-A / EXAU provides a visual alarm of the fault indications of the RNC. The EXAU-A / EXAU unit is located in the equipment room.

The CAIND/-A is located on top of the RNAC cabinet and provides a visual alarm indicating which network element has a fault.


The Hardware Management System (HMS) provides a duplicated serial bus between the master node (located in the OMU) and every plug-in unit in the system. The bus provides a fault-tolerant message transfer facility between the plug-in units and the HMS master node.

The HMS is used for supporting auto-configuration, collecting fault data from plug-in units and auxiliary equipment, collecting condition data external to network elements, and setting hardware control signals, such as restart and state control, in plug-in units.

The hardware management system is robust. For example, it is independent of system timing, and it can read hardware alarms from a plug-in unit without power. The HMS also supports power alarms and a remote power on/off switching function.

The hardware management system forms a hierarchical network. The duplicated master network connects the master node with the bridge node of each subrack. The subrack-level networks connect the bridge node with each plug-in unit in the subrack.
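The two-level hierarchy can be pictured as a fixed three-hop path from the master node, through the bridge node of a subrack, to a plug-in unit in that subrack. The Python sketch below illustrates this under stated assumptions: the `TOPOLOGY` table, the slot numbering, and the `hms_path` function are hypothetical and do not reflect the real HMS addressing scheme.

```python
from typing import Dict, List

# Hypothetical topology: subrack number -> slot numbers of plug-in units.
TOPOLOGY: Dict[int, List[int]] = {
    1: [1, 2, 3],
    2: [1, 2],
}


def hms_path(subrack: int, slot: int) -> List[str]:
    """Return the hops from the master node to one plug-in unit."""
    if subrack not in TOPOLOGY or slot not in TOPOLOGY[subrack]:
        raise KeyError(f"no plug-in unit at subrack {subrack}, slot {slot}")
    return [
        "master (OMU)",
        f"bridge (subrack {subrack})",
        f"plug-in unit (subrack {subrack}, slot {slot})",
    ]


print(hms_path(2, 1))
# ['master (OMU)', 'bridge (subrack 2)', 'plug-in unit (subrack 2, slot 1)']
```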


HMS Master Node (HMMN)

Head of the Hardware Management System, responsible for, for example, selecting the transfer line and supervising the HMS bridge nodes.

Resides on the OMU.

HMS Bridge (HMSB)

Divides the Hardware Management System into subnetworks, each of which physically corresponds to one subrack.

Resides on the TSS3 and TBUF.

HMS Slave (HMSS)

Interfaces with, for example, the power and hardware alarms of the PIUs.

Resides on all PIUs except the ESA12/24.


S-ar putea să vă placă și

- 1320 CTDocument4 pagini1320 CTManuel Aguirre CamachoÎncă nu există evaluări

- Project On Honeywell TDC3000 DcsDocument77 paginiProject On Honeywell TDC3000 DcsAbv Sai75% (4)

- TDC 3000 DcsDocument7 paginiTDC 3000 DcsneoamnÎncă nu există evaluări

- Ocb 283 ProjectDocument32 paginiOcb 283 Projecthim92Încă nu există evaluări

- 1320 CraftDocument4 pagini1320 CraftRinoCastellanosÎncă nu există evaluări

- 9 ESSNo1Document168 pagini9 ESSNo1Shavel KumarÎncă nu există evaluări

- Transaction Monitoring in Encompass: '1tandemcomputersDocument28 paginiTransaction Monitoring in Encompass: '1tandemcomputerstuna06Încă nu există evaluări

- The Specification of Iec 61850 Based Substation Automation SystemsDocument8 paginiThe Specification of Iec 61850 Based Substation Automation Systemsyesfriend28Încă nu există evaluări

- Knxsci08-Tpuart IfDocument17 paginiKnxsci08-Tpuart IfalericÎncă nu există evaluări

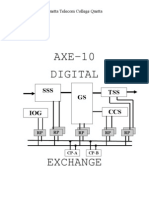

- Axe 10Document65 paginiAxe 10bleua1100% (3)

- Alcatel FibraDocument48 paginiAlcatel FibraJose Tony Angulo SaborioÎncă nu există evaluări

- Building IP Networks Using Advanced Telecom Computing ArchitectureDocument6 paginiBuilding IP Networks Using Advanced Telecom Computing Architectureavishek2005Încă nu există evaluări

- Dcs Architecture and TDC 3000: Presented By: Ziauddin/M. Sohail/Haroon YousafDocument34 paginiDcs Architecture and TDC 3000: Presented By: Ziauddin/M. Sohail/Haroon Yousafimek123Încă nu există evaluări

- T640 Integrated Loop ProcessorDocument5 paginiT640 Integrated Loop Processorరామకృష్ణ పాశలÎncă nu există evaluări

- Base Band Modem Hardware Design: P. Antognoni, E. Sereni, S. Cacopardi, S. Carlini, M. ScafiDocument5 paginiBase Band Modem Hardware Design: P. Antognoni, E. Sereni, S. Cacopardi, S. Carlini, M. Scafikokome35Încă nu există evaluări

- 05 - Serial Data Transmission - E0808f PDFDocument41 pagini05 - Serial Data Transmission - E0808f PDFedgardoboieroÎncă nu există evaluări

- On The Use of Ns-2 in Simulations of Internet-Based Distributed Embedded SystemsDocument5 paginiOn The Use of Ns-2 in Simulations of Internet-Based Distributed Embedded Systemsgajanand2007Încă nu există evaluări

- Smart Lock 400Document16 paginiSmart Lock 400Kelvin LoÎncă nu există evaluări

- Zte PTNDocument14 paginiZte PTNAntariksha SinghÎncă nu există evaluări

- Network TG e 1 1 PDFDocument4 paginiNetwork TG e 1 1 PDFDoDuyBacÎncă nu există evaluări

- White Paper - Data Communication in Substation Automation System SAS - Part 1 Original 23353Document5 paginiWhite Paper - Data Communication in Substation Automation System SAS - Part 1 Original 23353sabrahimaÎncă nu există evaluări

- Topic 8 PLCDocument26 paginiTopic 8 PLCKrista JacksonÎncă nu există evaluări

- Chapter-1: 1.1 Non Real Time Operating SystemsDocument58 paginiChapter-1: 1.1 Non Real Time Operating SystemsAnonymous XybLZfÎncă nu există evaluări

- Module-6 Part - II NotesDocument45 paginiModule-6 Part - II Notesamal santhoshÎncă nu există evaluări

- Opisanie K Alcatel 1641 SXDocument6 paginiOpisanie K Alcatel 1641 SXsmanaveed100% (1)

- Max DnaDocument17 paginiMax DnaAdil ButtÎncă nu există evaluări

- Unit 3 System Interfacing and ControllersDocument48 paginiUnit 3 System Interfacing and ControllersSrinivasan V PÎncă nu există evaluări

- MX-ONE User ManualDocument72 paginiMX-ONE User ManualAnisleidyÎncă nu există evaluări

- BSC O&mDocument48 paginiBSC O&mAri Sen100% (1)

- MCBSC and MCTC ArchitectureDocument6 paginiMCBSC and MCTC ArchitectureAmol PatilÎncă nu există evaluări

- Aal2 UmtsDocument7 paginiAal2 UmtsCatherine RyanÎncă nu există evaluări

- Conceptual Design of Substation Process Lan: M. Perkov, D. - XudvrylüDocument4 paginiConceptual Design of Substation Process Lan: M. Perkov, D. - Xudvrylücastilho22Încă nu există evaluări

- Ciena - OneControlDocument2 paginiCiena - OneControlkiennaÎncă nu există evaluări

- Unit - 8 A Generic Digital Switching System ModelDocument16 paginiUnit - 8 A Generic Digital Switching System ModelLavanya R GowdaÎncă nu există evaluări

- Atm-Pon: Special Issue On TELECOM99: UDC (621.395.74: 681.7.068.2) : 681.324Document4 paginiAtm-Pon: Special Issue On TELECOM99: UDC (621.395.74: 681.7.068.2) : 681.324kundan1094Încă nu există evaluări

- 01 - Nokia RNC ArchitectureDocument34 pagini01 - Nokia RNC ArchitectureManish AgarwalÎncă nu există evaluări

- 3rd Generation Mobile SystemDocument4 pagini3rd Generation Mobile SystemBiswajit BhattacharyaÎncă nu există evaluări

- SMA1 KDocument12 paginiSMA1 Kusr1507Încă nu există evaluări

- d900 MSC HardwareDocument9 paginid900 MSC HardwareDeepakÎncă nu există evaluări

- ESD Training ModuleDocument26 paginiESD Training ModuleFajar SantosoÎncă nu există evaluări

- dss-2 (1) CompressedDocument9 paginidss-2 (1) CompressedKeshavamurthy LÎncă nu există evaluări

- What Is A Distributed Control System?Document62 paginiWhat Is A Distributed Control System?Kharol A. Bautista100% (1)

- Micronet™: Control SystemDocument4 paginiMicronet™: Control Systemarab25Încă nu există evaluări

- Metode Monitoring Trafo DistributionDocument5 paginiMetode Monitoring Trafo DistributionSiswoyo SuwidjiÎncă nu există evaluări