ELSEVIER

Computers in Industry 34 (1997) 55-72

A review of machine vision sensors for tool condition monitoring

S. Kurada *, C. Bradley

Department of Mechanical Engineering, University of Victoria, Victoria, BC, Canada, V8W 3P6

Received 4 January 1994; revised 27 August 1996; accepted 27 August 1996

Abstract

Tool condition monitoring has gained considerable importance in the manufacturing industry over the preceding two

decades, as it significantly influences the process economy and the machined part quality. Recent advances in the field of

image processing technology have led to the development of various in-cycle vision sensors that can provide a direct and

indirect estimate of the tool condition. These sensors are characterised by their measurement flexibility, high spatial

resolution and good accuracy. This paper provides a review of the basic principle, the instrumentation and the various

processing schemes involved in the development of these sensors. © 1997 Elsevier Science B.V.

Keywords: Machine vision; Manufacturing information; Cutting tool monitoring

1. Introduction

The concept of tool condition monitoring has

gained considerable importance in the manufacturing

industry. This is mainly attributed to the transformation of the manufacturing environment from manually operated production machines to CNC machine tools and highly automated CNC machining centres. For modern machine tools, 20% of the downtime is attributed to tool failure, resulting in reduced

productivity and economic losses. A reliable monitoring system could prevent these problems and allow optimum utilisation of the tool life, which is

highly desirable.

The current trend is for CNC machine tools to be tended by operators who are not fully equipped with the blend of training and experience necessary to gauge a tool's wear. A skilled machinist will pay close attention to cutting tool performance, particularly when a new combination of tool, material and part program parameters is being tried. However, the recent trend towards unsupervised machining centres equipped with open architecture controllers has changed the manufacturing environment significantly. In this environment, operators will not be available to make tool changing decisions. Also, pre-planned tool replacement strategies are no longer appropriate as the machining conditions vary considerably. Thus, there is a great demand for monitoring systems that ensure optimum performance of the unsupervised machining centres.

* Corresponding author.

In addition to the complexity of the metal cutting operation, the various combinations of operating conditions, tooling and materials increase the probability of machine tool breakdown. Although several models [1-5] have been developed to predict cutting tool life, none of these is universally successful due to the complex nature of the machining process. Various studies [6,7] have pointed out the importance of sensing technology in the development of flexible manufacturing systems.

0166-3615/97/$17.00 © 1997 Elsevier Science B.V. All rights reserved. PII S0166-3615(96)00075-9

Sensors play a vital role in the acquisition of

information relating to the machine, process and part

to optimise the machine tool performance.

In the

case of unsupervised machining centres, it has been

demonstrated that the addition of sensor capabilities

can dramatically

reduce down time and improve

product quality [8]. The deployment of these sensors

for tool condition monitoring can be categorised as

either in-process or in-cycle. An in-process sensor

monitors the tool condition during the machining

operation, whereas an in-cycle sensor examines the

tool periodically,

for example between machining

blocks or during part changeovers.

Over the years, a number of sensors have been

developed, and most of these have been limited to

use in a laboratory environment.

However, recent

advances in the field of image processing technology

have led to the development

of various in-cycle

vision sensors that can be used to obtain information

about the cutting tool as well as the machined part.

The relative speed and absence of any physical

contact with the tool makes on-line monitoring feasible, provided the tool is not in permanent contact

with the workpiece. As vision and artificial intelligence (AI) are natural partners, integration of the

two technologies is also possible, to provide a better

understanding of the tool wear problem. The potential of these techniques for tool condition monitoring

is considerable and hence is explored in this

review paper.

Various techniques for tool wear monitoring were

reviewed by Shiraishi [9-11], Lister and Barrow [12] and Martin et al. [13]. The general techniques used for surface roughness measurement are reviewed by Vorburger and Teague [14] and Thomas [15]. The main thrust of this review paper is

to include the development

of vision sensors for

cutting tool and the workpiece assessment. Accordingly, the paper is organised as follows: First, an

introduction to the background information in tool

condition monitoring is presented. Next, a description of the machine vision components is presented,

followed by a review of the literature in cutting tool

and workpiece quality inspection. Sensor fusion is

discussed next followed by the concluding remarks.

2. Tool condition monitoring - The background

The life of a cutting tool can be brought to an end

either due to gradual wear leading to tool failure or

premature edge failure due to chipping. As the cutting tool approaches the end of its life, the degradation in surface quality of the machined workpiece is

quite evident. Characterisation

of the surface topography of a machined workpiece can act as a fingerprint of the machining process and, more specifically, the condition of the cutting tool. This is attributed to the change in the textural characteristics

of the machined workpiece becoming apparent as the

cutting tool approaches the end of its life. Hence, the

tool wear sensors can detect the signal either directly

from the tool or indirectly from the workpiece. The

following sections describe the direct and indirect

aspects of tool condition monitoring.

2.1. Tool life criteria

There are two predominant wear mechanisms that

limit a tool's useful life: flank wear and crater wear.

Flank wear occurs on the relief face of the tool and

is mainly attributed to the rubbing action of the tool

on the machined surface and the high temperatures

developed. Crater wear occurs on the rake face of the

tool and changes the chip-tool interface, thus affecting the cutting process. The most significant factors

influencing crater wear are temperature at the tool-chip interface and the chemical affinity between the

tool and workpiece materials [16].

Chipping is the term used to describe the breaking

away of a small piece from the cutting edge of the

tool. Unlike wear, which is a gradual process, chipping results in a sudden loss of tool material and

shape, and has a detrimental effect on the surface

finish. It is mainly attributed to the mechanical shock

due to interrupted cutting and thermal fatigue.

A typical flank wear profile is divided into three

regions (see Fig. 1):

Zone C - Nose or trailing groove, which forms

near the relief face and contributes significantly

to surface roughness.

Zone B - A plateau consisting of uniform wear

land.

Zone A - Leading edge groove, which marks the

outer end of the wear land.

Fig. 1. Illustration of the form of a typical flank wear pattern.

According to the International Standards Organisation (ISO), tool life criteria are concerned only with the leading edge groove in zone A. If the profile is uniform, the tool can be used unless the average value VB is greater than 0.3 mm. For uneven wear, the maximum wear land width (VBmax) should be less than 0.6 mm. A typical crater wear profile is shown in Fig. 2. The extent of cratering is specified by the maximum depth of the crater from the original rake face, K_T. In some cases, its size is specified by K_B and K_M.
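The flank wear criteria quoted above can be expressed as a simple check. The following is a minimal sketch only: the function name, the argument layout and the decision to treat a list of measured wear land widths as the input are assumptions made for illustration, while the 0.3 mm and 0.6 mm limits are the values quoted in the text.

```python
def tool_life_exceeded(wear_widths_mm, uniform_wear,
                       vb_avg_limit_mm=0.3, vb_max_limit_mm=0.6):
    """Return True when the flank wear land has reached the tool life limit.

    wear_widths_mm: wear land widths (mm) sampled along the cutting edge
                    (hypothetical input format).
    uniform_wear:   True if the wear profile is judged to be uniform.
    """
    if not wear_widths_mm:
        raise ValueError("at least one wear land width is required")
    if uniform_wear:
        # Uniform profile: compare the average width VB with 0.3 mm.
        vb_avg = sum(wear_widths_mm) / len(wear_widths_mm)
        return vb_avg > vb_avg_limit_mm
    # Uneven profile: VBmax should stay below 0.6 mm.
    return max(wear_widths_mm) >= vb_max_limit_mm
```

For example, `tool_life_exceeded([0.31, 0.33, 0.35], uniform_wear=True)` flags the tool for replacement, whereas an uneven profile with a largest width of 0.5 mm does not.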

2.2. Tool wear sensors

The requirements for tool wear sensors to be successful in a machining environment include:

- good correlation between the sensor signal and the tool condition;
- the response should be fast enough for feedback control;
- simple in design and rugged in construction;
- non-contact, accurate and reliable;
- no interference with the machining process.

Over the years, a number of sensing techniques have been developed. Most of these systems have been limited to the clean room environment, and only a few have emerged as viable tools for in-process measurement. Generally, these techniques are classified into two categories (see Fig. 3): direct sensors and indirect sensors.

Fig. 2. Illustration of the form of a typical crater wear pattern.

Fig. 3. Classification of previously tested tool wear sensing techniques.

2.2.1. Direct sensors

Direct sensors measure the actual dimensions of the worn area on the tool and/or directly determine the condition of the tool's cutting edge. These methods have the advantage of providing a direct and accurate assessment of the tool's state but are limited to in-cycle deployment. The most common direct sensing techniques are:

Proximity sensors. Proximity sensors estimate tool

wear by measuring the change in the distance between the tool's edge and the workpiece [17,18]. This distance can be measured by electric feeler micrometers and pneumatic touch probes. The measurement is affected by the thermal expansion of the tool, deflection or vibration of the workpiece and the deflection of the cutting tool due to the cutting force.

Radioactive sensors. Radioactive sensors [19,20]

have been used for direct measurement of tool wear.

A small amount of radioactive material is implanted

on the flank face of the cutting tool. During the

cutting process, worn tool material is transferred to

the chips. By monitoring the amount of radioactive

material deposited on the chips, tool wear can be

assessed. The need for collecting chips on-line and the hazardous nature of radioactive material limit this technique to the laboratory environment.

Vision sensors. The direct application of the vision sensor for measurement of tool wear utilises the

cutting tool itself. In general, these sensors depend

on the higher reflective properties of the wear land, compared to the unworn surface, to derive various morphological parameters that characterise tool wear. The majority of the research work has pursued only the measurement of flank wear, whereas few researchers have attempted to measure both flank and crater wear. Flank wear regions can be imaged with a CCD camera; however, crater wear determination

requires the projection of a structured light pattern

onto the tool, in order to derive depth information

from within the crater. In structured light sensing,

the distortion of parallel lines of laser light gives a

measure of crater depth. Due to the hostility of the cutting environment (presence of lubricant, built-up edge or metal deposits on the cutting tool), current

vision sensors can only be used between cutting

cycles.
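As an illustration of how the distortion of a projected laser line can be turned into a depth value, the sketch below uses a simple triangulation relation. It is not taken from the reviewed work: it assumes that the camera views the rake face along its normal, that the laser plane is inclined at a known angle to the viewing axis, and that a pixel-to-millimetre calibration factor is available. All names and parameters are hypothetical.

```python
import math


def crater_depth_mm(line_shift_px, mm_per_pixel, projection_angle_deg):
    """Estimate crater depth from the lateral shift of a laser line.

    Assumed geometry: a surface point lying a depth d below the rake face
    displaces the imaged line sideways by d * tan(angle), where angle is
    measured between the laser plane and the camera viewing axis.
    """
    shift_mm = line_shift_px * mm_per_pixel
    return shift_mm / math.tan(math.radians(projection_angle_deg))
```

Under these assumptions a line shift of 10 pixels, at 0.01 mm per pixel and a 45 degree projection angle, corresponds to a depth of about 0.1 mm.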

2.2.2. Indirect sensors

This approach measures a parameter that can be

correlated with tool condition. Although these parameters can be measured, they are often influenced

by non-wear phenomena

leading to an erroneous

prediction of tool life. Indirect tool wear sensors can

generally be deployed in-process. The most commonly used indirect sensing techniques are:

Cutting force. Cutting force signals have been

extensively used for condition monitoring due to the

availability of sensor technology [21-23]. A dynamometer is mounted on a tool holder to monitor

the cutting force in 1 or 2 orthogonal directions. The

force sensor signal indicates the increase in the

cutting force required as a progressively wearing tool

is forced through the material. Therefore, signal

analysis has to be performed on the force data in

order to determine when the tool has to be replaced.

This signal analysis problem is complicated by parameters other than tool wear that affect the cutting force, for example material properties (density, hardness, ductility), cutting tool geometry and chip build-up on the tool edge. This makes it difficult to

develop a robust and reliable force sensor that can

predict tool wear.

Vibration. Machining with a worn tool increases

the fluctuation of forces on the cutting tool. This is

attributed to the friction between the flank face of

the cutting tool and the workpiece, and also the

internal fractures of the tool. Due to these force

fluctuations, vibrations occur in the system. Therefore, by monitoring the level of vibration, tool wear can be assessed [24,25]. The sensing device consists

of a piezo-electric accelerometer attached to the upper surface of the cutting tool, as close as possible to

the cutting edge. The output of the sensor is compared to a reference threshold, and if the threshold is

exceeded repeatedly, failure is predicted. If the sensor is mounted close to the cutting location, the

variability of the signal increases with the progression of the cutting process. Also, the amplitude of

the signal decreases with an increase in the distance

between the sensor and the cutting edge.
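The threshold comparison described above can be sketched as follows. The text does not state how many exceedances constitute "repeatedly"; the sketch assumes, purely for illustration, that failure is predicted after a fixed number of consecutive samples above the reference threshold. The function name and default value are hypothetical.

```python
def failure_predicted(samples, threshold, min_exceedances=3):
    """Return True once the vibration level exceeds the threshold repeatedly.

    "Repeatedly" is interpreted here (an assumption) as at least
    min_exceedances consecutive samples whose magnitude is above the
    reference threshold.
    """
    run = 0
    for value in samples:
        # Extend the current run of exceedances, or reset it.
        run = run + 1 if abs(value) > threshold else 0
        if run >= min_exceedances:
            return True
    return False
```

Requiring consecutive exceedances is one way of suppressing isolated spikes; a count within a sliding time window would be an equally plausible reading.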

Acoustic emission. Acoustic emission (AE) is defined as the transient elastic energy spontaneously

released in materials undergoing deformation, fracture or both [26]. The emission signal is usually

detected by a contacting piezo-electric

transducer

mounted on the machine tool. The acoustic signal

information must be carefully analysed to separate

the cutting signal from other signals present in the

spectrum. This requires, in addition to the sensor,

signal amplifiers, filters and processing electronics.

Furthermore, sensor location on the machine tool is

problematic; different machine tools have different

characteristics that need to be considered when processing the AE data.

The sensors described above are not mutually

exclusive; by adopting techniques from the AI community and employing multiple sensors, some of the

problems described above have been minimised.

Fildes [27] provides an overview of the sensor fusion

techniques available to monitor at the part, tool, and

machine level. Sensor fusion is a philosophy whereby

several data modes, indirectly measuring the same

phenomenon,

are combined to increase prediction

reliability. Rangwala and Dornfeld [28] applied neural network techniques to the multi-sensor tool wear

monitoring problem. Neural networks allow an automatic learning capability so that some of the machine dependency problems can be eliminated. Neural networks also permit data from multiple sensors

to be combined in order to use the maximum amount

of information

in a control decision.

As more

sensor-based

data is utilised, the certainty of the

derived tool wear parameters increases. In a similar

manner, fuzzy systems have also been applied to the

multi-sensor monitoring problem [29]. Du et al. [30] reviewed these modes of machine monitoring and the use of AI techniques (such as neural networks and fuzzy logic) to achieve multi-sensor fusion. Clearly, monitoring, and hence controlling, the operating state of a machine tool keeps the parts produced by that machine in optimum quality condition.

2.3. Surface texture

The accurate characterisation

of surface texture

involves the definition of parameters that quantify

the surface topographical or geometrical features. It

includes several features present in a part's surface profile: roughness, waviness, lay and flaws. Roughness is comprised of the randomly distributed surface irregularities, extending over the whole area, on a microscopic scale. It is mainly attributed to the intrinsic action of the machining process. Waviness,

characterised by height variations at a given spatial

frequency, is the more widely spaced component of

surface texture. It results from such factors as machine or work deflections, vibrations, and tool chatter. The lay of a surface is the direction of the

predominant

surface pattern and is usually determined by the machining method used. Flaws are

unintentional

irregularities, which occur at one location or at relatively infrequent intervals on the surface, arising from accidental damage either during or

after the surface generation. The parameters that are

commonly used to characterise surface texture include:

- Spatial parameters, derived directly from the surface profile, are commonly used to characterise textural properties of various machined surfaces. Amplitude parameters, R_a (centre-line average) and R_q (RMS average), are the most widely used and are essentially the same.
- Frequency parameters, derived by decomposing the surface profile into a number of periodic components of different wavelengths and amplitudes, are used to reveal more information about the machining process. The decomposition procedure is commonly carried out by using a discrete Fourier transformation.

The stylus profilometer has traditionally been used for surface roughness measurement in an industrial clean room. All the national and international standards are defined in terms of the measurements produced by this instrument. However, due to the direct physical contact required to produce the surface profile, it damages the surface (particularly soft materials). Also, line sampling of the data limits its repeatability and ability to accurately describe the surface characteristics.

3. Machine vision components

A vision-based tool condition monitoring system consists of the major components discussed below (a schematic diagram is shown in Fig. 4).

Fig. 4. Schematic diagram of a computer vision-based tool wear sensing system.

3.1. Illumination

One of the most important parts of the hardware

configuration is the illumination. The selection of an

appropriate light source is dependent on the stand-off

distances required, amount of light and the environmental issues involved [31]. For cutting tool wear

monitoring, the main requirement of the system is to

provide adequate contrast between the worn region

and the background. The intensity and the angle of

the illumination source should be adjusted to accentuate the tool region of interest. The light sources

that are commonly used for tool condition monitoring are incandescent lamps and lasers.

In addition to the selection of an appropriate light

source, consideration must also be given to the technique which will give the optimum results. The three techniques that have been used extensively for various machine vision applications include [31]:

- Front lighting, which involves direct illumination of the object, has been widely used in tool wear and surface roughness measurement.
- Back lighting provides excellent contrast, but is limited to silhouette information.
- Structured lighting refers to sources of illumination where the geometric shape of the projected light pattern is controlled by some means. Structured light sensing has been used to estimate the depth of the crater wear region.

3.2. Cameras

Two types of video cameras have been used for

tool condition monitoring. The earlier studies employed vidicon cameras, which consist of a photosensitive surface present inside a vacuum tube. When

an electron beam scans this surface, an analogue voltage

proportional to the scene brightness (at that point in

the image) is produced. These cameras suffer from

geometric distortions and image drift.

The emergence of cost effective and improved

solid state technology over the past decade has made

CCD sensors readily available for various machine

vision applications. The basic structure of the CCD

is that of an analogue shift register consisting of a

series of closely spaced capacitors. Typical sensors offer a pixel resolution of 768 × 493 with imaging rates of 30 images/s. High resolution cameras offer sensor sizes up to 2048 × 2048 pixels, but at lower temporal resolution and at a very high cost. High speed cameras, with imaging rates of up to 1000 images/s, are limited by a reduced spatial resolution and high cost. The CCD cameras have a

standard C-mount, which is compatible with a wide

variety of lenses.

3.3. Image digitisation

Image digitisation converts an infinitely variable value (analogue signal) to an integer from 1 to N, where N represents the number of gray levels recognised by the system. A wide range of frame grabbers is available for the A/D conversion of a standard video signal. Some of the recent frame grabbers are equipped with real-time, low-level image pre-processing.

3.4. Image analysis

The processing methodologies employed in evaluating the images for direct or indirect assessment of the tool condition are discussed in the following sections.

3.4.1. Tool wear

In principle, the steps involved in determining the cutting tool condition are shown in Fig. 5. During the monitoring process, the tool is positioned in front of the camera, which can be focused on its flank or crater face. A magnified image of the cutting tool clearly shows three distinct textural regions (see Fig. 6):

- the worn region, which is characterised by a non-uniform texture;
- the background, comprising the tool holder and the platform, which is uniform and smoothly textured;
- the unworn region, which is distinguished by a uniform but coarser texture than the background.

Fig. 5. Typical sequence for determining cutting tool flank wear parameters.

Fig. 6. Photograph of a typical flank wear pattern on an insert.

The tool wear image can be affected by uneven

lighting or random bright spots due to the specular

reflections off asperities on the tool surface. Positioning of the illumination sources relative to the tool is

crucial in minimising these effects; however, they cannot be totally eliminated. Therefore, the image has to be enhanced prior to the application of the segmentation operators. This involves either contrast stretching or a smoothing algorithm. A contrast stretching algorithm is comprised of a linear scaling transformation that normalises the actual intensity values so that they are distributed over a wider

intensity range. A smoothing algorithm is primarily

used to remove the spurious effects present in the

image. It involves either a lowpass filtering approach

or cascaded median filtering.
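The two enhancement steps described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions rather than the implementation used in the reviewed work: the image is held as a list of rows of integer gray levels, the output range of 0 to 255 is assumed, a single 3 × 3 median pass is shown (a cascaded filter would repeat it), and border pixels are simply left unchanged.

```python
from statistics import median


def contrast_stretch(image, out_min=0, out_max=255):
    """Linearly rescale gray levels so they span [out_min, out_max]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:
        # Flat image: nothing to stretch.
        return [[out_min for _ in row] for row in image]
    return [[round(out_min + (p - lo) * (out_max - out_min) / (hi - lo))
             for p in row] for row in image]


def median_filter_3x3(image):
    """Replace each interior pixel by the median of its 3 x 3 neighbourhood."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]  # border pixels are copied unchanged
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            window = [image[r + dr][c + dc]
                      for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = median(window)
    return out
```

The median filter is well suited to the isolated bright spots caused by specular reflections, because a single outlier in the window does not influence the median.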

A key step in evaluating flank wear is the delineation of the wear region pixels from the remainder

of the image, which is composed of the background

and the unworn tool region. This step is termed segmentation; a simple approach involves global thresholding of the

image based on the Gray level histogram. Ideally,

the bright worn region in a dark background yields a

bimodal intensity histogram and an optimum threshold lies between the histogram peaks. However, due

to non-uniform

background illumination,

the selection of a threshold value from the histogram is

extremely difficult. Worn tool region segmentation

can be achieved through both edge operators (that

directly define the worn region boundary) and texture operators (that find regions of similar surface

texture). An edge is a significant change in the local

image intensity between, for example, the dark image background and the brighter region defining an

object surface. By locating the image pixels that are

edges in an image, and linking them together, the

boundaries of objects can be defined. As the gradient

of a function is a measure of change, edge detectors

utilise gradient techniques to locate edges. Recent

work by Kurada and Bradley [32], comparing edge

detection and texture-based operators, has shown the

advantages of texture operators in tool wear assessment.

Texture operators have received much attention in

the image processing literature for their ability to

perform superior segmentation in certain applications

[33]. Texture operators transform pixels with similar

texture into pixels that have a similar brightness,

thereby allowing segmentation to be completed by

applying a simple brightness thresholding operation.

The variance texture operator was used in [32] to

successfully

segment flank wear images acquired

with a CCD camera. The variance of the brightness

values in a 5 × 5 mask around each pixel of interest

is computed as the sum of the squares of the differences between the brightness of the central pixel and

its neighbours. A typical image generated by the

variance operator is shown in Fig. 7; the worn region

has clearly been delineated and can be segmented

from the remainder of the image through intensity

thresholding.
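The variance operator, as described above, can be sketched directly from its definition: for each pixel, the squared differences between the central pixel and every other pixel in the 5 × 5 mask are summed. The list-of-rows image format, the function names and the handling of border pixels (set to zero) are assumptions made for this illustration; the threshold level would have to be chosen for the images at hand.

```python
def variance_operator(image, half_width=2):
    """Sum of squared differences between each pixel and its neighbours.

    half_width=2 gives the 5 x 5 mask described in the text. Pixels closer
    than half_width to the border are left at zero (an assumption).
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(half_width, rows - half_width):
        for c in range(half_width, cols - half_width):
            centre = image[r][c]
            out[r][c] = sum(
                (image[r + dr][c + dc] - centre) ** 2
                for dr in range(-half_width, half_width + 1)
                for dc in range(-half_width, half_width + 1))
    return out


def threshold(image, level):
    """Binary image: 1 where the value exceeds level, otherwise 0."""
    return [[1 if p > level else 0 for p in row] for row in image]
```

Smooth regions such as the background give values near zero, whereas the non-uniform texture of the worn region gives large values, so a single brightness threshold on the operator output separates the two.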

Fig. 7. Photograph of a segmented flank wear image employing the variance operator.

Pixels present within the wear region boundary must be collected into an identifiable morphological feature from which a set of useful tool wear parameters can be calculated. Clustering is performed on the binary image generated by texture segmentation. The algorithm proceeds from top to bottom, scanning left to right, and collects bright pixel run lengths. Run lengths identified in the previous row are combined

with the current run length and amalgamated into the

same feature. The output of this operation is a set of feature vectors; the largest area feature obtained is the wear region. However, the interiors still contain dark pixels which must be filled prior to any feature measurement. A feature vector describing the perimeter of each region is generated, thereby allowing the interior dark pixels to be converted to bright pixels in order to obtain a homogeneous region (i.e. without any interior dark valued pixels). Small area features that remain in the image are eliminated, leaving only the tool wear feature, through morphological erosion. A 5 × 5 structuring element is applied to the binary

image to erode smaller areas until the image contains

a single tool wear feature from which the set of tool

wear parameters is calculated. Since the processing

software computes the various wear lengths and

areas in terms of pixels, it is crucial to determine

precise calibration factors (mm/pixel)

for obtaining

an absolute value of these wear parameters.
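The collection of wear pixels into a single feature and the conversion from pixels to millimetres can be sketched as follows. Note that this sketch swaps in a breadth-first flood fill with 4-connectivity in place of the run-length clustering described above, and omits the hole filling and erosion steps. It also assumes that the wear land width is measured along image columns. The function names and the returned dictionary keys are hypothetical.

```python
from collections import deque


def largest_region(binary):
    """Return the pixel coordinates (row, col) of the largest bright region."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or seen[r][c]:
                continue
            # Flood fill from this unvisited bright pixel.
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and binary[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    return best


def wear_parameters(region, mm_per_pixel):
    """Wear area and wear land widths in absolute units."""
    if not region:
        raise ValueError("the wear region is empty")
    # Count wear pixels in each image column (assumed width direction).
    per_column = {}
    for _, x in region:
        per_column[x] = per_column.get(x, 0) + 1
    widths = list(per_column.values())
    return {
        "area_mm2": len(region) * mm_per_pixel ** 2,
        "vb_avg_mm": sum(widths) / len(widths) * mm_per_pixel,
        "vb_max_mm": max(widths) * mm_per_pixel,
    }
```

Because every result scales with `mm_per_pixel` (and the area with its square), an error in the calibration factor propagates directly into the reported wear values, which is why the calibration step is described as crucial.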

3.4.2. Surface texture

Among the non-contact techniques that have been

proposed as an alternative to the stylus profilometer,

optical techniques are the most promising in terms of

accuracy, speed and flexibility.

Of the numerous

optical techniques that have been used over the

years, the relatively recent introduction of low-cost

vision-based

processing systems has opened up a

new area of surface quality measurement with many

exciting possibilities. Previous research indicates that

the majority of these techniques have focused on

correlating one or more parameters, extracted from

the vision system software processing, with stylus

measurements of R_a on the same surface.

During the monitoring process, the workpiece is

positioned on the platform such that the machining

marks are perpendicular to the longer dimension of

the imaging sensor (see Fig. 8). The first step in

processing these images consists of eliminating the

influence of the non-uniform background. This could

be done by subtracting the background image, obtained by fitting a second order regression surface

to points representing the background, from the

original image [34].

Fig. 8. Photograph of the characteristic texture formed on a turned surface.

Images of the machined surfaces can be processed either in the spatial or the frequency domain. In the spatial domain approach, the Gray level histogram of

the scattered pattern is used to extract statistical

parameters. These parameters are then correlated

with the roughness (R_a) values, obtained from the

stylus profilometer,

to establish

the calibration

curves. This relationship

varies with the type of

material and machining process employed [35,36].

The frequency domain approach involves the transformation (either optically or digitally) of the original image from the spatial to the Fourier domain.

The magnitude of the frequency components indicates the degree to which periodically

occurring

features are present in the image. The frequency

spectrum is useful in indicating the roughness components due to lay marks, tool wear marks and the

tool vibration [37]. Statistical parameters, derived

from the spectrum, have been correlated with the

surface roughness

obtained with the stylus profilometer.
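To make the amplitude and frequency descriptions concrete, the sketch below computes the centre-line average and RMS average of a one-dimensional profile, together with its amplitude spectrum obtained from a direct discrete Fourier transform. It is an illustration only: the profile is assumed to be a list of equally spaced samples (heights from a stylus trace, or gray levels along a line crossing the machining marks), and the function names are hypothetical.

```python
import cmath
import math


def roughness_parameters(profile):
    """Return (R_a, R_q): mean absolute and RMS deviation from the mean line."""
    mean = sum(profile) / len(profile)
    deviations = [z - mean for z in profile]
    r_a = sum(abs(d) for d in deviations) / len(deviations)
    r_q = math.sqrt(sum(d * d for d in deviations) / len(deviations))
    return r_a, r_q


def amplitude_spectrum(profile):
    """Magnitude of each DFT component, for frequency indices 0 .. n // 2."""
    n = len(profile)
    mean = sum(profile) / n
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum((z - mean) * cmath.exp(-2j * math.pi * k * i / n)
                    for i, z in enumerate(profile))
        spectrum.append(abs(coeff) / n)
    return spectrum
```

A strongly periodic profile, such as the feed marks on a turned surface, concentrates its energy in a single spectral component; the index of that component gives the number of cycles across the sampled length.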

4. Cutting tool inspection

Research work carried out in the direct assessment of the tool condition is presented in the following sections.

4.1. Flank wear

The first attempt to utilise a vision system for

characterising tool wear is attributed to Matsushima

et al. [38]. The cutting tool was examined by a TV

camera at every tool change. The gray level image

was converted into a binary image by using a threshold value, selected manually from the intensity histogram. The flank wear width was calculated directly

from the binary image by counting the number of

image elements in the direction of flank wear. Due to

the irregularities in the surface texture of the wear

land, the intensity of the reflected light varies over

the entire worn region. Hence, the use of global

thresholding generally produces a binary image consisting of stray dark pixels within the tool wear

region leading to erroneous results.

By deploying a vision sensor for in-cycle assessment of flank wear, the sophistication of the measurement technique was improved by Cuppini et al.

[39]. A TV camera, equipped with necessary optics,

was mounted on the machine-tool. The cutting tool,

illuminated

by a fibre-optic bundle, was imaged

during cutting dwells. Three different segmentation

algorithms were implemented. The measurement system was not calibrated to provide absolute units of

measurement.

No comparisons were made between

the segmentation

techniques that were used in the

work.

A more comprehensive

approach, using a VICOM-based

image analysis system, has been reported by Lee et al. [40]. The cutting tool was

positioned

under a microscope,

equipped with a

Vidicon camera, u:sing a specifically designed fixture. The processing of the tool wear image was

carried out in twcl steps. First, a simple contrast

stretching algorithm was used to enhance the image,

followed by an interactive segmentation process to

delineate the worn region from the background. By

deriving a number of parameters, an attempt was

made to provide a more complete description of the

flank wear phenomena. However, the use of interactive segmentation limits the technique to laboratory

use. Controlled illumination

was identified as a key

factor in improving the system performance.
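The source states only that a "simple contrast stretching algorithm" was used for enhancement. A common form, assumed here purely for illustration, is a linear min-max stretch to the full 8-bit range; the function name is hypothetical.

```python
import numpy as np

def contrast_stretch(gray, out_min=0, out_max=255):
    """Linearly map the image's gray level range onto [out_min, out_max]."""
    g = gray.astype(float)
    lo, hi = g.min(), g.max()
    if hi == lo:                       # flat image: nothing to stretch
        return np.full(gray.shape, out_min, dtype=np.uint8)
    stretched = (g - lo) / (hi - lo) * (out_max - out_min) + out_min
    return np.round(stretched).astype(np.uint8)

# A low-contrast patch occupying only gray levels 100..150
img = np.array([[100, 110], [120, 150]], dtype=np.uint8)
out = contrast_stretch(img)
```

After stretching, the narrow input range is spread across the whole gray scale, which makes the subsequent (interactive) separation of worn region and background easier to judge by eye.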

A fibre-optic sensor prototype was developed for

in-cycle inspection of cutting tool wear [41]. Two

different lighting arrangements

were used in conjunction with one camera position to record wear

images from flank and rake faces. For flank wear,

light from a diffused source was used to discriminate

between the worn and the unworn regions. The

segmentation of the flank wear images was accomplished by using 10-pixel wide stripes. For each

stripe, the threshold was selected by determining the

average gray level for the worn and unworn regions.

The sensor, with minor modifications, can be utilised

on several machines operating under different working conditions.
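The stripe-wise segmentation can be sketched as below. The source says only that each stripe's threshold was chosen from the average gray levels of the worn and unworn regions. Taking the midpoint of the two class means, found iteratively, is an assumption made for this sketch, and all function names are hypothetical.

```python
import numpy as np

def stripe_threshold(stripe, iters=20):
    """Midpoint of the mean gray levels of the two classes (assumed rule)."""
    t = stripe.mean()
    for _ in range(iters):
        hi, lo = stripe[stripe > t], stripe[stripe <= t]
        if hi.size == 0 or lo.size == 0:
            break
        t_new = 0.5 * (hi.mean() + lo.mean())
        converged = abs(t_new - t) < 0.5
        t = t_new
        if converged:
            break
    return t

def segment_by_stripes(gray, stripe_width=10):
    """Threshold each vertical stripe of the image independently."""
    gray = gray.astype(float)
    binary = np.zeros(gray.shape, dtype=bool)
    for x0 in range(0, gray.shape[1], stripe_width):
        stripe = gray[:, x0:x0 + stripe_width]
        binary[:, x0:x0 + stripe_width] = stripe > stripe_threshold(stripe)
    return binary

# Synthetic image whose illumination drops from the left stripe to the
# right one, so no single global threshold suits both.
img = np.empty((20, 20))
img[:, :10] = 60
img[5:12, :10] = 220                   # worn band, brightly lit stripe
img[:, 10:] = 20
img[5:12, 10:] = 90                    # worn band, dimly lit stripe
mask = segment_by_stripes(img)
```

A global threshold of, say, 128 would miss the worn band in the dim stripe entirely, whereas the per-stripe thresholds recover the band in both stripes.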

An alternate approach, using a coherent light

source, was investigated by Jeon and Kim [42]. The

cutting tool tip was illuminated by a laser beam (0.8

mm beam diameter) and the reflected pattern was

captured by a camera, located perpendicular

to the

flank face. A sequence of image processing steps

was performed on the binary image to remove noise

and produce a contour of the wear region. The

accuracy of the system was found to lie within 0.1

mm of the traditional tool microscope results. The

small size of the illumination area limits the amount

of wear information that could be derived from each

image frame. The high processing speed (1.7 s)

makes the sensor suitable for on-line measurement.

The possibility of using a vision sensor for on-line

assessment of flank wear was investigated by Pedersen [43]. The camera and the light source (halogen

lamp) were mounted on a VDF-Boehringer PNE 480

CNC turret lathe. The flank wear region was delineated

by using the threshold value determined from a

smoothed histogram. The measurements

from the

system were found to conform to the traditional

three-stage pattern (initial, steady state and terminal

wear). Due to the spurious reflections from other

parts of the tool, large variations in the flank wear

width were observed. Selection of a threshold from

the gray level histogram in conjunction

with the

inability of the lens to reproduce sharp changes of

contrast limited the accuracy of the measurement

system.

An adaptive observer, a combination of the observer technique based on the flank wear model and

the recursive least squares parameter estimation algorithm, was used to measure flank wear [44]. A

vision system was used to calibrate the results obtained with the adaptive observer. Due to the limited

resolution of the camera, only the central portion of

the flank wear region was imaged. After thresholding, the flank wear width was determined as the

distance between the top and bottom of the flank

wear region. From the experimental results, it was

observed that the integrated method performed well

under constant and time varying cutting conditions.
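The recursive least squares (RLS) component mentioned above can be illustrated in isolation. The sketch below is the standard RLS update with an optional forgetting factor, applied to a hypothetical linear wear model (width = a·t + b). It is not the adaptive observer of Park and Ulsoy, whose wear model and observer structure are not given in this review.

```python
import numpy as np

class RecursiveLeastSquares:
    """Standard RLS estimator for y = phi . theta (illustrative only)."""

    def __init__(self, n_params, forgetting=1.0, p0=1e6):
        self.theta = np.zeros(n_params)        # parameter estimate
        self.P = np.eye(n_params) * p0         # large initial covariance
        self.lam = forgetting

    def update(self, phi, y):
        phi = np.asarray(phi, dtype=float)
        Pphi = self.P @ phi
        gain = Pphi / (self.lam + phi @ Pphi)  # Kalman-like gain vector
        self.theta = self.theta + gain * (y - phi @ self.theta)
        self.P = (self.P - np.outer(gain, Pphi)) / self.lam
        return self.theta

# Hypothetical noise-free measurements: width = 0.02 * t + 0.05 (mm)
rls = RecursiveLeastSquares(2)
for t in range(1, 11):
    rls.update([t, 1.0], 0.02 * t + 0.05)
```

In an arrangement like the one described, intermittent vision measurements would play the role of `y`, correcting the model parameters between images; that pairing is an interpretation of the text rather than a documented detail.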

Teshima et al. [45] were the first to integrate a

vision sensor with neural network processing capability to predict tool life. The state of the flank and

crater wear along with the cutting conditions were

input to a 3-layer neural network classifier, which

predicted the remaining tool life and the wear type.

More emphasis was placed on the neural network

processing aspect rather than the tool wear assessment.

A slightly different approach for determining the

flank wear involved the use of the rake face image

[46]. The amount of flank wear was determined from

the deformation data and the sharp tool geometric

data. This method provides an entire flank wear map

along the cutting edge. However, the accuracy of the

method depends on how precisely the relative position and orientation of the tool could be achieved

with respect to the camera.

Sensor Adaptive Machines Inc. (SAMI) has recently developed an optical tool condition sensor for

commercial use. This has been used by Du et al. [47]

for monitoring flank wear. The sensor and the light

source are provided in a completely sealed housing

suitable for use in a machining environment.

The

tool conditions were determined by comparing the

master template of the tool profile, sensed when a

new tool is inserted, and the tool profile obtained

after each cut. The tool profile was calibrated, for the

presence of dirt on the tool tip and the relative

positioning,

from the master tool profile. Various

parameters describing flank wear were derived from

the two profiles. The positioning of the current tool

profile with respect to the master profile is crucial in

extracting accurate wear information. This limits the

deployment of this instrument for in-cycle applications.

A two-pass segmentation

process was used to

identify and label the three texturally distinct regions

in a flank wear image [48]. During the learning

period, several tools were imaged under different

lighting conditions and gray level ranges were assigned for each region. The Hough transform, which

identifies straight lines and arcs in an image by

transforming the image points into the parameter

space of a line or a circular arc, was used to identify

the tool tip. Experiments were carried out with two

different workpiece materials, and the wear land

shape was found to be dependent on the material.

The selection of the threshold levels from the learning periods is critical for obtaining a good segmentation of the worn region.
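The parameter-space voting behind the Hough transform can be sketched for straight lines only, using the usual (rho, theta) parameterisation. This is a generic textbook form written for illustration, not the code used in [48]; arc detection is omitted and the function names are hypothetical.

```python
import numpy as np

def hough_lines(binary, n_theta=180):
    """Vote in (rho, theta) space for every set pixel of a binary image."""
    rows, cols = np.nonzero(binary)
    thetas = np.deg2rad(np.arange(n_theta))            # 0..179 degrees
    diag = int(np.ceil(np.hypot(*binary.shape)))       # largest possible |rho|
    acc = np.zeros((2 * diag + 1, n_theta), dtype=int)
    for y, x in zip(rows, cols):
        rho = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rho + diag, np.arange(n_theta)] += 1       # one vote per theta
    return acc, thetas, diag

def strongest_line(binary):
    """Return (rho, theta in degrees) of the accumulator maximum."""
    acc, thetas, diag = hough_lines(binary)
    r_idx, t_idx = np.unravel_index(np.argmax(acc), acc.shape)
    return r_idx - diag, np.rad2deg(thetas[t_idx])

# Synthetic edge image: one horizontal edge along row y = 7
img = np.zeros((40, 100), dtype=bool)
img[7, :] = True
rho, theta_deg = strongest_line(img)
```

Locating two such edges (for example the major and minor cutting edges) and intersecting them is one plausible way to obtain a tool tip position; whether [48] proceeds exactly this way is not stated in the review.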

4.2. Crater wear

As crater wear is prevalent only under certain

cutting conditions, fewer studies have been carried

out to investigate the problem. Lee et al. [40] were

the first to utilise a vision system to study the crater

wear growth. Based on the nose angle of the tool, the

software distinguished between the crater and flank

image. To ensure that the tool failure is crater dominated, higher cutting speeds and feeds were employed. Six parameters, including the average nose

radius and the chipped area, were derived from the

crater wear pattern. As pointed out in the flank wear

measurement, the necessity of performing interactive

segmentation limits the method to a laboratory environment.

Giusti et al. [41] proposed a more sophisticated

experimental configuration to record crater wear images. A laser beam was passed through a diffraction

grating and the resulting interference fringes were

projected onto the rake face of the tool. The deflection of the fringes indicated the amount of deformation on the rake face, i.e. crater wear. This arrangement has an advantage of providing a 3D map of

crater wear, however, the control of lighting conditions requires added complexity in the hardware and

would be hard to maintain in an industrial setting.

4.3. Tool breakage

One of the more dominant modes of failure, for

more than a quarter of all the advanced tooling

material, is attributed to the breakage of cutting tool

inserts [49]. For a sensor to be successful in detecting

tool breakage, it should be able to operate under

diverse cutting conditions and the output should be

uniquely distinguishable

[50]. Although machine vision is well suited to tackle this problem, only a few

systems have been implemented.

Matsushima et al. [38] detected the tool breakage

and deformation by tracing the cutting edge line of

the binary image of the cutting tool. If large variations were continuously present over a span of more

than three image elements, breakage was identified

on the cutting edge. Similarly the deformation was

detected when the difference between the original

cutting edge line and the actual exceeded a certain

value. The presence of other process irregularities

Non-coherent

Non-coherent

Park and Ulsoy [441

Du et al. [47]

Oguamanam

Note: PW: Plank wear; CW: Crater wear; +

et al. [48]

Non-coherent

Jeon and Kim 1421

Pedersen [43]

Non-coherent (PW)

Coherent (CW)

Coherent

(dia. 0.8 mm)

Non-coherent

: Not available.

E43

mm)

CCD (RGB)

VIDICON

(0.001 mm)

Philips LDH 0600 CCD

(0.001 mm)

TN 2500 CID

(0.0015 mm)

Thresholding

Template matching

Medium

Medium

Low

Tbresholding

Thresholding

Medium

Thresholding

Medium

Low

Speed

High

Thresholding

Thresholding

VIDICON

(488 X 380 pixels)

VIDICON

Giusti et al. [41]

Algorithm

Camera

Light Source

Non-coherent

Software

tool wear sensors used to-date.

Hardware

of vision-based

Lee et al. I401

Researcher

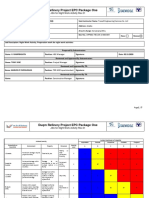

Table 1

Characteristics

Confidence

+

Level of 0.002

Approx. 5% (PW)

0.03 mm (PW)

0.03 mm (CW)

0.1 mm

Accuracy

Results

Lab

In-cycle

Lab

+

+

In-cycle

Lab

In-cycle

Lab

4.2% (PW)

7.8% (CW)

+

Repeatability

Application

__

Area

3

2

;=

zz

Y

s

B

!z

;:

S

z

$

\

_B

9

S. Kurada, C. Bradley/ Computers in Industry 34 (1997) 55-72

66

(hard spots, chip entanglement) or improper selection

of an optimum threshold could lead to an inaccurate

prediction of tool breakage.

Cuppini et al. [39] noted that tool breakage would

result in loss of image section that would be incompatible with normal wear dynamics. Thus a comparison of the tool templates before and after a cutting

process would reveal the occurrence of any breakage. An accurate matching of the templates would

depend on the individual tool and camera positions

being the same in both situations. Also, any problems that occur during the registration of the two

templates would lead to an erroneous prediction.

Oguamanam

et al. [48] predicted breakage by

determining the difference between the nearest tool

edge point and the tool tip, and comparing it with a

pre-set threshold. The tool was classified as broken if

the threshold was exceeded. The threshold was determined from a series of tests performed with a sharp

tool. The threshold was set to accommodate

the

allowable amount of tool tip roundness and round-off

errors. Selection of the proper breakage threshold is

crucial, as it could lead to catastrophic results if a

broken tool was incorrectly classified as good.
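The breakage test described for [48] reduces to a distance comparison, sketched below. The coordinate convention, the function name and the numerical values are hypothetical; in practice the threshold would come from tests with a sharp tool, as the text notes.

```python
import numpy as np

def is_broken(edge_points, expected_tip, threshold):
    """Classify a tool as broken when the nearest detected edge point lies
    farther from the expected tool tip than `threshold` (in pixels).

    Returns (broken flag, distance to the nearest edge point).
    """
    pts = np.asarray(edge_points, dtype=float)
    d = np.hypot(pts[:, 0] - expected_tip[0], pts[:, 1] - expected_tip[1])
    return bool(d.min() > threshold), float(d.min())

tip = (50.0, 50.0)                                   # expected tip position
sharp = [(50, 51), (48, 49), (53, 47)]               # edge reaches the tip
chipped = [(44, 42), (40, 45)]                       # edge has receded
sharp_result = is_broken(sharp, tip, threshold=3.0)
chipped_result = is_broken(chipped, tip, threshold=3.0)
```

A threshold set too high makes the failure mode named in the text possible: a chipped tool whose nearest edge point is still inside the threshold would be passed as good.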

To facilitate the comparison of the vision-based tool

wear measurement techniques reviewed in this paper, Table 1 is provided. Table 1 is organised into four

categories: hardware, software, results and the application area.

5. Workpiece surface quality inspection

Research work carried out in the indirect assessment of the tool condition is presented in the following sections.

5.1. Surface texture assessment

One of the earlier attempts in using a vision

system involved a 2D light sectioning method to

study the effect of various operating conditions on

the surface finish of a turned part [51]. An equi-contour map of the patterns was used to highlight various features of the machining process. A 2D Fourier

analysis was shown to be an effective tool in characterising the chatter marks. Baker [52] developed a

microscope image comparator, capable of recording

the image intensity distribution along with the farfield diffraction pattern, for on-machine assessment

of workpiece surface texture. The degradation of the

surface finish with severity of tool wear was shown

by generating profiles from the intensity distribution.

A more successful attempt at quantifying roughness using a vision system involved the use of a

gray level histogram of the light scattering pattern

from ground surfaces [35]. An optical roughness parameter, defined as the ratio of statistical parameters

derived from the histogram, was correlated with $R_a$

for samples from different materials. A non-linear,

increasing trend with $R_a$ was observed for the optical parameter. As the gray level histogram is based

on tallying the number of pixels for each intensity

level, the optical parameter is affected by the overall

uniformity and degree of illumination. By incorporating a fibre optic lighting arrangement to the measurement system, the technique was extended for

samples from different machining

processes [36].

The technique was further modified to include a

yellow LED (light emitting diode) light source in the

measurement system [53]. A qualitative comparison

of gray level histograms was carried out for various

machined surfaces, but failed to evaluate the results

on a broader set of samples.

Shiraishi and Sato [54] implemented dimensional

and roughness control in a turning operation by

developing an optical system based on the shadow

graph principle. Surface profiles of the turned part

were imaged by passing a laser light beam over the

edge profile. The sensor determined the maximum

value of roughness (typically rough surfaces were

examined, $R_a$ = 10 µm) on the part and a flat bite

tool was used, where necessary, to keep the roughness profile within tolerance.

Digital Fourier patterns of the light scattering

distribution were shown to be an effective way of

comparing various machined surfaces [55]. It has

been pointed out that these patterns facilitate the

manifestation

of various machining process characteristics. The possibility of generating these patterns

with an optical set-up was investigated by Huynh et

al. [56]. The peaks in the power spectrum, derived

from the Fourier pattern, were found to correlate

with the feed rate spacing. Statistical parameters

computed from the spectrum were used to characterise surface roughness. Cuthbert et al. [57] derived

the gray level histogram of the optical Fourier pattern to deduce a roughness parameter. As rougher

surfaces tend to create a diffuse pattern on the

camera, the technique was limited to the lower roughness range ($R_a$ < 0.4 µm). Also, the need for

precise alignment of the imaging optics makes it

unsuitable for on-line inspection.
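The reported link between power spectrum peaks and feed spacing can be illustrated digitally. The sketch below applies a one-dimensional FFT to a synthetic intensity profile taken across the feed marks; it is a numerical analogue of the optical Fourier set-ups discussed above, not a reproduction of them, and the pixel size and feed value are assumed.

```python
import numpy as np

def dominant_spacing(profile, pixel_size):
    """Spatial period of the strongest non-DC peak in the power spectrum."""
    p = np.asarray(profile, dtype=float)
    p = p - p.mean()                                  # remove the DC level
    power = np.abs(np.fft.rfft(p)) ** 2
    freqs = np.fft.rfftfreq(p.size, d=pixel_size)     # cycles per unit length
    k = 1 + np.argmax(power[1:])                      # skip the DC bin
    return 1.0 / freqs[k]

pixel_size = 0.01                  # mm per pixel (assumed)
feed = 0.2                         # mm per revolution (assumed)
x = np.arange(1000) * pixel_size   # 10 mm long profile
profile = 128 + 50 * np.cos(2 * np.pi * x / feed)     # idealised feed marks
spacing = dominant_spacing(profile, pixel_size)
```

On a real surface the peak would be broadened by tool wear, chatter and noise, which is why the cited work also uses statistical parameters of the spectrum rather than the peak position alone.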

A new hybrid roughness parameter, based on both

the spacing and amplitude characteristics of the machined surfaces, was proposed from the data obtained with a vision system [58]. The parameter was

shown to be successful in the assessment of wear

track for the evaluation of lubricants. However, the

measurements have been limited to the higher roughness range (6-100 µm) due to the low resolution of

the camera.

The ideal roughness profile, that the tool should

produce on the workpiece, was determined by imaging the cutting tool [59]. The profile was found to be

similar to the one observed on the workpiece, and

the differences were attributed to the swelling of the

material during the cutting process. Based on an

extensive literature survey, a design strategy for the

potential development of an on-line roughness sensor

was proposed by Jolic et al. [60]. Three algorithms,

based on analysing the scattered light distribution of

machined surfaces, were utilised to process the sensor data. Ceramic parts, machined by different processes, were examined. Parameters from the three

algorithms were found to correlate reasonably well

with the stylus measurements.

An in-process assessment of turned part quality

was performed by Lonardo et al. [61]. Diffraction

patterns of the rotating stainless steel samples were

recorded by a CCD camera and input into the neural

network for classification.

The ability of the supervised and unsupervised networks for accurately classifying the machined surfaces was assessed.

More recently, a fairly comprehensive database comprising three-dimensional light scatter images, their classification measures, lookup tables of the most efficient measures and the 3D stylus maps was compiled for samples from different machining processes [62]. The classification measures used include geometric, colour content and AI measures. The lookup tables were provided to identify the best measures for a given machining process. It has been pointed out that the system is capable of discriminating surfaces with similar finish, produced by different machining processes.

To facilitate the comparison of vision-based surface roughness measurement techniques, reviewed in

this paper, Table 2 is provided. Table 2 is organised

into four categories, hardware, software, highlights

and the application area.

5.2. Correlation of surface texture with machine tool life

Theoretically, the surface roughness of a turned workpiece is given by

$$R_a = \frac{f^2}{18\sqrt{3}\,R}$$

where $f$ is the feed rate and $R$ is the tool nose radius. The roughness can be affected by the following factors [63]:

- Controllable: operating conditions such as the feed rate, cutting speed and the depth of cut; material properties of the cutting tool and the workpiece; tool geometry.

- Uncontrollable: tool conditions such as chatter, wear and built-up edge.
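As a worked example of the theoretical expression, taken here as R_a = f² / (18·√3·R) with f the feed per revolution and R the nose radius, the sketch below evaluates it for assumed cutting conditions. The feed and nose radius values are illustrative and do not come from the cited studies.

```python
import math

def theoretical_ra(feed_mm_per_rev, nose_radius_mm):
    """Ideal arithmetic-average roughness of a turned surface, in micrometres,
    from R_a = f^2 / (18 * sqrt(3) * R)."""
    ra_mm = feed_mm_per_rev ** 2 / (18 * math.sqrt(3) * nose_radius_mm)
    return ra_mm * 1000.0              # convert mm to micrometres

ra = theoretical_ra(0.2, 0.8)          # assumed: f = 0.2 mm/rev, R = 0.8 mm
ra_double_feed = theoretical_ra(0.4, 0.8)
```

For these assumed values the ideal roughness is about 1.6 µm, and doubling the feed quadruples it, since the expression is quadratic in f. Measured roughness departing from this value points to the uncontrollable factors listed above.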

In the absence of uncontrollable

factors, the surface roughness was found to conform to the theoretical value [63]. The optimum cutting conditions for

carbide and ceramic tools were investigated by incorporating tool life, which was defined as the time

required for the surface roughness $R_a$ to deteriorate

to a value of 1.524 µm (representative of general

finish turning) [64]. Allen and Brewer [65] related

the machine variability and tool flank wear to the

surface roughness of the workpiece. The $R_a$ values

were found to be distributed normally,

with the

lowest value reported at a tool flank wear of 0.737

mm. Gillibrand and Heginbotham

[66] related the

surface roughness values, obtained from the lay and

perpendicular

directions, to the cutting speed for

various workpiece materials.

Sundaram and Lambert [67] found in their investigation that the lowest roughness values were obtained at a flank wear width of 0.889 mm. It was also observed that the roughness values obtained with a tool having a flank wear level of 0.838 mm were less than those obtained in the initial stage of tool wear. This suggested that tools can be used productively for a greater length of time rather than being discarded at a wear level of 0.762 mm. Also, no significant variation in the diameter of the turned part was observed beyond the flank wear level of 0.84 mm.

Table 2
Attributes of vision-based surface texture measurement systems. Columns: Researcher; Hardware (light source, camera); Software (algorithm, speed); Highlights (machining processes, repeatability); Application area. Entries: Luk et al. [35], Huynh et al. [56], Cuthbert et al. [57], Al-Kindi et al. [58], Jolic et al. [60], Lonardo et al. [61], Griffiths et al. [62]. Note: +: not available.

Sata et al. [68] studied the wavelength spectrum

of the machined workpiece to identify abnormal

conditions

such as chatter, spindle error and the

swelling of the workpiece. A monitoring

system,

which utilised a light reflectance sensor in conjunction with a pattern classification model, was developed by Dornfeld and Fei [69]. The classifier, based

on the linear discriminant

function, was able to

distinguish between surfaces, produced under different states of the cutting tool condition.

Xue et al. [70] utilised scanning electron microscope (SEM) images to study the effect of flank

wear on the machined surface quality. The roughness

of the machined surface was found to deteriorate

with increase in tool wear. Additionally, small cavities were detected on the surface. When a severely

worn tool was used., the machined surface was found

to be very rough, with partially fractured laps or

cracks on the outside boundary. This was attributed

to the higher contact pressure between the tool's

flank face and the workpiece, resulting in adhesion

wear.

Surface roughness of the workpiece was incorporated as a specification for tool condition by Du et al.

[47]. When a new insert was used, the roughness

values initially decreased over the first few cuts, and

then started to increase gradually until the tool was

worn out. The surface roughness values were found

to increase at a much higher rate, when the tool was

worn out. Based on this behaviour, a new model that

compensated for tool wear was proposed. The tool

was classified as good, if the roughness predicted by

the model was less than that required, otherwise it

was discarded. A workpiece inspection

strategy,

based on the statistical analysis of the roughness

data, was developed by Yang and Jeang [71]. A

mathematical model developed for predicting flank

wear was used to describe the surface roughness

behaviour.

6. Integrating wear sensors with data communication

The diagram in Fig. 9 illustrates the integration of

a vision-based

tool wear monitor within a miniworkcell environment.

At the supervisory level of

the network, operations such as CNC part program

creation and part scheduling

are performed. The

information, for each CNC lathe, is transmitted to

the machine via a protocol such as MAP (manufacturing automation protocol) over a local area network. Fibre optic network links are preferred due to

their high bandwidth and immunity to radio frequency noise and cross talk.

Information on tool wear state (and additionally

surface texture of the work piece) is provided by

each of the vision sensors. As previously discussed,

each tool wear image is processed to extract the

relevant wear parameter; this can be accomplished at

each CNC lathe or alternatively, each image can be

supplied to a central image processing station (via

the fibre optic link) at the supervisory level. Higher

level information regarding tool wear and surface

quality can be combined with other sensor information to monitor the overall machine tool condition.

Each CNC controller, as illustrated in Fig. 9, would typically be a closed box controller that has the principal function of processing the part program and controlling the tool's servo motors. Recent trends towards open architecture controllers permit vision processor boards to reside on a common bus with the CNC board. This eliminates the need for additional stand-alone computers at each cell, and ultimately would permit more immediate reaction to changing tool condition at the machine tool level. A single vision-based sensor (with associated processing software) can monitor both tool wear and workpiece surface texture (such as average roughness and asperity peak count). Complementary sensor data permits construction of a process model that would be unavailable from a single sensor and that is inherently a more reliable source of feedback on the tool wear state.

Fig. 9. Integration of vision-based tool monitors with work cell control network.

7. Concluding remarks

The state of vision technology as applied to tool

condition monitoring has been discussed in this paper. The development of these sensors is particularly

crucial for the realisation of fully automated manufacturing environments,

such as unmanned machining centres.

The computer vision techniques provide additional wear data (tool chipped area, tool wear area,

etc.) that are not available from most of the other

sensors. However, more research is needed in establishing vision sensors for on-line applications in an

industrial environment.

Future research should be

aimed at developing

a sensor that is capable of

deriving tool wear parameters from multiple data

modes (e.g. morphological

and textural data) and

fusing the data modes to provide a robust indicator

of tool condition. The work discussed in this paper

has demonstrated that vision sensors adapt well for

measuring multiple modes of wear data. Some of the

observations arising from this review are summarised below:

- The use of gray level images should be explored. The inherently greater information content present in gray level images will enable more reliable tool wear monitoring at no extra cost. Textural characteristics or pattern recognition techniques could also be used with gray level images, to greater effect.

- Surface texture information, obtained from the workpiece, should be incorporated into the tool wear prediction model. Such information could be vital in the selection of optimum cutting conditions. This information could be obtained by using the same vision sensor that has been used for tool condition monitoring, with minor modifications.

- Most of the research that has been carried out using vision sensors for tool wear measurement involved global thresholding. The use of more efficient segmentation algorithms, such as region-based techniques, should be investigated as they are less prone to errors.

- To establish vision sensors as a standard for tool wear measurement, more studies have to be undertaken with a wide range of materials and under different machining conditions. Also, the results should be validated with theoretical models that have been proposed in the past, as the information obtained from traditional techniques is very limited.

- A standardised benchmark for the system performance in terms of accuracy, precision and bias should be reported for every vision sensor that is implemented for tool wear measurement.

References

[1] V. Solaja and E. Kulanic, Effect of tool life data analysis on tool life equation, Annals of CIRP 25 (1976) 105-110.

[2] Y. Koren, Flank wear model of cutting tools using control theory, Journal of Engineering for Industry 100 (1978) 103-109.

[3] B.M. Kramer and N.P. Suh, Tool wear solution: A quantitative understanding, Journal of Engineering for Industry 102 (1980) 303-309.

[4] E. Usui, T. Shirakashi and T. Kitigawa, Analytical prediction of cutting tool wear, Wear 100 (1984) 129-151.

[5] E. Kannatey-Asibu, A transport diffusion equation in metal cutting and its application to analysis of the rate of flank wear, Journal of Engineering for Industry 107 (1985) 81-89.

[6] P.K. Wright and D.A. Bourne, Manufacturing Intelligence (Addison-Wesley Publishing Company Inc., 1988).

[7] M. Death, Sensors: Keys to automation, Manufacturing Engineering 96(6) (1986) 54.

[8] Manufacturing insights: Sensors, Society of Manufacturing Engineers, Dearborn, Michigan.

[9] M. Shiraishi, Scope of in-process measurement, monitoring and control techniques in machining processes, Part 1: In-process techniques for tools, Precision Engineering 10(4) (1988) 179-189.

[10] M. Shiraishi, Scope of in-process measurement, monitoring and control techniques in machining processes, Part 2: In-process techniques for workpieces, Precision Engineering 11(1) (1989) 27-37.

[11] M. Shiraishi, Scope of in-process measurement, monitoring and control techniques in machining processes, Part 3: In-process techniques for cutting processes and machine tools, Precision Engineering 11(4) (1989) 39-47.

[12] P.M. Lister and G. Barrow, Tool condition monitoring systems, Proceedings of 27th International Machine Tool Design and Research Conference (1986) 271-288.

[13] K.F. Martin, J.A. Brandon, R.I. Grosvenor and A. Owen, A comparison of in-process tool wear measurement methods in turning, Proceedings of 27th International Machine Tool Design and Research Conference (1986) 289-296.

[14] T.V. Vorburger and E.C. Teague, Optical techniques for on-line measurement of surface topography, Precision Engineering 3(2) (1981) 61-83.

[15] T.R. Thomas, ed., Rough Surfaces (Longman Inc., New York, 1982).

[16] G. Boothroyd, Fundamentals of Metal Machining and Machine Tools (McGraw-Hill, 1975).

[17] H. Takeyama, Y. Doi, T. Mitsoka and H. Sekiguchi, Sensors of tool life for optimisation of machining, Proceedings of Eighth International Machine Tool Design and Research Conference (1967) 191-208.

[18] T.H. Stoferle and B. Bellmann, Continuous measuring of flank wear, Proceedings of Sixteenth International Machine Tool Design and Research Conference (1975) 573-578.

[19] K. Uehara, New attempts for short time tool-life testing, Annals of CIRP 22 (1973) 23-24.

[20] N.H. Cook and K. Subramanian, Micro-isotope tool wear sensor, Annals of CIRP 27(1) (1978) 73-78.

[21] W. Konig, K. Langhammer and H.U. Schemmel, Correlation between cutting force components and tool wear, Annals of CIRP 21 (1972) 19-20.

[22] J. Tlusty and G.C. Andrews, A critical review of sensors for unmanned machining, Annals of CIRP 32 (1983) 563-572.

[23] B. Lindstrom and B. Lindberg, Measurements of dynamic cutting forces in the cutting process, A new sensor for in-process measurement, Proc. of 24th Int. Machine Tool Design and Research Conf. (1983) 137-147.

[24] H. Takeyama, Y. Doi, T. Mitsoka and H. Sekiguchi, Sensors of tool life for optimisation of machining, Proc. of 8th Int. Machine Tool Design and Research Conf. (1967) 191-208.

[25] T.H. Stoferle and B. Bellmann, Continuous measuring of flank wear, Proc. of 16th Int. Machine Tool Design and Research Conf. (1975) 573-578.

[26] D.A. Dornfeld and E. Kannatey-Asibu, Acoustic emission during orthogonal metal cutting, Int. J. Mech. Sci. 22 (1980) 285-296.

[27] J.M. Fildes, Sensor fusion for manufacturing, Sensors (1992) 11-15.

[28] S. Rangwala and D.A. Dornfeld, Integration of sensors via neural networks for detection of tool wear states, Proceedings of the Symposium on Integrated and Intelligent Manufacturing Analysis and Synthesis (ASME Winter Annual Meeting, New York, 1987) 109-120.

[29] S. Li, M.A. Elbestawi and R. Du, A fuzzy logic approach for multi-sensor process monitoring in machining, in: PED, Vol. 55, Sensors and Signal Processing for Manufacturing, ASME (1992) 1-16.

[30] R. Du, M.A. Elbestawi and S.M. Wu, Automated monitoring of manufacturing processes, Part 2: Applications, Journal of Engineering for Industry 117 (1995) 133-142.

[31] A. Novini, Fundamentals of on-line gaging for machine vision, Proceedings of the SME Conference and Exposition (Detroit, Michigan, 1989).

[32] S. Kurada and C. Bradley, A vision system for in-cycle tool wear monitoring, Proceedings of the Eighth International Congress on Condition Monitoring and Diagnostic Engineering Management (Canada, 1995) 139-142.

[33] J.C. Russ, The Image Processing Handbook (CRC Press Inc., Florida, USA, 1992).

[34] Y.J. Chao, C. Lee, M.A. Sutton and W.H. Peters, Surface texture measurement by computer vision, SPIE Optical Testing and Metrology 661 (1986) 302-306.

[35] F. Luk, V. Huynh and W. North, Measurement of surface roughness by a machine vision system, J. Phys. E: Sci. Instrum. 22 (1989) 977-980.

[36] V. Huynh, Non-contact inspection of surface finish, Proceedings of SME International Conference and Exposition (FC89-343, Detroit, Michigan, 1989).

[37] D.J. Whitehouse, Surfaces - A link between manufacture and function, Proceedings of the Institution of Mechanical Engineers 192(19) (1978) 179-188.

[38] K. Matsushima, T. Kawabata and T. Sata, Recognition and

control of the morphology of tool failures, Annals of CIRP

28(l) (1979) 43-47.

[39] D. Cuppini, G. D'Errico and G. Rutelli, Tool image processing with applications to unmanned metal-cutting, A computer vision system for wear sensing and failure detection, SPIE 701 (1986) 416-422.

[40] Y.H. Lee, P. Bandyopadhyay and B.D. Kaminski, Cutting tool wear measurement using computer vision, Proceedings of Sensor 86 Conference (Detroit, MI, SME Technical Paper MR86-934, 1986) 195-212.

[41] F. Giusti, M. Santochi and G. Tantussi, On-line sensing of flank and crater wear of cutting tools, Annals of CIRP 36(1) (1987) 41-44.

[42] J.U. Jeon and S.W. Kim, Optical flank wear monitoring of cutting tools by image processing, Wear 127 (1988) 207-217.

[43] K.B. Pedersen, Wear measurement of cutting tools by computer vision, International Journal of Machine Tools Manufacturing 30(1) (1990) 131-139.

[44] J.J. Park and A.G. Ulsoy, On-line flank wear estimation using an adaptive observer and computer vision, Part 2: Experiment, Journal of Engineering for Industry 115 (1993) 37-43.

[45] T. Teshima, T. Shibasaka, M. Takuma, A. Yamamoto and K. Iwata, Estimation of cutting tool life by processing tool image data with neural network, Annals of CIRP 42(1) (1993) 59-62.

[46] Y. Maeda, H. Uchida and A. Yamamoto, Estimation of wear land width of cutting tool flank with the aid of digital image processing techniques, Bull. Japan Soc. of Prec. Eng. 21(3) (1987) 211-213.

[47] R. Du, B. Zhang, W. Hungerford and T. Pryor, Tool condition monitoring and compensation in finish turning using optical sensor, Proceedings of the 1993 ASME Winter Annual Meeting (Symposium of Mechatronics, 1993).

[48] D.C.D. Oguamanam, H. Raafat and S.M. Taboun, A machine vision system for wear monitoring and breakage detection of single-point cutting tools, Computers and Industrial Engineering 26(3) (1994) 575-598.

[49] J.W. Powell, In-process control for manufacturing, IEEE 15th Video Conference, Vol. 2 (Sept 1986).

[50] J. Colgan, H. Chin, K. Danai and S.R. Hayashi, On-line tool breakage detection in turning: A multi-sensor method, Journal of Engineering for Industry 116 (1994) 117-123.

[51] H. Tsuwa, H. Sato and M. O-hori, Characteristics of two dimensional surface roughness - Taking self excited chatter marks as objective, Annals of CIRP 30(1) (1981) 481-486.

[52] L.R. Baker, On-machine measurement of surface texture parameters, SPIE, Vol. 1009, Surface Measurement and Characterisation (1988) 212-217.

[53] S. Damodaraswamy and S. Raman, Texture analysis using computer vision, Computers in Industry 16 (1991) 25-34.

[54] M. Shiraishi and S. Sato, Dimensional and surface roughness controls in a turning operation, Journal of Engineering for Industry 112 (1990) 78-83.

[55] D.E.P. Hoy and F. Yu, Surface quality assessment using computer vision methods, Journal of Materials Processing Technology 28 (1991) 265-274.

[56] V. Huynh, S. Kurada and W. North, Texture analysis of rough surfaces using optical Fourier transform, Measurement Science and Technology 2 (1991) 831-837.

[57] L. Cuthbert and V. Huynh, Statistical analysis of optical Fourier transform patterns for surface texture assessment, Measurement Science and Technology 3 (1992) 740-745.

[58] G.A. Al-Kindi, R.M. Baul and K.F. Gill, An application of machine vision in the automated inspection of engineering surfaces, Int. J. Prod. Res. 30 (1992) 241-253.

[59] G. Galante, M. Piacentini and V.F. Ruisi, Surface roughness detection by tool image processing, Wear 148 (1991) 211-220.

[60] K.I. Jolic, C.R. Nagarajah and W. Thompson, Non-contact, optically based measurement of surface roughness of ceramics, Measurement Science and Technology 5 (1994) 671-684.

[61] P.M. Lonardo, A.A. Bruzzone and A.M. Lonardo, Analysis of machined surfaces through diffraction patterns and neural networks, Annals of CIRP 44(1) (1995) 509-512.

[62] B.J. Griffiths, B.A. Wilkie and R.H. Middleton, Surface finish scatters the light, Sensor Review 15(2) (1995) 31-35.

[63] H. Takeyama and T. Ono, Study on roughness of turned surfaces, Bull. Japan Soc. of Prec. Engg. 1(4) (1967) 274-280.

[64] C.T. Ansell and J. Taylor, The surface finishing properties of a carbide and ceramic cutting tool, Proceedings of the Third International Conference on Machine Tool Design and Research (1962) 225-243.

[65] A.F. Allen and R.C. Brewer, The influence of machine tool variability and tool flank wear on surface texture, Adv. Mach. Tool. Des. 1 (1965) 301

[66] D. Gillibrand and W.B. Heginbotham, Experimental observations on surface roughness in metal machining, Proceedings of Sixth International Machine Tool Design and Research Conference (Las Vegas, USA, 1977) 629-640.

[67] R.M. Sundaram and B.K. Lambert, Surface roughness variability of AISI 4140 steel in fine turning using carbide tools, Int. J. Prod. Res. 17(3) (1979) 249-258.

[68] T. Sata, M. Li, S. Takata, H. Hiraoka, C.Q. Li, X.Z. Xing and X.G. Xiao, Analysis of surface roughness generation in turning and its applications, Annals of CIRP 34(1) (1985) 473-476.

[69] D.A. Dornfeld and R.Y. Fei, In-process surface finish characterisation, Manufacturing Simulation and Processes, ASME PED 20 (1986) 191-204.

[70] Q. Xue, A.E. Bayoumi and L.A. Kendall, On tool wear and its effect on machined surface integrity, J. Mater. Shaping Technol. 8 (1990) 255-265.

[71] K. Yang and A. Jeang, Statistical surface roughness checking procedure based on a cutting tool wear model, Journal of Manufacturing Systems 13(1) (1994) 1-8.

Satya Kurada is presently working as a research and development engineer at Mipox International Corporation, Hayward, California. He earned his doctorate in Mechanical Engineering from the University of Windsor, Windsor, Canada. His research interests include automated inspection, surface topography and image processing.

Colin Bradley is an Associate Professor of Mechanical Engineering at the University of Victoria, British Columbia, Canada. Currently, his research interests include the application of 3D machine vision to reverse engineering and the use of sensor technologies within the manufacturing work cell.
