Conceptual Review

Încărcat de

ApoorvaDescriere originală:

Drepturi de autor

Formate disponibile

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentDrepturi de autor:

Formate disponibile

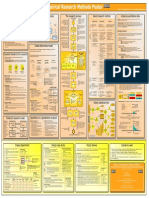

Conceptual Review

Încărcat de

ApoorvaDrepturi de autor:

Formate disponibile

REVIEW

Concepts

Review Outline

General data issues

Reliability and validity

Samples, Sampling distributions

Initial Examination of data

Numeric: central tendency, variability, correlation

Graphic: frequency, scatter

Hypothesis testing

Simple analyses

Simple regression

T-tests, equivalence testing

One-way ANOVA

Between

Within

Multiple factors/predictors

Multiple regression

Factorial ANOVA

ANCOVA

Modern univariate approaches

Effect size and confidence intervals for effect size

More reliance on good graphics

Bootstrapping

Robust

Model comparison and Bayesian techniques

Reliability and Validity

Reliability of a measure can refer to many things, but in general it is the precision of measurement, i.e. more reliability = less measurement error

Observed score = True score + Error

In terms of psychometric measures, it can refer to the amount of true score present in a measure

In terms of individuals, reliability is a measure of how well a measure distinguishes one person from the next

While extremely important for a scientific endeavor, reliability is for some reason often ignored, either outright (not reported or checked) or in the sense of using measures reported to have poor reliability

This leads to inaccurate estimates, attenuated effects, inefficient estimates, muddled and conflicting results, etc.; in short, a big waste of research time and money

Validity refers more to the accuracy of the measure

Even if a measure is reliable, is it measuring what it is designed to, or perhaps some other construct?

As we will see later, bias estimates can help with model validity issues, but construct validity, face validity, etc. should be adequately assessed in the construction process
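The relation Observed score = True score + Error, and the attenuated effects that follow from poor reliability, can be illustrated with a small simulation. This is only a sketch under assumed values: the true correlation of .5, the error standard deviation of 1, and all variable names are made up for illustration and do not come from the slides.

```python
import random
import statistics

def pearson_r(x, y):
    """Pearson correlation computed from sums of squares and cross-products."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

random.seed(1)
n = 20000
true_r = 0.5      # assumed correlation between the two true scores
error_sd = 1.0    # assumed measurement error SD (true-score SD is 1)

# True scores with the assumed correlation
true_x = [random.gauss(0, 1) for _ in range(n)]
true_y = [true_r * tx + (1 - true_r ** 2) ** 0.5 * random.gauss(0, 1)
          for tx in true_x]

# Observed score = True score + Error
obs_x = [t + random.gauss(0, error_sd) for t in true_x]
obs_y = [t + random.gauss(0, error_sd) for t in true_y]

# Reliability as the share of observed variance that is true-score variance
reliability_x = statistics.variance(true_x) / statistics.variance(obs_x)

r_true = pearson_r(true_x, true_y)
r_obs = pearson_r(obs_x, obs_y)

print(f"reliability of x: {reliability_x:.2f}")
print(f"correlation of true scores:     {r_true:.2f}")
print(f"correlation of observed scores: {r_obs:.2f}")
```

With equal true-score and error variance the reliability is about .5 for each measure, and the observed correlation shrinks toward the true correlation multiplied by the square root of the product of the two reliabilities.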

INITIAL EXPLORATION OF DATA

Every data set worth collecting should be examined from head to toe, leaving no stone unturned

A great deal of this should be of a graphical nature: histograms/density plots, scatterplots, etc.

Numerically, it involves examining measures of central tendency, variability, covariability, reliability, etc.

Robust checks should also begin at this point

Median vs. mean, MAD vs. SD, etc.

Most tests of univariate model assumptions do not occur at this point

Univariate inspection is of limited to no use

For example, normality is checked with regard to the residuals, which are produced after the regression is run

Multivariate normality can and should be checked if it is an assumption

The gist is that one should know everything about the variables of interest that will be used in subsequent inferential analysis, and realize that surprising and worthwhile discoveries can happen during this phase of analysis
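The "median vs. mean, MAD vs. SD" comparison mentioned above can be sketched as follows. The scores are invented for illustration, and the 1.4826 scaling of the MAD (which makes it comparable to the SD under normality) is a common convention rather than something stated in the slides.

```python
import statistics

def mad(values):
    """Median absolute deviation, scaled by 1.4826 so that it is
    comparable to the standard deviation for normally distributed data."""
    med = statistics.median(values)
    return 1.4826 * statistics.median([abs(v - med) for v in values])

# Hypothetical scores, then the same scores with one wild value added
scores = [12, 14, 15, 15, 16, 17, 18, 19, 20, 21]
contaminated = scores + [95]

for label, data in (("clean", scores), ("with outlier", contaminated)):
    print(f"{label:>12}: "
          f"mean={statistics.fmean(data):6.2f}  "
          f"median={statistics.median(data):6.2f}  "
          f"sd={statistics.stdev(data):6.2f}  "
          f"mad={mad(data):6.2f}")
```

A large gap between the classical and robust summaries is a cue to look more closely at the data before any model is fit.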

SAMPLES, SAMPLING DISTRIBUTIONS

Recall that the population will rarely if ever be available to us in full, but it must be explicit in the research design and analysis

Depressed college students? Depressed Americans? Depressed women?

In order to speak about a population of interest we must take an appropriate sample of it

From the sample we infer something about the population

Inferential statistics (vs. descriptive)

Inductive logic

SAMPLING DISTRIBUTIONS

However, statistical analysis makes use of more than just the sample statistics, which by themselves only speak about the sample, not the population

It is the sampling distribution that allows for inference

Mean = sample statistic

CI or t-statistic regarding the mean = inferential statistic

In the past, we always assumed a theoretical distribution, e.g. one that was normal or approximately normal, in order to perform our analysis

SAMPLING DISTRIBUTIONS

Modern analysis can easily implement other distributions if more appropriate, or simply use the data itself to construct an empirical sampling distribution

In either case, once we have a sampling distribution specified, we have probabilities that we can attach to certain events (means, regression coefficients, etc.)
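One common way to "use the data itself to construct an empirical sampling distribution" is the bootstrap. The sketch below is an assumption about what that could look like for a sample mean: the skewed sample is simulated, and the helper name `bootstrap_means` is hypothetical.

```python
import random
import statistics

random.seed(42)

# Hypothetical skewed sample (exponential), n = 50
sample = [random.expovariate(1.0) for _ in range(50)]

def bootstrap_means(data, n_boot=5000):
    """Resample the data with replacement and record the mean each time.
    The collection of means is an empirical sampling distribution."""
    n = len(data)
    return [statistics.fmean(random.choices(data, k=n)) for _ in range(n_boot)]

boot = sorted(bootstrap_means(sample))

# Standard error from the empirical distribution vs. the textbook formula
boot_se = statistics.stdev(boot)
theory_se = statistics.stdev(sample) / len(sample) ** 0.5

# Probabilities can now be attached to events, e.g. a 95% percentile interval
lower = boot[int(0.025 * len(boot))]
upper = boot[int(0.975 * len(boot)) - 1]

print(f"sample mean: {statistics.fmean(sample):.3f}")
print(f"bootstrap SE: {boot_se:.3f}   formula SE: {theory_se:.3f}")
print(f"95% percentile interval: ({lower:.3f}, {upper:.3f})")
```

Here the two standard errors should agree closely; the empirical approach earns its keep for statistics whose theoretical sampling distribution is awkward or unknown.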

NULL HYPOTHESIS TESTING

Null hypothesis testing, despite the call for an outright ban by a few statisticians and strong criticism by methodologists for decades, is the dominant statistical paradigm

There are actually two approaches

Fisher: based on the data, with focus on the observed p-value, i.e. p(D|H0), as a measure of disbelief in the null

Neyman-Pearson: based on the design of the study, with focus on error rates, power, and the addition of an alternative hypothesis

Philosophically they are incompatible (or at least their developers thought so), and both camps argued vigorously for decades about which was the correct path

NULL HYPOTHESIS TESTING

Psychology and many other disciplines unfortunately chose to mash the two approaches together in an incoherent fashion

Result:

A majority of applied researchers misunderstand the observed p-value

Equating the observed p-value to type I error rate

Equating the observed p-value to effect size

Insertion of Bayesian interpretation etc.

Ignoring type II error rate/power

Over-emphasis on statistical significance

AVOIDING NHST PROBLEMS

Most of the problems can be taken care of by:

Cautious and correct use/interpretation when implemented

Difficult, given it's not easy to understand even then

Avoiding use

Emphasis on effect size, model fit/comparison, prediction/validation, etc. rather than statistical significance

FURTHER ISSUES: ASSUMPTIONS

Inferential analyses come with a variety of assumptions, which vary depending on the nature of the analysis

Examples

Homoscedasticity (homogeneity of variance)

Normal distribution of residuals

Independence of observations

Linearity

In practice these are routinely violated

Distant past wisdom was that our typical analyses were robust to violations of assumptions

This was in part reflective of our inability to do anything about it in a practical/computational fashion, but also a lot of wishful thinking

FURTHER ISSUES: ASSUMPTIONS

Recent past and current wisdom is that classical tests are not robust to violations of assumptions

They never were robust to outliers or to lack of linearity, independence, or reliability; they rarely are with respect to type II error, and might be with respect to type I error in some situations

Ignoring violations has never been acceptable; one at least used the "interpret results with caution" tagline if assumptions were violated

However, with standard univariate techniques this is no longer a viable option either, as methods old and new are easily implemented to deal with these issues

Studies that do not mention testing assumptions could conceivably be wrong/inaccurate in every sense, and their statistics and any conclusions arising from them are suspect at best

Robust methods will at the very least give us a comparison, and at best a much more accurate portrayal of reality

ASSUMPTIONS MET

If we have met the assumptions, what do these inferential statistics tell us?

Z, t, F, and χ² statistics are simply quantiles of a particular probability distribution

How they are calculated depends on the nature of the test, and so conceptually they may imply different things

A t for a regression coefficient is not calculated the same as a t for a planned contrast in ANOVA

A Z for a sample mean is not calculated the same as the Z used in a Sobel test for mediation

A χ² for a 2-way frequency table is not calculated the same as that for goodness of fit in an SEM

It is simply the case that the statistic we are looking at, e.g. the ratio of mean between-group variance to mean within-group variance in ANOVA, adheres to one of those probability distributions with certain degrees of freedom

Each statistic has an associated probability of occurrence

PROBABILITY

So what does the observed p-value tell us?

It tells us the probability of observing that test statistic, or one more extreme, if a certain state of affairs (H0) is true

If it is low enough, we reject the null hypothesis

Pseudo-falsification approach

As an example, in regression we get a sense of the mean shared variance of the DV and predictors (MSmodel) and the mean squared error in prediction (MSerror), leading to an F statistic with an associated probability

Conceptually it tells us the probability of the observed variance accounted for (R²), or more extreme, vs. H0: R² = 0

In terms of model comparison, the null model in this case is an intercept-only model in which the only prediction is the DV mean and random error

i.e. no relationship between predictors and outcome
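The regression example can be sketched in code. Two caveats: the data are simulated with a single hypothetical predictor, and the p-value here comes from a permutation-based empirical null distribution rather than the theoretical F distribution the slide refers to, so this is a stand-in technique chosen to keep the example free of external libraries.

```python
import random
import statistics

def f_and_r2(x, y):
    """F statistic and R^2 for a one-predictor regression, compared with
    the intercept-only (null) model that predicts the DV mean for everyone."""
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_total = sum((b - my) ** 2 for b in y)  # error of the null model
    ss_error = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_model = ss_total - ss_error
    ms_model = ss_model / 1            # 1 df for the single predictor
    ms_error = ss_error / (n - 2)      # n - 2 residual df
    return ms_model / ms_error, ss_model / ss_total

random.seed(7)
x = [random.gauss(0, 1) for _ in range(40)]          # hypothetical predictor
y = [0.5 * a + random.gauss(0, 1) for a in x]        # hypothetical outcome

f_obs, r2_obs = f_and_r2(x, y)

# Empirical null: shuffling y breaks any x-y relationship (H0: R^2 = 0)
n_perm = 2000
y_perm = y[:]
count = 0
for _ in range(n_perm):
    random.shuffle(y_perm)
    if f_and_r2(x, y_perm)[0] >= f_obs:
        count += 1
p_perm = (count + 1) / (n_perm + 1)

print(f"R^2 = {r2_obs:.3f}, F(1, {len(x) - 2}) = {f_obs:.2f}, "
      f"permutation p = {p_perm:.4f}")
```

The resulting p-value is the proportion of null-model data sets that produce an F at least as large as the observed one, which is exactly the "observed or more extreme, if H0 is true" statement above.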

PROBABILITY

What that probability doesn't tell you

That what you found is actually meaningful

It doesn't tell you the type I error rate (that's established before the study via design)

It doesn't tell you the strength of association

It doesn't tell you the probability that your hypothesis is correct or that some other (null or otherwise) is incorrect

It doesn't give you any sense of replication

All it tells you is the probability of your statistic of interest assuming a particular state of affairs (H0) is true

The key question is: Does anyone really assume H0 is viable in the first place?

Science demands more than a p-value for the progression of knowledge

MORE PROBABILITY: ERROR RATES

As mentioned, the observed p-value previously discussed is the conditional probability of the data given that the null hypothesis is true

P(D|H0)

Type I error rate is the probability of rejecting the null when it is actually true

P(reject H0|H0)

They neither look nor sound the same, and in fact they are not the same

The observed (hint hint) p-value comes from actual observation of behavior or whatever is of interest

Type I error rate is what is controlled/kept at a specified level if you design the study a certain way

The observed p-value after conducting the study might be .37, .19, .05, or .0000001

The probability of making a type I error, if the study is conducted as specified beforehand and assumptions are met, is whatever value was decided upon in the design phase, typically .05

PROBLEMS WITH PROBABILITIES

A typical and incorrect method of reporting probabilities is a symptom of the philosophical mishmash noted earlier

Focus on observed p-values adheres to the Fisherian perspective; it was Neyman and Pearson who saw the key probability in terms of error rates

If an alpha significance level of .05 is chosen during design, and we reject H0 only when the observed p is less than .05 (i.e. the test statistic is beyond the critical value), the error rate is maintained

It makes no difference what the actual p-value is, only whether its associated statistic is within the region of rejection

Furthermore, it makes no sense to report p < .05, p < .01, p < .001

Type I error rate is fixed via design before data are collected, though not meeting assumptions may alter it

Reporting the actual p-value does not require difficult numeric interpretation: if p = .03 then we already know it's < .05

In the N-P system it also makes no sense to report both the observed p-value and the alpha significance level, only whether the statistic falls into the region of rejection or not, e.g. p < .05

It is also incorrect to use phrases such as "marginal significance", "a trend toward significance", and similar

If you want to play the game in this fashion (focus on error rates), you either reach the cutoff or you do not; there is no "close enough"

Rejecting at .09, .07, .12 etc., i.e. marginal significance, means your alpha is not .05 anymore
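The difference between an observed p-value and the type I error rate can be made concrete with a simulation in which H0 is true in every study. This is a sketch under assumptions not in the slides: a one-sample z-test with known sigma is used so the p-value can be computed exactly with the standard library, and the sample size and number of studies are arbitrary.

```python
import random
from statistics import NormalDist, fmean

random.seed(123)
alpha = 0.05              # chosen in the design phase
n, n_studies = 25, 4000   # hypothetical sample size and number of studies
std_normal = NormalDist()

def z_test_p(sample, mu0=0.0, sigma=1.0):
    """Two-sided p-value for a one-sample z-test with known sigma."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - std_normal.cdf(abs(z)))

# Every simulated study samples from a population where H0 (mu = 0) holds
p_values = [z_test_p([random.gauss(0, 1) for _ in range(n)])
            for _ in range(n_studies)]

# Observed p-values differ from study to study...
print("first five observed p-values:", [round(p, 3) for p in p_values[:5]])

# ...while the long-run rejection rate is set by the decision rule
type1_rate = sum(p < alpha for p in p_values) / n_studies
marginal_rate = sum(p < 0.10 for p in p_values) / n_studies
print(f"rejecting when p < .05: {type1_rate:.3f}")
print(f"rejecting when p < .10: {marginal_rate:.3f}")
```

Treating "marginal" results (here anything under .10) as rejections simply moves the long-run error rate to the looser cutoff, which is the point about alpha no longer being .05.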

WHY THE CONFUSION?

Probabilities are confusing

NHST is really confusing

Put them together and it's not surprising that problems result

Add to this poor journal practices that saw statistical significance as the primary, if not only, measure of the meaningfulness of a study, which forced people to make a big deal about anything that either reached the cutoff or got close, and treated really small p-values as "highly significant", i.e. even more important

Also, people tend to see p < .05, p < .001 etc. as just description, when in fact there is much more behind it

PROBLEMS WITH PROBABILITIES

Along with the observed p-value/alpha confusion, many ignore type II error rate/power concerns, which are often the more pressing issue (assuming a typical situation of over-reliance on statistical significance)

As an example, a simple model for regression with 5 predictors would require N = 122 to find an R² = .10 statistically significant if α = .05, and only if assumptions have been met (the N required could easily double with heteroscedasticity, lack of normality, etc.)

Typical psych research is looking for small effects (at least partly due to lack of reliability in measures)

Typical psych research often does not meet assumptions

Because of this, typical psych research doesn't have adequate sample sizes
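The slide gives N = 122 for 5 predictors and R² = .10 at an alpha of .05 but does not state the target power. The simulation below is a rough check under stated assumptions: it uses Cohen's convention for the noncentrality parameter (lambda = f² × N with f² = R² / (1 − R²)), treats the predictors as fixed, and estimates the critical F from simulated null draws rather than from a table.

```python
import random

random.seed(2024)

n, k, r2, alpha = 122, 5, 0.10, 0.05
df1, df2 = k, n - k - 1
f2 = r2 / (1 - r2)   # Cohen's f^2 effect size
lam = f2 * n         # assumed noncentrality (Cohen's convention)

def chi2(df):
    """Central chi-square draw: Gamma(shape=df/2, scale=2)."""
    return random.gammavariate(df / 2, 2)

def f_draw(noncentrality=0.0):
    """One draw from an F(df1, df2) distribution; noncentral if lambda > 0.
    A noncentral chi-square(df1, lambda) equals
    (Z + sqrt(lambda))^2 + chi-square(df1 - 1)."""
    num = (random.gauss(0, 1) + noncentrality ** 0.5) ** 2 + chi2(df1 - 1)
    den = chi2(df2)
    return (num / df1) / (den / df2)

reps = 40000

# Critical value: the (1 - alpha) quantile of F when H0 (R^2 = 0) is true
null_draws = sorted(f_draw() for _ in range(reps))
critical_f = null_draws[int((1 - alpha) * reps)]

# Power: how often F exceeds the critical value when R^2 = .10 in truth
power = sum(f_draw(lam) > critical_f for _ in range(reps)) / reps

print(f"critical F({df1}, {df2}) ~ {critical_f:.2f}")
print(f"estimated power at N = {n}: {power:.2f}")
```

Under these assumptions the estimated power lands near the conventional .80 target, which suggests that is the power level the N = 122 figure presupposes.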

THINGS TO NOTE

Despite certain beliefs to the contrary, p-values are not required for scientific reporting

Statistical significance != practical significance

Unimportant differences in coefficients can have dramatic differences in the observed p-value

Testing one theory against one of no relationship, only to say the latter is "unlikely", is largely uninformative

APA has requested that past practices be done away with and/or changed dramatically (and their recommendations were light compared to what many methodologists suggest)
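Two of the points above lend themselves to a quick numeric illustration. The correlations and sample sizes below are invented, and the p-values use the Fisher z approximation for testing a zero correlation, which is one of several reasonable choices.

```python
from math import atanh, sqrt
from statistics import NormalDist

def correlation_p_value(r, n):
    """Two-sided p-value for H0: rho = 0 via the Fisher z approximation."""
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Statistical vs. practical significance: the same trivial correlation
# (r = .05, i.e. 0.25% shared variance) at two sample sizes
p_small_n = correlation_p_value(0.05, 100)
p_large_n = correlation_p_value(0.05, 10000)
print(f"r = .05, N = 100:   p = {p_small_n:.3f}")
print(f"r = .05, N = 10000: p = {p_large_n:.2e}")

# Unimportant differences in coefficients, different verdicts at alpha = .05
p_r19 = correlation_p_value(0.19, 100)
p_r21 = correlation_p_value(0.21, 100)
print(f"r = .19, N = 100:   p = {p_r19:.3f}")
print(f"r = .21, N = 100:   p = {p_r21:.3f}")
```

The effect size is identical in the first pair and nearly identical in the second, yet a significance-only reading would treat the results as qualitatively different.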

WHAT CAN BE DONE

Relegate observed p-values to minor import or simply don't report them

e.g. interval estimates provide the same functionality but give additional information about the uncertainty of estimates

Shift focus to effect size, model fit in comparison to other viable models, accuracy in prediction, and size and meaningfulness of coefficients

Use methods that will result in more accurate probabilities and intervals when desired
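As one possible way to act on these recommendations, the sketch below reports an effect size with an interval estimate instead of a p-value. The two groups are simulated, Cohen's d with a pooled SD is just one choice of effect size, and the percentile bootstrap is one of several interval methods; the function names are hypothetical.

```python
import random
import statistics

random.seed(11)

# Hypothetical two-group data (e.g. treatment vs. control)
group_a = [random.gauss(0.5, 1) for _ in range(40)]
group_b = [random.gauss(0.0, 1) for _ in range(40)]

def cohens_d(a, b):
    """Standardized mean difference using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.fmean(a) - statistics.fmean(b)) / pooled_var ** 0.5

def bootstrap_ci(a, b, stat, n_boot=5000, level=0.95):
    """Percentile bootstrap interval: resample each group with replacement,
    recompute the statistic, and take the middle `level` of the results."""
    boot = sorted(
        stat(random.choices(a, k=len(a)), random.choices(b, k=len(b)))
        for _ in range(n_boot)
    )
    low = boot[int((1 - level) / 2 * n_boot)]
    high = boot[int((1 + level) / 2 * n_boot) - 1]
    return low, high

d_obs = cohens_d(group_a, group_b)
ci_low, ci_high = bootstrap_ci(group_a, group_b, cohens_d)

print(f"Cohen's d = {d_obs:.2f}, 95% bootstrap CI ({ci_low:.2f}, {ci_high:.2f})")
```

An interval that excludes zero carries the same decision information as a significance test, while its width also shows how precisely the effect has been estimated.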
