DISTRIBUTED DENIAL-OF-SERVICE (DDoS) PREVENTION TEST REPORT

Arbor Networks APS 2800 v5.8.1

Authors: Jerry Daugherty, Devon James

NSS Labs

Distributed Denial-of-Service Prevention Test Report Arbor Networks APS 2800 v5.8.1

Overview

NSS Labs performed an independent test of the Arbor Networks APS 2800 v5.8.1. The product was subjected to thorough testing at the NSS facility in Austin, Texas, based on the Distributed Denial-of-Service (DDoS) Prevention Test Methodology v2.0, available at www.nsslabs.com. This test was conducted free of charge, and NSS did not receive any compensation in return for Arbor Networks' participation.

While the companion Comparative Reports on security, performance, and total cost of ownership (TCO) will provide information about all tested products, this Test Report provides detailed information not available elsewhere.

During NSS testing, vendors tune their devices to create a performance baseline based on normalized network traffic. Devices are further tuned for accuracy as needed. The performance baseline traffic is 40% of rated throughput and consists of a mix of web application traffic, to provide readers with relevant security effectiveness and performance dimensions based on their expected usage.

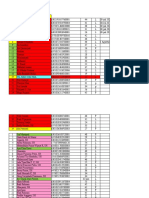

| Product | Overall Attack Mitigation | Overall Baseline Impact | NSS-Tested Throughput | 3-Year TCO (List Price) | 3-Year TCO (Street Price) |
|---|---|---|---|---|---|
| Arbor Networks APS 2800 v5.8.1 | 90.8% | 0.4% | 20,000 Mbps | NA | $274,600 |

| Attack Category | Attack Mitigation | Baseline Impact |
|---|---|---|
| Volumetric | 82.5% | 0.3% |
| Protocol | 90.0% | 0.0% |
| Application¹ | 100.0% | 1.0% |

Figure 1 Overall Test Results

Using the tuned policy, the APS 2800 provided 90.8% overall attack mitigation, with a 0.4% impact on the overall baseline traffic. The device also passed all stability and reliability tests.

The APS 2800 is rated by NSS at 20,000 Mbps, which is in line with the vendor-claimed performance; Arbor licensed the device under test at 20 Gbps. NSS-Tested Throughput is calculated as an average of all of the real-world protocol mixes and the 21 KB HTTP response-based capacity test.
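The throughput rating described above can be reproduced with a few lines of Python. This is a minimal sketch that assumes the average is an unweighted arithmetic mean (the report only says "an average"); the Mbps values are the ones reported for the four real-world traffic mixes and the 21 KB HTTP capacity test, and the function and variable names are illustrative.

```python
from statistics import mean

# Throughput results in Mbps, as reported in this document.
real_world_mixes_mbps = {
    "Financial": 20_000,
    "Mobile Users and Applications": 20_000,
    "Web Based Applications and Services": 20_000,
    "ISP": 20_000,
}
http_21kb_capacity_mbps = 20_000


def nss_tested_throughput(mixes, http_21kb):
    """Unweighted mean of the real-world mixes plus the 21 KB HTTP test.

    Assumption: the report does not say whether the average is weighted.
    """
    return mean([*mixes.values(), http_21kb])


rated_mbps = nss_tested_throughput(real_world_mixes_mbps, http_21kb_capacity_mbps)
print(f"NSS-Tested Throughput: {rated_mbps:,.0f} Mbps")
```

Because every input is 20,000 Mbps, any averaging scheme gives the same rating here; the choice of weighting would only matter for a device whose results differ across tests.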

Arbor Networks declined to provide list pricing for the device tested.

¹ Two of the devices have an HTTP redirect approach (a valid mitigation technique), which resulted in an incompatibility with the DDoS Prevention test harness. For this reason, the HTTP GET Flood test score is not included in either the Application scores or the Overall scores.


Table of Contents

- Overview
- Security Effectiveness
  - Volumetric Attacks
  - Protocol Attacks
  - Application Attacks
- Performance
  - HTTP Capacity with No Transaction Delays
  - Application Average Response Time - HTTP
  - Real-World Traffic Mixes
- Stability and Reliability
- Management and Configuration
- Total Cost of Ownership (TCO)
  - Installation Hours
  - List Price and Total Cost of Ownership
  - Street Price and Total Cost of Ownership
- Detailed Product Scorecard
- Test Methodology
- Contact Information


Table of Figures

- Figure 1 Overall Test Results
- Figure 2 Volumetric Attacks
- Figure 3 Protocol Attacks
- Figure 4 Application Attacks
- Figure 5 HTTP Capacity with No Transaction Delay
- Figure 6 Average Application Response Time (Milliseconds)
- Figure 7 Real-World Traffic Mixes
- Figure 8 Stability and Reliability Results
- Figure 9 Sensor Installation Time (Hours)
- Figure 10 List Price 3-Year TCO
- Figure 11 Street Price 3-Year TCO
- Figure 12 Detailed Scorecard


Security Effectiveness

This section verifies that the DDoS prevention device under test (DUT) can detect and mitigate DDoS attacks effectively. Both the legitimate network traffic and the DDoS attack are executed using a shared pool of IP addresses, which represents a worst-case scenario for the enterprise. Since legitimate traffic uses the same IP addresses as the attacker, no product can simply block or blacklist a range of IP addresses. This test can reveal the DUT's true ability to effectively mitigate attacks.

NSS analysis is conducted first by testing every category of DDoS attack individually to determine that the DUT can successfully detect and mitigate each attack. Once a baseline of security effectiveness is determined, NSS builds upon this baseline by adding multiple DDoS attacks from different categories in an attempt to overwhelm the DUT and allow attack leakage to occur. At each point during testing, NSS validates that legitimate traffic is still allowed and is not inadvertently blocked by the DUT.

In all security effectiveness tests, a mix of HTTP traffic is run to establish a baseline of legitimate traffic. This traffic is run at 40% of the device's rated bandwidth, and is used to ensure that legitimate traffic is not affected during mitigation. The baseline impact percentage is the share of baseline traffic that is affected by the device while the device is mitigating an attack. As an example, if the baseline impact score is 5%, then 5% of the known baseline traffic was inadvertently blocked during attack mitigation.

After the baseline traffic has been stabilized at 40% of the rated bandwidth, an attack is started. These attacks are intended to saturate the network link in terms of either bandwidth or packet rate. To calculate the percentage of each attack that is being mitigated, the known amount of attack traffic being injected into the device is compared with the amount of attack traffic that is allowed to pass through the device. This percentage is calculated separately from the baseline impact score, which allows for a scenario where a device may mitigate an attack very well, but at the same time cause an unintended impact to legitimate services.
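The two percentages described above can be sketched as follows. This is an illustrative calculation only: the function names and the sample traffic figures are assumptions made for the example, not measurements from the report, and the exact counters NSS uses (bytes, packets, or connections) are not stated.

```python
def mitigation_pct(attack_injected: float, attack_passed: float) -> float:
    """Share of injected attack traffic that the device stopped, in percent."""
    if attack_injected <= 0:
        raise ValueError("attack_injected must be positive")
    return 100.0 * (attack_injected - attack_passed) / attack_injected


def baseline_impact_pct(baseline_sent: float, baseline_delivered: float) -> float:
    """Share of legitimate baseline traffic lost during mitigation, in percent."""
    if baseline_sent <= 0:
        raise ValueError("baseline_sent must be positive")
    return 100.0 * (baseline_sent - baseline_delivered) / baseline_sent


# Hypothetical measurements in Mbps, chosen only to illustrate the arithmetic.
# 8,000 Mbps of baseline traffic corresponds to 40% of a 20,000 Mbps rating.
print(mitigation_pct(attack_injected=10_000, attack_passed=500))           # 95.0
print(baseline_impact_pct(baseline_sent=8_000, baseline_delivered=7_600))  # 5.0
```

Keeping the two functions separate mirrors the report's point that a device can score well on mitigation while still harming legitimate traffic.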

Volumetric Attacks

Volumetric attacks consume all of a target's available bandwidth. An attacker can use multiple hosts, for example, a botnet, to generate a large volume of traffic that causes network congestion between the target and the rest of the Internet, and leaves no available bandwidth for legitimate users. There are many types of packet floods used in volumetric attacks, including regular and malformed ICMP, regular and malformed UDP, and spoofed IP.

| Type of Volumetric Attack | Mitigation | Baseline Impact |
|---|---|---|
| ICMP Malformed Flood (Type) | 100% | 0% |
| ICMP Malformed Flood (Code) | 100% | 0% |
| ICMP Type 0 Flood (Ping Flood) | 100% | 0% |
| Malformed Raw IP Packet Flood | 95% | 0% |
| Malformed UDP Flood | 0% | 0% |
| UDP Flood | 100% | 2% |

Figure 2 Volumetric Attacks


Protocol Attacks

Protocol DDoS attacks exhaust resources on the target or on a specific device between the target and the Internet, such as routers and load balancers. After the DDoS attack has consumed enough of the device's resources, the device cannot open any new connections because it is waiting for old connections to close or expire. Examples of protocol DDoS attacks include SYN floods, ACK floods, RST attacks, and TCP connection floods.

| Type of Protocol Attack | Mitigation | Baseline Impact |
|---|---|---|
| ACK Flood | 60% | 0% |
| RST Flood | 100% | 0% |
| udp-flood-frag.sh | 100% | 0% |
| SYN Flood | 100% | 0% |

Figure 3 Protocol Attacks

Application Attacks

An application attack takes advantage of vulnerabilities in the application-layer protocol or within the application itself. This style of DDoS attack may require, in some instances, as little as one or two packets to render the target unresponsive. Application DDoS attacks can also consume application-layer or application resources by slowly opening up connections and then leaving them open until no new connections can be made. Examples of application attacks include HTTP floods, HTTP resource exhaustion, and SSL exhaustion.

| Type of Application Attack | Mitigation | Baseline Impact |
|---|---|---|
| HTTP GET Flood | 100% | 0% |
| RUDY (Low and Slow) | 100% | 0% |
| LOIC | 100% | 0% |
| NTP Reflection Attack | 100% | 0% |
| 10G DNS Reflection Attack | 100% | 5% |
| SIP Invite Flood | 100% | 0% |

Figure 4 Application Attacks
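The report does not spell out how the per-category and overall percentages in Figure 1 are derived from the per-attack results. As a consistency check, and purely as an assumption, the sketch below takes an unweighted mean of the per-attack scores in Figures 2 through 4 (leaving out HTTP GET Flood, which the report says is excluded) and then an unweighted mean of the three category scores. Under that assumption the output agrees with the figures published in Figure 1.

```python
from statistics import mean

# (mitigation %, baseline impact %) per attack, copied from Figures 2-4.
results = {
    "Volumetric": {
        "ICMP Malformed Flood (Type)": (100, 0),
        "ICMP Malformed Flood (Code)": (100, 0),
        "ICMP Type 0 Flood (Ping Flood)": (100, 0),
        "Malformed Raw IP Packet Flood": (95, 0),
        "Malformed UDP Flood": (0, 0),
        "UDP Flood": (100, 2),
    },
    "Protocol": {
        "ACK Flood": (60, 0),
        "RST Flood": (100, 0),
        "udp-flood-frag.sh": (100, 0),
        "SYN Flood": (100, 0),
    },
    "Application": {
        "HTTP GET Flood": (100, 0),
        "RUDY (Low and Slow)": (100, 0),
        "LOIC": (100, 0),
        "NTP Reflection Attack": (100, 0),
        "10G DNS Reflection Attack": (100, 5),
        "SIP Invite Flood": (100, 0),
    },
}

# The report states that HTTP GET Flood is not counted in the scores.
EXCLUDED = {"HTTP GET Flood"}


def category_scores(attacks):
    """Assumed scoring: unweighted mean over the attacks that are counted."""
    kept = [scores for name, scores in attacks.items() if name not in EXCLUDED]
    return mean(m for m, _ in kept), mean(b for _, b in kept)


per_category = {name: category_scores(attacks) for name, attacks in results.items()}
overall_mitigation = mean(m for m, _ in per_category.values())
overall_impact = mean(b for _, b in per_category.values())

for name, (mitigation, impact) in per_category.items():
    print(f"{name}: {mitigation:.1f}% mitigation, {impact:.1f}% baseline impact")
print(f"Overall: {overall_mitigation:.1f}% mitigation, {overall_impact:.1f}% baseline impact")
```

The single 0% result for Malformed UDP Flood accounts for most of the gap between the Volumetric score and 100%, which shows how sensitive a mean over six tests is to one missed attack type.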


Performance

There is frequently a trade-off between security effectiveness and performance. Because of this trade-off, it is important to judge a product's security effectiveness within the context of its performance and vice versa. This ensures that new security protections do not adversely impact performance and that security shortcuts are not taken to maintain or improve performance.

This section measures the performance of the system using various traffic conditions that provide metrics for real-world performance. Individual implementations will vary based on usage; however, these quantitative metrics provide a gauge as to whether a particular device is appropriate for a given environment. Network traffic was passed through the inspection engine, but no mitigation rules were in place. Performance metrics were measured to see if the device was able to perform at the advertised rate.

The net difference between the baseline (without the device) and the measured capacity of the device is recorded for each of the following tests.

HTTP Capacity with No Transaction Delays

The aim of these tests is to stress the HTTP detection engine and determine how the device copes with network loads of varying average packet size and varying connections per second. By creating genuine session-based traffic with varying session lengths, the device is forced to track valid TCP sessions, thus ensuring a higher workload than for simple packet-based background traffic. This provides a test environment that is as close to real-world conditions as possible, while ensuring absolute accuracy and repeatability.

Each transaction consists of a single HTTP GET request and there are no transaction delays; i.e., the web server responds immediately to all requests. All packets contain valid payload (a mix of binary and ASCII objects) and address data. This test provides an excellent representation of a live network (albeit one biased toward HTTP traffic) at various network loads.

| HTTP Response Size | Connections per Second (CPS) | Megabits per Second (Mbps) |
|---|---|---|
| 2880 KB Response | 800 | 20,000 |
| 768 KB Response | 3,000 | 20,000 |
| 192 KB Response | 12,000 | 20,000 |
| 44 KB Response | 50,000 | 20,000 |
| 21 KB Response | 100,000 | 20,000 |
| 10 KB Response | 200,000 | 20,000 |
| 1.7 KB Response | 582,100 | 14,553 |

Figure 5 HTTP Capacity with No Transaction Delay


Application Average Response Time - HTTP

Test traffic is passed across the infrastructure switches and through all port pairs of the device simultaneously (the latency of the basic infrastructure is known and is constant throughout the tests). The results are recorded for each HTTP response size (2880 KB, 768 KB, 192 KB, 44 KB, 21 KB, 10 KB, and 1.7 KB).

| Application Average Response Time - HTTP (at 90% Maximum Load) | Milliseconds |
|---|---|
| 2880 KB HTTP Response Size - 40 Connections per Second | 0.310 |
| 768 KB HTTP Response Size - 150 Connections per Second | 2.000 |
| 192 KB HTTP Response Size - 600 Connections per Second | 0.700 |
| 44 KB HTTP Response Size - 2500 Connections per Second | 1.100 |
| 21 KB HTTP Response Size - 5000 Connections per Second | 0.430 |
| 10 KB HTTP Response Size - 10,000 Connections per Second | 0.580 |
| 1.7 KB HTTP Response Size - 40,000 Connections per Second | 0.700 |

Figure 6 Average Application Response Time (Milliseconds)

Real-World Traffic Mixes

This test measures the performance of the device under test in a real-world environment by introducing additional protocols and real content, while still maintaining a precisely repeatable and consistent background traffic load. Different protocol mixes are utilized based on the intended location of the device (network core or perimeter) to reflect real use cases. For details about real-world traffic protocol types and percentages, see the NSS Labs Distributed Denial-of-Service Prevention Test Methodology, available at www.nsslabs.com.

| Traffic Mix | Mbps |
|---|---|
| Real-World (Financial) | 20,000 |
| Real-World (Mobile Users and Applications) | 20,000 |
| Real-World (Web Based Applications and Services) | 20,000 |
| Real-World (ISP) | 20,000 |

Figure 7 Real-World Traffic Mixes

In NSS testing, the APS 2800 performed in line with the throughput claimed by the vendor for all real-world traffic mixes.


Stability and Reliability

Long-term stability is particularly important for an inline device, where failure can produce network outages. These tests verify the stability of the device along with its ability to maintain security effectiveness while under normal load and while passing malicious traffic. Products that cannot sustain legitimate traffic (or that crash) while under hostile attack will not pass.

The device is required to remain operational and stable throughout these tests, and to block 100% of previously blocked traffic, raising an alert for each. If any non-allowed traffic passes successfully, caused either by the volume of traffic or by the device failing open for any reason, the device will fail the test.

| Test Procedure | Result |
|---|---|
| Passing Legitimate Traffic under Extended Attack | PASS |
| Protocol Fuzzing and Mutation | PASS |
| Power Fail | PASS |
| Persistence of Data | PASS |

Figure 8 Stability and Reliability Results

These tests also determine the behavior of the state engine under load. All DDoS prevention devices must choose whether to risk denying legitimate traffic or risk allowing malicious traffic once they run low on resources. A DDoS prevention device will drop new connections when resources (such as state table memory) are low, or when traffic loads exceed its capacity. In theory, this means the DUT will block legitimate traffic but maintain state on existing connections (and prevent attack leakage).
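The trade-off described above can be illustrated with a toy connection table. This is a conceptual sketch only, not a description of how the APS 2800 or any other product is implemented; the `StateTable` class, its capacity, and the example flows are all hypothetical.

```python
from typing import Set, Tuple

# A flow is identified here by (source IP, source port, destination IP, destination port).
Flow = Tuple[str, int, str, int]


class StateTable:
    """Toy connection-state table that refuses new flows when it is full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.connections: Set[Flow] = set()

    def admit(self, flow: Flow) -> bool:
        """Return True if packets for this flow should be forwarded."""
        if flow in self.connections:
            return True   # existing connection: state is maintained
        if len(self.connections) >= self.capacity:
            return False  # table full: the new connection is dropped
        self.connections.add(flow)
        return True

    def close(self, flow: Flow) -> None:
        """Release the state held for a finished connection."""
        self.connections.discard(flow)


table = StateTable(capacity=2)
first = ("198.51.100.1", 40000, "203.0.113.10", 80)
second = ("198.51.100.2", 40001, "203.0.113.10", 80)
third = ("198.51.100.3", 40002, "203.0.113.10", 80)

print(table.admit(first))   # True: room in the table
print(table.admit(second))  # True: table is now full
print(table.admit(third))   # False: new connection dropped, legitimate or not
print(table.admit(first))   # True: established connection still passes
```

The third flow is refused regardless of whether it is legitimate, which is the cost of this policy: it prevents leakage at the price of denying new legitimate connections until state is freed.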


Management and Configuration

Security devices are complicated to deploy; essential systems such as centralized management console options, log aggregation, and event correlation/management systems further complicate the purchasing decision. Understanding key comparison points will allow customers to model the overall impact on network service level agreements (SLAs), to estimate operational resource requirements to maintain and manage the systems, and to better evaluate the required skills/competencies of staff.

Enterprises should include management and configuration during their evaluations, focusing on the following at a minimum:

- General Management and Configuration: How easy is it to install and configure devices, and how easy is it to deploy multiple devices throughout a large enterprise network?
- Policy Handling: How easy is it to create, edit, and deploy complicated security policies across an enterprise?
- Alert Handling: How accurate and timely is the alerting, and how easy is it to drill down to locate critical information needed to remediate a security problem?
- Reporting: How effective is the reporting capability, and how readily can it be customized?


Total Cost of Ownership (TCO)

Implementation of security solutions can be complex, with several factors affecting the overall cost of deployment, maintenance, and upkeep. All of the following should be considered over the course of the useful life of the solution:

- Product Purchase: The cost of acquisition
- Product Maintenance: The fees paid to the vendor, including software and hardware support, maintenance, and other updates
- Installation: The time required to take the device out of the box, configure it, put it into the network, apply updates and patches, and set up desired logging and reporting
- Upkeep: The time required to apply periodic updates and patches from vendors, including hardware, software, and other updates
- Management: Day-to-day management tasks, including device configuration, policy updates, policy deployment, alert handling, and so on

For the purposes of this report, capital expenditure (capex) items are included for a single device only (the cost of acquisition and installation).

Installation Hours

This table depicts the number of hours of labor required to install each device using only local device management options. The table accurately reflects the amount of time that NSS engineers, with the help of vendor engineers, needed to install and configure the device to the point where it operated successfully in the test harness, passed legitimate traffic, and blocked and detected prohibited or malicious traffic. This closely mimics a typical enterprise deployment scenario for a single device.

The installation cost is based on the time that an experienced security engineer would require to perform the installation tasks described above. This approach allows NSS to hold constant the talent cost and measure only the difference in time required for installation. Readers should substitute their own costs to obtain accurate TCO figures.

| Product | Installation (Hours) |
|---|---|
| Arbor Networks APS 2800 v5.8.1 | |

Figure 9 Sensor Installation Time (Hours)


List Price and Total Cost of Ownership

Calculations are based on vendor-provided pricing information. Where possible, the 24/7 maintenance and support option with 24-hour replacement is utilized, since this is the option typically selected by enterprise customers. Prices are for single device management and maintenance only; costs for central management solutions (CMS) may be extra.

Arbor Networks declined to provide list pricing for the device tested.

| Product | Purchase | Maintenance/Year | Year 1 Cost | Year 2 Cost | Year 3 Cost | 3-Year TCO |
|---|---|---|---|---|---|---|
| Arbor Networks APS 2800 v5.8.1 | NA | NA | NA | NA | NA | NA |

Figure 10 List Price 3-Year TCO

- Year 1 Cost is calculated by adding installation costs (US$75 per hour fully loaded labor × installation time) + purchase price + first-year maintenance/support fees.
- Year 2 Cost consists only of maintenance/support fees.
- Year 3 Cost consists only of maintenance/support fees.

Street Price and Total Cost of Ownership

Calculations are based on vendor-provided pricing information. Where possible, the 24/7 maintenance and support option with 24-hour replacement is utilized, since this is the option typically selected by enterprise customers. Prices are for single device management and maintenance only; costs for CMS may be extra.

| Product | Purchase | Maintenance/Year | Year 1 Cost | Year 2 Cost | Year 3 Cost | 3-Year TCO |
|---|---|---|---|---|---|---|
| Arbor Networks APS 2800 v5.8.1 | $175,000 | $33,000 | $208,600 | $33,000 | $33,000 | $274,600 |

Figure 11 Street Price 3-Year TCO

- Year 1 Cost is calculated by adding installation costs (US$75 per hour fully loaded labor × installation time) + purchase price + first-year maintenance/support fees.
- Year 2 Cost consists only of maintenance/support fees.
- Year 3 Cost consists only of maintenance/support fees.
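The yearly cost rules above can be checked against the street-price figures with a short calculation. This is a sketch for readers who want to substitute their own costs: the purchase price, maintenance fee, labor rate, and $600 installation labor cost are taken from this report, while the installation time in hours is derived here from the labor cost and rate rather than quoted from the report. The function and constant names are illustrative.

```python
HOURLY_RATE_USD = 75                # fully loaded labor rate stated in the report
PURCHASE_USD = 175_000              # street price (Figure 11)
MAINTENANCE_PER_YEAR_USD = 33_000   # annual maintenance/support (Figure 11)
INSTALLATION_LABOR_USD = 600        # installation labor cost (detailed scorecard)

# Derived, not quoted from the report: hours implied by the labor cost and rate.
installation_hours = INSTALLATION_LABOR_USD / HOURLY_RATE_USD


def three_year_tco(purchase, maintenance_per_year, install_hours,
                   hourly_rate=HOURLY_RATE_USD):
    """Return (year 1, year 2, year 3, total) following the rules listed above."""
    year1 = hourly_rate * install_hours + purchase + maintenance_per_year
    year2 = maintenance_per_year
    year3 = maintenance_per_year
    return year1, year2, year3, year1 + year2 + year3


year1, year2, year3, total = three_year_tco(
    PURCHASE_USD, MAINTENANCE_PER_YEAR_USD, installation_hours
)
print(f"Year 1: ${year1:,.0f}")        # Year 1: $208,600
print(f"Year 2: ${year2:,.0f}")        # Year 2: $33,000
print(f"Year 3: ${year3:,.0f}")        # Year 3: $33,000
print(f"3-Year TCO: ${total:,.0f}")    # 3-Year TCO: $274,600
```

Passing a different `hourly_rate` or `install_hours` is the simplest way to follow the report's advice that readers substitute their own labor costs.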

For additional TCO analysis, including for the CMS, refer to the TCO Comparative Report.


Detailed Product Scorecard

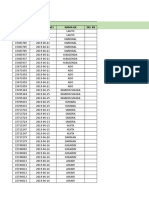

The following chart depicts the status of each test with quantitative results where applicable.

Security Effectiveness

| Category | Description | Mitigation | Baseline Impact |
|---|---|---|---|
| Volumetric | ICMP Malformed Flood (Type) | 100% | 0% |
| Volumetric | ICMP Malformed Flood (Code) | 100% | 0% |
| Volumetric | ICMP Type 0 Flood (Ping Flood) | 100% | 0% |
| Volumetric | Malformed Raw IP Packet Flood | 95% | 0% |
| Volumetric | Malformed UDP Flood | 0% | 0% |
| Volumetric | UDP Flood | 100% | 2% |
| Protocol | ACK Flood | 60% | 0% |
| Protocol | RST Flood | 100% | 0% |
| Protocol | Udp-flood-frag.sh | 100% | 0% |
| Protocol | SYN Flood | 100% | 0% |
| Application | HTTP GET Flood | 100% | 0% |
| Application | DDoS 10G RUDY One Armed | 100% | 0% |
| Application | L.O.I.C. variant 2 (slow l.o.i.c) | 100% | 0% |
| Application | NTP Reflection Attack | 100% | 0% |
| Application | DDoS 10G DNS Reflect Attack | 100% | 5% |
| Application | SIP Invite Flood | 100% | 0% |

Performance

| HTTP Capacity with No Transaction Delays | Connections per Second |
|---|---|
| 2880 KB Response | 800 |
| 768 KB Response | 3,000 |
| 192 KB Response | 12,000 |
| 44 KB Response | 50,000 |
| 21 KB Response | 100,000 |
| 10 KB Response | 200,000 |
| 1.7 KB Response | 582,100 |

| Average Response Time (90%) | Milliseconds |
|---|---|
| 2880 KB HTTP Response Size - 40 Connections per Second | 0.310 |
| 768 KB HTTP Response Size - 150 Connections per Second | 2.000 |
| 192 KB HTTP Response Size - 600 Connections per Second | 0.700 |
| 44 KB HTTP Response Size - 2500 Connections per Second | 1.100 |
| 21 KB HTTP Response Size - 5000 Connections per Second | 0.430 |
| 10 KB HTTP Response Size - 10,000 Connections per Second | 0.580 |
| 1.7 KB HTTP Response Size - 40,000 Connections per Second | 0.700 |

| Real-World Traffic | Mbps |
|---|---|
| Real-World Protocol Mix (Data Center Financial) | 20,000 |
| Real-World Protocol Mix (Data Center Mobile Users and Applications) | 20,000 |
| Real-World Protocol Mix (Data Center Web Based Applications And Services) | 20,000 |
| Real-World Protocol Mix (Data Center Internet Service Provider (ISP) Mix) | 20,000 |

Stability and Reliability

| Description | Result |
|---|---|
| Passing Legitimate Traffic Under Extended Attack | PASS |
| Protocol Fuzzing and Mutation | PASS |
| Power Fail | PASS |
| Persistence of Data | PASS |

Total Cost of Ownership (List Price)

| Category | Description | Result |
|---|---|---|
| Ease of Use | Initial Setup (Hours) | |
| Ease of Use | Time Required for Upkeep (Hours per Year) | See Comparative |
| Ease of Use | Time Required to Tune (Hours per Year) | See Comparative |
| Expected Costs | Initial Purchase (hardware as tested) | NA |
| Expected Costs | Installation Labor Cost (@$75/hr) | NA |
| Expected Costs | Annual Cost of Maintenance & Support (hardware/software) | NA |
| Expected Costs | Annual Cost of Updates (IPS/AV/etc.) | NA |
| Expected Costs | Initial Purchase (enterprise management system) | See Comparative |
| Expected Costs | Annual Cost of Maintenance & Support (enterprise management system) | See Comparative |
| Total Cost of Ownership | Year 1 | NA |
| Total Cost of Ownership | Year 2 | NA |
| Total Cost of Ownership | Year 3 | NA |
| Total Cost of Ownership | 3 Year Total Cost of Ownership | NA |

Total Cost of Ownership (Street Price)

| Category | Description | Result |
|---|---|---|
| Ease of Use | Initial Setup (Hours) | |
| Ease of Use | Time Required for Upkeep (Hours per Year) | See Comparative |
| Ease of Use | Time Required to Tune (Hours per Year) | See Comparative |
| Expected Costs | Initial Purchase (hardware as tested) | $175,000 |
| Expected Costs | Installation Labor Cost (@$75/hr) | $600 |
| Expected Costs | Annual Cost of Maintenance & Support (hardware/software) | $33,000 |
| Expected Costs | Annual Cost of Updates (IPS/AV/etc.) | $0 |
| Expected Costs | Initial Purchase (enterprise management system) | See Comparative |
| Expected Costs | Annual Cost of Maintenance & Support (enterprise management system) | See Comparative |
| Total Cost of Ownership | Year 1 | $208,600 |
| Total Cost of Ownership | Year 2 | $33,000 |
| Total Cost of Ownership | Year 3 | $33,000 |
| Total Cost of Ownership | 3 Year Total Cost of Ownership | $274,600 |

Figure 12 Detailed Scorecard


Test Methodology

Distributed Denial-of-Service (DDoS) Prevention Test Methodology v2.0

A copy of the test methodology is available on the NSS Labs website at www.nsslabs.com.

Contact Information

NSS Labs, Inc.

206 Wild Basin Road

Building A, Suite 200

Austin, TX 78746 USA

info@nsslabs.com

www.nsslabs.com

This and other related documents are available at: www.nsslabs.com. To receive a licensed copy or report misuse, please contact NSS Labs.

© 2016 NSS Labs, Inc. All rights reserved. No part of this publication may be reproduced, copied/scanned, stored on a retrieval system, e-mailed or otherwise disseminated or transmitted without the express written consent of NSS Labs, Inc. ("us" or "we").

Please read the disclaimer in this box because it contains important information that binds you. If you do not agree to these conditions, you should not read the rest of this report but should instead return the report immediately to us. "You" or "your" means the person who accesses this report and any entity on whose behalf he/she has obtained this report.

1. The information in this report is subject to change by us without notice, and we disclaim any obligation to update it.

2. The information in this report is believed by us to be accurate and reliable at the time of publication, but is not guaranteed. All use of and reliance on this report are at your sole risk. We are not liable or responsible for any damages, losses, or expenses of any nature whatsoever arising from any error or omission in this report.

3. NO WARRANTIES, EXPRESS OR IMPLIED ARE GIVEN BY US. ALL IMPLIED WARRANTIES, INCLUDING IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, AND NON-INFRINGEMENT, ARE HEREBY DISCLAIMED AND EXCLUDED BY US. IN NO EVENT SHALL WE BE LIABLE FOR ANY DIRECT, CONSEQUENTIAL, INCIDENTAL, PUNITIVE, EXEMPLARY, OR INDIRECT DAMAGES, OR FOR ANY LOSS OF PROFIT, REVENUE, DATA, COMPUTER PROGRAMS, OR OTHER ASSETS, EVEN IF ADVISED OF THE POSSIBILITY THEREOF.

4. This report does not constitute an endorsement, recommendation, or guarantee of any of the products (hardware or software) tested or the hardware and/or software used in testing the products. The testing does not guarantee that there are no errors or defects in the products or that the products will meet your expectations, requirements, needs, or specifications, or that they will operate without interruption.

5. This report does not imply any endorsement, sponsorship, affiliation, or verification by or with any organizations mentioned in this report.

6. All trademarks, service marks, and trade names used in this report are the trademarks, service marks, and trade names of their respective owners.


- Contoh Form Excel 34Document67 paginiContoh Form Excel 34Mas MahardikaÎncă nu există evaluări

- Financial Planning and Wealth ManagementDocument58 paginiFinancial Planning and Wealth ManagementsohanlÎncă nu există evaluări

- Daftar RPK CilacapDocument9 paginiDaftar RPK CilacapDhudut Sangpemburu IwakÎncă nu există evaluări

- Data BPJS AsmahDocument78 paginiData BPJS AsmahjohncuyÎncă nu există evaluări

- Rekap Hasil Verifikasi Data Jamda - Predator.fd.1Document1.006 paginiRekap Hasil Verifikasi Data Jamda - Predator.fd.1denjow87Încă nu există evaluări

- Rekap Data Berhak Lunas 110522 (Updated) - 1Document48 paginiRekap Data Berhak Lunas 110522 (Updated) - 1Suhendar JazzÎncă nu există evaluări

- Oarc 2015 Sponsorship Package (Print)Document7 paginiOarc 2015 Sponsorship Package (Print)api-281477296100% (1)

- Bhagak ShawaDocument292 paginiBhagak ShawaBenny Edysaputra SijabatÎncă nu există evaluări

- A01 Juni 2019Document1.006 paginiA01 Juni 2019Sri MulyaniÎncă nu există evaluări

- Askes BpjsDocument88 paginiAskes Bpjsrian sasbarÎncă nu există evaluări

- Fixpraya Sudirman 12Document118 paginiFixpraya Sudirman 12Datu MarjanÎncă nu există evaluări

- PENCATATAN PELAKSANAAN TINGKAT PUSKESMAS/FASYADocument61 paginiPENCATATAN PELAKSANAAN TINGKAT PUSKESMAS/FASYAPutraÎncă nu există evaluări

- Swab 23 April 2021 Revisi Revisi RevisiDocument699 paginiSwab 23 April 2021 Revisi Revisi RevisiRonii Ariia Black'whiteÎncă nu există evaluări

- TMBillPayment Retailer List (1) (1) SDocument905 paginiTMBillPayment Retailer List (1) (1) SAnjali KrishÎncă nu există evaluări

- Book 1Document272 paginiBook 1Jason JonathanÎncă nu există evaluări

- Target Sasaran Pelayanan Publik Untuk Pelaksanaan Vaksinasi Covid-19 Di BantenDocument23 paginiTarget Sasaran Pelayanan Publik Untuk Pelaksanaan Vaksinasi Covid-19 Di BantenYusuf CikalÎncă nu există evaluări

- Contoh Import Data DemoDocument672 paginiContoh Import Data DemoPUSKESMAS PEKKAEÎncă nu există evaluări

- Jakarta 23 April 5Document7 paginiJakarta 23 April 5dimasÎncă nu există evaluări

- Cetak KTP 2021Document2.370 paginiCetak KTP 2021Andi Sri junitasariÎncă nu există evaluări

- Kpi DirectDocument34 paginiKpi DirectLalaÎncă nu există evaluări

- Vaksin 1 - Gel 2Document4 paginiVaksin 1 - Gel 2Hendraloka Budi PÎncă nu există evaluări

- SDMK RSUD DATOE BINANGKANG 2018 DesDocument596 paginiSDMK RSUD DATOE BINANGKANG 2018 DesArlinto SimbalaÎncă nu există evaluări

- Raw Data Survey RecordsDocument572 paginiRaw Data Survey Recordspuskesmas wolasiÎncă nu există evaluări

- Data PDMDocument336 paginiData PDMMiranda NababanÎncă nu există evaluări

- SCP Remote Monitoring of Assets Reference Benchmark: Document Version 1.0 December 2019Document13 paginiSCP Remote Monitoring of Assets Reference Benchmark: Document Version 1.0 December 2019le nguyenÎncă nu există evaluări

- Webapp FirewallDocument2 paginiWebapp Firewallakanksha0811Încă nu există evaluări

- 15 July 2012Document3 pagini15 July 2012akanksha0811Încă nu există evaluări

- 03 - Quality Center Functionality and FeaturesDocument50 pagini03 - Quality Center Functionality and Featuresakanksha0811Încă nu există evaluări

- Solaris QuicksheetDocument2 paginiSolaris Quicksheetxllent1Încă nu există evaluări

- Session1 Telecom Security A Primer v6 SonyReviseDocument96 paginiSession1 Telecom Security A Primer v6 SonyReviseakanksha0811Încă nu există evaluări

- Course Description InteriorDocument10 paginiCourse Description Interiorakanksha0811Încă nu există evaluări

- Visual Reasoning SeriesDocument17 paginiVisual Reasoning SeriesArpit Singh100% (4)

- Microsoft Visio 2003Document10 paginiMicrosoft Visio 2003tapera_mangeziÎncă nu există evaluări

- CS 2007Document16 paginiCS 2007kammaraÎncă nu există evaluări

- CM Dashboard ADocument1 paginăCM Dashboard Aakanksha0811Încă nu există evaluări

- Network-Security-Essentials Study-Guide (En US) v12-5 PDFDocument312 paginiNetwork-Security-Essentials Study-Guide (En US) v12-5 PDFEmiliano MedinaÎncă nu există evaluări

- NetworkInitiated DocumentDocument61 paginiNetworkInitiated DocumentLogesh Kumar AnandhanÎncă nu există evaluări

- 4G TECHNOLOGY APPLICATIONS AND TECHNOLOGIESDocument6 pagini4G TECHNOLOGY APPLICATIONS AND TECHNOLOGIESAchmad YukrisnaÎncă nu există evaluări

- Wireless USB Adapter User's ManualDocument25 paginiWireless USB Adapter User's ManualGama LielÎncă nu există evaluări

- Provision ISR API ShortPolling 20200507Document187 paginiProvision ISR API ShortPolling 20200507kalliopeargentinaÎncă nu există evaluări

- Modulador ISDB-T 6Document3 paginiModulador ISDB-T 6Marlon RiojasÎncă nu există evaluări

- IoT Module1Document28 paginiIoT Module1raghuaceÎncă nu există evaluări

- Cisco ASA DataSheetDocument7 paginiCisco ASA DataSheetPhongLGÎncă nu există evaluări

- Balanceo PCC Con FailoverDocument3 paginiBalanceo PCC Con FailoverAugustus ContrerasÎncă nu există evaluări

- LLD - Draft-V1 5Document36 paginiLLD - Draft-V1 5America CorreaÎncă nu există evaluări

- Assignment #1Document5 paginiAssignment #1Abreham GetachewÎncă nu există evaluări

- CUSP Call ProcessingDocument9 paginiCUSP Call Processingsumit rustagiÎncă nu există evaluări

- Direct Sequence Spread Spectrum With Barker Code and QPSK: Saed ThuneibatDocument6 paginiDirect Sequence Spread Spectrum With Barker Code and QPSK: Saed ThuneibatAdit SoodÎncă nu există evaluări

- Application Note: Si-P3 & Si-P3/V Conformance To ProfidriveDocument12 paginiApplication Note: Si-P3 & Si-P3/V Conformance To Profidrivezxc112Încă nu există evaluări

- Fundamentals of IoTDocument24 paginiFundamentals of IoTRohit SaindaneÎncă nu există evaluări

- IP Networking Guide: KX-TDE100 KX-TDE200Document62 paginiIP Networking Guide: KX-TDE100 KX-TDE200snji kariÎncă nu există evaluări

- AdhocDocument17 paginiAdhocSripriya GunaÎncă nu există evaluări

- Foxboro™ SCADA Remote Terminal Viewer (RTV) - 41s2m13Document13 paginiFoxboro™ SCADA Remote Terminal Viewer (RTV) - 41s2m13Muhd Nu'man HÎncă nu există evaluări

- Darlington Choto Industrial Attachment ReportDocument26 paginiDarlington Choto Industrial Attachment ReportedwardÎncă nu există evaluări

- Wk2 CPS4222 Homework#1Document3 paginiWk2 CPS4222 Homework#1Jawan GainesÎncă nu există evaluări

- Driver Manual: Prolinx GatewayDocument88 paginiDriver Manual: Prolinx GatewayVicente SantillanÎncă nu există evaluări

- Infineon ErrataSheet XMC4700 XMC4800 AA ES v01 01 enDocument51 paginiInfineon ErrataSheet XMC4700 XMC4800 AA ES v01 01 enshhmmmÎncă nu există evaluări

- Get to Know Me: Name, Work, Address, Age FormDocument39 paginiGet to Know Me: Name, Work, Address, Age FormJohnpatrick DejesusÎncă nu există evaluări

- IPV6 (Next Generation Internet Protocal)Document40 paginiIPV6 (Next Generation Internet Protocal)Om Prakash SinghÎncă nu există evaluări

- UNIT1-Data Communication and Computer NetworksDocument43 paginiUNIT1-Data Communication and Computer NetworksPatel DhruvalÎncă nu există evaluări

- File Sharing UnityDocument66 paginiFile Sharing UnityArun Kumar R.Încă nu există evaluări

- KONNECT Kong PresentationDocument18 paginiKONNECT Kong PresentationJoão EsperancinhaÎncă nu există evaluări

- DDWRT OpenVPN Setup GuideDocument33 paginiDDWRT OpenVPN Setup Guidepedrama2002Încă nu există evaluări

- MulticastDocument97 paginiMulticastAndres ChavessÎncă nu există evaluări

- OneWireless Field Network Dictionary OW-CDX020Document54 paginiOneWireless Field Network Dictionary OW-CDX020Mahesh DivakarÎncă nu există evaluări