Documente Academic

Documente Profesional

Documente Cultură

Reability and Validity

Încărcat de

ria harianiDrepturi de autor

Formate disponibile

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentDrepturi de autor:

Formate disponibile

Reability and Validity

Încărcat de

ria harianiDrepturi de autor:

Formate disponibile

Shweta Bajpai et al.

Goodness of Measurement

REVIEW ARTICLE

GOODNESS OF MEASUREMENT: RELIABILITY AND VALIDITY

Shweta Bajpai1, Ram Bajpai2

Department of Psychology, National University of Study and Research in Law, Ranchi, Jharkhand, India

2 Department of Community Medicine, Army College of Medical Sciences, New Delhi, India

Correspondence to: Ram Bajpai (rambajpai@hotmail.com)

DOI: 10.5455/ijmsph.2013.191120133

Received Date: 07.10.2013

Accepted Date: 19.01.2014

ABSTRACT

The two most important and fundamental characteristics of any measurement procedure are reliability and validity and lie at the heart of

competent and effective study. However, these phenomena have often been somewhat misunderstood or under emphasized. How

productive can be any research, if the instrument used does not actually measure what it purports to? How justifiable the research that is

based on an inconsistent instrument? What constitutes a valid instrument? What are the implications of proper and improper testing?

This paper attempts to explore these measurement related concepts as well as some of the issues pertaining thereto.

Key-Words: Item Analysis; Error; Validity; Reliability; Alpha

Introduction

Across disciplines, researchers often not only fail to report

the reliability of their measures, but also fall short of

grasping the inextricable link between scale validity and

effective research.[1,2] It is important to make sure that the

instrument we developed to measure particular concept is

indeed accurately measuring the variable i.e., we are

actually measuring the concept that we supposed to

measure. The scales developed can often be imperfect, and

errors are prone to occur in the measurement of scale

variables. The use of better instrument will ensure more

accuracy in results, which will enhance the scientific

quality of research. Hence, we need to assess the

goodness of the measures developed and reasonably

sure that the instrument measures the variables they are

supposed to, and measures them accurately.[3] First, an

item analysis of the responses to the questions tapping the

variable is carried out and then the reliability and the

validity of the measures are established.

True Score Model

Multi-item scales should be evaluated for accuracy,

reliability and applicability. Measurement accuracy refers

to capturing the responses as the respondent intended

them to be understood. Errors can result from either

systematic error, which affects the observed score in the

same way on every measurement, or random error, which

varies with every measurement.[4] This model provides a

framework for understanding the accuracy of

measurement. According to this model;

= + +

(1)

Where, XO = the observed score or measurement; XT = the

112

true score of the characteristic; XS = systematic error; XR =

random error

If the random error in equation (1) is zero then instrument

is termed as reliable and if both systematic error as well as

random error are zero then instrument considered as valid.

The total measurement error is the sum of the systematic

error, which affects the model in a constant fashion, and

the random error, which affects the model randomly.

Systematic errors occur due to stable factors which

influence the observed score in the same way on every

occasion that a measurement is made. However, random

error occurs due to transient factors which influence the

observed score differently each time.[4]

Item Analysis

Item analysis is carried out to see if the items in the

instrument belong there or not. Each item is examined for

its ability to discriminate between those subjects whose

total scores are high and those with low scores. In item

analysis, the means between the high-score group and the

low-score group are tested to detect significant differences

through the t-values. The item with a high t-value are then

included in the instrument.[3] Thereafter, tests for the

reliability of the instrument are carried out and the validity

of the measure is established. The various forms of

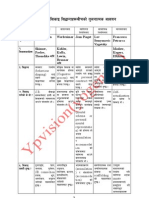

reliability and validity are depicted in Figure 1.

Validity

Validity is a test of how well an instrument that is

developed measures the particular concept it is intended to

measure as shown in Figure 2. In other words, validity

International Journal of Medical Science and Public Health | 2014 | Vol 3 | Issue 2

Shweta Bajpai et al. Goodness of Measurement

concerned with weather we measure the right concept or

not.[3] For example, when we ask a set of questions with the

hope that we are tapping the concept, how can we be

reasonably certain that we are indeed measuring the

concept we set out to measure and something else? This

can be determined by applying certain validity tests.

Several types of validity test are used to test the goodness

of measures and writers use different terms to denote

them.

and outline the domain of interest; (ii) gather resident

domain experts; (iii) develop consistent matching

methodology; and (iv) analyze results from the matching

task.[5]

Face Validity: it is considered as a basic and minimum

index of content validity. Face validity indicates the items

that are intended to measure a concept, do, on the face of

it, look like they measure the concept. In brief, it looks as if

it is indeed measuring what it is designed to measure.

Criterion-Related Validity

It is established when the measure differentiates

individuals on a criterion it is expected to predict. This can

be done by establishing concurrent validity or predictive

validity.

Figure-1: Forms of reliability and validity

Figure-2: Validity and reliability of instrument

Content Validity

It ensures that the measure includes an adequate and

representative set of items that tap the concept. The more

the scale items represent the domain or universe of the

concept being measured, the greater the content validity. It

is a function of how well the dimensions and elements of a

concept have been delineated. The development of content

valid of an instrument is typically achieved by a rational

analysis of the instrument by raters (ideally 3 to 5) familiar

with the construct of interest. Specifically, raters will

review all of the items for readability, clarity and

comprehensiveness and come to some level of agreement

as to which items should be included in the final

instrument. In short, a panel of judges can attest to the

content validity of the instrument.

Crocker and Algina suggest employing the following four

steps to effectively evaluate content validity: (i) identify

113

Concurrent Validity: It is established when the scale

discriminates individuals who are known to be different

i.e., they should score differently on the instrument. In

other words, the degree to which an instrument can

distinguish individuals who differ on some criterion

measured or observed at the same time. For example,

based on current observed behaviours, who should be

released from an institution?

Predictive Validity: It indicates the ability of the measuring

instrument to differentiate among individuals with

reference to a future criterion. In other words, how

adequately will an instrument be in differentiating

between the performance and behaviour of individuals on

some future criterion? For example, how well do GRE

scores predict future grades?

Construct Validity

Construct validity testifies to how well the results obtained

from the use of the measure fit the theories around which

the test is designed. This is assessed through convergent

and discriminate validity. For example, if one were to

develop an instrument to measure intelligence that does

indeed measure IQ, than this test is valid. Construct

validity is very much an ongoing process as one refines a

theory, if necessary, in order to make predictions about

test scores in various settings and situations.

Crocker and Algina (1986) provide a series of steps to

follow when pursuing a construct validation study [5]: (i)

Generate hypotheses of how the construct should relate to

both other constructs of interest and relevant group

differences; (ii) Choose a measure that adequately

represents the construct of interest; (iii) Pursue empirical

International Journal of Medical Science and Public Health | 2014 | Vol 3 | Issue 2

Shweta Bajpai et al. Goodness of Measurement

study to examine the relationships hypothesized; and (iv)

Analyze gathered data to check hypothesized relationships

and to assess whether or not alternative hypotheses could

explain the relationships found between the variables.

Convergent Validity: It is established when the scores

obtained with two different instruments measuring the

same concept are highly correlated.

Discriminant Validity: It is established when, based on

theory, two variables are predicted to be uncorrelated, and

the scores obtained by measuring them are indeed

empirically found to be so.

There are following methods could be used to check

construct validity of the instrument: (1) Correlational

analysis; (2) Factor analysis; and (3) The multitrait,

multimethod matrix of correlations

Finally, it is important to note that validity is a necessary

but not sufficient condition of the test of goodness of a

measure. A measure should not only be valid but also

reliable.

Reliability

If a measurement device or procedure consistently assigns

the same score to individuals or objects with equal values,

the instrument is considered reliable. In other words, the

reliability of a measure indicates the extent to which it is

without bias and hence insures consistent measurement

cross time and across the various items in the instruments

as given in Figure 2.

It is an indication of the stability (or repeatability) and

consistency (or homogeneity) with which the instrument

measures the concept and helps to assess the goodness

of a measure.[3,6]

This property is not a stagnant function of the test. Rather,

reliability estimates change with different populations (i.e.

population samples) and as a function of the error

involved. More important to understand is that reliability

estimates are a function of the test scores yielded from an

instrument, not the test itself.[2] Low internal consistency

estimates are often the result of poorly written items or an

excessively broad content area of measure.[5] However,

other factors can equally reduce the reliability coefficient,

namely, the homogeneity of the testing sample, imposed

time limits in the testing situation, item difficulty and the

length of the testing instrument.[5-9]

114

Stability

It is defined as the ability of a measure to remain the same

over time despite uncontrolled testing conditions or

respondent themselves. Two methods to test stability are

test-retest reliability and parallel-form reliability.

Test-Retest Reliability: The reliability coefficient obtained

by repetition of the same measure on a second time is

called the test-retest reliability. When a questionnaire

containing some items that are supposed to measure a

concept is administered to a set of respondents now, and

after some time ranging from few days to weeks or

months, again administered on the same respondents. The

correlation coefficient calculated between two set of data

and if it found to be high, better the test-retest reliability.

Parallel-Form Reliability: when responses on two

comparable sets of measures tapping the same construct

are highly correlated, known as parallel-form reliability. It

similar to test-retest method except order or sequence of

questions or sometimes wording of questions has changed

at second time. If two such comparable forms are highly

correlated (say more than 0.7), we may be fairly certain

that the measures are reasonably reliable.

Internal Consistency

The internal consistency of measures is indicative of the

homogeneity of the items in the measure that tap the

construct. In other words, the items should hang together

as a set, and be capable of independently measuring the

same concept. Consistency can be examined through the

inter-item consistency and split-half reliability.

Inter-Item Consistency: It is a test of consistency of

respondents answers to all concepts, they will be

correlated with one another.

a. Cronbach's alpha: In statistics, Cronbach's alpha () is

a coefficient of internal consistency and widely used in

social sciences, business, nursing, and other

disciplines. Cronbachs alpha is actually an average of

all the possible split-half reliability estimates of an

instrument and it is commonly used as an estimate of

the reliability of a psychometric test for a sample of

examinees.[10] It was first named alpha by Lee

Cronbach in 1951, as he had intended to continue with

further coefficients.[10] The measure can be viewed as

an extension of the KuderRichardson Formula 20

(KR-20), which is an equivalent measure for

dichotomous items. Cronbach's Alpha is not robust

against missing data. Suppose that we measure a

quantity which is a sum of K components (K-items or

International Journal of Medical Science and Public Health | 2014 | Vol 3 | Issue 2

Shweta Bajpai et al. Goodness of Measurement

testlets): X = Y1 + Y2 +.......+ Yk. Cronbach's is defined

as

=

(2)

Where, is variance of observed total test scores and

is variance of component i for the current sample

b.

of persons. The theoretical value of alpha varies from

zero to 1, since it is the ratio of two variances.

However, depending on the estimation procedure

used, estimates of alpha can take on any value less

than or equal to 1, including negative values, although

only positive values make sense. Higher values of

alpha are more desirable. Some professionals, as a rule

of thumb, require a reliability of 0.70 or higher

(obtained on a substantial sample) before they will use

an instrument.[4] As a result, alpha is most

appropriately used when the items measure different

substantive areas within a single construct.[11-13]

KuderRichardson Formula 20 (KR-20): KR-20, first

published in 1937 is a measure of internal consistency

reliability for measures with dichotomous choices and

it is analogous to Cronbach's .[14,15] A high KR-20

coefficient (e.g.,>0.90) indicates a homogeneous test.

Values can range from 0.00 to 1.00 (sometimes

expressed as 0 to 100), with high values indicating that

the examination is likely to correlate with alternate

forms (a desirable characteristic). The KR-20 may be

affected by difficulty of the test, the spread in scores

and the length of the examination. Since Cronbach's

was published in 1951, there has been no known

advantage to KR-20 over Cronbach alpha. Specifically,

coefficient alpha is typically used during scale

development with items that have several response

options (i.e., 1 = strongly disagree to 5 = strongly

agree) whereas KR-20 is used to estimate reliability

for dichotomous (i.e., Yes/No; True/False) response

scales.

Conclusion

In health care and social science research, many of the

variables of interest and outcomes that are important

derived from abstract concepts are known as theoretical

constructs. Therefore, using tests or instruments that are

valid and reliable to measure such constructs is a crucial

component of research quality. Reliability and validity of

instrumentation should be important considerations for

researchers in their investigations. Well-trained and

motivated observers or a well-developed survey instrument

will better provide quality data with which to answer a

question or solve a problem. In conclusion, remember that

your ability to answer your research question is only as good

as the instruments you develop or your data collection

procedure.

References

1.

2.

3.

4.

5.

6.

7.

8.

9.

10.

11.

12.

13.

Split-Half Reliability: It reflects the correlations between

two halves of an instrument. The estimates will vary

depending on how the items in measure are split into two

halves. Split-half reliabilities may be higher than

Cronbach's alpha only in the circumstances of there being

more than one underlying responses dimension tapped by

measure and when certain other conditions are met as

well.

115

14.

15.

Henson RK. Understanding Internal Consistency Reliability Estimates:

A Conceptual Primer on Coefficient Alpha. Meas Eval Coun Dev.

2001;34:177-188.

Thompson B. Understanding reliability and coefficient alpha, really. In:

Thompson B, editor. Score Reliability: Contemporary Thinking on

Reliability Issues. Thousand Oaks, CA: Sage; 2003. p. 5.

Shekharan U, Bougie R. Research Methods for Business: A Skill Building

Approach. 5th ed. New Delhi: John Wiley. 2010

Malhotra NK. Marketing Research: An Applied Orientation. 4th ed. New

Jersey: Pearson Education, Inc. 2004.

Crocker L, Algina J. Introduction to Classical and Modern Test Theory.

Philadelphia: Harcourt Brace Jovanovich College Publishers. 1986.

Zikmund WG. Business Research Methods. 7th ed. Ohio: Thompson

South-Western. 2003.

Mehrens WA, Lehman IJ. Measurement and Evaluation in Education

and Psychology. 4th ed. Orlando, Florida: Holt, Rinehart and Winston

Inc. 1991.

Devellis RF. Scale Development: Theory and Applications, Applied

Social Research Methods Series. Newbury Park: Sage. 1991.

Gregory RJ. Psychological Testing: History, Principles and Applications.

Boston: Allyn and Bacon. 1992.

Cronbach LJ. Coefficient alpha and the internal structure of tests.

Psychometrika. 1951;16:297334.

Tavakol M and Dennick R. Making sense of Cronbachs alpha. Int Jour

Med Edu. 2011;2:53-55.

Zinbarg R, Revelle W, Yovel I, Li W. Cronbachs, Revelles, and

McDonalds: Their relations with each other and two alternative

conceptualizations of reliability. Psychometrika. 2005;70:123133.

McDonald RP. Test theory: A unified treatment. Mahwah, NJ:

Lawrence Erlbaum Associates Inc; 1999.

Kuder GF, Richardson MW. The theory of the estimation of test

reliability. Psychometrika. 1937;2:151160.

Cortina JM. What Is Coefficient Alpha - an Examination of Theory and

Applications. Journal of Applied Psychology. 1993;78:98104.

Cite this article as: Bajpai SR, Bajpai RC. Goodness of Measurement:

Reliability and Validity. Int J Med Sci Public Health 2014;3:112-115.

Source of Support: Nil

Conflict of interest: None declared

International Journal of Medical Science and Public Health | 2014 | Vol 3 | Issue 2

S-ar putea să vă placă și

- Sholawat LirikDocument3 paginiSholawat LirikSri Yanti100% (1)

- IOAA SyllabusDocument6 paginiIOAA SyllabusAbdul Rasyid ZakariaÎncă nu există evaluări

- No Loker LaborDocument1 paginăNo Loker Laborria harianiÎncă nu există evaluări

- ConclusionsDocument6 paginiConclusionsria harianiÎncă nu există evaluări

- ConclusionsDocument6 paginiConclusionsria harianiÎncă nu există evaluări

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (119)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Schools Division Office I PangasinanDocument2 paginiSchools Division Office I PangasinanMark Gilbert TandocÎncă nu există evaluări

- Different Learning TheoriesDocument3 paginiDifferent Learning Theorieschapagain_yÎncă nu există evaluări

- Fiona Lesson Plan 2Document3 paginiFiona Lesson Plan 2api-250259068Încă nu există evaluări

- Characteristic of Constructivist Teaching RulesDocument3 paginiCharacteristic of Constructivist Teaching RulesNurul Ain100% (1)

- Tangub City National High School Holistic Health LessonDocument2 paginiTangub City National High School Holistic Health LessonJerameel Mangubat ArabejoÎncă nu există evaluări

- PortfolioDocument22 paginiPortfolioAntonio de Constantinople50% (2)

- Basic Psychological ProcessesDocument26 paginiBasic Psychological ProcessesKRITIKA RASTOGI PSYCHOLOGY NCRÎncă nu există evaluări

- MG Education Program Report 2017-2019Document6 paginiMG Education Program Report 2017-2019Geoffrey Tolentino-UnidaÎncă nu există evaluări

- Resume MasterDocument2 paginiResume Masterapi-416531490Încă nu există evaluări

- DLL Senior HighDocument3 paginiDLL Senior HighNeil Virgel IndingÎncă nu există evaluări

- Creativity Techniques: 1 D.Hariyani/SKIT/MEDocument7 paginiCreativity Techniques: 1 D.Hariyani/SKIT/MEharsh vermaÎncă nu există evaluări

- Intelligence TheoriesDocument52 paginiIntelligence TheoriesYashaswini P SÎncă nu există evaluări

- Ulster University Teaching and Assessment MethodsDocument2 paginiUlster University Teaching and Assessment MethodsOzden IsbilirÎncă nu există evaluări

- Learning Styles Assessment: Agree Neutral DisagreeDocument2 paginiLearning Styles Assessment: Agree Neutral DisagreeA BHARGAVIÎncă nu există evaluări

- Course Curriculum DesignDocument9 paginiCourse Curriculum DesignZulaiha AmirulÎncă nu există evaluări

- Accelerated Learning - A Revolution in Teaching MethodDocument6 paginiAccelerated Learning - A Revolution in Teaching MethodMichael OluseyiÎncă nu există evaluări

- Three Domains of Educational TechnologyDocument7 paginiThree Domains of Educational Technologysydney andersonÎncă nu există evaluări

- Module 1 - Andragogy of LearningDocument2 paginiModule 1 - Andragogy of LearningGRACE WENDIE JOY GRIÑOÎncă nu există evaluări

- Popbl Guideline Book For Teachers2Document20 paginiPopbl Guideline Book For Teachers2ardrichÎncă nu există evaluări

- Test Measurement Assessment EvaluationDocument31 paginiTest Measurement Assessment EvaluationBe Creative - كن مبدعا100% (2)

- Week Long Literacy Lesson PlanDocument4 paginiWeek Long Literacy Lesson Planapi-302758033Încă nu există evaluări

- Mackenzi Veilleux Mentor Teacher Observation Part 1 1Document9 paginiMackenzi Veilleux Mentor Teacher Observation Part 1 1api-510332603Încă nu există evaluări

- W04 Application Activity: Learning Plan: Charles AdinyiraDocument2 paginiW04 Application Activity: Learning Plan: Charles AdinyiraCharles LaceÎncă nu există evaluări

- Conceptual FrameworkDocument2 paginiConceptual Frameworkyssa_03Încă nu există evaluări

- Worksheet in LPW 012 - 21 Century Literature From The Philippines and The WorldDocument3 paginiWorksheet in LPW 012 - 21 Century Literature From The Philippines and The WorldAltheaRoma VillasÎncă nu există evaluări

- 7 Grade Grading PolicyDocument2 pagini7 Grade Grading Policyapi-413794743Încă nu există evaluări

- Task 3Document7 paginiTask 3api-338469817Încă nu există evaluări

- Debate Cie: Should Upsr Be Abolished?Document5 paginiDebate Cie: Should Upsr Be Abolished?Amaluddin AmranÎncă nu există evaluări

- Lesson 2Document4 paginiLesson 2api-340287403Încă nu există evaluări

- Reaction PaperDocument2 paginiReaction PaperWilliam Ranara100% (1)