
International Journal of Basic & Applied Sciences IJBAS-IJENS Vol:10 No:06


Estimation for Multivariate Linear Mixed Models

I Nyoman Latra¹, Susanti Linuwih², Purhadi², and Suhartono²

¹ Doctoral Candidate at the Department of Statistics, FMIPA-ITS Surabaya,

Email: i_nyoman_l@statistika.its.ac.id

² Promotor and Co-promotors at the Department of Statistics, FMIPA-ITS Surabaya.

Abstract

This paper discusses the estimation of the multivariate linear mixed model, or multivariate variance components model, with an equal number of replications. We focus on two estimation methods, namely Maximum Likelihood Estimation (MLE) and Restricted Maximum Likelihood Estimation (REMLE). The results show that estimation of the fixed-effect parameters yields unbiased estimators, whereas estimation of the random effects or variance components yields biased estimators. Moreover, assuming that both the likelihood and ln-likelihood functions satisfy certain regularity conditions, it can be proved that the estimators obtained as solutions of the likelihood equations are strongly consistent for large sample sizes, asymptotically normal, and efficient.

Index Terms -- Linear Mixed Model, Multivariate Linear Model, Maximum Likelihood, Asymptotic Normality and Efficiency, Consistency.

I. INTRODUCTION

Linear mixed models, or variance components models, have been used effectively and extensively by statisticians to analyze data when the response is univariate. Reference [12] discussed a latent variable model for mixed ordinal or discrete and continuous outcomes and applied it to birth defects data. Reference [16] showed that maximum likelihood estimation of variance components from twin data can be parameterized in the framework of linear mixed models. Specialized variance component estimation software that can handle pedigree data and user-defined covariance structures can be used to analyze multivariate data for simple and complex models with a large number of random effects.

Reference [2] showed that Linear Mixed Models (LMM) can handle data in which the observations are not independent, and can be used to model data with correlated errors. Several technical terms describe the predictor variables in linear mixed models: (i) random effects, i.e., categorical predictor variables whose observed values are not all possible values but a random sample of them (for example, the variable product has values representing only 5 of 42 possible brands); (ii) hierarchical effects, i.e., predictor variables measured at more than one level; and (iii) fixed effects, i.e., predictor variables for which all possible category values (levels) are measured.

In contrast, many papers have discussed linear models for the multivariate case. Reference [5] applied a multivariate linear mixed model to Scholastic Aptitude Test data and proposed Restricted Maximum Likelihood (REML) to estimate the parameters. Reference [6] used the multivariate linear mixed model, or multivariate variance components model, with equal replication to predict the sum of the regression mean and the random effects of the model. This prediction problem reduces to estimating the ratio of two covariance matrices; the estimators of the ratio matrix considered include James-Stein type estimators based on the Bartlett decomposition, Stein type orthogonally equivariant estimators, and Efron-Morris type estimators. Recently, Reference [10] applied linear mixed models in statistics for systems biology to handle both covariates and correlations between signals that follow non-stationary time series. They used an estimation algorithm based on Expectation-Maximization (EM) that involves dynamic programming for the segmentation step. Reference [11] discussed a joint model for multivariate mixed ordinal and continuous responses and derived its likelihood and modified Pearson residuals. The model was applied to medical data obtained from an observational study on women.

Most previous papers on multivariate linear mixed models focused on estimation methods and did not discuss the asymptotic properties of the estimators. Here we show that, assuming both the likelihood and ln-likelihood functions satisfy certain regularity conditions, the solutions of the likelihood equations are strongly consistent for large sample sizes, asymptotically normal, and efficient.

II. MULTIVARIATE LINEAR MIXED MODEL

In this section, we first discuss the univariate linear mixed model and then the multivariate linear model without replications; the multivariate linear mixed model is discussed in the next section. Linear mixed models are statistical models for continuous outcome variables in which the residuals are normally distributed but may be neither independent nor of constant variance. A linear mixed model is a parametric linear model for clustered, longitudinal, or repeated-measures data. It may include both fixed-effect parameters associated with one or more continuous or categorical covariates and random effects associated with one or more random factors. The fixed-effect parameters describe the relationships of the covariates to the dependent variable for an entire population, and the random effects are specific to clusters or subjects within a population [4], [9], [15], [17].

In matrix and vector notation, a (univariate) linear mixed population model is written as [4], [7], [9], [17]

$$ y = \underbrace{X\beta}_{\text{fixed}} + \underbrace{Z\gamma + e}_{\text{random}} \qquad (1) $$

where $y$ is an $n \times 1$ vector of observations, $X$ is an $n \times (p+1)$ matrix of known covariates, $Z$ is an $n \times m$ known matrix, $\beta$ is a $(p+1) \times 1$ vector of unknown regression coefficients, usually called the fixed effects, $\gamma$ is an $m \times 1$ vector of random effects, and $e$ is an $n \times 1$ vector of random errors. Both vectors $\gamma$ and $e$ are unobservable. The basic assumption of Eq.

109406-8484 IJBAS-IJENS December 2010 IJENS


(1) is that $\gamma \sim N(0, G)$ and $e \sim N(0, R)$, where $G$ and $R$ are variance-covariance matrices (hereafter called variance matrices). To simplify the estimation of the model parameters, the random effect is marginalized into the errors, giving $e^* = Z\gamma + e \sim N(0, \Sigma)$ with $\Sigma = R + ZGZ^T$ (assuming $\gamma$ and $e$ are independent). After this marginalization, (1) can be written as

$$ y = X\beta + e^* \quad \text{or} \quad y \sim N(X\beta, \Sigma). \qquad (2) $$

Equation (2) is called the marginal model, or sometimes the model of the population mean.

For point estimation, each variance component of the model is denoted by $\theta_q$, and the components are collected in the vector $\theta = \mathrm{vech}(\Sigma) = [\theta_1, \ldots, \theta_{n(n+1)/2}]^T$. The Gaussian mixed model therefore has the joint probability density function

$$ f(y, \beta, \theta) = \frac{1}{\sqrt{(2\pi)^n|\Sigma|}}\exp\left\{-\frac{1}{2}(y - X\beta)^T\Sigma^{-1}(y - X\beta)\right\}, \qquad (3) $$

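where $n$ is the dimension of the vector $y$ and $\mathrm{vech}(\Sigma)$ denotes the vector obtained by stacking, column by column, all lower-triangular elements of $\Sigma$. The ln-likelihood function of (3) is

$$ \ell(\beta, \theta) = \ln L(y, \beta, \theta) = -\frac{n}{2}\ln 2\pi - \frac{1}{2}\ln|\Sigma| - \frac{1}{2}(y - X\beta)^T\Sigma^{-1}(y - X\beta). \qquad (4) $$

Taking the partial derivatives of (4) with respect to $\beta$ and $\theta_q$ and setting each equal to zero gives the estimating equations

$$ \hat\beta = (X^T\Sigma^{-1}X)^{-1}X^T\Sigma^{-1}y, \qquad (5) $$

$$ y^TP\frac{\partial\Sigma}{\partial\theta_q}Py = \mathrm{tr}\left(\Sigma^{-1}\frac{\partial\Sigma}{\partial\theta_q}\right); \quad q = 1, 2, \ldots, n(n+1)/2, \qquad (6) $$

where $P = \Sigma^{-1} - \Sigma^{-1}X(X^T\Sigma^{-1}X)^{-1}X^T\Sigma^{-1}$.

Equation (5) has a closed form for given $\theta$, whereas (6) does not; therefore [4], [17] proposed three algorithms that can be used to compute the estimates of $\theta_q$, namely EM (expectation-maximization), N-R (Newton-Raphson), and Fisher scoring. Because the MLE is consistent and asymptotically normal, with asymptotic covariance matrix equal to the inverse of the Fisher information matrix, we need the components of the Fisher information matrix. In both univariate and multivariate linear mixed models, the regression coefficients and the covariance matrix components are placed in one vector $\psi = (\beta^T, \theta^T)^T$. The Fisher information matrix is then

$$ I(\psi) = \mathrm{Var}\left(\frac{\partial\ell}{\partial\psi}\right) = -E\left[\frac{\partial^2\ell}{\partial\psi\,\partial\psi^T}\right], \qquad (7) $$

assuming that the second partial derivatives of $\ell$ exist. The expected second partial derivatives with respect to the fixed components and the variance components are [4]

$$ -E\left[\frac{\partial^2\ell}{\partial\beta\,\partial\beta^T}\right] = X^T\Sigma^{-1}X, \qquad -E\left[\frac{\partial^2\ell}{\partial\beta\,\partial\theta_q}\right] = 0, \quad 1 \le q \le n(n+1)/2, $$

$$ -E\left[\frac{\partial^2\ell}{\partial\theta_q\,\partial\theta_{q'}}\right] = \frac{1}{2}\mathrm{tr}\left(\Sigma^{-1}\frac{\partial\Sigma}{\partial\theta_q}\Sigma^{-1}\frac{\partial\Sigma}{\partial\theta_{q'}}\right), \quad 1 \le q, q' \le n(n+1)/2. $$

Based on (5) and a result of Theorem 1 in [15], the ML iterative algorithm can be used to compute the MLE of the unknown parameters in model (3). Through this iterative process, the estimators of the variance components are obtained as the elements of $\hat\theta = \mathrm{vech}(\hat\Sigma)$, so that the estimator of the covariance matrix can be written as

$$ \hat\Sigma = \hat R + Z\hat GZ^T, \qquad (8) $$

which is generally biased. Substituting (8) into (5) yields $\widehat{\mathrm{Var}}(\hat\beta) = (X^T\hat\Sigma^{-1}X)^{-1}$, which is biased because (8) is biased. The bias in the MLE can be eliminated by Restricted Maximum Likelihood Estimation (REMLE).

REML estimation uses the transformation $y_1 = A^Ty$, where $\mathrm{rank}(X) = p + 1$ and $A$ is an $n \times (n - p - 1)$ matrix with $\mathrm{rank}(A) = n - p - 1$ such that $A^TX = 0$. It is easy to show that $y_1 \sim N(0, A^T\Sigma A)$. Furthermore, the joint probability density function of $y_1$ is

$$ f_R(y_1) = \frac{1}{\sqrt{(2\pi)^{n-p-1}|A^T\Sigma A|}}\exp\left\{-\frac{1}{2}y_1^T(A^T\Sigma A)^{-1}y_1\right\}. \qquad (9) $$

The ln-likelihood of (9) can be written, up to an additive constant, as

$$ \ell_R(\theta) = -\frac{1}{2}\left[\ln|A^T\Sigma A| + y_1^T(A^T\Sigma A)^{-1}y_1\right]. \qquad (10) $$

Estimation is carried out by taking the partial derivatives of (10) with respect to $\theta_q$, $q = 1, \ldots, n(n+1)/2$ (because (10) does not contain the components of $\beta$):

$$ \frac{\partial\ell_R}{\partial\theta_q} = \frac{1}{2}\left\{y^TP\frac{\partial\Sigma}{\partial\theta_q}Py - \mathrm{tr}\left(P\frac{\partial\Sigma}{\partial\theta_q}\right)\right\}, \qquad (11) $$

where $P$ in (11) is $A(A^T\Sigma A)^{-1}A^T$, which is equal to the matrix $P$ defined after (6). The Fisher information matrix of (11) is

$$ I_R(\theta) = \mathrm{Var}\left(\frac{\partial\ell_R}{\partial\theta}\right) = -E\left[\frac{\partial^2\ell_R}{\partial\theta\,\partial\theta^T}\right]. \qquad (12) $$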
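To make the interplay of (5), (6), and the $\theta$-block of (7) concrete, the following is a minimal Fisher-scoring sketch in NumPy, one of the three algorithms cited above. It rests on assumptions that are ours, not the paper's: $\Sigma$ is parameterized by only two variance components, $\Sigma = \theta_1 ZZ^T + \theta_2 I_n$ (a random-intercept structure), so that $\partial\Sigma/\partial\theta_1 = ZZ^T$ and $\partial\Sigma/\partial\theta_2 = I_n$; the function name `ml_fisher_scoring`, the starting values, and the simulated data are hypothetical. Each iteration adds the inverse expected information times the score, where the score is half the difference between the two sides of (6).

```python
import numpy as np

def ml_fisher_scoring(y, X, Z, theta0=(1.0, 1.0), n_iter=100, tol=1e-8):
    """ML fit of y ~ N(X beta, Sigma) under the assumed form Sigma = th1*Z Z^T + th2*I_n."""
    n = len(y)
    dS = [Z @ Z.T, np.eye(n)]                   # dSigma/dtheta_q for q = 1, 2
    theta = np.array(theta0, dtype=float)
    for _ in range(n_iter):
        Sigma = theta[0] * dS[0] + theta[1] * dS[1]
        Si = np.linalg.inv(Sigma)
        XtSi = X.T @ Si
        P = Si - Si @ X @ np.linalg.solve(XtSi @ X, XtSi)   # P as defined after (6)
        Py = P @ y
        # Score: half the difference between the two sides of (6)
        score = np.array([0.5 * (Py @ D @ Py - np.trace(Si @ D)) for D in dS])
        # theta-block of the expected information (7): (1/2) tr(Si dS_q Si dS_q')
        info = np.array([[0.5 * np.trace(Si @ Dq @ Si @ Dr) for Dr in dS] for Dq in dS])
        # Fisher-scoring update, clipped so both components stay positive
        theta_new = np.maximum(theta + np.linalg.solve(info, score), 1e-8)
        converged = np.max(np.abs(theta_new - theta)) < tol
        theta = theta_new
        if converged:
            break
    Si = np.linalg.inv(theta[0] * dS[0] + theta[1] * dS[1])
    cov_beta = np.linalg.inv(X.T @ Si @ X)      # plug-in (X^T Sigma^{-1} X)^{-1}
    beta = cov_beta @ (X.T @ Si @ y)            # GLS estimator (5)
    return beta, theta, cov_beta

# Illustrative data: 30 clusters of 5 observations with random intercepts
rng = np.random.default_rng(0)
g, k = 30, 5
n = g * k
Z = np.kron(np.eye(g), np.ones((k, 1)))         # cluster-indicator design
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([1.0, 0.5]) + Z @ rng.normal(0.0, np.sqrt(2.0), g) + rng.normal(0.0, 1.0, n)
beta_hat, theta_hat, cov_beta = ml_fisher_scoring(y, X, Z)
```

Because the $\beta$-$\theta$ block of (7) is zero, the sketch profiles $\beta$ out through (5) at every step and iterates only on $\theta$; the returned `cov_beta` is the plug-in variance that the text notes is biased downward.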


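Assuming that the second derivatives of $\ell_R$ in (10) exist, it can be shown that the components of this Fisher information are

$$ -E\left[\frac{\partial^2\ell_R}{\partial\theta_q\,\partial\theta_{q'}}\right] = \frac{1}{2}\mathrm{tr}\left(P\frac{\partial\Sigma}{\partial\theta_q}P\frac{\partial\Sigma}{\partial\theta_{q'}}\right), \quad 1 \le q, q' \le n(n+1)/2. \qquad (13) $$

The estimator obtained from the REMLE method is unbiased. However, in linear mixed models the estimated $\mathrm{Var}(\hat\beta)$ is still biased, because it is obtained from (5) by substituting $\hat\Sigma$ for $\Sigma$. The bias in the variance of the fixed-effect estimator, i.e., in the diagonal elements of $\widehat{\mathrm{Var}}(\hat\beta)$, is a downward bias in both the MLE and REMLE methods [17].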
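The ML-versus-REML bias can be seen numerically in the simplest special case $\Sigma = \sigma^2 I_n$ (no random effects), where the ML estimator of $\sigma^2$ divides the residual sum of squares by $n$ and the REML estimator divides by $n - \mathrm{rank}(X)$, the univariate analogue of (19). The following Monte Carlo sketch assumes an arbitrary design with $p + 1 = 3$ columns, arbitrary coefficients, and a fixed seed; it is an illustration of the special case only, not of the general mixed model.

```python
import numpy as np

rng = np.random.default_rng(1)
n, reps, sigma2 = 20, 20000, 1.0
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])   # rank(X) = p + 1 = 3
beta = np.array([1.0, -0.5, 2.0])
r = np.linalg.matrix_rank(X)

# Residual-maker matrix I - X (X^T X)^{-1} X^T, shared by all replicates
M = np.eye(n) - X @ np.linalg.solve(X.T @ X, X.T)

# Each column of Y is an independent replicate of y = X beta + e, e ~ N(0, sigma2 I)
Y = (X @ beta)[:, None] + rng.normal(0.0, np.sqrt(sigma2), size=(n, reps))
rss = np.sum((M @ Y) ** 2, axis=0)

ml = rss / n          # ML estimator of sigma^2 (expected value sigma2 * (n - r) / n)
reml = rss / (n - r)  # REML estimator of sigma^2 (expected value sigma2)
print(ml.mean(), reml.mean())
```

With these settings the ML average should sit near $17/20 = 0.85$ and the REML average near $1$, reflecting the factor $(n - p - 1)/n$.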

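The multivariate linear model, which does not depend on random factors, was discussed in [1], [9]. The multivariate linear model without replications can be written in matrix form as

$$ Y = XB + E, \qquad (14) $$

where $Y = [Y_{ij}]$, $E = [\varepsilon_{ij}]$, $X = [x_{ia}]$, and $B = [\beta_{aj}]$; $i = 1, \ldots, n$; $j = 1, \ldots, s$; $a = 0, \ldots, p$; with intercept $x_{i0} = 1$ for all $i$. For individual $i$, (14) can be written in matrix and vector form as

$$ y_i = B^Tx_i + e_i, \qquad (15) $$

where

$$ y_i = \begin{bmatrix} Y_{i1} \\ Y_{i2} \\ \vdots \\ Y_{is} \end{bmatrix}, \quad B = \begin{bmatrix} \beta_{01} & \beta_{02} & \cdots & \beta_{0s} \\ \beta_{11} & \beta_{12} & \cdots & \beta_{1s} \\ \vdots & \vdots & & \vdots \\ \beta_{p1} & \beta_{p2} & \cdots & \beta_{ps} \end{bmatrix}, \quad x_i = \begin{bmatrix} 1 \\ x_{i1} \\ \vdots \\ x_{ip} \end{bmatrix}, \quad e_i = \begin{bmatrix} \varepsilon_{i1} \\ \varepsilon_{i2} \\ \vdots \\ \varepsilon_{is} \end{bmatrix}. $$

The error vectors $e_i$ in (15) are random vectors assumed to follow a multivariate normal distribution with zero mean vector in $\mathbb{R}^s$. The rows of $E$ are assumed independent because each of them relates to a different observation, whereas the columns of $E$ may still be correlated. Thus,

$$ \mathrm{Cov}(e_i, e_{i'}) = \begin{cases} \Sigma, & i = i', \\ 0, & i \ne i', \end{cases} \qquad (16) $$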

for all $i = 1, 2, \ldots, n$. A further assumption is that the $s \times s$ error variance matrix $\Sigma$ of each observation $i$ is the same for all $i$ and positive definite. Then (16) can be written as [13]

$$ \mathrm{Cov}(\mathrm{vec}(E^T), \mathrm{vec}(E^T)) = I_n \otimes \Sigma, $$

where $\mathrm{vec}(E^T)$ is the vector obtained by stacking all elements of $E$ row by row, and $I_n$ is the $n \times n$ identity matrix.

Moreover, $B$ can be estimated by assuming that $\Sigma$ is known. The likelihood function of (14) for $n$ observations is

$$ L(\mathrm{vec}(B^T), \mathrm{vech}(\Sigma)) = \frac{1}{\sqrt{(2\pi)^{ns}|\Sigma|^n}}\exp\{-Q_{MLM}/2\}, \qquad (17) $$

where

$$ Q_{MLM} = [\mathrm{vec}(Y^T) - (X \otimes I_s)\mathrm{vec}(B^T)]^T(I_n \otimes \Sigma)^{-1}[\mathrm{vec}(Y^T) - (X \otimes I_s)\mathrm{vec}(B^T)]. $$

Maximizing (17) with $\Sigma$ known yields the estimator

$$ \hat B = (X^TX)^{-1}X^TY \quad \text{or} \quad \mathrm{vec}(\hat B^T) = ((X^TX)^{-1}X^T \otimes I_s)\mathrm{vec}(Y^T). \qquad (18) $$

$\Sigma$ can be estimated by assuming that $B$ is known: maximizing (17) for known $B$ and then substituting $\hat B$ from (18) yields the biased variance estimator $\hat\Sigma = (1/n)Y^T(I - X(X^TX)^{-1}X^T)Y$. The unbiased variance estimator [1] is

$$ S = Y^T(I - X(X^TX)^{-1}X^T)Y/(n - \mathrm{rank}(X)). \qquad (19) $$

The estimator in (18) is unbiased, with variance matrix

$$ \mathrm{Var}(\mathrm{vec}(\hat B^T)) = (X^TX)^{-1} \otimes \Sigma. \qquad (20) $$

III. PARAMETER ESTIMATION FOR THE MULTIVARIATE LINEAR MIXED MODEL

The multivariate linear mixed model is an extension of the multivariate linear model: it is constructed by adding a random component to (14) and assuming that each element of $Y$ has a linear relationship with the systematic part of the model. This model is called the multivariate linear mixed model without replication. There are three types of linear mixed models, i.e., cluster, longitudinal, and replication models. This paper focuses on the linear mixed model without replication, i.e.,

$$ Y = XB + ZD + E, \qquad (21) $$

where $X$ and $Z$ are known covariate matrices of size $n \times (p+1)$ and $n \times k$, respectively, $B$ is a $(p+1) \times s$ matrix of unknown fixed-effect regression coefficients, $D$ is a $k \times s$ matrix of specific random-effect coefficients, and $E$ is an $n \times s$ matrix of errors. It is also assumed that the $n$ rows of $E$ are independent, each row is $N(0, \Sigma)$, and the random effect $D$, independent of $E$, satisfies $\mathrm{vec}(D^T) \sim N(0, \Omega)$. Thus, the distribution of the response $Y$ in model (21) can be written in matrix and vector form as

International Journal of Basic & Applied Sciences IJBAS-IJENS Vol:10 No:06

vec( Y T ) ~ N ((X I s )vec(BT ), ( Z I s )( Z I s ) T (I n ))

(22)

Let a matrix of variance V (Z I s )(Z I s ) T (I n ) with size

nsns, then variance components of model (21) are elements

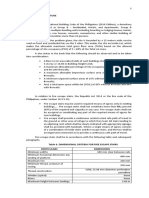

of = vech(V) = [1 ,, ns ( ns 1) / 2 ]T . The likelihood function of

51

3 L( y, )

H( y ) hold for all y, and

i i ' i ''

g ( y )dy , h( y )dy ,

E H(y ) for N ( 0 ) .

(R3). For each ,

0 E T ( y, )

probability density function (22) is

,,

= gradient, and

k

1

L(vec(BT ), vech( V)) 1 / (2 ) ns | V | exp{Q MLMM / 2} (23)

where

where Q MLMM vec( Y T ) ( X I s )vec( B T )

matrix.

V 1 vec( Y T ) ( X I s )vec( B T ) .

By applying partial derivation of ln-likelihood function (23)

respect to vec ( B T ) and q , then make equal to zero and it will

yield estimators

1 ( X I )]1 ( X I ) T V

1vec( Y T ),

vec( B T ) [( X I s ) T V

s

s

(24)

and by using a nonlinear optimization, with inequality

constraints imposed on so that positive definiteness

requirements on the and matrices are satisfied. There is

no closed-form solution for the , so the estimate of is

obtained by the Fisher scoring algoritm or Newton-Raphson

algoritm. After an iterative computational process we have

V

V

, i.e.

( Z I )

( Z I ) T (I

) .

V

s

s

n

(25)

can be obtained too

and

Both components estimator of

by applying iteration that are included in iteration process for

computing V . The result of estimation in (24) is unbiased

estimator with variance matrix

T )) [( X I ) T V

1 ( X I )]1 .

Var ( vec( B

s

s
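The Kronecker bookkeeping in (22)-(24) is easy to get wrong, so here is a small NumPy sketch of the GLS estimator (24) and its variance for a known $V$. It assumes that $\mathrm{vec}(\cdot)$ stacks columns, so $\mathrm{vec}(Y^T)$ is the row-major flattening of $Y$, and that the rows of $D$ are independent with a common covariance $\Omega_0$, giving $\Omega = I_k \otimes \Omega_0$; the helper names `build_V` and `gls_vecBt` and all numbers are hypothetical. As a sanity check, setting $\Omega = 0$ should reproduce the multivariate least-squares estimator (18) and the variance (20).

```python
import numpy as np

def build_V(Z, Omega, Sigma):
    """V = (Z kron I_s) Omega (Z kron I_s)^T + (I_n kron Sigma), cf. (22) and (25)."""
    n, s = Z.shape[0], Sigma.shape[0]
    ZI = np.kron(Z, np.eye(s))
    return ZI @ Omega @ ZI.T + np.kron(np.eye(n), Sigma)

def gls_vecBt(Y, X, V):
    """Estimator (24) of vec(B^T) for known V, returned reshaped as B, plus its covariance."""
    s = Y.shape[1]
    XI = np.kron(X, np.eye(s))
    vec_Yt = Y.reshape(-1)                  # row-major flattening of Y equals vec(Y^T)
    Vi = np.linalg.inv(V)
    cov = np.linalg.inv(XI.T @ Vi @ XI)     # [(X kron I_s)^T V^{-1} (X kron I_s)]^{-1}
    vec_Bt = cov @ (XI.T @ (Vi @ vec_Yt))
    return vec_Bt.reshape(X.shape[1], s), cov

rng = np.random.default_rng(3)
n, k, s = 12, 3, 2                          # 12 observations, 3 clusters, 2 responses
Z = np.kron(np.eye(k), np.ones((n // k, 1)))
X = np.column_stack([np.ones(n), rng.normal(size=n)])
B = np.array([[1.0, -1.0], [0.5, 2.0]])
Sigma = np.array([[1.0, 0.3], [0.3, 0.5]])
Omega0 = np.array([[0.8, 0.2], [0.2, 0.4]])
Omega = np.kron(np.eye(k), Omega0)          # assumed: rows of D iid N(0, Omega0)
D = rng.multivariate_normal(np.zeros(s), Omega0, size=k)
E = rng.multivariate_normal(np.zeros(s), Sigma, size=n)
Y = X @ B + Z @ D + E

B_hat, cov_B = gls_vecBt(Y, X, build_V(Z, Omega, Sigma))

# With Omega = 0 the estimator should collapse to (18) and its variance to (20)
B_0, cov_0 = gls_vecBt(Y, X, build_V(Z, np.zeros((k * s, k * s)), Sigma))
B_ols = np.linalg.solve(X.T @ X, X.T @ Y)
```

Explicit inverses keep the sketch close to the formulas; for realistic $ns$ a Cholesky-based solve would be the more economical choice.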

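IV. ESTIMATOR PROPERTIES: CONSISTENCY, EFFICIENCY, AND ASYMPTOTIC NORMALITY

Let the vectors $y_1, y_2, \ldots, y_n$ be observations of a random sample from a vector random variable $y$ with distribution $F_\psi$ belonging to a family $\mathcal{F} = \{F_\psi, \psi \in \Psi\}$, where $\psi = [\psi_1, \ldots, \psi_k]^T$. For each $\psi_0 \in \Psi$, a subset $N(\psi_0)$ of $\Psi$ is a neighborhood of $\psi_0$ iff $N(\psi_0)$ is a superset of an open set $G$ containing $\psi_0$ [8]. Assume $\Psi \subseteq \mathbb{R}^k$ and that the following three regularity conditions hold on $\mathcal{F}$ [3], [14]:

(R1). For each $\psi \in \Psi$, the derivatives

$$ \frac{\partial\ell(y,\psi)}{\partial\psi_i}, \quad \frac{\partial^2\ell(y,\psi)}{\partial\psi_i\,\partial\psi_{i'}}, \quad \frac{\partial^3\ell(y,\psi)}{\partial\psi_i\,\partial\psi_{i'}\,\partial\psi_{i''}} $$

exist for all $y$ and $i, i', i'' = 1, 2, \ldots, k$.

(R2). For each $\psi_0 \in \Psi$, there exist functions $g(y)$, $h(y)$, and $H(y)$ (possibly depending on $\psi_0$) such that, for $\psi$ in a neighborhood $N(\psi_0)$, the relations

$$ \left|\frac{\partial L(y,\psi)}{\partial\psi_i}\right| \le g(y), \quad \left|\frac{\partial^2L(y,\psi)}{\partial\psi_i\,\partial\psi_{i'}}\right| \le h(y), \quad \left|\frac{\partial^3\ell(y,\psi)}{\partial\psi_i\,\partial\psi_{i'}\,\partial\psi_{i''}}\right| \le H(y) $$

hold for all $y$, and $\int g(y)\,dy < \infty$, $\int h(y)\,dy < \infty$, $E_\psi[H(y)] < \infty$ for $\psi \in N(\psi_0)$.

(R3). For each $\psi \in \Psi$,

$$ 0 < E_\psi[\nabla^T\ell(y,\psi)\,\nabla\ell(y,\psi)] < \infty, $$

where $\nabla$ denotes the gradient and $\|\cdot\|$ denotes the norm of a matrix.

Consider random variables $\xi_1, \xi_2, \ldots$ and $\xi$ on a probability space $(\mathcal{S}, \mathcal{A}, P)$. The sequence $\xi_m$ is said to converge with probability 1 (or strongly, almost surely, almost everywhere) to $\xi$ if $P(\lim_{m\to\infty}\xi_m = \xi) = 1$. This is written $\xi_m \xrightarrow{wp1} \xi$, $m \to \infty$. An equivalent condition for convergence wp1 is

$$ \lim_{m\to\infty}P(\|\xi_l - \xi\| < \varepsilon, \text{ all } l \ge m) = 1, \quad \text{every } \varepsilon > 0 \ [14]. \qquad (26) $$

Lemma:
Let $L(y,\psi)$ and $\ell(y,\psi)$ be the likelihood function and the ln-likelihood function, respectively, and assume that regularity conditions (R1) and (R2) hold. Then the Fisher information is the variance of the random variable $\partial\ell(y,\psi)/\partial\psi$; i.e.,

$$ I(\psi) = \mathrm{Var}\left(\frac{\partial\ell(y,\psi)}{\partial\psi}\right) = -E\left[\frac{\partial^2\ell(y,\psi)}{\partial\psi\,\partial\psi^T}\right] = E[\nabla\ell(y,\psi)\,\nabla^T\ell(y,\psi)]. \qquad (27) $$

Proof:
$L(y,\psi)$ is a likelihood function, so it can be regarded as a joint probability density function, and

$$ \int L(y,\psi)\,dy = 1. $$

Taking the partial derivative with respect to the vector $\psi$ (condition (R2) permits differentiation under the integral sign) gives

$$ \int\frac{\partial L(y,\psi)}{\partial\psi}\,dy = 0. \qquad (28) $$

Equation (28) can be written as

$$ \int\frac{\partial L(y,\psi)/\partial\psi}{L(y,\psi)}\,L(y,\psi)\,dy = 0 \quad \text{or} \quad \int\frac{\partial(\ln L(y,\psi))}{\partial\psi}\,L(y,\psi)\,dy = 0. \qquad (29) $$

Writing (29) as an expectation, we have established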

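$$ E\left[\frac{\partial\ell(y,\psi)}{\partial\psi}\right] = 0. \qquad (30) $$

This means that the mean vector of the random variable $\partial\ell(y,\psi)/\partial\psi$ is $0$. If (29) is differentiated partially again, it follows that

$$ \int\left\{\frac{\partial^2(\ln L(y,\psi))}{\partial\psi\,\partial\psi^T}L(y,\psi) + \frac{\partial(\ln L(y,\psi))}{\partial\psi}\frac{\partial L(y,\psi)}{\partial\psi^T}\right\}dy = 0. $$

This is equivalent to

$$ \int\frac{\partial^2(\ln L(y,\psi))}{\partial\psi\,\partial\psi^T}L(y,\psi)\,dy + \int\frac{\partial(\ln L(y,\psi))}{\partial\psi}\frac{\partial(\ln L(y,\psi))}{\partial\psi^T}L(y,\psi)\,dy = 0. $$

This last equation can be written as an expectation, i.e.,

$$ E\left[\frac{\partial^2\ell(y,\psi)}{\partial\psi\,\partial\psi^T}\right] + E\left[\frac{\partial\ell(y,\psi)}{\partial\psi}\frac{\partial\ell(y,\psi)}{\partial\psi^T}\right] = 0. $$

By using (30) and the definition of variance, the latter expression can be rewritten as

$$ I(\psi) = \mathrm{Var}\left(\frac{\partial\ell(y,\psi)}{\partial\psi}\right) = -E\left[\frac{\partial^2\ell(y,\psi)}{\partial\psi\,\partial\psi^T}\right] = E[\nabla\ell(y,\psi)\,\nabla^T\ell(y,\psi)] \quad [3], [14]. $$

Theorem:
Let the vector observations $y_1, y_2, \ldots, y_m$ be iid with distribution $F_\psi$, for $\psi \in \Psi$, and assume that regularity conditions (R1), (R2), and (R3) hold on the family $\mathcal{F}$. Then the likelihood equations admit a sequence of solutions $\{\hat\psi_m\}$ satisfying

a). strong consistency:

$$ \hat\psi_m \xrightarrow{wp1} \psi, \quad m \to \infty; \qquad (31) $$

b). asymptotic normality and efficiency:

$$ \hat\psi_m \text{ is } AN\left(\psi, \frac{1}{m}I^{-1}(\psi)\right), \qquad (32) $$

where $I(\psi) = E[\nabla\ell(y,\psi)\,\nabla^T\ell(y,\psi)] = \mathrm{Var}(\partial\ell(y,\psi)/\partial\psi) = -E[\partial^2\ell(y,\psi)/\partial\psi\,\partial\psi^T]$, and $AN(\cdot,\cdot)$ is a multivariate asymptotic normal distribution.

Proof:
Here $\ell(y,\psi) = \sum_{i=1}^m\ell(y_i,\psi)$ denotes the ln-likelihood of the whole sample. Expanding the function $\nabla\ell(y,\psi)$ into a second-order Taylor series about $\psi_0$ and evaluating it at $\hat\psi_m$ gives

$$ \nabla\ell(y,\hat\psi_m) = \nabla\ell(y,\psi_0) + \left\{\nabla\nabla^T\ell(y,\psi_0) + \frac{1}{2}\left[(\hat\psi_m - \psi_0)^T \otimes (\mathbf{1}\mathbf{1}^T)\right]H(y,\psi^*_m)\right\}(\hat\psi_m - \psi_0), \qquad (33) $$

where $\psi^*_m$ lies between $\psi_0$ and $\hat\psi_m$, and $H(y,\psi^*_m)$ collects the third-order partial derivatives of $\ell(y,\psi)$ evaluated at $\psi^*_m$. But $\nabla\ell(y,\hat\psi_m) = 0$, so the Taylor series (33) can be written as

$$ -\nabla\ell(y,\psi_0) = \left\{\nabla\nabla^T\ell(y,\psi_0) + \frac{1}{2}\left[(\hat\psi_m - \psi_0)^T \otimes (\mathbf{1}\mathbf{1}^T)\right]H(y,\psi^*_m)\right\}(\hat\psi_m - \psi_0). $$

This is equivalent to

$$ \frac{1}{m}\nabla\ell(y,\psi_0) = \left\{-\frac{1}{m}\nabla\nabla^T\ell(y,\psi_0) - \frac{1}{2m}\left[(\hat\psi_m - \psi_0)^T \otimes (\mathbf{1}\mathbf{1}^T)\right]H(y,\psi^*_m)\right\}(\hat\psi_m - \psi_0). \qquad (34) $$

Therefore, putting

$$ a_m = \frac{1}{m}\sum_{i=1}^m\nabla\ell(y_i,\psi_0), $$

we have $E_{\psi_0}[a_m] = (1/m)\sum_{i=1}^m E_{\psi_0}[\nabla\ell(y_i,\psi_0)] = 0$ and $\mathrm{Var}[a_m] = (1/m)^2\,m\,I(\psi_0) = (1/m)I(\psi_0)$. Putting

$$ B_m = -\frac{1}{m}\sum_{i=1}^m\nabla\nabla^T\ell(y_i,\psi_0), $$

we have $E_{\psi_0}[B_m] = I(\psi_0)$, and putting

$$ C_m = -\frac{1}{2m}\sum_{i=1}^m H(y_i,\psi^*_m), $$

we have $E^*[C_m] = -(1/2m)\,m\,E^*[H(y,\psi^*_m)] = -(1/2)E^*[H(y,\psi^*_m)]$, where $E^*$ denotes expectation evaluated at $\psi^*_m$. Thus, (34) can be written as

$$ a_m = \left\{B_m + \left[(\hat\psi_m - \psi_0)^T \otimes (\mathbf{1}\mathbf{1}^T)\right]C_m\right\}(\hat\psi_m - \psi_0). \qquad (35) $$

By applying the Law of Large Numbers (LLN), it can be shown that

$$ a_m \xrightarrow{wp1} 0, \qquad B_m \xrightarrow{wp1} I(\psi_0), \qquad C_m \xrightarrow{wp1} -\frac{1}{2}E^*[H(y,\psi^*_m)]. \qquad (36) $$

Next, it will be shown that $(1/m)H(y,\psi^*_m)$ is bounded in probability. Let $c_0$ be a constant such that $\|\hat\psi_m - \psi_0\| < c_0$ implies $\|\psi^*_m - \psi_0\| < c_0$, so that

$$ \left\|\frac{1}{m}H(y,\psi^*_m)\right\| \le \frac{1}{m}\sum_{i=1}^m\|H(y_i,\psi^*_m)\| \le \frac{1}{m}\sum_{i=1}^m H(y_i,\psi_0), \qquad (37) $$

where $H(y_i,\psi_0)$ denotes the dominating function $H(y_i)$ of (R2) associated with $\psi_0$. By condition (R2), $E_{\psi_0}[H(y)] < \infty$, and by applying the LLN, it can be shown that

$$ \frac{1}{m}\sum_{i=1}^m H(y_i,\psi_0) \xrightarrow{wp1} E_{\psi_0}[H(y,\psi_0)]. \qquad (38) $$

Let $\varepsilon > 0$ be given, select the bound $1 + E_{\psi_0}[H(y,\psi_0)]$, and choose $M_1$ and $M_2$ such that

$$ m \ge M_1 \Rightarrow P[\|\hat\psi_m - \psi_0\| < c_0] \ge 1 - \varepsilon/2, $$

$$ m \ge M_2 \Rightarrow P\left[\left|\frac{1}{m}\sum_{i=1}^m H(y_i,\psi_0) - E_{\psi_0}[H(y,\psi_0)]\right| < 1\right] \ge 1 - \varepsilon/2. \qquad (39) $$

It follows from (37), (38), and (39) that,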

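$$ m \ge \max\{M_1, M_2\} \Rightarrow P\left[\left\|\frac{1}{m}H(y,\psi^*_m)\right\| \le 1 + E_{\psi_0}[H(y,\psi_0)]\right] \ge 1 - \varepsilon; $$

hence, $(1/m)H(y,\psi^*_m)$ is bounded in probability.

Finally, to show that $(\hat\psi_m - \psi_0)$ converges with probability 1 to $0$: since $(1/m)H(y,\psi^*_m)$ is bounded in probability, it can be concluded from (35) and (36) that $(\hat\psi_m - \psi_0) \xrightarrow{wp1} 0$ for all $\psi_0 \in \Psi$. Hence the sequence of solutions $\{\hat\psi_m\}$ satisfies strong consistency, $\hat\psi_m \xrightarrow{wp1} \psi$; $m \to \infty$.

Furthermore, by using the Central Limit Theorem (CLT), we have

$$ \sqrt{m}\,a_m \xrightarrow{d} N(0, I(\psi_0)), \quad \text{i.e., } a_m \text{ is } AN\left(0, \frac{1}{m}I(\psi_0)\right). \qquad (40) $$

By (40), $\sqrt{m}\,a_m \xrightarrow{d} N(0, I(\psi_0))$; by (36), $B_m \xrightarrow{p} I(\psi_0)$; and because $\hat\psi_m - \psi_0 \xrightarrow{wp1} 0$ while $C_m$ is bounded in probability, the term $[(\hat\psi_m - \psi_0)^T \otimes (\mathbf{1}\mathbf{1}^T)]C_m$ converges in probability to $0$. Applying these limits to (35) gives

$$ \sqrt{m}(\hat\psi_m - \psi_0) \xrightarrow{d} N(0, I^{-1}(\psi_0)). $$

This expression can be written as

$$ (\hat\psi_m - \psi_0) \text{ is } AN\left(0, \frac{1}{m}I^{-1}(\psi_0)\right) \quad \text{or} \quad \hat\psi_m \text{ is } AN\left(\psi_0, \frac{1}{m}I^{-1}(\psi_0)\right), $$

which is (32) [3], [14].

Now, let $\hat\psi_n$ be a sequence of solutions to the likelihood function (23), where

$$ \hat\psi_n = [(\mathrm{vec}(\hat B^T))^T, (\mathrm{vech}(\hat V))^T]^T = [\hat\psi_{n1}, \ldots, \hat\psi_{n(ps+s+ns(ns+1)/2)}]^T. $$

By the above Theorem, $\hat\psi_n$ converges with probability 1 to $\psi$, i.e., it is strongly consistent, $\hat\psi_n \xrightarrow{wp1} \psi$; $n \to \infty$, and it is also efficient and asymptotically normal, i.e.,

$$ \sqrt{n}(\hat\psi_n - \psi) \xrightarrow{d} N(0, I^{-1}(\psi)) \quad \text{or} \quad \hat\psi_n \text{ is } AN(\psi, n^{-1}I^{-1}(\psi)). $$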
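A finite simulation cannot prove the Theorem, but it can show the behavior the Theorem predicts. The following sketch uses the simplest iid case, $y_i \sim N(\mu, \sigma^2)$ with $\psi = (\mu, \sigma^2)$, chosen by us because there $I^{-1}(\psi) = \mathrm{diag}(\sigma^2, 2\sigma^4)$ is known in closed form; it is a toy illustration, not the multivariate linear mixed model itself, and the function name `mle_normal`, sample sizes, and seed are assumptions. Part (a) checks that one large sample gives an estimate near the true value, and part (b) compares the empirical covariance of $\sqrt{m}(\hat\psi_m - \psi_0)$ with $I^{-1}(\psi_0)$.

```python
import numpy as np

def mle_normal(y):
    """MLE of psi = (mu, sigma^2) for iid N(mu, sigma^2) samples along the last axis."""
    mu = y.mean(axis=-1, keepdims=True)
    s2 = ((y - mu) ** 2).mean(axis=-1)          # ML divisor m, not m - 1
    return np.stack([mu[..., 0], s2], axis=-1)

rng = np.random.default_rng(2)
psi0 = np.array([0.0, 1.0])                     # true (mu, sigma^2)
inv_info = np.diag([psi0[1], 2 * psi0[1] ** 2]) # I^{-1}(psi) = diag(sigma^2, 2 sigma^4)

# (a) consistency: one large sample gives an estimate close to psi0
psi_big = mle_normal(rng.normal(psi0[0], np.sqrt(psi0[1]), 100_000))

# (b) asymptotic normality: covariance of sqrt(m)(psi_hat - psi0) over replicates
m, reps = 400, 5000
samples = rng.normal(psi0[0], np.sqrt(psi0[1]), size=(reps, m))
z = np.sqrt(m) * (mle_normal(samples) - psi0)
emp_cov = np.cov(z, rowvar=False)               # should be close to inv_info
```

The same experiment with a larger `m` should bring `emp_cov` closer to `inv_info`, which is the efficiency part of the statement: no other consistent, asymptotically normal estimator has a smaller asymptotic covariance under the regularity conditions.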

V. CONCLUSION

This paper has discussed the estimation of the multivariate linear mixed model, or multivariate variance components model, with an equal number of replications. The results show that estimation of the fixed-effect parameters yields unbiased estimators, whereas estimation of the random effects or variance components yields biased estimators. Moreover, assuming that both the likelihood and ln-likelihood functions satisfy certain regularity conditions, it can be proved that the estimators obtained as solutions of the likelihood equations are strongly consistent for large sample sizes, asymptotically normal, and efficient.

Based on the discussion in the previous sections, the following theoretical conclusions can be drawn:

1). The MLE parameter estimators in the multivariate linear mixed model $Y = XB + ZD + E$, with variance $V = (Z \otimes I_s)\Omega(Z \otimes I_s)^T + (I_n \otimes \Sigma)$, are

$$ \mathrm{vec}(\hat B^T) = [(X \otimes I_s)^T\hat V^{-1}(X \otimes I_s)]^{-1}(X \otimes I_s)^T\hat V^{-1}\mathrm{vec}(Y^T), $$

$$ \hat V = (Z \otimes I_s)\hat\Omega(Z \otimes I_s)^T + (I_n \otimes \hat\Sigma), $$

and

$$ \widehat{\mathrm{Var}}(\mathrm{vec}(\hat B^T)) = [(X \otimes I_s)^T\hat V^{-1}(X \otimes I_s)]^{-1}. $$

2). Let $\hat\psi_n = [(\mathrm{vec}(\hat B^T))^T, (\mathrm{vech}(\hat V))^T]^T$. Then, by applying the proposed theorem, it can be shown that $\hat\psi_n$ is strongly consistent, i.e., $\hat\psi_n \xrightarrow{wp1} \psi$; $n \to \infty$, and that $\hat\psi_n$ is $AN(\psi, n^{-1}I^{-1}(\psi))$.

REFERENCES

[1] R. Christensen, Linear Models for Multivariate, Time Series, and Spatial Data. New York: Springer-Verlag, 1991, ch. 1.

[2] G. D. Garson, "Linear mixed models: random effects, hierarchical linear, multilevel, random coefficients, and repeated measures models," Lecture notes, Department of Public Administration, North Carolina State University, 2008, pp. 1-49.

[3] R. V. Hogg, J. W. McKean, and A. T. Craig, Introduction to Mathematical Statistics, 6th ed., Intern. ed. New Jersey: Pearson Prentice Hall, 2005, ch. 6, 7.

[4] J. Jiang, Linear and Generalized Linear Mixed Models and Their Applications. New York: Springer Science+Business Media, LLC, 2007, ch. 2.

[5] H. A. Kalaian and S. W. Raudenbush, "A multivariate mixed linear model for meta-analysis," Psychological Methods, vol. 1, no. 3, pp. 227-235, Mar. 1996.

[6] T. Kubokawa and M. S. Srivastava, "Prediction in multivariate mixed linear models," Discussion Papers CIRJE-F-180, pp. 1-24, Oct. 2002.

[7] L. R. LaMotte, "A direct derivation of the REML likelihood function," Statistical Papers, vol. 48, pp. 321-327, 2007.

[8] S. Lipschutz, Schaum's Outline of Theory and Problems of General Topology. New York: McGraw-Hill Book Co., 1965, ch. 1.

[9] K. E. Muller and P. W. Stewart, Linear Model Theory: Univariate, Multivariate, and Mixed Models. New Jersey: John Wiley & Sons, Inc., 2006, ch. 2-5, 12-17.

[10] F. Picard, E. Lebarbier, E. Budinská, and S. Robin, "Joint segmentation of multivariate Gaussian processes using mixed linear models," Statistics for Systems Biology Group, Research Report (5), pp. 1-10, 2007. http://genome.jouy.inra.fr/ssb/

[11] E. B. Samani and M. Ganjali, "A multivariate latent variable model for mixed continuous and ordinal responses," World Applied Sciences Journal, vol. 3, no. 2, pp. 294-299, 2008.

[12] M. D. Sammel, L. M. Ryan, and J. M. Legler, "Latent variable models for mixed discrete and continuous outcomes," Journal of the Royal Statistical Society B, vol. 59, no. 3, pp. 667-678, 1997.

[13] S. Sawyer, "Multivariate linear models," unpublished, pp. 1-29, 2008. www.math.wustl.edu/~sawyer/handouts/multivar.pdf 4/18/2008.

[14] R. J. Serfling, Approximation Theorems of Mathematical Statistics. New York: John Wiley & Sons, Inc., 1980, ch. 1, 4.

[15] C. Shin, "On the multivariate random and mixed coefficient analysis," Ph.D. dissertation, Dept. of Stat., Iowa State University, Ames, Iowa, 1995.

[16] P. M. Visscher, B. Benyamin, and I. White, "The use of linear mixed models to estimate variance components from data on twin pairs by maximum likelihood," Twin Research, vol. 7, no. 6, pp. 670-674, 2004.

[17] B. T. West, K. B. Welch, and A. T. Gałecki, Linear Mixed Models: A Practical Guide Using Statistical Software. New York: Chapman & Hall, 2007.


- Two-Point Estimates in ProbabilitiesDocument7 paginiTwo-Point Estimates in ProbabilitiesrannscribdÎncă nu există evaluări

- Nelder 1972Document16 paginiNelder 19720hitk0Încă nu există evaluări

- AUZIPREDocument12 paginiAUZIPRENADASHREE BOSE 2248138Încă nu există evaluări

- Co-Clustering: Models, Algorithms and ApplicationsDe la EverandCo-Clustering: Models, Algorithms and ApplicationsÎncă nu există evaluări

- Difference Equations in Normed Spaces: Stability and OscillationsDe la EverandDifference Equations in Normed Spaces: Stability and OscillationsÎncă nu există evaluări

- A Weak Convergence Approach to the Theory of Large DeviationsDe la EverandA Weak Convergence Approach to the Theory of Large DeviationsEvaluare: 4 din 5 stele4/5 (1)

- Radically Elementary Probability Theory. (AM-117), Volume 117De la EverandRadically Elementary Probability Theory. (AM-117), Volume 117Evaluare: 4 din 5 stele4/5 (2)

- Digital Signal Processing (DSP) with Python ProgrammingDe la EverandDigital Signal Processing (DSP) with Python ProgrammingÎncă nu există evaluări

- Getting Started in Steady StateDocument24 paginiGetting Started in Steady StateamitÎncă nu există evaluări

- Case Analysis, Case 1Document2 paginiCase Analysis, Case 1Aakarsha MaharjanÎncă nu există evaluări

- 3949-Article Text-8633-1-10-20180712Document10 pagini3949-Article Text-8633-1-10-20180712Volodymyr TarnavskyyÎncă nu există evaluări

- 1.1. CHILLER 1.2. Centrifugal: 5.2.hrizontalDocument2 pagini1.1. CHILLER 1.2. Centrifugal: 5.2.hrizontalShah ArafatÎncă nu există evaluări

- Chemistry Project Paper ChromatographyDocument20 paginiChemistry Project Paper ChromatographyAmrita SÎncă nu există evaluări

- PSY502 OLd PapersDocument6 paginiPSY502 OLd Paperscs619finalproject.com100% (4)

- Sex, Law, and Society in Late Imperial China (Law, Society, and Culture in China) by Matthew SommerDocument444 paginiSex, Law, and Society in Late Imperial China (Law, Society, and Culture in China) by Matthew SommerFer D. MOÎncă nu există evaluări

- CS221 - Artificial Intelligence - Search - 4 Dynamic ProgrammingDocument23 paginiCS221 - Artificial Intelligence - Search - 4 Dynamic ProgrammingArdiansyah Mochamad NugrahaÎncă nu există evaluări

- Leap Motion PDFDocument18 paginiLeap Motion PDFAnkiTwilightedÎncă nu există evaluări

- Gaara Hiden Series Light NovelDocument127 paginiGaara Hiden Series Light NovelartfardadÎncă nu există evaluări

- Tank Top Return Line Filter Pi 5000 Nominal Size 160 1000 According To Din 24550Document8 paginiTank Top Return Line Filter Pi 5000 Nominal Size 160 1000 According To Din 24550Mauricio Ariel H. OrellanaÎncă nu există evaluări

- Recruitment and SelectionDocument50 paginiRecruitment and SelectionAmrita BhatÎncă nu există evaluări

- CAT 438 3kk Esquema ElectricoDocument2 paginiCAT 438 3kk Esquema ElectricocasigreÎncă nu există evaluări

- Normal DistributionDocument23 paginiNormal Distributionlemuel sardualÎncă nu există evaluări

- If You Restyou RustDocument4 paginiIf You Restyou Rusttssuru9182Încă nu există evaluări

- 2 Design Thinking - EN - pptx-ALVDocument18 pagini2 Design Thinking - EN - pptx-ALVSalma Dhiya FauziyahÎncă nu există evaluări

- Summative Lab Rubric-Intro To ChemistryDocument1 paginăSummative Lab Rubric-Intro To ChemistryGary JohnstonÎncă nu există evaluări

- Vishay Load Cell Calibration System - ENDocument3 paginiVishay Load Cell Calibration System - ENSarhan NazarovÎncă nu există evaluări

- BUCA IMSEF 2021 Jury Evaluation ScheduleDocument7 paginiBUCA IMSEF 2021 Jury Evaluation SchedulePaulina Arti WilujengÎncă nu există evaluări

- Guidelines and Standards For External Evaluation Organisations 5th Edition v1.1Document74 paginiGuidelines and Standards For External Evaluation Organisations 5th Edition v1.1Entrepre NurseÎncă nu există evaluări

- Floor DiaphragmDocument24 paginiFloor DiaphragmChristian LeobreraÎncă nu există evaluări

- Sample CBAP Elicitation Scenario Based Questions Set 01Document31 paginiSample CBAP Elicitation Scenario Based Questions Set 01Rubab Javaid100% (1)

- Example of Presentation Planning Document 1uf6cq0Document2 paginiExample of Presentation Planning Document 1uf6cq0Wilson MorenoÎncă nu există evaluări

- MoveInSync Native Android Mobile AppDocument12 paginiMoveInSync Native Android Mobile AppRismaÎncă nu există evaluări

- Human Resource Development Multiple Choice Question (GuruKpo)Document4 paginiHuman Resource Development Multiple Choice Question (GuruKpo)GuruKPO90% (20)

- A Research Paper On DormitoriesDocument5 paginiA Research Paper On DormitoriesNicholas Ivy EscaloÎncă nu există evaluări

- Storage Tank Design Calculation - Api 650: Close Floating RoofDocument32 paginiStorage Tank Design Calculation - Api 650: Close Floating RoofhgagÎncă nu există evaluări

- Employment Under Dubai Electricity & Water AuthorityDocument6 paginiEmployment Under Dubai Electricity & Water AuthorityMominur Rahman ShohagÎncă nu există evaluări

- Question 1: As Shown in Figure 1. A 6-Pole, Long-Shunt Lap-Wound CompoundDocument4 paginiQuestion 1: As Shown in Figure 1. A 6-Pole, Long-Shunt Lap-Wound Compoundالموعظة الحسنه chanelÎncă nu există evaluări

- Dyn ST 70 Ser 2Document12 paginiDyn ST 70 Ser 2alexgirard11735100% (1)