
Incremental Association Rule Mining Using Promising Frequent Itemset Algorithm

Ratchadaporn Amornchewin
Faculty of Information Technology, King Mongkut's Institute of Technology Ladkrabang, Bangkok, 10520 Thailand
ramornchewin@yahoo.com

Worapoj Kreesuradej
Faculty of Information Technology, King Mongkut's Institute of Technology Ladkrabang, Bangkok, 10520 Thailand
worapoj@it.kmitl.ac.th

1-4244-0983-7/07/$25.00 © 2007 IEEE. ICICS 2007

Abstract: Association rule discovery is an important area of data mining. In dynamic databases, new transactions are appended as time advances. This may introduce new association rules and invalidate some existing ones. Thus, the maintenance of association rules for dynamic databases is an important problem. In this paper, the promising frequent itemset algorithm, an incremental algorithm, is proposed to deal with this problem. The proposed algorithm uses the maximum support count of 1-itemsets obtained from previous mining to estimate the infrequent itemsets of an original database, called promising itemsets, that are capable of becoming frequent itemsets when new transactions are inserted into the original database. Thus, the algorithm can reduce the number of scans of the original database. As a result, the algorithm has a shorter execution time than previous methods. This paper also conducts simulation experiments to show the performance of the proposed algorithm. The simulation results show that the proposed algorithm runs faster than the FUP algorithm.

Keywords: association rule, maintaining association rules, incremental association rule

I. INTRODUCTION

Data mining is one of the processes of Knowledge Discovery in Databases (KDD) and is used for extracting information or patterns from large databases. One major application area of data mining is association rule mining [1], which discovers hidden knowledge in a database. The association rule mining problem is to find all rules of the form X => Y, where X and Y are itemsets, i.e. sets of items drawn from the set of all items I. Association rule discovery is usually decomposed into two major steps. The first step finds all large itemsets whose support exceeds a minimum support threshold, and the second step finds all association rules whose confidence exceeds a minimum confidence threshold.

However, a database is dynamic when new transactions are inserted into it. This may introduce new association rules and invalidate some existing ones. As a brute force approach, Apriori may be reapplied to mine the whole database whenever it changes. However, this approach is very costly even if only a small number of new transactions is inserted. Thus, association rule mining for a dynamic database is an important problem. Several research works [7, 8, 9, 10, 11] have proposed incremental algorithms to deal with this problem. Related work is reviewed in Section II.

In this paper, a new incremental algorithm, called the promising frequent itemset algorithm, is introduced. The goal of this work is to update association rules efficiently after a nontrivial number of new records have been added to a database. Our approach introduces the promising frequent itemset: an infrequent itemset that is capable of becoming a frequent itemset after a number of new records have been added to the database. This can reduce the number of scans of the original database. As a result, the algorithm has a shorter execution time than previous methods.

The remainder of this paper is organized as follows. We briefly review related work in Section II. The promising frequent itemset algorithm is described in Section III. We evaluate its performance in Section IV. Finally, we conclude in Section V.

II. RELATED WORK

An influential algorithm for association rule mining is Apriori [2]. Apriori computes the frequent itemsets in a large database through several iterations based on prior knowledge. Each iteration has two steps: a join step and a prune step. For an itemset to be frequent, its support must be at least a user-specified minimum support threshold. Association rules are then derived from the frequent itemsets, and their confidence must be at least a user-specified minimum confidence.

For dynamic databases, several incremental updating techniques have been developed for mining association rules. One of the earlier works on incremental association rule mining is the FUP algorithm presented by Cheung et al. [3]. FUP is the first incremental updating technique for maintaining association rules when new data are inserted into a database. Based on the framework of the Apriori algorithm, FUP computes frequent itemsets using the large itemsets found at the previous iteration. The major idea of FUP is to reuse the frequent itemsets of the previous mining and update them with the incremental database. The key to the performance of FUP is a pruning technique that reduces the number of candidate sets in the update process. FUP2 [4] extends FUP to handle all update cases, in which transactions are added to or deleted from a database.

Ayan et al. [5] present an algorithm called UWEP (Update With Early Pruning). UWEP follows the approaches of FUP and the partition algorithm. It employs a dynamic look-ahead strategy in updating existing large itemsets by detecting and pruning supersets of large itemsets of the original database that will no longer remain large in the updated database. UWEP scans the original database and the incremental database at most once each. UWEP generates a smaller candidate set from the set of itemsets that are large in both the original and the incremental database.

Furthermore, the negative border algorithm [6], an incremental mining algorithm based on FUP, reduces the scanning of the original database and keeps track of the large itemsets and the negative border when transactions are added to or deleted from the database. The negative border consists of all itemsets that are candidates of the level-wise method. An itemset is in the negative border if it does not have enough support but all of its subsets are frequent. If the negative border of the large itemsets expands, this algorithm requires a full scan of the whole database. This is the case because the negative border algorithm does not cover all large itemsets of the updated database.

III. PROMISING FREQUENT ITEMSET ALGORITHM

We observe that an itemset can be a frequent itemset in the updated database only if it is a large itemset in the original database or in the incremental database. The main problem of incremental updating is that changes in the frequent itemsets force the mining to be re-executed on the original database.

In this paper we present a new idea to avoid scanning the original database. We compute not only the frequent itemsets but also the itemsets that may potentially become large after an incremental database is added, called promising frequent itemsets.

The algorithm finds all possible promising frequent k-itemsets in the original database, continuing to iteration k as long as the number of frequent members is greater than or equal to k. This idea is intended to guarantee that the promising frequent itemset algorithm covers all frequent itemsets that occur in the updated database. Thus, new transactions can be processed quickly, because the update can use the information already extracted from the original database.

In this section we describe our algorithm in two subsections. In our approach, the original database is first mined for all frequent itemsets and promising frequent itemsets. Second, each incremental dataset is mined and used to update the frequent and promising frequent itemsets. As a result of updating, some infrequent itemsets or new itemsets may become frequent itemsets.

A. Original database Discovery

A dynamic database allows new transactions to be inserted. This may not only invalidate existing association rules but also activate new association rules. Maintaining association rules for a dynamic database is therefore an important issue, and this paper proposes a new algorithm to deal with such updates. Our assumption for the new algorithm is that the statistics of new transactions change slowly from those of the original transactions. Under this assumption, the statistics of old transactions, obtained from previous mining, can be used to approximate those of new transactions. Therefore, the support count of itemsets obtained from previous mining may differ only slightly from the support count of itemsets after new transactions are inserted into an original database that contains old transactions. The new algorithm uses the maximum support count of 1-itemsets obtained from previous mining to estimate the infrequent itemsets of an original database that are capable of becoming frequent itemsets when new transactions are inserted into the original database. Given the maximum support count and the maximum number of new transactions that may be inserted into the original database, the support count that an infrequent itemset needs in order to qualify as a potential frequent itemset, i.e. min_PL, is given by equation 1:

$$\mathit{min\_sup}_{DB} - \frac{\mathit{maxsupp}}{\mathit{total\ size}} \times \mathit{inc\_size} \;\le\; \mathit{min\_PL} \;<\; \mathit{min\_sup}_{DB} \qquad (1)$$

where min_sup(DB) is the minimum support count for the original database, maxsupp is the maximum support count of 1-itemsets, total size is the number of transactions of the original database, and inc_size is the maximum number of new transactions.

Here, a promising frequent itemset is defined as follows:

Definition. A promising frequent itemset is an infrequent itemset that satisfies equation 1, i.e. an itemset whose support count is at least min_PL but less than min_sup(DB).

As an example, the original database shown in Figure 1 has 10 transactions, i.e. |DB| = 10. Then, three new transactions are inserted into the original database, i.e. |db| = 3. Here, the minimum support count for mining association rules is set to 4 (40 percent). From Figure 1, the maximum support count of 1-itemsets of the original database is 7. min_PL is computed as follows:

$$\mathit{min\_PL} = 4 - \frac{7}{10} \times 3 = 1.9 \approx 2 \qquad (2)$$

According to equation 2, any itemset with a support count of at least 2 but less than 4 is a promising frequent itemset. Thus, the frequent 1-itemsets are {A, B, C} and the promising frequent 1-itemsets are {D, E}.

Figure 1. Transaction data and candidate 1-itemsets

In this paper, the Apriori algorithm is applied to find all possible frequent k-itemsets and promising frequent k-itemsets. Apriori scans all transactions of the original database in each iteration, which consists of two steps: a join step and a prune step. Unlike the typical Apriori algorithm, items in both the frequent k-itemsets and the promising frequent k-itemsets can be joined together in the join step. For a frequent itemset, the support count must be at least the user-specified minimum support count, and for a promising frequent itemset, the support count must be at least min_PL but less than the user-specified minimum support count. As examples, Figures 2 and 3 show the promising frequent and frequent 2-itemsets and the promising frequent and frequent 3-itemsets, respectively.

Figure 2. Candidate, frequent and promising frequent 2-itemsets

Figure 3. Candidate, frequent and promising frequent 3-itemsets

B. Updating frequent and promising frequent itemsets

When new transactions are added to an original database, an old frequent k-itemset could become infrequent and an old promising frequent k-itemset could become frequent. This introduces new association rules and invalidates some existing ones. To deal with this problem, all k-itemsets must be updated when new transactions are added to an original database. In this section, we explain how to update all old itemsets.

The size of the updated database increases when new transactions are inserted into the original database. Thus, min_PL must be recalculated for the new size of the updated database. min_PL(update), the threshold for the updated database DB ∪ db, is computed as follows:

$$\mathit{min\_PL}_{DB \cup db} = \mathit{min\_sup}_{DB \cup db} - \frac{\mathit{maxsupp}}{\mathit{total\ size}} \times \mathit{inc\_size} \qquad (3)$$

Then, if any k-itemset has a support count greater than or equal to min_sup(DB ∪ db), it is moved to the frequent k-itemsets of the updated database. Otherwise, if its support count is less than min_sup(DB ∪ db) but greater than or equal to min_PL(update), it is moved to the promising frequent k-itemsets of the updated database. The following algorithms are developed to update the frequent and promising frequent k-itemsets of an updated database.

The first algorithm, shown in Figure 4, updates the frequent and promising frequent 1-itemsets. After the support counts of the candidate 1-itemsets of an incremental database are obtained, the support count of each candidate 1-itemset is added to that of the corresponding 1-itemset of the original database. Then, if any item has a support count greater than or equal to min_sup(DB ∪ db), it is moved to the frequent 1-itemsets of the updated database. Otherwise, if its support count is less than min_sup(DB ∪ db) but greater than or equal to min_PL(update), it is moved to the promising frequent 1-itemsets of the updated database. In addition, if any item is a new frequent item or a new promising frequent item, it is added to the set Temp1. Then, Temp1 is joined and pruned with both the promising frequent k-itemsets and the frequent k-itemsets to obtain Temp_newCk. The algorithm for joining and pruning items is shown in Figure 5.

The k-itemsets of Temp_newCk are counted in a scan of the incremental database. A k-itemset of Temp_newCk can become a frequent itemset in the updated database only if it is a frequent itemset in the incremental database. Thus, if a k-itemset of Temp_newCk has a support count greater than or equal to min_sup(db), it is moved to the estimated frequent k-itemsets. Similarly, a k-itemset of Temp_newCk can become a promising frequent itemset in the updated database only if it is a promising frequent itemset in the incremental database. Thus, if a k-itemset of Temp_newCk has a support count less than min_sup(db) but greater than or equal to min_PL(update) or min_PL(DB), it is moved to the estimated promising frequent k-itemsets. Then, both the estimated promising frequent k-itemsets and the estimated frequent k-itemsets are added to Temp_scanDB. Figure 6 shows the algorithm for updating k-itemsets for k >= 2.

As the last phase of the incremental algorithm, the k-itemsets of Temp_scanDB are counted in a scan of the original database to update their support counts. As in the previous cases, if any k-itemset has a support count greater than or equal to min_sup(DB ∪ db), it is moved to the frequent k-itemsets, and if its support count is less than min_sup(DB ∪ db) but greater than or equal to min_PL(update), it is moved to the promising frequent k-itemsets. The algorithm for finding the support counts of k-itemsets for k >= 2 is shown in Figure 7.
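The threshold arithmetic of equations 1 and 2 and the split into frequent and promising frequent itemsets can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names `min_pl` and `classify` are hypothetical, and the per-item support counts are made up so that they agree with the stated maximum of 7 and with the sets {A, B, C} and {D, E}; the actual data of Figure 1 is not reproduced here.

```python
from fractions import Fraction


def min_pl(min_sup, maxsupp, total_size, inc_size):
    """Lower bound of equation 1: min_sup - (maxsupp / total_size) * inc_size."""
    return min_sup - Fraction(maxsupp, total_size) * inc_size


def classify(support, min_sup, pl_threshold):
    """Split itemsets into frequent and promising frequent by support count."""
    frequent = {x for x, c in support.items() if c >= min_sup}
    promising = {x for x, c in support.items() if pl_threshold <= c < min_sup}
    return frequent, promising


# Worked example from the text: |DB| = 10, |db| = 3, min_sup = 4, maxsupp = 7.
threshold = min_pl(min_sup=4, maxsupp=7, total_size=10, inc_size=3)

# Hypothetical 1-itemset support counts (assumed, not taken from Figure 1).
counts = {"A": 7, "B": 6, "C": 5, "D": 3, "E": 2, "F": 1}
frequent, promising = classify(counts, min_sup=4, pl_threshold=threshold)

print(float(threshold))   # 1.9
print(sorted(frequent))   # ['A', 'B', 'C']
print(sorted(promising))  # ['D', 'E']
```

Because support counts are integers, comparing against the exact value 1.9 selects the same itemsets as the rounded threshold of 2 used in the text. Exact fractions are used only to avoid floating-point noise in the comparison.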

Algorithm 1 Updating frequent and promising frequent 1-itemset
Input:
(1) L1DB: the set of all frequent 1-itemsets in the original database,
(2) PL1DB: the set of all promising frequent 1-itemsets in the original database,
(3) C1DB: candidate 1-itemsets of the original database,
(4) C1db: candidate 1-itemsets of the incremental database.
Output:
(1) L1(DB ∪ db): frequent itemsets in the updated database,
(2) PL1(DB ∪ db): promising frequent itemsets in the updated database,
(3) new frequent itemsets: new frequent itemsets in the updated database,
(4) new promising frequent itemsets: new promising frequent itemsets in the updated database,
(5) Temp_newCk: new candidate 2-itemsets in the updated database.

C1db = all 1-itemsets in db with support > 0
k = 1
While C1db > 0 do
    For each X ∈ C1DB do
        X.support(DB ∪ db) = X.supportDB + X.supportdb
        If (X ∉ L1DB and X ∉ PL1DB) and (X.support(DB ∪ db) >= min_sup(DB ∪ db)) Then
            Add X to L1(DB ∪ db)
            Add X to temp1
    For each X ∈ L1DB do
        If X.support(DB ∪ db) >= min_sup(DB ∪ db) Then
            Add X to L1(DB ∪ db)
        Else
            If X.support(DB ∪ db) >= min_PL(DB ∪ db) Then
                Add X to PL1(DB ∪ db)
    For each X ∈ PL1DB do
        If X.support(DB ∪ db) >= min_sup(DB ∪ db) Then
            Add X to L1(DB ∪ db)
            Add X to temp1
        Else
            If X.support(DB ∪ db) >= min_PL(DB ∪ db) Then
                Add X to PL1(DB ∪ db)
    For each X ∈ C1DB do
        Add X to C1(DB ∪ db)
        If X.supportdb >= min_sup(DB ∪ db) (new item in db) Then
            Add X to L1(DB ∪ db)
            Add X to temp1
        Else
            If X.support(db) >= min_PL(DB ∪ db) Then
                Add X to PL1(DB ∪ db)
                Add X to temp1
    If temp1 ≠ {} Then
        Y = temp1
        C2(DB ∪ db)(new) = gen_newcandidate(Y)
        Clear temp1
        Add C2(DB ∪ db)(new) to Temp_newCk
    k = k + 1

Figure 4. Updating frequent and promising frequent 1-itemset algorithm

Algorithm 2 Gen_newcandidate
Input:
(1) Lk(DB ∪ db): frequent k-itemsets in the updated database,
(2) PLk(DB ∪ db): promising k-itemsets in the updated database,
(3) Temp1: new frequent k-itemsets in the updated database.
Output:
(1) new Ck+1: new candidate (k+1)-itemsets in the updated database.

If k <= (length(L) + length(PL))
    For each Y ∈ Temp1
        Ck+1(new) = Y * (Lk(DB ∪ db) ∪ PLk(DB ∪ db))
    For c ∈ Ck+1(new)
        Delete c from Ck+1(new) if any k-subset of c is not in Lk or PLk

Figure 5. Generating new candidate itemset algorithm

Algorithm 3 Update frequent and promising frequent itemsets for k >= 2
Input:
(1) LkDB: frequent k-itemsets in the original database,
(2) PLkDB: promising frequent k-itemsets in the original database,
(3) Temp_newCk: new candidate k-itemsets in the updated database.
Output:
(1) Lk(DB ∪ db): frequent k-itemsets in the updated database,
(2) PLk(DB ∪ db): promising frequent k-itemsets in the updated database,
(3) Temp_scanDB: estimated frequent k-itemsets and estimated promising frequent k-itemsets in the updated database,
(4) Temp1: new estimated frequent k-itemsets and new estimated promising frequent k-itemsets in the updated database,
(5) Temp_newCk: new candidate (k+1)-itemsets in the updated database.

k = 2
While k <= (length(Lk) + length(PLk)) do
    Scan db for (Lk), (PLk) and (items) Temp_newCk
    X.support(DB ∪ db) = X.supportDB + X.supportdb
    For each X ∈ LkDB do
        If X.support(DB ∪ db) >= min_sup(DB ∪ db) Then
            Add X to Lk(DB ∪ db)
        Else
            If X.support(DB ∪ db) >= min_PL(DB ∪ db) Then
                Add X to PLk(DB ∪ db)
    For each X ∈ PLkDB do
        If X.support(DB ∪ db) >= min_sup(DB ∪ db) Then
            Add X to Lk(DB ∪ db)
            Add X to temp1
        Else
            If X.support(DB ∪ db) >= min_PL(DB ∪ db) Then
                Add X to PLk(DB ∪ db)
    For each Y ∈ Temp_newCk do
        If Y.support(db) >= min_sup(db) Then
            Add Y to Temp_scanDB(Lk(DB ∪ db))
            Add Y to Temp1(Lk(DB ∪ db))
        Else
            If (Y.support(db) >= min_PL(DB ∪ db) or Y.support(db) >= min_PL(DB)) Then
                Add Y to Temp_scanDB(PLk(DB ∪ db))
                Add Y to Temp1(PLk(DB ∪ db))
    Clear Temp_newCk
    If Temp1 ≠ {} Then
        Y = Temp1
        Ck+1(DB ∪ db)(new) = gen_newcandidate(Y)
        Clear Temp1
        Add Ck+1(DB ∪ db)(new) to Temp_newCk
    k = k + 1
If Temp_scanDB ≠ {} Then Find_SuppcountDB

Figure 6. Update k >= 2 itemset algorithm
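The join and prune steps of Gen_newcandidate (Algorithm 2) can be illustrated with a short Python sketch. It is a hedged reading of the pseudocode rather than the authors' code: the function name `gen_new_candidates` and the example itemsets are hypothetical, and the prune step is assumed to follow the standard Apriori rule, dropping a candidate when any of its k-item subsets is neither frequent nor promising frequent.

```python
from itertools import combinations


def gen_new_candidates(temp1, frequent_k, promising_k):
    """Join new k-itemsets with the known k-itemsets, then prune.

    All itemsets are frozensets of the same size k.
    """
    known = set(frequent_k) | set(promising_k)
    if not known:
        return set()
    k = len(next(iter(known)))

    # Join step: union each new itemset with each known itemset and keep
    # only the unions that contain exactly k + 1 items.
    joined = {y | x for y in temp1 for x in known if len(y | x) == k + 1}

    # Prune step (assumed Apriori rule): keep a candidate only if every
    # one of its k-item subsets is frequent or promising frequent.
    return {
        c for c in joined
        if all(frozenset(s) in known for s in combinations(c, k))
    }


# Hypothetical 2-itemsets of an updated database; AD and BD are the new ones.
frequent_2 = {frozenset("AB"), frozenset("AD"), frozenset("BD")}
promising_2 = {frozenset("AC")}
temp1 = {frozenset("AD"), frozenset("BD")}

candidates = gen_new_candidates(temp1, frequent_2, promising_2)
print(sorted("".join(sorted(c)) for c in candidates))  # ['ABD']
```

In this example the join produces ABD and ACD. ACD is pruned because its subset CD is neither frequent nor promising frequent, so only ABD needs to be counted in the incremental database.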

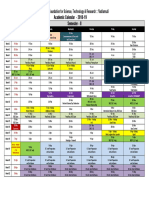

Algorithm 4 Find_SuppcountDB T10I4D10 FUP 7%

Input : 14000

(1) Lk(DBdb) Temp_scanDB : Estimated frequent k-itemset, Promising 7%

12000

(2) PLk(DBdb) Temp_scanDB : Estimated promising frequent k-itemset FUP 6%

Execution time

10000

Output :

(1) L (DBdb) : frequent k-itemset in updated database, 8000 Promising 6%

(2) PL(DBdb) : promising frequent k-itemset in updated database 6000 i FUP 5%

4000

1 For each W Temp_scanDB 2000

Promising 5%

2 Scan DB for W

0 FUP 4%

3 W.support(DBdb) = W.supportDB + W.supportdb

0% 20% 40% 60%

4 If W.support(DBdb) >= min_sup(DBdb) Then Promising 4%

Incremental size

5 Add W to Lk (DBdb)

6 Else Figure 8. Execution Time comparison

7 If W.support(DBdb) >= min_PL(DBdb) Then

8 Add W to PLk (DBdb)

9 Clear Temp_scamDB

V. CONCLUSIONS

Figure 7. Finding support count in an original database algorithm We have proposed promising frequent algorithm for

incremental association rule mining. Assuming that the two

IV. EXPERIMENT thresholds, minimum support and confidence, do not change,

the promising frequent algorithm can guarantee to discover

To evaluate the performance of promising frequent frequent itemsets. From the experiment, our algorithm has

algorithm, the algorithm is implemented and tested on a PC better running time than that of FUP algorithm. In the future,

with a 2.8 GHz Pentium 4 processor, and 1 GB main memory. further researches and experiments on the proposed algorithm

The experiments are conducted on a synthetic dataset, called will be presented.

T10I4D10K. The technique for generating the dataset is

proposed by Agrawal and etc. [1]. The synthetic dataset REFERENCES

comprises 20,000 transactions over 100 unique items, each

[1] R. Agrawal., T. Imielinski, and A. Swami, Mining association rules

transaction has 10 items on average and the maximal size between sets of items in large databases, In Proc. of the ACM

itemset is 4 SIGMOD Int'l Conf. on Management of Data (A CM SIGMOD '93),

Washington, USA,May 1993.

Firstly, the proposed algorithm is used to find association

[2] R. Agrawal and R. Srikant, Fast algorithms for mining association

rules from an original database of 10,000 transactions. Then, rules. In Proceedings of 20 th Intl Conf. on Very Large Databases

several sizes of incremental databases, i.e. 10%, 20%, 30%, (VLDB'94), pages487-499,Santiago, Chile, 1994.

40% and 50% of the original database, are added to the original [3] D. W. Cheung, J. Han, V. T. Ng, and C. Y. Wong, Maintenance of

database. For comparison purpose, FUP algorithm is also used discovered association rules in large databases: An incremental updating

to find association rules from the same original database and technique,In 12th IEEE International Conference on Data

the same incremental databases. The experimental results with Engineering,1996.

various minimum support thresholds are shown in Table1 and [4] D. W. Cheung, S.D. Lee, B. Kao, A General incremental technique for

Figure 8. From the results, the proposed algorithm has better maintaining discovered association rules, In Proceedings of the 5 th

Intl. Conf. on Database Systems for Advanced Applications

running time than that of FUP algorithm. (DASFAA'97), Melbourne, Australia, April 1997.

[5] N.F. Ayn, A.U. Tansel, and E. Arun, An efficient algorithm to update

TABLE I. EXECUTION TIME WITH VARYING SIZE OF INCREMENTAL large itemsets with early pruning. Proceedings of the Fifth ACM

DATABASE

SIGKDD International Conference on Knowledge Discovery and Data

Execution time (sec.) Mining, San Diego, August 1999.

Min_sup Algorithm Percent of Incremental database size [6] S. Thomas, S. Bodagala, K. Alsabti, and S. Ranka, An efficient

10% 20% 30% 40% 50% algorithm for the incremental updation of association rules in large

Promising databases, In Proceedings of the 3rd Intl. Conf. on Knowledge

Frequent 185.2 546 1047 1563.4 1935.5 Discovery and Data Mining (KDD'97), New Port Beach,

4% Itemset California,1997.

FUP 2521.5 5012 7110.7 9996.3 11539 [7] C. H. Lee, C. R. Lin , and M. S. Chen, Sliding-Window Filtering: An

Efficient Algorithm for Incremental Mining , ACM , 2000

Promising [8] C.C. Chang, Y.C. Li and J.S. Lee, An efficient algorithm for

Frequent 191.26 430.11 632.06 855.39 1048 incremental mining of association rules, Proceedings of the 15th

5% Itemset international workshop on research issues in data engineering: stream

FUP 2023.6 4162.4 5970.4 8678.1 10681 data mining and applications (RIDE-SDMA05) ,IEEE, 2005

[9] A. A. Veloso et al., Mining frequent itemsets in evolving databases, In

Promising

Proc. 2nd SIAM Intl. Conf. on Data Mining, Arlington, VA, Apr. 2002

Frequent 93.562 180.41 304.56 366.78 452.7

6% Itemset [10] K.L. Lee, G. Lee and A. L.P. Chen, Efficient Graph-based algorithm

for discovering and maintaining knowledge in large database,

FUP 1680.5 3868 5446.8 6889.5 9516.3 Proceedings of the third pacific-asia conference on methodologies for

Promising knowledge discovert and data mining, April 1999.

Frequent 52.985 98.296 463.22 1808.4 2147.1 [11] N. L. Sarda and N. V. Srinivas, An adaptive algorithm for incremental.

7% Itemset mining of association rules, In Proc. 9th Intl.Workshop on Database

and Expert System Applications,Vienna, Austria, pp. 240-245, Aug

FUP 1350.6 2723.7 4873.3 5972.3 8856.3 1998.


- Unary Association Rules Survey of Mining Services: AbstractDocument4 paginiUnary Association Rules Survey of Mining Services: AbstractIjctJournalsÎncă nu există evaluări

- Efficient Parallel Data Mining With The Apriori Algorithm On FpgasDocument16 paginiEfficient Parallel Data Mining With The Apriori Algorithm On FpgasKumara PrasannaÎncă nu există evaluări

- Advanced Eclat Algorithm For Frequent Itemsets GenerationDocument19 paginiAdvanced Eclat Algorithm For Frequent Itemsets GenerationSabaniÎncă nu există evaluări

- Deductive Database GroupworkDocument4 paginiDeductive Database Groupworkclinton migonoÎncă nu există evaluări

- Parallel Data Mining of Association RulesDocument10 paginiParallel Data Mining of Association RulesAvinash MudunuriÎncă nu există evaluări

- Data Mining Using Clouds: An Experimental Implementation of Apriori Over MapreduceDocument8 paginiData Mining Using Clouds: An Experimental Implementation of Apriori Over MapreduceSudhansu Shekhar PatraÎncă nu există evaluări

- Ijcs 2016 0303008 PDFDocument16 paginiIjcs 2016 0303008 PDFeditorinchiefijcsÎncă nu există evaluări

- Mtech Project Seminar1Document36 paginiMtech Project Seminar1patealÎncă nu există evaluări

- An Effective Heuristic Approach For Hiding Sensitive Patterns in DatabasesDocument6 paginiAn Effective Heuristic Approach For Hiding Sensitive Patterns in DatabasesInternational Organization of Scientific Research (IOSR)Încă nu există evaluări

- Literature Review On Mining High Utility Itemset From Transactional DatabaseDocument3 paginiLiterature Review On Mining High Utility Itemset From Transactional DatabaseInternational Journal of Application or Innovation in Engineering & ManagementÎncă nu există evaluări

- 5615ijdkp06 PDFDocument8 pagini5615ijdkp06 PDFAmaranatha Reddy PÎncă nu există evaluări

- Sophistication in Order To PerformDocument1 paginăSophistication in Order To PerformSrinivasÎncă nu există evaluări

- Vol 1,2Document2 paginiVol 1,2Amaranatha Reddy PÎncă nu există evaluări

- Volunteer Details Update UsersDocument4 paginiVolunteer Details Update UsersAmaranatha Reddy PÎncă nu există evaluări

- Response To ReviewersDocument4 paginiResponse To ReviewersAmaranatha Reddy PÎncă nu există evaluări

- Alq Ef: !e LuuDocument2 paginiAlq Ef: !e LuuAmaranatha Reddy PÎncă nu există evaluări

- RevisedScheduleNGRanga PDFDocument3 paginiRevisedScheduleNGRanga PDFAmaranatha Reddy PÎncă nu există evaluări

- E Commerce: AN Indian Perspective, Fifth Edition by P.T. Joseph, S.JDocument3 paginiE Commerce: AN Indian Perspective, Fifth Edition by P.T. Joseph, S.JAmaranatha Reddy PÎncă nu există evaluări

- Life Insurance Corporation of India Detailed Policy Status ReportDocument1 paginăLife Insurance Corporation of India Detailed Policy Status ReportAmaranatha Reddy PÎncă nu există evaluări

- S/o P.Obula Reddy Lavanur (Vi&po) Kondapuram (Mandal) Kadapa District +91-9701427020Document2 paginiS/o P.Obula Reddy Lavanur (Vi&po) Kondapuram (Mandal) Kadapa District +91-9701427020Amaranatha Reddy PÎncă nu există evaluări

- Javascript: Language Fundamentals IDocument53 paginiJavascript: Language Fundamentals IAmaranatha Reddy PÎncă nu există evaluări

- COMPUTER GRAPHICS (Department Elective) MID-1 ANALYSISDocument1 paginăCOMPUTER GRAPHICS (Department Elective) MID-1 ANALYSISAmaranatha Reddy PÎncă nu există evaluări

- Object-Oriented Programming Through JavaDocument12 paginiObject-Oriented Programming Through JavaAmaranatha Reddy PÎncă nu există evaluări

- Open Source GIS, DATAMINING and Statistics: Dr. V.V. Venkata RamanaDocument197 paginiOpen Source GIS, DATAMINING and Statistics: Dr. V.V. Venkata RamanaAmaranatha Reddy PÎncă nu există evaluări

- Moodle Content Status 21-01-2019Document4 paginiMoodle Content Status 21-01-2019Amaranatha Reddy PÎncă nu există evaluări

- 1.b.tech Academic Calendar Sem II 2018 19Document1 pagină1.b.tech Academic Calendar Sem II 2018 19Amaranatha Reddy PÎncă nu există evaluări

- Activity Wise Admin Duties - 2018-19 (1) - UpdatedDocument4 paginiActivity Wise Admin Duties - 2018-19 (1) - UpdatedAmaranatha Reddy PÎncă nu există evaluări

- Web TechnologiesDocument25 paginiWeb TechnologiesAmaranatha Reddy PÎncă nu există evaluări

- Memory Based ReasoningDocument12 paginiMemory Based ReasoningBharti Sharma50% (2)

- Student's Performance Prediction Using Weighted Modified ID3 AlgorithmDocument6 paginiStudent's Performance Prediction Using Weighted Modified ID3 AlgorithmijsretÎncă nu există evaluări

- Franco 2018Document13 paginiFranco 2018kalokosÎncă nu există evaluări

- BDA Worksheet 5 ArmanDocument5 paginiBDA Worksheet 5 Armanarman khanÎncă nu există evaluări

- 1.1 Overview: Data Mining Based Risk Estimation of Road AccidentsDocument61 pagini1.1 Overview: Data Mining Based Risk Estimation of Road AccidentsHarshitha KhandelwalÎncă nu există evaluări

- 10-601 Machine Learning: Homework 7: InstructionsDocument5 pagini10-601 Machine Learning: Homework 7: InstructionsSasanka Sekhar SahuÎncă nu există evaluări

- CS 228: Database Management SystemDocument46 paginiCS 228: Database Management SystemSaimo MghaseÎncă nu există evaluări

- Clustering Algorithm: A Fundamental Operation in Data MiningDocument44 paginiClustering Algorithm: A Fundamental Operation in Data MiningMaelo BorinqueñoÎncă nu există evaluări

- IDSA - Lesson 1Document10 paginiIDSA - Lesson 1orionsiguenzaÎncă nu există evaluări

- CS583 - Data Mining and Text MiningDocument22 paginiCS583 - Data Mining and Text MiningUsman worksÎncă nu există evaluări

- DBSCANDocument5 paginiDBSCANRandom PersonÎncă nu există evaluări

- Assessment IDocument16 paginiAssessment IIshita JindalÎncă nu există evaluări

- A Survey On Crop Prediction Using Machine Learning ApproachDocument4 paginiA Survey On Crop Prediction Using Machine Learning ApproachKOLLIPALLI HARIPRASADÎncă nu există evaluări

- Deep Convolutional Autoencoder For Assessment ofDocument7 paginiDeep Convolutional Autoencoder For Assessment ofRadim SlovakÎncă nu există evaluări

- Zero-Shot Learning Approach To Adaptive Cybersecurity Using Explainable AIDocument10 paginiZero-Shot Learning Approach To Adaptive Cybersecurity Using Explainable AIKhalid SyfullahÎncă nu există evaluări

- 2 & 16 Mark Questions and Answers: Enterprise Resource PlanningDocument33 pagini2 & 16 Mark Questions and Answers: Enterprise Resource PlanningBalachandar Ramamurthy82% (11)

- ML NotesDocument60 paginiML NotesshilpaÎncă nu există evaluări

- DWDM 01 IntroductionDocument43 paginiDWDM 01 IntroductionPranav A.RÎncă nu există evaluări

- TAVANI (1999) Informational Privacy, Data Mining, and The InternetDocument10 paginiTAVANI (1999) Informational Privacy, Data Mining, and The InternetRoberto Andrés Navarro DolmestchÎncă nu există evaluări

- Introduction To Information Technology Turban, Rainer and Potter John Wiley & Sons, IncDocument38 paginiIntroduction To Information Technology Turban, Rainer and Potter John Wiley & Sons, IncAKGÎncă nu există evaluări

- 1 - A Survey of Intrusion Detection Models Based On NSL-KDD Data Set (IEEE)Document6 pagini1 - A Survey of Intrusion Detection Models Based On NSL-KDD Data Set (IEEE)Bach NguyenÎncă nu există evaluări

- Clustering: K-Means, Agglomerative, DBSCAN: Tan, Steinbach, KumarDocument45 paginiClustering: K-Means, Agglomerative, DBSCAN: Tan, Steinbach, Kumarhub23Încă nu există evaluări

- 1.1. Crime: Chapter-1Document24 pagini1.1. Crime: Chapter-1sandesh devaramaneÎncă nu există evaluări

- Paper 1-Machine Learning For Bioclimatic ModellingDocument8 paginiPaper 1-Machine Learning For Bioclimatic ModellingEditor IJACSAÎncă nu există evaluări

- Unit 4Document65 paginiUnit 4lghmshariÎncă nu există evaluări

- SlidesDocument27 paginiSlidesJuNaid SheikhÎncă nu există evaluări