A Neural Network Approach Based on Agent to Predict Stock Performance

Yanhua Shao1,2, Jianshi Li1, Feng Tian1, Tianjian Chen1, Jinrong Wang1

1. College of Computer Science & Technology, Guizhou University, Guiyang, Guizhou Province, China (online-8j@163.com)

2. College of Economics & Management, Guizhou University for Nationalities, Guiyang, Guizhou Province, China (sh_yh2002@163.com)

Abstract-Predicting stock performance is a large and potentially profitable area of study. Many studies in the literature report the use of artificial neural networks to model stock performance in western countries. However, little work has been reported on agent-based neural network approaches. This paper focuses on the development and simulation of a stock market performance model that uses an agent-based neural network approach. The model is easy to understand and can easily be implemented as a software simulation. We first discuss the basic concepts behind this type of agent-based neural network, and then turn to some application ideas.

Keywords-artificial neural network; agent; environment-rules-agents scheme; cross target method

I. INTRODUCTION

Because of their ability to deal with uncertain, fuzzy, or insufficient data that fluctuate rapidly over very short periods of time, neural networks have become a very important method for prediction [Ref. 1]. Applications include business forecasting, credit scoring, bond rating, business failure prediction, medicine, pattern recognition and image processing. A large number of studies in the literature report the use of ANNs in modeling stock prices. However, little work has been reported on agent-based neural network approaches.

The stock market is a special example of a complex economic system [Ref. 2, 3, 4]. Conventional stock market models are based on the assumption that traders act rationally in accordance with the market. Under that assumption, the stock market appears to be a dead physical system. However, the assumption is far from the real stock market: people often characterize the market in language that expresses human psychology, such as a prolonged downturn or a frenzied rise. Furthermore, phenomena like bubbles and crashes can emerge as collective phenomena. In this paper we emphasize how to replace the agent with a neural network, and how to use a neural network approach for the self-development of consistency in agents' behavior.

II. NEURAL NETWORK

Neural networks are structures very close, in econometric terms, to nonlinear multidimensional regression functions:

$$y = f(B\,f(Ax)).$$

We can imagine a class of simple Artificial Neural Networks (ANN), the multilayer feed forward network, as a system of connections linking several processing units of the kind shown in Fig. 1.

[Figure 1: a processing element with inputs $a_0, a_1, \dots, a_n$ and weights $w_{k0}, w_{k1}, \dots, w_{kn}$, computing the weighted sum $\sum_{s=0}^{n} a_s w_{ks}$ and producing an output between 0 and 1]

Fig. 1. A node, or artificial neuron.

The processing element (PE) can be considered an oversimplified sketch of a natural neuron, where the parameters $w_{kj}$ represent the strength of the synapses. The $a_j$ values (activations) are the inputs of the PE, or neuron, which calculates their weighted sum (this operation explains the conventional name "weight" for the parameters of a function in ANN jargon). The same operation, in matrix algebra, is performed by the product $Ax$, where the vector $x$ contains the $a_j$ activations and the matrix $A$ the $w_{kj}$ parameters.

The PE produces an output by applying a nonlinear transformation to the weighted sum, as shown in equation (1), where $k$ is the parameter that determines how close the function is to a crude step function.

$$f(z) = \frac{1}{1 + e^{-kz}} \qquad (1)$$

Each row of $A$ and $B$ contains the weights of a PE. To find the $A$ and $B$ values (i.e. the ANN parameters) we have to use a numerical approximation with a simplified gradient method, the so-called back propagation method [Ref. 5, 6] (the name comes from propagating the error measures back from the output layer to the hidden one), starting with random parameters and adapting the performance of the ANN function

978-1-4244-2108-4/08/$25.00 2008 IEEE 1

on the basis of a set of training examples. Other methods also exist, but back propagation is used here for its simplicity and robustness.

The process of parameter determination is also called learning, because the quality of the result is obviously related to the number of numerical iterations of the trial-and-error correction cycle over the examples (called patterns in ANN jargon). Generally a second set of examples, the verification set, is not used in the learning phase; it is instead employed to check the performance of the ANN function.

III. MODEL OF STOCK MARKET

A. Model Structure

Swarm is a natural candidate for this kind of structure, but we need some degree of standardization, mainly when we go from simple models to complex results. For this reason, we introduce here a general scheme, ERA (Environment-Rules-Agents) [Ref. 7, 8], that can be employed in building agent-based simulations, shown in Fig. 2.

[Figure 2: ERA scheme diagram showing the Environment, the agents (ANN Agent, Random Agent, Imitating Agent), the Rule-Masters (ANN Rule-Master, Random Rule-Master), and the ANN Rule-Maker]

Fig. 2. Model of the stock market.

The main value of the ERA scheme is that it keeps the environment, which models the context by means of rules and general data, and the agents, with their private data, at different conceptual levels. Another advantage of the ERA structure is its modularity.

In this model, agent behavior is determined by external objects, named Rule Masters, which can be interpreted as abstract representations of the cognition of the agent. Rule Masters obtain the information necessary to apply rules from the agents themselves or from special agents charged with the task of collecting and distributing data, and in this way they govern agent behavior (for example, by applying the rules generated by a Rule Maker to the DataVerificationMatrix to produce a forecast).

The Rule Master objects are linked to Rule Maker objects, whose role is to modify the rules governing agent behavior (for example, a Rule Maker modifies the weights of the neural network by training on the DataTrainingMatrix, and in doing so learns, modifies and generates rules). Similarly, the information necessary to the Rule Makers comes from the Rule Masters. A Rule Maker may be "used" by several Rule Masters, for instance to apply the results of a learning process.

B. CT Scheme

Our model includes one type of artificial neural network agent, the BP-CT ANN agent, which not only forecasts the price by using the BP algorithm but also generates continuously varying targets by using the CT (Cross Target) scheme [Ref. 7, 8].

The name cross target comes from the technique used to work out the targets necessary to train the ANNs representing the artificial adaptive agents (AAAs) that populate our experiment. The characteristic feature of CT is that the output of the agent is split into actions and effects. The targets necessary to train the network from the point of view of the actions, and those connected with the effects, are built in a crossed way. The targets in the learning process are: (I) on one side, the actual effects, measured through accounting rules, of the actions made by the simulated subject; (II) on the other side, the actions needed to match the guessed effects.

Fig. 3 describes an AAA (Artificial Adaptive Agent) learning and behaving in a CT scheme. The AAA has to produce guesses about its own actions and the related effects on the basis of an information set (the input elements are $I_1, \dots, I_k$), such as the data that exist in the initial file.

[Figure 3: a network with an input layer ($I_1, I_2, \dots, I_k$), a hidden layer, and an output layer split into effect guesses ($E_1, E_2, \dots, E_m$) and action guesses ($A_1, A_2, \dots, A_n$); the actual effects of the actions and the actions requested to match the guessed effects serve as targets]

Fig. 3. CT scheme.

The acting and learning algorithm of CT is as follows. First of all, we introduce a generic effect $E_1$ arising from two actions, named $A_1$ and $A_2$. The target for the effect is:

$$E_1' = f(A_1, A_2) \qquad (2)$$

where $f(\cdot)$ is a definition linking actions to effects on an accounting basis.

Our aim here is to obtain an output $E_1$ (the guess made by the network) closer to $E_1'$, which is the correct measure of the effect of actions $A_1$ and $A_2$. The error related to $E_1$ is:

$$e = E_1' - E_1 \quad \text{or} \quad e = \tfrac{1}{2}\,(E_1' - E_1)^2$$
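The effect-side target of Eq. (2) and the two error forms can be illustrated with a minimal numeric sketch. The accounting rule used here (a plain sum of the two actions) and all function and variable names are assumptions made only for illustration; the paper does not specify f.

```python
def effect_target(a1: float, a2: float) -> float:
    """Hypothetical accounting rule f(A1, A2): assumed here to be a plain sum."""
    return a1 + a2


def effect_errors(e1_guess: float, a1: float, a2: float) -> tuple[float, float, float]:
    """Return the target E1' and both error forms for a guessed effect E1."""
    target = effect_target(a1, a2)   # E1' = f(A1, A2), Eq. (2)
    linear = target - e1_guess       # e = E1' - E1
    squared = 0.5 * linear ** 2      # e = 1/2 (E1' - E1)^2
    return target, linear, squared


# The network guessed E1 = 0.5 while its own actions imply E1' = 0.75.
print(effect_errors(e1_guess=0.5, a1=0.25, a2=0.5))  # (0.75, 0.25, 0.03125)
```

The signed form keeps the direction of the correction, which matters when the error is later shared between the actions; the squared form is the usual quantity minimized by back propagation.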


To minimize the error, we back propagate it through algorithm, but also generate target that vary continuously;

network weights. Random agent, which choose price and act (buy or sell)

randomly; Imitating agent, which choose action by imitating

Our aim is now to find the actions, as outputs of our (based on the price of market or the last N agents action).

network, more consistent with the outputs produced by the

effect side. So we have to correct A1 and A2 to made them ANN agent has the structure of experiment with three feed

closer to A1 and A2 , which are actions consistent with the output forward layers as follows:

E1. We cannot figure out the targets for A1 and A2 separately. The number of node in input layer is seven: meanprice5, the

From (2) we have: mean price of the t-5 (t is today) day; meanprice4, meanprice3,

meanprice2, meanprice1, the mean price of the t-4, t-3, t-2, t-1

A1 = g 1 ( E1 , A2 )

day successively. WealthQuantity0: the wealth of the previous

(3) day. ShareQuantity0: the share of the stock of the previous day.

Neural network design (once the input variables have been

A2 = g 2 ( E1 , A1 ) selected) follows a number of stages:

(4)

Select an initial configuration (typically, one hidden

Choosing a random value 1 from a random uniform

layer with the number of hidden units set to half the

sum of the number of input and output units).

distribution whose support is the closed interval [0, 1] and

setting 2 =1- 1 , from (3) and (4) we obtain:

Iteratively conduct a number of experiments with each

configuration, retaining the best network (in terms of

selection error) found. A number of experiments are

A1 = g 1 ( E 1 e 1 , A2 ) required with each configuration to avoid being fooled

(5) if training locates a local minimum, and it is also best

to resample.

A 2 = g 2 ( E 1 e 2 , A1 ) On each experiment, if under-learning occurs (the

(6)

network doesn't achieve an acceptable performance

level) try adding more neurons to the hidden layer(s).

Functions g1 and g2, being obtained from definitions that If this doesn't help, try adding an extra hidden layer.

link actions to effects mainly on an accounting basis, usually

have linear specifications. The errors to be minimized are: If over-learning occurs (selection error starts to rise)

try removing hidden units (and possibly layers).

a1 = A1 A1 Once have experimentally determined an effective

configuration for networks, resample and generate new

networks with that configuration.

a 2 = A 2 A2 According with above approach, the number of node in

hidden layer is five;

When the actions determine multiple effects, they are

The number of node in output layer is three:

included in multiple definitions of effects. So, those actions

WealthQuantity, the predict wealth of today; ShareQuantity,

will be affected by several corrections, only the largest absolute

the predict share of stock of today; BuySellp, the predict price

value is chosen.

of stock of today.

Building agent using CT method, beyond generating

internal consistency (IC), agents can develop other B. The Result of the Simulation experiment

characteristics, for example the capability of adopting actions Simple hypothesis: two hundred agents were distributed all

(following external proposals, EP) or evaluations of effects over the market, 30 percent of the agents are BP ANN agents,

(following external objectives, EO) suggested from the 10 percent of the agents are BP-CT ANN agents, 30 percent of

environment (for example, following rules) or from other the agents are Random agents, 30 percent of the agents are

agents (for examples, imitating them). Imitating agents (MarketImitating agent and LocallyImitating

This model have two outputs of effects: WealthQuantit\, agent are 20 percent and 10 percent respectively).

shareQuantity; one output of action: buySellp. Others parameters are: agentProbToAct =0.15; alpha =0.8;

BuySellproImit = 0.4; maxOrderNumber = 2; eps = 0.6;

IV. SIMULATION E XPERIMENT

probOfLocallmition =0.3; localHistoryLength =15;

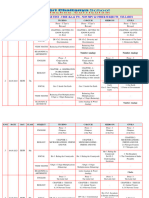

A. The design of the Experiment structure

weightRange =0.3; patternNumberInVerificationSet = -1;

There are four types of agents: BP ANN agent, which

forecast price by using Back Propagation (BP) algorithm; BP- patternNumberInTrainingSet = -1;

CT ANN agent, which not only forecast price by using BP epochNumberInEachTrainingCycle = 1.
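The population mix and the layer sizes stated above can be checked with a short sketch. The dictionary keys, constant names and helper functions are labels chosen for this illustration and are not taken from the authors' simulation code; only the numbers (200 agents, the percentage shares, 7 inputs, 3 outputs) come from the text.

```python
# Agent shares in percent, as given in the simulation hypothesis.
TOTAL_AGENTS = 200
AGENT_SHARES_PCT = {
    "BP ANN": 30,
    "BP-CT ANN": 10,
    "Random": 30,
    "MarketImitating": 20,
    "LocallyImitating": 10,
}


def agent_counts(total: int, shares_pct: dict[str, int]) -> dict[str, int]:
    """Convert percentage shares into whole-agent counts."""
    if sum(shares_pct.values()) != 100:
        raise ValueError("agent shares must sum to 100 percent")
    return {name: total * pct // 100 for name, pct in shares_pct.items()}


def initial_hidden_units(n_inputs: int, n_outputs: int) -> int:
    """Starting heuristic from the design stages: half the sum of inputs and outputs."""
    return (n_inputs + n_outputs) // 2


counts = agent_counts(TOTAL_AGENTS, AGENT_SHARES_PCT)
print(counts)
print(initial_hidden_units(n_inputs=7, n_outputs=3))  # 5, matching the hidden layer used
```

With seven inputs and three outputs, the starting heuristic already gives the five hidden nodes used in the experiment, so the later tuning stages would only change the configuration if under-learning or over-learning were observed.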


Figs. 4 and 5 show the changing trends of the price and of the number of orders over time, respectively.

Fig. 4. The time sequence of the price.

This experiment generates increasing and decreasing price sequences with relevant volatility. Moreover, the experiment reproduces some exceptional phenomena as a consequence of the rules of the market, such as bubbles, crashes and volatility clustering.

Fig. 5. The time sequence of the orders.

With the interaction of heterogeneous agents and structured environments or markets, we use the cross target technique to train artificial neural networks to develop minimal behavioral rules. Moreover, the generated price sequences are linked to the agents' actions in nonlinear ways. The experiment is therefore suitable for studying the dynamic characteristics of the stock market.

V. CONCLUSION

Artificial Neural Networks offer qualitative methods for business and economic systems that traditional quantitative tools in statistics and econometrics cannot quantify, owing to the complexity of translating those systems into precise mathematical functions. Under normal conditions, in most cases, a good neural network will outperform most other current stock market predictors and be very worthwhile. In this paper, we have presented some preliminary results of simulations with an artificial stock market. The first main conclusion is that information plays a crucial role in the way the market behaves. The second main conclusion is that only when heterogeneity is introduced amongst the agents does the model generate market dynamics that exhibit characteristics similar to those of real-world stock markets.

ACKNOWLEDGMENT

This paper is supported by the College of Computer Science and Technology of Guizhou University and the College of Economics and Management of Guizhou University for Nationalities.

REFERENCES

[1] E. Schoeneburg, "Stock Price Prediction Using Neural Networks: A Project Report," Neurocomputing, vol. 2, 1990, pp. 17-27.

[2] J. H. Holland, Hidden Order: How Adaptation Builds Complexity. Addison-Wesley Publishing Company, 1995.

[3] J. H. Holland, Emergence: From Chaos to Order. Addison-Wesley Publishing Company, 1998.

[4] W. B. Arthur, J. Holland, B. LeBaron, et al., "Asset Pricing under Endogenous Expectations in an Artificial Stock Market," in W. B. Arthur, S. Durlauf, and D. Lane (eds.), The Economy as an Evolving Complex System II. Boston: Addison-Wesley, 1997, pp. 15-44.

[5] J. A. Anderson, An Introduction to Neural Networks. Cambridge, MA: The MIT Press, 1995.

[6] M. Zekic, "Neural Network Applications in Stock Market Predictions: A Methodology Analysis," University of Josip Juraj Strossmayer in Osijek, 1998.

[7] P. Terna, "Self-Development of Consistency in Agents' Behavior," in F. Luna and B. Stefansson (eds.), Economic Simulations in Swarm: Agent-Based Modeling and Object Oriented Programming. Dordrecht and London: Kluwer Academic, 2000.

[8] N. Gilbert and P. Terna, "How to build and use agent-based models in social science," Mind & Society, no. 1, 2000.
