
Summary of The Article: A Few Useful Things To Know About Machine Learning

Uploaded by Ege Erdem

1. Machine learning algorithms can provide cheap solutions to ambitious tasks by learning from examples rather than relying on manually constructed algorithms.

2. Machine learning consists of three main components: representation (the formal language in which classifiers are expressed), evaluation of classifiers, and optimization to find the best classifier.

3. The goal of machine learning is to generalize beyond the training data and make accurate predictions on new examples, rather than merely succeeding on the training data itself. Overfitting the training data can result in poor generalization to new examples.


Copyright: © All Rights Reserved


A Few Useful Things to Know about Machine Learning

Machine learning algorithms provide feasible and cheap solutions to ambitious tasks by generalizing from examples. The purpose of the article is to highlight important points to pay attention to when developing machine learning applications.

1. Introduction

In machine learning, instead of being constructed manually, programs learn from data automatically. One widely used type of machine learning is classification. A classifier takes a vector of feature values as input and outputs a single discrete value, the class. A spam filter is an example: it classifies e-mail messages as "spam" or "not spam". A learner takes as input a training set of examples, each pairing an observed input with its output, and outputs a classifier.
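To make the learner/classifier distinction concrete, here is a minimal sketch in which a learner is a function that takes labelled examples and returns a classifier. The nearest-neighbor rule and the three spam features (counts of "free", counts of "meeting", number of links) are illustrative assumptions, not something the article prescribes.

```python
import math
from typing import Callable, List, Sequence, Tuple

FeatureVector = Sequence[float]
Example = Tuple[FeatureVector, str]
Classifier = Callable[[FeatureVector], str]


def nearest_neighbor_learner(training_set: List[Example]) -> Classifier:
    """A learner: takes labelled examples (x_i, y_i) and returns a classifier."""
    examples = list(training_set)

    def classify(x: FeatureVector) -> str:
        # The classifier maps a feature vector to a single discrete value (the class):
        # here, the label of the closest training example in Euclidean distance.
        _, label = min(examples, key=lambda ex: math.dist(ex[0], x))
        return label

    return classify


# Hypothetical spam-filter features: [count of "free", count of "meeting", number of links]
training_set = [
    ([3, 0, 5], "spam"),
    ([2, 0, 8], "spam"),
    ([0, 2, 0], "not spam"),
    ([0, 1, 1], "not spam"),
]
spam_filter = nearest_neighbor_learner(training_set)
print(spam_filter([4, 0, 6]))  # → spam
print(spam_filter([0, 3, 0]))  # → not spam
```

The learner runs once on the training set; the returned `spam_filter` is what would then be applied to new messages.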

2. Learning = Representation + Evaluation + Optimization

Numerous learning algorithms exist, but all of them combine three components: representation (the classifier must be expressed in a formal language the computer can handle, which fixes the set of classifiers the learner can possibly learn), evaluation (a scoring function that distinguishes good classifiers from bad ones) and optimization (a method for searching among the candidate classifiers for the highest-scoring one). There is no simple recipe for choosing each component, and many learners combine discrete and continuous components.
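The three components can be separated explicitly in code. The sketch below assumes a deliberately tiny setup of our own choosing: the representation is the set of one-feature threshold rules ("decision stumps"), the evaluation is training accuracy, and the optimization is exhaustive search over thresholds seen in the data. Real learners use far richer choices for each component.

```python
from itertools import product


def make_stump(feature, threshold):
    # Representation: the hypothesis space is all rules "class 1 if x[feature] > threshold, else 0"
    return lambda x: 1 if x[feature] > threshold else 0


def accuracy(classifier, data):
    # Evaluation: score a candidate classifier by the fraction of examples it gets right
    return sum(classifier(x) == y for x, y in data) / len(data)


def learn_stump(data):
    # Optimization: exhaustive search over every (feature, threshold) pair seen in the data;
    # max() keeps the first candidate with the highest score
    n_features = len(data[0][0])
    candidates = [(f, x[f]) for f, (x, _) in product(range(n_features), data)]
    return max(candidates, key=lambda c: accuracy(make_stump(*c), data))


# Toy data (hypothetical): two features, binary class
data = [((1.0, 5.0), 0), ((2.0, 1.0), 0), ((3.0, 4.0), 1), ((4.0, 2.0), 1)]
feature, threshold = learn_stump(data)
print(feature, threshold)  # → 0 2.0
```

Swapping any one piece (for example, a gradient-based optimizer with a continuous representation) gives a different learner while the overall structure stays the same.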

3. It's Generalization That Counts

The fundamental goal of machine learning is to generalize beyond the examples in the training set, because the exact same examples are unlikely to be seen again. Testing on the training data gives an illusion of success; this is the most common mistake among beginners. Training-set error must therefore be used with care: unlike in most other optimization problems, we do not have access to the function we actually want to optimize (the error on future examples), so training error serves only as a surrogate for it.
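The "illusion of success" can be shown with a learner that simply memorizes its training set. The sketch below uses k-fold cross-validation, one standard way of holding data out; the memorizer, the fold count and the toy data are illustrative assumptions. Inputs are unique, so the memorizer never sees a held-out input during training and falls back to class 0.

```python
import random


def accuracy(classifier, data):
    return sum(classifier(x) == y for x, y in data) / len(data)


def cross_val_accuracy(data, learner, k=5, seed=0):
    # Shuffle once, split into k folds; each fold is held out once
    # while the learner is trained on the remaining k - 1 folds
    data = list(data)
    random.Random(seed).shuffle(data)
    folds = [data[i::k] for i in range(k)]
    scores = []
    for i, held_out in enumerate(folds):
        train = [ex for j, fold in enumerate(folds) if j != i for ex in fold]
        scores.append(accuracy(learner(train), held_out))
    return sum(scores) / k


def learn_memorizer(train):
    # Hypothetical "learner" that only stores the training set; unseen inputs get class 0
    table = dict(train)
    return lambda x: table.get(x, 0)


# Toy data with unique inputs and alternating labels
data = [((i,), i % 2) for i in range(100)]
train_acc = accuracy(learn_memorizer(data), data)  # testing on the training data
cv_acc = cross_val_accuracy(data, learn_memorizer)
print(train_acc, round(cv_acc, 3))  # → 1.0 0.5
```

Training accuracy is perfect while the cross-validated estimate is no better than guessing, which is the gap the article warns about.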

4. Data Alone Is Not Enough

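To generalize beyond the training data, a learner must combine data with knowledge or assumptions that go beyond it. Learning is a way of getting more out of less, and very general assumptions (such as smoothness, similar examples having similar classes, or limited complexity) are often enough to make good progress.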
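A quick calculation shows why data alone cannot be enough. Assuming a Boolean concept over 100 binary features and a million labelled examples (the kind of scenario the article uses), the training set covers a vanishingly small part of the input space; without assumptions, nothing constrains the labels of the rest.

```python
n_features = 100     # Boolean input variables
n_examples = 10**6   # labelled training examples

n_possible_inputs = 2 ** n_features       # every distinct input vector
n_unseen = n_possible_inputs - n_examples  # inputs whose class the data says nothing about
fraction_seen = n_examples / n_possible_inputs

print(f"unseen inputs: {n_unseen:.3e}")          # about 1.268e+30
print(f"fraction seen: {fraction_seen:.1e}")     # about 7.9e-25
```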

5. Overfitting Has Many Faces

If a classifier is accurate on the training data but performs poorly on new examples, it has probably overfit. Strong false assumptions can be better than weak true ones, because a learner with weak assumptions needs more data to avoid overfitting. It is easy to avoid overfitting only to fall into the opposite error of underfitting, and no single technique (cross-validation, regularization, statistical significance tests) works in every case.
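The sketch below contrasts a very flexible learner with a rigid one on a hypothetical one-dimensional problem. The data are our own construction: 40 evenly spaced training points with every fifth label deliberately flipped to simulate noise, and a clean held-out set placed between the training points. The 1-nearest-neighbor learner fits the noise; the single-threshold stump cannot.

```python
def true_label(x):
    # Hypothetical ground truth for the demo: class 1 to the right of 0.5
    return 1 if x > 0.5 else 0


# Training set: 40 evenly spaced points; every 5th label is flipped (deterministic "noise")
train = []
for i in range(40):
    x = i / 40
    y = true_label(x)
    if i % 5 == 0:
        y = 1 - y
    train.append((x, y))

# Held-out set: clean labels at points offset from the training grid
test = [((i + 0.25) / 40, true_label((i + 0.25) / 40)) for i in range(40)]


def learn_1nn(data):
    # Very flexible learner: reproduces every training label, noise included
    def classify(x):
        return min(data, key=lambda ex: abs(ex[0] - x))[1]
    return classify


def learn_stump(data):
    # Rigid learner: one threshold rule "x > t", with t chosen among training points
    def errors(t):
        return sum((1 if x > t else 0) != y for x, y in data)
    t = min((x for x, _ in data), key=errors)
    return lambda x: 1 if x > t else 0


def accuracy(classifier, data):
    return sum(classifier(x) == y for x, y in data) / len(data)


for name, learner in [("1-NN", learn_1nn), ("stump", learn_stump)]:
    clf = learner(train)
    print(f"{name}: train={accuracy(clf, train):.3f} held-out={accuracy(clf, test):.3f}")
```

On this data 1-NN scores 1.000 on the training set but 0.825 on the held-out set, while the stump scores 0.825 on training and 0.975 held out. Note that the stump's assumption happens to be correct here; the example illustrates overfitting to noise rather than the "strong false assumptions" point.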

6. Intuition Fails In High Dimensions

Many algorithms that work well in low dimensions become intractable or useless when the input is high-dimensional. As the number of dimensions grows, generalizing correctly becomes exponentially harder, because a fixed-size training set covers an ever smaller fraction of the input space. Since our intuitions come from a three-dimensional world, they often fail in high dimensions. As a result, the benefit of adding more features can be outweighed by this curse of dimensionality. Fortunately, in most applications examples are not spread uniformly throughout the space but are concentrated on or near a lower-dimensional manifold.
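One concrete way our intuition fails: in high dimensions, distances between random points become nearly equal, so "nearest" neighbors are barely nearer than the farthest ones. This sketch assumes points drawn uniformly from the unit hypercube (a non-manifold worst case) and measures the ratio of nearest to farthest distance from a random query point.

```python
import math
import random


def nearest_to_farthest_ratio(dim, n_points=200, seed=0):
    """Ratio of nearest to farthest distance from a random query to uniform random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    distances = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return min(distances) / max(distances)


for dim in (2, 10, 100, 1000):
    # The ratio climbs toward 1 as dim grows: all points look roughly equally far away
    print(dim, round(nearest_to_farthest_ratio(dim), 3))
```

The exact values depend on the seed, but the upward trend with `dim` is the point: a similarity-based learner loses most of its discriminating power when the data really fill the space uniformly.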

7. Theoretical Guarantees Are Not What They Seem

Theoretical guarantees should not be used as a criterion for practical decisions, but they can be a source of understanding and a driving force for algorithm design.
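A typical guarantee of this kind is the finite-hypothesis-space bound: if a learner outputs a hypothesis consistent with n ≥ (1/ε)(ln|H| + ln(1/δ)) training examples, then with probability at least 1 − δ that hypothesis has error at most ε. The sketch below evaluates it for H = all Boolean functions of d features, where |H| = 2^(2^d); the choices ε = 0.1 and δ = 0.05 are arbitrary illustrations. The bound is valid but grows exponentially in d, which is why it says little about practical decisions.

```python
import math


def pac_sample_bound(ln_hypothesis_count, epsilon, delta):
    """Examples sufficient for a consistent learner over a finite hypothesis space H:
    with probability >= 1 - delta, any consistent hypothesis has error <= epsilon."""
    return math.ceil((ln_hypothesis_count + math.log(1 / delta)) / epsilon)


for d in (5, 10, 20):
    ln_h = (2 ** d) * math.log(2)  # ln |H| for all Boolean functions of d features
    print(d, pac_sample_bound(ln_h, epsilon=0.1, delta=0.05))
```

For d = 5 the bound is 252 examples; by d = 20 it is in the millions, even though practical learners often do well with far fewer examples because they assume a much smaller H.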

8. Feature Engineering Is The Key

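If you have many independent features that each correlate well with the class, learning is easy. Raw data is often not in a form that is amenable to learning, but you can construct features from it that are. In practice, relatively little of the time in a machine learning project is spent actually running the learner; most of it goes into gathering, integrating, cleaning and pre-processing data, and into trial and error on feature design.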
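A classic illustration is a class that is the XOR of two binary features: each raw feature alone carries no information about the class, but a single constructed feature captures it exactly. The toy data and the constructed feature `a + b - 2*a*b` (which equals `a XOR b` on 0/1 inputs) are illustrative assumptions.

```python
from itertools import product

# Raw data: two binary features; the class is their XOR (hypothetical toy problem)
data = [((a, b), a ^ b) for a, b in product((0, 1), repeat=2)]


def best_single_feature_accuracy(examples, feature):
    # Best accuracy achievable from one feature alone,
    # predicting either "class = feature" or "class = 1 - feature"
    same = sum(x[feature] == y for x, y in examples) / len(examples)
    return max(same, 1 - same)


def add_constructed_feature(examples):
    # Feature engineering: append a hand-made feature that captures the interaction
    return [((a, b, a + b - 2 * a * b), y) for (a, b), y in examples]


raw_scores = [best_single_feature_accuracy(data, f) for f in range(2)]
engineered = add_constructed_feature(data)
new_score = best_single_feature_accuracy(engineered, 2)
print(raw_scores, new_score)  # → [0.5, 0.5] 1.0
```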

9. More Data Beats A Cleverer Algorithm

A simple algorithm with more data can outperform a cleverer algorithm with less. However, time and memory are also limited, so even when plenty of data is available, there may not be time to process it all. All learners essentially work by grouping nearby examples into the same class; the key difference is what "nearby" means. Learners can produce very different frontiers while still making the same predictions in the regions that matter (those where most training examples lie). This is why powerful learners can be accurate even though they are unstable.

10. Learn Many Models, Not Just One

There is no single best learner; the best choice varies from application to application. Combining many different learners often gives better results than any one of them alone. This approach is known as model ensembles and includes techniques such as bagging, boosting and stacking.
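Of the three techniques, bagging is the simplest to sketch: train copies of a base learner on bootstrap resamples (drawn with replacement) of the training set, then combine them by majority vote. The threshold-stump base learner, the 25 models and the toy data below are illustrative assumptions; the sketch shows the mechanics, not a performance claim.

```python
import random
from collections import Counter


def learn_stump_1d(data):
    # Base learner: threshold rule "x > t", with t chosen among training points to minimize errors
    def errors(t):
        return sum((1 if x > t else 0) != y for x, y in data)
    t = min((x for x, _ in data), key=errors)
    return lambda x: 1 if x > t else 0


def bagging(data, base_learner, n_models=25, seed=0):
    # Train each model on a bootstrap resample of the same size as the original data ...
    rng = random.Random(seed)
    models = [base_learner(rng.choices(data, k=len(data))) for _ in range(n_models)]

    def classify(x):
        # ... and combine their predictions by majority vote (odd n_models avoids ties)
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]

    return classify


# Toy data (hypothetical): class 1 to the right of 0.5
data = [(i / 20, 1 if i / 20 > 0.5 else 0) for i in range(21)]
ensemble = bagging(data, learn_stump_1d)
print(ensemble(0.0), ensemble(1.0))  # → 0 1
```

Boosting instead reweights examples so that later models focus on earlier mistakes, and stacking trains a higher-level model on the base models' outputs.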

11. Simplicity Does Not Imply Accuracy

There is no necessary connection between simplicity and accuracy. Simpler hypotheses should still be preferred, but because simplicity is a virtue in its own right, not because it implies greater accuracy.

12. Representable Does Not Imply Learnable

"Can it be represented?" and "Can it be learned?" are two different questions, and both should be considered: just because a function can be represented does not mean it can be learned.

13. Correlation Does Not Imply Causation

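Correlation may be a sign of a potential causal relationship, but it is not necessarily one. Some learning algorithms can potentially extract causal information from observational data, in which the predictive variables are not under the learner's control (as opposed to experimental data, where they are), but their applicability is limited.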
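A hidden common cause is the standard way correlation arises without causation. In this simulation (all distributions and noise levels are illustrative assumptions), a hidden variable Z drives both X and Y, so X and Y are strongly correlated in observational data even though neither affects the other. When X is instead set independently, as in an experiment, the correlation disappears.

```python
import random


def pearson(xs, ys):
    # Pearson correlation coefficient, computed from scratch
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(42)
n = 10_000
z = [rng.gauss(0, 1) for _ in range(n)]           # hidden common cause
x = [zi + rng.gauss(0, 0.5) for zi in z]          # X depends only on Z
y = [zi + rng.gauss(0, 0.5) for zi in z]          # Y depends only on Z
observational_r = pearson(x, y)                   # about 0.8

# "Intervention": X is set independently of Z; Y is generated exactly as before
x_set = [rng.gauss(0, 1) for _ in range(n)]
y_after = [zi + rng.gauss(0, 0.5) for zi in z]
experimental_r = pearson(x_set, y_after)          # close to 0

print(round(observational_r, 2), round(experimental_r, 2))
```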

Conclusion

The paper briefly covers some of the most commonly misunderstood ideas in machine learning and aims to point out pitfalls to avoid.

S-ar putea să vă placă și

- What Are ArticlesDocument29 paginiWhat Are ArticlesmitutrehanÎncă nu există evaluări

- ESP Use To Teach VocabularyDocument39 paginiESP Use To Teach Vocabularyfatima zahra100% (2)

- Noun ClausesDocument6 paginiNoun ClausesAgus MuhromÎncă nu există evaluări

- Video in ELT-Theoretical and Pedagogical Foundations: Johanna E. KatchenDocument4 paginiVideo in ELT-Theoretical and Pedagogical Foundations: Johanna E. KatchenIrene Lin FlorenteÎncă nu există evaluări

- Transactional Writing: Lesson 1 Different Writing FormsDocument43 paginiTransactional Writing: Lesson 1 Different Writing FormsRania FarranÎncă nu există evaluări

- Use Examples: Modals in English Grammar 1. CanDocument5 paginiUse Examples: Modals in English Grammar 1. Canangelito peraÎncă nu există evaluări

- Countable Uncountable Nouns KETDocument24 paginiCountable Uncountable Nouns KETYen Dinh100% (1)

- Conditional SentencesDocument10 paginiConditional SentencesTiada Hari Tanpa HaryÎncă nu există evaluări

- A) Understanding The Content of The ArticlesDocument3 paginiA) Understanding The Content of The ArticlesRohitÎncă nu există evaluări

- Machine Learning (ML) TechniquesDocument14 paginiMachine Learning (ML) TechniquesMukund TiwariÎncă nu există evaluări

- AI Assignment 2Document5 paginiAI Assignment 2Abraham Onyedikachi OguduÎncă nu există evaluări

- AIUnit IIDocument47 paginiAIUnit IIomkar dhumalÎncă nu există evaluări

- ML Assignment 1 PDFDocument6 paginiML Assignment 1 PDFAnubhav MongaÎncă nu există evaluări

- Machine Learning (Fba)Document3 paginiMachine Learning (Fba)Yaseen Nazir MallaÎncă nu există evaluări

- InTech-Types of Machine Learning AlgorithmsDocument30 paginiInTech-Types of Machine Learning AlgorithmsMohit AroraÎncă nu există evaluări

- MACHINE LEARNING FOR BEGINNERS: A Practical Guide to Understanding and Applying Machine Learning Concepts (2023 Beginner Crash Course)De la EverandMACHINE LEARNING FOR BEGINNERS: A Practical Guide to Understanding and Applying Machine Learning Concepts (2023 Beginner Crash Course)Încă nu există evaluări

- Jumpstart Your ML Journey: A Beginner's Handbook to SuccessDe la EverandJumpstart Your ML Journey: A Beginner's Handbook to SuccessÎncă nu există evaluări

- Data Science Interview Questions (#Day11) PDFDocument11 paginiData Science Interview Questions (#Day11) PDFSahil Goutham100% (1)

- واجب ذكاءDocument10 paginiواجب ذكاءahmed- OunÎncă nu există evaluări

- ML AlgosDocument31 paginiML AlgosvivekÎncă nu există evaluări

- Summer Internship ReportDocument27 paginiSummer Internship ReportSyed ShadÎncă nu există evaluări

- Terms in DSDocument6 paginiTerms in DSLeandro Rocha RímuloÎncă nu există evaluări

- Machine Learning - DataDocument11 paginiMachine Learning - DataAdeeba IramÎncă nu există evaluări

- IDS Unit - 3Document4 paginiIDS Unit - 3VrindapareekÎncă nu există evaluări

- An Enlightenment To Machine Learning - RespDocument22 paginiAn Enlightenment To Machine Learning - RespIgorJalesÎncă nu există evaluări

- Types of MLDocument4 paginiTypes of MLchandana kiranÎncă nu există evaluări

- From Novice to ML Practitioner: Your Introduction to Machine LearningDe la EverandFrom Novice to ML Practitioner: Your Introduction to Machine LearningÎncă nu există evaluări

- Machine Learning with Clustering: A Visual Guide for Beginners with Examples in PythonDe la EverandMachine Learning with Clustering: A Visual Guide for Beginners with Examples in PythonÎncă nu există evaluări

- Importany Questions Unit 3 4Document30 paginiImportany Questions Unit 3 4Mubena HussainÎncă nu există evaluări

- 170 Machine Learning Interview Questios - GreatlearningDocument57 pagini170 Machine Learning Interview Questios - GreatlearningLily LaurenÎncă nu există evaluări

- Supervised Machine LearningDocument7 paginiSupervised Machine LearningranamzeeshanÎncă nu există evaluări

- An Enlightenment To Machine LearningDocument16 paginiAn Enlightenment To Machine LearningAnkur Singh100% (1)

- Data Science Interview Questions (#Day9)Document9 paginiData Science Interview Questions (#Day9)ARPAN MAITYÎncă nu există evaluări

- Essay 1Document2 paginiEssay 1Iheb BelgacemÎncă nu există evaluări

- Predictive Modeling: Types, Benefits, and AlgorithmsDocument4 paginiPredictive Modeling: Types, Benefits, and Algorithmsmorph lingÎncă nu există evaluări

- Fundamentals of Machine Learning IIDocument13 paginiFundamentals of Machine Learning IIssakhare2001Încă nu există evaluări

- Machine Learning Explained - Algorithms Are Your FriendDocument7 paginiMachine Learning Explained - Algorithms Are Your FriendpepeÎncă nu există evaluări

- Machine LearningDocument29 paginiMachine LearningCubic SectionÎncă nu există evaluări

- Data Science Unit 5Document11 paginiData Science Unit 5Hanuman JyothiÎncă nu există evaluări

- How To Think About Machine LearningDocument15 paginiHow To Think About Machine LearningprediatechÎncă nu există evaluări

- COS 511: Foundations of Machine LearningDocument7 paginiCOS 511: Foundations of Machine LearningPooja SinhaÎncă nu există evaluări

- Mastering Machine Learning: A Comprehensive Guide to SuccessDe la EverandMastering Machine Learning: A Comprehensive Guide to SuccessÎncă nu există evaluări

- Introduction To Machine Learning Top-Down Approach - Towards Data ScienceDocument6 paginiIntroduction To Machine Learning Top-Down Approach - Towards Data ScienceKashaf BakaliÎncă nu există evaluări

- L01 - Introduction-to-MLDocument10 paginiL01 - Introduction-to-MLsayali sonavaneÎncă nu există evaluări

- Issues in MLDocument2 paginiIssues in MLnawazk8191Încă nu există evaluări

- Underfitting and OverfittingDocument4 paginiUnderfitting and Overfittinghokijic810Încă nu există evaluări

- Assessing and Improving Prediction and Classification: Theory and Algorithms in C++De la EverandAssessing and Improving Prediction and Classification: Theory and Algorithms in C++Încă nu există evaluări

- Supervised Vs UnsupervisedDocument8 paginiSupervised Vs UnsupervisedKhaula MughalÎncă nu există evaluări

- Seminar Report BhaveshDocument25 paginiSeminar Report BhaveshBhavesh yadavÎncă nu există evaluări

- Unit 1Document20 paginiUnit 1aswinhacker28Încă nu există evaluări

- Machine Learning For Beginners Overview of Algorithm TypesStart Learning Machine Learning From HereDocument13 paginiMachine Learning For Beginners Overview of Algorithm TypesStart Learning Machine Learning From HereJuanito AlimañaÎncă nu există evaluări

- Federal University of Lafia: Department of Computer ScienceDocument6 paginiFederal University of Lafia: Department of Computer ScienceKc MamaÎncă nu există evaluări

- Machine Learning: Interview QuestionsDocument21 paginiMachine Learning: Interview QuestionsSL MAÎncă nu există evaluări

- New To Machine Learning? Try To Avoid These MistakesDocument11 paginiNew To Machine Learning? Try To Avoid These MistakesrvinÎncă nu există evaluări

- Turner, Ryan - Python Machine Learning - The Ultimate Beginner's Guide To Learn Python Machine Learning Step by Step Using Scikit-Learn and Tensorflow (2019)Document144 paginiTurner, Ryan - Python Machine Learning - The Ultimate Beginner's Guide To Learn Python Machine Learning Step by Step Using Scikit-Learn and Tensorflow (2019)Daniel GÎncă nu există evaluări

- UntitledDocument2 paginiUntitledANSHIKA NIGAM (RA2011033010106)Încă nu există evaluări

- Chapter IDocument23 paginiChapter Ivits.20731a0433Încă nu există evaluări

- What Are The Basic Concepts in Machine LearningDocument6 paginiWhat Are The Basic Concepts in Machine LearningminalÎncă nu există evaluări

- DISCRETE-TIME RANDOM PROCESS SummaryDocument13 paginiDISCRETE-TIME RANDOM PROCESS SummaryEge ErdemÎncă nu există evaluări

- Bivariate Gaussian-1Document7 paginiBivariate Gaussian-1Ege ErdemÎncă nu există evaluări

- On The Representation and Estimation of Spatial UncertaintyDocument15 paginiOn The Representation and Estimation of Spatial UncertaintyEge ErdemÎncă nu există evaluări

- The Multivariate Gaussian Distribution: 1 Relationship To Univariate GaussiansDocument10 paginiThe Multivariate Gaussian Distribution: 1 Relationship To Univariate Gaussiansandrewjbrereton31Încă nu există evaluări

- Linear Algebra Quiz - Mit - Exam2 - 2Document101 paginiLinear Algebra Quiz - Mit - Exam2 - 2Ege ErdemÎncă nu există evaluări

- Math 215 HW #4 SolutionsDocument11 paginiMath 215 HW #4 SolutionsEge ErdemÎncă nu există evaluări

- FX Global CodeDocument78 paginiFX Global CodeEge ErdemÎncă nu există evaluări

- DTFT Table PDFDocument2 paginiDTFT Table PDFEge ErdemÎncă nu există evaluări

- Chapter4 (2W) - Signal Modeling - Statistical Digital Signal Processing and ModelingDocument137 paginiChapter4 (2W) - Signal Modeling - Statistical Digital Signal Processing and ModelingKhanh NamÎncă nu există evaluări

- Munication Systems PDFDocument389 paginiMunication Systems PDFEduardo Peixoto de AlmeidaÎncă nu există evaluări

- Munication Systems PDFDocument389 paginiMunication Systems PDFEduardo Peixoto de AlmeidaÎncă nu există evaluări

- Theory of Machines HomeworkDocument3 paginiTheory of Machines HomeworkEge ErdemÎncă nu există evaluări

- Music Genre Classification ReportDocument4 paginiMusic Genre Classification ReportEge ErdemÎncă nu există evaluări

- HALI Essence English 1807Document1 paginăHALI Essence English 1807Ege ErdemÎncă nu există evaluări

- The Crave - Jelly Roll MortonDocument3 paginiThe Crave - Jelly Roll MortonVisingTeoh75% (4)

- Wave EquationDocument39 paginiWave EquationEge ErdemÎncă nu există evaluări

- Willow Tree March - em OOOHH X 1 (Hep Beraber) Ooohh X 2Document2 paginiWillow Tree March - em OOOHH X 1 (Hep Beraber) Ooohh X 2Ege ErdemÎncă nu există evaluări

- Chicago Roxie SheetMusicDownloadDocument3 paginiChicago Roxie SheetMusicDownloadDerin Kvaner50% (2)

- Isnt She LovelyDocument1 paginăIsnt She LovelyEge ErdemÎncă nu există evaluări

- Ege Erdem - LT - Concert Hall AcousticsDocument15 paginiEge Erdem - LT - Concert Hall AcousticsEge ErdemÎncă nu există evaluări

- PTK40A Quick Start GuideDocument16 paginiPTK40A Quick Start GuideAFK MasterÎncă nu există evaluări

- Gartner Impact of AI Aug24EEichhorn AudienceDocument36 paginiGartner Impact of AI Aug24EEichhorn AudienceAndreea PironÎncă nu există evaluări

- Mobile Communication Lecture Set 1Document13 paginiMobile Communication Lecture Set 1ngobi25eddyÎncă nu există evaluări

- VM Series DeploymentDocument494 paginiVM Series DeploymentLuís Carlos JrÎncă nu există evaluări

- ELM327 Bluetooth Pin - Key CodeDocument9 paginiELM327 Bluetooth Pin - Key CodeNicole WintÎncă nu există evaluări

- M. Tech. EXAMINATION, May 2019: No. of Printed Pages: 03 Roll No. ......................Document2 paginiM. Tech. EXAMINATION, May 2019: No. of Printed Pages: 03 Roll No. ......................Harshini AÎncă nu există evaluări

- Microsoft AZ-900 July 2023-V1.6Document323 paginiMicrosoft AZ-900 July 2023-V1.6CCIEHOMER100% (1)

- A Comprehensive Survey On Radio Frequency (RF)Document31 paginiA Comprehensive Survey On Radio Frequency (RF)Ambrose SmithÎncă nu există evaluări

- Lamport's Algorithm For Mutual Exclusion in Distributed System - GeeksforGeeksDocument1 paginăLamport's Algorithm For Mutual Exclusion in Distributed System - GeeksforGeeksMako KazamaÎncă nu există evaluări

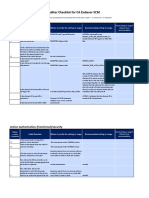

- Auditors ChecklistDocument9 paginiAuditors ChecklistNorah Al-ShamriÎncă nu există evaluări

- 5th Assignment To 12th Assignment Cloud Computing SolutionsDocument25 pagini5th Assignment To 12th Assignment Cloud Computing SolutionsKunchapu Vidya ViharikaÎncă nu există evaluări

- 13.3.2 Lab - Use Ping and Traceroute To Test Network Connectivity - ILMDocument20 pagini13.3.2 Lab - Use Ping and Traceroute To Test Network Connectivity - ILMArun KumarÎncă nu există evaluări

- PM1115UW Setup GuideDocument13 paginiPM1115UW Setup GuideFrancisco Baeza JiménezÎncă nu există evaluări

- Garment ManagementDocument43 paginiGarment ManagementSGamer YTÎncă nu există evaluări

- FLOWCHARTINGDocument58 paginiFLOWCHARTINGChristian Nhick C. BaquiranÎncă nu există evaluări

- Upload Data From Excel File in ABAP Using TEXT - CONVERT - XLS - TO - SAPDocument8 paginiUpload Data From Excel File in ABAP Using TEXT - CONVERT - XLS - TO - SAPKabil RockyÎncă nu există evaluări

- Bit Plane Slicing and Bit Plane CompressionDocument5 paginiBit Plane Slicing and Bit Plane CompressionSharmila ArunÎncă nu există evaluări

- Introduction Learn Structured Ladder Diagram ProgrammingDocument8 paginiIntroduction Learn Structured Ladder Diagram Programmingazizi202Încă nu există evaluări

- Vlsi Lab New Manuel - 1Document87 paginiVlsi Lab New Manuel - 1NAVEENTEJATEJÎncă nu există evaluări

- Computer Abbreviations For All Competitive ExamsDocument9 paginiComputer Abbreviations For All Competitive ExamsVortex 366Încă nu există evaluări

- BACnet - Alarm ManagementDocument4 paginiBACnet - Alarm ManagementMido EllaouiÎncă nu există evaluări

- CESNAV Integrated App InstructionsDocument4 paginiCESNAV Integrated App InstructionskadiriÎncă nu există evaluări

- GeneXus Presentation enDocument21 paginiGeneXus Presentation envaligherÎncă nu există evaluări

- Security Control Categories and Types FactsDocument2 paginiSecurity Control Categories and Types FactsВіталій ІванюкÎncă nu există evaluări

- 6 - Embedded System ProgrammingDocument117 pagini6 - Embedded System ProgrammingWarenya RanminiÎncă nu există evaluări

- Antarctica - The Planet-Positive Software ExpertsDocument24 paginiAntarctica - The Planet-Positive Software ExpertsChat HerrasÎncă nu există evaluări

- Samsung Galaxy A50 - SM-A505F - Administration GuideDocument20 paginiSamsung Galaxy A50 - SM-A505F - Administration GuideandresenriquelunamobileÎncă nu există evaluări

- Chapter 7 Lab Arrays Lab Objectives: Data Mean - The Arithmetic Average of The ScoresDocument4 paginiChapter 7 Lab Arrays Lab Objectives: Data Mean - The Arithmetic Average of The ScoresAkash PatelÎncă nu există evaluări

- CSA 2 Marks MvitDocument13 paginiCSA 2 Marks MvitPrema RajuÎncă nu există evaluări

- Software Project Management: UNIT-2 Prepared by Sathish Kumar/MitDocument28 paginiSoftware Project Management: UNIT-2 Prepared by Sathish Kumar/MitAnbazhagan SelvanathanÎncă nu există evaluări