MODULE IV MCA-303 SYSTEM SOFTWARE ADMN 2011-‘12

4.1 SYNTAX ANALYSIS

Syntax analysis, or parsing, is the most important phase of a compiler. It extracts the syntactic units within the input stream.

4.1.1 ROLE OF PARSER

The parser examines the sequence of tokens returned by the lexical analyzer and extracts the constructs of the language appearing within the sequence. Thus, the role of the parser is:

• To identify the language constructs present in a given input program. If the parser determines the input to be valid, it outputs a representation of the input in the form of a parse tree.

• If the input is grammatically incorrect, to report the syntax error detected in the input.

Figure 4.1 position of a parser in compiler model

4.1.2 ERROR HANDLING

Error handling is one of the most important features of a compiler. The common errors occurring in programs can be classified into four categories:

1. Lexical Errors: These are mainly spelling mistakes and accidental insertions of foreign characters (for example, $, if the language does not allow it). They are mostly caught by the lexical analyzer.

Dept.of Computer Science And Applications, SJCET, Palai P a g e | 84

2. Syntactic Errors: These are grammatical mistakes, such as unbalanced parentheses in arithmetic expressions. They are among the most common errors in programs, so the parser should be able to catch them efficiently.

3. Semantic Errors: These involve errors due to undefined variables, incompatible operands to an operator, etc. They can be caught by introducing some extra checks during parsing.

4. Logical Errors: These are errors such as infinite loops. There is no way to catch logical errors automatically.

The presence of an error in the input stream leads the parser to an erroneous state, from which it cannot proceed until a certain portion of its work is undone. The strategies involved in this process are broadly known as error recovery strategies:

• Panic mode

• Phrase level

• Error production

• Global correction

Panic mode:

On detecting an error, the parser discards enough tokens to reach a decent state from which parsing can continue.

Phrase level Recovery:

In this strategy, the parser makes some local corrections on the remaining input on

detection of an error, so that the resulting input stream gives a valid construct of the language.

Error Production:

This involves modifying the grammar of the language to include error situations.

Global Corrections:

This is a theoretical approach. The problem can be stated as follows: given an incorrect input stream x for a grammar G, find another stream y acceptable by G such that the number of tokens to be modified to convert x into y is minimum.
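As an illustration of panic mode, the sketch below discards tokens until it reaches a synchronizing token. The use of a set of synchronizing tokens (here the statement terminator ";") is an assumption about how a "decent state" is recognized; the function name and the token stream are hypothetical.

```python
def panic_mode_skip(tokens, pos, sync_tokens):
    """Discard tokens from index pos until a synchronizing token is found.

    Returns the index of the first synchronizing token at or after pos,
    or len(tokens) if the input is exhausted first.
    """
    while pos < len(tokens) and tokens[pos] not in sync_tokens:
        pos += 1
    return pos


# Hypothetical token stream: the error is detected at index 4
# (an operator directly following another operator).
tokens = ["x", "=", "a", "+", "*", "b", ";", "y", "=", "c", ";"]
resume_at = panic_mode_skip(tokens, 4, {";"})
skipped = tokens[4:resume_at]
print("skipped:", skipped, "resume at index", resume_at)
```

Parsing would then continue from the token at `resume_at`, so the second statement can still be checked.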

4.2 THE SYNTACTIC SPECIFICATION OF

PROGRAMMING LANGUAGES

For the syntactic specification of a programming language we use a context-free grammar, commonly written in BNF (Backus-Naur Form). This notation has significant advantages as a method of specifying the syntax of a language:

• A grammar gives a precise, yet easy to understand, syntactic specification for the programs of a particular programming language.

• An efficient parser can be constructed automatically from a properly designed grammar.

• A grammar imparts a structure to a program that is useful for its translation into object code and for the detection of errors.

4.2.1 CONTEXT FREE GRAMMARS

Mathematically, a context-free grammar is defined as a 4-tuple G = (Vn, Vt, P, S), where

Vn: A finite set of non-terminals, generally represented by capital letters: A, B, C, ...

Vt: A finite set of terminals, generally represented by small letters: a, b, c, ...

S: The starting non-terminal, called the start symbol of the grammar. S belongs to Vn.

P: The set of rules, or productions, of the grammar.

All productions in P have the form A → α, where A ∈ Vn and α ∈ (Vn ∪ Vt)*.

Programming language constructs are often defined recursively. For example, if S1 and S2 are statements and E is an expression, then

“if E then S1 else S2” is a statement,

and if S1, S2, ..., Sn are statements, then “begin S1; S2; ...; Sn end” is a statement.

To express the second rule using rewriting rules, a new category “statement-list” is added to denote any sequence of statements separated by semicolons. So

statement → begin statement-list end

statement-list → statement | statement ; statement-list

The vertical bar means “or”.

If E1 and E2 are expressions, then “E1 + E2” is an expression. We can rewrite this rule as

expression → expression + expression

and read it as “one way to form an expression is to take two smaller expressions and connect them with a plus sign.”

If we use statement to denote the class of statements and expression to denote the class of expressions, then the if-then-else rule above can be expressed as the rewriting rule, or production,

statement → if expression then statement else statement

So a grammar involves four quantities: terminals, non-terminals, a start symbol, and productions.

• A set of terminal symbols, sometimes referred to as "tokens." The terminals are the elementary symbols of the language defined by the grammar.

• A set of non-terminals, sometimes called "syntactic variables." Each non-terminal represents a set of strings of terminals, in a manner we shall describe.

• A set of productions, where each production consists of a non-terminal called the head or left side of the production, an arrow, and a sequence of terminals and/or non-terminals, called the body or right side of the production. The intuitive intent of a production is to specify one of the written forms of a construct; if the head non-terminal represents a construct, then the body represents a written form of the construct.

• A designation of one of the non-terminals as the start symbol.
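The four quantities map directly onto a small data structure. The sketch below is one possible encoding, not a prescribed one: the class name `Grammar`, its field names, and the terminal "other" (a stand-in for statements that are not begin ... end blocks) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Grammar:
    nonterminals: set   # Vn
    terminals: set      # Vt
    productions: list   # P, as (head, body) pairs; body is a tuple of symbols
    start: str          # S

    def is_context_free(self):
        """Check that S is in Vn and that every production has the form
        A -> alpha with A in Vn and alpha a string over Vn and Vt."""
        symbols = self.nonterminals | self.terminals
        if self.start not in self.nonterminals:
            return False
        return all(
            head in self.nonterminals and all(s in symbols for s in body)
            for head, body in self.productions
        )


# The statement grammar from the text; "other" is an assumed terminal
# standing for any statement that is not a begin ... end block.
g = Grammar(
    nonterminals={"statement", "statement-list"},
    terminals={"begin", "end", ";", "other"},
    productions=[
        ("statement", ("begin", "statement-list", "end")),
        ("statement", ("other",)),
        ("statement-list", ("statement",)),
        ("statement-list", ("statement", ";", "statement-list")),
    ],
    start="statement",
)
print(g.is_context_free())
```

A production whose head is a terminal, such as ("begin", ("statement",)), would make `is_context_free` return False.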

4.2.1.1 NOTATIONAL CONVENTIONS

The following are certain notations that are followed in context free grammars:

1. These symbols are usually non-terminals:

(i) lower case names such as expression, statement, operator, etc.

(ii) italic capital letters near the beginning of the alphabet

(iii) the letter S, which, when it appears, is usually the start symbol.

2. These symbols are usually terminals:

(i) single lower case letters a, b, c, ...

(ii) operator symbols such as +, -, etc.

(iii) punctuation symbols such as parentheses, comma, etc.

(iv) the digits 0, 1, ..., 9

(v) boldface strings such as id or if.

3. Capital letters near the end of the alphabet, chiefly X, Y, Z, represent grammar symbols, that is, either non-terminals or terminals.

4. Small letters near the end of the alphabet, chiefly u, v, ..., z, represent strings of terminals.

5. Lower case Greek letters, such as α, β, γ, represent strings of grammar symbols. Thus a generic production could appear as A → α, indicating that there is a single non-terminal A on the left of the arrow and a string of grammar symbols α to the right of the arrow.

6. If A → α1, A → α2, ..., A → αk are all the productions with A on the left, we can write them as A → α1 | α2 | ... | αk.

7. Unless otherwise stated, the left side of the first production is the start symbol.

4.2.2 DERIVATIONS AND PARSE TREES

A grammar derives strings by beginning with the start symbol and repeatedly

replacing a nonterminal by the body of a production for that nonterminal. The terminal strings

that can be derived from the start symbol form the language defined by the grammar.

The basic idea of derivation is to consider productions as rewrite rules: whenever we have a non-terminal, we can replace it by the right-hand side of any production in which the non-terminal appears on the left-hand side. We can do this anywhere in a sequence of symbols (terminals and non-terminals) and repeat doing so until we have only terminals left. The resulting sequence of terminals is a string in the language defined by the grammar. Formally, we define the derivation relation ⇒ by the three rules:

1. αNβ ⇒ αγβ if there is a production N → γ

2. α ⇒ α

3. α ⇒ γ if there is a β such that α ⇒ β and β ⇒ γ

For example, consider the following grammar for arithmetic expressions:

E → E + E | E * E | ( E ) | - E | id

The non-terminal E is an abbreviation for expression. We can take a single E and repeatedly apply productions in any order to obtain a sequence of replacements. For example:

E ⇒ - E ⇒ - ( E ) ⇒ - ( id )

We call such a sequence of replacements a derivation of -(id) from E. Consider the following grammar that derives the string aabbbcc

The following is the derivation for the string aabbbcc. In each sequence of symbols, the non-terminal that is rewritten in the following step is underlined.

Here we can write T ⇒* aabbbcc, which means that T derives aabbbcc in zero or more steps. Derivations can be classified into two kinds:

• Leftmost derivation

• Rightmost derivation

A leftmost derivation is one in which, at each step, the leftmost non-terminal is replaced by the right-hand side (RHS) of a production whose left-hand side (LHS) is that non-terminal.

A rightmost derivation is one in which, at each step, the rightmost non-terminal is replaced by the RHS of a production whose LHS is that non-terminal. For example, consider the grammar,

E → E + E | E * E | ( E ) | a | b

The leftmost derivation for the string a * b + a is

The rightmost derivation for the string a * b + a is

4.2.2.1 SENTENTIAL FORMS

The intermediate strings obtained while deriving a string from a CFG are called sentential forms (α). A sentential form α consists of terminals as well as non-terminals, i.e., α ∈ (Vn ∪ Vt)*. There are two types of sentential forms, based on the derivation used:

• Left sentential form

• Right sentential form

Left sentential form is an intermediate string in leftmost derivation and right

sentential form is an intermediate string in the rightmost derivation. For example, in the above

derivations is a left sentential form and is a right sentential form in the

rightmost derivation.
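The following sketch lists the left and right sentential forms for the string a * b + a. It assumes the grammar E → E + E | E * E | ( E ) | a | b and one particular parse tree (the one that groups a * b first); the tuple encoding of the tree and the function names are illustrative choices.

```python
def render(form):
    """Show a sentential form: subtrees print as their root label."""
    return " ".join(x[0] if isinstance(x, tuple) else x for x in form)


def derivation(tree, leftmost=True):
    """Return the sentential forms obtained by expanding, at each step,
    the leftmost (or rightmost) non-terminal of the current form."""
    form = [tree]                        # items: (label, children) or terminal
    steps = [render(form)]
    while True:
        idxs = [i for i, x in enumerate(form) if isinstance(x, tuple)]
        if not idxs:                     # only terminals left: a sentence
            return steps
        i = idxs[0] if leftmost else idxs[-1]
        _, children = form[i]
        form[i:i + 1] = children         # replace the non-terminal by its body
        steps.append(render(form))


# Assumed parse tree for a * b + a: E -> E + E, with the left E -> E * E.
tree = ("E", [("E", [("E", ["a"]), "*", ("E", ["b"])]), "+", ("E", ["a"])])

print(" => ".join(derivation(tree, leftmost=True)))
print(" => ".join(derivation(tree, leftmost=False)))
```

Every string in the first list is a left sentential form and every string in the second is a right sentential form; both derivations correspond to the same parse tree.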

4.2.2.2 PARSE TREE

A parse tree is a tree structure used to represent a top-down derivation, with the start symbol of the grammar at the root. The interior nodes of the tree are non-terminals of the grammar and the leaves are terminals.

A parse tree pictorially shows how the start symbol of a grammar derives a string in the language. If non-terminal A has a production A → XYZ, then a parse tree may have an interior node labeled A with three children labeled X, Y, and Z, from left to right:

A parse tree has the following properties:

• The root is labeled by the start symbol.

• Each leaf is labeled by a terminal or by ε.

• Each interior node is labeled by a non-terminal.

• If A is the non-terminal labeling some interior node and X1, X2, ..., Xn are the labels of the children of that node from left to right, then there must be a production A → X1X2...Xn. Here, X1, X2, ..., Xn each stand for a symbol that is either a terminal or a non-terminal. As a special case, if A → ε is a production, then a node labeled A may have a single child labeled ε.

The leaves of the parse tree, which are labeled by non-terminals or terminals, are read from left to right; they constitute a sentential form, called the yield or frontier of the tree.

Example: Consider the CFG

S → XX

X → XXX | bX | Xb | a

Find the parse tree for the string bbaaaab.

Solution:

Given CFG is

S → XX

X → XXX | bX | Xb | a

and the string is w = bbaaaab.

We begin with S and apply the production S → XX.

To the left X, let us apply the production X → bX. To the right X, let us apply X → XXX.

Figure 4.2 Derivation of a parse tree from a grammar

Reading from left to right we have produced bbaaaab. These tree diagrams are

called parse trees.
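The sketch below encodes one parse tree for bbaaaab and checks both the production property and the yield. The first two steps (X → bX on the left, X → XXX on the right) follow the solution above; the lower part of the tree is an assumption, since more than one completion is possible, and the tuple encoding is illustrative.

```python
PRODUCTIONS = {
    "S": [["X", "X"]],
    "X": [["X", "X", "X"], ["b", "X"], ["X", "b"], ["a"]],
}


def label(node):
    return node[0] if isinstance(node, tuple) else node


def is_valid(node):
    """Each interior node A with children X1..Xn needs a production A -> X1..Xn."""
    if isinstance(node, str):            # a leaf labeled by a terminal
        return True
    head, children = node
    return ([label(c) for c in children] in PRODUCTIONS[head]
            and all(is_valid(c) for c in children))


def tree_yield(node):
    """Read the leaves from left to right."""
    if isinstance(node, str):
        return node
    return "".join(tree_yield(c) for c in node[1])


X_a = ("X", ["a"])
left = ("X", ["b", ("X", ["b", X_a])])         # X -> bX twice, then X -> a
right = ("X", [X_a, X_a, ("X", [X_a, "b"])])   # X -> XXX, then a, a, Xb
tree = ("S", [left, right])

print(is_valid(tree), tree_yield(tree))
```

The left subtree yields bba and the right subtree yields aaab, which together give the required string.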

4.2.2.3 AMBIGUITY

A grammar G is said to be ambiguous if there exists more than one parse tree for the same sentence. Equivalently, an ambiguous grammar has more than one leftmost derivation, or more than one rightmost derivation, for some sentence. For example, consider the grammar,

E → E + E | E * E | ( E ) | a | b

and the string a*a + a may be considered. There are two leftmost derivations for this string as

shown below:

Hence, the given grammar is ambiguous. It is possible to construct two parse trees with the

same yield as shown in figure 4.3.

Figure 4.3 Two parse trees for the string a + a * a
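Ambiguity can be checked mechanically for a given sentence by counting its parse trees. The sketch below does this for the assumed grammar E → E + E | E * E | ( E ) | a, a reduced version chosen for illustration; the function name and the span-based counting scheme are not from the original notes.

```python
from functools import lru_cache


def count_parse_trees(tokens):
    """Count parse trees of tokens under E -> E + E | E * E | ( E ) | a."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(i, j):                     # trees deriving tokens[i:j] from E
        n = 0
        if j - i == 1 and tokens[i] == "a":
            n += 1                       # E -> a
        if j - i >= 3 and tokens[i] == "(" and tokens[j - 1] == ")":
            n += count(i + 1, j - 1)     # E -> ( E )
        for k in range(i + 1, j - 1):    # E -> E op E with the operator at k
            if tokens[k] in ("+", "*"):
                n += count(i, k) * count(k + 1, j)
        return n

    return count(0, len(tokens))


print(count_parse_trees("a*a+a"))      # more than one tree: ambiguous
print(count_parse_trees("(a*a)+a"))    # parentheses force a single tree
```

A count greater than one for any sentence shows that the grammar is ambiguous; a count of one for a particular sentence says nothing about other sentences.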

A classical example of an ambiguous grammar is that of the if-then-else construct of many programming languages. Most languages have both if-then and if-then-else versions of the statement. The grammar rules for it are as follows:

statement → if expression then statement | if expression then statement else statement

Now consider the following code segment,

We can have two different parse trees, as shown in Figure 4.4(a) and Figure 4.4(b). The first figure shows the situation in which the else is taken with the outer if statement. In the second case, the else is taken with the inner if; thus, the outer statement is an if-then statement while the inner one is an if-then-else statement. Most programming languages accept the second one as the correct interpretation, that is, an else is associated with the innermost if.

(a)

(b)

Figure 4.4 Two parse trees for if-then-else statement

4.2.2.4 ELIMINATING AMBIGUITY

Ambiguities are eliminated by rewriting the grammar. For example, the if-then-

else grammar may be rewritten as follows:

Here, other_stmt represents all statements apart from if. There can be only one parse tree for each sentence of this grammar, so we can say that this grammar is unambiguous.

We can also disambiguate a grammar by specifying the associativity and precedence of the arithmetic operators. The unary minus operator has the highest precedence, followed by the exponentiation operator, then * and /, and then + and -.

The grammar is therefore rewritten so that it encodes the precedence and associativity of the operators, starting with the lowest precedence.

4.2.3 PROCEDURE TO CONVERT REGULAR EXPRESSION

TO CFG

Regular expressions use four operators: binary plus, concatenation, unary plus, and star. The regular expression r1.r2 is written as the production

S → S1 S2

where S1 and S2 are the variables (non-terminals) from which the strings represented by the regular expressions r1 and r2 may be derived, and S is the start symbol of the new grammar.

The binary operator + is similar to the union operator. Therefore the regular expression r1+r2 is written as the productions

S → S1 | S2

where S1 and S2 are variables from which the strings given by the regular expressions r1 and r2 can be derived, and S is the start symbol of the new grammar.

When a regular expression r* is encountered, a new start symbol S is added along with the productions

S → S1 S | ε

where S1 is the start symbol for the set of strings that can be derived from r and S is the start symbol of the new grammar.

When a regular expression with unary plus (r+) is encountered, a new start symbol S is added along with the productions

S → S2 S | S2

where S2 is the start symbol for the set of strings that can be derived from r and S is the start symbol of the new grammar.
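The four rules can be applied recursively to a whole regular expression. The sketch below assumes the expression is already available as a small syntax tree; the tuple encoding ("cat", "alt", "star", "plus"), the generated names S0, S1, ..., and the function name are all illustrative assumptions.

```python
import itertools


def regex_to_cfg(regex):
    """Translate a regex syntax tree into CFG productions.

    Assumed encoding: a one-character string for a symbol,
    ("cat", r1, r2), ("alt", r1, r2), ("star", r), ("plus", r).
    Returns (start symbol, {non-terminal: list of bodies}); [] is an ε-body.
    """
    counter = itertools.count()
    productions = {}

    def build(r):
        s = f"S{next(counter)}"          # fresh start symbol for this subexpression
        if isinstance(r, str):
            productions[s] = [[r]]                 # S -> a
        elif r[0] == "cat":
            s1, s2 = build(r[1]), build(r[2])
            productions[s] = [[s1, s2]]            # S -> S1 S2
        elif r[0] == "alt":
            s1, s2 = build(r[1]), build(r[2])
            productions[s] = [[s1], [s2]]          # S -> S1 | S2
        elif r[0] == "star":
            s1 = build(r[1])
            productions[s] = [[s1, s], []]         # S -> S1 S | ε
        elif r[0] == "plus":
            s1 = build(r[1])
            productions[s] = [[s1, s], [s1]]       # S -> S1 S | S1
        else:
            raise ValueError(f"unknown regex node: {r!r}")
        return s

    return build(regex), productions


# (a+b)*.c in the notation of this section
start, prods = regex_to_cfg(("cat", ("star", ("alt", "a", "b")), "c"))
for head in sorted(prods):
    print(head, "->", " | ".join(" ".join(b) or "ε" for b in prods[head]))
```

Each subexpression gets its own start symbol, which mirrors the wording "S is the start symbol of the new grammar" in each rule.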

4.3 BASIC PARSING TECHNIQUES

Lexical analysis splits the input into tokens; the purpose of syntax analysis (also known as parsing) is to recombine these tokens, not back into a list of characters, but into something that reflects the structure of the text. This “something” is typically a data structure called the syntax tree of the text. As the name indicates, this is a tree structure. The leaves of

this tree are the tokens found by the lexical analysis, and if the leaves are read from left to

right, the sequence is the same as in the input text. Hence, what is important in the syntax tree

is how these leaves are combined to form the structure of the tree and how the interior nodes

of the tree are labeled.

4.3.1 PARSERS

A parser for a grammar G is a program that takes as input a string w and produces

as output either a parse tree for w, if w is a sentence of G, or an error message indicating that

w is not a sentence of G. In reality, the parse tree exists only as a sequence of actions made by

stepping through the tree construction process. There are two types of parsers: bottom-up and top-down.

Bottom-up parsers build parse trees from the bottom (leaves) to the top (root), while top-down parsers start with the root and work down to the leaves. In both cases, the input to the parser is scanned from left to right, one symbol at a time.

One method used in bottom-up parsing is called shift-reduce parsing because it consists of shifting input symbols onto a stack until the right side of a production appears on top of the stack. The right side may then be replaced by (reduced to) the symbol on the left side of the production, and the process is repeated.

One approach used in top-down parsing is recursive descent parsing. A recursive descent parser consists of a set of mutually recursive routines that may require backtracking to create the parse tree.

Representation of a parse tree

There are two basic types of representation: implicit and explicit. The sequence of productions used in some derivation is an example of an implicit representation. A linked list structure for the parse tree is an explicit representation.

A derivation in which the leftmost non-terminal is replaced at every step is said to be leftmost. If α ⇒ β by a step in which the leftmost non-terminal in α is replaced, we write α ⇒lm β.

Every sentence of a language has both a leftmost and a rightmost derivation. To find a leftmost derivation for a sentence w, we can take any derivation for w and construct from it the corresponding parse tree T. From T we can construct a leftmost derivation by traversing the tree top-down. We begin with the start symbol S, which corresponds to the root of T. We then construct the leftmost derivation

S = α1 ⇒ α2 ⇒ ... ⇒ αn = w

corresponding to T, one step at a time, using the following procedure.

If the root labeled S has children labeled A, B, and C, we create the first step of the leftmost derivation by replacing S by the labels of its children; i.e., S ⇒ ABC. Here S is α1 and ABC is α2.

If the node for A has children labeled X, Y, and Z in T, we create the next step of the derivation by replacing A by the labels of its children; i.e., ABC ⇒ XYZBC. Here XYZBC is α3. If we continue in this manner, we eventually obtain a string consisting only of terminals, the labels of the leaves.

Example:

Consider the grammar

(Draw the parse tree for the leftmost and rightmost derivation for this grammar)

4.3.2 PREDICTIVE PARSING

Predictive parsing is a top-down parsing method that can be implemented without recursion. The parser “predicts” which production to use; it removes backtracking by fixing one production for every non-terminal and input token(s).

Predictive parsers accept LL(k) languages, where:

• The first L stands for a left-to-right scan of the input.

• The second L stands for leftmost derivation.

• k stands for the number of lookahead tokens.

In general, the selection of a production for a non-terminal may involve trial and error; that is, we may have to try a production and backtrack to try another production if the first is found to be unsuitable. A production is unsuitable if, after using it, we cannot complete the tree to match the input string. Predictive parsing is a special form of recursive-descent parsing in which the current input token unambiguously determines the production to be applied at each step. After eliminating left recursion and applying left factoring, we can often obtain a grammar that can be parsed by a recursive-descent parser that needs no backtracking.

In practice, LL(1) grammars are used, i.e., one lookahead token is used.

• A predictive parser can be implemented by maintaining an explicit stack.

• The parse table is a two-dimensional array M[X, a], where X is a non-terminal and a is a terminal of the grammar.

• Steps to be followed:

– Remove left recursion

– Compute FIRST sets

– Compute FOLLOW sets

– Construct the parse table

Compute first sets

To compute FIRST(X) for all grammar symbols X, apply the following rules until no more

terminals or ε can be added to any FIRST set.

1. If X is a terminal, then FIRST(X) is {X}.

2. If X → ε is a production, then add ε to FIRST(X).

3. If X is a non-terminal and X → Y1Y2...Yk is a production, then place a in FIRST(X) if for some i, a is in FIRST(Yi) and ε is in all of FIRST(Y1), FIRST(Y2), ..., FIRST(Yi-1); that is, Y1...Yi-1 ⇒* ε. If ε is in FIRST(Yj) for all j = 1, 2, ..., k, then add ε to FIRST(X). For example, everything in FIRST(Y1) is surely in FIRST(X). If Y1 does not derive ε, then we add nothing more to FIRST(X), but if Y1 ⇒* ε, then we add FIRST(Y2), and so on.
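The three rules can be run as a fixed-point computation. The sketch below applies them to the expression grammar used later in this section; the dictionary encoding of the grammar (with [] standing for an ε-body) and the function names are assumptions made for illustration.

```python
EPS = "ε"

GRAMMAR = {                      # expression grammar; [] encodes an ε-body
    "E":  [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T":  [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F":  [["(", "E", ")"], ["id"]],
}


def first_of_string(symbols, first):
    """FIRST of a string Y1 Y2 ... Yk, given FIRST of each non-terminal."""
    result = set()
    for y in symbols:
        fy = first.get(y, {y})           # rule 1: FIRST(terminal) = {terminal}
        result |= fy - {EPS}
        if EPS not in fy:                # Yi cannot vanish: stop here (rule 3)
            return result
    result.add(EPS)                      # all Yi derive ε, or the body is empty (rule 2)
    return result


def compute_first(grammar):
    first = {a: set() for a in grammar}
    changed = True
    while changed:                       # repeat until no FIRST set grows
        changed = False
        for head, bodies in grammar.items():
            for body in bodies:
                new = first_of_string(body, first)
                if not new <= first[head]:
                    first[head] |= new
                    changed = True
    return first


for nt, s in compute_first(GRAMMAR).items():
    print(f"FIRST({nt}) = {sorted(s)}")
```

The loop corresponds to the phrase "apply the following rules until no more terminals or ε can be added to any FIRST set".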

Compute follow sets

To compute FOLLOW(A) for all non-terminals A, apply the following rules until nothing can

be added to any FOLLOW set:

1. Place $ in FOLLOW(S), where S is the start symbol and $ is the input right endmarker.

2. If there is a production A → αBβ, then everything in FIRST(β) except for ε is placed in FOLLOW(B).

3. If there is a production A → αB, or a production A → αBβ where FIRST(β) contains ε (i.e., β ⇒* ε), then everything in FOLLOW(A) is in FOLLOW(B).
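A sketch of the FOLLOW rules for the expression grammar used later in this section. To keep the block short, the FIRST sets of the non-terminals are written out by hand rather than computed; the encoding and names are illustrative assumptions.

```python
EPS, END = "ε", "$"

GRAMMAR = {                      # expression grammar; [] encodes an ε-body
    "E":  [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T":  [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F":  [["(", "E", ")"], ["id"]],
}
FIRST = {                        # FIRST sets of the non-terminals, given as input
    "E": {"(", "id"}, "E'": {"+", EPS},
    "T": {"(", "id"}, "T'": {"*", EPS},
    "F": {"(", "id"},
}


def first_of_string(symbols):
    result = set()
    for y in symbols:
        fy = FIRST.get(y, {y})           # a terminal is its own FIRST set
        result |= fy - {EPS}
        if EPS not in fy:
            return result
    result.add(EPS)
    return result


def compute_follow(grammar, start):
    follow = {a: set() for a in grammar}
    follow[start].add(END)                           # rule 1
    changed = True
    while changed:                                   # until nothing can be added
        changed = False
        for head, bodies in grammar.items():
            for body in bodies:
                for i, b in enumerate(body):
                    if b not in grammar:             # FOLLOW is defined for non-terminals
                        continue
                    beta_first = first_of_string(body[i + 1:])
                    new = beta_first - {EPS}         # rule 2
                    if EPS in beta_first:            # rule 3: β is empty or β ⇒* ε
                        new |= follow[head]
                    if not new <= follow[b]:
                        follow[b] |= new
                        changed = True
    return follow


for nt, s in compute_follow(GRAMMAR, "E").items():
    print(f"FOLLOW({nt}) = {sorted(s)}")
```

Rule 3 is why FOLLOW(T) contains ")" and $: E' can derive ε, so whatever follows E can also follow T.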

Constructing parse table

The following algorithm can be used to construct a predictive parsing table for a grammar G.

The idea behind the algorithm is the following. Suppose A → α is a production with a in FIRST(α). Then the parser will expand A by α when the current input symbol is a. The only complication occurs when α = ε or α ⇒* ε. In this case, we should again expand A by α if the current input symbol is in FOLLOW(A), or if the $ on the input has been reached and $ is in FOLLOW(A). So, the algorithm goes as follows:

1. For each production A → α of the grammar, do steps 2 and 3.

2. For each terminal a in FIRST(α), add A → α to M[A, a].

3. If ε is in FIRST(α), add A → α to M[A, b] for each terminal b in FOLLOW(A). If ε is in FIRST(α) and $ is in FOLLOW(A), add A → α to M[A, $].

4. Make each undefined entry of M be error.

The construction of the parse table is aided by two functions associated with a grammar G.

These functions, FIRST and FOLLOW, allow us to fill in the entries of a predictive parsing

table for G, whenever possible.

If α is any string of grammar symbols, FIRST(α) is the set of terminals that begin the strings derived from α. If α ⇒* ε, then ε is also in FIRST(α).

If A is a non-terminal, FOLLOW(A) is the set of terminals a that can appear immediately to the right of A in some sentential form, that is, the set of terminals a such that there exists a derivation of the form S ⇒* αAaβ for some α and β.

Note that there may, at some time during the derivation, have been symbols between A and a, but if so, they derived ε and disappeared. Also, if A can be the rightmost symbol in some sentential form, then $ is in FOLLOW(A).
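The table construction can be sketched as follows for the expression grammar E → T E', E' → + T E' | ε, T → F T', T' → * F T' | ε, F → ( E ) | id. The FIRST and FOLLOW sets are supplied by hand, and the dictionary-based table, the names, and the conflict check (which rejects grammars that are not LL(1)) are illustrative assumptions.

```python
EPS, END = "ε", "$"

GRAMMAR = {                      # [] encodes an ε-body
    "E":  [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T":  [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F":  [["(", "E", ")"], ["id"]],
}
FIRST = {
    "E": {"(", "id"}, "E'": {"+", EPS},
    "T": {"(", "id"}, "T'": {"*", EPS},
    "F": {"(", "id"},
}
FOLLOW = {
    "E": {")", END}, "E'": {")", END},
    "T": {"+", ")", END}, "T'": {"+", ")", END},
    "F": {"+", "*", ")", END},
}


def first_of_string(symbols):
    result = set()
    for y in symbols:
        fy = FIRST.get(y, {y})           # a terminal is its own FIRST set
        result |= fy - {EPS}
        if EPS not in fy:
            return result
    result.add(EPS)
    return result


def build_table(grammar):
    table = {}
    for head, bodies in grammar.items():             # step 1
        for body in bodies:
            fs = first_of_string(body)
            targets = fs - {EPS}                     # step 2
            if EPS in fs:
                targets |= FOLLOW[head]              # step 3 ($ is already in FOLLOW)
            for a in targets:
                if (head, a) in table:
                    raise ValueError(f"not LL(1): conflict at M[{head}, {a}]")
                table[(head, a)] = body
    return table                                     # step 4: a missing key means error


M = build_table(GRAMMAR)
for (nt, a), body in sorted(M.items()):
    print(f"M[{nt}, {a}] = {nt} -> {' '.join(body) or EPS}")
```

Representing error entries as missing keys keeps the table sparse; a lookup that fails corresponds to an error entry.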

Parsing algorithm

The parser considers X, the symbol on top of the stack, and a, the current input symbol. These two symbols determine the action to be taken by the parser. Assume that $ is a special token that marks the bottom of the stack and terminates the input string.

if X = a = $ then halt

if X = a ≠ $ then pop(X) and ip++

if X is a terminal and X ≠ a then error

if X is a non-terminal

    then if M[X, a] = {X → UVW}

        then begin pop(X); push(W, V, U) end

        else error

Consider the grammar

E → T E'

E' → + T E' | ε

T → F T'

T' → * F T' | ε

F → ( E ) | id

Parse table for the grammar

      id          +           *           (           )           $
E     E → TE'                             E → TE'
E'                E' → +TE'                           E' → ε      E' → ε
T     T → FT'                             T → FT'
T'                T' → ε      T' → *FT'               T' → ε      T' → ε
F     F → id                              F → (E)
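The parsing algorithm can be sketched with the table above written out as a dictionary. The function name, the choice to return the list of productions used, and the use of SyntaxError for error entries are assumptions made for illustration.

```python
END = "$"

TABLE = {                        # the parse table above; [] encodes an ε-body
    ("E", "id"): ["T", "E'"], ("E", "("): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"], ("E'", ")"): [], ("E'", END): [],
    ("T", "id"): ["F", "T'"], ("T", "("): ["F", "T'"],
    ("T'", "+"): [], ("T'", "*"): ["*", "F", "T'"],
    ("T'", ")"): [], ("T'", END): [],
    ("F", "id"): ["id"], ("F", "("): ["(", "E", ")"],
}
NONTERMINALS = {"E", "E'", "T", "T'", "F"}


def predictive_parse(tokens, start="E"):
    """Return the productions applied, in order (a leftmost derivation).
    Raises SyntaxError if the input is not a sentence of the grammar."""
    tokens = list(tokens) + [END]
    stack = [END, start]                 # $ marks the bottom of the stack
    ip = 0
    output = []
    while True:
        x, a = stack[-1], tokens[ip]
        if x == END and a == END:
            return output                            # X = a = $: halt
        if x not in NONTERMINALS:
            if x != a:
                raise SyntaxError(f"expected {x!r}, found {a!r}")
            stack.pop()                              # X = a ≠ $: pop and advance
            ip += 1
        elif (x, a) in TABLE:
            body = TABLE[(x, a)]
            stack.pop()
            stack.extend(reversed(body))             # leftmost body symbol ends on top
            output.append((x, body))
        else:
            raise SyntaxError(f"no entry M[{x}, {a}]")


for head, body in predictive_parse(["id", "+", "id", "*", "id"]):
    print(head, "->", " ".join(body) or "ε")
```

Pushing the body in reverse order is what `push(W, V, U)` expresses in the pseudocode: U must be on top so that it is expanded or matched first.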

4.3.3 SHIFT-REDUCE PARSING

This parsing method is bottom-up because it attempts to construct a parse tree for

an input string beginning at the leaves (the bottom) and working up towards the root (the top).

We can think of this process as one of "reducing" a string w to the start symbol of a grammar.

At each step a string matching the right side of a production is replaced by the symbol on the

left.

For example, consider the grammar

S → aAcBe

A → Ab | b

B → d

and the string abbcde. We want to reduce this string to S. We scan abbcde looking for substrings that match the right side of some production. The substrings b and d qualify.

Let us choose the leftmost b and replace it by A, the left side of the production A → b. We obtain the string aAbcde. We now find that Ab, b, and d each match the right side of some production. Suppose this time we choose to replace the substring Ab by A, the left side of the production A → Ab. We now obtain aAcde. Then replacing d by B, the left side of the production B → d, we obtain aAcBe. We can now replace this entire string by S.

Each replacement of the right side of a production by the left side in the process

above is called a reduction. Thus, by a sequence of four reductions we were able to reduce

abbcde to S. These reductions, in fact, traced out a rightmost derivation in reverse.
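The four reductions can be replayed mechanically. In the sketch below each step names the position, head and body of the production being reduced; the helper name `reduce_at` and the representation of sentential forms as plain strings (possible here because every grammar symbol is a single character) are illustrative assumptions.

```python
def reduce_at(form, pos, head, body):
    """Replace the occurrence of body that starts at index pos by head."""
    if form[pos:pos + len(body)] != body:
        raise ValueError(f"{body!r} does not occur at position {pos} of {form!r}")
    return form[:pos] + head + form[pos + len(body):]


# The four reductions from the text, as (position, head, body).
steps = [(1, "A", "b"), (1, "A", "Ab"), (3, "B", "d"), (0, "S", "aAcBe")]

forms = ["abbcde"]
for pos, head, body in steps:
    forms.append(reduce_at(forms[-1], pos, head, body))

print(" <= ".join(forms))                  # the reduction sequence
print(" => ".join(reversed(forms)))        # read backwards: a rightmost derivation
```

Reading the list backwards gives S ⇒ aAcBe ⇒ aAcde ⇒ aAbcde ⇒ abbcde, in which the rightmost non-terminal is replaced at every step.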

A substring which is the right side of a production such that replacement of that

substring by the production left side leads eventually to a reduction to the start symbol, by the

reverse of a rightmost derivation, is called a "handle." The process of bottom-up parsing may

be viewed as one of finding and reducing handles.

We must not be misled by the simplicity of this example. In many cases the

leftmost substring which matches the right side of some production is not a handle

because a reduction by the production may yield a string which cannot be reduced to

the start symbol. For example, if we replaced b by A in the second string aAbcde we would

obtain a string aAAcde which cannot be subsequently reduced to S. For this reason, we must

give a more precise definition of a handle. We shall see that if we write a rightmost derivation

in reverse, then the sequence of replacements made in that derivation naturally defines a

sequence of correct replacements that reduce the sentence to the start symbol.

Handles

A handle of a string can be defined as a substring that matches the RHS of some production and whose reduction to the non-terminal on the LHS is a step along the reverse of some rightmost derivation.

Formally, a handle of a right sentential form γ is a pair (A → β, location of β in γ) that satisfies the above property; i.e., A → β is a handle of αβw at the location immediately after the end of α if

S ⇒* αAw ⇒ αβw

where every step is a rightmost derivation step.

A sentential form may have many different handles. Right sentential forms of an unambiguous grammar have exactly one handle.

Example

Consider:

S → aABe

A → Abc | b

B → d

S ⇒ aABe ⇒ aAde ⇒ aAbcde ⇒ abbcde

S → aABe is a handle of aABe in location 1.

B → d is a handle of aAde in location 3.

A → Abc is a handle of aAbcde in location 2.

A → b is a handle of abbcde in location 2.

Handle Pruning

The process of discovering a handle and reducing it to the appropriate left-hand side is called handle pruning. A rightmost derivation in reverse, often called a canonical reduction sequence, is obtained by handle pruning.

To construct a rightmost derivation

S = γ0 ⇒ γ1 ⇒ γ2 ⇒ ... ⇒ γn-1 ⇒ γn = w

apply the following simple algorithm:

for i ← n to 1 by -1

    Find the handle Ai → βi in γi

    Replace βi with Ai to generate γi-1

Example:

Consider the grammar E → E + E | E * E | ( E ) | id and the input string id1 + id2 * id3. The following sequence of reductions reduces id1 + id2 * id3 to the start symbol E.

Right-Sentential Form    Handle    Reducing Production
id1 + id2 * id3          id1       E → id
E + id2 * id3            id2       E → id
E + E * id3              id3       E → id
E + E * E                E * E     E → E * E
E + E                    E + E     E → E + E

There are two problems that must be solved if we are to automate parsing by

handle pruning. The first is how to locate a handle in a right-sentential form, and the second is

what production to choose in case there is more than one production with the same right side.

4.3.3.1 STACK IMPLEMENTATION OF SHIFT-REDUCE

PARSING

The data structures used to implement shift-reduce parsing are a stack and an input buffer. We use $ to mark the bottom of the stack and the right end of the input.

Stack Input

$ w$

The parser operates by shifting zero or more input symbols onto the stack until a handle is on top of the stack. The parser then reduces the handle to the left side of the appropriate production. The parser repeats this cycle until it has detected an error or until the stack contains the start symbol and the input is empty:

Stack Input

$S $

In this configuration the parser halts and announces successful completion of parsing.

Although the primary operations of the parser are shift and reduce, there are actually four possible actions a shift-reduce parser can make: (1) shift, (2) reduce, (3) accept, and (4) error.

(1) In a shift action, the next input symbol is shifted to the top of the stack.

(2) In a reduce action, the parser knows the right end of the handle is at the top of the stack. It must then locate the left end of the handle within the stack and decide with what non-terminal to replace the handle.

(3) In an accept action, the parser announces successful completion of parsing.

(4) In an error action, the parser discovers that a syntax error has occurred and calls an error recovery routine.

Example: The following figure shows the actions a shift-reduce parser might take in parsing the input string id1 + id2 * id3.

Stack            Input                Action
$                id1 + id2 * id3 $    shift
$ id1            + id2 * id3 $        reduce by E → id
$ E              + id2 * id3 $        shift
$ E +            id2 * id3 $          shift
$ E + id2        * id3 $              reduce by E → id
$ E + E          * id3 $              shift
$ E + E *        id3 $                shift
$ E + E * id3    $                    reduce by E → id
$ E + E * E      $                    reduce by E → E * E
$ E + E          $                    reduce by E → E + E
$ E              $                    accept

Figure 4.5 Shift reduce parsing actions
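The shift/reduce/accept/error cycle traced in Figure 4.5 can be sketched in a few lines of Python. This is only an illustration, not the implementation the notes have in mind: it assumes the expression grammar E -> E + E | E * E | ( E ) | id, and because that grammar is ambiguous it resolves the choice between shifting and reducing with an assumed precedence table (`PRECEDENCE`, with * binding tighter than +). The names `find_handle` and `shift_reduce_parse` are hypothetical.

```python
# Assumed grammar: E -> E + E | E * E | ( E ) | id
# Assumption: * binds tighter than +, and both are left-associative.
PRECEDENCE = {"+": 1, "*": 2}


def find_handle(stack, lookahead):
    """Return (production, length) if a handle is on top of the stack, else None."""
    if stack[-1:] == ["id"]:
        return "E -> id", 1
    if stack[-3:] == ["(", "E", ")"]:
        return "E -> ( E )", 3
    if (len(stack) >= 3 and stack[-1] == "E" and stack[-3] == "E"
            and stack[-2] in PRECEDENCE):
        op = stack[-2]
        # Reduce unless the next input operator binds tighter than op.
        if PRECEDENCE.get(lookahead, 0) <= PRECEDENCE[op]:
            return f"E -> E {op} E", 3
    return None


def shift_reduce_parse(tokens):
    """Parse a token list and return the list of actions taken."""
    stack = ["$"]                    # $ marks the bottom of the stack
    tokens = list(tokens) + ["$"]    # $ marks the right end of the input
    pos = 0
    trace = []
    while True:
        lookahead = tokens[pos]
        if stack == ["$", "E"] and lookahead == "$":
            trace.append("accept")
            return trace
        handle = find_handle(stack, lookahead)
        if handle:
            production, length = handle
            del stack[-length:]      # pop the handle
            stack.append("E")        # push the left side of the production
            trace.append(f"reduce by {production}")
        elif lookahead != "$":
            stack.append(lookahead)  # shift the next input symbol
            pos += 1
            trace.append("shift")
        else:
            raise SyntaxError(f"syntax error with stack {stack}")


for action in shift_reduce_parse(["id", "+", "id", "*", "id"]):
    print(action)
```

With the assumed precedence table, the printed actions follow the same order as the Action column of Figure 4.5. A table-driven LR parser makes the same decisions from a parse table instead of the hand-written handle test used here.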

4.3.3.2 CONSTRUCTING A PARSE TREE

To construct a parse tree explicitly, we build it as the shift-reduce parse proceeds. The strategy is to maintain a forest of partially completed derivation trees as we parse bottom-up. With each symbol on the stack we associate a pointer to a tree whose root is that symbol and whose yield is the string of terminals that have been reduced to that symbol, perhaps by a long series of reductions. At the end of the shift-reduce parse, the start symbol remaining on the stack will have the entire parse tree associated with it. The bottom-up tree construction process has two aspects.

(1) When we shift an input symbol a onto the stack, we create a one-node tree labeled a. Both the root and the yield of this tree are a, and the yield truly represents the string of terminals “reduced” (by zero reductions) to the symbol a.

(2) When we reduce X1X2...Xn to A, we create a new node labeled A. Its children, from left to right, are the roots of the trees for X1, X2, ..., Xn. If for all i the tree for Xi has yield xi, then the yield of the new tree is x1x2...xn. This string has in fact been reduced to A by a series of reductions culminating in the present one. As a special case, if we reduce ε to A, we create a node labeled A with one child labeled ε.
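The two aspects above can be sketched as a stack of tree nodes. This is a minimal illustration under stated assumptions: the `Node` class and the helpers `shift` and `reduce_to` are hypothetical names, and the sequence of actions is hard-coded for the input id1 + id2 * id3 rather than chosen by a real parser.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    children: list = field(default_factory=list)

    def tree_yield(self):
        """Return the leaf labels from left to right (an ε leaf contributes nothing)."""
        if not self.children:
            return [] if self.label == "ε" else [self.label]
        leaves = []
        for child in self.children:
            leaves.extend(child.tree_yield())
        return leaves


def shift(stack, symbol):
    # Aspect (1): a shifted symbol becomes a one-node tree.
    stack.append(Node(symbol))


def reduce_to(stack, lhs, rhs_length):
    # Aspect (2): the trees popped for X1...Xn become the children of a new node.
    if rhs_length == 0:
        children = [Node("ε")]       # special case: reducing ε to lhs
    else:
        children = stack[-rhs_length:]
        del stack[-rhs_length:]
    stack.append(Node(lhs, children))


# Hard-coded actions for id1 + id2 * id3: "shift", or the length of the
# right side being reduced to E.
tokens = iter(["id1", "+", "id2", "*", "id3"])
actions = ["shift", 1, "shift", "shift", 1, "shift", "shift", 1, 3, 3]

stack = []
for action in actions:
    if action == "shift":
        shift(stack, next(tokens))
    else:
        reduce_to(stack, "E", action)

root = stack[0]
print(root.label, root.tree_yield())
```

When the loop ends, the single node left on the stack is the root of the whole parse tree, and its yield is the original input string.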


Figure 4.6 Parse tree construction: (a) after shifting id1; (b) after reducing id1 to E; (c) after reducing id1 + id2 * id3 to E + E; (d) at completion.


4.4 SLR PARSERS

SLR (Simple LR) is the weakest of the three LR methods (SLR, canonical LR, LALR) in terms of the number of grammars for which it succeeds, but it is the easiest to implement.

We will need some definitions:

1. An LR(0) item (or simply item) of a grammar is a production rule augmented with a position marker (a dot) somewhere within its right-hand side.

For example, the production A -> XYZ yields the following four items:

A -> .XYZ

A -> X.YZ

A -> XY.Z

A -> XYZ.

Intuitively, an item indicates how much of a production we have seen at a given point in the parsing process.
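As a small illustration of the definition, the items of a production can be enumerated by placing the dot at every position of the right-hand side. The function name `lr0_items` is a hypothetical choice for this sketch.

```python
def lr0_items(lhs, rhs):
    """Return every LR(0) item of the production lhs -> rhs as a string."""
    items = []
    for dot in range(len(rhs) + 1):      # the dot can sit in len(rhs) + 1 places
        before = "".join(rhs[:dot])      # part of the right side already seen
        after = "".join(rhs[dot:])       # part still expected
        items.append(f"{lhs} -> {before}.{after}")
    return items


for item in lr0_items("A", ["X", "Y", "Z"]):
    print(item)
```

A production with n symbols on its right-hand side therefore has n + 1 items, which is why A -> XYZ yields four.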

4.4.1 Constructing an SLR parse table

S: simple

L: left-to-right scan of input

R: rightmost derivation in reverse

Part 1: Create the set of LR(0) states for the parse table

For the rules in an augmented grammar, G', begin at rule zero and follow the steps below:

State creation steps

1. Apply the start operation.

2. Complete the state, then:

a. Use one read operation on each symbol C (non-terminal or terminal) that follows a dot in the current state to create more states.

b. Apply the complete operation to the new states.

c. Repeat steps a and b until no more new states can be formed.

Operations defined

A, S, X: non-terminals

w, x, y, z: strings of terminals and/or non-terminals

C: one terminal or one non-terminal

start: if S is the start symbol of the augmented grammar with [S -> w] as a production rule, then [S -> .w] is the item associated with the start state.

read: if [A -> x.Cz] is an item in some state, then [A -> xC.z] is associated with some other state. When performing a read, all the items with the dot before the same C are associated with the same state. (Note that the symbol after the dot may be either a terminal or a non-terminal.)

complete: if [A -> x.Xy] is an item, then every rule of the grammar of the form [X -> .z] must be included within this state. Repeat adding items until no new items can be added. (Note that here the dot is before a non-terminal.)

Example for Part 1

Consider the augmented grammar G':

0. S' -> S$

1. S -> aSbS

2. S -> a

The sets of LR(0) items, which form the states:
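The start, read, and complete operations can be sketched in Python and applied to this grammar. This is an illustrative sketch, not the notes' own procedure: items are represented as (production number, dot position) pairs, the function names `complete`, `read`, `build_states`, and `show` are hypothetical, and the sketch assumes that a read is also performed over the end marker $. The state numbers it prints depend on the traversal order and need not match any particular numbering.

```python
# Augmented grammar G' from the example; rule numbers are the list indices.
GRAMMAR = [
    ("S'", ("S", "$")),
    ("S", ("a", "S", "b", "S")),
    ("S", ("a",)),
]
NONTERMINALS = {lhs for lhs, _ in GRAMMAR}


def complete(items):
    """complete: add [X -> .z] for every non-terminal X that follows a dot."""
    state = set(items)
    changed = True
    while changed:
        changed = False
        for prod, dot in list(state):
            rhs = GRAMMAR[prod][1]
            if dot < len(rhs) and rhs[dot] in NONTERMINALS:
                for index, (lhs, _) in enumerate(GRAMMAR):
                    if lhs == rhs[dot] and (index, 0) not in state:
                        state.add((index, 0))
                        changed = True
    return frozenset(state)


def read(state, symbol):
    """read: move the dot over symbol in every item that has the dot before it."""
    moved = {(prod, dot + 1) for prod, dot in state
             if dot < len(GRAMMAR[prod][1]) and GRAMMAR[prod][1][dot] == symbol}
    return complete(moved)


def build_states():
    """Apply start, then read and complete until no new states appear."""
    states = [complete({(0, 0)})]    # start: [S' -> .S$], then complete
    transitions = {}
    i = 0
    while i < len(states):
        state = states[i]
        symbols = sorted({GRAMMAR[p][1][d] for p, d in state
                          if d < len(GRAMMAR[p][1])})
        for symbol in symbols:
            target = read(state, symbol)
            if target not in states:
                states.append(target)
            transitions[(i, symbol)] = states.index(target)
        i += 1
    return states, transitions


def show(item):
    """Format an item such as (1, 1) as 'S -> a.SbS'."""
    prod, dot = item
    lhs, rhs = GRAMMAR[prod]
    return f"{lhs} -> {''.join(rhs[:dot])}.{''.join(rhs[dot:])}"


states, transitions = build_states()
for number, state in enumerate(states):
    print(f"State {number}:", ", ".join(sorted(show(item) for item in state)))
for (source, symbol), target in sorted(transitions.items()):
    print(f"State {source} --{symbol}--> State {target}")
```

Under these assumptions the start state contains S' -> .S$, S -> .aSbS, and S -> .a, and reading a from any state that has a dot before a leads to the same state, because all items with the dot before the same symbol are grouped together.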


S-ar putea să vă placă și

- Unit-3 Cs6660-Compiler DesignDocument66 paginiUnit-3 Cs6660-Compiler DesignBuvana MurugaÎncă nu există evaluări

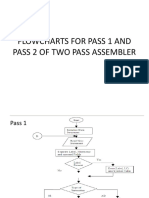

- Flowcharts For Pass 1 and Pass 2 ofDocument4 paginiFlowcharts For Pass 1 and Pass 2 ofarunlaldsÎncă nu există evaluări

- Chapter Three: Language Translation IssuesDocument18 paginiChapter Three: Language Translation IssuesFebenÎncă nu există evaluări

- 003chapter 3 - Syntax AnalysisDocument171 pagini003chapter 3 - Syntax AnalysisEyoabÎncă nu există evaluări

- Chapter 3 - Syntax AnalysisDocument160 paginiChapter 3 - Syntax Analysissamlegend2217Încă nu există evaluări

- Module 3-CD-NOTESDocument12 paginiModule 3-CD-NOTESlekhanagowda797Încă nu există evaluări

- Topic 2: Language Design Principles: 2.1 Describing Syntax and SemanticsDocument14 paginiTopic 2: Language Design Principles: 2.1 Describing Syntax and SemanticsMuhd FahmiÎncă nu există evaluări

- Programming Language HandoutDocument75 paginiProgramming Language HandoutDr Narayana Swamy RamaiahÎncă nu există evaluări

- Department of Computer Science: Subject Code: 18Kp2Cs05Document24 paginiDepartment of Computer Science: Subject Code: 18Kp2Cs05IT130 Fenil RanganiÎncă nu există evaluări

- What Is Syntax Analysis?: Syntax Analysis Is A Second Phase of The Compiler Design Process inDocument8 paginiWhat Is Syntax Analysis?: Syntax Analysis Is A Second Phase of The Compiler Design Process inRamna SatarÎncă nu există evaluări

- Syntax Analysis: Chapter - 4Document41 paginiSyntax Analysis: Chapter - 4Amir RajaÎncă nu există evaluări

- Compiler Design 2marksDocument21 paginiCompiler Design 2markslenaprasannaÎncă nu există evaluări

- Compiler AssignmentDocument6 paginiCompiler AssignmentNasir BilalÎncă nu există evaluări

- HW 6Document28 paginiHW 6Intekhab KhanÎncă nu există evaluări

- Compiler AnswersDocument18 paginiCompiler AnswersAnupam VatsÎncă nu există evaluări

- Lexical Analyzer (Compiler Contruction)Document6 paginiLexical Analyzer (Compiler Contruction)touseefaq100% (1)

- Compiler Design: ObjectivesDocument45 paginiCompiler Design: Objectivesminujose111_20572463Încă nu există evaluări

- Cs09 404 Programming Paradigm (Module 1 Notes)Document24 paginiCs09 404 Programming Paradigm (Module 1 Notes)Rohith BhaskaranÎncă nu există evaluări

- Syntax AnalyzerDocument65 paginiSyntax AnalyzerAsma FayyazÎncă nu există evaluări

- 3 Syntax AnalysisDocument22 pagini3 Syntax AnalysisAslam khanÎncă nu există evaluări

- System SW 4Document11 paginiSystem SW 4Surendra Singh ChauhanÎncă nu există evaluări

- Programming Language Syntax and SemanticsDocument54 paginiProgramming Language Syntax and SemanticskiranÎncă nu există evaluări

- Program Translation.: Idea: Use The Computer Itself To Ease The Programmer's WorkDocument26 paginiProgram Translation.: Idea: Use The Computer Itself To Ease The Programmer's WorkVerne Jules CabadingÎncă nu există evaluări

- CSC 318 Class NotesDocument21 paginiCSC 318 Class NotesYerumoh DanielÎncă nu există evaluări

- Software Tool For Translating Pseudocode To A Programming LanguageDocument9 paginiSoftware Tool For Translating Pseudocode To A Programming LanguageJames MorenoÎncă nu există evaluări

- Introduction To CompilerDocument14 paginiIntroduction To Compilerakhtar abbasÎncă nu există evaluări

- Compiler Design Visue: Q.1 What Is The Challenges of Compiler Design?Document16 paginiCompiler Design Visue: Q.1 What Is The Challenges of Compiler Design?trupti.kodinariya9810Încă nu există evaluări

- 1 Principles of Compiler DesignDocument89 pagini1 Principles of Compiler DesignPadmavathi BÎncă nu există evaluări

- UNIT 3 Syntax Analysis-Part1: Harshita SharmaDocument70 paginiUNIT 3 Syntax Analysis-Part1: Harshita SharmaVishu AasliyaÎncă nu există evaluări

- Lesson 16Document36 paginiLesson 16sdfgedr4tÎncă nu există evaluări

- Compiler Design Oral QBankDocument17 paginiCompiler Design Oral QBankPrashant RautÎncă nu există evaluări

- CS8602 CD Unit 2Document43 paginiCS8602 CD Unit 2Dr. K. Sivakumar - Assoc. Professor - AIDS NIETÎncă nu există evaluări

- Compiler DesignDocument17 paginiCompiler DesignGayathri RamasamyÎncă nu există evaluări

- CD Unit - 1 Lms NotesDocument58 paginiCD Unit - 1 Lms Notesashok koppoluÎncă nu există evaluări

- CD - 2 Marks Questions With AnswersDocument21 paginiCD - 2 Marks Questions With Answersprudhvi saiÎncă nu există evaluări

- PPL CDFDocument21 paginiPPL CDFHussain SaddamÎncă nu există evaluări

- Compiler L 400Document25 paginiCompiler L 400Reindolf ChambasÎncă nu există evaluări

- PPL CDFDocument21 paginiPPL CDFHussain SaddamÎncă nu există evaluări

- Chapter 3 Syntax Analysis (Parsing)Document29 paginiChapter 3 Syntax Analysis (Parsing)Feraol NegeraÎncă nu există evaluări

- CompilerDocument2 paginiCompilerElisante DavidÎncă nu există evaluări

- Compiler Design - Set 1: 1. What Is A Compiler?Document18 paginiCompiler Design - Set 1: 1. What Is A Compiler?Poojith GunukulaÎncă nu există evaluări

- CD Notes-2Document26 paginiCD Notes-2ajunatÎncă nu există evaluări

- Group Members: Sohail Memon (429) Abdul Qayoom (350) Rehmatullah (408) Muhammad Bilal (392) Noor-ul-AinDocument15 paginiGroup Members: Sohail Memon (429) Abdul Qayoom (350) Rehmatullah (408) Muhammad Bilal (392) Noor-ul-AinSohail JaffarÎncă nu există evaluări

- Parsing PDFDocument6 paginiParsing PDFAwaisÎncă nu există evaluări

- 1.Q and A Compiler DesignDocument20 pagini1.Q and A Compiler DesignSneha SathiyamÎncă nu există evaluări

- Lecture 4Document11 paginiLecture 4Chuks ValentineÎncă nu există evaluări

- CC QuestionsDocument9 paginiCC QuestionsjagdishÎncă nu există evaluări

- Semantic AnalysisDocument19 paginiSemantic Analysissuperstar538Încă nu există evaluări

- CD Important QuestionsDocument9 paginiCD Important Questionsganesh moorthiÎncă nu există evaluări

- System Programming Unit-2 by Arun Pratap SinghDocument82 paginiSystem Programming Unit-2 by Arun Pratap SinghArunPratapSingh100% (1)

- Language ProcessorsDocument41 paginiLanguage ProcessorsjaydipÎncă nu există evaluări

- Compiler DesignDocument7 paginiCompiler Designchaitanya518Încă nu există evaluări

- CD NotesDocument69 paginiCD NotesgbaleswariÎncă nu există evaluări

- Compiler Construction NotesDocument61 paginiCompiler Construction NotesmatloobÎncă nu există evaluări

- Compiler Design Two MarksDocument17 paginiCompiler Design Two MarksJanarish Saju CÎncă nu există evaluări

- UNIT-2 History of C' LanguageDocument15 paginiUNIT-2 History of C' LanguagelatharaviammudivyaÎncă nu există evaluări

- Python QB With AnswersDocument73 paginiPython QB With AnswersKRSHNA PRIYAÎncă nu există evaluări

- Compiler Construction and PhasesDocument8 paginiCompiler Construction and PhasesUmme HabibaÎncă nu există evaluări

- Programming Paradigms PDFDocument10 paginiProgramming Paradigms PDFsssadangi100% (1)

- CD Unit 2Document20 paginiCD Unit 2ksai.mbÎncă nu există evaluări

- System Software Module 1Document67 paginiSystem Software Module 1arunlalds0% (1)

- System Software Module 3Document109 paginiSystem Software Module 3arunlaldsÎncă nu există evaluări

- System Software Module 2Document156 paginiSystem Software Module 2arunlalds0% (1)

- Module 3Document23 paginiModule 3arunlaldsÎncă nu există evaluări

- Module 2Document35 paginiModule 2arunlaldsÎncă nu există evaluări

- Module 3Document23 paginiModule 3arunlaldsÎncă nu există evaluări

- 4 ProjectschedulingDocument21 pagini4 ProjectschedulingarunlaldsÎncă nu există evaluări

- Ss Module 1Document24 paginiSs Module 1noblesivankuttyÎncă nu există evaluări

- Assembler Module 1-1Document23 paginiAssembler Module 1-1arunlaldsÎncă nu există evaluări

- AssemblerDocument23 paginiAssemblerarunlaldsÎncă nu există evaluări

- 2.review TechniquesDocument21 pagini2.review TechniquesarunlaldsÎncă nu există evaluări

- Ss Module 1Document24 paginiSs Module 1noblesivankuttyÎncă nu există evaluări

- 5 RiskmanagementDocument40 pagini5 RiskmanagementarunlaldsÎncă nu există evaluări

- Product Metrics For SoftwareDocument26 paginiProduct Metrics For SoftwarearunlaldsÎncă nu există evaluări

- 2 Project&ProcessmetricsDocument30 pagini2 Project&ProcessmetricsarunlaldsÎncă nu există evaluări

- Estimation For Software ProjectsDocument57 paginiEstimation For Software ProjectsarunlaldsÎncă nu există evaluări

- 2.testing Conventional Applications1Document56 pagini2.testing Conventional Applications1arunlaldsÎncă nu există evaluări

- 3.OO TestingDocument9 pagini3.OO TestingarunlaldsÎncă nu există evaluări

- 4 TestingwebapplicationsDocument34 pagini4 TestingwebapplicationsarunlaldsÎncă nu există evaluări

- 4.software Configuration Management-s3MCADocument25 pagini4.software Configuration Management-s3MCAarunlaldsÎncă nu există evaluări

- 3.software Quality AssuranceDocument22 pagini3.software Quality AssurancearunlaldsÎncă nu există evaluări

- 1.software TestingDocument40 pagini1.software TestingarunlaldsÎncă nu există evaluări

- Project Management ConceptsDocument19 paginiProject Management ConceptsarunlaldsÎncă nu există evaluări

- 1.quality ConceptsDocument12 pagini1.quality ConceptsarunlaldsÎncă nu există evaluări

- Pressman 7 CH 15Document23 paginiPressman 7 CH 15arunlaldsÎncă nu există evaluări

- Pressman 7 CH 8Document31 paginiPressman 7 CH 8arunlaldsÎncă nu există evaluări

- Chapter 05 1Document20 paginiChapter 05 1arunlaldsÎncă nu există evaluări

- Chapter 5 - Understanding RequirementsDocument6 paginiChapter 5 - Understanding RequirementsDanish PawaskarÎncă nu există evaluări

- Flexfield DocumentDocument84 paginiFlexfield DocumentAvishek BoseÎncă nu există evaluări

- JU CD Lab ManualDocument26 paginiJU CD Lab ManualInbox 01Încă nu există evaluări

- Cheat Sheet Final FinalDocument2 paginiCheat Sheet Final FinalayoubÎncă nu există evaluări

- Reference Manual: X YstemsDocument242 paginiReference Manual: X Ystemsecraven7Încă nu există evaluări

- Compiler Vs InterpreterDocument4 paginiCompiler Vs InterpreterMarcel ShieldsÎncă nu există evaluări

- Compiler Design Chapter 2Document14 paginiCompiler Design Chapter 2Vuggam VenkateshÎncă nu există evaluări

- Lilypond ExtendingDocument47 paginiLilypond ExtendingmaraujoÎncă nu există evaluări

- Mapreduce LabDocument36 paginiMapreduce LabNhơn PhạmÎncă nu există evaluări

- Lab Manual SPOSLDocument60 paginiLab Manual SPOSLddÎncă nu există evaluări

- Chapter 3 - Scanning: 3.1 Kinds of TokensDocument17 paginiChapter 3 - Scanning: 3.1 Kinds of TokenslikufaneleÎncă nu există evaluări

- Experiment - 1: Aim-Develop Lexical Analyzer To Recognize Few PatternsDocument25 paginiExperiment - 1: Aim-Develop Lexical Analyzer To Recognize Few PatternsPranav RathiÎncă nu există evaluări

- Kaleidoscope - Implementing A Language With LLVM in Objective CamlDocument142 paginiKaleidoscope - Implementing A Language With LLVM in Objective Caml王遠圗Încă nu există evaluări

- Principles of Compiler DesignDocument35 paginiPrinciples of Compiler DesignRavi Raj100% (2)

- EnglishDocument17 paginiEnglishRahul RajÎncă nu există evaluări

- CS6612 Compiler RecordDocument68 paginiCS6612 Compiler RecordLOKESH V [34]Încă nu există evaluări

- Chadha ThesisDocument166 paginiChadha Thesisdarwin_huaÎncă nu există evaluări

- Visual Basic Language Specification 10.0Document593 paginiVisual Basic Language Specification 10.0y9831590Încă nu există evaluări

- Introduction To File Input and OutputDocument7 paginiIntroduction To File Input and OutputLyle Andrei SotozaÎncă nu există evaluări

- Chapter 3Document9 paginiChapter 3Antehun asefaÎncă nu există evaluări

- 12 SlideDocument64 pagini12 SlideVn196622Încă nu există evaluări

- Describing Syntax and Semantics: Isbn 0-321-49362-1Document55 paginiDescribing Syntax and Semantics: Isbn 0-321-49362-1ranga231980Încă nu există evaluări

- Lex Yacc TutorialDocument38 paginiLex Yacc TutorialAbhijit DasÎncă nu există evaluări

- Frama Clang ManualDocument23 paginiFrama Clang ManualArtix FoxÎncă nu există evaluări

- Lab Details and Specification: Sir Vishveshwaraiah Institute of Science and Technology, MadanapalleDocument93 paginiLab Details and Specification: Sir Vishveshwaraiah Institute of Science and Technology, MadanapallePurushothaman MannanÎncă nu există evaluări

- Literals, Variables and Data TypesDocument60 paginiLiterals, Variables and Data Typessreenu_pesÎncă nu există evaluări

- Message Spam Classification Using Machine Learning ReportDocument28 paginiMessage Spam Classification Using Machine Learning ReportDhanusri RameshÎncă nu există evaluări

- Compiler Design Ch1Document13 paginiCompiler Design Ch1Vuggam VenkateshÎncă nu există evaluări

- System ProgrammingDocument26 paginiSystem ProgrammingMohan Rao MamdikarÎncă nu există evaluări

- All MCQDocument59 paginiAll MCQTHANGAMARI100% (1)

- OpenNLP Developer 1Document2 paginiOpenNLP Developer 1Safdar HusainÎncă nu există evaluări