Documente Academic

Documente Profesional

Documente Cultură

Text Processing in R.R

Încărcat de

Ken MatsudaDrepturi de autor

Formate disponibile

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentDrepturi de autor:

Formate disponibile

Text Processing in R.R

Încărcat de

Ken MatsudaDrepturi de autor:

Formate disponibile

# R code for basic text cleaning tutorial

#0. Preliminaries

rm(list = ls())

setwd("~/Desktop")

# install.packages("stringr", dependencies = TRUE)

library(stringr)

#1. Basic Text Cleaning

my_string <- "Example STRING, with numbers (12, 15 and also 10.2)?!"

lower_string <- tolower(my_string)

second_string <- "Wow, two sentences."

my_string <- paste(my_string,second_string,sep = " ")

my_string_vector <- str_split(my_string, "!")[[1]]

grep("\\?",my_string_vector)

grepl("\\?",my_string_vector[1])

str_replace_all(my_string, "e","___")

str_extract_all(my_string,"[0-9]+")

# Function to clean and tokenize a string

Clean_String <- function(string){

# Lowercase

temp <- tolower(string)

# Remove everything that is not a number or letter (may want to keep more

# stuff in your actual analyses):

temp <- stringr::str_replace_all(temp,"[^a-zA-Z\\s]", " ")

# Shrink down to just one white space:

temp <- stringr::str_replace_all(temp,"[\\s]+", " ")

# Split it on white spaces:

temp <- stringr::str_split(temp, " ")[[1]]

# Get rid of trailing "" if necessary:

indexes <- which(temp == "")

if (length(indexes) > 0) {

temp <- temp[-indexes]

}

return(temp)

}

sentence <- "The term 'data science' (originally used interchangeably with

'datalogy') has existed for over thirty years and was used initially as a

substitute for computer science by Peter Naur in 1960."

clean_sentence <- Clean_String(sentence)

print(clean_sentence)

# function to clean text

Clean_Text_Block <- function(text){

# Get rid of blank lines

indexes <- which(text == "")

if (length(indexes) > 0) {

text <- text[-indexes]

}

# See if we are left with any valid text:

if (length(text) == 0) {

cat("There was no text in this document! \n")

to_return <- list(num_tokens = 0,

unique_tokens = 0,

text = "")

} else {

# If there is valid text, process it.

# Loop through the lines in the text and combine them:

clean_text <- NULL

for (i in 1:length(text)) {

# add them to a vector

clean_text <- c(clean_text, Clean_String(text[i]))

}

# Calculate the number of tokens and unique tokens and return them in a

# named list object.

num_tok <- length(clean_text)

num_uniq <- length(unique(clean_text))

to_return <- list(num_tokens = num_tok,

unique_tokens = num_uniq,

text = clean_text)

}

return(to_return)

}

# make sure that you save this file in your current working directory (in my

# case the Desktop)

con <- file("Obama_Speech_2-24-09.txt", "r", blocking = FALSE)

text <- readLines(con)

close(con)

clean_speech <- Clean_Text_Block(text)

str(clean_speech)

# Read in the file

con <- file("Obama_Speech_1-27-10.txt", "r", blocking = FALSE)

text2 <- readLines(con)

close(con)

# Clean and tokenize the text

clean_speech2 <- Clean_Text_Block(text2)

#2. Create a Document Term Matrix

# install.packages("Rcpp",dependencies = T)

# install.packages("RcppArmadillo",dependencies = T)

# install.packages("BH",dependencies = T)

# jsut make sure you saved the cpp file to the currentworking directory

Rcpp::sourceCpp('Generate_Document_Word_Matrix.cpp')

#' Create a list containing a vector of tokens in each document for each

#' document. These can be extracted from the cleaned text objects as follows.

doc_list <- list(clean_speech$text, clean_speech2$text)

#' Create a vector of document lengths (in tokens)

doc_lengths <- c(clean_speech$num_tokens,clean_speech2$num_tokens)

#' Generate a vector containing the unique tokens across all documents.

unique_words <- unique(c(clean_speech$text,clean_speech2$text))

#' The number of unique tokens across all documents

n_unique_words <- length(unique_words)

#' The number of documents we are dealing with.

ndoc <- 2

#' Now feed all of this information to the function as follows:

Doc_Term_Matrix <- Generate_Document_Word_Matrix(

number_of_docs = ndoc,

number_of_unique_words = n_unique_words,

unique_words = unique_words,

Document_Words = doc_list,

Document_Lengths = doc_lengths

)

#' Make sure to add column names to Doc_Term_Matrix, then take a look:

colnames(Doc_Term_Matrix) <- unique_words

#3. Text processing the easy way

#' load the package and generate the document-term matrix

require(quanteda)

docs <- c(paste0(text,collapse = " "),paste0(text2,collapse = " "))

doc_term_matrix <- dfm(docs, stem = FALSE)

# find the additional terms captured by quanteda

missing <- doc_term_matrix@Dimnames$features %in% colnames(Doc_Term_Matrix)

# just includes all terms with hypens and '

doc_term_matrix@Dimnames$features[which(missing == 0)]

S-ar putea să vă placă și

- 12 Cs Cbse QP ProgramsDocument10 pagini12 Cs Cbse QP Programsroyalfancy704Încă nu există evaluări

- Loops: Genome 559: Introduction To Statistical and Computational Genomics Prof. James H. ThomasDocument27 paginiLoops: Genome 559: Introduction To Statistical and Computational Genomics Prof. James H. ThomasRahul JagdaleÎncă nu există evaluări

- Lab Digital Assignment 6 Data Visualization: Name: Samar Abbas Naqvi Registration Number: 19BCE0456Document11 paginiLab Digital Assignment 6 Data Visualization: Name: Samar Abbas Naqvi Registration Number: 19BCE0456SAMAR ABBAS NAQVI 19BCE0456Încă nu există evaluări

- Python BasicDocument22 paginiPython BasicSuman HalderÎncă nu există evaluări

- PythonDocument15 paginiPythonBizhub Konica MinoltaÎncă nu există evaluări

- Python - Tutorial: Suman HalderDocument28 paginiPython - Tutorial: Suman HalderSuman HalderÎncă nu există evaluări

- Python BasicDocument34 paginiPython BasicSuman HalderÎncă nu există evaluări

- p3 Python ProjectDocument4 paginip3 Python ProjectDaniella VargasÎncă nu există evaluări

- Text Mining Package and Datacleaning: #Cleaning The Text or Text TransformationDocument6 paginiText Mining Package and Datacleaning: #Cleaning The Text or Text TransformationArush sambyalÎncă nu există evaluări

- Progammin in R 2. Workspace and FilesDocument3 paginiProgammin in R 2. Workspace and FilesLuisBerumenMartinezÎncă nu există evaluări

- Lab01 PDFDocument17 paginiLab01 PDFJemelyn De Julian TesaraÎncă nu există evaluări

- DictionaryDocument3 paginiDictionaryVamshika KattaÎncă nu există evaluări

- Rica PythonDocument7 paginiRica PythonSamielyn Ingles RamosÎncă nu există evaluări

- Day 1Document13 paginiDay 1Hein Khant ThuÎncă nu există evaluări

- Vasu Nagar CS Report FileDocument38 paginiVasu Nagar CS Report Filenagar.vasu0810Încă nu există evaluări

- 6B While Loops PDFDocument23 pagini6B While Loops PDFSudesh KumarÎncă nu există evaluări

- Answer 1Document12 paginiAnswer 1sanju.kumar9363Încă nu există evaluări

- Sahil Malhotra 16 BCE 0113 Web Mining L51+L52: 1. Universal Crawling 1.1. CODEDocument11 paginiSahil Malhotra 16 BCE 0113 Web Mining L51+L52: 1. Universal Crawling 1.1. CODEsahilÎncă nu există evaluări

- Dictionaries in PythonDocument15 paginiDictionaries in PythonPritika SachdevaÎncă nu există evaluări

- Solution ProgrammingQuestions Part2Document7 paginiSolution ProgrammingQuestions Part2Rakshitha D NÎncă nu există evaluări

- Python LabDocument6 paginiPython LabTanuÎncă nu există evaluări

- Sudoku CheckerDocument2 paginiSudoku CheckerjustincbarclayÎncă nu există evaluări

- Julia Basic CommandsDocument10 paginiJulia Basic CommandsLazarus PittÎncă nu există evaluări

- Python Lab ManualDocument19 paginiPython Lab ManualRahul YadavÎncă nu există evaluări

- ExerciseDocument3 paginiExerciseShruti LakheÎncă nu există evaluări

- 2023 Aug How To Produce Data For A Neural networkORGDocument6 pagini2023 Aug How To Produce Data For A Neural networkORGAli Riza SARALÎncă nu există evaluări

- J.K. Institute of Applied Physics and Technology: Natural Language Processing AssignmentDocument22 paginiJ.K. Institute of Applied Physics and Technology: Natural Language Processing AssignmentVivek AryaÎncă nu există evaluări

- UntitledDocument59 paginiUntitledSylvin GopayÎncă nu există evaluări

- Tmcode Text MiningDocument2 paginiTmcode Text Miningratan203Încă nu există evaluări

- R Lab ProgramDocument21 paginiR Lab ProgramSachin ShimoghaÎncă nu există evaluări

- Ejercicio #1Document3 paginiEjercicio #1Michelle Galarza S.Încă nu există evaluări

- Python SolutionsDocument11 paginiPython Solutions4NM21EC011 AMBALIKA HEGDE KÎncă nu există evaluări

- Couesera Python On OsDocument3 paginiCouesera Python On OsJashwanth REddyÎncă nu există evaluări

- Python Programming Lab: Data Types in PythonDocument24 paginiPython Programming Lab: Data Types in PythonUjwala BhogaÎncă nu există evaluări

- Experiment 1: Write A Python Program To Find Sum of Series (1+ (1+2) + (1+2+3) +-+ (1+2+3+ - +N) )Document20 paginiExperiment 1: Write A Python Program To Find Sum of Series (1+ (1+2) + (1+2+3) +-+ (1+2+3+ - +N) )jai geraÎncă nu există evaluări

- VariabDocument9 paginiVariabHein Khant ThuÎncă nu există evaluări

- Xii SC Practical AssignmentDocument20 paginiXii SC Practical AssignmentSakhyam BhoiÎncă nu există evaluări

- R Code NBDocument3 paginiR Code NBbrahmesh_smÎncă nu există evaluări

- Lecture - Notes - II PseudocodeDocument30 paginiLecture - Notes - II PseudocodemphullatwambilireÎncă nu există evaluări

- My First Script.rDocument32 paginiMy First Script.rAlexandra Blasetti KuhnÎncă nu există evaluări

- Tuples: Python For EverybodyDocument16 paginiTuples: Python For EverybodyNofril ZendriadiÎncă nu există evaluări

- I R A E D: Mport EAD ND Xport ATADocument28 paginiI R A E D: Mport EAD ND Xport ATAnaresh darapuÎncă nu există evaluări

- Python Programming Reference SheetDocument5 paginiPython Programming Reference Sheetnitin raÎncă nu există evaluări

- PYTHON For Coding InterviewsDocument11 paginiPYTHON For Coding InterviewsSangeeta YadavÎncă nu există evaluări

- Apostila de Formulas, Funções e Macros PDFDocument227 paginiApostila de Formulas, Funções e Macros PDFeduardomarceloÎncă nu există evaluări

- Python DatabaseDocument7 paginiPython DatabasePavni TripathiÎncă nu există evaluări

- Project Anmol MishraDocument26 paginiProject Anmol MishranikÎncă nu există evaluări

- Week 13Document38 paginiWeek 13Mhamod othman MSN IT companyÎncă nu există evaluări

- IR - 754 All PracticalDocument21 paginiIR - 754 All Practical754Durgesh VishwakarmaÎncă nu există evaluări

- My Learning From Data Science ClassesDocument16 paginiMy Learning From Data Science ClassesSunny DograÎncă nu există evaluări

- 2013 - Notes - R Trinker'S - NotesDocument274 pagini2013 - Notes - R Trinker'S - NotesCody WrightÎncă nu există evaluări

- Python Code ExamplesDocument30 paginiPython Code ExamplesAsaf KatzÎncă nu există evaluări

- CS PRACTICALS ListDocument34 paginiCS PRACTICALS Listdelta singhÎncă nu există evaluări

- Dr. A. Obulesh Dept. of CSEDocument42 paginiDr. A. Obulesh Dept. of CSEObulesh AÎncă nu există evaluări

- Laksh PaajiDocument12 paginiLaksh Paajisameerhaque55Încă nu există evaluări

- R语言基础入门指令 (tips)Document14 paginiR语言基础入门指令 (tips)s2000152Încă nu există evaluări

- LAB02Document11 paginiLAB02mausamÎncă nu există evaluări

- Functions in PythonDocument19 paginiFunctions in Pythonsonali karkiÎncă nu există evaluări

- Comp2041 W1Document3 paginiComp2041 W1EmmaYoungÎncă nu există evaluări

- 5 Paso S Text MiningDocument4 pagini5 Paso S Text MiningKen MatsudaÎncă nu există evaluări

- ExamplesR Power LawDocument12 paginiExamplesR Power LawKen MatsudaÎncă nu există evaluări

- Vba Codes ExcelDocument46 paginiVba Codes ExcelKen Matsuda100% (1)

- AnovaDocument67 paginiAnovaJesúsValdezÎncă nu există evaluări

- Cybernetics and Systems: An International JournalDocument13 paginiCybernetics and Systems: An International JournalKen MatsudaÎncă nu există evaluări

- Agresti Ordinal TutorialDocument75 paginiAgresti Ordinal TutorialKen MatsudaÎncă nu există evaluări

- Agresti Ordinal TutorialDocument75 paginiAgresti Ordinal TutorialKen MatsudaÎncă nu există evaluări

- XlguitarDocument11 paginiXlguitarKen MatsudaÎncă nu există evaluări

- Landis & Koch 1977Document17 paginiLandis & Koch 1977Ken MatsudaÎncă nu există evaluări

- Iovitzu Linguistic Publications Updated October 2012Document3 paginiIovitzu Linguistic Publications Updated October 2012Ken MatsudaÎncă nu există evaluări

- Fractal Structures in Language. The Question of The Imbedding SpaceDocument11 paginiFractal Structures in Language. The Question of The Imbedding SpaceKen MatsudaÎncă nu există evaluări

- Project Goals/ ObjectivesDocument51 paginiProject Goals/ ObjectivesJoyce Abegail De PedroÎncă nu există evaluări

- IV-series-monitor Monitor Um 440gb GB WW 1027-3Document360 paginiIV-series-monitor Monitor Um 440gb GB WW 1027-3Quang DuyÎncă nu există evaluări

- New DatabaseDocument18 paginiNew DatabaseShafiq RosmanÎncă nu există evaluări

- Astm C-743Document4 paginiAstm C-743IyaadanÎncă nu există evaluări

- My CVDocument2 paginiMy CVKourosh AhadiÎncă nu există evaluări

- Unreal Tournament CheatDocument3 paginiUnreal Tournament CheatDante SpardaÎncă nu există evaluări

- A Project Report On DMRCDocument22 paginiA Project Report On DMRCRahul Mehrotra100% (1)

- kp-57-65wv600 SONYDocument33 paginikp-57-65wv600 SONYdjcamdtvÎncă nu există evaluări

- Pharma MarketingDocument55 paginiPharma MarketingArpan KoradiyaÎncă nu există evaluări

- The GPSA 13th Edition Major ChangesDocument2 paginiThe GPSA 13th Edition Major Changespatrickandreas77Încă nu există evaluări

- MK7850NDocument6 paginiMK7850NkherrimanÎncă nu există evaluări

- PDF CatalogEngDocument24 paginiPDF CatalogEngReal Gee MÎncă nu există evaluări

- Chapter 4 (Digital Modulation) - Review: Pulses - PAM, PWM, PPM Binary - Ask, FSK, PSK, BPSK, DBPSK, PCM, QamDocument7 paginiChapter 4 (Digital Modulation) - Review: Pulses - PAM, PWM, PPM Binary - Ask, FSK, PSK, BPSK, DBPSK, PCM, QamMuhamad FuadÎncă nu există evaluări

- VW Piezometer Input Calculation Sheet V1.1Document5 paginiVW Piezometer Input Calculation Sheet V1.1Gauri 'tika' Kartika100% (1)

- FGRU URAN 08.12.2015 Rev.02Document3 paginiFGRU URAN 08.12.2015 Rev.02Hitendra PanchalÎncă nu există evaluări

- Husqvarna 2008Document470 paginiHusqvarna 2008klukasinteria100% (2)

- Lesson 1 DataDocument4 paginiLesson 1 Dataapi-435318918Încă nu există evaluări

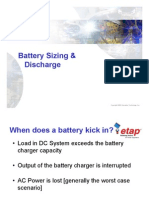

- 23 - Battery Sizing DischargeDocument19 pagini23 - Battery Sizing Dischargechanchai T100% (4)

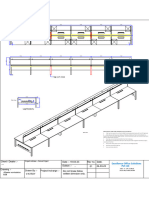

- 6seater Workstation B2BDocument1 pagină6seater Workstation B2BDid ProjectsÎncă nu există evaluări

- Promt MidjourneyDocument2 paginiPromt MidjourneyMarcelo PaixaoÎncă nu există evaluări

- DSCDocument7 paginiDSCthanhnguyenhhvnÎncă nu există evaluări

- UnLock Root Pro 4.1.1Document9 paginiUnLock Root Pro 4.1.1jackcarver11Încă nu există evaluări

- 000 139 PDFDocument17 pagini000 139 PDFtareqcccccÎncă nu există evaluări

- HSC Chemistry SkillsDocument9 paginiHSC Chemistry SkillsMartin KarlowÎncă nu există evaluări

- Pursuit ManualDocument26 paginiPursuit Manualhunter9903Încă nu există evaluări

- Re InviteDocument16 paginiRe InviteAjay WaliaÎncă nu există evaluări

- 23 Electromagnetic Waves: SolutionsDocument16 pagini23 Electromagnetic Waves: SolutionsAnil AggaarwalÎncă nu există evaluări

- HPB Install Manual ABB - Distribution BUS BarsDocument11 paginiHPB Install Manual ABB - Distribution BUS BarsArunallÎncă nu există evaluări

- Click and Learn How To Get Free TikTok FansDocument4 paginiClick and Learn How To Get Free TikTok FansFreedmanMcFadden9Încă nu există evaluări

- Period Based Accounting Versus Cost of Sales AccountingDocument13 paginiPeriod Based Accounting Versus Cost of Sales AccountingAnil Kumar100% (1)

- Understanding Software: Max Kanat-Alexander on simplicity, coding, and how to suck less as a programmerDe la EverandUnderstanding Software: Max Kanat-Alexander on simplicity, coding, and how to suck less as a programmerEvaluare: 4.5 din 5 stele4.5/5 (44)

- Python for Beginners: A Crash Course Guide to Learn Python in 1 WeekDe la EverandPython for Beginners: A Crash Course Guide to Learn Python in 1 WeekEvaluare: 4.5 din 5 stele4.5/5 (7)

- Microsoft 365 Guide to Success: 10 Books in 1 | Kick-start Your Career Learning the Key Information to Master Your Microsoft Office Files to Optimize Your Tasks & Surprise Your Colleagues | Access, Excel, OneDrive, Outlook, PowerPoint, Word, Teams, etc.De la EverandMicrosoft 365 Guide to Success: 10 Books in 1 | Kick-start Your Career Learning the Key Information to Master Your Microsoft Office Files to Optimize Your Tasks & Surprise Your Colleagues | Access, Excel, OneDrive, Outlook, PowerPoint, Word, Teams, etc.Evaluare: 5 din 5 stele5/5 (2)

- Learn Python Programming for Beginners: Best Step-by-Step Guide for Coding with Python, Great for Kids and Adults. Includes Practical Exercises on Data Analysis, Machine Learning and More.De la EverandLearn Python Programming for Beginners: Best Step-by-Step Guide for Coding with Python, Great for Kids and Adults. Includes Practical Exercises on Data Analysis, Machine Learning and More.Evaluare: 5 din 5 stele5/5 (34)

- Optimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesDe la EverandOptimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesÎncă nu există evaluări

- Dark Data: Why What You Don’t Know MattersDe la EverandDark Data: Why What You Don’t Know MattersEvaluare: 4.5 din 5 stele4.5/5 (3)

- How to Make a Video Game All By Yourself: 10 steps, just you and a computerDe la EverandHow to Make a Video Game All By Yourself: 10 steps, just you and a computerEvaluare: 5 din 5 stele5/5 (1)

- Excel Essentials: A Step-by-Step Guide with Pictures for Absolute Beginners to Master the Basics and Start Using Excel with ConfidenceDe la EverandExcel Essentials: A Step-by-Step Guide with Pictures for Absolute Beginners to Master the Basics and Start Using Excel with ConfidenceÎncă nu există evaluări

- Grokking Algorithms: An illustrated guide for programmers and other curious peopleDe la EverandGrokking Algorithms: An illustrated guide for programmers and other curious peopleEvaluare: 4 din 5 stele4/5 (16)

- Coders at Work: Reflections on the Craft of ProgrammingDe la EverandCoders at Work: Reflections on the Craft of ProgrammingEvaluare: 4 din 5 stele4/5 (151)

- Python Machine Learning - Third Edition: Machine Learning and Deep Learning with Python, scikit-learn, and TensorFlow 2, 3rd EditionDe la EverandPython Machine Learning - Third Edition: Machine Learning and Deep Learning with Python, scikit-learn, and TensorFlow 2, 3rd EditionEvaluare: 5 din 5 stele5/5 (2)

- Clean Code: A Handbook of Agile Software CraftsmanshipDe la EverandClean Code: A Handbook of Agile Software CraftsmanshipEvaluare: 5 din 5 stele5/5 (13)

- Once Upon an Algorithm: How Stories Explain ComputingDe la EverandOnce Upon an Algorithm: How Stories Explain ComputingEvaluare: 4 din 5 stele4/5 (43)

- Skill Up: A Software Developer's Guide to Life and CareerDe la EverandSkill Up: A Software Developer's Guide to Life and CareerEvaluare: 4.5 din 5 stele4.5/5 (40)

- A Slackers Guide to Coding with Python: Ultimate Beginners Guide to Learning Python QuickDe la EverandA Slackers Guide to Coding with Python: Ultimate Beginners Guide to Learning Python QuickÎncă nu există evaluări

- Linux: The Ultimate Beginner's Guide to Learn Linux Operating System, Command Line and Linux Programming Step by StepDe la EverandLinux: The Ultimate Beginner's Guide to Learn Linux Operating System, Command Line and Linux Programming Step by StepEvaluare: 4.5 din 5 stele4.5/5 (9)

- Python Programming For Beginners: Learn The Basics Of Python Programming (Python Crash Course, Programming for Dummies)De la EverandPython Programming For Beginners: Learn The Basics Of Python Programming (Python Crash Course, Programming for Dummies)Evaluare: 5 din 5 stele5/5 (1)

- Microsoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]De la EverandMicrosoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]Evaluare: 5 din 5 stele5/5 (2)

- Microsoft PowerPoint Guide for Success: Learn in a Guided Way to Create, Edit & Format Your Presentations Documents to Visual Explain Your Projects & Surprise Your Bosses And Colleagues | Big Four Consulting Firms MethodDe la EverandMicrosoft PowerPoint Guide for Success: Learn in a Guided Way to Create, Edit & Format Your Presentations Documents to Visual Explain Your Projects & Surprise Your Bosses And Colleagues | Big Four Consulting Firms MethodEvaluare: 5 din 5 stele5/5 (3)

- Competing in the Age of AI: Strategy and Leadership When Algorithms and Networks Run the WorldDe la EverandCompeting in the Age of AI: Strategy and Leadership When Algorithms and Networks Run the WorldEvaluare: 4.5 din 5 stele4.5/5 (21)

![Microsoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]](https://imgv2-2-f.scribdassets.com/img/audiobook_square_badge/728318885/198x198/86d097382f/1714821849?v=1)