Marianthie Makropoulou - Spectral Music Analysis and Research


Marianthie Makropoulou

Spectral Music: a brief presentation of a groundbreaking compositional technique, the study of psychoacoustics and human perception

Spectral music can be defined as the compositional technique, developed in France in the 1970s, that used the mathematical analysis of sound spectra as a compositional tool. It was based on Fourier’s theory, which states that any complex signal can be decomposed into a sum of sinusoidal waves, over an infinite time frame, by specifying their relative amplitudes and phases. As far as the nature of sound is concerned, this means that a complex tone can be decomposed into a sum of sine tones, called the “partials” of the sound, the set of which forms its spectrum. Knowledge of the frequency components present in a sound gave birth to the idea of spectral composition. The spectral composers of this period (including Gérard Grisey and Tristan Murail) were greatly interested in the fundamental nature of sound, in particular the overtone series. Rather than creating works based on chord progressions or tone rows, these composers wrote pieces constructed on the development of a sound spectrum and based on slow harmonic development, devoid of a prominent melody or a strong sense of pulse.
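
The Fourier decomposition described above can be illustrated with a short sketch. The Python below is a minimal example under stated assumptions: it synthesizes a tone from three sine partials with arbitrary phases, then recovers their amplitudes with a naive discrete Fourier transform (DFT). The function names, the 800 Hz sample rate and the choice of partial frequencies that complete a whole number of cycles in the one-second window (so that each partial falls exactly on one DFT bin) are all illustrative assumptions; real analysis tools use the FFT and windowing over finite frames rather than the infinite time frame of the theory.

```python
import cmath
import math


def synthesize(partials, sample_rate, n_samples):
    """Sum sine partials given as (frequency_hz, amplitude, phase_radians)."""
    return [
        sum(a * math.sin(2 * math.pi * f * t / sample_rate + ph) for f, a, ph in partials)
        for t in range(n_samples)
    ]


def dft_magnitudes(signal):
    """Naive O(N^2) DFT: amplitude of the sinusoid in each bin 0..N/2.

    The 2/N scaling gives sine amplitudes for bins strictly between 0 and N/2;
    the DC and Nyquist bins would need 1/N instead (they are ~0 here).
    """
    n = len(signal)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        mags.append(2 * abs(acc) / n)
    return mags


def partial_list(mags, threshold=0.01):
    """Bins whose amplitude exceeds the threshold, as (bin, rounded amplitude)."""
    return [(k, round(m, 3)) for k, m in enumerate(mags) if m > threshold]


SR = 800  # samples per second; with N = SR samples, each bin is 1 Hz wide
tone = synthesize([(100, 1.0, 0.0), (200, 0.5, 1.0), (300, 0.25, 2.0)], SR, SR)
print(partial_list(dft_magnitudes(tone)))  # [(100, 1.0), (200, 0.5), (300, 0.25)]
```

Changing the phases leaves the recovered magnitudes unchanged; the phase of each partial is carried by the angle of the complex DFT coefficient, which this sketch discards.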

Spectral music replaced the western tradition’s principle of interval sequence and motivic transformation with the principle of fusion and continuity. To understand how spectral music functions in the context of physical reality, one must bear in mind that a balance between calculation and intuition, theory and experimentation, is essential to music. The physical reality of spectral music is thus characterized by the use of sounds ranging from white noise to sinusoidal tones, rejecting, in a sense, the established equal-tempered tonal system.

Crucial to spectralism (and especially to Grisey’s music) is the concept of timbre, which stands at the head of the compositional strategy as the reference point to which different structural and morphological approaches were applied. The spectral composition of timbres serves two purposes: the creation of a new system of pitches based on the harmonic series, and the reconstruction, by acoustic instruments or electronic sounds, of the structure of certain timbres. It is important to stress that in spectral music pitch and timbre are treated not as separate notions but as different aspects of the same phenomenon. The tension between consonance and dissonance that governs tonal music is replaced by a more broadly understood tension created through the opposition between sound and noise. Timbre can be perceived as a multidimensional expressive feature of every sound that offers numerous syntactic possibilities for composition. Its function evolved from adding color to certain pitches to determining an entire structure, contributing to the formal construction of a musical work and working together with various syntaxes. Hence, composition no longer refers to notes, but to an expanded view of the acoustic object.
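
The idea of a pitch system derived from the harmonic series and realized by acoustic instruments can be sketched as follows. Spectral composers often approximated partial frequencies to the nearest quarter-tone so that instruments could play them; the Python below assumes exactly that rounding, A4 = 440 Hz, MIDI-style note numbering and a low E (E1, about 41.2 Hz) as the fundamental. The function name and the ‘+’ suffix for a quarter-tone sharp are hypothetical notational choices, not taken from the essay.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_quarter_tone(freq_hz, a4_hz=440.0):
    """Return (note label, deviation in cents) of the nearest quarter-tone.

    A "+" suffix marks a pitch a quarter-tone above the named semitone.
    """
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)  # fractional MIDI note number
    q = round(midi * 2) / 2                        # snap to the 50-cent grid
    semitone = math.floor(q)
    label = NOTE_NAMES[semitone % 12] + str(semitone // 12 - 1)
    if q - semitone == 0.5:
        label += "+"
    return label, round((midi - q) * 100, 1)


f0 = 440.0 * 2 ** ((28 - 69) / 12)  # E1, about 41.2 Hz (MIDI note 28)
for n in range(1, 12):
    label, cents = nearest_quarter_tone(n * f0)
    print(f"partial {n:2d}: {n * f0:7.1f} Hz -> {label:5s} ({cents:+.1f} cents)")
```

Under these assumptions the 7th partial lands near C#4+ (about 19 cents above that quarter-tone) and the 11th near A4+, pitches that fall outside equal temperament.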

Spectral music is based on the development of a spectrum or a group of spectra. The music tends to focus on the microstructure of sound: the ever-changing relationships between the pitches of a sound’s overtone structure as it develops in time. Since the spectra are in a constant state of evolution, they can have an impact on harmonic motion. The spectra can also influence orchestration, as the relative amplitudes of certain instruments might well reflect the relative amplitudes of the pitches in a particular overtone series. The evolution of a sound over time is represented by a temporal waveform, and at each moment the sound has a certain frequency content, visible in its spectrum. Electronic sound synthesis allows composers to organize the sound itself, introducing harmony into timbre and using timbre as a generator of harmony.

Spectral music differs from much traditional music, and even from most twentieth-century music. Often a single sonority is present for an entire section, frequently lasting several minutes. Since the harmonic units unfold very slowly, the listener becomes aware of how important the sonority is, and changes in harmony become easily recognizable. Spectral composers do not use functional harmonic progressions the way tonal composers do; instead, one harmony is often gradually metamorphosed into the next. Spectralists are interested in the evolution of sound over time and focus on sounds and their dynamic character rather than on notes. Within the simultaneous flow of sounds, their dynamic character brings out the differences between them, and by observing these differences one can control the evolution of the sounds and the speed of that evolution. Spectral compositions are very often based on exploring the sonic phenomenon in all its complexity (from harmonic to inharmonic tones).

Gérard Grisey, for example, uses difference tones (combination tones), created when at least two sounds are played simultaneously, to create ‘shadow-casting sounds’, and drew attention to the fact that certain intervals ‘do not cast shadows’, since their resultant tones only strengthen the ‘light’ of their harmonics. The overall sound of a spectral work can be perceived through the connections established between its partials. Timbre is also very important for Grisey, who believed it to possess a strong qualitative value that inevitably hampered any sort of serializing procedure, and to be an expressive feature in which the indivisibility and interconnection of all sonic parameters is clearly apparent. For spectral composers, the essence of timbre and its dynamic character helped to avoid a barren, quantitatively defined notion of sound. By rebuilding the acoustic material on the model of the sound spectrum, the sound can be controlled with substantial precision: by modifying the distribution of its constituent elements, a sound can be altered from consonance to noise, from stability to instability, and so on.
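
One simple way to picture modifying the distribution of a sound’s constituent elements is to stretch a harmonic spectrum progressively until its partials no longer line up with integer multiples of the lowest one. The sketch below assumes a stretch law f_n = f0 · n^s (s = 1 gives the harmonic series) and a crude inharmonicity measure; both are illustrative assumptions, not a procedure attributed to Grisey or described in the essay, and the function names are hypothetical.

```python
def stretched_partials(f0_hz, n_partials, stretch):
    """Partial n sits at f0 * n**stretch; stretch = 1.0 is the harmonic series."""
    return [f0_hz * n ** stretch for n in range(1, n_partials + 1)]


def inharmonicity(partials_hz):
    """Mean distance of each frequency ratio f / f_lowest from the nearest integer.

    0.0 means perfectly harmonic; larger values mean the partials sit further
    from the harmonic series (each individual term is at most 0.5).
    """
    lowest = partials_hz[0]
    ratios = [f / lowest for f in partials_hz]
    return sum(abs(r - round(r)) for r in ratios) / len(ratios)


# A hypothetical trajectory from a harmonic spectrum towards an inharmonic one.
for s in (1.0, 1.02, 1.05, 1.1):
    spectrum = stretched_partials(100.0, 8, s)
    print(f"stretch {s:.2f}: inharmonicity {inharmonicity(spectrum):.3f}")
```

Stepping the stretch factor over time is one way to turn a static spectrum into a process moving from stability towards instability.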

Grisey stressed that ‘the instrumentation and the distribution of force and intensity suggest synthetic spectra, which are nothing other than a projection of naturally structured sounds into an expanded, artificial space’.

Time is another crucial parameter of spectralism. Grisey, fascinated by the processuality of time and form, considered time to be stretched out in all directions, and he did not identify it with the use of long and short rhythmic values. For him, real musical time was only a place of exchange and accord among a multitude of times. In the western musical tradition, time is a straight line running through musical structures, which the composer can divide according to proportions of their choosing, and the listener stands in the middle of this line; but such an understanding of musical time is deeply abstract and unrealistic for human perception. In fact, the listener perceiving musical time observes it from the level of another time, one strictly linked to the rhythm of human life. There are thus numerous layers of time, and the exchange between them results from a dynamic that is in turn an effect of the interactions between the psychophysiological time of hearing (for example, the rhythm of one’s heartbeat or breathing) and the mechanical time of sound. According to Grisey, nothing in the world could state unequivocally that something lasts too long or not long enough, because everything depends on the kind of information being transmitted. Influenced by the composer Conlon Nancarrow, who created music in condensed time, a music written, as it were, for or by insects or small creatures, he came to the conclusion that musical creation ought to be the direct composing of musical time: the time that is related to the tempo of language and can be captured in the act of perception, not time measured independently of actual impressions (chronometric time). He was interested in the way time is perceived not only by people but also by other beings (for example, the expanded, stretched-out time of whales), and he noted that in the world of birds and insects everything happens more quickly, calling the time of birds or of insects ‘contracting time’.

In human perception, one can distinguish three kinds of time between two successive events:

1) Small differences between the events produce a natural passage of time, as it were: time with a specific velocity, analogous to the tempo of language.
2) When an event extremely different from the previous one occurs, it disrupts the linearity of the passage of time: time contracts.
3) A succession of events that is predictable and unsurprising for the listener increases the density of the present, and time expands.

If, in the musical experience, the listener focuses on some detail, such as the internal structure of a sound, time is perceived as expanded, and everything occurs in slow motion. To experience microacoustic phenomena, one has to magnify them, and a law of perception has been claimed to exist that describes an inverse relation between the acuity of auditory perception and that of temporal perception. Thus, in order to perceive microacoustic phenomena (to sharpen our auditory perception), we have to slow down the time of perception.

Because his goal was to stretch the human sense of time, Grisey avoided setting poetic texts in his music, since they demand a natural tempo of narration, the time of language; the vocal parts in his works are treated instrumentally, with long-held sounds devoid of melodic character. His specific treatment of time also extends to cosmic time. He was interested in the sound of pulsars: using a radio telescope that could convert their electromagnetic waves into sound, he incorporated into his work the rhythm of the rotation of two pulsars, captured by the radio telescope during the performance itself.

A very important factor in spectral composition is reflection on the abilities of human perception. The mental reality of spectral music is directly linked to psychic reality. Because tonal music had been consolidated over a long period of time, it had a huge advantage over spectral sound organization: listeners could predict the occurrence of successive musical structures. This is also a matter of human nature, which causes arousal (or emotion) in response to certain sounds or sonic sequences. Human beings have a certain number of schemas, some innate and directly linked to survival, others acquired, linked to past experience and eventually modifiable. Perceptions are thus evaluated in terms of expectancies: when a perception conforms to our expectancies, one would expect little cognitive activity to be necessary, whereas a perception that goes against expectancy triggers both an emotional reaction and cognitive processing in order to adapt our representation of the external world to what has been perceived. Numerous examples from neuropathology show that prior arousal is not only sufficient but necessary for the undisrupted operation of many cognitive processes, such as decision making and personal planning for the future. Since arousal occupies such an important place in our cognitive processing, the question of the relation between emotion and music takes on a new dimension. One of the schemas mentioned above is the knowledge of tonality, which gives rise to expectancies. Whether or not they are violated, those expectancies evoke arousal in both professional musicians and non-musicians, who clearly express expectancies linked to tonality. The feeling of tension can be seen as the result of the application of cultural rules that give rise to expectancies, but there are also reactions to music that originate in the musical material itself, in its immediate qualities, and that generate expectancies over time.

Roughness, another immediate sound quality, has been shown to play an important role, along with these other factors, in the perception of tension in a tonal context. Within tonal harmony, the influence of roughness derives from the basic rules of harmony, so when the composer rejects the conventional techniques of the tonal system, the provocation of a simple and immediate tension can become primordial again.

For Grisey, the ability or inability to predict, anticipation and surprise, a game played between the listener and the composer of spectral music, is the factor that determines the existence of musical time. It is therefore crucial to take the aspects of human perception into consideration during the creative process. Since the listener distinguishes differences between sounds, the composer’s real material becomes the degree of predictability (or, better, “preaudibility”), and in order to affect the levels of that preaudibility, the composer has to shape perceptible time in opposition to chronometric time. Grisey pointed out that listeners familiarizing themselves with a new score do not follow it in detail, but rather seek the place where the first distinctive change occurs.

That place could be described as the point where the composer has attained and fixed some idea that is also comprehensible to the listener’s perception. The challenge for the composer lies not in fixing the idea but in finding the right place to introduce a new one. There is, though, a certain limitation to the listener’s perception, particularly in relation to motion and, consequently, to dynamically constructed music (such as spectral music), which the composer must take into consideration when creating soundscapes. The listener will not ‘get lost’ in a piece if the composer provides noticeable and memorable points of reference, since during attentive listening one becomes aware of the presence of some object of perception and then continually compares subsequent sound events with objects that, through previously experienced music, have formed certain cognitive schemata or patterns in one’s memory. It is very important for a composer to keep in mind that the difference, or lack of difference, between compared objects defines the essence of perception, and that shaping different relations between changeable configurations of sound events is a crucial feature of musical creation.

The context in which an individual sound or sonic sequence appears plays an important role in spectral music, and in composition in general: a sound of a certain pitch shows its individuality in the context of noise, in the same way that the sound of a particular interval stands out among other intervals. As Grisey indicated, there must exist a threshold in human perception, a ‘point zero’ from which music should develop; rapid beats, for example, change the timbre of a sound, whereas slow ones are perceived as rhythms because of their periodicity.

Regarding perception in spectral composition, Grisey considered that details are to be understood as part of the whole: when a group of instruments plays a certain spectrum, the ear tends not to discern the individual partials but to settle for a global perception, the timbre. In instrumental synthesis, each instrument of the ensemble that realizes a given partial of the spectrum helps to present the sound of the spectrum on a macro scale, so that a balance between fusion and differentiation is achieved.

In Grisey’s view, such synthetic spectra are located on the border of the threshold of awareness. As a result, he describes instrumental synthesis as a ‘hybrid entity’: a sound that is neither a timbre nor a chord, and that thus stands on the sharp line dividing differentiation from integration.

A distinctive feature of spectral music is also its naturalistic approach and context. Sound in spectral music is treated as a living organism in time, not as inanimate raw material: the sound is ‘born’, ‘lives’ and finally ‘dies’, and it is subject to constant change. Like other living organisms, a sound lives not in isolation but in spatial and temporal proximity to other sounds between its birth and its decay, and its energy is constantly flowing. None of the physical characteristics of a sound is permanent, resembling the constant flow of human existence.

For Grisey, spectralism also bears an ecological significance: there is a need to reformulate the relationship between an isolated acoustic event and the musical whole, as well as to change our attitude and behavior towards the natural environment. He classifies music into two types:

The first is characterized by a linguistic attitude: the composer speaks in his work about various things through sounds. As a result, the musical work produced requires declamation, rhetoric and language. Composers with a linguistic approach include Luciano Berio, Pierre Boulez, Arnold Schoenberg and Alban Berg.

On the other hand, there is a naturalistic approach to sound, which focuses not on discourse by means of sounds but on capturing the nature of sound. Composers of this kind include Iannis Xenakis, Karlheinz Stockhausen and Gérard Grisey, who claimed that he had never thought about music in terms of declamation, rhetoric or language. Non-spectral composers used nature as musical material in its raw form (water, wind, fire, etc.), recording naturally formed sound phenomena to create new material for composed music or playing them back during concerts. The spectralists, on the contrary, directed their attention to other aspects of nature: the natural essence of sound in particular, but also the listener’s perceptual ability. They took an increasing interest in acoustics and in the philosophy of the living nature of sound, and also in technology, considering both its physical and its cultural aspects.

Modern technological advances helped the spectralists to imagine, capture, organize and transform their material. Spectral music was largely based on technology; the phrase ‘informatique musicale’ was often linked with descriptions of the spectralist current and has a wider meaning than its English equivalent (‘computer music’). Technology assisted the compositional process, improved the effectiveness of musical notation and aided the analysis and synthesis of sounds, while many performances required electronic techniques such as playback. Wanting to break with tradition and to be completely in control of their pieces and of the creative process, modern music creators became composers, instrument makers and performers at once. They taught themselves programming languages, studied timbre and adopted discoveries related to acoustic phenomena, psychoacoustics and human perception, so that they could formalize these discoveries and employ them to produce new musical material. The computer became an indispensable tool for spectral composers, who moved from acoustic and musical multi-representation to programming, since it enabled the use and development of numerous compositional algorithms, the simulation of orchestral ensembles and the control of synthesizers. Notable progress in acoustics and psychoacoustics resulted from the wide collaboration between scientists and composers. A new role for the composer slowly developed in the circle of IRCAM (Institut de Recherche et Coordination Acoustique/Musique, founded in France): the term ‘compositeur en recherche’ denotes the function held by the composer within a research group. The composer’s duty was to assist the research group by carrying out a musical evaluation of computer tools, documenting the results of their use and exchanging ideas with the other researchers. In this way, scientists arrived at a better understanding of the human perception of the acoustic phenomenon and discovered ways to access and control this knowledge.

Although the composers of this period were fully aware of the possibilities provided by technology, some of them, Grisey among them, made quite limited use of computers and electronics, mainly because those means were version-dependent: at any given moment the composer might not have access to the particular system needed for the work to be heard in the concert hall. This could oblige composers to revise their pieces, which was less than ideal.

As mentioned above, spectral composers were intrigued by the manner in which sound affects the listener, by perceptual phenomena such as the physiological mechanism for the transmission of data, and by the expressive features of the perceived music.

The word ‘sound’ itself in a sense contains the notion of perception, since sound can be defined as a kind of acoustic pressure that can be heard (that our ears are able to decode). This is conditioned by the physiology of the peripheral auditory system. The relation between the pressure wave and the audible field of the waves (the sound) can only be understood by studying the physiological mechanisms of perception.

The outer and middle ear amplify the air vibrations of a sound wave and transmit them to the inner ear and the basilar membrane, whose place of maximum excitation depends on the frequency of the signal. The hair cells placed along its length undergo electrochemical changes and stimulate the fibers of the auditory nerve, which sends electrochemical impulses to the brain.

The first thing that happens to the acoustic signal on its way to the brain is a kind of spectral analysis: the basilar membrane spatially decomposes the signal into frequency bands. The hair cells mentioned above not only code the frequency position of the signal components but also preserve, to a certain degree, their temporal information by producing neural firings at precise moments of the stimulating wave they are responding to. This phenomenon, called phase-locking, weakens as frequency increases and disappears somewhere between about 2000 and 4000 Hz. The ear is thus able to process a sound wave both spectrally and temporally.

Difference tones, or combination tones, are part of a very interesting psychoacoustic phenomenon: two pure tones presented simultaneously to the auditory system stimulate the basilar membrane not only at the positions associated with their respective frequencies, but also at positions corresponding to additional frequencies that complete the harmonic series towards its lower members. Simply put, when the human auditory system hears a set of higher frequencies, it can fill in the fundamental and the lower harmonics based on the series of overtones it perceives. The ‘difference tone’ is a physiological and perceptual term: even if it is not present in the stimulating waveform, it is physically created in the inner ear and present on the basilar membrane.
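
The arithmetic behind these combination tones can be sketched briefly. The two combination tones most often discussed in psychoacoustics are the quadratic difference tone f2 - f1 and the cubic difference tone 2f1 - f2 (for f1 < f2). The Python below computes both, together with the implied fundamental of integer frequencies, taken here as their greatest common divisor; the function names and the integer-hertz restriction are assumptions made for the sketch.

```python
from math import gcd


def combination_tones(f1_hz, f2_hz):
    """Quadratic (f2 - f1) and cubic (2*f1 - f2) difference tones, with f1 < f2.

    The cubic tone is returned as None when it would not be a positive frequency.
    """
    low, high = sorted((f1_hz, f2_hz))
    cubic = 2 * low - high
    return high - low, (cubic if cubic > 0 else None)


def implied_fundamental(*freqs_hz):
    """GCD of integer frequencies: the highest fundamental whose harmonic series contains them all."""
    g = 0
    for f in freqs_hz:
        g = gcd(g, f)
    return g


# A 5:4 major third (400 Hz and 500 Hz).
diff, cubic = combination_tones(400, 500)
f0 = implied_fundamental(400, 500)
print(diff, cubic, f0)          # 100 300 100
print(diff // f0, cubic // f0)  # 1 3
```

For this interval both combination tones fall on lower members (the 1st and 3rd harmonics) of the 100 Hz series implied by the two primaries, which is one way to read the idea that the ear completes the series towards lower frequencies.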

In addition, if two components of a complex signal are close in frequency, the displacements they cause on the basilar membrane will overlap. The frequency range within which the ear cannot separate two simultaneous frequencies is called the ‘critical band’. Two components therefore do not produce the same perceptual event when they are resolved (heard separately) as when they fall within a single critical band. The critical band should not, however, be understood as a frequency band in which all sounds appear to have the same pitch since, owing to the ear’s sensitivity, frequency differences of less than 1% are perceptible. It is thus a limit of selectivity, not of precision.
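
The essay does not say which estimate of the critical band it has in mind. One widely used approximation is the equivalent rectangular bandwidth (ERB) of Glasberg and Moore (1990), ERB(f) = 24.7 · (4.37 · f / 1000 + 1) Hz. The sketch below uses that formula, as an assumption, to check whether two simultaneous components fall within roughly one auditory filter; the helper names are hypothetical. It also makes the contrast with precision concrete: 1000 Hz and 1005 Hz differ by only 0.5%, less than the 1% figure above, yet both sit well inside one ERB (about 133 Hz wide at 1 kHz).

```python
def erb_hz(center_hz):
    """Equivalent rectangular bandwidth (Glasberg & Moore, 1990) at a centre frequency."""
    return 24.7 * (4.37 * center_hz / 1000 + 1)


def same_auditory_filter(f1_hz, f2_hz):
    """Rough test: are two components closer than one ERB around their mean?"""
    center = (f1_hz + f2_hz) / 2
    return abs(f2_hz - f1_hz) < erb_hz(center)


for f in (100, 500, 1000, 4000):
    bw = erb_hz(f)
    print(f"{f:5d} Hz: ERB = {bw:6.1f} Hz ({100 * bw / f:.1f}% of the centre frequency)")

print(same_auditory_filter(1000, 1005))  # True: 0.5% apart, within one filter
print(same_auditory_filter(1000, 1300))  # False: about two ERBs apart
```

The relative bandwidth shrinks as frequency rises, so a fixed interval is more likely to fall inside one filter in a low register.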

The critical band, as a limit of selectivity, becomes evident in the masking phenomenon, in which the excitation patterns of different components can overlap on the basilar membrane. Simultaneous masking, which depends on the amount of activity in the auditory nerve representing each sound component present, can be conceived as a kind of ‘swamping’ of the neural activity due to one sound by that due to another. High-level components create a level of activity that overwhelms the activity created by lower-level components, which are then either not represented at all or represented more weakly than they would be if presented alone. These components can also interact and partially mask each other, giving the impression that they are of a lower level than they originally are.

This masking is determined mainly by the excitation pattern on the basilar membrane, which is asymmetrical: it extends further towards the high-frequency side than towards the low-frequency side, and this spread increases with sound level. In short, frequency and amplitude affect the masking of sounds in a rather complex way, and knowledge of these relations is essential to understanding the perception of a musical message.
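
The asymmetry of masking towards high frequencies can be illustrated with a classic textbook model. The sketch below assumes two published approximations that the essay does not mention: the Zwicker and Terhardt (1980) formula for converting hertz to the Bark critical-band scale, and the spreading function of Schroeder, Atal and Hall (1979), which gives, in decibels, how far a masker’s excitation falls off at a distance of dz Bark. This particular function does not depend on level, so it captures the asymmetry but not the growth of the upward spread with sound level; the helper names are hypothetical.

```python
import math


def hz_to_bark(f_hz):
    """Zwicker & Terhardt (1980) approximation of the Bark scale."""
    return 13 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500) ** 2)


def spreading_db(dz_bark):
    """Schroeder et al. (1979) spreading function in dB (about 0 dB at dz = 0).

    dz_bark > 0 means the probe lies above the masker in frequency.
    """
    x = dz_bark + 0.474
    return 15.81 + 7.5 * x - 17.5 * math.sqrt(1 + x * x)


def masker_spread_db(masker_hz, probe_hz):
    """Relative excitation (dB) that a masker produces at a probe frequency."""
    return spreading_db(hz_to_bark(probe_hz) - hz_to_bark(masker_hz))


print(round(masker_spread_db(1000, 2000), 1))  # probe one octave above the masker
print(round(masker_spread_db(2000, 1000), 1))  # probe one octave below the masker
```

The first value is much less negative than the second: in this model a masker spreads its excitation further upwards in frequency than downwards.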

The critical band also plays a very important role in our perception of beats between two tones. When two pure tones are mistuned from unison, one hears beats resulting from their interaction, which become more rapid as the mistuning grows. This happens at the beginning of the separation, but very soon perception changes towards an experience of more and more rapid fluctuations, which produce roughness. Roughness should therefore be understood not as an acoustic feature of sound but as a characteristic of human perception. The roughness of a sound varies with register: an interval that is consonant in the upper register can be perceived as dissonant when transposed to a lower register.
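
Both the beat rate and the register dependence of roughness can be sketched numerically. The beat rate of two pure tones is simply their frequency difference. For roughness, the sketch below swaps in William Sethares’s published parametrization of the Plomp and Levelt dissonance curve, which the essay does not use; the constants come from that model, amplitudes are fixed at 1 for simplicity, and the output is in arbitrary units. The curve widens as the lower frequency rises, roughly tracking the critical band, which is what makes the same interval rougher in a low register.

```python
import math


def beat_rate_hz(f1_hz, f2_hz):
    """Two pure tones close in frequency beat |f1 - f2| times per second."""
    return abs(f1_hz - f2_hz)


def roughness(f1_hz, f2_hz):
    """Sethares's fit of the Plomp-Levelt curve for two unit-amplitude pure tones."""
    d_star, s1, s2, b1, b2 = 0.24, 0.0207, 18.96, 3.51, 5.75
    low, high = sorted((f1_hz, f2_hz))
    s = d_star / (s1 * low + s2)  # widens the curve as the register rises
    x = high - low
    return math.exp(-b1 * s * x) - math.exp(-b2 * s * x)


print(beat_rate_hz(440, 443))  # 3
# The same 5:4 major third in a low and in a high register.
for low in (100, 1000):
    print(low, round(roughness(low, low * 5 / 4), 4))
```

In this model the third starting at 100 Hz comes out more than thirty times rougher than the same third starting at 1000 Hz.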

Crucial to the human perception of sound is the notion of auditory scene organization: when we listen to the sounds of an environment, we do not perceive them as separate frequencies and amplitudes varying over time, but structure them into coherent entities that we can detect, separate, localize and identify. This capacity is vitally important for the human species and quite impressive, since we can disentangle these entities from the time-frequency representation of the superposition of all the vibrating sources surrounding us. In this way the listener can structure the acoustic world confronting their ears, creating an ‘auditory image’ of their environment. An ‘auditory image’ can thus be defined as a psychological representation of a sound entity that reveals a certain coherence in its acoustic behavior (McAdams, 1984).

In attempting to make sense of all the possible sound cues that the brain uses to organize the sound world appropriately, it seems that two principal modes are involved in forming the auditory image: the perceptual fusion of simultaneously present acoustic components, and the grouping of successive events into streams.
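
The first of these modes, simultaneous fusion, is often linked to harmonicity: components that fit one harmonic series tend to fuse into a single auditory image, while a strongly mistuned component can stand out. The toy sketch below assumes a known candidate fundamental and a 3% mistuning tolerance, both hypothetical parameters chosen for illustration; it is not a model of the auditory system, and it does not address the second mode, sequential streaming.

```python
def group_by_harmonicity(partials_hz, f0_hz, tolerance=0.03):
    """Split partials into those fitting the harmonic series of f0 and the rest.

    A partial fits if it lies within `tolerance` (relative mistuning) of
    its nearest harmonic n * f0.
    """
    fused, separate = [], []
    for f in partials_hz:
        n = round(f / f0_hz)
        if n >= 1 and abs(f / (n * f0_hz) - 1) <= tolerance:
            fused.append(f)
        else:
            separate.append(f)
    return fused, separate


# Harmonics of 200 Hz, one slightly mistuned (612 Hz), plus an unrelated 730 Hz component.
fused, separate = group_by_harmonicity([200, 400, 612, 800, 1000, 730], 200)
print(fused)     # [200, 400, 612, 800, 1000]
print(separate)  # [730]
```

The 2% mistuned 612 Hz component is still grouped with the series, while 730 Hz, about 9% away from its nearest harmonic, is left as a separate component.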

Composers have long explored auditory organization; if each instrument of the symphony orchestra, for example, could only be perceived as a separate source, the musical structures imagined by the composer would be quite difficult to realize. It is also quite intriguing that, by deceiving the horizontal organization of sound, one can imply polyphony with monophonic melodic instruments, expressing more than one voice at a time (harmonic monophony). Conversely, by combining different instruments, a composer can achieve a fusion into a single polyphonic timbre and, as a result, make the instruments indistinguishable (for example, Ligeti’s Lontano, 1967).

Memory is also a factor one must take into consideration in order to understand some cognitive aspects of listening. Memory is linked to attention, in the sense that attention seems predisposed to focus on events that are expected on the basis of cultural knowledge. In short, expectancy, as mentioned above, has its origin not only in human culture but also in memory. The notion of time, so essential to music, derives from our ability to establish sequences of events mentally through memory, an ability that is responsible for our linear perception of time.

According to scientific experiments on musical memory, in the presence of a stimulus the brain extracts the relevant features, makes generalizations and forms categories. This is consistent with Grisey’s approach to spectral composition, namely that listeners are able to recognize the first distinctive change in an unknown piece of music presented to them. By splitting (categorizing) the piece, one creates a certain structure in one’s mind. This also has a physiological basis, since perception activates the primary sensory cortices of the brain, which through their activity over time detect and encode different features of sound. In addition, when a new stimulus is perceived, if it is similar enough to a representation already in memory, it will be categorized as a member of the same family.

In conclusion, the technological advances of the 20th century gave composers a new understanding not only of psychoacoustics and the nature of human perception but also of acoustic phenomena and the sound spectrum. Computer-based sound analysis and synthesis, in particular, were an important breakthrough for music composition and performance. In that sense, spectral music, although it rejected traditional harmony and the well-tempered system, was clearly a product of its time, and it shed new light on musical experience in a truly groundbreaking way.

S-ar putea să vă placă și

- Music For Solo Performer by Alvin Lucier in An Investigation of Current Trends in Brainwave SonificationDocument23 paginiMusic For Solo Performer by Alvin Lucier in An Investigation of Current Trends in Brainwave SonificationJeremy WoodruffÎncă nu există evaluări

- A Comparison of The Discrete and Dimensional Models of Emotion in MusicDocument32 paginiA Comparison of The Discrete and Dimensional Models of Emotion in MusicAlexandru OlivianÎncă nu există evaluări

- Aspects of The Relationship Between Music and PaintingDocument7 paginiAspects of The Relationship Between Music and PaintingNarayan TimalsenaÎncă nu există evaluări

- James Tenney and The Poetics of HomageDocument16 paginiJames Tenney and The Poetics of HomageJMKrausÎncă nu există evaluări

- String HarmonicsDocument14 paginiString HarmonicsFrancis B100% (2)

- 200 Berger AestheticsDocument5 pagini200 Berger Aestheticsluis-miguel-380Încă nu există evaluări

- 1q Music Las g10Document13 pagini1q Music Las g10MAGNESIUM - VILLARIN, ANIKKA ALIYAH C.Încă nu există evaluări

- Studley - A Definition of Creative-Based Music GamesDocument10 paginiStudley - A Definition of Creative-Based Music GamesTateCarsonÎncă nu există evaluări

- About Cage String QuartetDocument9 paginiAbout Cage String Quartetnglaviola100% (2)

- Genres & History of MusicDocument35 paginiGenres & History of MusicRussel CarillaÎncă nu există evaluări

- Music From Here and There (2006)Document18 paginiMusic From Here and There (2006)Paul CraenenÎncă nu există evaluări

- An Eclectic Analysis of György Ligeti's "Chromatische Phantasie (1956) " For Solo PianoDocument14 paginiAn Eclectic Analysis of György Ligeti's "Chromatische Phantasie (1956) " For Solo PianoElliot SneiderÎncă nu există evaluări

- FolktoshapenoteDocument14 paginiFolktoshapenoteapi-318236959Încă nu există evaluări

- Lesson Plan Graphic NotationDocument8 paginiLesson Plan Graphic NotationBrodyÎncă nu există evaluări

- A Guide To James Dillon's Music - Music - The GuardianDocument3 paginiA Guide To James Dillon's Music - Music - The GuardianEtnoÎncă nu există evaluări

- Newm Icbox: Sitting in A Room With Alvin LucierDocument10 paginiNewm Icbox: Sitting in A Room With Alvin LucierEduardo MoguillanskyÎncă nu există evaluări

- InsideASymphony Per NorregardDocument17 paginiInsideASymphony Per NorregardLastWinterSnow100% (1)

- Clemens Gadenstätter - Studies For Imaginary Portraits (2019) For Accordion Solo - Modification - Corrected (16!12!2020)Document25 paginiClemens Gadenstätter - Studies For Imaginary Portraits (2019) For Accordion Solo - Modification - Corrected (16!12!2020)Dejan998Încă nu există evaluări

- UNIT I 20th Century MusicDocument40 paginiUNIT I 20th Century MusicJake BergonioÎncă nu există evaluări

- Other Minds 15 Program BookletDocument36 paginiOther Minds 15 Program BookletOther MindsÎncă nu există evaluări

- Class Notes. Penderecki, Stockhausen, LigetiDocument14 paginiClass Notes. Penderecki, Stockhausen, LigetiFantasma dell'OperaÎncă nu există evaluări

- Composing Intonations After FeldmanDocument12 paginiComposing Intonations After Feldman000masa000Încă nu există evaluări

- Kahn 1995Document4 paginiKahn 1995Daniel Dos SantosÎncă nu există evaluări

- Rhythm in The TurangalilaDocument25 paginiRhythm in The TurangalilaJoshua ChungÎncă nu există evaluări

- S BarberDocument9 paginiS BarberFrancesco CharquenhoÎncă nu există evaluări

- Interview With Stuart Saunders Smith and Sylvia Smith Notations 21Document10 paginiInterview With Stuart Saunders Smith and Sylvia Smith Notations 21artnouveau11Încă nu există evaluări

- Dhomont Chiaroscuro ScoreDocument3 paginiDhomont Chiaroscuro Scoretheperfecthuman100% (1)

- Other Minds 14Document40 paginiOther Minds 14Other MindsÎncă nu există evaluări

- The Fourth Movement of György Ligetis Piano Concerto - Investigat PDFDocument272 paginiThe Fourth Movement of György Ligetis Piano Concerto - Investigat PDFFrancesco Platoni100% (1)

- Thesis Holdcroft ZTDocument411 paginiThesis Holdcroft ZTDavid Espitia CorredorÎncă nu există evaluări

- CoalescenceDocument93 paginiCoalescenceDylan RichardsÎncă nu există evaluări

- PKokoras WestPole ScoreDocument30 paginiPKokoras WestPole ScoreKodokuna BushiÎncă nu există evaluări

- International Baccalaureate Music: Standard and Higher LevelDocument13 paginiInternational Baccalaureate Music: Standard and Higher LevelNicholas ConnorsÎncă nu există evaluări

- PGVIM International Symposium 2019Document76 paginiPGVIM International Symposium 2019AnoThai NitibhonÎncă nu există evaluări

- Xenakis's 'Polytope de Mycenae'Document3 paginiXenakis's 'Polytope de Mycenae'rhyscorr100% (1)

- Metamorphosen Beat Furrer An Der HochschDocument3 paginiMetamorphosen Beat Furrer An Der HochschLolo loleÎncă nu există evaluări

- Gerard Grisey: The Web AngelfireDocument8 paginiGerard Grisey: The Web AngelfirecgaineyÎncă nu există evaluări

- Bedrossian Charleston BookletDocument8 paginiBedrossian Charleston BookletVdoÎncă nu există evaluări

- Maceda - A Concept of Time in A Music of Southeast AsiaDocument9 paginiMaceda - A Concept of Time in A Music of Southeast AsiaSamuel Ng100% (1)

- Balancing Mathematics and Virtuosity A PDocument80 paginiBalancing Mathematics and Virtuosity A PCereza EspacialÎncă nu există evaluări

- WISHART Trevor - Extended Vocal TechniqueDocument3 paginiWISHART Trevor - Extended Vocal TechniqueCarol MoraesÎncă nu există evaluări

- Becker. Is Western Art Music Superior PDFDocument20 paginiBecker. Is Western Art Music Superior PDFKevin ZhangÎncă nu există evaluări

- Out Vinko GlobokarDocument73 paginiOut Vinko GlobokarjuanjoÎncă nu există evaluări

- Graphic ScoreDocument5 paginiGraphic ScoreAlmasi GabrielÎncă nu există evaluări

- Spectral Morphology and Space in Fausto Romitelli's Natura Morta ConDocument17 paginiSpectral Morphology and Space in Fausto Romitelli's Natura Morta ConFrancisco RosaÎncă nu există evaluări

- Ombak Final Score PDFDocument22 paginiOmbak Final Score PDFJeremy CorrenÎncă nu există evaluări

- Robin Holloway's 'Second Concerto For Orchestra': A Masterpiece Bridging Romanticism and ModernismDocument29 paginiRobin Holloway's 'Second Concerto For Orchestra': A Masterpiece Bridging Romanticism and ModernismKeane SouthardÎncă nu există evaluări

- Magic Numbers Sofia Gubaidulina PDFDocument9 paginiMagic Numbers Sofia Gubaidulina PDFMA LobatoÎncă nu există evaluări

- This Content Downloaded From 141.225.112.53 On Wed, 30 Jun 2021 01:34:12 UTCDocument22 paginiThis Content Downloaded From 141.225.112.53 On Wed, 30 Jun 2021 01:34:12 UTCjuan sebastian100% (1)

- Schweinitz SrutisDocument7 paginiSchweinitz SrutisjjrrcwÎncă nu există evaluări

- Lachenmann Pousseur Willy PDFDocument15 paginiLachenmann Pousseur Willy PDFmauricio_bonisÎncă nu există evaluări

- Composer Interview Helmut-1999Document6 paginiComposer Interview Helmut-1999Cristobal Israel MendezÎncă nu există evaluări

- Gesture Overview PDFDocument89 paginiGesture Overview PDFJoan Bagés RubíÎncă nu există evaluări

- Ian Shanahan - Full CV 2017Document28 paginiIan Shanahan - Full CV 2017Ian ShanahanÎncă nu există evaluări

- True Fire, Ciel D'hiver, TransDocument28 paginiTrue Fire, Ciel D'hiver, TransНаталья БедоваÎncă nu există evaluări

- Scratch Music - ReadingsDocument10 paginiScratch Music - Readingsemma_rapaportÎncă nu există evaluări

- Zohar IverDocument72 paginiZohar IverFrancisco Rosa100% (1)

- Fragments of A Compositional Methodology Ming Tsao PDFDocument14 paginiFragments of A Compositional Methodology Ming Tsao PDFang musikeroÎncă nu există evaluări

- Terrible Freedom: The Life and Work of Lucia DlugoszewskiDe la EverandTerrible Freedom: The Life and Work of Lucia DlugoszewskiÎncă nu există evaluări

- Summay Chapter 6 and 8 (Paul Goodwin and George Wright)Document10 paginiSummay Chapter 6 and 8 (Paul Goodwin and George Wright)Zulkifli SaidÎncă nu există evaluări

- San Jose Community CollegeDocument8 paginiSan Jose Community CollegeErica CanonÎncă nu există evaluări

- Lesson 11 Homework 5.3Document4 paginiLesson 11 Homework 5.3afodcauhdhbfbo100% (1)

- St501-Ln1kv 04fs EnglishDocument12 paginiSt501-Ln1kv 04fs Englishsanthoshs241s0% (1)

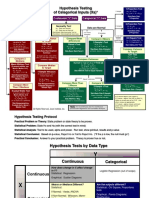

- Hypothesis Testing Roadmap PDFDocument2 paginiHypothesis Testing Roadmap PDFShajean Jaleel100% (1)

- Course Notes MGT338 - Class 5Document3 paginiCourse Notes MGT338 - Class 5Luciene20Încă nu există evaluări

- NDVI Index Forecasting Using A Layer Recurrent Neural Network Coupled With Stepwise Regression and The PCADocument7 paginiNDVI Index Forecasting Using A Layer Recurrent Neural Network Coupled With Stepwise Regression and The PCALuis ManterolaÎncă nu există evaluări

- Cse PDFDocument33 paginiCse PDFSha Nkar JavleÎncă nu există evaluări

- 6 Prosiding ICM2E 2017Document434 pagini6 Prosiding ICM2E 2017Andinuralfia syahrirÎncă nu există evaluări

- Looping StatementsDocument14 paginiLooping StatementsKenneth PanopioÎncă nu există evaluări

- Account Mapping Transformation FileDocument174 paginiAccount Mapping Transformation FileIrvandias AnggriawanÎncă nu există evaluări

- RoboticsDocument579 paginiRoboticsDaniel Milosevski83% (6)

- Dividing Fractions : and What It MeansDocument22 paginiDividing Fractions : and What It MeansFlors BorneaÎncă nu există evaluări

- IP Addressing and Subnetting IPv4 Workbook - Instructors Version - v2 - 1 PDFDocument89 paginiIP Addressing and Subnetting IPv4 Workbook - Instructors Version - v2 - 1 PDFfsrom375% (4)

- Diagnosis: Highlights: Cita Rosita Sigit PrakoeswaDocument68 paginiDiagnosis: Highlights: Cita Rosita Sigit PrakoeswaAdniana NareswariÎncă nu există evaluări

- Ds and AlgoDocument2 paginiDs and AlgoUbi BhattÎncă nu există evaluări

- Fluid Mechanics and Machinery - Lecture Notes, Study Material and Important Questions, AnswersDocument3 paginiFluid Mechanics and Machinery - Lecture Notes, Study Material and Important Questions, AnswersM.V. TVÎncă nu există evaluări

- Heat Transfer Lab: Me8512-Thermal Engineering LabDocument55 paginiHeat Transfer Lab: Me8512-Thermal Engineering LabVinoÎncă nu există evaluări

- Capital Asset Pricing ModelDocument20 paginiCapital Asset Pricing ModelSattagouda M PatilÎncă nu există evaluări

- Manual de Mathcad 14 en Español PDFDocument410 paginiManual de Mathcad 14 en Español PDFalejandro_baro419Încă nu există evaluări

- Figure 2.3 Illustration of Amdahl's Law: © 2016 Pearson Education, Inc., Hoboken, NJ. All Rights ReservedDocument10 paginiFigure 2.3 Illustration of Amdahl's Law: © 2016 Pearson Education, Inc., Hoboken, NJ. All Rights ReservedAhmed AyazÎncă nu există evaluări

- 05 SlideDocument42 pagini05 SlideAtheerÎncă nu există evaluări

- FPGA Implementation of CORDIC Processor: September 2013Document65 paginiFPGA Implementation of CORDIC Processor: September 2013lordaranorÎncă nu există evaluări

- Let Us C SolutionsDocument81 paginiLet Us C Solutionsneonav100% (2)

- Computer Architecture Complexity and Correctness by Silvia M MuellerWolfgang J PDFDocument568 paginiComputer Architecture Complexity and Correctness by Silvia M MuellerWolfgang J PDFDaryl ScottÎncă nu există evaluări

- Types of Relation 1Document17 paginiTypes of Relation 1Justin Adrian BautistaÎncă nu există evaluări

- Naac Lesson Plan Subject-WsnDocument6 paginiNaac Lesson Plan Subject-WsnAditya Kumar TikkireddiÎncă nu există evaluări

- Dokumen Tanpa JudulDocument12 paginiDokumen Tanpa JudulHairun AnwarÎncă nu există evaluări

- Perimeter & Area: Section - ADocument5 paginiPerimeter & Area: Section - AKrishna Agrawal100% (1)

- PQ - With - Id - PDF fkj1 PDFDocument17 paginiPQ - With - Id - PDF fkj1 PDFWorse To Worst SatittamajitraÎncă nu există evaluări