Documente Academic

Documente Profesional

Documente Cultură

A Fundamental Limitation of Symbol-Argum

Încărcat de

appled.ap0 evaluări0% au considerat acest document util (0 voturi)

10 vizualizări6 paginilimitation of symbol arguments

Drepturi de autor

© © All Rights Reserved

Formate disponibile

PDF, TXT sau citiți online pe Scribd

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentlimitation of symbol arguments

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca PDF, TXT sau citiți online pe Scribd

0 evaluări0% au considerat acest document util (0 voturi)

10 vizualizări6 paginiA Fundamental Limitation of Symbol-Argum

Încărcat de

appled.aplimitation of symbol arguments

Drepturi de autor:

© All Rights Reserved

Formate disponibile

Descărcați ca PDF, TXT sau citiți online pe Scribd

Sunteți pe pagina 1din 6

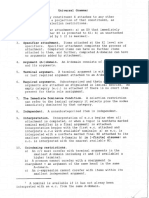

A Fundamental Limitation of Symbol-Argument-Argument Notation

As a Model of Human Relational Representations

Leonidas A. A. Doumas (adoumas@psych.ucla.edu)

John E. Hummel (jhummel@psych.ucla.edu)

Department of Psychology, University of California, Los Angeles

405 Hilgard Ave.

Los Angeles, CA 90095-1563

Abstract

Human mental representations are both structure-sensitive (i.e., symbolic) and semantically rich. Connectionist models have well-known limitations in capturing the structure-sensitivity of mental representations, while traditional symbolic models based on varieties of symbol-argument-argument notation (SAA) have difficulty capturing their semantic richness. We argue that this limitation of SAA is fundamental and cannot be alleviated in the notational format of SAA itself. Finally, we review an approach to human mental representation that captures both its structure-sensitivity and semantic richness.

Relational reasoning (reasoning constrained by the relational roles that objects play, rather than just the features of the objects themselves) is ubiquitous in human mental life, and includes analogical inference, schema induction, and the application of explicit rules (Gentner, 1983; Holyoak & Thagard, 1995). In order to support human-like relational thinking, a representational system must meet two general requirements (Hummel & Holyoak, 1997): First, it must represent relations independently of their fillers and simultaneously specify how fillers are bound to relational roles (i.e., it must be a symbol system; Newell, 1990). Second, it must explicitly specify the semantic content of relational roles and their fillers. In this paper, we consider in detail the implications of the latter requirement, with an emphasis on symbol-argument-argument notation (SAA), which includes propositional notation, high-rank tensors, and many varieties of labeled graphs.

Properties of Relational Representations

Human Relational Representations are Symbolic

Symbolic representations have the property that symbols are invariant with their role in an expression¹, and the meaning of the expression as a whole is a function of both the symbols and their arrangement (i.e., role-filler bindings). For example, the expressions chase (Pat, Don) and chase (Don, Pat) mean different things, but Pat, Don, and chase mean the same things in both expressions. Formal symbol systems have this property by assumption: It is given in the definition of the system that symbols retain their meanings across different expressions, and that the meaning of an expression is a function of both its constituent symbols and their arrangement. Physical symbol systems (Newell, 1990), such as digital computers and human brains, cannot simply “assume” these properties, but instead must actively work to ensure that both are satisfied.

The claim that human mental representations are symbolic in this sense is controversial (e.g., Elman et al., 1996). However, relational generalization has proved unattainable for non-symbolic models of cognition, and there is reason to believe it is fundamentally unattainable for such models (see Doumas & Hummel, in press; Halford, Wilson, & Phillips, 1998; Hummel & Holyoak, 2003a; Marcus, 1998).

Human Relational Representations Specify the Semantic Content of Objects and Relational Roles

A second important property of human relational representations is that they explicitly specify the semantic content of objects and relational roles (e.g., the lover and beloved roles of love (x, y) or the killer and killed roles of murder (x, y)): We know what it means to be a lover or a killer, and that knowledge is part of our representation of the relation itself (as opposed to being specified in a lookup table, a set of inference rules, or some other external structure). As a result, it is easy to appreciate that the patient (i.e., killed) role of murder (x, y) is like the patient role of manslaughter (x, y), even though the agent roles differ (i.e., the act is intentional in the former case but not the latter); and the agent (i.e., killer) role of murder (x, y) is similar to the agent role of attempted-murder (x, y), even though the patient roles differ.

More evidence that we represent the semantics of relational roles explicitly is that we can easily solve mappings that violate the “n-ary” restriction (Hummel & Holyoak, 1997). That is, we can map n-place predicates onto m-place predicates where n ≠ m. For instance, given statements such as taller-than (Abe, Bill), tall (Chad) and short (Dave), it is easy to map Abe to Chad and Bill to

¹ This is not to say that symbols may not have shades of meaning that vary from one context to another. For example, the symbol “loves” suggests different relations in loves (Mary, John) vs. loves (Mary, Chocolate). However, as noted by Hummel and Holyoak (2003a), this kind of contextual shading must be a function of the system’s knowledge, rather than an inevitable consequence of the way in which it binds relational roles to their fillers.
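The two properties of symbolic representations described in this section (symbols keep their identity across expressions, while the meaning of an expression depends on how they are arranged) can be illustrated with a minimal Python sketch. The `Proposition` type and the `symbols` helper are illustrative assumptions, not part of any model discussed here.

```python
from typing import NamedTuple, Set, Tuple


class Proposition(NamedTuple):
    """A predicate symbol applied to an ordered tuple of argument symbols."""
    predicate: str
    arguments: Tuple[str, ...]


def symbols(prop: Proposition) -> Set[str]:
    """Return the set of symbols used in a proposition, ignoring arrangement."""
    return {prop.predicate, *prop.arguments}


chase_pat_don = Proposition("chase", ("Pat", "Don"))
chase_don_pat = Proposition("chase", ("Don", "Pat"))

# The same symbols appear in both expressions...
same_symbols = symbols(chase_pat_don) == symbols(chase_don_pat)
# ...but the expressions differ, because the arrangement differs.
same_expression = chase_pat_don == chase_don_pat

print(same_symbols, same_expression)  # True False
```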

Dave. Given shorter-than (Eric, Fred), it is also easy to map Eric to Bill (and Dave) and Fred to Abe (and Chad). These mappings are based on the semantics of the individual relational roles rather than the formal syntax of propositional notation, or, say, the fact that taller-than and shorter-than are logical opposites: love (x, y) is in some sense the opposite of hate (x, y), but in contrast to taller-than and shorter-than (in which the first role of one relation maps to the second role of the other) the first role of love (x, y) more naturally maps to the first role of hate (x, y). In short, the similarity and/or mappings of various relational roles are idiosyncratic, based on the semantic content of the individual roles in question. The semantics of relational roles matter, and are an explicit part of the mental representation of relations.

The semantic properties of relational roles are also evidenced in numerous other ways in human cognition, including memory retrieval (e.g., Gentner, Ratterman & Forbus, 1993; Ross, 1989), and analogical mapping and inference (Bassok, Wu & Olseth, 1995; Kubose, Holyoak & Hummel, 2002; Krawczyk, Holyoak & Hummel, in press; Ross, 1987). Indeed, the meanings of relational roles influence relational thinking even when they are irrelevant or misleading (e.g., Bassok et al., 1995; Ross, 1989), suggesting that access to and use of role-based semantic information is quite automatic. This information appears to be an integral part of the mental representation of relations. Given its centrality in human cognition, an important criterion for a general account of human mental representation is that it must represent relations in a way that captures the semantics of their roles.

SAA Accounts of Relational Representations

Numerous models of human cognition account for the symbolic nature of relational representations by postulating representations based on varieties of symbol-argument-argument notation (SAA). These include propositional notation, varieties of labeled graphs, and high-rank tensor products (e.g., Anderson & Lebiere, 1998; Eliasmith & Thagard, 2001; Falkenhainer, Forbus, & Gentner, 1989; Forbus, Gentner, & Law, 1995; Halford et al., 1998; Holyoak & Thagard, 1989; Keane, Ledgeway, & Duff, 1994; Ramscar & Yarlett, 2003; Salvucci & Anderson, 2001). In SAA notation, relations and their fillers are represented as independent symbolic units, which are bound together to form larger relational structures. For example, in propositional notation the statement “John loves Mary” is represented by binding the symbol for the predicate love to a list of its arguments, here John and Mary, forming the proposition love (John, Mary). By virtue of their positions in the list of arguments, John is taken to be the filler of the first role of the love relation (i.e., the lover), and Mary is the filler of the second role (i.e., the beloved). Labeled graphs use location in the graph to indicate relational bindings in much the same way: Relations are represented independently of their arguments (fillers), and relation-filler bindings are represented in terms of the fillers’ locations in the graph. As such, SAA is meaningfully symbolic in the sense described above.

Because SAA systems are meaningfully symbolic, they naturally support operations that require relational representations, such as structure mapping (a.k.a. analogical mapping; see, e.g., Falkenhainer et al., 1989; Forbus et al., 1995) and matching symbolic rules (see Anderson & Lebiere, 1998). This is no small accomplishment. Numerous representational schemes, including traditional distributed connectionist representations (e.g., Elman et al., 1996) and the kinds of representations formed by latent semantic analysis (e.g., Landauer & Dumais, 1997), have not succeeded in modeling relational perception or cognition, and, as noted above, there are compelling reasons to believe they are fundamentally ill-suited for doing so.

The successes of SAA notation as a model of human mental representation are thus decidedly non-trivial. At the same time, however, SAA notation has greater difficulty capturing the semantic content of human mental representations. Indeed, this limitation was a central part of the criticisms leveled against symbolic modeling by the connectionists in the mid-1980s (e.g., Rumelhart, McClelland, & the PDP Research Group, 1986; for a more recent treatment, see Hummel & Holyoak, 1997). One of the strengths of distributed connectionist representations is their natural ability to capture the semantic content of the objects they represent. By contrast, propositional notation and labeled graphs have difficulty making this semantic content explicit. As a result, symbolic models based on SAA notation often resort to a patchwork of external fixes such as look-up tables, inference rules and arbitrary, hand-coded similarity matrices (e.g., Salvucci & Anderson, 2001; high-rank tensors, e.g., Halford et al., 1998, are a notable exception, but see Doumas & Hummel, in press, and Holyoak and Hummel, 2000, for detailed treatments of the limitations of this approach).

Importantly, these fixes are external to the relational representations themselves: They are not instantiated in the notation of the representations that encode relations, but rather only at the level of the system that processes these representations. This separation is fine for accounts of cognition at Marr’s (1982) level of computational theory, but for accounts at the level of representation and algorithm such fixes entail specifying multiple sets of representations (at minimum one for the notational system capturing the relations and a second for the system that captures the relations between different relations and/or semantics of those relations), and an interface for moving between them.

Moreover, relations in SAA are represented as indivisible wholes, leaving the roles implicit as semantically empty place-holders or arcs in a graph. For example, the love (x, y) relation is represented by a single symbol (in propositional notation), a single vector (in high-rank tensor products), or a single node (in a labeled graph)², and its roles, lover (x) and

² Not all models that employ labeled graphs are based on SAA (e.g., Larkey & Love, 2003), although most are.
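A minimal Python sketch of the positional binding described above may make the limitation concrete. It assumes a hypothetical `positional_map` helper that aligns arguments purely by their position in the argument list; it is not the mapping algorithm of any model cited here, only an illustration of what the notation by itself makes available.

```python
from typing import Dict, Optional, Tuple

Proposition = Tuple[str, Tuple[str, ...]]  # (predicate symbol, ordered arguments)


def bindings(prop: Proposition) -> Dict[Tuple[str, int], str]:
    """Role-filler bindings as the notation gives them: a role is only a position."""
    predicate, args = prop
    return {(predicate, position): filler for position, filler in enumerate(args)}


def positional_map(source: Proposition, target: Proposition) -> Optional[Dict[str, str]]:
    """Map fillers by argument position; return None when the arities differ."""
    _, source_args = source
    _, target_args = target
    if len(source_args) != len(target_args):
        return None  # the n-ary restriction: position offers no basis for a mapping
    return dict(zip(source_args, target_args))


taller = ("taller-than", ("Abe", "Bill"))
tall = ("tall", ("Chad",))
shorter = ("shorter-than", ("Eric", "Fred"))

print(bindings(("love", ("John", "Mary"))))
print(positional_map(taller, tall))     # None
print(positional_map(taller, shorter))  # {'Abe': 'Eric', 'Bill': 'Fred'}
```

The second mapping pairs Abe with Eric, whereas the role semantics discussed above favor mapping Eric to Bill and Fred to Abe; nothing in the positional encoding indicates this.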

beloved (y), are not represented explicitly at all. As such, even if a relational symbol (as a whole) is assumed to have semantic content, SAA notation itself does not specify how this content is differentially applicable to its arguments. It is this limitation that gives rise to the n-ary restriction: Even if the symbol taller-than is assumed to have meaning, the expression taller-than (x, y) does not explicitly specify how the role filled by x is semantically similar to tall (z), so it provides no basis for mapping taller-than (x, y) onto tall (z).

Recall the semantic relations between the roles of murder (x, y), manslaughter (x, y), and attempted-murder (x, y). SAA notation can, at best, treat the entire relations as simply “similar” or “different”. High-rank tensor systems, for instance, represent entire relations (but not their roles) as vectors, and compute the similarities between relations based on the similarities of their vectors. Models using propositional notation and labeled graphs can “recast” predicates into more abstract forms (e.g., coding both murder (x, y) and manslaughter (x, y) as commit-violence-against (x, y)). Neither of these approaches expresses the similarity relations between the individual roles of relations, however, because they fail to make individual relational roles (and thus their semantic content) explicit. As a result, they do not make clear how the roles of manslaughter (x, y) and attempted-murder (x, y) are related to the roles of murder (x, y), nor how they differ.

It is easy to imagine a look-up table or set of inference rules that would supply this information, but the number of rules required to specify the similarity relations between all relational roles would scale minimally with (n² - n)/2 (or n² - n if equivalent bidirectional similarity is not assumed), where n is the number of relational roles in the system. Worse, these rules would be external to the system’s SAA-based representational system, so an account of how the system operates at the level of representation and algorithm requires a description of the rule set, and an additional control structure to read the SAA and access the rules as necessary. All of this is obviated in a system that simply codes the semantic content of individual relational roles explicitly in the notation of the relational representations.

The convenience of “postulate them as you need them” inference rules makes it tempting to assume that the lack of role-specific semantic information in SAA is merely a thorny inconvenience: Surely the problem of role-based semantics in SAA is solvable, and will be solved as soon as it becomes important enough for someone to give it attention. In the meantime, it is certainly no reason to abandon SAA as a model of mental representation, especially if the only alternatives are non-symbolic representational schemes (to anticipate, they are not).

But it turns out that the problem is more than just a thorny inconvenience. As elaborated in the next section, role-specific semantic information cannot be made internal to (i.e., explicit in) the notation of SAA. Instead, the knowledge of the semantics of individual roles can only be specified at the level of the whole system, instantiated in external routines or representational structures. As a result, systems employing SAA (i.e., traditional symbolic models) are at best incomplete as algorithmic accounts of human cognition.

Representing Relational Roles in SAA

In a symbol system, representing something explicitly means representing it as a structure (e.g., a proposition). Thus, to represent relational roles and their semantic content explicitly in SAA, it is necessary to represent them structurally (i.e., to predicate them). For example, to represent the murder (x, y) relation in terms of its killer (x) and victim (y) roles, SAA must represent killer (x) and victim (y) as propositions (or tensors, or nodes), and simultaneously represent the fact that together they compose the murder (x, y) relation.

A relation specifies that its arguments are engaged in certain specific functions or states. For instance, the statement drive (Brutus, the Honda) specifies that Brutus is playing the role of the individual driving, and that the Honda is playing the role of the thing being driven. This information is entailed by the relational statement itself. As a separate matter, the relation may also imply or suggest other relations (e.g., that Brutus knows how to drive, that the Honda is in running condition, etc.), but it directly entails only the driver and driven-object roles, and the binding of Brutus and the Honda to these roles.

A relation does more than simply imply its roles (just as the roles do more than simply imply their parent relation); it consists of them: Collectively, the roles, along with their linkage to one another, are the relation. Imagine a relation R (p, q), with roles R₁ (p) and R₂ (q), that implies relation S (p, q), with roles S₁ (p) and S₂ (q). Although R (p, q) entails or implies a total of four roles (i.e., those of R and those of S), only two (R₁ and R₂) compose R itself. Therefore, representing relational roles explicitly requires explicitly specifying which relations consist of which roles.

As summarized above, this information seems to come gratis (i.e., without computational cost) as an integral component of human relational representations. An adequate model of human relational representations should, therefore, capture this information in its relational representations, rather than relegating it to the processes that operate on these representations or to external representational systems. This distinction is subtle, but important. It is not enough that the system as a whole capture this information; instead the same representations that encode the relations should capture it. That is, the information should be captured at the level of the notation, or language, of the system rather than in an external system of inference rules, look-up tables, etc.

However, it is not possible to capture relations, their roles and the relation between them in the notation of SAA. In SAA, representing a relation explicitly requires instantiating a proposition. If representing a relation implies representing its roles, and representing a relation’s roles requires representing the relation between the relation and its roles, then representing any given relation implies a second

proposition representing the relation between the first relation and its roles. This second proposition contains a new relation, though, which implies roles of its own that must be related back to it in a third proposition, which also contains a new relation with roles that must be related back to it, and so on.

For example, imagine the propositions love (John, Mary) and love (Bill, Sally) and their role bindings, lover (John), beloved (Mary), lover (Bill) and beloved (Sally). The representations must specify which role bindings go together to form which relations (e.g., that lover (John) goes with beloved (Mary), as opposed to beloved (Sally) or lover (Bill), to form the complete relation love (John, Mary)). Specifying this composition relation in SAA notation requires a proposition of the form consists-of (love (John, Mary), lover (John), beloved (Mary))³. The predicate consists-of, however, is itself a relation, and so specifies a set of role bindings of its own, which must be explicitly represented and linked back to the original consists-of relation with a second consists-of relation. This second consists-of is also a relation and so specifies a set of role bindings of its own that must be linked back to it via a third consists-of relation, and so on.⁴ The consequence is an infinite regress that can render the resulting representational system ill-typed (Manzano, 1996).

In other words, it is not possible to represent the relation between relational roles and complete relations explicitly in the notation of SAA. For the same reason, it is not possible to specify the semantic content of relational roles explicitly in SAA. As noted previously, this information can be specified in a complete system based on SAA (after all, many such systems are Turing complete), but it is not possible to capture this information in the SAA notation itself. Instead, the knowledge is necessarily contained in the processes that operate on the SAA representations, or in external representational systems that must be interfaced with the SAA notation. This property renders SAA notation fundamentally inadequate as a model of human mental representations. It does not imply that representing the semantic content of relational roles explicitly is impossible in an SAA-based system; it simply indicates that such systems cannot capture this content in the same way that people seem to, i.e., as an integral part of the relational representation itself.

It is important to emphasize that the problem of infinite regress is by no means an argument against the general utility of SAA. On the contrary, propositional notation, for example, is an extremely useful tool for the purpose for which it was created, namely theorem proving. For this purpose, semantic emptiness is a virtue. It just happens that the design requirements for a representational system for theorem proving differ markedly from the design requirements for a representational system for supporting perception and cognition in living, behaving systems: While the former requires pristine semantic emptiness, the latter must deal with the semantically rich, sometimes ugly realities of the world as it is. Given this, it is not surprising that a representational system designed for theorem proving should prove inadequate as a model of human mental representation.

Possible Objections and Responses

We are arguing that SAA is ill-suited for modeling human mental representations, including relational representations, for which it appears at first to be ideally suited. Proponents of traditional symbolic models of cognition are likely to object strenuously to our arguments. We shall try to anticipate some of these objections and respond to them.

One potential objection is that the infinite regress is irrelevant because it is a trivial matter to simply terminate the regress at any arbitrary point and declare it done. The problem with this objection is that the system does not finish the process it began. Once the regress halts, the relation between roles and relations remains unspecified.

Another potential objection is that although role-specific information cannot be specified in the SAA notation itself, it might be adequate to have the information specified at the level of the system as a whole. The problem with this objection is that it is an appeal to the status quo: Using lookup tables, etc., is precisely what current SAA-based models are forced to do. As we have argued, the data on the role of semantics in human cognition suggest that this approach is not adequate. Among other problems, it is too deliberate: The data on the role of semantics in memory retrieval, analogical mapping, etc., suggest that people use role-based semantic information automatically and without computational cost. Specifying information at multiple levels of representation and postulating routines for moving between them is computationally costly. Although it is impossible, given the current state of our knowledge, to rule such an account out definitively, it certainly seems more plausible to assume that role-based information is simply a part of relational representations than to assume that the mind is equipped with a complex system of look-up tables. Indeed, the use of lookup tables is perhaps even more awkward than it appears at first blush. Without an explicit representation of role-specific semantics in its representations, an SAA system must use an external meta-system to interpret its SAA representations, decide when the look-up table (another representational system external to SAA) should be accessed, retrieve the relevant role information, translate that information into SAA, and insert it into the original SAA format. Importantly, the external control structure could not, itself, utilize SAA: if it

³ Naming the relation that specifies the relation of a relation to its roles consists-of is, of course, completely arbitrary.

⁴ One might argue that this problem could be solved by using binary rather than unary representations of roles, for example, representing lover (John) as lover (John, loves (John, Mary)), thus representing the role, its filler, and the relation to which it belongs. The problem with this approach is that it simply transfers the same problem to a new representation. In SAA, binary predicates dictate relations. As a result, the system would still have to specify the roles of this binary predicate and how they relate back to it.
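The regress described in this section can be sketched in a few lines of Python. The nested-tuple encoding, the `ROLE_NAMES` table, and the generic role names generated for `consists-of` are all illustrative assumptions; the point is only that every `consists-of` proposition added to relate a relation to its roles is itself a relation whose roles would need the same treatment.

```python
from itertools import islice
from typing import Iterator, Tuple

Proposition = Tuple[str, tuple]  # (predicate symbol, ordered arguments)

# Illustrative role names; predicates not listed here get generic names.
ROLE_NAMES = {"love": ("lover", "beloved")}


def role_bindings(prop: Proposition) -> Tuple[Proposition, ...]:
    """Predicate each role of a proposition as a unary proposition."""
    predicate, args = prop
    default = tuple(f"{predicate}-role{i + 1}" for i in range(len(args)))
    names = ROLE_NAMES.get(predicate, default)
    return tuple((name, (arg,)) for name, arg in zip(names, args))


def consists_of_regress(prop: Proposition) -> Iterator[Proposition]:
    """Yield consists-of propositions without end: each one calls for another."""
    current = prop
    while True:
        link = ("consists-of", (current, *role_bindings(current)))
        yield link
        current = link  # the new relation now needs its own roles linked back


love = ("love", ("John", "Mary"))
steps = list(islice(consists_of_regress(love), 3))
print(steps[0])
print([len(args) for _, args in steps])  # [3, 4, 5]
```

Truncating the generator with `islice` corresponds to terminating the regress at an arbitrary point: the last `consists-of` proposition produced is left with roles that nothing relates back to it.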

did, then it too would require an external meta-system to keep its own relation and role information straight. This solution is thus both inelegant and impractical, and in the limit results in a regress, not of nested “consists-of” relations, but of external control structures.

A third objection to our argument concerns the n-ary restriction. This problem might be solved simply by postulating that all n-place predicates (where n is free to vary) be replaced by m-place predicates (where m is a constant, say, 3). For example, tall (x) would be recast as tall (x, -, -), and taller-than (x, y) as taller-than (x, y, -), where “-” denotes a permanently empty “dummy” slot. In this way, tall (x, -, -) could be mapped to taller-than (x, y, -) by virtue of their both taking three “arguments”. One problem with this proposal is that it assumes some procedure for deciding how to recast the predicates. For example, should short (y) be recast as shorter-than (y, -, -) (the intuitive recasting) or as taller-than (-, y, -)? Note that this question must be answered prior to discovering the very mapping(s) the recasting is supposed to permit. A second problem with this proposal is that it still leaves the problem of mapping non-identical predicates: How should the system map the arguments of taller-than (x, y, -) to those of shorter-than (x, y, -) without knowing what the roles of each relation “mean”?

Semantically Rich Representations of Relational Roles

We have argued that SAA is at best incomplete as a model of human mental representation. However, this limitation does not by any means imply that we must abandon symbolic accounts of mental representation. Rather, what is needed is a representational system that makes relational roles, their semantics, their bindings to their fillers and their composition into complete relations all explicit. This approach is commonly known as role-filler binding (e.g., Halford et al., 1998).

In a role-filler binding system, roles are represented explicitly and bound to their fillers. Relations are composed of linked role-filler bindings. One example is the representational system employed by Hummel and Holyoak’s (1997, 2003a) LISA model. LISA uses a hierarchy of distributed and localist codes to represent relational structures. At the bottom, “semantic” units represent objects and roles in a distributed fashion (e.g., for the relation love (John, Mary), John might be represented as human, adult, male, etc., and Mary by human, adult, female, etc.; similarly, the lover and beloved roles of the love relation would be represented by units capturing their semantic content). At the next level, these distributed representations are connected to localist units representing individual objects and relational roles. Above the object and role units, localist role-binding units (SPs) link object and role units into specific role-filler bindings. At the top of the hierarchy, localist P units link SPs into entire propositions.

In this representation, the long-term binding of roles to their fillers is captured by the conjunctive SP units, thus violating the role-filler independence required for symbolic representation. However, when a proposition becomes active, its role-filler bindings are also represented dynamically by synchrony of firing: SP units in the same proposition fire out of synchrony with one another, causing object and predicate units, along with their corresponding semantics, to fire in synchrony with each other if they are bound together, and out of synchrony with other bound roles and objects. On the semantic units, the result is a collection of mutually desynchronized patterns of activation, one for each role-filler binding. Thus, when a proposition is active, role-filler independence is maintained on its object, predicate and semantic units, with the role-filler bindings carried by the synchrony relations among these units.

This is only one way to represent relational knowledge in a role-filler binding scheme (see also Halford et al., 1998; Smolensky, 1990). As demonstrated by Hummel, Holyoak and their colleagues (see Hummel & Holyoak, 2003b, for a review), however, it is a very useful one. LISA has been shown to simulate aspects of memory retrieval, analogical mapping, analogical inference, schema induction, and the relations between them. It also provides a natural account of the capacity limits of human working memory, the effects of brain damage and normal aging on reasoning, the relation between effortless (“reflexive”) and more effortful (“reflective”) reasoning, and aspects of the perceptual-cognitive interface. It inherits these abilities from its ability to capture both the relational structure and the semantic richness of human mental representations.

Conclusion

Few would claim that people literally think in the predicate calculus. However, many researchers have argued that SAA-based representations serve at least as a plausible shorthand for human mental representations. We have argued that SAA notation is at most a shorthand for human relational representations, and one that must necessarily leave the messy business of the semantics of relational roles to fundamentally external representations and processes. Inasmuch as the semantics of roles are an important internal component of human mental representation, as we, and others, have argued they are, this fact leaves an important facet of human mental representation necessarily beyond the reach of SAA-based models. The solution to this problem is not to abandon symbolic representations altogether, as proposed by some, but rather to replace SAA with a role-filler binding approach to the representation of relational knowledge in models of cognition. Doing so provides a natural basis for capturing both the symbolic structure of human mental representations and their semantic richness.

Acknowledgements

The authors would like to thank Keith Holyoak, Keith Stenning, Ed Stabler, Tom Wickens, and Charles Travis for

helpful discussion and comments. This work was supported by a grant from the UCLA Academic Senate.

References

Anderson, J. R., & Lebiere, C. (1998). The atomic components of thought. Mahwah, NJ: LEA.

Bassok, M., Wu, L., & Olseth, K. L. (1995). Judging a book by its cover: Interpretative effects of content on problem-solving transfer. Memory & Cognition, 23, 354-367.

Doumas, L. A. A., & Hummel, J. E. (in press). Modeling human mental representations: What works, what doesn’t, and why. In K. J. Holyoak & R. Morrison (Eds.), The Cambridge Handbook of Thinking and Reasoning. Cambridge: Cambridge University Press.

Eliasmith, C., & Thagard, P. (2001). Integrating structure and meaning: A distributed model of analogical mapping. Cognitive Science, 25, 245-286.

Elman, J. L., Bates, E., Johnson, M. H., Karmiloff-Smith, A., Parisi, D., & Plunkett, K. (1996). Rethinking Innateness: A Connectionist Perspective on Development. Cambridge, MA: MIT Press.

Falkenhainer, B., Forbus, K. D., & Gentner, D. (1989). The structure mapping engine: Algorithm and examples. Artificial Intelligence, 41, 1-63.

Keane, M. T., Ledgeway, T., & Duff, S. (1994). Constraints on analogical mapping: A comparison of three models. Cognitive Science, 18, 387-438.

Krawczyk, D. C., Holyoak, K. J., & Hummel, J. E. (in press). Structural constraints and object similarity in analogical mapping and inference. Thinking and Reasoning.

Kubose, T. T., Holyoak, K. J., & Hummel, J. E. (2002). The role of textual coherence in incremental analogical mapping. Journal of Memory and Language, 47, 407-435.

Landauer, T. K., & Dumais, S. T. (1997). A solution to Plato's problem: The Latent Semantic Analysis theory of acquisition, induction and representation of knowledge. Psychological Review, 104, 211-240.

Larkey, L., & Love, B. (2003). CAB: Connectionist Analogy Builder. Cognitive Science, 27, 781-794.

Manzano, M. (1996). Extensions of first order logic. Cambridge: Cambridge University Press.

Marcus, G. F. (1998). Rethinking eliminative connectionism. Cognitive Psychology, 37(3), 243-282.

Marr, D. (1982). Vision. San Francisco: Freeman.

Newell, A. (1990). Unified theories of cognition. Cambridge, MA: Harvard University Press.

Ramscar, M. & Yarlett, D. (2003) Semantic Grounding in

Forbus, K. D., Gentner, D., & Law, K. (1995). MAC/FAC:

Models of Analogy: An Environmental Approach.

A model of similarity-based retrieval. Cognitive Science,

Cognitive Science, 27, 41-71.

19, 141-205.

Ross, B. (1987). This is like that: The use of earlier

Gentner, D., Ratterman, M. J., & Forbus, K. D. (1993). The

problems and the separation of similarity effects. JEP:

roles of similarity in transfer: Separating retrievability

Learning, Memory, and Cognition, 13, 629-639.

from inferential soundness. Cognitive Psychology, 25,

Ross, B. (1989). Distinguishing types of superficial

524-575.

similarities: Different effects on the access and use of

Halford, G. S., Wilson, W. H., & Phillips, S. (1998).

earlier problems. JEP: Learning, Memory, and Cognition,

Processing capacity defined by relational complexity:

15, 456-468.

Implications for comparative, developmental, and

Rumelhart, D. E., McClelland, J. L., & the PDP Research

cognitive psychology. Brain and Behavioral Sciences,

Group (1986). Parallel distributed processing:

21, 803-864.

Explorations in the microstructure of cognition. (Vol. 1).

Holyoak, K. J., & Hummel, J. E. (2000). The proper

Cambridge, MA: MIT Press.

treatment of symbols in a connectionist architecture. In

Salvucci, D. D., & Anderson, J. R. (2001). Integrating

E. Dietrich and A. Markman (Eds.). Cognitive Dynamics:

analogical mapping and general problem solving: The

Conceptual Change in Humans and Machines (pp. 229 -

path mapping theory. Cognitive Science, 25, 67-110.

264). Hillsdale, NJ: Erlbaum.

Smolensky, P. (1990). Tensor product variable binding and

Holyoak, K. J., & Thagard, P. (1989). Analogical mapping

the representation of symbolic structures in connectionist

by constraint satisfaction. Cognitive Science, 13, 295-355.

systems. Artificial Intelligence, 46, 159-216.

Hummel, J. E., & Holyoak, K. J. (1997). Distributed

representations of structure: A theory of analogical

access and mapping. Psychological Review, 104, 427-

466.

Hummel, J. E., & Holyoak, K. J. (2003a). A symbolic-

connectionist theory of relational inference and

generalization. Psychological Review, 110, 220-263.

Hummel, J. E., & Holyoak, K. J. (2003b). Relational

reasoning in a neurally-plausible cognitive architecture:

An overview of the LISA project. Cognitive Studies:

Bulletin of the Japanese Cognitive Science Society, 10,

58-75.
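
Illustrative sketch of the LISA hierarchy

The four-level hierarchy described in the text for love (John, Mary) can be pictured as a small data structure. What follows is only a minimal sketch, not the LISA implementation: the class names `Unit`, `SP`, and `P`, the function `semantic_overlap`, and the semantic features given to the lover and beloved roles are assumptions made for illustration (the text gives features only for John and Mary). Unit activations and the dynamics of the model are not represented at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """Localist unit for one object or one relational role.

    `semantics` stands in for the distributed "semantic" units the
    localist unit is connected to (hypothetical encoding).
    """
    name: str
    semantics: frozenset


@dataclass(frozen=True)
class SP:
    """Role-binding unit: conjunctively links one role to one filler."""
    role: Unit
    filler: Unit


@dataclass(frozen=True)
class P:
    """Proposition unit: links a set of SPs into a whole proposition."""
    sps: frozenset


def semantic_overlap(a: Unit, b: Unit) -> float:
    """Jaccard overlap between the semantic units of two localist units."""
    union = a.semantics | b.semantics
    return len(a.semantics & b.semantics) / len(union) if union else 0.0


# Object features follow the example in the text.
john = Unit("John", frozenset({"human", "adult", "male"}))
mary = Unit("Mary", frozenset({"human", "adult", "female"}))

# Role features are invented for illustration only.
lover = Unit("lover", frozenset({"emotion", "positive", "agent"}))
beloved = Unit("beloved", frozenset({"emotion", "positive", "patient"}))

# love (John, Mary) and love (Mary, John) reuse the same object and role
# units; only the SP (role-filler binding) units differ.
love_john_mary = P(frozenset({SP(lover, john), SP(beloved, mary)}))
love_mary_john = P(frozenset({SP(lover, mary), SP(beloved, john)}))

print(love_john_mary == love_mary_john)  # False: different bindings
print(semantic_overlap(john, mary))      # 0.5: two of four features shared
```

In this sketch the two propositions differ only in their SP units, while the object and role units, and hence their semantic content, are shared across both. That is the sense in which a role-filler binding scheme keeps structure and meaning separate.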
