A Compilation Target For Probabilistic Programming Languages - 2014 (Paige14) (Dragged) 2


A Compilation Target for Probabilistic Programming Languages

```c
#include "probabilistic.h"
#define N 10

// Observed data
static double data[N] = { 1.0, 1.1, 1.2,
                          -1.0, -1.5, -2.0,
                          0.001, 0.01, 0.005, 0.0 };

// Struct holding mean and variance parameters for each cluster
typedef struct theta {
    double mu;
    double var;
} theta;

// Draws a sample of theta from a normal-gamma prior
theta draw_theta() {
    double variance = 1.0 / gamma_rng(1, 1);
    return (theta) { normal_rng(0, variance), variance };
}

// Get the class id for a given observation index
static polya_urn_state urn;
void get_class(int *index, int *class_id) {
    *class_id = polya_urn_draw(&urn);
}

int main(int argc, char **argv) {
    double alpha = 1.0;
    polya_urn_new(&urn, alpha);
    mem_func mem_get_class;
    memoize(&mem_get_class, get_class, sizeof(int), sizeof(int));

    theta params[N];
    bool known_params[N] = { false };

    int class;
    for (int n=0; n<N; n++) {
        mem_invoke(&mem_get_class, &n, &class);
        if (!known_params[class]) {
            params[class] = draw_theta();
            known_params[class] = true;
        }
        observe(normal_lnp(data[n], params[class].mu,
                           params[class].var));
    }

    // Predict number of classes
    predict("num_classes,%2d\n", urn.len_buckets);

    // Release memory; exit
    polya_urn_free(&urn);
    return 0;
}
```

Figure 3. An infinite mixture of Gaussians on the real line. Class assignment variables for each of the 10 data points are drawn following a Blackwell-MacQueen urn scheme to sequentially sample from a Dirichlet process.

2.1. Operating system primitives

Inference proceeds by drawing posterior samples from the space of program execution traces. We define an execution trace as the sequence of memory states (the entire virtual memory address space) that arises during the sequential step execution of machine instructions.

The algorithms we propose for inference in probabilistic programs map directly onto standard computer operating system constructs, exposed in POSIX-compliant operating systems including Linux, BSD, and Mac OS X. The cornerstone of our approach is POSIX fork (Open Group, 2004b). When a process forks, it clones itself, creating a new process with an identical copy of the execution state of the original process, and identical source code; both processes then continue with normal program execution completely independently from the point where fork was called. While copying program execution state may naïvely sound like a costly operation, this actually can be rather efficient: when fork is called, a lazy copy-on-write procedure is used to avoid deep copying the entire program memory. Instead, initially only the page table is copied to the new process; when an existing variable is modified in the new program copy, then and only then are memory contents duplicated. The overall cost of forking a program is proportional to the fraction of memory which is rewritten by the child process (Smith & Maguire, 1988).

Using fork we can branch a single program execution state and explore many possible downstream execution paths. Each of these paths runs as its own process, and will run in parallel with other processes. In general, multiple processes run in their own memory address space, and do not communicate or share state. We handle inter-process communication via a small shared memory segment; the details of what global data must be stored are provided later.

Synchronization between processes is handled via mutual exclusion locks (mutex objects). Mutexes become particularly useful for us when used in conjunction with a synchronized counter to create a barrier, a high-level blocking construct which prevents any process from proceeding in execution state beyond the barrier until some fixed number of processes have arrived.

3. Inference

3.1. Probability of a program execution trace

To notate the probability of a program execution trace, we enumerate all $N$ observe statements, and the associated observed data points $y_1, \ldots, y_N$. During a single run of the program, some total number $N'$ random choices $x'_1, \ldots, x'_{N'}$ are made. While $N'$ may vary between individual executions of the program, we require that the number of observe directive calls $N$ is constant.

The observations $y_n$ can appear at any point in the program source code and define a partition of the random choices $x'_{1:N'}$ into $N$ subsequences $x_{1:N}$, where each $x_n$ contains all random choices made up to observing $y_n$ but excluding any random choices prior to observation $y_{n-1}$. We can then define the probability of any single program execution trace

$$p(y_{1:N}, x_{1:N}) = \prod_{n=1}^{N} g(y_n \mid x_{1:n}) \, f(x_n \mid x_{1:n-1}) \tag{9}$$

In this manner, any model with a generative process that can be written in C code with stochastic choices can be represented in this sequential form in the space of program execution traces.

Each observe statement takes as its argument
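The operating system primitives of Section 2.1 (fork with copy-on-write, a small shared memory segment, and a barrier built from a mutex plus a synchronized counter) can be sketched in plain POSIX C. This is an illustrative sketch under stated assumptions, not the paper's implementation: `shared_state`, `fork_report`, `barrier_wait`, `run_forked_children` and `MAX_CHILDREN` are hypothetical names, the barrier is single-use, and a process-shared condition variable is added so that waiting processes sleep rather than spin. `MAP_ANONYMOUS` is a common extension rather than strict POSIX, and older toolchains may need `-pthread` when compiling.

```c
#define _DEFAULT_SOURCE          /* exposes MAP_ANONYMOUS on glibc */
#include <pthread.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#ifndef MAP_ANONYMOUS
#define MAP_ANONYMOUS MAP_ANON
#endif

#define MAX_CHILDREN 64          /* hypothetical upper bound for this sketch */

/* Hypothetical shared segment: placed in MAP_SHARED memory, so every forked
   process sees the same lock, counter and result slots. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  all_arrived;
    int arrived;                 /* synchronized counter */
    int expected;                /* number of processes the barrier waits for */
    int seen[MAX_CHILDREN];      /* one slot written by each child */
} shared_state;

typedef struct {
    int finished;                /* children that exited with status 0 */
    int parent_private;          /* parent's private variable after the run */
    int shared_sum;              /* sum of values children wrote to shared memory */
} fork_report;

/* Single-use barrier: block until `expected` processes have arrived. */
static void barrier_wait(shared_state *s) {
    pthread_mutex_lock(&s->lock);
    s->arrived++;
    if (s->arrived >= s->expected)
        pthread_cond_broadcast(&s->all_arrived);
    while (s->arrived < s->expected)
        pthread_cond_wait(&s->all_arrived, &s->lock);
    pthread_mutex_unlock(&s->lock);
}

fork_report run_forked_children(int n) {
    fork_report r = { 0, 0, 0 };
    if (n < 1 || n > MAX_CHILDREN) return r;

    /* Anonymous shared mappings start zero-filled. */
    shared_state *s = mmap(NULL, sizeof *s, PROT_READ | PROT_WRITE,
                           MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (s == MAP_FAILED) return r;

    /* The lock and condition variable must be marked process-shared. */
    pthread_mutexattr_t ma;
    pthread_condattr_t ca;
    pthread_mutexattr_init(&ma);
    pthread_mutexattr_setpshared(&ma, PTHREAD_PROCESS_SHARED);
    pthread_mutex_init(&s->lock, &ma);
    pthread_mutexattr_destroy(&ma);
    pthread_condattr_init(&ca);
    pthread_condattr_setpshared(&ca, PTHREAD_PROCESS_SHARED);
    pthread_cond_init(&s->all_arrived, &ca);
    pthread_condattr_destroy(&ca);
    s->expected = n;

    int private_value = 0;       /* ordinary memory: copy-on-write after fork */
    int forked = 0;
    for (int i = 0; i < n; i++) {
        pid_t pid = fork();
        if (pid < 0) {           /* fork failed: release children already waiting */
            pthread_mutex_lock(&s->lock);
            s->expected = forked;
            pthread_cond_broadcast(&s->all_arrived);
            pthread_mutex_unlock(&s->lock);
            break;
        }
        if (pid == 0) {          /* child process */
            private_value = i + 1;        /* modifies only the child's copy */
            s->seen[i] = private_value;   /* shared page: visible to the parent */
            barrier_wait(s);              /* wait for all siblings */
            _exit(0);
        }
        forked++;
    }

    for (int i = 0; i < forked; i++) {
        int status;
        if (wait(&status) > 0 && WIFEXITED(status) && WEXITSTATUS(status) == 0)
            r.finished++;
    }
    for (int i = 0; i < n; i++) r.shared_sum += s->seen[i];
    r.parent_private = private_value;

    pthread_cond_destroy(&s->all_arrived);
    pthread_mutex_destroy(&s->lock);
    munmap(s, sizeof *s);
    return r;
}
```

Calling `run_forked_children(4)` from a `main` function should report four finished children, while `parent_private` stays at its initial value because each child's write landed in its own copy of the page; only the values written through the shared segment reach the parent.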
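As a worked restatement of equation (9), and not something stated in this excerpt, taking logarithms turns the product over observe statements into a sum, and the case $N = 2$ can be written out explicitly. The conditioning set $x_{1:0}$ is empty, so the first factor reduces to $f(x_1)$. In Figure 3 the argument passed to observe is `normal_lnp(...)`; reading that name as a log-density is an assumption here, but it would correspond to one $\log g$ term in the sum.

```latex
\log p(y_{1:N}, x_{1:N})
  = \sum_{n=1}^{N} \Bigl[ \log g(y_n \mid x_{1:n}) + \log f(x_n \mid x_{1:n-1}) \Bigr]

% Worked case N = 2:
p(y_{1:2}, x_{1:2})
  = f(x_1) \, g(y_1 \mid x_1) \, f(x_2 \mid x_1) \, g(y_2 \mid x_{1:2})
```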

S-ar putea să vă placă și

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (400)

- Asset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 3Document1 paginăAsset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 3raqibappÎncă nu există evaluări

- Simple Portfolio Optimization That WorksDocument164 paginiSimple Portfolio Optimization That WorksraqibappÎncă nu există evaluări

- Asset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 4Document1 paginăAsset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 4raqibappÎncă nu există evaluări

- Asset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged)Document1 paginăAsset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged)raqibappÎncă nu există evaluări

- Asset-V1 NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 01 IntroductionDocument16 paginiAsset-V1 NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 01 IntroductionraqibappÎncă nu există evaluări

- Asset-V1 NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 02 Overview of Technical AnalysisDocument6 paginiAsset-V1 NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 02 Overview of Technical AnalysisraqibappÎncă nu există evaluări

- Asset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 2Document1 paginăAsset-V1 - NYIF+ITA - PC1x+2T2020+type@asset+block@Mod1 - 03 - Why - Technical - Analysis - Works (Dragged) 2raqibappÎncă nu există evaluări

- Simple Portfolio Optimization That WorksDocument164 paginiSimple Portfolio Optimization That WorksraqibappÎncă nu există evaluări

- A Novel Hybrid Quicksort Algorithm Vectorized Using AVX-512 On Intel Skylake - 2017 (Paper - 44-A - Novel - Hybrid - Quicksort - Algorithm - Vectorized)Document9 paginiA Novel Hybrid Quicksort Algorithm Vectorized Using AVX-512 On Intel Skylake - 2017 (Paper - 44-A - Novel - Hybrid - Quicksort - Algorithm - Vectorized)raqibappÎncă nu există evaluări

- Headerless, Quoteless, But Not Hopeless? Using Pairwise Email Classification To Disentangle Email ThreadsDocument9 paginiHeaderless, Quoteless, But Not Hopeless? Using Pairwise Email Classification To Disentangle Email ThreadsraqibappÎncă nu există evaluări

- Bringing Back Structure To Free Text Email Conversations With Recurrent Neural NetworksDocument12 paginiBringing Back Structure To Free Text Email Conversations With Recurrent Neural NetworksraqibappÎncă nu există evaluări

- SalesDocument1 paginăSalesraqibappÎncă nu există evaluări

- Hek PDFDocument1 paginăHek PDFraqibappÎncă nu există evaluări

- Design Patterns PDFDocument76 paginiDesign Patterns PDFfluptÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 8Document1 paginăA Little Journey Inside Windows Memory (Dragged) 8raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 6Document1 paginăA Little Journey Inside Windows Memory (Dragged) 6raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 9Document1 paginăA Little Journey Inside Windows Memory (Dragged) 9raqibappÎncă nu există evaluări

- A NUMA API For Linux - Novell (2005) (Dragged) 2Document1 paginăA NUMA API For Linux - Novell (2005) (Dragged) 2raqibappÎncă nu există evaluări

- A NUMA API For Linux - Novell (2005) (Dragged)Document1 paginăA NUMA API For Linux - Novell (2005) (Dragged)raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 7Document1 paginăA Little Journey Inside Windows Memory (Dragged) 7raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 3Document1 paginăA Little Journey Inside Windows Memory (Dragged) 3raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 5Document1 paginăA Little Journey Inside Windows Memory (Dragged) 5raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged) 4Document1 paginăA Little Journey Inside Windows Memory (Dragged) 4raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged)Document1 paginăA Little Journey Inside Windows Memory (Dragged)raqibappÎncă nu există evaluări

- A Compilation Target For Probabilistic Programming Languages - 2014 (Paige14) (Dragged)Document1 paginăA Compilation Target For Probabilistic Programming Languages - 2014 (Paige14) (Dragged)raqibappÎncă nu există evaluări

- A Little Journey Inside Windows Memory (Dragged)Document1 paginăA Little Journey Inside Windows Memory (Dragged)raqibappÎncă nu există evaluări

- A Comprehensive Study of Main-Memory Partitioning and Its Application To Large-Scale Comparison - and Radix-Sort (Sigmod14i) (Dragged) 2 PDFDocument1 paginăA Comprehensive Study of Main-Memory Partitioning and Its Application To Large-Scale Comparison - and Radix-Sort (Sigmod14i) (Dragged) 2 PDFraqibappÎncă nu există evaluări

- Introducing OpenAIDocument3 paginiIntroducing OpenAIraqibappÎncă nu există evaluări

- A Comprehensive Study of Main-Memory Partitioning and Its Application To Large-Scale Comparison - and Radix-Sort (Sigmod14i) (Dragged) PDFDocument1 paginăA Comprehensive Study of Main-Memory Partitioning and Its Application To Large-Scale Comparison - and Radix-Sort (Sigmod14i) (Dragged) PDFraqibappÎncă nu există evaluări

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Commands PDFDocument261 paginiCommands PDFSenan Alkaaby100% (1)

- Trendnet: 8-Port Stackable Rack Mount KVM Switch With OsdDocument2 paginiTrendnet: 8-Port Stackable Rack Mount KVM Switch With OsdAlmir HoraÎncă nu există evaluări

- Farlinx Mini x.25 Gateway DatasheetDocument6 paginiFarlinx Mini x.25 Gateway Datasheetbeto0206Încă nu există evaluări

- 407-1 KeepItSimple PDFDocument22 pagini407-1 KeepItSimple PDFHernan Soberon100% (1)

- SSD - DicasDocument15 paginiSSD - DicasAnonymous CPQKqCVPP1Încă nu există evaluări

- DO Deploying Basic QoSDocument66 paginiDO Deploying Basic QoSGesang BasusenaÎncă nu există evaluări

- Assignment 1 ReportDocument19 paginiAssignment 1 ReportJyiou YimushiÎncă nu există evaluări

- National Locksmith - Oct 2005Document101 paginiNational Locksmith - Oct 2005Benjamin DoverÎncă nu există evaluări

- A4Z01200PH63Document31 paginiA4Z01200PH63Sridharan VenkatÎncă nu există evaluări

- Ghi DiaDocument8 paginiGhi Diaapi-3758127Încă nu există evaluări

- Surges Happen!: How To Protect The Appliances in Your HomeDocument25 paginiSurges Happen!: How To Protect The Appliances in Your Home2zeceÎncă nu există evaluări

- Log 1507548350Document80 paginiLog 1507548350MimiÎncă nu există evaluări

- 800D Industrial EnginesDocument104 pagini800D Industrial EnginesMACHINERY101GEARÎncă nu există evaluări

- FDOTMicroStationEssentialsPart IDocument214 paginiFDOTMicroStationEssentialsPart ITrader RedÎncă nu există evaluări

- VNR i3SYNC ENGDocument10 paginiVNR i3SYNC ENGVANERUM Group - Vision InspiresÎncă nu există evaluări

- PW160-7K S 0411Document890 paginiPW160-7K S 0411ado_22100% (2)

- H&K Tube - FactorDocument10 paginiH&K Tube - FactorToMÎncă nu există evaluări

- Inspire 1 (DJI), Scout X4 (Walkera), Voyager 3 (Walkera)Document15 paginiInspire 1 (DJI), Scout X4 (Walkera), Voyager 3 (Walkera)Quads For FunÎncă nu există evaluări

- Sentron: Technological Leader Amongst The Circuit-Breakers: SENTRON CommunicationDocument186 paginiSentron: Technological Leader Amongst The Circuit-Breakers: SENTRON Communicationsatelite54Încă nu există evaluări

- Engineers Mini NotebookDocument80 paginiEngineers Mini NotebookvibhutepmÎncă nu există evaluări

- 5.11.1) Operation and Requirements: 5.11) Audio player/MP3 PlayerDocument10 pagini5.11.1) Operation and Requirements: 5.11) Audio player/MP3 PlayerManikandan AnnamalaiÎncă nu există evaluări

- Introduction To HP Load Runner Getting Familiar With Load Runner 4046Document28 paginiIntroduction To HP Load Runner Getting Familiar With Load Runner 4046DurgaCharan KanaparthyÎncă nu există evaluări

- FfmpegDocument44 paginiFfmpegKrishnadevsinh GohilÎncă nu există evaluări

- IC PackagesDocument17 paginiIC PackagesS.V.SubrahmanyamÎncă nu există evaluări

- IkegamiDocument4 paginiIkegamialelendoÎncă nu există evaluări

- OpenStack DocumentationDocument48 paginiOpenStack DocumentationSubramanyaÎncă nu există evaluări

- Ict Gadgets Gadget Basic Functions Image Brand Model Date of ReleaseDocument4 paginiIct Gadgets Gadget Basic Functions Image Brand Model Date of ReleaseMark Erwin SalduaÎncă nu există evaluări

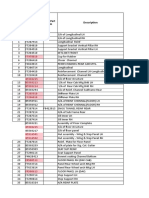

- Spare Parts MsDocument62 paginiSpare Parts MsEngineering EntertainmentÎncă nu există evaluări

- CMS Cables AceleracionDocument20 paginiCMS Cables AceleracionMarcel BaqueÎncă nu există evaluări

- Solidworks Mastercam Add in InstallationDocument11 paginiSolidworks Mastercam Add in InstallationShulhanuddin NasutionÎncă nu există evaluări