M. Augusta Angel et al. / International Journal of New Technologies in Science and Engineering, Vol. 5, Issue 7, 2018, ISSN 2349-0780

A Computer Vision Based V-Dressing Room Application Using Web Camera

M. Augusta Angel (1), N. Augustia (2), A.J. Immanuel Gem Isaac (3)

(1), (2), (3) Assistant Professor, V V College of Engineering, Arasoor, India.

Email: augusangel@gmail.com, augustia93@gmail.com, gem318@gmail.com

ABSTRACT - The search for safe and secure online shopping leads to the development of a virtual environment for clothes try-on. This study proposes a real-time virtual dressing room application that enables users to try virtual garments in front of a virtual mirror. The user's hand movements select garments from a list on the screen. The chosen virtual garments then appear on the user in the virtual mirror (web camera). To align the 3D garments with the model, the 3D locations of the joints are used for positioning, scaling and rotating. Skin color detection algorithms are also used to handle the unwanted occlusions between the user and the model. Radio frequency identification (RFID) is used as an electronic prepaid card to pay the price of the dress.

Keywords: Radio frequency identification (RFID), Computer Vision, OpenCV, Augmented Reality, Human-Computer Interaction.

I. INTRODUCTION

OpenCV was designed for computational efficiency, with a strong focus on real-time applications. Written in optimized C/C++, the library can take advantage of multi-core processing. One of OpenCV's goals is to provide an easy-to-use computer vision infrastructure that helps people build fairly sophisticated vision applications quickly. OpenCV is aimed at providing the basic tools needed to solve computer vision problems.

In this paper, a virtual dressing room application using OpenCV is introduced. Trying on clothes in clothing stores is usually a time-consuming activity. Moreover, it is not possible at all in cases such as online shopping. By creating a virtual dressing room environment, we can increase time efficiency and improve the accessibility of clothes try-on. The problem is essentially the alignment of the user and the cloth models with accurate position, scale, rotation and ordering. Detection of the user and the body parts is one of the main steps of the problem. In the literature, several approaches have been proposed for body part detection, skeletal tracking and posture estimation. Extraction of the user allows us to create an augmented reality environment by isolating the user area from the video stream and superimposing it onto a virtual environment in the user interface.

Radio frequency identification (RFID) describes a system that transmits the identity of an object or person (in the form of a unique serial number) wirelessly, using radio waves. It is grouped under the broad category of automatic identification technologies. Here, RFID is used as a prepaid electronic card from which the price of the dress can be paid.

II. DESIGN METHODOLOGY

The ultimate aim of this paper is to increase time efficiency and improve the accessibility of clothes try-on by creating a virtual dressing room environment. In the existing system, trying on clothes involves superimposing the clothes on a photo frame of the person. This may not satisfy the customer completely, because the clothes they choose may not fit them.

Available online @ www.ijntse.com

The proposed approach is mainly based on extraction of the user from the video stream,

alignment of models and skin color detection. The 3D locations of the joints are used for

positioning, scaling and rotation in order to align the 2D cloth models with the user.
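As an illustration of the skin color detection step, the sketch below classifies pixels by thresholding their chrominance in the YCbCr color space. The Cb range [77, 127] and Cr range [133, 173] are the values reported by Chai and Ngan for their skin-color map (IEEE Transactions on Circuits and Systems for Video Technology, 1999). The paper does not state which color space or thresholds the authors used, so these values, the BT.601 conversion and the function names are assumptions.

```python
def rgb_to_cbcr(r, g, b):
    """Convert 8-bit RGB to (Cb, Cr) with the ITU-R BT.601 studio-range formula."""
    cb = 128 + (-37.797 * r - 74.203 * g + 112.0 * b) / 255.0
    cr = 128 + (112.0 * r - 93.786 * g - 18.214 * b) / 255.0
    return cb, cr


def is_skin(r, g, b, cb_range=(77, 127), cr_range=(133, 173)):
    """Return True if the pixel's chrominance falls inside the assumed skin ranges."""
    cb, cr = rgb_to_cbcr(r, g, b)
    return cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]


def skin_mask(image):
    """Map an image (rows of (r, g, b) tuples) to a binary mask (rows of 0/1)."""
    return [[1 if is_skin(*pixel) else 0 for pixel in row] for row in image]


# Tiny illustrative image: one skin-like pixel, one blue, one white.
image = [[(224, 172, 140), (0, 0, 255), (255, 255, 255)]]
print(skin_mask(image))
```

A mask of this kind could be used to draw the user's hands and arms over the garment wherever they occlude it, which is one plausible reading of how occlusions are handled.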

In this approach, the Viola-Jones algorithm, a widely used method for real-time object detection, is used for face detection. Its training is slow, but detection is very fast. Segmentation is done using the Graph Cut algorithm, and fitting, scaling and translation are done using a morphological algorithm. The user interface allows the user to choose a T-shirt by making a hand movement towards it.
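The speed of Viola-Jones detection comes largely from the integral image, which lets the sum of any rectangle of pixels be read with four lookups, so each Haar-like feature costs a constant number of operations. The sketch below shows only this building block on a tiny grayscale grid. It is not a full detector (the cascade of boosted classifiers is omitted), and the function names are illustrative.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) summed-area table with a zero first row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: right half minus left half."""
    half = w // 2
    left = rect_sum(ii, x, y, half, h)
    right = rect_sum(ii, x + half, y, half, h)
    return right - left


# 4x4 patch with a dark left half (10) and a bright right half (200).
patch = [[10, 10, 200, 200] for _ in range(4)]
ii = integral_image(patch)
print(rect_sum(ii, 0, 0, 4, 4))          # sum of the whole patch: 1680
print(two_rect_feature(ii, 0, 0, 4, 4))  # strong vertical edge response: 1520
```

A large feature value signals a dark-to-bright transition inside the window. The detector combines many such features, evaluated at several positions and scales, to decide whether a window contains a face.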

Figure 1 Block diagram of the proposed system

Figure 2 Design Methodology

The block diagram of the proposed system is shown in Figure 1. Figure 2 depicts the steps involved in the design methodology. The input video is captured using a web camera and is passed to the PC through a USB port.

Figure 3 Schematic block diagram

The PC has the OpenCV library pre-installed, which carries out specific tasks such as face detection. After the dress has been selected, the process of 'fitting' is carried out between the captured video and the mask image using criteria such as translation, rotation and scaling. In this approach, the Viola-Jones algorithm is used for face detection. Segmentation is done using the Graph Cut algorithm, and fitting, scaling and translation are done using a morphological algorithm.
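The paper does not spell out how the morphological operations are applied. A common use, assumed here, is to clean up the binary segmentation mask: erosion removes isolated noise pixels and a following dilation restores the eroded boundary (together, an opening). The sketch below uses a 3x3 square structuring element on a small mask stored as nested lists; OpenCV provides erode and dilate functions for the same purpose on real images.

```python
def _morph(mask, combine):
    """Apply `combine` (min or max) over each pixel's 3x3 neighbourhood."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Assumption: pixels outside the image count as background (0).
            window = [
                mask[ny][nx] if 0 <= ny < h and 0 <= nx < w else 0
                for ny in range(y - 1, y + 2)
                for nx in range(x - 1, x + 2)
            ]
            out[y][x] = combine(window)
    return out


def erode(mask):
    """A pixel stays 1 only if its whole 3x3 neighbourhood is 1."""
    return _morph(mask, min)


def dilate(mask):
    """A pixel becomes 1 if any pixel in its 3x3 neighbourhood is 1."""
    return _morph(mask, max)


def opening(mask):
    """Erosion followed by dilation: removes specks smaller than the element."""
    return dilate(erode(mask))


# A 3x3 foreground block plus one isolated noise pixel at row 2, column 5.
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
for row in opening(mask):
    print(row)
```

After the opening, the 3x3 block is intact and the isolated pixel is gone.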

The PC is connected to the microcontroller through a UART, which provides serial communication between the PC and the controller. The information about the selected dress is sent from the PC to the controller over this UART. The controller then sends the price of the selected dress to the LCD for display.
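The paper does not give the format of the message sent over the UART. As a purely hypothetical illustration, the sketch below frames a dress code as a start byte, a length byte, the ASCII payload and an XOR checksum, and shows the matching check a receiver could perform. The start byte value, the frame layout, the example dress code and the function names are all assumptions.

```python
START = 0x02  # hypothetical start-of-frame marker (ASCII STX)


def xor_checksum(data: bytes) -> int:
    """XOR of all bytes; a simple integrity check for short serial frames."""
    checksum = 0
    for byte in data:
        checksum ^= byte
    return checksum


def encode_frame(dress_code: str) -> bytes:
    """Build START | length | payload | checksum for an ASCII dress code."""
    payload = dress_code.encode("ascii")
    if len(payload) > 255:
        raise ValueError("payload too long for a one-byte length field")
    return bytes([START, len(payload)]) + payload + bytes([xor_checksum(payload)])


def decode_frame(frame: bytes) -> str:
    """Validate a frame and return its dress code, or raise ValueError."""
    if len(frame) < 3 or frame[0] != START:
        raise ValueError("bad start byte")
    length = frame[1]
    payload = frame[2:2 + length]
    trailer = frame[2 + length:]
    if len(payload) != length or len(trailer) != 1:
        raise ValueError("bad frame length")
    if trailer[0] != xor_checksum(payload):
        raise ValueError("checksum mismatch")
    return payload.decode("ascii")


frame = encode_frame("TSHIRT07")  # "TSHIRT07" is an invented dress code
print(frame.hex())
print(decode_frame(frame))
```

On the PC side, bytes like these could be written to the serial port with a library such as pySerial; the controller would run the equivalent of decode_frame before looking up the price.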

The barcode information of the selected dress is sent through the UART to Port 3 of the Atmel AT89S52 microcontroller. The controller then displays the price of the dress on the LCD through Port 2. The RFID module is connected to Port 0 of the microcontroller. It comprises a reader and a tag, with which the customer can pay the displayed amount.
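The payment step can be pictured as a price lookup followed by a balance check. The sketch below is a hypothetical model of that logic in Python, not the authors' microcontroller firmware: the price table, tag identifier and balance are invented for illustration.

```python
PRICES = {"TSHIRT07": 499}     # hypothetical barcode -> price
BALANCES = {"TAG-0001": 1200}  # hypothetical RFID tag -> prepaid balance


def pay(tag_id, barcode, prices=PRICES, balances=BALANCES):
    """Deduct the dress price from the prepaid card and return the new balance."""
    if barcode not in prices:
        raise KeyError(f"unknown dress barcode: {barcode}")
    if tag_id not in balances:
        raise KeyError(f"unknown RFID tag: {tag_id}")
    price = prices[barcode]
    if balances[tag_id] < price:
        # Leave the balance untouched when the card cannot cover the price.
        raise ValueError("insufficient balance on prepaid card")
    balances[tag_id] -= price
    return balances[tag_id]


print(pay("TAG-0001", "TSHIRT07"))  # 1200 - 499 = 701
```

Rejecting the transaction before touching the balance keeps the card state consistent if the payment fails.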

The voltage applied to the controller must lie between 0 V (VSS) and 5 V (VDD). The RFID module is supplied with 5 V to energize the coil and generate the electromagnetic field.

ALGORITHM

Step 1: Start
Step 2: Face and body detection using the Viola-Jones algorithm. This algorithm is implemented in OpenCV as cvHaarDetectObjects()
Step 3: Segmentation
Step 4: Fitting using translation, rotation and scaling
Step 5: Morphological operations (dilation and erosion)
Step 6: Final output video
Step 7: Dress barcode information is sent to the microcontroller through the UART
Step 8: The cost is displayed on the LCD
Step 9: The cost is paid using RFID
Step 10: Stop
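The fitting step (translation, rotation and scaling) can be sketched as estimating a similarity transform that maps two anchor points on the garment image onto the corresponding joints of the user. The choice of the two shoulder points as anchors, and all names and coordinates below, are assumptions made for illustration.

```python
import math


def similarity_from_shoulders(g_left, g_right, u_left, u_right):
    """Return (scale, angle, (tx, ty)) mapping garment shoulders onto user shoulders."""
    gx, gy = g_right[0] - g_left[0], g_right[1] - g_left[1]
    ux, uy = u_right[0] - u_left[0], u_right[1] - u_left[1]
    g_len = math.hypot(gx, gy)
    if g_len == 0:
        raise ValueError("garment anchor points must be distinct")
    scale = math.hypot(ux, uy) / g_len
    angle = math.atan2(uy, ux) - math.atan2(gy, gx)
    a, b = scale * math.cos(angle), scale * math.sin(angle)
    # Choose the translation so that the left garment shoulder lands on the user's.
    tx = u_left[0] - (a * g_left[0] - b * g_left[1])
    ty = u_left[1] - (b * g_left[0] + a * g_left[1])
    return scale, angle, (tx, ty)


def apply_similarity(point, scale, angle, translation):
    """Scale, rotate and then translate a 2D point."""
    a, b = scale * math.cos(angle), scale * math.sin(angle)
    x, y = point
    return (a * x - b * y + translation[0], b * x + a * y + translation[1])


# Garment shoulders 100 px apart; user shoulders 200 px apart, no tilt.
scale, angle, t = similarity_from_shoulders((50, 40), (150, 40), (200, 120), (400, 120))
print(scale, angle, t)
print(apply_similarity((150, 40), scale, angle, t))
```

Every pixel of the garment image would be warped with the same transform, so the garment follows the user's shoulder width and tilt from frame to frame.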

III. RESULT AND DISCUSSIONS

The proposed system requires only a front image of each product to superimpose it onto the user, and the 2D graphics of the product appear satisfactory and practical for many uses.

Figure 4 Experimental model

The experiments resulted in acceptable performance for regular postures. Figure 4 shows the experimental results, where the dress is superimposed on the user.

There are many possible implementations regarding the model used for fitting. It is

possible to apply a homographic transformation to the images rather than the simple scale-rotate

technique in order to match multiple joints altogether although it would require more

computation. Another alternative could be using many pictures at different angles so that it

would be possible to create more realistic video streams. One could achieve a similar effect using

3D models and rendering them according to the current angle and positions.
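To make the comparison concrete: a homography is a 3x3 matrix with eight degrees of freedom applied in homogeneous coordinates, against four for a scale-rotate-translate model. This is why it can match more joints at once but costs more to estimate and apply. The sketch below only applies a given matrix to a point. The matrix values are arbitrary, and estimating the matrix from joint correspondences (for example with OpenCV's findHomography) is left out.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography given as nested lists."""
    x, y = point
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to return to pixel coordinates.
    return (xh / w, yh / w)


# A scale-and-translate transform is the special case with bottom row (0, 0, 1).
H_similarity = [[2.0, 0.0, 100.0], [0.0, 2.0, 40.0], [0.0, 0.0, 1.0]]
print(apply_homography(H_similarity, (150, 40)))

# A non-zero entry in the bottom row adds perspective foreshortening.
H_perspective = [[1.0, 0.0, 10.0], [0.0, 1.0, 20.0], [0.001, 0.0, 1.0]]
print(apply_homography(H_perspective, (100, 50)))
```

The per-point division is the extra work relative to the simple scale-rotate technique, and it is also what allows the garment image to be foreshortened when the user turns.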

Application

• Benefits to the customer
• Better opportunities for creative expression and experimentation with clothing style
• Reduced proportion of returned items
• Increased opportunities for customization

IV. CONCLUSION

To make online shopping more comfortable, this paper proposes a methodology by which users can select a dress online and see it superimposed on themselves. The proposed system uses several efficient algorithms to extract the user from the video stream. It also supports payment through RFID.

Available online @ www.ijntse.com 26

S-ar putea să vă placă și

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (400)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- Annex A - Scope of WorkDocument4 paginiAnnex A - Scope of Workمهيب سعيد الشميريÎncă nu există evaluări

- Marine-Derived Biomaterials For Tissue Engineering ApplicationsDocument553 paginiMarine-Derived Biomaterials For Tissue Engineering ApplicationsDobby ElfoÎncă nu există evaluări

- 5000-5020 en PDFDocument10 pagini5000-5020 en PDFRodrigo SandovalÎncă nu există evaluări

- Issue15 - Chirag JiyaniDocument6 paginiIssue15 - Chirag JiyaniDipankar SâháÎncă nu există evaluări

- Tutorial 3 MFRS8 Q PDFDocument3 paginiTutorial 3 MFRS8 Q PDFKelvin LeongÎncă nu există evaluări

- Alto Hotel Melbourne GreenDocument2 paginiAlto Hotel Melbourne GreenShubham GuptaÎncă nu există evaluări

- Performance Evaluation of The KVM Hypervisor Running On Arm-Based Single-Board ComputersDocument18 paginiPerformance Evaluation of The KVM Hypervisor Running On Arm-Based Single-Board ComputersAIRCC - IJCNCÎncă nu există evaluări

- Turnbull CV OnlineDocument7 paginiTurnbull CV Onlineapi-294951257Încă nu există evaluări

- Studies - Number and Algebra P1Document45 paginiStudies - Number and Algebra P1nathan.kimÎncă nu există evaluări

- Distillation ColumnDocument22 paginiDistillation Columndiyar cheÎncă nu există evaluări

- DILG Opinion-Sanggunian Employees Disbursements, Sign Checks & Travel OrderDocument2 paginiDILG Opinion-Sanggunian Employees Disbursements, Sign Checks & Travel OrderCrizalde de DiosÎncă nu există evaluări

- Altos Easystore Users ManualDocument169 paginiAltos Easystore Users ManualSebÎncă nu există evaluări

- NJEX 7300G: Pole MountedDocument130 paginiNJEX 7300G: Pole MountedJorge Luis MartinezÎncă nu există evaluări

- NCP - DMDocument4 paginiNCP - DMMonica Garcia88% (8)

- Papalia Welcome Asl 1 Guidelines 1 1Document14 paginiPapalia Welcome Asl 1 Guidelines 1 1api-403316973Încă nu există evaluări

- E-Waste: Name: Nishant.V.Naik Class: F.Y.Btech (Civil) Div: VII SR - No: 18 Roll No: A050136Document11 paginiE-Waste: Name: Nishant.V.Naik Class: F.Y.Btech (Civil) Div: VII SR - No: 18 Roll No: A050136Nishant NaikÎncă nu există evaluări

- Chapter 10 OutlineDocument3 paginiChapter 10 OutlineFerrari75% (4)

- Microbiological Quality Ice CreamDocument9 paginiMicrobiological Quality Ice CreamocortezlariosÎncă nu există evaluări

- Oral Com Reviewer 1ST QuarterDocument10 paginiOral Com Reviewer 1ST QuarterRaian PaderesuÎncă nu există evaluări

- Motion To Dismiss Guidry Trademark Infringement ClaimDocument23 paginiMotion To Dismiss Guidry Trademark Infringement ClaimDaniel BallardÎncă nu există evaluări

- Under Suitable Conditions, Butane, C: © OCR 2022. You May Photocopy ThisDocument13 paginiUnder Suitable Conditions, Butane, C: © OCR 2022. You May Photocopy ThisMahmud RahmanÎncă nu există evaluări

- SAP HCM Case StudyDocument17 paginiSAP HCM Case StudyRafidaFatimatuzzahraÎncă nu există evaluări

- Ducati WiringDocument7 paginiDucati WiringRyan LeisÎncă nu există evaluări

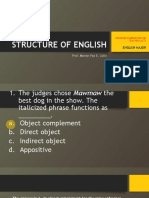

- LET-English-Structure of English-ExamDocument57 paginiLET-English-Structure of English-ExamMarian Paz E Callo80% (5)

- Group 4&5 Activity Syntax AnalyzerDocument6 paginiGroup 4&5 Activity Syntax AnalyzerJuan PransiskoÎncă nu există evaluări

- Teal Motor Co. Vs CFIDocument6 paginiTeal Motor Co. Vs CFIJL A H-DimaculanganÎncă nu există evaluări

- Dashboard - Reveal Math, Grade 4 - McGraw HillDocument1 paginăDashboard - Reveal Math, Grade 4 - McGraw HillTijjani ShehuÎncă nu există evaluări

- Team 6 - Journal Article - FinalDocument8 paginiTeam 6 - Journal Article - FinalAngela Christine DensingÎncă nu există evaluări

- Ziarek - The Force of ArtDocument233 paginiZiarek - The Force of ArtVero MenaÎncă nu există evaluări

- Offshore Training Matriz Matriz de Treinamentos OffshoreDocument2 paginiOffshore Training Matriz Matriz de Treinamentos OffshorecamiladiasmanoelÎncă nu există evaluări