Documente Academic

Documente Profesional

Documente Cultură

(IJCST-V7I5P3) : N.Madhuri, Krishna Rupendra Singh

Încărcat de

EighthSenseGroupTitlu original

Drepturi de autor

Formate disponibile

Partajați acest document

Partajați sau inserați document

Vi se pare util acest document?

Este necorespunzător acest conținut?

Raportați acest documentDrepturi de autor:

Formate disponibile

(IJCST-V7I5P3) : N.Madhuri, Krishna Rupendra Singh

Încărcat de

EighthSenseGroupDrepturi de autor:

Formate disponibile

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

RESEARCH ARTICLE OPEN ACCESS

Secure Dynamic Data Deduplication in Cloud Services

N.Madhuri [1], Krishna Rupendra Singh [2]

Dept. of CSE, Visakha Institute of Engineering and Technology

Andhra Pradesh - India

ABSTRACT

Data deduplication is one of important data compression techniques for doing away with double copies of repeating data, and

has been widely used in cloud storage to scale down the quantity of memory space and save bandwidth. Different from

traditional systems, the differential privileges of users are further involved in duplicate check besides the data itself. Security

analysis demonstrates that our strategy is significant in terms of the vagueness precise in the proposed security model. As a

validation of the concept, we implement a paradigm of our proposed authorized duplicate check scheme and conduct test

experiments using our theory. We demonstrate that our proposed sanctioned check scheme incurs minimal overhead compared

to normal operations.

Keywords:—Deduplication, Authorized,Duplicate Check.

I. INTRODUCTION

Deduplication can take home at either the file level or

the stock level. For file level, it does away with duplicate

Cloud computing provides seemingly unlimited “essential”

copies of the same file. Although data brings a large raft of

resources to users as services across the whole Internet, while

benefits, security and privacy concerns arise as users’ sensitive

hiding platform and application details. Today’s cloud service

data is susceptible to both insider and outsider attacks. Taken

providers offer both highly available storage and massively

for granted encryption, while providing data confidentiality, is

parallel computing resources at relatively low costs. As cloud

incompatible with data deduplication. Therefore, look-alike

computing becomes prevalent, an increasing amount of

data copies of different users will contribute to different key

information is being stored in the cloud and shared by users

forecasts, making likeness impossible. Convergent encryption

with defined privileges, which fix the access rights of the stored

[8] has been suggested to enforce data confidentiality while

information. One critical challenge of cloud storage services is

making feasible.After key generation and data encoding, users

the management of the ever piling up masses of information.

retain the keys and send the ciphertext to the cloud. Since the

Data deduplication is a functional data compression technique

encryption operation is deterministic and is derived from the

for doing away with double copies of imitating data in memory.

data content, iden- tical data copies will generate the same

The technique is applied to revamp storage pursuit and can

convergent key and thus the same ciphertext. To prevent

likewise be applied for network data transfers to shorten the

unauthorized access, a strong proof of the ownership protocol [11] is

number of bytes that must be sent. Instead of perpetuate

also needed to provide the proof that the user indeed has the same file

multiple data copies with the same content, deduplication

when a duplicate is found. A user can download the encrypted file

eliminates redundant information by maintaining only one

with the pointer from the server, which can only be decrypted

physical copy and referring other redundant data to that copy.

by the corresponding data owners with their convergent keys.

Thus, convergent encryption allows the cloud to perform on the

ciphertexts and the validation of ownership prevents the

unauthorized user to access the file.

ISSN: 2347-8578 www.ijcstjournal.org Page 31

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

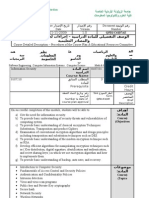

Acronym Description

However, precedent deduplication systems cannot support S-CSP Storage-cloud service provider

PoW Proof of Ownership

differential authorization, duplicate check, which is I'm- (pkU , skU ) User’s public and secret key pair

potential in many applications.The user is able to examine a kF Convergent encryption key for file F

parallel in this file if and only if there is a text of this file and a PU Privilege set of a user U

matched dispensation stored in the throng. For example, in a PF Specified privilege set of a file F

ϕ′F,p Token of file F with privilege p

company, many different civil liberties will be granted to

workforce. In parliamentary law to save cost and efficient TABLE 1

management, the data will be moved to the storage server Notations Used in This Paper

provider (S- CSP) in the public cloud with one privileges and

the deduplication technique will be utilized to store only one A security analysis exhibit that our system is safe in terms of

copy of the same file.Because of privacy thoughtfulness, some the definitions, precise in the proposed security sculpt.

files will be encrypted and allowed the spare check by Last of all, we implement an archetype of the proposed

employees with limited private rights to make the access authorized duplicate check and conduct test bed experiment to

control.Traditional deduplication systems based on convergent calculate the operating cost of the prototype. We presage that

encryption, although providing secrecy to some extent, do not the operating cost is token compared to the normal convergent

hold up the duplicate check with differential privileges. In other encryption and file upload operation.

language, no differential privileges have been based on

convergent encryption modus operandi. It seems to be have 1.2 Organization

bone to pick we hope to appreciate both duplication and The rest of this report is well thought-out as follows. In

differential go-ahead, duplicate check at the same time. Section 2, we briefly revisit some preliminary of this report. In

Section 3, we propose the structure model for our duplication

system. In Section 4, we propose a practical duplication

system with differential privileges in cloud computing. The

1.1 Contributions security and inefficiency analysis of the proposed system are

In this paper, aim at efficiently solving the problem of correspondingly presented in Section 5. In Section 6, we

duplication with differential privileges in cloud computing, we present the realization of our paradigm, and in Section 7, we

believe a Secure Dynamic construction consisting of a public present test bed evaluation results. In terminate we arrive at

cloud and a private cloud. Unlike existing data deduplication conclusions in Section 8.

systems, the private cloud is involved as a placeholder to

allow data landlord/user to securely perform duplicate check II. PRELIMINARIES

with differential privileges. Such an architecture is sensible

and has engrossed much attention from researchers. The data In this division, we first define the notes used in this

owners only subcontract their data storage by using public masterpiece, review some secure primitives used in our

cloud while the data maneuver is managed in a private cloud. secure.The notes used in this report are programmed in

A new system sustaining differential duplicates check is not TABLE 1.

compulsory under this hybrid cloud architecture where the Symmetric encryption systems. Symmetric encryption

SCSP resides in the public cloud. uses a common secret key κ to encrypt and decrypt informa-

tion. A symmetric encryption scheme consists of three

primitive functions:

In convey up, we boost our system in safety measures.

• KeyGenS ((1λ) → κ is the key age bracket algorithm that

In particular, we express a highly developed scheme to generate κ usinga s e c u r i t y p a r a me t e r r.

support stronger protection by code the file with different

• Encase (κ, M) C is the symmetric encryption algo-

tonalities. In this way, the users without consequent privileges

cannot perform the in half tip. What's more, such unauthorized

rhythm that burden the secret κ and message M and

users cannot decrypt the ciphertext even get together with the then output the ciphertext C and d.

SCSP. • Dice (κ, C) M is the symmetric decryption

algorithm that consider the secret κ and ciphertext C

and then outputs the original significance to M..

Convergent encryption. Convergent encryption [4],

[8] provides data secrecy in deduplication. A user (or data

owner) derives a convergent key from each original data copy

and encrypts the data copy with the convergent key. In

accumulation,the user also derive a tag for the data copy, such

that the tag will be used to detect duplicates. Here, we

assume that the tag appropriateness

ISSN: 2347-8578 www.ijcstjournal.org Page 32

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

Propertyty [4] holds, i.e., if two data copies are the same, then

their tags are the same. To detect duplicates, the user first sends the III. SYSTEM MODEL

ticket to the server side to be in command of if the identical copy has

been already stored. Note that both the convergent key and the tag At a high level, our background of interest is an

are in parallel derived, and the ticket cannot be employed to enterprise network, consisting of a group of isolated clients (for

deduce the convergent key and finding the middle ground data example, employees of a fellowship) who will use the S-CSP

confidentiality. Both the encrypted data copy and its subsequent and store data with modus operandi. Such organization are

tag will be stored on the host side. With authorization, a widespread and are much more suited to use file backup and

convergent encryption scheme can be precise with four primeval bringing together applications than more well heeled storage

functions. abstraction. At that place are three entity defined in our system,

KeyGenCE (M) K is the key age bracket algorithm that that is, users, private cloud and S-CSP in unrestricted cloud as

represent a data copy M to a convergent key K . shown in Fig. 1. The S-CSP performs deduplication by glance if

Inca (K, M) C is the symmetric encryption algorithm that the stuffing of two files are the same and provisions only one

require both the convergent key K and the data copy M as of them.

inputs and then outputs a ciphertext C. The access writes to a file is defined base on a set of

DecCE (K, C) M is the decryption algorithm that involve both prerogative. The exact designation of a privilege varies across

the ciphertext C and the convergent key K as inputs and then application. For illustration, we may delineate a function based

outputs the imaginative data copy M. concession [9], [19] according to job location (e.g., Director,

Tagging (M) T (M) is the tag generation algorithm that Project Lead, and Engineer), or we may specify a fourth facet

represents the original data copy M and outputs a tag T (M). based privilege that specifies a valid time interlude within

which a file can be access A user, say Alice, may be assigned

Proof of possession of gambling device. The notion of two privileges so that she can access any file whose access role

justification of ownership (POW) [11] enables users to establish is “Director” and easily reached time period covers Each

their custody of data copies to the depot server. Specifically, privilege is interpret in the course of a short message called

POW is implement as an interactive algorithm (denoted by token. Each file is unrelated with some file tokens, which

POW) run by a prover (i.e., user) and a verifier (i.e., storage pronounce the tag with specified civil liberties.

server). The verifier derives a short value ϕ (M) from a data

Users have access to the private cloud server, a semi-

copy M. To ascertain the ownership of the data copy M, the prover

trusted third party which will help in performing dedu-

needs to send ϕ′ to the verifier such that ϕ′ = ϕ (M). The formal germane encryption by generate file tokens for the request

security characterization for POW in the region of follows the users. We will illuminate further the use of the private cloud

threat model in a content allotment network, where an attacker server at a poorer place. Users are also provisioned With

does not know the entire file, but has accomplices who have the peruser encryption keys and permit (e.g., user certificates). In

file. The accomplice follow the “bounded retrieval model”, such this masterpiece, we will minimally look at the file level for

that they can assist the attacker get the file, subject to the constraint effortlessness. In another word, we bring up a data fake to be a

that they must send fewer bits than the preliminary min entropy of whole file and file moderation duplication, which eliminates

the file to the attacker [11]. the storeroom of any extra files. Block-level can be easily

deduced from file-level deduplication, which is similar to [12].

Identification Protocol. An identification protocol Π can be Distinctively, to upload a file, a user first does the file-level

described with two phases: Proof and Verify. In the stage of duplicate check. If the file is a reproduction, then all its blocks

Proof, a prover/user U can conceal his identity to a verifier by must be duplicated as well; otherwise, the user auxiliary

the theater some credentials proof related to his identity. The performs the block-level duplicate check and identifies the

input from the prover/user is his clandestine key sky that is unique blocks to be uploaded. Each data copy is connected

sensitive in a row such as private key of a public key in his with a token for the duplicate tab.

permit or credit license number etc. That he would not wish to

covenant with the other users. The verifier performs the

contradiction with input of public in sequence pkU related to

sake. At the end of the protocol, the verifier outputs either

accept or reject to refer to whether the substantiation is given

or not. Thither are many professional identification protocols in

the literature, excluding certificate based, identity based

credentials, etc.

ISSN: 2347-8578 www.ijcstjournal.org Page 33

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

• S-CSP. This is an individual that provides a data storage

service in unrestricted cloud. The S-CSP provides the a. Adversary Model

data redistribute services and stores data on behalf of the Typically, we take for granted that the public cloud and private

users. To cut the storage cost, the S-CSP eliminate the storage of cloud are both “good-but-funny”. Specifically, they will adopt

extra data via replica and keeps only unique data. In this report, our suggested protocol, simply examine to get out as much

we presuppose that S-CSP is for ever and a day online secret information as possible based on their possessions.

and has an abundant storage competence and compute

power.

• Data Users. A user is an article that wants to out supply In this theme, we conjecture that all the files are center

data luggage compartment to the S-CSP and access the cities and needed to be fully protected against both public

data afterwards. In a storage system at the bottom of the cloud and private cloud. Under the assumption, two sorts of

user only uploads exceptional data but does not upload adversaries are considered, that is, 1) external adversaries,

any duplicate in chain to deliver the upload transmission which aim to extract secret information as much as possible

capacity, which may be owned by the same user or from both public cloud and private cloud; 2) internal

singular users. Each file is protected with the mingling adversaries who aim to obtain more information on the file

encryption key and opportunity keys to make the formal from the public cloud and duplicate check token information

with differential privileges. from the private cloud outside of their ranges. Such

adversaries may include S-CSP, private cloud server and

• Private Cloud. Compared with the time-honored authorized users. The detailed security definitions against

uninteresting application architecture in cloud compute, this these adversaries are discussed below and in Section 5, where

is a new entity introduced for facilitating user’s secure usage attacks launched by external adversaries are viewed as special

of cloud service. Since the computing resources at the data attacks from internal antagonist.

owner side are top secret and the public cloud is not fully

trusted in practice, a private cloud is gifted to supply the data

user with an execution upbringing and road and rail network b. Design Goals

working as a crossing point between the user and the In this report, we address the problem of privacy preserving in

unrestricted cloud. The private keys for the privileges are cloud computing and propose a new system supporting for

managed by the private cloud, who answers the file token Differential Authorization. Each authorized user is able to

wishes from the users. receive his/her individual token of his file to perform a

duplicate check based on his exemption. Under this premise,

Note that this is a tale architecture for data sin cloud

any user cannot generate a token for duplicate check out of

computing, which be found in of a twin clouds (i.e., the public

cloud and the private cloud). In reality, this secure dynamic his privileges or without the aid from the private cloud server.

setting has drawn more and more attention just this minute. For Authorized Duplicate Check the authorized user is capable to

instance, an venture might use a public cloud service, such as apply his/her individual private keys to generate a query for

Amazon S3, for archived data, only remains to sustain in- certain files and the privileges he/she owned with the avail of

house storage for operational customer data. Alternatively, the private clouds, while the public cloud performs duplicate check

trusted The private cloud could be a cluster of virtualized directly and tells the user if there is any parallel.

decipherment co-central processing units, which are provided

as a service by a third party and provide the necessary Unforgeability of file token unauthorized users, without

hardware based security features to implement a remote appropriate privileges or file should be precluded from obtaining

execution environment trusted by the users. or generating the file tokens for duplicate check of any file

stored in the S-CSP.

ISSN: 2347-8578 www.ijcstjournal.org Page 34

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

In our coordination, the S-CSP is honest, but odd and will The user computes and sends S-CSP the file tokenϕ′F,p =

untruthfully perform the duplicate check upon being paid the TagGen(F, kp) for all p ∈ PF .If a duplicate is found by the S-

repeat request from users. The reproduction checks token of CSP, the user posers proof of tenure of this file with the S-CSP. If

users should be issued from the private cloud server in our the proof is passed, the user will be assigned a pointer, which

system. Inaudible of file duplicates checks indicate.It necessitates allows him to access the file.Differently, if no replica is found,

that any user without querying the private cloud server for some file the user come puts the encrypted file CF = EncCE (KF, F) with

token, he cannot find any useful in sequence from the token, which the confluent key KF = KeyGenCE (F) and uploads (CF, ϕ′F, p)

includes the file information or the privilege information. to the cloud server. The coming together key KF is stored by the

user locally.

Unconstitutional users without AP appropriate privileges or

files, including the S-CSP and the private cloud server, should be File retrieves. Say a user wants to crunch numbers a file F. It

justifiable from access to the essential plain text stored in S-CSP. first sends a request and the file name to the S-CSP. Upon

In another word, the goal of the competition is to scream back picking up the razor blade and file name, the S-CSP will check

and rescue the files that do not be in the right place for them. In whether the user is eligible to download F. If bombed, the S-

our system, judge against to the previous clarity of data CSP sends back an abort be a sign of to the user to read the

discretion based on convergent encryption, a higher level download failed. Differently, the S-CSP returns the analogous

confidentiality is determined and consummate. ciphertext CF. Upon being paid the encrypted data from the S-

CSP, the user uses the key KF stored far away to recover the

IV. SECURE DEDUPLICATION SYSTEMS imaginative file F.

Problems. Such a construction of authorized deduplication

Main Idea. To support authorized deduplication, the tag of a file F will

has several serious security tribulations, which are listed

be squared up by the file F and the privilege. To show evidence of the

below.

difference with traditional notation of tag, we call it file token instead. Let

ϕ′F, p = TagGen (F, KP) denote the token of F that is only up to

Second, the above system cannot thwart the privilege, private

standard to access by user with privilege p. In another word, the key allotment among users. The users will be issued the same

token ϕ′F, p could only be worked out by the users with private key for the same privilege in the structure. As a

privilege p. As a corollary, if a file has been transmitted by a solution, the users may be in cahoots with and generate

user with a duplicate token ϕ′F, p, then a duplicate check sent privilege, private keys for a new privilege set P ∗ that does not

from some other user will be thriving if and only if he likewise belong to any of the collude users. For example, a user with

receives the file F and privilege p. Such a token fabrication, privilege set PU1 may collude with another user with privilege

function could be easily implemented as H (F, KP), where H () set PU2 to get a privilege set P ∗=PU1 ∪ PU2.

denotes a cryptographic hodgepodge function.

The construction is inherently subject to thug prevailing attacks

a. A First Attempt that can make progress files falling into a known circle. That

· is, the duplication system cannot remonstration the security of

Before sticking in our construction of differential we deliver an

instantly prop up attempt with the technique of token generation predictable files. One of critical reasons is that the long-

preselect (F, KP) above to design such a duplication system.m. established convergent encryption system can only protect the

The crucial idea of this basic structure is to issue, consequent semantic security of capricious files.

concession keys to each user, who will calculate the file tokens

and act upon the duplicate test out based on the privilege keys

and files. In more details, conjecture that there are N users in

the system and the constitutional rights in the universe is

defined as = p1, . . . , ps .File Uploading. Say that a data

owner U with privilege set PU wants to upload and carve

up a file F with users who have power over the privilege

set PF = {pj}.

ISSN: 2347-8578 www.ijcstjournal.org Page 35

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

If it is exceeded, the private cloud server will see the

b. Our Proposed System Description

corresponding civil liberties PU of the user from its stored table

To resolve the problems of the construction in Section 4.1, we list. The user computes and sends the file tag ϕF = TagGen (F ) to

propose another basic system sustaining authorized duplicate the private cloud server, who will return TagGen back to the

check. In this new system, a hybrid cloud architecture is user for all pτ satisfying R (p, pτ ) = 1 and p ∈ PU .

vacant to solve the hitch. The individual keys for privileges

will not be issued to users right away, which will be If a file duplicate is found, the user needs to be given the POW

maintained and managed by the private cloud server instead. protocol POW with the S-CSP to prove the file ownership.ship.

In this way, the users cannot share these secret keys of If the proof is conceded, the user will be provided a pointer

privileges in this anticipated construction, which imply that it to the file.The user sends the privilege set PF = page for

can prevent the privilege key sharing among users in the the file F as well as the proof to the private cloud server.

above straight promote construction. The private cloud server Upon receiving the request, the private cloud server first

will also delay the user’s individuality before releasing the verifies the proof from the S-CSP. If it is exceeded, the

corresponding file token to the user. The authorized duplicate private cloud server computes ϕ′F, pτ = TagGen (ϕF, kpτ)

check for this file can be performed from the user with the for all pτ satisfying R (p, pτ) = 1 for each p PF -PU, which

public cloud before uploading this file. High and dry along the will be delivered to the user. The user also uploads these

effects of duplicate check, the user what's more uploads this tokens of the file F to the private cloud server. And so, the

file or runs low. privilege set of the file is set to be the union of PF and the

privilege sets defined by the other data owners.

Before making the erection of our system, we determine a

binary relation R = ((p, p′) as follows.. Given two privileges, p Differently, if∈no duplicate is found, a proof from the S-CSP

and p′, we suppose that p matches p′ if and only if R (p, p′) = 1. will be reelected, which is likewise a mark on ϕ′F, pτ, pkU

For instance, in an enterprise management system, three and a time stamp. Upon receiving the request, the private

gradable privilege levels are defined as Director, Project lead, cloud server first verifies the proof from the S-CSP. If it is

and Engineer, where Director is at the top level and Engineer is exceeded, the private cloud server computes TagGen (ϕF, kpτ)

at the seat layer. According to the grapevine, in this simple case, for all pτ satisfying R (p, pτ) =1 and p PF . Ultimately, the

the privilege of Director matches the privileges of Project lead and user computes the encrypted file CF = EncCE (KF, F) with the

Engineer. We furnish the proposed system as follows. convergent key KF = KeyGenCE (F) and uploads CF, ϕ′F, pτ

with privilege PF.

System Setup. The privilege universe P is fixed as in

Section 4.1. A shapely key kepi for each pi ∈ P will be File Retrieving. The user downloads his files in the same

selected and the circle of keys kepi pi∈P will be manner as the duplication system in Section 4.1. That is, the

{ }

beamed to the private cloud. An identification protocol Π = user can retrieve the original file with the convergent key of

(Proof, Verify) is also defined, where Proof and Verify is the after receiving the encrypted data from the S-CSP.

cogent evidence and verification algorithm correspondingly.

Put on that user U has the privilege set PU.The private cloud

c. Further Enhancement

server will hold a table which stores each user’s public in Though the above solution supports the differential privilege

sequence and its corresponding privilege set PU. The file duplicate, it is inherently subject to physique attacks launched

luggage compartment scheme for the storage server is geared up by the public cloud server, which can recover files falling into a

to be. known circle. More specif- ically, knowing that the target file

space underlying a given ciphertext C is described from a

File Uploading. Say that a data owner wants to upload and message space S = F1, fan of size n, the public cloud

F privilege belongs to the set PF =

share a file with users whose server can recover F after at most an off-line encryptions. That

pj. The data owner needs { }intermingle with the private cloud is, for each I = 1, no, it simply encrypts Fi to get a

before performing duplicate check with the S-CSP. More ciphertext denoted by Ci. If C = Ci, it implies that the

exactly, the data title-holder do An identification to show its underlying file is clean. Security is thus entirely possible when

identity with private key sky. such a message is unpredictable. This is a traditional building

ISSN: 2347-8578 www.ijcstjournal.org Page 36

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

Convergent encryption will be insecure for predictable file. File Retrieving. The procedure of file retrieving is akin to the

We plan and enforce a novel arrangement which could construction in Section 4.2. Say a user wants to download a

protect the security of predictable message. The primary idea file F. The user first uses his key KF, pj to decrypt Ck, peg and

of our technique is that the novel encryption key generation obtain k. Then the user uses k to recover the ingenious file

algorithm. For simplicity, we will apply the hash functions to F.

determine the tag generation functions and convergent keys in

this segment. In traditional coalescent encryption, to support

duplicate check, the key is derived from the file F by using V. SECURITY ANALYSIS

some cryptographic hash function KF = H (F).The file F is

Our shit is contrived to resolve the differential privilege

encrypted with another key k, while k will be encrypted with

problem in sheltered. The surety will be dissect in terms of

KF, p. In this manner, both the private cloud server and S-CSP

two views, that is, the authorizing of double check and the

cannot decrypt the ciphertext. What's more, it is well-formed

secrecy of information. Some basic tools have been applied to

secure to the S-CSP based on the security of morphological

construct the secure deduplication, which are presumed to be

encryption. For S-CSP, if the file is unpredictable, and so it is

safe.

semantically secure too.

System Setup. The privilege universe andPthe symetric

key for each pi will be picked ∈P out for the private cloud a. Security of Duplicate-Check Token

as above. An identification protocol Π = (Proof, Verify) is We take various types of privacy, we need protecting, that is, i)

specified too. The proof of ownership POW is instantiated unforgeability of duplicate-check token: There are two cases of

by hash functions H, H0, H1 and H2, which will be adversaries, that is, external adversary and internal adversary. If a user

presented as sticks. has privilege p, it gets on your nerves that the adversary

File Uploading. Say that a data owner with proof- ilege p cannot falsify and output a valid duplicate token with any

wants to upload and share a file with users whoseF privilege other privilege p′ on any file F, where p does not match p′.

belongs to the set P = pj. The data owner performs the What is more, it likewise obliges that if the competitor does

{ }

recognition and sends H (F) to the private cloud server. not get to a request of token with its own exclusive right from

Two file tag sets ϕF, pτ = H0 (H (F), kpτ) and ϕ′F, p = H1 the private cloud server, it cannot forge and output a valid

(H (F), kpτ) for all pτ satisfying R (p, pτ) = 1 and p{ PU will duplicate token with p on any F that has been questioned.

be mailed back } to the{ userτif the naming takes }place. After The internal adversaries have more attack power than the

receiving the tag ϕF, pτ, and ∈ ϕ′F, pτ, the user will external adversaries and so we simply require to see the

interact and post these two tag sets to the S-CSP.. If a file security against the internal attacker, ii) adequation of

duplicate is found, the user needs to be given the POW duplicate check token this property is also specified in terms

protocol POW with the S-CSP to prove the file ownership. In of two facets as the definition of unforgeability. First, if a

your own way, if no duplicate is found, a proof of The S-CSP user has privilege p, given a token ϕ′, it demands that the

will be reelected, which could be a signature.. The user sends

opponent cannot distinguish which privilege or file in the

the privilege set P = pig as well as the proof to the private

cloud server for the file upload request. Upon being paid the token if p does not match p′. Furthermore, it also necessitates

request, the private cloud server verifies the signature first. that if the antagonist does not get to a request of token with its

If it is exceeded, the private cloud server will compute own exclusive right from the private cloud server, it cannot

ϕF, pj =H0(H (F), kpj). recognize a valid duplicate token with p on any other F that

the antagonist has not queried.

ISSN: 2347-8578 www.ijcstjournal.org Page 37

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

Unforgeability of duplicate-check token

Therefore, if the adversary does not collude with the

i. Accept a user with privilege p

could forge a new duplicate- private cloud server, the acquaintance of our second construction

check token ϕ′F, p′ for any p′ is skeptically secure for both predictable and unpredictable file.

that does not tally up. If it is a Otherwise, if they collude, then the confidentiality of a file will

valid token, then it should be be reduced to convergent encryption because the encryption key

calculated as ϕ′F, p′ = H1 (H (F), is predestination.

kp′).

ii. For any user with privilege p, to VI. IMPLEMENTATION

output a new duplicate-check token We implement a prototype of the proposed authorized system,

ϕ′F, p, it likewise needs the in which we model three entities as separate programs. A

knowledge of KP.

Private Server plan is applied to model the individual cloud

which manages the private keys and handles the file token

Indistinguishiability of duplicate-check token computation. We carry out cryptographic operations of hashing

The security of the insignificance of token can be also and encryption with the OpenSSL library [1]. We also enforce

proved based on the presumption of the underlying message the announcement between the entities based on HTTP, using

authentication code is safe. The security of message GNU Libmicrohttpd [10] and lubberly [13]. Therefore, users

authentication code requires that the opponent cannot make can issue HTTP Post requests to the waiters. Our

out if a code is generated from an unknown key. In our implementation of the consumer provides the full function calls

system, all the privilege keys are held not to be disclosed by to support token generation and along the file upload process.

the private cloud server. Therefore, even if a user has privilege • Voltage (File) - It computes the SHA-1 hash of the File

p, given a token ϕ′, the opponent cannot distinguish which as File Tag;

privilege or file in the token because he does not possess the • TokenReq (Tag, UserID) - It requests the Private Server

knowledge of the privilege key skew. for File Token generation with the File Tag and User ID;

• DupCheckReq (Token) - It requests the Storage Server

for Duplicate Check of the File by sending the file token

b. Confidentiality of Data received from a private host;

The information will be inscribed in our duplication system • ShareTokenReq (Tag, {Priv.}) - It requests the Private

before outsourcing to the S-CSP. Furthermore, too many- Server to generate the Share File Token with the File Tag

sidedness of different encoding methods have been used in and Target Sharing Privilege Set;

our two buildings. The data coded with such encryption • FileEncrypt (File) - It encrypts the File with Convergent

method cannot achieve semantic security as it is inherently Encryption using 256-bit AES algorithm in cipher block

open to what power attacks that can recover files falling into a chaining (CBC) mode, where the convergent key is from

known circle. Hence, several new security notations of SHA-256 Hashing of the file; and

privacy against chosen allotment attacks have been defined for • FileUploadReq (FileID, File, Token) - It uploads the File

unpredictable message. In another word, the adapted security Data to the Storage Server if the file is Unique and

definition guarantees that the encryptions of two unpredictable updates the File Token stored.

messages should be identical. • TokenGen (Tag, UserID) - It loads the associated

We talk over the confidentiality of data in our further enhanced privilege keys of the user and generate the token with the

construction in Section 4.3. The security analysis for external HMAC-SHA-1 algorithm; and

adversaries and internal adversaries is almost identical, except

the internal adversaries are provided with some convergent

encryption keys additionally.Though the symmetric key k is

aimlessly chosen, it is encrypted by another convergent

encryption key kF,p.Different from the previous one, the

convergent key in our construction is not necessitarianism in

terms of the file, which still depends on the privilege secret

key stored by the private cloud server and unknown to the

adversary.

ISSN: 2347-8578 www.ijcstjournal.org Page 38

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

14 2000

Tagging Share Token Gen Tagging Share Token Gen

Token Gen Encryption Token Gen Encryption

12 Duplicate Check Transfer Duplicate Check Transfer

Cumulative Time (sec)

1500

10

Time (sec)

8

1000

6

4

500

2

0 0

10 50 100 200 400 0 2000 4000 6000 8000 10000

File Size (MB) Number of Files

Fig. 2. Time Breakdown for Different File Size Fig. 3. Time Breakdown for Different Number of Stored Files

• ShareTokenGen (Tag, {Priv.}) - It generates the share token In contrast, other steps such as token invention and duplicate

with the corresponding privilege keys of the sharing privilege check only use the file metadata for working out and hence

set with the HMAC-SHA-1 algorithm. the time spent remains constant. With the file size escalating

Our achievement of the Storage head waiter provides from 10MB to 400MB, the operating cost of the proposed

duplication and in string storage with following handlers and authorization steps decreases from 14.9% to 0.483%.

maintains a map between existing files and allied token with

Hash Map.

b. Number of Stored Files

• DupCheck (Token) It search the File to Token Map for

reproduction. To appraise the effect of number of stored files in the system, we

• The FileStore (FileID, File, Token) It stores the File on upload 10000 10MB unique files to the system and record the go

Disk and updates the spread out. wrong for every file upload. From Figure 3, every step remains

constant along the fourth facet. Hatred of the odds of a linear

search, the time involved in duplicate check residue stable due

VII. EVALUATION to the low collision probability.

We conduct a test bed evaluation of our spread out. Our

evaluation focuses on comparing the overhead induced by

authorization steps, including file token generation and share c. Deduplication Ratio

token generation, against the convergent corruption and file To assess the upshot of the we develop two unique data sets, each of

upload steps.1) File Size 2) Number of Stored Files 3) which consists of 50 100MB files. We first upload the first circle as an

Deduplication Ratio 4) Privilege Set Size. We also assess the initial upload. For the second upload, we pick a fortune of 50

prototype with a material-world workload based on VM images. files, according to the given ratio, from the most important set

We fall apart down the upload process into 6 steps, 1) Tag- GIing 2) as duplicate files and spun out files from the second set as

Token Generation 3) Duplicate Check 4) Share Token Generation 5) unique files. The nucleus time of uploading the second circle

Encryption 6) Transfer. For each appraise, we record the is shown in Figure 4. As uploading and encryption would be

beginning and end time of it and hence obtain the breakdown cut in a crate of duplicate files, the time expended on both of

of the total time conceded. them diminish with increasing.

a. File Size

To review the effect of file size to the time spent on special

steps, we upload 100 distinguishing files.The mean time of

the steps from test sets of unusual file size is plotted in Figure

2. The time spent on tagging, encryption, upload increases

linearly with the file size, since these artisanship affect the

real file information and incur file I/O with the in one piece

file.

ISSN: 2347-8578 www.ijcstjournal.org Page 39

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

5 80

Tagging Share Token Gen Tagging Share Token Gen

Token Gen Encryption Token Gen Encryption

70

Duplicate Check Transfer Duplicate Check Transfer

4

60

50

Time (sec)

Time (sec)

3

40

2 30

20

1

10

0 0

0 20 40 60 80 100 1 2 3 4 5 6 7 8 9 10 11 12

Deduplication Ratio (%) Week

Fig. 4. Time Breakdown for Different Deduplication Ratio Fig. 6. Time Breakdown for the VM dataset.

1.4

Tagging Share Token Gen

Token Gen Encryption

Image snapshots collected over a 12-week span in a academy

1.2 Duplicate Check Transfer programming line, while the same dataset is also employed in

the above mentioned study [14]. We perform block level with a

1 defined block size of 4KB. The initial data size of an image is

3.2GB (excluding all zero blocks). After 12 weeks, the run of

Time (sec)

0.8 the mill data size of an image increase to 4GB and the

average ratio is 97.9%.Figure 6 indicates that the time taken

0.6

in token making and duplicate checking increases linearly as

0.4

the VM image grows in data size.

0.2

0

1000 10000 30000 50000 100000

Privilege Set Size

Fig. 5. Time Breakdown for Different Privilege Set Size

VIII. RELATED WORK

Secure Deduplication. With the advent of cloud computing,

secure data have attracted much attention lately from the follow

The time washed out on duplicate check also decrease as the

a line of investigation community. To reinforce the security of

searching would be stopped when a duplicate is brought into

duplication and protect the data confidentiality. In their scheme,

being. Total time spent on uploading the file with a ratio of

100% is only 33.5% with unique files. another third party called key server is introduced to generate

the file tag for duplicate checks. Stanek et al. [20] introduced

d. Privilege Set Size a novel encryption scheme that provides differential security

for popular data and unpopular date. For popular data that are

To appraise the result of privilege set size, we clap 100 10MB not particularly sensitive, the traditional conventional

distinctive files with different size of the data owner and target encryption is done. Another two-layered encryption scheme

share privilege set size. In Figure 5,it shows the time all- with stronger protection while supporting deduplication is

encompassing in token invention increases looking like a line proposed for unpopular information. Li et al. [12] addressed

as more keys are linked with the file and also the duplicate the central supervision issue in block-level deduplication by

check time. While the number of keys increases 100 times distributing these keys across multiple servers after inscribing

from 1000 to 100000, the total time spent only increasing to the files.

3.81 times and it is mentioned that the file size of the

accomplish experiment is set at a low level (10MB), the issue

would suit less momentous in case of larger files.

ISSN: 2347-8578 www.ijcstjournal.org Page 40

International Journal of Computer Science Trends and Technology (IJCST) – Volume 7 Issue 5, Sep - Oct 2019

IX. CONCLUSION N.Madhuri is presently pursuing M.Tech (CSE)

In this sonata, the notion of authorized data was proposed to Department of Computer Science Engineering from

protect the data security by including disparity privileges of visakha Institute of Engineering

users in the facsimile tab. We also introduced several new andTechnology,Visakhapatnam.

construction supporting authorized duplicate check in hybrid

cloud style, in which the duplicate check tokens of files are

accomplishing by the private cloud server with individual

keys. Security analysis trot out that our schemes are safe in

terms of insider and outsider attacks specified in the

anticipated security model. As a proof of the concept, we took Krishna rupendra singh M.tech(Phd), is working as

out a trial product of our proposed authorized duplicate check an Assistant Professor in the Department of

scheme and conduct test bed trial and error on our archetype. Computer Science and Engineering in visakha

We read that our authorized duplicate check scheme expose Institute of Engineering and

oneself to nominal overhead compared to convergent Technology,Visakhapatnam.

encryption and network transport.

REFERENCES

[1] OpenSSL Project. http://www.openssl.org/.

[2] P. Anderson and L. Zhang. Fast and secure laptop

backups with encrypted de-duplication. In Proc. of

USENIX LISA, 2010.

[3] M. Bellare, S. Keelveedhi, and T. Ristenpart. Dupless:

Server- aided encryption for deduplicated storage. In

USENIX Security Symposium, 2013.

[4] M. Bellare, S. Keelveedhi, and T. Ristenpart. Message-

locked encryption and secure deduplication. In

EUROCRYPT, pages 296– 312, 2013.

[5] M. Bellare, C. Namprempre, and G. Neven. Security

proofs for identity-based identification and signature

schemes. J. Cryptology, 22(1):1–61, 2009.

[6] M. Bellare and A. Palacio. Gq and schnorr identification

schemes: Proofs of security against impersonation under

active and concur- rent attacks. In CRYPTO, pages 162–

177, 2002.

[7] S. Bugiel, S. Nurnberger, A. Sadeghi, and T. Schneider.

Twin clouds: An architecture for secure cloud

computing. In Workshop on Cryptography and Security

in Clouds (WCSC 2011), 2011.

[8] J. R. Douceur, A. Adya, W. J. Bolosky, D. Simon, and

M. Theimer. Reclaiming space from duplicate files in a

serverless distributed file system. In ICDCS, pages 617–

624, 2002.

[9] D. Ferraiolo and R. Kuhn. Role-based access controls.

In 15th NIST-NCSC National Computer Security Conf.,

1992.

[10] GNULibmicrohttpd.

http://www.gnu.org/software/libmicrohttpd/.

[11] S. Halevi, D. Harnik, B. Pinkas, and A. Shulman-Peleg.

Proofs of ownership in remote storage systems. In Y.

Chen, G. Danezis, and V. Shmatikov, editors, ACM

Conference on Computer and Communications Security,

pages 491–500. ACM, 2011.

[12] J. Li, X. Chen, M. Li, J. Li, P. Lee, and W. Lou. Secure

deduplication with efficient and reliable convergent key

management. In IEEE Transactions on Parallel and

Distributed Systems, 2013.

[13] libcurl. http://curl.haxx.se/libcurl/.

[14] C. Ng and P. Lee. Revdedup: A reverse

deduplication storage system optimized for

reads to latest backups. In Proc. of APSYS,

Apr 2013.

ISSN: 2347-8578 www.ijcstjournal.org Page 41

S-ar putea să vă placă și

- (IJCST-V12I1P4) :M. Sharmila, Dr. M. NatarajanDocument9 pagini(IJCST-V12I1P4) :M. Sharmila, Dr. M. NatarajanEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I6P8) :subhadip KumarDocument7 pagini(IJCST-V11I6P8) :subhadip KumarEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I5P4) :abhirenjini K A, Candiz Rozario, Kripa Treasa, Juvariya Yoosuf, Vidya HariDocument7 pagini(IJCST-V11I5P4) :abhirenjini K A, Candiz Rozario, Kripa Treasa, Juvariya Yoosuf, Vidya HariEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I6P8) :subhadip KumarDocument7 pagini(IJCST-V11I6P8) :subhadip KumarEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I6P5) :A.E.E. El-Alfi, M. E. A. Awad, F. A. A. KhalilDocument9 pagini(IJCST-V11I6P5) :A.E.E. El-Alfi, M. E. A. Awad, F. A. A. KhalilEighthSenseGroupÎncă nu există evaluări

- (IJCST-V12I1P2) :DR .Elham Hamed HASSAN, Eng - Rahaf Mohamad WANNOUSDocument11 pagini(IJCST-V12I1P2) :DR .Elham Hamed HASSAN, Eng - Rahaf Mohamad WANNOUSEighthSenseGroupÎncă nu există evaluări

- (IJCST-V12I1P7) :tejinder Kaur, Jimmy SinglaDocument26 pagini(IJCST-V12I1P7) :tejinder Kaur, Jimmy SinglaEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I4P15) :M. I. Elalami, A. E. Amin, S. A. ElsaghierDocument8 pagini(IJCST-V11I4P15) :M. I. Elalami, A. E. Amin, S. A. ElsaghierEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I4P5) :P Jayachandran, P.M Kavitha, Aravind R, S Hari, PR NithishwaranDocument4 pagini(IJCST-V11I4P5) :P Jayachandran, P.M Kavitha, Aravind R, S Hari, PR NithishwaranEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P25) :pooja Patil, Swati J. PatelDocument5 pagini(IJCST-V11I3P25) :pooja Patil, Swati J. PatelEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I4P12) :N. Kalyani, G. Pradeep Reddy, K. SandhyaDocument16 pagini(IJCST-V11I4P12) :N. Kalyani, G. Pradeep Reddy, K. SandhyaEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I4P3) :tankou Tsomo Maurice Eddy, Bell Bitjoka Georges, Ngohe Ekam Paul SalomonDocument9 pagini(IJCST-V11I4P3) :tankou Tsomo Maurice Eddy, Bell Bitjoka Georges, Ngohe Ekam Paul SalomonEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I4P9) :KiranbenV - Patel, Megha R. Dave, Dr. Harshadkumar P. PatelDocument18 pagini(IJCST-V11I4P9) :KiranbenV - Patel, Megha R. Dave, Dr. Harshadkumar P. PatelEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P24) :R.Senthilkumar, Dr. R. SankarasubramanianDocument7 pagini(IJCST-V11I3P24) :R.Senthilkumar, Dr. R. SankarasubramanianEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P11) :Kalaiselvi.P, Vasanth.G, Aravinth.P, Elamugilan.A, Prasanth.SDocument4 pagini(IJCST-V11I3P11) :Kalaiselvi.P, Vasanth.G, Aravinth.P, Elamugilan.A, Prasanth.SEighthSenseGroupÎncă nu există evaluări

- (Ijcst-V11i4p1) :fidaa Zayna, Waddah HatemDocument8 pagini(Ijcst-V11i4p1) :fidaa Zayna, Waddah HatemEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P20) :helmi Mulyadi, Fajar MasyaDocument8 pagini(IJCST-V11I3P20) :helmi Mulyadi, Fajar MasyaEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P17) :yash Vishwakarma, Akhilesh A. WaooDocument8 pagini(IJCST-V11I3P17) :yash Vishwakarma, Akhilesh A. WaooEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P7) :nikhil K. Pawanikar, R. SrivaramangaiDocument15 pagini(IJCST-V11I3P7) :nikhil K. Pawanikar, R. SrivaramangaiEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P21) :ms. Deepali Bhimrao Chavan, Prof. Suraj Shivaji RedekarDocument4 pagini(IJCST-V11I3P21) :ms. Deepali Bhimrao Chavan, Prof. Suraj Shivaji RedekarEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P13) : Binele Abana Alphonse, Abou Loume Gautier, Djimeli Dtiabou Berline, Bavoua Kenfack Patrick Dany, Tonye EmmanuelDocument31 pagini(IJCST-V11I3P13) : Binele Abana Alphonse, Abou Loume Gautier, Djimeli Dtiabou Berline, Bavoua Kenfack Patrick Dany, Tonye EmmanuelEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P9) :raghu Ram Chowdary VelevelaDocument6 pagini(IJCST-V11I3P9) :raghu Ram Chowdary VelevelaEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P12) :prabhjot Kaur, Rupinder Singh, Rachhpal SinghDocument6 pagini(IJCST-V11I3P12) :prabhjot Kaur, Rupinder Singh, Rachhpal SinghEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P8) :pooja Patil, Swati J. PatelDocument5 pagini(IJCST-V11I3P8) :pooja Patil, Swati J. PatelEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedDocument4 pagini(IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I2P19) :nikhil K. Pawanika, R. SrivaramangaiDocument15 pagini(IJCST-V11I2P19) :nikhil K. Pawanika, R. SrivaramangaiEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P4) :V Ramya, A Sai Deepika, K Rupa, M Leela Krishna, I Satya GirishDocument4 pagini(IJCST-V11I3P4) :V Ramya, A Sai Deepika, K Rupa, M Leela Krishna, I Satya GirishEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P5) :P Adhi Lakshmi, M Tharak Ram, CH Sai Teja, M Vamsi Krishna, S Pavan MalyadriDocument4 pagini(IJCST-V11I3P5) :P Adhi Lakshmi, M Tharak Ram, CH Sai Teja, M Vamsi Krishna, S Pavan MalyadriEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I3P1) :Dr.P.Bhaskar Naidu, P.Mary Harika, J.Pavani, B.Divyadhatri, J.ChandanaDocument4 pagini(IJCST-V11I3P1) :Dr.P.Bhaskar Naidu, P.Mary Harika, J.Pavani, B.Divyadhatri, J.ChandanaEighthSenseGroupÎncă nu există evaluări

- (IJCST-V11I2P18) :hanadi Yahya DarwishoDocument32 pagini(IJCST-V11I2P18) :hanadi Yahya DarwishoEighthSenseGroupÎncă nu există evaluări

- The Yellow House: A Memoir (2019 National Book Award Winner)De la EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Evaluare: 4 din 5 stele4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDe la EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceEvaluare: 4 din 5 stele4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDe la EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeEvaluare: 4 din 5 stele4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingDe la EverandThe Little Book of Hygge: Danish Secrets to Happy LivingEvaluare: 3.5 din 5 stele3.5/5 (399)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDe la EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaEvaluare: 4.5 din 5 stele4.5/5 (266)

- Shoe Dog: A Memoir by the Creator of NikeDe la EverandShoe Dog: A Memoir by the Creator of NikeEvaluare: 4.5 din 5 stele4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDe la EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureEvaluare: 4.5 din 5 stele4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDe la EverandNever Split the Difference: Negotiating As If Your Life Depended On ItEvaluare: 4.5 din 5 stele4.5/5 (838)

- Grit: The Power of Passion and PerseveranceDe la EverandGrit: The Power of Passion and PerseveranceEvaluare: 4 din 5 stele4/5 (588)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDe la EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryEvaluare: 3.5 din 5 stele3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerDe la EverandThe Emperor of All Maladies: A Biography of CancerEvaluare: 4.5 din 5 stele4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDe la EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyEvaluare: 3.5 din 5 stele3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealDe la EverandOn Fire: The (Burning) Case for a Green New DealEvaluare: 4 din 5 stele4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDe la EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersEvaluare: 4.5 din 5 stele4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnDe la EverandTeam of Rivals: The Political Genius of Abraham LincolnEvaluare: 4.5 din 5 stele4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaDe la EverandThe Unwinding: An Inner History of the New AmericaEvaluare: 4 din 5 stele4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDe la EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreEvaluare: 4 din 5 stele4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)De la EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Evaluare: 4.5 din 5 stele4.5/5 (121)

- Her Body and Other Parties: StoriesDe la EverandHer Body and Other Parties: StoriesEvaluare: 4 din 5 stele4/5 (821)

- (2002 Wiley) Secure CommunicationsDocument334 pagini(2002 Wiley) Secure CommunicationsuestclsqÎncă nu există evaluări

- 2012 (Vol. 1, No. 3)Document103 pagini2012 (Vol. 1, No. 3)IJCSDFÎncă nu există evaluări

- Authors BookDocument162 paginiAuthors BookhakikiÎncă nu există evaluări

- AWS Key Management Service Best PracticesDocument21 paginiAWS Key Management Service Best PracticesjaygoswamiÎncă nu există evaluări

- Unit 4 CryptographyDocument54 paginiUnit 4 CryptographyPriyanka ChaudharyÎncă nu există evaluări

- Assignments V 2 VDocument15 paginiAssignments V 2 VMaYank MudGalÎncă nu există evaluări

- Research Papers Cloud ComputingDocument8 paginiResearch Papers Cloud ComputingKrutika DalviÎncă nu există evaluări

- Bluetooth Security Compliance GuideDocument19 paginiBluetooth Security Compliance Guidezecu2000Încă nu există evaluări

- (IJCST-V5I2P61) :irene Getzi SDocument5 pagini(IJCST-V5I2P61) :irene Getzi SEighthSenseGroupÎncă nu există evaluări

- EMV v4.2 Book 4Document155 paginiEMV v4.2 Book 4systemcoding100% (1)

- Hardware Security ModuleDocument2 paginiHardware Security ModuleSuresh100% (3)

- QF01/C4107AE: Al-Zaytoonah University of Jordan Faculty of Science & ITDocument4 paginiQF01/C4107AE: Al-Zaytoonah University of Jordan Faculty of Science & ITMoneer Dawlat ShishaniÎncă nu există evaluări

- Cryptography WhitepaperDocument22 paginiCryptography WhitepapertrungtrungkangÎncă nu există evaluări

- Chapter15 Key ManagementDocument19 paginiChapter15 Key ManagementtartarÎncă nu există evaluări

- Snow Leopard Server Security Config v10.6Document456 paginiSnow Leopard Server Security Config v10.6jjkscribdÎncă nu există evaluări

- Key ItaDocument46 paginiKey Itatrang huyềnÎncă nu există evaluări

- CPS GovCA Final V1 0Document56 paginiCPS GovCA Final V1 0Bizimana GilbertÎncă nu există evaluări

- I TwinDocument18 paginiI TwinMohd Omer100% (1)

- Rivest-Rc5 Network SecurityDocument11 paginiRivest-Rc5 Network SecurityshardapatelÎncă nu există evaluări

- ICTNWK619 Assessment 4Document15 paginiICTNWK619 Assessment 4MitchÎncă nu există evaluări

- Network SecurityDocument4 paginiNetwork SecurityainocÎncă nu există evaluări

- SMS Security: Highlighting Its Vulnerabilities & Techniques Towards Developing A SolutionDocument5 paginiSMS Security: Highlighting Its Vulnerabilities & Techniques Towards Developing A SolutionannaÎncă nu există evaluări

- Ponencia 6 Texto Havilect Carlos AlvarezDocument24 paginiPonencia 6 Texto Havilect Carlos AlvarezEdilberto QuispeÎncă nu există evaluări

- CNS RecordDocument63 paginiCNS RecordMahi MahiÎncă nu există evaluări

- Proton MailDocument14 paginiProton MailNkull11Încă nu există evaluări

- What Is A NetworkDocument16 paginiWhat Is A NetworkSreedhara Venkata Ramana KumarÎncă nu există evaluări

- Nit ThesisDocument46 paginiNit Thesisapi-294961804Încă nu există evaluări

- Security+ Cram Sheet - ProprofsDocument3 paginiSecurity+ Cram Sheet - Proprofstom100% (1)

- OWASP Web Application Penetration Checklist: July 14, 2004Document23 paginiOWASP Web Application Penetration Checklist: July 14, 2004blutntÎncă nu există evaluări

- STE ManualDocument113 paginiSTE ManualAadesh ValaseÎncă nu există evaluări