Eda Joint Probability Distribution

Uploaded by Jimmy Morales Jr.


ENGINEERING DATA ANALYSIS

JOINT PROBABILITY DISTRIBUTION

So far we have studied probability models for a single discrete or continuous random variable.

In many practical cases it is appropriate to take more than one measurement on each random observation. For example:

1. Height and weight of a medical subject.

2. Grade on quiz 1, quiz 2, and quiz 3 of a math student.

How are these variables related?

This type of situation is very important and is the foundation of much of inferential statistics.

Joint Probability Distribution

In general, if X and Y are two random variables, the probability distribution that defines their simultaneous behavior is called a joint probability distribution.

If X and Y are discrete, this distribution can be described with a joint probability mass function.

If X and Y are continuous, this distribution can be described with a joint probability density function.

Joint Probability Mass Function

Definition: Let X and Y be two discrete random variables, and let S denote the two-dimensional support of X and Y. Then the function $f_{XY}(x, y) = P(X = x, Y = y)$, written $f(x, y)$ for short, is a joint probability mass function (jpmf) if it satisfies the following conditions:

1. $0 \le f(x, y) \le 1$ for every $(x, y) \in S$

2. $\sum\sum_{(x, y) \in S} f(x, y) = 1$
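The two conditions can be checked mechanically. Below is a minimal Python sketch, assuming the jpmf is stored as a dictionary that maps each pair $(x, y)$ in S to $f(x, y)$; the function name `is_valid_jpmf` and the table `bad` are hypothetical and only for illustration. Exact `Fraction` arithmetic is used so that condition 2 can be tested with `==` instead of a floating-point tolerance.

```python
from fractions import Fraction

def is_valid_jpmf(pmf):
    """pmf maps each (x, y) in the support S to f(x, y) = P(X = x, Y = y)."""
    each_in_range = all(0 <= p <= 1 for p in pmf.values())  # condition 1
    sums_to_one = sum(pmf.values()) == 1                    # condition 2
    return each_in_range and sums_to_one

# Two fair four-sided dice (assumed independent): every pair (x, y) has probability 1/16.
dice = {(x, y): Fraction(1, 16) for x in range(1, 5) for y in range(1, 5)}
# A hypothetical table whose entries add up to 9/8, so it is not a jpmf.
bad = {(0, 0): Fraction(1, 2), (0, 1): Fraction(1, 2), (1, 0): Fraction(1, 8)}

print(is_valid_jpmf(dice), is_valid_jpmf(bad))  # True False
```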

Marginal Probability Mass Function

Let X be a discrete random variable with support $S_1$, and let Y be a discrete random variable with support $S_2$. Let X and Y have the joint probability mass function $f(x, y)$ with support S. Then the probability mass function of X alone, which is called the marginal probability mass function of X, is defined by:

$$ f_X(x) = P(X = x) = \sum_{y} f(x, y), \quad x \in S_1 $$

where, for each x in the support $S_1$, the summation is taken over all possible values of y. Similarly, the probability mass function of Y alone, which is called the marginal probability mass function of Y, is defined by:

$$ f_Y(y) = P(Y = y) = \sum_{x} f(x, y), \quad y \in S_2 $$

where, for each y in the support $S_2$, the summation is taken over all possible values of x.
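Computing a marginal amounts to a row or column sum of the joint table. A minimal Python sketch, assuming the joint pmf is stored as a dictionary keyed by $(x, y)$; the helper name `marginal_pmfs` is hypothetical. As sample data it uses the joint pmf from the conditional-distribution example later in these notes ($X \in \{0, 1\}$, $Y \in \{0, 1, 2\}$).

```python
from collections import defaultdict
from fractions import Fraction

def marginal_pmfs(pmf):
    """f_X(x) sums f(x, y) over y; f_Y(y) sums f(x, y) over x."""
    f_X, f_Y = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), p in pmf.items():
        f_X[x] += p
        f_Y[y] += p
    return dict(f_X), dict(f_Y)

# Joint pmf keyed by (x, y).
joint = {(0, 0): Fraction(1, 8), (1, 0): Fraction(2, 8),
         (0, 1): Fraction(2, 8), (1, 1): Fraction(1, 8),
         (0, 2): Fraction(1, 8), (1, 2): Fraction(1, 8)}
f_X, f_Y = marginal_pmfs(joint)
print({x: str(p) for x, p in f_X.items()})  # {0: '1/2', 1: '1/2'}
print({y: str(p) for y, p in f_Y.items()})  # {0: '3/8', 1: '3/8', 2: '1/4'}
```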

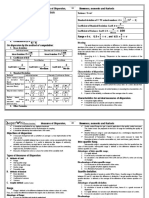

Example #1:

Suppose we toss a pair of fair, four-sided dice, one red and one black. Let X be the outcome on the red die, and Y be the outcome on the black die.

a. Find the joint probability mass function of the red and black dice.

b. Find the marginal probability mass function of X

c. Find the marginal probability mass function of Y
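A possible solution sketch for Example #1 in Python, assuming the two dice are fair and independent, so that every (red, black) pair has probability $(1/4)(1/4) = 1/16$. The variable names are illustrative only.

```python
from fractions import Fraction

faces = range(1, 5)  # a fair four-sided die shows 1, 2, 3, or 4

# a. Assuming independent dice, each (red, black) pair has probability 1/4 * 1/4.
joint = {(x, y): Fraction(1, 4) * Fraction(1, 4) for x in faces for y in faces}

# b. and c. Marginal pmfs: sum the joint pmf over the other variable.
f_X = {x: sum(joint[(x, y)] for y in faces) for x in faces}
f_Y = {y: sum(joint[(x, y)] for x in faces) for y in faces}

# Print the joint pmf as a table: one row per value of Y, one column per value of X.
for y in faces:
    print(f"y={y}:", " ".join(str(joint[(x, y)]) for x in faces))
print("f_X:", {x: str(p) for x, p in f_X.items()})
print("f_Y:", {y: str(p) for y, p in f_Y.items()})
```

Every cell of the printed table is 1/16, and every marginal value $f_X(x)$ and $f_Y(y)$ is 1/4.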

EDA: Joint Probability Distribution


Example #2:

Measurements of the length and width of rectangular plastic covers for CDs are rounded to the nearest mm (so they are discrete). Let X denote the length and Y denote the width. The possible values of X are 129, 130, and 131 mm, and the possible values of Y are 15 and 16 mm (thus, both X and Y are discrete). The joint probability mass function is given in the table below.

a. Determine if the jpmf is valid or not.

b. Find the marginal probability mass function of X

c. Find the marginal probability mass function of Y

Expected Value and Variance

Let X be a discrete random variable with support $S_1$, and let Y be a discrete random variable with support $S_2$. Let X and Y have the joint probability mass function $f(x, y)$ with support S. Then the expected values of X and Y are given by:

$$ E(X) = \sum_{x}\sum_{y} x\, f(x, y) $$

$$ E(Y) = \sum_{x}\sum_{y} y\, f(x, y) $$
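The double sums translate directly into code. A minimal sketch, assuming the joint pmf is a dictionary keyed by $(x, y)$ and using the dice of Example #1 (assumed fair and independent); `expected_values` is a hypothetical helper name. The result answers Example #3a.

```python
from fractions import Fraction

def expected_values(pmf):
    """E(X) and E(Y) as double sums of x f(x, y) and y f(x, y) over the support."""
    e_x = sum(x * p for (x, y), p in pmf.items())
    e_y = sum(y * p for (x, y), p in pmf.items())
    return e_x, e_y

# Example #1, assuming fair and independent dice: f(x, y) = 1/16 for x, y in {1, 2, 3, 4}.
faces = range(1, 5)
dice = {(x, y): Fraction(1, 16) for x in faces for y in faces}
print(expected_values(dice))  # (Fraction(5, 2), Fraction(5, 2))
```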

Under the same assumptions, the variances of X and Y are given by:

$$ V(X) = E(X^2) - [E(X)]^2, \quad \text{where } E(X^2) = \sum_{x}\sum_{y} x^2 f(x, y) $$

$$ V(Y) = E(Y^2) - [E(Y)]^2, \quad \text{where } E(Y^2) = \sum_{x}\sum_{y} y^2 f(x, y) $$
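The variance needs only one more moment taken over the same joint pmf. Example #2's table is not reproduced in this text, so this minimal sketch, with the hypothetical helper `variances`, again uses the dice of Example #1 (assumed fair and independent).

```python
from fractions import Fraction

def variances(pmf):
    """V(X) = E(X^2) - [E(X)]^2 and V(Y) = E(Y^2) - [E(Y)]^2, moments taken over the joint pmf."""
    e_x = sum(x * p for (x, y), p in pmf.items())
    e_y = sum(y * p for (x, y), p in pmf.items())
    e_x2 = sum(x * x * p for (x, y), p in pmf.items())
    e_y2 = sum(y * y * p for (x, y), p in pmf.items())
    return e_x2 - e_x**2, e_y2 - e_y**2

faces = range(1, 5)
dice = {(x, y): Fraction(1, 16) for x in faces for y in faces}  # Example #1
print(variances(dice))  # (Fraction(5, 4), Fraction(5, 4))
```

Here $E(X^2) = 30/4 = 15/2$ and $[E(X)]^2 = 25/4$, giving $V(X) = 5/4$; Y is identical by symmetry.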

Example #3:

a. Find the mean of X and the mean of Y in Example #1.

b. Find the variance of X and the variance of Y in Example #2.

Joint Probability Density Function

Let X and Y be two continuous random variables, and let S denote the two-dimensional support of X and Y. Then the function $f(x, y)$ is a joint probability density function (jpdf) if it satisfies the following conditions:

1. $f(x, y) \ge 0$ for all $(x, y)$

2. $\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = 1$

Example #4: Determine whether each joint pdf given below is valid.

1. $f(x, y) = \frac{2}{21} x^2 y$, $1 \le x \le 2$ and $0 \le y \le 3$

2. $f_{XY}(x, y) = 4y - 2x$, $0 < y < 1$, $0 < x < 1$
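For polynomial densities on a rectangle, condition 2 can be checked exactly and condition 1 can at least be spot-checked. A sketch under those assumptions: a density is stored as a dictionary `{(i, j): c}` standing for the sum of $c\,x^i y^j$, and all helper names are hypothetical. The nonnegativity test only samples grid midpoints, so a `True` result is evidence, not a proof.

```python
from fractions import Fraction

# A polynomial jpdf is stored as {(i, j): c}, meaning the sum of c * x**i * y**j over the keys.
def evaluate(poly, x, y):
    return sum(c * x**i * y**j for (i, j), c in poly.items())

def integrate_rect(poly, x_lo, x_hi, y_lo, y_hi):
    """Exact double integral of a polynomial over the rectangle [x_lo, x_hi] x [y_lo, y_hi]."""
    total = Fraction(0)
    for (i, j), c in poly.items():
        ix = Fraction(x_hi**(i + 1) - x_lo**(i + 1), i + 1)
        iy = Fraction(y_hi**(j + 1) - y_lo**(j + 1), j + 1)
        total += c * ix * iy
    return total

def looks_nonnegative(poly, x_lo, x_hi, y_lo, y_hi, n=20):
    """Heuristic for condition 1: test f >= 0 at the midpoints of an n-by-n grid (not a proof)."""
    for a in range(n):
        for b in range(n):
            x = x_lo + (x_hi - x_lo) * Fraction(2 * a + 1, 2 * n)
            y = y_lo + (y_hi - y_lo) * Fraction(2 * b + 1, 2 * n)
            if evaluate(poly, x, y) < 0:
                return False
    return True

def is_valid_jpdf(poly, x_lo, x_hi, y_lo, y_hi):
    return (looks_nonnegative(poly, x_lo, x_hi, y_lo, y_hi)
            and integrate_rect(poly, x_lo, x_hi, y_lo, y_hi) == 1)

f1 = {(2, 1): Fraction(2, 21)}                    # (2/21) x^2 y on 1 <= x <= 2, 0 <= y <= 3
f2 = {(0, 1): Fraction(4), (1, 0): Fraction(-2)}  # 4y - 2x on 0 < x < 1, 0 < y < 1

print(integrate_rect(f1, 1, 2, 0, 3), is_valid_jpdf(f1, 1, 2, 0, 3))  # 1 True
print(integrate_rect(f2, 0, 1, 0, 1), is_valid_jpdf(f2, 0, 1, 0, 1))  # 1 False
```

Both functions integrate to 1, but $4y - 2x$ is negative on part of its support (for example, at $x = 0.9$, $y = 0.1$ it equals $0.4 - 1.8 = -1.4$), so only the first is a valid jpdf.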


Marginal Probability Density Function

The marginal probability density functions of the continuous random variables X and Y are given, respectively, by:

$$ f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy, \quad x \in S_1 $$

$$ f_Y(y) = \int_{-\infty}^{\infty} f(x, y)\,dx, \quad y \in S_2 $$

where $S_1$ and $S_2$ are the respective supports of X and Y.

Example #5: Find the marginal probability density functions of X and Y for each of the following:

1. $f(x, y) = \frac{2}{21} x^2 y$, $1 \le x \le 2$ and $0 \le y \le 3$

2. $f(x, y) = 4y - 2x$, $0 < y < 1$, $0 < x < 1$
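Integrating out one variable of a polynomial density can also be done exactly. A minimal sketch, assuming a density stored as a hypothetical dictionary `{(i, j): c}` for the sum of $c\,x^i y^j$ on a rectangle; `marginal_x` returns `{i: coefficient of x**i}` for $f_X$ and `marginal_y` does the same for $f_Y$.

```python
from fractions import Fraction

def marginal_x(poly, y_lo, y_hi):
    """f_X(x): integrate each term c * x**i * y**j over y in [y_lo, y_hi]."""
    out = {}
    for (i, j), c in poly.items():
        iy = Fraction(y_hi**(j + 1) - y_lo**(j + 1), j + 1)
        out[i] = out.get(i, Fraction(0)) + c * iy
    return out

def marginal_y(poly, x_lo, x_hi):
    """f_Y(y): integrate each term c * x**i * y**j over x in [x_lo, x_hi]."""
    out = {}
    for (i, j), c in poly.items():
        ix = Fraction(x_hi**(i + 1) - x_lo**(i + 1), i + 1)
        out[j] = out.get(j, Fraction(0)) + c * ix
    return out

f1 = {(2, 1): Fraction(2, 21)}                    # (2/21) x^2 y on 1 <= x <= 2, 0 <= y <= 3
f2 = {(0, 1): Fraction(4), (1, 0): Fraction(-2)}  # 4y - 2x on 0 < x < 1, 0 < y < 1

print(marginal_x(f1, 0, 3))  # f_X(x) = (3/7) x^2
print(marginal_y(f1, 1, 2))  # f_Y(y) = (2/9) y
print(marginal_x(f2, 0, 1))  # 2 - 2x
print(marginal_y(f2, 0, 1))  # 4y - 1
```

For the first density this gives $f_X(x) = \frac{3}{7}x^2$ on $1 \le x \le 2$ and $f_Y(y) = \frac{2}{9}y$ on $0 \le y \le 3$, each of which integrates to 1. For the second it gives $2 - 2x$ and $4y - 1$; since $4y - 1 < 0$ for $y < 1/4$, this again reflects that $4y - 2x$ is not a valid jpdf.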

Expected Value and Variance

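The expected value of a continuous random variable X can be found from the joint pdf of X and Y by first computing the marginal pdf $f_X(x)$:

$$ E(X) = \int_{-\infty}^{\infty} x\, f_X(x)\,dx $$

Likewise, the expected value of a continuous random variable Y can be found from the joint pdf of X and Y through the marginal pdf $f_Y(y)$:

$$ E(Y) = \int_{-\infty}^{\infty} y\, f_Y(y)\,dy $$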

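The variance of a continuous random variable X can be found from the joint pdf of X and Y by:

$$ V(X) = E(X^2) - [E(X)]^2, \quad \text{where } E(X^2) = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx $$

The variance of a continuous random variable Y can be found from the joint pdf of X and Y by:

$$ V(Y) = E(Y^2) - [E(Y)]^2, \quad \text{where } E(Y^2) = \int_{-\infty}^{\infty} y^2 f_Y(y)\,dy $$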

Example #6: Find the mean and variance of X and Y for each of the following:

1. $f(x, y) = \frac{2}{21} x^2 y$, $1 \le x \le 2$ and $0 \le y \le 3$

2. $f(x, y) = 4y - 2x$, $0 < y < 1$, $0 < x < 1$
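Once a marginal is known, the mean and variance follow from its first two moments. A minimal sketch, assuming the marginals of the first density, $f_X(x) = \frac{3}{7}x^2$ on $[1, 2]$ and $f_Y(y) = \frac{2}{9}y$ on $[0, 3]$, obtained by integrating $\frac{2}{21}x^2 y$ over the other variable; `moment` and `mean_var` are hypothetical helper names. The second density is left out because $4y - 2x$ takes negative values and so is not a valid jpdf.

```python
from fractions import Fraction

def moment(marginal, k, lo, hi):
    """E(V**k) = integral of v**k * f_V(v) dv over [lo, hi] for a polynomial marginal {i: c}."""
    return sum(c * Fraction(hi**(i + k + 1) - lo**(i + k + 1), i + k + 1)
               for i, c in marginal.items())

def mean_var(marginal, lo, hi):
    m1 = moment(marginal, 1, lo, hi)
    m2 = moment(marginal, 2, lo, hi)
    return m1, m2 - m1**2  # V = E(V^2) - [E(V)]^2

f_X = {2: Fraction(3, 7)}   # (3/7) x^2 on 1 <= x <= 2
f_Y = {1: Fraction(2, 9)}   # (2/9) y on 0 <= y <= 3

print(mean_var(f_X, 1, 2))  # (Fraction(45, 28), Fraction(291, 3920))
print(mean_var(f_Y, 0, 3))  # (Fraction(2, 1), Fraction(1, 2))
```

So $E(X) = 45/28 \approx 1.607$, $V(X) = 291/3920 \approx 0.0742$, $E(Y) = 2$ and $V(Y) = 1/2$.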


CONDITIONAL DISTRIBUTIONS FOR DISCRETE RANDOM VARIABLES

So far, we have been concerned with how two random variables X and Y behave jointly. We now turn to investigating how one of the random variables, say Y, behaves given that the other random variable, say X, has already taken a particular value.

Definition. A conditional probability distribution is a probability distribution for a sub-population. That is, a conditional probability distribution describes the probability that a randomly selected person from a sub-population has the characteristic of interest.

Definition. The conditional probability mass function of X, given that Y = y, is defined by:

$$ g(x \mid y) = \frac{f(x, y)}{f_Y(y)}, \quad \text{provided } f_Y(y) > 0 $$

Similarly, the conditional probability mass function of Y, given that X = x, is defined by:

$$ h(y \mid x) = \frac{f(x, y)}{f_X(x)}, \quad \text{provided } f_X(x) > 0 $$

Example

Let X be a discrete random variable with support $S_1 = \{0, 1\}$, and let Y be a discrete random variable with support $S_2 = \{0, 1, 2\}$. Suppose, in tabular form, that X and Y have the following joint probability distribution $f(x, y)$:

| f(x, y) | x = 0 | x = 1 |
|---|---|---|
| y = 0 | 1/8 | 2/8 |
| y = 1 | 2/8 | 1/8 |
| y = 2 | 1/8 | 1/8 |

a. What is the conditional distribution of X given Y? That is, what is $g(x \mid y)$?

b. What is the conditional distribution of Y given X? That is, what is $h(y \mid x)$?
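Both conditional distributions come from dividing the table by the appropriate marginal. A minimal Python sketch for this example, assuming the table is stored as a dictionary keyed by $(x, y)$; `h` is keyed as `(y, x)` so that `h[(y, x)]` reads like $h(y \mid x)$. These variable names are illustrative only.

```python
from collections import defaultdict
from fractions import Fraction

F = Fraction
# Joint pmf from the table, keyed by (x, y).
joint = {(0, 0): F(1, 8), (1, 0): F(2, 8),
         (0, 1): F(2, 8), (1, 1): F(1, 8),
         (0, 2): F(1, 8), (1, 2): F(1, 8)}

# Marginals: f_X(x) sums over y, f_Y(y) sums over x.
f_X, f_Y = defaultdict(Fraction), defaultdict(Fraction)
for (x, y), p in joint.items():
    f_X[x] += p
    f_Y[y] += p

# a. g(x|y) = f(x, y) / f_Y(y), defined only where f_Y(y) > 0
g = {(x, y): p / f_Y[y] for (x, y), p in joint.items() if f_Y[y] > 0}
# b. h(y|x) = f(x, y) / f_X(x), keyed as (y, x), defined only where f_X(x) > 0
h = {(y, x): p / f_X[x] for (x, y), p in joint.items() if f_X[x] > 0}

for y in (0, 1, 2):
    print(f"g(x|{y}) for x = 0, 1:", [str(g[(x, y)]) for x in (0, 1)])
for x in (0, 1):
    print(f"h(y|{x}) for y = 0, 1, 2:", [str(h[(y, x)]) for y in (0, 1, 2)])
```

This gives $g(x \mid 0) = 1/3, 2/3$; $g(x \mid 1) = 2/3, 1/3$; $g(x \mid 2) = 1/2, 1/2$ for $x = 0, 1$, and $h(y \mid 0) = 1/4, 1/2, 1/4$; $h(y \mid 1) = 1/2, 1/4, 1/4$ for $y = 0, 1, 2$.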

Conditional distributions have the following properties:

(1) Conditional distributions are valid probability mass functions in their own right. That is, the conditional probabilities are between 0 and 1, inclusive:

$$ 0 \le g(x \mid y) \le 1 \quad \text{and} \quad 0 \le h(y \mid x) \le 1 $$

and, for each subpopulation, the conditional probabilities sum to 1:

$$ \sum_{x} g(x \mid y) = 1 \quad \text{and} \quad \sum_{y} h(y \mid x) = 1 $$

(2) In general, the conditional distribution of X given Y does not equal the conditional distribution of Y given X. That is:

$$ g(x \mid y) \ne h(y \mid x) $$
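Both properties can be checked on the example table. A minimal sketch, assuming the joint pmf is stored as a dictionary keyed by $(x, y)$ (rebuilt here so the snippet runs on its own); the `assert` statements encode property (1), and the final line exhibits property (2).

```python
from collections import defaultdict
from fractions import Fraction

F = Fraction
joint = {(0, 0): F(1, 8), (1, 0): F(2, 8),
         (0, 1): F(2, 8), (1, 1): F(1, 8),
         (0, 2): F(1, 8), (1, 2): F(1, 8)}
f_X, f_Y = defaultdict(Fraction), defaultdict(Fraction)
for (x, y), p in joint.items():
    f_X[x] += p
    f_Y[y] += p
g = {(x, y): p / f_Y[y] for (x, y), p in joint.items()}  # g(x|y)
h = {(y, x): p / f_X[x] for (x, y), p in joint.items()}  # h(y|x), keyed (y, x)

# Property (1): every conditional probability lies in [0, 1] ...
assert all(0 <= v <= 1 for v in (*g.values(), *h.values()))
# ... and each subpopulation's conditional pmf sums to 1.
assert all(sum(g[(x, y)] for x in f_X) == 1 for y in f_Y)
assert all(sum(h[(y, x)] for y in f_Y) == 1 for x in f_X)

# Property (2): g(x|y) and h(y|x) differ in general, e.g. g(0|1) versus h(1|0).
print(g[(0, 1)], h[(1, 0)])  # 2/3 1/2
```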


Conditional Means and Variances

Suppose X and Y are discrete random variables. Then, the conditional mean of Y given X = x is defined as:

$$ \mu_{Y|X} = E[Y \mid x] = \sum_{y} y\, h(y \mid x) $$

The conditional mean of X given Y = y is defined as:

$$ \mu_{X|Y} = E[X \mid y] = \sum_{x} x\, g(x \mid y) $$

The conditional variance of Y given X = x is:

$$ \sigma^2_{Y|x} = V(Y \mid x) = E[Y^2 \mid x] - (E[Y \mid x])^2 $$

The conditional variance of X given Y = y is:

$$ \sigma^2_{X|y} = V(X \mid y) = E[X^2 \mid y] - (E[X \mid y])^2 $$

Example: Calculate the following using the joint probability distribution from the previous example.

a. What is the conditional mean of Y given X = x?

b. What is the conditional mean of X given Y = y?

c. What is the conditional variance of Y given X = 0?

d. What is the conditional variance of X given Y = 1?
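Parts a to d reduce to weighted sums over one row or column of the table. A minimal sketch, assuming the example's joint pmf stored as a dictionary keyed by $(x, y)$; `cond_moment_Y` and `cond_moment_X` are hypothetical helper names computing $E[Y^k \mid x]$ and $E[X^k \mid y]$.

```python
from collections import defaultdict
from fractions import Fraction

F = Fraction
# Joint pmf of the previous example, keyed by (x, y).
joint = {(0, 0): F(1, 8), (1, 0): F(2, 8),
         (0, 1): F(2, 8), (1, 1): F(1, 8),
         (0, 2): F(1, 8), (1, 2): F(1, 8)}
f_X, f_Y = defaultdict(Fraction), defaultdict(Fraction)
for (x, y), p in joint.items():
    f_X[x] += p
    f_Y[y] += p

def cond_moment_Y(x, k):
    """E[Y^k | x] = sum over y of y^k h(y|x), where h(y|x) = f(x, y) / f_X(x)."""
    return sum(yy**k * p / f_X[x] for (xx, yy), p in joint.items() if xx == x)

def cond_moment_X(y, k):
    """E[X^k | y] = sum over x of x^k g(x|y), where g(x|y) = f(x, y) / f_Y(y)."""
    return sum(xx**k * p / f_Y[y] for (xx, yy), p in joint.items() if yy == y)

mean_Y = {x: cond_moment_Y(x, 1) for x in (0, 1)}     # a. E[Y | x]
mean_X = {y: cond_moment_X(y, 1) for y in (0, 1, 2)}  # b. E[X | y]
var_Y_given_0 = cond_moment_Y(0, 2) - mean_Y[0] ** 2  # c. V(Y | X = 0)
var_X_given_1 = cond_moment_X(1, 2) - mean_X[1] ** 2  # d. V(X | Y = 1)
print(var_Y_given_0, var_X_given_1)  # 1/2 2/9
```

It gives $E[Y \mid 0] = 1$, $E[Y \mid 1] = 3/4$, $E[X \mid 0] = 2/3$, $E[X \mid 1] = 1/3$, $E[X \mid 2] = 1/2$, $V(Y \mid 0) = 1/2$ and $V(X \mid 1) = 2/9$.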

Conditional Distributions for Continuous Random Variables

Definition. Suppose X and Y are continuous random variables with joint probability density function $f(x, y)$ and marginal probability density functions $f_X(x)$ and $f_Y(y)$, respectively.

Then, the conditional probability density function of X given Y = y is defined as:

$$ g(x \mid y) = \frac{f(x, y)}{f_Y(y)}, \quad \text{provided } f_Y(y) > 0. $$

Similarly, the conditional probability density function of Y given X = x is defined as:

$$ h(y \mid x) = \frac{f(x, y)}{f_X(x)}, \quad \text{provided } f_X(x) > 0. $$

The conditional mean of X given Y = y is defined as:

$$ E(X \mid y) = \int_{-\infty}^{\infty} x\, g(x \mid y)\,dx $$

The conditional mean of Y given X = x is defined as:

$$ E(Y \mid x) = \int_{-\infty}^{\infty} y\, h(y \mid x)\,dy $$

The conditional variance of X given Y = y is defined as:

$$ \mathrm{Var}(X \mid y) = E[X^2 \mid y] - [E(X \mid y)]^2 $$


The conditional variance of Y given X = x is defined as:

$$ \mathrm{Var}(Y \mid x) = E[Y^2 \mid x] - [E(Y \mid x)]^2 $$

Example

1. Suppose the continuous random variables X and Y have the following joint probability density function:

$$ f(x, y) = \frac{3}{2} \quad \text{for } x^2 \le y \le 1 \text{ and } 0 < x < 1. $$

a. What is the conditional probability density function of Y given X = x?

b. If X = 1/4, what is the conditional probability density function of Y?

c. If X = 1/2, what is the conditional probability density function of Y?

2. What is the conditional mean of Y given X = x?

a. What is the conditional mean of Y given X = 1/2?

3. What is the conditional variance of X given Y = y?
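For this example the conditional densities turn out to be uniform: $f_X(x) = \frac{3}{2}(1 - x^2)$, so $h(y \mid x) = \frac{1}{1 - x^2}$ on $x^2 \le y \le 1$, and $f_Y(y) = \frac{3}{2}\sqrt{y}$, so $g(x \mid y) = \frac{1}{\sqrt{y}}$ on $0 < x < \sqrt{y}$. The sketch below encodes these hand derivations (worth double-checking) and cross-checks $E(Y \mid X = 1/2)$ numerically with a midpoint rule; all function names are hypothetical.

```python
from fractions import Fraction

C = Fraction(3, 2)  # joint pdf value on the region x**2 <= y <= 1, 0 < x < 1

def f_X(x):
    """Marginal of X: integral of 3/2 dy from y = x**2 to y = 1."""
    return C * (1 - x**2)

def h_height(x):
    """h(y|x) = f(x, y) / f_X(x) = 1 / (1 - x**2), constant for x**2 <= y <= 1 (zero elsewhere)."""
    return C / f_X(x)

def cond_mean_Y(x):
    """E(Y|x): integral of y / (1 - x**2) dy over [x**2, 1], which simplifies to (x**2 + 1) / 2."""
    return (x**2 + 1) / 2

def cond_var_X(y):
    """g(x|y) = 1 / sqrt(y) on 0 < x < sqrt(y) is uniform, so Var(X|y) = (sqrt(y))**2 / 12 = y / 12."""
    return y / 12

def midpoint_integral(fn, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of fn over [lo, hi]."""
    step = (hi - lo) / n
    return step * sum(fn(lo + (k + 0.5) * step) for k in range(n))

print(h_height(Fraction(1, 4)))     # 16/15, for 1/16 <= y <= 1
print(h_height(Fraction(1, 2)))     # 4/3, for 1/4 <= y <= 1
print(cond_mean_Y(Fraction(1, 2)))  # 5/8

# Numerical cross-check of E(Y | X = 1/2) straight from the definition of the conditional mean.
height = float(h_height(Fraction(1, 2)))
approx = midpoint_integral(lambda y: y * height, 0.25, 1.0)
print(round(approx, 6))             # 0.625
```

Under these derivations, the conditional pdf of Y is 16/15 on $1/16 \le y \le 1$ when X = 1/4 and 4/3 on $1/4 \le y \le 1$ when X = 1/2, $E(Y \mid x) = (x^2 + 1)/2$ (so 5/8 at x = 1/2), and $\mathrm{Var}(X \mid y) = y/12$.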
