MARKET FORECAST

Worldwide Storage for Cognitive/AI Workloads Forecast, 2018–2022

Ritu Jyoti, Natalya Yezhkova

IDC MARKET FORECAST FIGURE

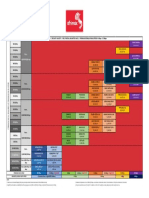

FIGURE 1

Worldwide Storage for Cognitive/AI Workloads Revenue Snapshot

2017–2022 Revenue ($B) with Growth

[Chart: revenue ($B, left axis, 0 to 12) and growth (%, right axis, 0 to 90) for 2017 and 2022. Legend: Hardware, Software, Total growth (%). Data labels recovered from the chart: 1.8, 2.1, and "Total: 8.4".]

Selected segment growth rates: Hardware CAGR 35.9%; Software CAGR 45.0%

Total market CAGR: 37.2%

Note: Chart legend should be read from left to right.

Source: IDC, 2018

April 2018, IDC #US43707918e

IN THIS EXCERPT

The content for this excerpt was taken directly from Worldwide Storage for Cognitive/AI Workloads Forecast, 2018–2022 (Doc #US43707918). All or parts of the following sections are included in this excerpt: Executive Summary, Market Forecast, Appendix, and Learn More. Also included are Figures 1, 2, and 3 and Tables 1, 2, and 3.

EXECUTIVE SUMMARY

Intelligent applications based on artificial intelligence (AI), machine learning (ML), and continual deep

learning (DL) are the next wave of technology transforming how consumers and enterprises work,

learn, and play. While data is at the core of the new digital economy, it's about how you sense the

environment and manage the data from edge to core to cloud, how you analyze it in near real time,

learn from it, and then act on it to affect outcomes. IoT, mobile devices, big data, machine learning,

and cognitive/artificial intelligence all combine to continually sense and collectively learn from an

environment. What differentiates winning organizations is how they leverage that to deliver meaningful,

value-added predictions and actions for personalized life efficiency/convenience, improving industrial

processes, healthcare, experiential engagement, or any enterprise decision making.

AI has been around for decades, but due to the pervasiveness of data, seemingly infinite scalability of

cloud computing, availability of AI accelerators, and sophistication of the ML and DL algorithms, AI has

grabbed the center stage of business intelligence. IDC predicts that, by 2019, 40% of digital

transformation (DX) initiatives will use AI services; by 2021, 75% of commercial enterprise apps will

use AI, over 90% of consumers will interact with customer support bots, and over 50% of new

industrial robots will leverage AI. AI solutions will continue to see significant corporate investment over

the next several years.

According to IDC research, IT automation is the top use case for AI workloads, followed by workforce management, customer relationship management, supply chain logistics, fraud analysis and investigation, and predictive analytics (e.g., sales forecasting or failure prediction for preventive maintenance). Privacy and security concerns will present serious landmines for AI-based DX efforts. The IT organization will need to be among the enterprise's best and first use case environments for AI: in development, infrastructure management, and cybersecurity.

Artificial intelligence is not only changing the way data is handled and business processes are carried out; it is leading to a broad reconfiguration of underlying infrastructure as well. Machine learning and deep learning algorithms need huge quantities of training data, and AI effectiveness depends heavily on high-quality (and diverse) data inputs. Both training and inferencing are compute intensive and need high performance for fast execution. All this drives the need for lots of compute, storage, and networking resources, and to be truly successful, organizations need to employ intelligent infrastructure.

IDC has predicted that, by 2021, 50% of enterprise infrastructure will employ artificial intelligence to

prevent issues before they occur, improve performance proactively, and optimize available resources.

IT and businesses will benefit from self-configurable, self-healing, self-optimizing infrastructure to

improve enterprise productivity, manage risks, and drive overall cost reduction.

©2018 IDC #US43707918e 2

In aggregate, revenue for storage systems and storage software for cognitive/AI workloads will grow at a compound annual growth rate (CAGR) of 37.2% for 2017–2022 and reach $10.1 billion in 2022. Capacity will grow at a CAGR of 63.3% over the same period and reach 201.2EB in 2022.

This IDC study presents the worldwide 2018–2022 forecast for storage hardware and software for

cognitive/AI workloads.

"Cognitive/AI is poised to transform next-generation IT. Machine learning and deep learning require

huge amounts of training (and diverse) data. Storage for cognitive/AI needs to be dynamically

scalable, adaptable, high performing, cloud integrated, and intelligent," said Ritu Jyoti, research

director, IDC's Enterprise Storage, Server and Infrastructure software team. "AI services continue to be

integral to digital transformation (DX) initiatives."

ADVICE FOR TECHNOLOGY SUPPLIERS

In the past few years, we have witnessed the rise of digital transformation and the disruptions and

opportunities it poses for traditional businesses and society. Organizations of every size and industry

risk fundamental disruption because of new technologies, new players, new ecosystems, and new

ways of doing business. IDC predicts worldwide spending on digital transformation technologies will

expand at a CAGR of 17.9% through 2021 to more than $2.1 trillion. Large and diverse data sets

create new challenges, but when combined with AI technologies and exponential computing power,

they create ever-greater opportunities.

Cognitive/AI applications and workloads will continue to shape the storage industry, with the central

theme "scale, performance, intelligence, and self-management." Naturally, storage suppliers are

aggressively building or need to build AI workload–centric products and solutions:

- Embrace AI within your own IT to accelerate time to market of your offerings.
- Exploit the power of AI to optimize software deployment strategies, build faster and more efficient next-generation service desks, and use data services for quality, security, and resource optimization.
- Build bundled engineering solutions to help customers accelerate adoption of AI workloads.

Support for multiformat storage infrastructure is also crucial, given that machine learning, cognitive computing, and other forms of AI must pull both structured and unstructured data from multiple sources that rely on iSCSI, NFS, SMB, and other protocols. The new infrastructure will have to push speed, agility, and scale to entirely new levels if the enterprise hopes to draw meaningful results from all that number crunching.

Make your offerings intelligent to help customers improve enterprise productivity, manage risks, and drive overall cost reduction. Self-configurable, self-healing, and self-optimizing storage can help:

- Prevent issues before they occur
- Improve performance proactively
- Optimize available resources

MARKET FORECAST

Table 1 provides a top-line view of IDC's forecast of storage for cognitive/AI workloads for 2017–2022 (also refer back to Figure 1). For major market forces driving this forecast, see the Market Context section.

TABLE 1

Worldwide Storage for Cognitive/AI Workloads Revenue and Capacity Shipment, 2017–2022

| | 2017 | 2022 | 2017–2022 CAGR (%) |
|---|---|---|---|
| Revenue ($B) | | | |
| Storage systems | 1.82 | 8.42 | 35.9 |
| Storage software | 0.26 | 1.66 | 45.0 |
| Total | 2.08 | 10.08 | 37.2 |
| Total capacity (EB) | 17.3 | 201.2 | 63.3 |

Note: The data includes a new definition of internal storage, and it does not include tape media.

Source: IDC, 2018
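The CAGR column in Table 1 can be reproduced from the 2017 and 2022 endpoints with the standard compound-growth formula, CAGR = (end / start)^(1 / years) − 1. The following is a minimal sketch, not IDC's methodology; the function name `cagr` and the dictionary layout are illustrative assumptions, and small differences from the published percentages are expected because the table values are rounded.

```python
# Reproduce the CAGR column of Table 1 from its rounded 2017 and 2022 endpoints.
# Illustrative only: `cagr` and `table_1` are hypothetical names, not IDC's.

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.372 means 37.2%)."""
    return (end / start) ** (1 / years) - 1

# (2017 value, 2022 value, published CAGR in %), copied from Table 1.
table_1 = {
    "Storage systems ($B)": (1.82, 8.42, 35.9),
    "Storage software ($B)": (0.26, 1.66, 45.0),
    "Total revenue ($B)": (2.08, 10.08, 37.2),
    "Total capacity (EB)": (17.3, 201.2, 63.3),
}

YEARS = 2022 - 2017  # five annual growth periods

for name, (v2017, v2022, published) in table_1.items():
    computed = 100 * cagr(v2017, v2022, YEARS)
    print(f"{name}: computed {computed:.1f}% vs. published {published:.1f}%")
```

The same function applies to any row of Tables 2 and 3, since all three tables use the 2017–2022 period.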

IDC estimates that:

- Both storage systems and storage software for cognitive/AI workloads are expected to grow over the next five years at rates exceeding those of their respective broad markets (enterprise storage systems and enterprise storage software).
- In the DX era, with massive growth in data volumes and greater emphasis on analytics (including real time), storage systems revenue will grow, incorporating larger capacity and newer memory/flash technologies.
- As cognitive/AI workloads become business critical, storage software revenue for data protection, availability, integration, security, and data location optimization will grow.

Table 2 provides estimates for spending on storage systems deployed in support of a variety of cognitive/AI workload use cases.

TABLE 2

Worldwide Storage Systems for Cognitive/AI Workloads Revenue by Use Case, 2017–2022 ($B)

| Use case | 2017 | 2022 | 2017–2022 CAGR (%) |
|---|---|---|---|
| IT automation | 0.57 | 2.10 | 29.7 |
| Workforce management | 0.23 | 0.70 | 25.5 |
| Customer relationship management | 0.23 | 0.70 | 25.5 |
| Program advisors and recommendation | 0.24 | 1.64 | 46.5 |
| Predictive analytics | 0.23 | 1.57 | 46.3 |
| Fraud analysis and investigation | 0.17 | 0.87 | 39.3 |
| Other | 0.15 | 0.84 | 41.2 |
| Total | 1.82 | 8.42 | 35.9 |

Source: IDC, 2018

IDC estimates:

- IT automation is the top use case for AI workloads. Revenue for storage used for IT automation will start from a larger share of the total and grow at a 29.7% CAGR through 2022 (see Table 2).
- Organizations are prioritizing use cases that have immediate revenue and cost impact. Back-office functions are prioritized because computers are already used extensively there, which alleviates the skill set and human concerns related to AI adoption.
- Front-office use of AI is expected to have a large impact on business. With change management in place, retooling of existing staff, and improvement in the trustworthiness of data and algorithms, AI adoption for front-office functions is expected to grow the fastest.
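The point about IT automation starting from a larger percentage can be checked by converting the Table 2 revenue figures into shares of the total. The following is a rough sketch using the rounded table values; the names `table_2` and `shares` are hypothetical and chosen for illustration only.

```python
# Share of storage systems revenue by use case, from the rounded Table 2 values.
# Illustrative only: the names below are hypothetical, not IDC's.

# use case -> (2017 revenue in $B, 2022 revenue in $B), copied from Table 2.
table_2 = {
    "IT automation": (0.57, 2.10),
    "Workforce management": (0.23, 0.70),
    "Customer relationship management": (0.23, 0.70),
    "Program advisors and recommendation": (0.24, 1.64),
    "Predictive analytics": (0.23, 1.57),
    "Fraud analysis and investigation": (0.17, 0.87),
    "Other": (0.15, 0.84),
}

def shares(rows: dict[str, tuple[float, float]], column: int) -> dict[str, float]:
    """Return each row's percentage share of the column total."""
    total = sum(values[column] for values in rows.values())
    return {name: 100 * values[column] / total for name, values in rows.items()}

share_2017 = shares(table_2, 0)  # column 0 holds 2017 revenue
share_2022 = shares(table_2, 1)  # column 1 holds 2022 revenue

for name in table_2:
    print(f"{name}: {share_2017[name]:.1f}% (2017) -> {share_2022[name]:.1f}% (2022)")
```

On these rounded figures, IT automation holds the largest share in both years, although its share declines by 2022 as faster-growing use cases catch up.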

Table 3 provides estimates for spending on storage systems for deployment in private cloud, public cloud, edge, and traditional deployment scenarios.

TABLE 3

Worldwide Storage Systems for Cognitive/AI Workloads Revenue by Consumption Model/Storage Deployment Scenario, 2017–2022 ($B)

| Deployment scenario | 2017 | 2022 | 2017–2022 CAGR (%) |
|---|---|---|---|
| On-premises (traditional) | 0.22 | 0.47 | 16.0 |
| Private cloud | 0.37 | 2.13 | 42.1 |
| Public cloud | 1.18 | 5.36 | 35.3 |
| Edge | 0.04 | 0.46 | 60.3 |
| Total | 1.82 | 8.42 | 35.9 |

Source: IDC, 2018

IDC estimates:

- Revenue for storage used for cognitive/AI workloads in the public and private cloud consumption/storage deployment scenarios will grow much faster than in the traditional (on/off-premises) storage deployment scenario. Agility and flexibility are fundamental to cognitive/AI workload deployments. Many businesses lack the ability to scale their existing infrastructure in capacity or performance, the skill set to support newer technologies, or the floor space to support the scale of capacity. All of these factors are leading to more and more cognitive/AI deployments on the public cloud or with hosted service providers. However, private cloud/on-premises traditional deployment is expected to be significant for the training phase of AI workloads, especially where intellectual property protection is critical. Today, in some cases, the training phase is also run on-premises because sufficiently powerful infrastructure is not available in the public cloud. Inferencing is typically run on the public cloud.
- Revenue for storage used for cognitive/AI workloads at the edge is expected to grow the fastest, as the bulk of inferencing for IoT data will happen at the edge.
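Growth rate and absolute growth tell different stories in Table 3, and a short calculation makes the distinction concrete. The sketch below uses the rounded table values to compute each scenario's contribution to the total revenue increase and its 2022-to-2017 multiple; the variable names are hypothetical, and the results are derived arithmetic rather than figures published by IDC.

```python
# Each deployment scenario's contribution to absolute revenue growth, 2017 to 2022,
# using the rounded Table 3 values. Names are hypothetical, not IDC's.

# scenario -> (2017 revenue in $B, 2022 revenue in $B), copied from Table 3.
table_3 = {
    "On-premises (traditional)": (0.22, 0.47),
    "Private cloud": (0.37, 2.13),
    "Public cloud": (1.18, 5.36),
    "Edge": (0.04, 0.46),
}

# Absolute revenue added per scenario over the forecast period.
increments = {name: end - start for name, (start, end) in table_3.items()}
total_increment = sum(increments.values())

# Print scenarios from largest to smallest absolute increase.
for name, delta in sorted(increments.items(), key=lambda item: item[1], reverse=True):
    multiple = table_3[name][1] / table_3[name][0]
    print(f"{name}: +${delta:.2f}B ({100 * delta / total_increment:.0f}% of growth), "
          f"{multiple:.1f}x the 2017 level")
```

On these figures, public cloud accounts for the largest absolute increase while edge shows the largest multiple of its 2017 level, which is consistent with the estimates above.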

MARKET CONTEXT

Drivers and Inhibitors

With regard to storage for cognitive/AI workloads, there are quite a few drivers, but it is important to realize that some market forces, such as the future workforce and the global demand for digital talent, act as both an inhibitor and a driver: they inhibit overall growth while driving the use of more AI/machine learning–based cognitive workloads.

Drivers

Accelerating DX — Technology-Centric Transformation Altering Business and Society

Assumption: As per IDC's prediction, worldwide spending on digital transformation

technologies will expand at a CAGR of 17.9% through 2021 to more than $2.1 trillion. Digital

transformation initiatives are underpinned by the need to get business insights quicker and

more accurately to more decision makers than ever before. A growing number of executives

are placing premium value on data-driven decision making and the ability of analytics and

technology to derive differentiated insights from the wide variety and large volume of internal

and external data available to organizations.

Impact: What differentiates winners is how they leverage digitization and how fast they can

deliver meaningful, value-added predictions and actions or any enterprise decision making.

Organizations will exploit the power of cognitive/AI applications and platforms to generate

more inferencing models, more accurately, than traditional analytical and programming

approaches. They will leverage public cloud computing and services along with on/off-

premises private cloud and edge computing for AI workloads. All this will drive the need for

high-performance, scalable storage that is tuned for varied data formats and access and has

the power to process and analyze large volumes of data and speed to support faster compute

calculations and decision making.

Sense, Compute, Actuate — The New Data-Centric Paradigm

Assumption: While data is at the core of the new digital economy, it's about how you sense the

environment and manage the data from edge to core to cloud, analyze it in near real time,

learn from it, and then act on it to affect outcomes. IoT, mobile devices, big data, machine

learning, and cognitive/AI all combine to continually sense and collectively learn from an

environment. IDC forecasts that software, services, and hardware spending on AI and ML will

grow from $12 billion in 2017 to $57.6 billion by 2021.

Impact: Large and diverse data sets create new challenges, but when combined with AI technologies and exponential computing power, they create ever-greater opportunities. ML and DL algorithms need huge quantities of training data, and AI effectiveness depends heavily on high-quality (and diverse) data inputs. All this will drive demand for more storage that is dynamically scalable and adaptable.
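The AI and ML spending forecast in the assumption above ($12 billion in 2017 to $57.6 billion by 2021) implies an annual growth rate that can be worked out directly. The following is a back-of-the-envelope calculation, not a figure published by IDC; it assumes four annual growth periods between 2017 and 2021 and uses the standard compound-growth formula.

```latex
\mathrm{CAGR}
  = \left(\frac{V_{2021}}{V_{2017}}\right)^{1/4} - 1
  = \left(\frac{57.6}{12}\right)^{1/4} - 1
  = 4.8^{0.25} - 1
  \approx 0.48
```

Under these assumptions, the implied growth is roughly 48% per year.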

Human Versus Machine — The Impact of AI and Automation

Assumption: Cognitive advances, combined with robotics and AR/VR, are now actively

impacting experiential engagement, business and manufacturing processes, and strategies.

Automation is enhancing and, in some cases, replacing human decisions, yet is less

transparent and more difficult to understand or challenge. Many tasks can be automated, but

tough management decisions must be made about if, how, or when. Automation can empower

humans to be more intelligent and productive but may also redefine or eliminate job

categories.

Impact: AI embedded in almost all IT and business systems/solutions will enable accelerated improvement/enhancement cycles through continuous learning and automation. Selecting digital services and apps without "AI inside" will put your organization at risk of falling behind competitors' pace of innovation. IDC predicts that, by 2021, 50% of enterprise infrastructure will employ some form of cognitive and artificial intelligence to improve enterprise productivity, manage risks, and drive overall cost reduction. All this drives the need for software-defined, scalable, cloud-integrated intelligent storage.

Inhibitors

The Future Workforce — Global Demand for Digital Talent

Assumption: As market shifts and rapidly changing technologies transform businesses,

companies that don't have up-to-date, evolving skill sets are falling behind. There is a "war" or

at least a "grab" to attract the emerging skill sets needed to excel in digital transformation.

Millennials, especially those with both business and IT skills, are increasingly in high demand

— for leadership, analytics, coding, and managing projects to scale — yet universities are not

turning out sufficient candidates to meet the needs.

Impact: IDC believes that this can be both a driver and an inhibitor for the cognitive/AI

workloads — a driver in the sense of support for automated cognitive/machine learning–based

applications that can fill in the skill gap, but an inhibitor from the aspect of lack of developers

that can help build and deploy cognitive/AI applications. AI will be used to analyze log files to

manage security risks, automate infrastructure provisioning, support intelligent data

placement, perform predictive maintenance to eliminate unexpected failures and downtime,

and run repetitive tasks to improve overall enterprise productivity and drive cost reduction.

Significant Market Developments

Market developments disrupting the overall IT industry continue to shape the storage market

landscape for cognitive/AI applications and workloads at a fast pace. The concepts and technologies

that hit the market just two to three years ago are now well known within the end-user community and

define the strategic initiatives and road maps of storage vendors.

Enterprise Storage Systems Trend for Big Data Analytics Including Cognitive/AI Workloads

Historically, data analytics centered around large files, sequential access, and batched data. It ran on

distributed disks and colocated compute and storage to offset slow network access speed.

Modern data sources and characteristics are different. Today, data consists of small to large files and of structured, semistructured, and unstructured content, and data access varies from random to sequential. By 2025, more than a quarter of the global data set will be real time in nature, and real-time IoT data will make up more than 95% of it. In the new age of big data, modern applications and compute technologies leverage massively parallel architecture for performance.

Examining the data pipeline for AI workloads (see Figure 2) shows that the application, compute, and I/O profiles change from ingestion to real-world inferencing. Machine learning and deep learning need huge quantities of training data. Both training and inferencing are compute intensive and need high performance for fast execution. Artificial intelligence applications push the limits on thousands of GPU cores or thousands of CPU servers. Parallel compute demands parallel storage. While the training phase requires large data stores, inferencing has less need for them. The inference models are often stored in a DevOps-style repository where they benefit from ultra-low-latency access. Although the training phase is complete once the execution model has been developed from the data and the workload has moved to the inferencing stage, the model often needs retraining as new or modified data comes to light. In some cases, the real-time nature of the application may require near-constant retraining and updating of the model. Over time, organizations may also benefit from retraining the model as additional data sources and insights come into play.

FIGURE 2

Data Pipeline for AI Workloads

Source: IDC, 2018

Today, customers are using different infrastructure solutions and approaches to support the data

pipeline for AI, generally leading to data silos. Some of them create duplicate copies of the data for the

AI pipeline with the intent of not disturbing the stable applications.

If data doesn't flow smoothly through the entire pipeline, productivity will be compromised and

organizations will need to commit increasing amounts of effort/resources to manage the pipeline.

Organizations need to adopt dynamically adaptable and scalable intelligent infrastructure that is tuned for varied data formats and access, has the power to process and analyze large volumes of data, and has the speed to support faster compute calculations and decision making. It also needs to be efficient enough to help generate more inferencing models, more accurately, than traditional analytical and programming approaches, leading to overall improvement in productivity.

According to IDC's Cognitive, ML and AI Workloads Infrastructure Market Survey, conducted in January 2018 (n = 405, 1,000+ U.S. employees and 500+ Canadian employees), traditional SAN/NAS is today largely used for on-premises AI/ML/DL workloads because of its existing deployment footprint and the early stage of AI adoption. However, with the need to scale dynamically, store large volumes of data at relatively low cost, and support high performance, software-defined storage, hyperconverged infrastructure, and all-flash arrays with newer memory technologies will gain adoption, aligned with each offering's specific advantages and the data pipeline stage of the AI deployment.

Public and Private Cloud — AI-Powered Services

The rapid adoption of artificial intelligence will continue to be turbocharged by the cloud's "AI war" —

that is, the ongoing battle among the major public cloud providers to outdo each other with an ever-

expanding variety of AI-powered services. Amazon, Microsoft, and Google continue to expand their AI

services. Amazon Web Services (AWS) and Microsoft Azure allow organizations to test different

machine learning algorithms, for example, to see what might be possible with their data. From there,

organizations can choose between two options: fail it or scale it.

A large percentage of the enterprises using the public cloud for AI right now are using it as a test bed: an inexpensive way to get started and to figure out which applications are going to be amenable to different forms of AI. Public cloud also eliminates the need for organizations to invest in the expensive specialty hardware that many AI workloads require. Most of the major public cloud providers, for example, now offer cloud instances based on GPUs, which are especially beneficial for compute-intensive AI workloads.

The decision to run the AI pipeline on public cloud versus on-premises is also typically driven by data gravity: where the data currently is or is likely to be stored, easy access to compute resources and applications, and the speed at which the capabilities need to be explored and deployed. Moving large data sets out of the cloud is cost prohibitive, so it is more than likely that the entire pipeline will run on the public cloud once the data is committed there.

Intelligent Storage

The future of enterprise storage is not just about feeds and speeds but also about intelligence and self-management, leading to self-configurable, self-healing, and self-optimizing storage. Predictive analytics and artificial intelligence will enable companies to sharply reduce downtime and ensure optimal application performance, essentially switching from "firefighter mode" to a more proactive IT strategy.

Today, AI/ML capabilities in storage are in their infancy, and advancements are being made. Below, we cover a few representative examples of vendors supporting or working toward intelligent storage. Note that this list is not meant to be comprehensive.

Hewlett Packard Enterprise (HPE) is updating its acquired Nimble Storage InfoSight array management system with a machine learning–driven recommendation engine and adding InfoSight to 3PAR arrays. The new AI Recommendation Engine (AIRE) is intended to improve both infrastructure management and application reliability. According to HPE, InfoSight now preemptively advises IT on how to avoid issues, improve performance, and optimize available resources. HPE's vision is to create autonomous datacenters.

IBM recently announced plans to add new artificial intelligence functionality to its cloud-based storage

management platform, IBM Spectrum Control Storage Insights. The new cognitive capability will

stream device metadata from IBM Storage systems to the cloud, augmenting human understanding

with machine learning insights to help optimize the performance, capacity, and health of clients'

storage infrastructure. IBM cognitive storage management capabilities offered by this platform already

include optimization with data tiering and reclamation of unused storage. IBM plans to add further

artificial intelligence that will reduce the time it takes to monitor complex infrastructures and to find and

resolve issues that impact application performance.

NetApp Active IQ leverages machine learning to teach the telemetry system new patterns, so it's

continually learning and adapting to your evolving environments.

Dell EMC CloudIQ proactively monitors and measures the overall health of an unlimited number of Dell

EMC Unity storage systems through intelligent, comprehensive, and predictive analytics. It's these

context-aware insights backed by extensive telemetry data that help you ensure stringent application

performance requirements are being met while proactively differentiating standard and "normal" spikes

in performance from atypical adverse behaviors and providing potential root causes and remediation

for the latter. CloudIQ dynamically compares the behavior of each Dell EMC Unity storage array

against the "norms" while looking for anomalies that could indicate potential problems.

Pure Storage Meta highlights issues, finds known problems, raises tickets, and so on. It also has the

ability to model workloads across systems and do "what if" scenarios for new workloads. This can

mean testing whether a new workload will fit on a specific platform but also testing whether two sets of

workloads will interact well together. The logical conclusion to this is being able to optimize workloads

across a range of existing deployments, then determine what is the best new purchase to make,

without having to guess.

Eventually cloud-based AI and ML will be used to fully automate the operation of the storage. This will

mean storage systems that do more of the data management themselves, enabling organizations to

shift dollars away from today's IT maintenance budgets over to tomorrow's DX initiatives.
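Several of the vendor descriptions above involve comparing observed behavior against a learned norm and flagging deviations. The following is a generic sketch of that idea using a simple z-score test; it is not the algorithm of any vendor named in this section, the latency values are made up for illustration, and the function name `find_anomalies` and the threshold of three standard deviations are assumptions.

```python
# Flag telemetry samples that deviate strongly from a baseline "norm".
# Generic z-score sketch with made-up latency values; not any vendor's algorithm.
from statistics import mean, stdev

def find_anomalies(baseline: list[float], samples: list[float],
                   threshold: float = 3.0) -> list[float]:
    """Return samples more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two points
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as a deviation.
        return [x for x in samples if x != mu]
    return [x for x in samples if abs(x - mu) / sigma > threshold]

# Hypothetical read latencies in milliseconds.
baseline_ms = [1.0, 1.2, 0.9, 1.1, 1.0, 1.3, 0.8, 1.1]
new_samples_ms = [1.1, 1.4, 5.0, 0.9]

print(find_anomalies(baseline_ms, new_samples_ms))
```

A production system would typically account for seasonality, workload context, and many metrics at once; the sketch only shows the basic distinction between normal spikes and atypical behavior.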

Emerging Memory Technologies

Up until now, NVMe-based flash drives and storage arrays have typically been 30–50% more

expensive than equivalent all-flash arrays. Today, things are changing. The volumes are higher, costs

have come down, and multiple NVMe SSD suppliers are becoming mainstream.

In addition, there are several other viable emerging memory candidates that will be valuable for cognitive/AI workloads. Intel's 3D XPoint (3DXP), a new storage-class memory, is expected to go mainstream in a year or so. It should operate in the 10–20 microsecond range, instead of the 100–200 microsecond range for flash. This 10x performance improvement will manifest as both a storage cache and a storage tier to deliver better, faster storage for cognitive/AI workloads.

Changes from Prior Forecast

This is the first publication of the worldwide storage for cognitive/AI applications or workloads forecast, so there are no changes from a prior forecast to report.

MARKET DEFINITION

Artificial intelligence (AI), machine learning (ML), and deep learning (DL) are all interrelated. AI is defined as the study and research of providing software and hardware that emulates human beings. ML is a discipline and set of algorithms that evolved from statistics and is now considered a part of AI. ML does not require much explicit programming in advance to gain intelligent insight from data because it uses learning algorithms that simulate human learning capabilities by developing statistical models based on data, and lots of it. The learning can be supervised by humans, unsupervised, or reinforced. DL is a subset of ML. It relies on a type of algorithm called a neural network, with extensive layers between input and output and, again, lots of data.

IDC defines the storage for cognitive/AI workloads as the storage used for running the cognitive/AI

software platforms, content analytics, advanced and predictive analytics, and search systems using

machine learning and deep learning algorithms.

Cognitive/AI software platforms provide the tools and technologies to analyze, organize, access, and

provide advisory services based on a range of structured and unstructured information. These

platforms facilitate the development of intelligent, advisory, and cognitively enabled applications. The

technology components of cognitive software platforms include text analytics, rich media analytics

(such as audio, video, and image), tagging, searching, machine learning, categorization, clustering,

hypothesis generation, question answering, visualization, filtering, alerting, and navigation. These

platforms typically include knowledge representation tools such as knowledge graphs, triple stores, or

other types of NoSQL data stores. These platforms also provide for knowledge curation and

continuous automatic learning based on tracking past experiences. Representative software vendors

in this market include IBM (Watson), Amazon (AI Services), Intel (Saffron Technology), Microsoft

(Cognitive Services), Palantir, IPsoft (Amelia), Nuance Communications (Nina), CustomerMatrix

(Opportunity Science), Tata Consultancy Services (Ignio), Wipro (HOLMES), Infosys (Mana), and

Google (Cloud Machine Learning Platform).

Content analytics systems provide tools for recognizing, understanding, and extracting value from text or for using similar technologies to generate human-readable text. This submarket also includes

language analyzers and automated language translation as well as text clustering and categorization

tools. This submarket also includes software for recognizing, identifying, and extracting information

from audio, voice, and speech data as well as speech identification and recognition plus converting

sounds into useful text. Finally, this submarket includes software for recognizing, identifying, and

extracting information from images and video, including pattern recognition, objects, colors, and other

attributes such as people, faces, cars, and scenery. These tools are used for computer vision

applications and clustering, categorization, and search applications. Representative software vendors

in this submarket include SAP (HANA Text Analysis), Google (Cloud Speech API), Nuance (Automatic

Speech Recognition and Natural Language Understanding), and IBM Intelligent Video Analytics.

Advanced and predictive analytics tools include data mining and statistical software. These tools use a

range of techniques to create, test, and execute statistical models. Some techniques used are

machine learning, regression, neural networks, rule induction, and clustering. Advanced and predictive

analytics tools and techniques are used to discover relationships in data and make predictions that are

hidden, not apparent, or too complex to be extracted using query, reporting, and multidimensional

analysis software. Products on the market vary in scope. Some products include their own

programming language and algorithms for building models, but other products include scoring engines

and model management features that can execute models built using proprietary or open source

modeling languages. Representative software vendors in this market include SAS, IBM (SPSS),

RapidMiner, MathWorks (MATLAB), Fuzzy Logix, Microsoft (Microsoft R Server), SAP (SAP Predictive

Analytics), Oracle (Oracle Advanced Analytics), Quest Software (Statistica), and Wolfram

(Mathematica).

Search systems include departmental, enterprise, and task-based search and discovery systems as

well as cloud-based and personal information access systems. This submarket also includes unified

information access tools and systems that combine text analytics, clustering, categorization, and

search into a comprehensive information access system. Representative software vendors in this

market include Palantir (Gotham), Google (Site Search and Cloud Search), IBM (Watson Explorer),

and Elastic (Elasticsearch).

For further details on the cognitive/AI software platforms, content analytics, advanced and predictive

analytics, and search systems, please refer to IDC's Worldwide Big Data and Analytics Software

Taxonomy, 2017 (IDC #US42353216, March 2017).

Worldwide storage for cognitive/AI workloads is a subset of the worldwide storage for big data

analytics market (see Figure 3). It draws upon most of the revenue from the "rest of the BDA" segment

(which covers cognitive/AI software platforms, content analytics tools, and advanced and predictive

analytics tools), the "nonrelational data stores" and "continuous analytic tools" segments, and a small

subset of "relational data warehouses." The storage can vary by storage model, array and infrastructure

type, and data organization. It can be consumed as nonservice or as a service.

The storage forecast for cognitive/AI workloads covers data used in the explore, training, and

inferencing phases of AI workload deployment.

For details on IDC's definition of worldwide storage for big data and analytics, refer to IDC's Worldwide

Storage for Big Data and Analytics Taxonomy, 2017 (IDC #US42555117, May 2017).

FIGURE 3

Worldwide Storage for Big Data and Analytics

Source: IDC, 2018

METHODOLOGY

The five-year annual forecast published in this study is an annual rollup of IDC's worldwide storage for

cognitive/AI workloads. This forecast covers all of the storage systems and associated storage

software.

The forecast methodology leveraged a wide variety of information sources, including:

Worldwide Storage for Big Data and Analytics Forecast, 2017–2021 (IDC #US43013117, September 2017)

IDC's Worldwide Storage for Big Data and Analytics Taxonomy, 2017 (IDC #US42555117, May 2017)

IDC models of historical IT technology adoption/diffusion

The forecast was developed by integrating this information with IDC-developed forecast assumptions

about key market growth drivers and inhibitors.

The tables and figures in this study are generated from a proprietary IDC database and analytical

tools. Our census process researches enterprise storage information on a product-, vendor-, and

geography-specific basis. IDC's methodology provides customers with a nearly unlimited ability to

analyze the enterprise storage market from many perspectives. IDC's Continuous Intelligence Services

and consulting services can provide additional insights beyond the scope of this study using the

database supporting it.

Note: All numbers in this document may not be exact due to rounding.

RELATED RESEARCH

What Type of Storage Architecture Will Be Used for On-Premises Run of AI/ML/DL Workloads? (IDC #US43587818, February 2018)

Are You Ready for Intelligent Infrastructure in Enterprise Datacenters? (IDC #DR2018_T5_RJ, February 2018)

IDC's Forecast Scenario Assumptions for the ICT Markets and Historical Market Values and Exchange Rates, Version 4, 2017 (IDC #US43531218, January 2018)

Applying Cognitive/Artificial Intelligence Techniques to Next-Generation IT (IDC #US43176317, November 2017)

Worldwide Storage for Big Data and Analytics Forecast, 2017–2021 (IDC #US43013117, September 2017)

IDC's Worldwide Storage for Big Data and Analytics Taxonomy, 2017 (IDC #US42555117, May 2017)

About IDC

International Data Corporation (IDC) is the premier global provider of market intelligence, advisory

services, and events for the information technology, telecommunications and consumer technology

markets. IDC helps IT professionals, business executives, and the investment community make

fact-based decisions on technology purchases and business strategy. More than 1,100 IDC analysts

provide global, regional, and local expertise on technology and industry opportunities and trends in

over 110 countries worldwide. For 50 years, IDC has provided strategic insights to help our clients

achieve their key business objectives. IDC is a subsidiary of IDG, the world's leading technology

media, research, and events company.

Global Headquarters

5 Speen Street

Framingham, MA 01701

USA

508.872.8200

Twitter: @IDC

idc-community.com

www.idc.com

Copyright Notice

This IDC research document was published as part of an IDC continuous intelligence service, providing written

research, analyst interactions, telebriefings, and conferences. Visit www.idc.com to learn more about IDC

subscription and consulting services. To view a list of IDC offices worldwide, visit www.idc.com/offices. Please

contact the IDC Hotline at 800.343.4952, ext. 7988 (or +1.508.988.7988) or sales@idc.com for information on

applying the price of this document toward the purchase of an IDC service or for information on additional copies

or web rights.

Copyright 2018 IDC. Reproduction is forbidden unless authorized. All rights reserved.
