
A Post-Implementation Evaluation of a Student Information System in the UK Higher Education Sector

Margaret Gemmell

University of Salford, UK

m.gemmell@salford.ac.uk

Rosane Pagano

Manchester Metropolitan University, UK

r.pagano@mmu.ac.uk

Abstract: The dramatic expansion of the higher education sector in the UK has contributed to a significant increase in competition among organizations within the sector. Based in the North of England, Salford University is one of the largest universities in the UK with regard to student numbers and programs of study. The Student Information System supports the management of student information throughout key business activities, that is, recruitment, admission, registration, invoicing, accommodation, assessment, progression, graduation and careers. The purpose of this study is to perform a user-centered post-implementation evaluation of this business-critical IT system at Salford University.

Keywords: human and organizational aspects of IT/IS; post implementation of projects.

1. Introduction users from across the organization, and their

general reluctance to adopt the system, an in-

The dramatic expansion of the higher depth evaluation of this substantial IT function

education sector in the UK has contributed to a was launched by Senior Management.

significant increase in competition among

organizations within the sector. With resources The project aim was to elicit users’ perceptions

increasing at a much lower pace, the need to and overall attitude to the effectiveness of the

harness the effectiveness of core business Student Information System. It has been

processes has brought to the fore the recognized in the literature that user

importance of continuous monitoring of IT information satisfaction significantly affects the

function through the systematic evaluation of success or failure of information systems. The

computer based systems. There is a relatively methodology used to carry out the

small number of studies reported in the investigation was based on the Whyte &

literature evaluating IS implementations in the Bytheway framework for assessing an

higher education sector. Furthermore, in view information system effectiveness. In a detailed

of the lessons learned from the research analysis of user needs, they proposed twenty-

project reported in this paper, it has become one system attributes which most influenced

apparent that, rather than a reaction to a major users’ perceptions of the overall effectiveness.

crisis – non-adoption of a business critical IT They also specified three elements relevant to

system – evaluation methods should be used the assessment of a system – the Product, the

as a norm. Making it an incremental life-long Service, and the Process (the management of

learning experience is likely to help optimize IT the project).

investments for organizations in the sector.

The Product-Service-Process grid was used to

This case study focuses on the post analyse the Student Information System. The

implementation evaluation of a business critical attributes associated with each element of the

IT system at Salford University. Based in the grid were then evaluated by the users. As part

North of England, Salford University is one of of the research method, a Gap Approach was

the largest universities in the UK with regards taken to the measurement of those system

to student numbers and programs of study. attributes (importance and performance). Data

The purpose of the system is to manage were collected through a four-part

student information throughout key business questionnaire, mostly closed questions. Open

activities, that is, recruitment, admission, questions were also included to ascertain

registration, invoicing, accommodation, users’ perceptions of the business case for

assessment, progression, graduation and developing the system in terms of achieving

careers. This is a large centralized computer organizational objectives. A comprehensive

based system that can be accessed by all evaluation of the system was then constructed

areas of the University. In response to the by examining the individual attribute scores,

strong dissatisfaction expressed by potential the free responses, and the respondents’

www.ejise.com ©Academic Conferences Limited

Electronic Journal of Information Systems Evaluation Volume 6 Issue 2 (2003) 95-106 96

profile. The sample included a wide variety of against other sources. Miller & Doyle (1984)

users across the University, who were then were able to identify: completeness, accuracy,

grouped into types. Gap analysis was flexibility and relevance of information outputs

performed on the whole sample as well as on as major success factors for users.

each of the groupings, yielding interesting

comparisons. The evaluation project was 2.1.2 The design of the front-end

conducted over a period of six months during

the year 2002. The 'front-end' of the system, consists of the

elements of the system that the users see and

One of the most compelling implications of the interact with, i.e. the forms, screens and

case study findings concerns the overall reports. A well designed 'front-end' is likely to

impact on IT project management in general result in successful use of an information

within the organization. How well the users’ system. Users evaluate the design of the 'front-

business needs are met by the Product and end' according to: its affect, efficiency,

the Service attributes is dependent upon the ‘learnability’, helpfulness and levels of Control

Process. The research outcome suggests that (Oulanov & Pajanillo, 2001).

the organization would greatly benefit from

moving to a continuous participative evaluation 2.1.3 The level of functionality

activity integrated into the project management The functionality of a system relates to how it

process. performs various processes concerned with

the business needs of the users. Clegg et al

2. User evaluation of an (1997) determined functionality and its

information system – consequential impact on business processes

Conceptual framework as one of the principle variables that influence

users reactions to a new Information System.

Projects are more likely to be successful where

users do not become disillusioned, from having 2.1.4 The quality of training

overly high expectations of a system that

cannot be met. In order for users to perceive The quality of training is vital to project

an Information System as a success, it is success. It is the main way users learn about

important for their expectations and the system, and its quality will affect not only

perceptions to be managed effectively (Clegg their successful use of the system but also

et al, 1997) (Marcella & Middleton, 1996) (Lim their attitudes towards it. Riley & Smith (1997)

& Tang, 2000). Whyte & Bytheway (1996) observed how insufficient training could

proposed a holistic approach to IS evaluation contribute to users 'resistance to change', and

by specifying three core elements to a system: Clegg et al (1997) credited training as core to

the Product, that is, hardware, software, and successful technological change.

training provided to users; the Service, that is,

how users are responded to; and the Process 2.1.5 The quality of documentation

by which the Product and Service are

User documentation of an information system

provided. How well the business needs of the

usually takes the form of training manuals and

user are met by product and service attributes

user instructions. To achieve documentation of

of the system is dependent upon the Process,

a high quality and of use to users, it should

i.e. the Management of the Project.

take a user perspective, by satisfying the

varying skill levels among users, orientating

2.1 The product and reassuring users (Nahl, 1999).

Aspects of the product which users value are

the quality information held, the design of the 2.2 The service

'front end', the level of functionality, quality of

Elements to the service provided which are

training and quality of documentation.

important to users are concerned with user

involvement, communication and response to

2.1.1 The quality of the information held their needs.

The information held in an information system

needs to be reliable and accurate, if the users 2.2.1 User involvement

are to have any faith in the system. When

Oulanov & Parjrillo (2001) emphasised the

users cease to trust the data held in a system,

importance of user participation in system

they either stop using the system and create

planning and design as significant factors in

their own smaller systems, or they spend time

their perceiving project success. Cicmill (1999)

checking the information outputs of the system

www.ejise.com ©Academic Conferences Limited

97 Margaret Gemmell & Rosane Pagano

cited a case where the non-inclusion of users subsequently used in determining a

in the requirements gathering process lead to comprehensive measurement of the users’

suspicion and lack of co-operation from users, evaluation of the Student Information System.

resulting in a system that was unsuccessful in Some of Whyte & Bytheway’s attributes were

meeting their information needs. Conversely split into several other more detailed attributes,

Middleton (2000) was able to cite cases of a few were discarded as deemed irrelevant,

comparatively successful projects, where and others were renamed to match the current

project workers and users shared the same users’ jargon. The grid of attributes used in the

office space, there by enabling a development system’s evaluation is presented in Table 1.

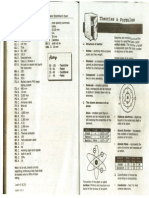

of trust and shared vision between them. Table 1: Grid of attributes used in the system’s

evaluation

2.2.2 Communication and response Attribute Attribute Description

Communication and responsiveness are Number Name

extremely important in an information 1 Business The level of support

technology project as they affect users Process to the business

processes carried

perceptions and expectations, allow them to

out in the University

keep up to date with project developments and by users.

provide an environment where their views can 2 Necessity The level of

be heard. Collyer (2000) cited the example of a requirement to use

successfully designed and implemented the system in order

business processes and IT system at to perform business

Guinness, where the IS director of the processes.

Guinness Global Support Team said 3 University The system’s ability

communication was "key" and "leadership from Strategy (1) to support the

University Strategic

top was excellent". (Middleton, 2000) observed

Plan.

successful projects, where supportive 4 University The system’s ability

atmospheres were created allowing Strategy (2) to support the

communication to flourish and users to express development of the

themselves freely. University Strategic

Plan.

2.3 The process 5 University The system’s ability

Strategy (3) to support the

The process by which a project is managed is promotion of the

vital to successful projects, regardless of University.

whether users directly perceive its affect on 6 Accuracy The quality of

themselves and the project. Management of information held on

projects is not a simple process, often the the system.

7 Constraint The control on users’

change achieved is more emergent than Control interaction to prevent

planned and although managers may have them from making

goals at the beginning of a project, the actual errors on the

outcomes are usually quite different (Boddy, system.

2000). Managers need to be aware of the 8 Effectiveness The usefulness of

issues surrounding users. When introducing the system.

new technology into a volatile environment, 9 Navigation The ease at which

there is a need for flexibility (Boddy, 2002) and users are able to

for service quality standards to be set (Pitt et search the various

system forms in

al, 1998). Whittaker (1999) reported how poor order to find the

project planning, especially a weak project information they

plan and inadequate risk management, caused require.

project failure. 10 Ease of Use The simplicity and

learnability of the

2.4 Attributes related to product- system.

11 Transparency The different

service-process

components of the

Whyte & Bytheway (1996) were able to carry system are

out a more detailed analysis of User Needs integrated,

and to determine a set of attributes related to appearing as one.

12 Communication The publicizing and

the Product, the Service and the Process

explanation of the

discussed previously. In this study, Whyte & system to users.

Bytheway’s grid of attributes was adapted and

www.ejise.com ©Academic Conferences Limited

Electronic Journal of Information Systems Evaluation Volume 6 Issue 2 (2003) 95-106 98

Attribute Attribute Description information system (Remenyi et al, 2003). With

Number Name gap analysis, when there is a positive gap

13 Reporting (1) The availability of performance has exceeded the perceived

reports provided by importance of the individual elements of the

the system. system, and it could be argued that there has

14 Reporting (2) The relevance of been a waste of resources. When there is a

reports provided by

the system.

negative gap, the performance of the different

15 Training The availability of elements of the system do not meet the

comprehensive and perceived needs of the users and, therefore,

informative training. can be identified as areas for improvement and

16 System The upkeep and development. For these reasons it is a reliable

Maintenance regular upgrading of method of assessment of an Information

the system. System, as it is able to identify the areas where

17 User The involvement in the system is failing (Remenyi et al, 2003).

Involvement the planning and

implementation of

the system.

Pitt et al (1998) argued that managers could

18 Skilled Project The possession of gain much from adopting a service gap

Staff the necessary skills approach in the management of user

to perform tasks information satisfaction. Wisniewski (2001)

involving planning reported how Parasuraman et al (1985, 1986,

and implementation 1988, 1991, 1993, 1994) were the forerunners

of the system, by the in the development of Gap Analysis with their

project team staff. SERVQUAL model. This uses twenty-two

19 Project The control and statements to assess service quality by using

Management planning of the

information project.

each statement twice, first to measure

20 Training The quality of expectations and second to measure

Manuals documentation perception. Parasuman et al claimed that

provided to the users SERVQUAL the information gained from the

of the system. service gaps could help managers decide

21 Focus The direction of the where performance improvements need to be

project team with targeted.

regards to the

design, development In this study, twenty-four statements were

and implementation

of the system.

employed to assess users’ information

22 Reliable The provision of a satisfaction. Each statement was used twice,

Services dependable service first to capture users’ ratings of importance,

which users are able and second, to measure users’ ratings of

to rely upon. performance. The system’s attributes were

23 Response The rapidness of thus rated in importance and in performance,

response to users’ the gap (difference) then being computed. The

problems and needs terms ‘importance’ and ‘performance’ were

regarding the used in the data collection, rather than the

system, by the

project team.

terms ‘expectation’ and ‘perception’. This is

24 Understanding The appreciation of because, as it was felt, the terms ‘expectation’

users’ problems and and ‘perception’ are not specific enough for

needs regarding the conveying the users’ evaluation of the system.

system, by the

project team. 3. Data collection

Attributes 1-5 are associated to the Process,

The data was collected through a

attributes 6-16 are associated to the Product,

questionnaire. It was initially piloted to a small

and attributes 17-24 are associated to the

group of users before being distributed for data

Service.

collection. In total 84 users responded to the

questionnaires. The final version of the

User information satisfaction can be measured

questionnaire had four sections. Section A

by comparing the level of support users expect

presented twenty-four statements, each one

from a system against their perceptions of the

related to a system attribute. Users were asked

actual level of performance of the system, that

to rate the importance of each system attribute

is, analyzing the distance between the two

on a five-point scale. In Section B, which

(gap analysis). It is a holistic approach as it

presents the same twenty-four statements,

considers the various elements of the

users were asked to rate the performance of

www.ejise.com ©Academic Conferences Limited

99 Margaret Gemmell & Rosane Pagano

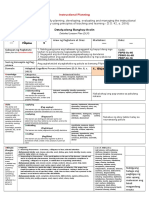

each system attribute on a five-point scale. The scales are described in Table 2.

Table 2: Scale for evaluating the system’s attributes

| Scale-Point | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Importance | Irrelevant | Not Important | Don’t Know | Important | Critical |
| Performance | Very Poor | Poor | Average | Good | Excellent |
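The scales in Table 2 are used later in the paper to read mean scores, for example a mean Importance score of 3.6 is read as lying between 'Don't Know' and 'Important'. The following is a minimal sketch of that reading. The helper `interpret_mean` is hypothetical and is not part of the study; it only maps a mean score to the neighbouring scale labels.

```python
import math

# Scale labels taken from Table 2 (index 0 corresponds to scale-point 1).
IMPORTANCE = ["Irrelevant", "Not Important", "Don't Know", "Important", "Critical"]
PERFORMANCE = ["Very Poor", "Poor", "Average", "Good", "Excellent"]


def interpret_mean(score, labels):
    """Return the label(s) that a mean score on a 1-5 scale falls on or between.

    Hypothetical helper for illustration only.
    """
    if not 1 <= score <= len(labels):
        raise ValueError("score must lie on the 1-5 scale")
    lower = math.floor(score)
    upper = math.ceil(score)
    if lower == upper:  # the mean sits exactly on a scale point
        return (labels[lower - 1],)
    return (labels[lower - 1], labels[upper - 1])


print(interpret_mean(3.6, IMPORTANCE))
print(interpret_mean(2.3, PERFORMANCE))
```

A score that falls exactly on a scale point returns a single label, so the same helper covers both individual ratings and averaged ones.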

In Section C, users were asked to rank the overall performance of the system on a five-point scale (the performance scale). They were also given the opportunity to add their own personal comments in response to an open question. In Section D, users were asked to indicate their profile in terms of position at the University, division of affiliation, number of years of computer experience, frequency and range of use of the system. Table 3 and Table 4 summarize the users' profile.

Table 3: Users Profile

| Profile dimension | Category | Proportion of respondents |
|---|---|---|
| Position | Managers | 35% |
| Position | Intermediate | 39% |
| Position | Clerical | 26% |
| Division | School | 51% |
| Division | Faculty | 14% |
| Division | Central | 35% |
| Frequency of Use | Daily | 62% |
| Frequency of Use | Weekly | 19% |
| Frequency of Use | Monthly | 19% |
| Years of Computer Experience | 0-10 | 60% |
| Years of Computer Experience | 11-20 | 35% |
| Years of Computer Experience | 21-30 | 5% |

Table 4: Range of system use across functional areas

| Number of functional areas | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Proportion of respondents | 20% | 28% | 29% | 10% | 13% |

Finally, also in Section D, users were asked to answer two open questions. The first question was to ascertain users' thoughts on why the Student Information System was being implemented. The second question was to investigate the overall reaction to the system. Questions were deliberately broad in scope (“What do you think the Student Information System is?”) to encourage a free and indirect response.

A framework based on Grounded Theory (Glaser, 1967) was used to analyse the rich data collected from the open question in Section C on the users' overall opinion of the system. References to the individual attributes were given text and number labels against the transcripts of the question answers and coded according to whether they were positive or negative statements. The frequencies of the negative and positive codes were then calculated to give an indication of the attitudes users had towards the different elements of the system and which they were most concerned about. Themes relating to the users' positive or negative answers were then coded and grouped against the attribute statements according to whether they were a cause of or result of the users' negative or positive answer. The frequencies of these codes were then calculated as an indicator of the prevailing causes of and results of the user opinion.

The data on the users’ understanding of the system was collected through two open questions in Section D. A framework based upon Grounded Theory was again used to analyse this data. Themes were established by breaking the data down into its component parts and labeling the emerging issues against the transcripts of the question answers. The emerging themes were then examined for their relationships and groupings. The text labels were then given number codes and the frequency of the codes calculated to give an indication of the themes dominantly occurring.

4. Data analysis

Table 5 shows the mean score of importance for each attribute, the mean score of performance for each attribute, and the resulting gap between the two. The standard deviation around the mean was computed as an indicative measure of the users' consensus on a particular score. The relative rank of each attribute score on importance and performance was also included in the table, as an aid to assess the gap.

The mean Importance score for all attributes was 3.6, which is interpreted as between 'Don't Know' and 'Important' on the Importance scale. The mean Performance score for all attributes was 2.3, which is interpreted as between 'Poor' and 'Average' on the Performance scale. This evaluation did not meet the level of consensus among users that was expected, but was relatively convergent in the light of the users’ profile. The standard deviation ranged between 1.3 and 1.8 on Importance scores, and between 1.3 and 1.6 (with one exception computed at 5) on Performance scores, which indicates a variation of two points on the scale of five used to evaluate the system’s attributes. A very high standard deviation of 5 was found for the ‘User Involvement’ attribute score. This lack of consensus, when analyzed in the light of the rich data (open questions), pointed to the need to manage users’ expectations during the project.

Table 5: Attribute Importance Mean and Attribute Performance Mean for all respondents

| Attribute Number | Importance Rank | Importance Mean | Importance SD | Performance Rank | Performance Mean | Performance SD | Gap |
|---|---|---|---|---|---|---|---|
| 1 | 16 | 3.6512 | 1.6768 | 15 | 2.2674 | 1.3573 | -1.3837 |
| 2 | 8 | 3.7558 | 1.7253 | 13 | 2.2941 | 1.4024 | -1.4617 |
| 3 | 21 | 3.2558 | 1.5069 | 22 | 1.9294 | 1.4559 | -1.3264 |
| 4 | 22 | 3.1860 | 1.5158 | 24 | 1.7765 | 1.4155 | -1.4096 |
| 5 | 24 | 2.7791 | 1.3921 | 23 | 1.7976 | 1.4364 | -0.9815 |
| 6 | 1 | 4.0233 | 1.7213 | 18 | 2.1412 | 1.3152 | -1.8821 |
| 7 | 2 | 3.9884 | 1.6988 | 21 | 1.9412 | 1.2361 | -2.0472 |
| 8 | 6 | 3.8372 | 1.8151 | 19 | 2.0706 | 1.2223 | -1.7666 |
| 9 | 12 | 3.6977 | 1.6760 | 20 | 2.0465 | 1.2342 | -1.6512 |
| 10 | 15 | 3.6628 | 1.6640 | 17 | 2.1512 | 1.3344 | -1.5116 |
| 11 | 23 | 2.9767 | 1.6387 | 12 | 2.3095 | 1.6527 | -0.6672 |
| 12 | 19 | 3.5349 | 1.6445 | 2 | 2.8488 | 1.4267 | -0.6860 |
| 13 | 9 | 3.7442 | 1.7552 | 14 | 2.2857 | 1.4405 | -1.4585 |
| 14 | 17 | 3.6163 | 1.7221 | 15 | 2.2674 | 1.4028 | -1.3488 |
| 15 | 3 | 3.8837 | 1.7333 | 5 | 2.7558 | 1.5460 | -1.1279 |
| 16 | 14 | 3.6744 | 1.6669 | 7 | 2.6941 | 1.5417 | -0.9803 |
| 17 | 18 | 3.5698 | 1.6591 | 4 | 2.7857 | 5.0003 | -0.7841 |
| 18 | 3 | 3.8837 | 1.7102 | 9 | 2.5238 | 1.5169 | -1.3599 |
| 19 | 5 | 3.8488 | 1.7375 | 10 | 2.5176 | 1.4844 | -1.3312 |
| 20 | 13 | 3.6860 | 1.7042 | 1 | 3.1395 | 1.5135 | -0.5465 |
| 21 | 20 | 3.4651 | 1.6138 | 8 | 2.6667 | 1.6190 | -0.7984 |
| 22 | 11 | 3.7326 | 1.6777 | 6 | 2.7326 | 1.4737 | -1.0000 |
| 23 | 9 | 3.7442 | 1.6431 | 3 | 2.8235 | 1.5515 | -0.9207 |
| 24 | 7 | 3.7907 | 1.7400 | 11 | 2.3488 | 1.4006 | -1.4419 |
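The Gap column of Table 5 can be recomputed from the two mean columns as performance minus importance. The sketch below is illustrative only: the values are copied from Table 5, and the names `TABLE_5` and `gap` are assumptions introduced for this example rather than anything used in the study.

```python
# (importance mean, performance mean, reported gap) per attribute, from Table 5.
TABLE_5 = {
    1: (3.6512, 2.2674, -1.3837),
    2: (3.7558, 2.2941, -1.4617),
    3: (3.2558, 1.9294, -1.3264),
    4: (3.1860, 1.7765, -1.4096),
    5: (2.7791, 1.7976, -0.9815),
    6: (4.0233, 2.1412, -1.8821),
    7: (3.9884, 1.9412, -2.0472),
    8: (3.8372, 2.0706, -1.7666),
    9: (3.6977, 2.0465, -1.6512),
    10: (3.6628, 2.1512, -1.5116),
    11: (2.9767, 2.3095, -0.6672),
    12: (3.5349, 2.8488, -0.6860),
    13: (3.7442, 2.2857, -1.4585),
    14: (3.6163, 2.2674, -1.3488),
    15: (3.8837, 2.7558, -1.1279),
    16: (3.6744, 2.6941, -0.9803),
    17: (3.5698, 2.7857, -0.7841),
    18: (3.8837, 2.5238, -1.3599),
    19: (3.8488, 2.5176, -1.3312),
    20: (3.6860, 3.1395, -0.5465),
    21: (3.4651, 2.6667, -0.7984),
    22: (3.7326, 2.7326, -1.0000),
    23: (3.7442, 2.8235, -0.9207),
    24: (3.7907, 2.3488, -1.4419),
}


def gap(importance, performance):
    """Gap as used in Table 5: negative when performance falls short of importance."""
    return performance - importance


recomputed = {n: gap(imp, perf) for n, (imp, perf, _) in TABLE_5.items()}

# Largest disagreement between the recomputed and the reported gaps.
max_diff = max(abs(recomputed[n] - TABLE_5[n][2]) for n in TABLE_5)

mean_importance = sum(imp for imp, _, _ in TABLE_5.values()) / len(TABLE_5)
mean_gap = sum(recomputed.values()) / len(recomputed)

print(f"largest difference from reported gaps: {max_diff:.4f}")
print(f"mean importance over all attributes: {mean_importance:.1f}")
print(f"mean gap over all attributes: {mean_gap:.1f}")
```

Any small differences between recomputed and reported gaps are presumably due to the table values having been rounded to four decimal places before publication.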

The mean Gap between the Importance scores and the Performance scores for all attributes was negative, at -1.2. This indicates that users have an overall negative perception towards the implementation of the Student Information System. When asked to rate the system’s performance overall (no attribute discrimination), users’ mean score was 2.2, which is ‘Poor’ on the Performance scale. This result corroborates the mean Performance score mentioned above, which was obtained by discriminating the attributes.

Graph 1 shows the gap between importance and performance for each attribute, as per Table 5. It can be seen that, overall, users have negative perceptions about the implementation of the Student Information System.


[Chart titled ‘Results Overall’: Rating (y-axis, 0.0 to 4.5) against Attributes 1 to 24 (x-axis); series: Importance, Importance Mean, Performance, Performance Mean.]

Graph 1: Gap between Attribute Importance Mean and Attribute Performance Mean
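The gaps plotted in Graph 1 can also be ranked directly from the figures reported in Table 5, using the attribute names of Table 1. The sketch below is illustrative only. The helper `attributes_in_band` is hypothetical, and applying the bands to gaps rounded to one decimal place is an assumption about how the bands quoted in the text were read.

```python
# Attribute names (Table 1) mapped to the reported gaps (Table 5).
GAPS = {
    "Business Process": -1.3837,
    "Necessity": -1.4617,
    "University Strategy (1)": -1.3264,
    "University Strategy (2)": -1.4096,
    "University Strategy (3)": -0.9815,
    "Accuracy": -1.8821,
    "Constraint Control": -2.0472,
    "Effectiveness": -1.7666,
    "Navigation": -1.6512,
    "Ease of Use": -1.5116,
    "Transparency": -0.6672,
    "Communication": -0.6860,
    "Reporting (1)": -1.4585,
    "Reporting (2)": -1.3488,
    "Training": -1.1279,
    "System Maintenance": -0.9803,
    "User Involvement": -0.7841,
    "Skilled Project Staff": -1.3599,
    "Project Management": -1.3312,
    "Training Manuals": -0.5465,
    "Focus": -0.7984,
    "Reliable Services": -1.0000,
    "Response": -0.9207,
    "Understanding": -1.4419,
}


def attributes_in_band(gaps, low, high):
    """Return names whose gap, rounded to one decimal place, lies in [low, high].

    Names are ordered from the most negative gap to the least negative.
    Hypothetical helper for illustration only.
    """
    selected = [(value, name) for name, value in gaps.items()
                if low <= round(value, 1) <= high]
    return [name for value, name in sorted(selected)]


largest_gaps = attributes_in_band(GAPS, -2.0, -1.6)
smallest_gaps = attributes_in_band(GAPS, -0.8, -0.4)

print("largest gaps:", largest_gaps)
print("smallest gaps:", smallest_gaps)
```

Sorting by the unrounded gap keeps the ordering stable even where two attributes round to the same one-decimal value.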

The range of mean Importance scores was between 2.8 and 4. The highest scores – between 3.8 and 4.2, and interpreted as 'Important' on the Importance scale – were for the attributes ‘Accuracy’, ‘Constraint Controls’, ‘Effectiveness’, ‘Project Management’, ‘Skilled Project Staff’, and ‘Training’. The lowest scores – between 2.8 and 3.2, and interpreted as ‘Don’t Know’ on the Importance scale – were for the attributes ‘University Strategy (2, 3)’ and ‘Transparency’.

The range of mean Performance scores was between 1.8 and 3.1. The highest scores – between 2.8 and 3.2, and interpreted as ‘Average’ on the Performance scale – were for the attributes ‘Training Manuals’, ‘Communication’ and ‘Response’. The lowest scores – between 1.8 and 2.2, and interpreted as ‘Poor’ on the Performance scale – were for the attributes ‘Accuracy’, ‘Constraint Controls’, ‘Effectiveness’, ‘University Strategy (1-3)’, ‘Navigation’, and ‘Ease of Use’. This places one third of the attributes at the lowest end of the performance evaluation.

The gap between Importance and Performance scores ranged from -2.0 to -0.5. The smallest gaps (in absolute value), between -0.8 and -0.4, were for the attributes ‘Focus’, ‘Transparency’, ‘Training Manuals’, ‘Communication’, and ‘User Involvement’. This seems to indicate a marginal dissatisfaction regarding these attributes (negative values). The notable exception is the ‘User Involvement’ attribute, for which free answers to the questionnaire suggested otherwise. The largest gaps (in absolute value), between -2.0 and -1.6, were for the attributes ‘Accuracy’, ‘Constraint Controls’, ‘Effectiveness’ and ‘Navigation’, suggesting that these are the areas of the system that users are most dissatisfied with.

An analysis similar to the one performed above for the whole sample was also performed for all the user groupings (see Table 3), yielding interesting comparisons. Due to the word limit, these were not included in this text. An extensive research report, including the detailed questionnaire, can be obtained from the first author on request.

Another way of viewing and exploring the data set was to focus on a particular attribute and to examine its Importance and Performance ratings across various user groupings. Take for example the attribute ‘Accuracy’, which is related to the quality of the information held on the system. For this attribute, regarding perceived Performance, Graph 2 shows at a glance what can be interpreted as:

- the range of user perceptions in each grouping, indicated by the maximum and minimum scores linked by a vertical line;
- the point of convergence of perceptions in each grouping, indicated by the mean score (black dot on the range line).

Table 6 displays the data values that underlie Graph 2. It also includes additional data helpful to understand this scenario, that is, the standard deviation (SD) of the performance scores in each grouping. The concentration of scores around the mean can be interpreted as indicative of the level of consensus among users of the same grouping regarding perceived performance. By inspecting the ‘SD’ column of Table 6 in conjunction with Graph 2, it can be concluded that, in the majority of cases, ‘Accuracy’ performance scores are between ‘Poor’ and ‘Average’. The extreme values (5 and 1) observed in the case of the ‘Clerical’, ‘Daily’ and ‘School’ groupings seem to be a few isolated responses.

Table 6: Performance ratings per user groupings for the ‘Accuracy’ attribute

| No. | User grouping | Max | Min | Mean | SD |
|---|---|---|---|---|---|
| 1 | Clerical | 5.0 | 1.0 | 2.8 | 1.1 |
| 2 | Intermediate | 4.0 | 1.0 | 2.4 | 1.2 |
| 3 | Managers | 4.0 | 1.0 | 2.3 | 0.9 |
| 4 | Daily | 5.0 | 1.0 | 2.5 | 1.1 |
| 5 | Weekly | 4.0 | 1.0 | 2.1 | 1.1 |
| 6 | Monthly | 4.0 | 1.0 | 2.6 | 1.3 |
| 7 | Central | 4.0 | 1.0 | 2.2 | 1.0 |
| 8 | Faculty | 4.0 | 1.0 | 2.5 | 1.2 |
| 9 | School | 5.0 | 1.0 | 2.6 | 1.3 |

[Chart titled ‘Accuracy’: Performance Rating (y-axis, 0.0 to 6.0) against User Groupings 1 to 9 (x-axis).]

Graph 2: Performance ratings per user groupings for the ‘Accuracy’ attribute
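Because Graph 2 is built from the Max, Min and Mean columns of Table 6, a rough text rendering can be produced from those values alone. The sketch below is an assumption-laden illustration: `range_line` is a hypothetical helper, and the ranges are drawn horizontally here rather than as the vertical lines of the original graph.

```python
# (grouping, max, min, mean, sd) rows copied from Table 6.
TABLE_6 = [
    ("Clerical", 5.0, 1.0, 2.8, 1.1),
    ("Intermediate", 4.0, 1.0, 2.4, 1.2),
    ("Managers", 4.0, 1.0, 2.3, 0.9),
    ("Daily", 5.0, 1.0, 2.5, 1.1),
    ("Weekly", 4.0, 1.0, 2.1, 1.1),
    ("Monthly", 4.0, 1.0, 2.6, 1.3),
    ("Central", 4.0, 1.0, 2.2, 1.0),
    ("Faculty", 4.0, 1.0, 2.5, 1.2),
    ("School", 5.0, 1.0, 2.6, 1.3),
]


def range_line(low, high, mean, width=51, scale_max=5.0):
    """Draw one grouping as text: '-' spans min to max, '*' marks the mean.

    Column 0 corresponds to a rating of 0 and the last column to scale_max.
    Hypothetical helper for illustration only.
    """
    def column(value):
        return round(value / scale_max * (width - 1))

    cells = [" "] * width
    for position in range(column(low), column(high) + 1):
        cells[position] = "-"
    cells[column(mean)] = "*"
    return "".join(cells)


for name, high, low, mean, sd in TABLE_6:
    print(f"{name:<13}{range_line(low, high, mean)}  mean={mean} sd={sd}")
```

Each printed row corresponds to one user grouping, so the wider ranges of the ‘Clerical’, ‘Daily’ and ‘School’ groupings stand out in the same way as the longer lines in the original graph.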

[Chart titled ‘Managers’: Performance Rating (y-axis, 0.0 to 5.0) against Attributes (x-axis).]

Graph 3: Performance ratings per attribute for the ‘Managers’ user group


Using the same method as above to summarize the data set, the focus was then placed on a particular user grouping, and its Importance and Performance ratings across all attributes (see Table 1 for attribute descriptions) were plotted for comparison. Consider for example the user group ‘Managers’. Graph 3 shows a remarkably similar pattern of perceptions in this group across attributes. The range of scores is wide and falls within the same boundaries for all attributes but three. More important, the standard deviation around the mean score for the vast majority of attributes was smaller (less than 1) in this group than in any other user group. This indicates a higher level of consensus on the performance score of each particular attribute (mean score). Attribute mean scores vary between ‘Poor’ (score 2) and ‘Average’ (score 3) as seen in Graph 3. The data collected suggests that there is an agreed perception within the ‘Managers’ grouping that attributes more directly dependent upon project management are below average standards, for example, ‘User Involvement’ (Mean 2.2, SD 0.9) and ‘Understanding User Needs’ (Mean 2.4, SD 0.9).

The low performance ratings of the Navigation and Ease of Use attributes of the system show that many users find the system difficult to use. The more practiced, and therefore more skilled, regular users rated the performance of these attributes higher than non-regular users, indicating that the less skilled the user, the less likely they are to give system usability a high evaluation. Marcella & Middleton (1996) observed that users will only access available training and education on a 'need to know' basis; this study likewise revealed a predominance of infrequent consultation of Training Manuals and Help Screens amongst the users. Table 7 illustrates this point.

Table 7: User consultation of information on the system provided by the project team

| Information source | Daily | Weekly | Monthly | Rarely | Never |
|---|---|---|---|---|---|
| Training Manuals | 12% | 22% | 26% | 36% | 4% |
| Reporting Help Screen | 1% | 3% | 6% | 46% | 44% |
| System’s Intranet Site | 0% | 1% | 3% | 27% | 69% |
| E-mail Correspondence | 10% | 12% | 9% | 49% | 20% |
| System’s News Letter | 0% | 1% | 9% | 53% | 37% |
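The point made by Table 7 can be summarized by adding the ‘Rarely’ and ‘Never’ columns for each information source. The sketch below is illustrative only: the percentages are copied from Table 7, and `infrequent_share` is a hypothetical helper, not a measure defined in the study.

```python
# Percentage of respondents per consultation frequency, copied from Table 7.
FREQUENCIES = ("Daily", "Weekly", "Monthly", "Rarely", "Never")
TABLE_7 = {
    "Training Manuals": (12, 22, 26, 36, 4),
    "Reporting Help Screen": (1, 3, 6, 46, 44),
    "System's Intranet Site": (0, 1, 3, 27, 69),
    "E-mail Correspondence": (10, 12, 9, 49, 20),
    "System's News Letter": (0, 1, 9, 53, 37),
}


def infrequent_share(row):
    """Percentage of respondents answering 'Rarely' or 'Never'.

    Hypothetical helper for illustration only.
    """
    rarely = row[FREQUENCIES.index("Rarely")]
    never = row[FREQUENCIES.index("Never")]
    return rarely + never


shares = {source: infrequent_share(row) for source, row in TABLE_7.items()}

for source, share in shares.items():
    print(f"{source:<24}{share}% rarely or never consulted")
```

Each row of Table 7 sums to 100%, so the combined share can be compared directly across the five information sources.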

The medium-high importance ratings of Navigation and Ease of Use amongst users, particularly non-regular users, support the findings of Kebede (2002) that users wish to access information within the constraints of their skills. The difficulties that users had in operating the system seemed to be the cause of many of the negative feelings they had about the Student Information System. As observed by Riley & Smith (1997), users may see a new system as threatening if it requires new skills from them. The findings support Kebede’s (2002) view that user skills should be taken into consideration when implementing new information technology.

The strong references to the continued use of internal departmental systems in the rich data (answers to open questions) indicate that ‘resistance to change’ is present amongst users, due to their reluctance to replace these internal systems with the Student Information System. As already identified, the mistrust of the data quality within the system is the primary reason for this resistance to change. This continued mistrust and dependency on internal systems, however, means the Student Information System is underutilised, as users do not run the system to its full capacity because they do not see the value in the system being able to support Business Processes and University Strategy. As Riley & Smith (1997) pointed out, a lack of 'felt need' for change can result in many of a system's attributes being undervalued and thereby affect the success of the project. It is vital, therefore, that the issue of data quality is resolved, so that user trust and utilisation of the system can be won.

Marcella & Middleton (1996), Clegg et al (1997) and Lim & Tang (2000) all commented upon the importance of managing user expectations, as reproduced in the rich data of this study, with emerging themes of users being 'promised great things' but actually finding the system problematic, inconvenient and a general disappointment. There was also a significant reference made to the legacy system being superior, despite it having less functionality, indicating that users have preconceived expectations of how a system should look and function, based upon their experiences of the previous system. However, there were some optimistic statements made about the future of the new system. It is important that these higher expectations are built upon and managed effectively.


5. Conclusion

The primary concern must be the improvement of data quality (‘Accuracy’) within the Student Information System. The ‘Accuracy’ attribute was rated high in importance and low in performance by users, which accounts for this attribute’s large negative gap (see section 4. Data Analysis). The rich data also supported this result. Without improvement, and without users perceiving that improvement, they will continue to mistrust and under-utilise the system, thereby hampering the development of the system as a whole. For these data quality issues to be resolved, as well as a cleaning of the data, it is necessary to discover the causes of data errors, so their future existence can be minimised.

A deeper understanding of users’ needs is required by the project team. For this to be achieved, there needs to be a mechanism for continuously monitoring and gathering users’ requirements, in synergy with their evolving business scene, so that the system can effectively support their business processes. What is proposed is that such a mechanism be consolidated through Active Benefit Realization (ABR). ABR, described in Remenyi et al (2003), is the process for setting up evaluation as part of IT Management. This involves the integration of a continuous participative evaluation activity into the project management function.

Improvements in communication between the users and the project team need to be made so that it becomes a two-way process. Primarily, users need to be encouraged to consult frequently the information provided by the project team, to ensure they are kept up to date with current issues and new developments. Secondly, users need to be given incentives to communicate their wants, requirements and general misgivings about the system to the project team. This could be achieved by providing them with a specific vehicle for feedback, such as a web site notice board or a specified email address.

The difficulties users have experienced with using the system indicate that an assessment of the user skill base is required. More importantly, this skill base should be taken into consideration when implementing new functionalities of the system. As it is likely that the project will continue to undergo difficulties, it would be prudent of the project team to acknowledge this to the users, so that they have realistic expectations of future developments and improvements to the system.

The lack of understanding amongst users of the benefits the system could bring to supporting the University’s business strategy indicates a need for staff development in this area. First, the internal promotion of the business strategy itself should be carried out, so that staff are made aware of how the University’s strategic developments will benefit individual departments, for example, in marketing, planning, and customer-based focus. Second, staff, as users, should be made more involved in the system implementation in order to better understand how the Student Information System can effectively support the developments in University business strategy.

Since the data collection process was undertaken, a more continuous participative evaluation approach has been adopted, as described in Remenyi et al (2003). A more methodological approach has been taken to project planning and process development with the aim of improving user involvement, data quality, business strategy support and understanding of user needs, through the creation of Business Analyst roles, user councils and working parties and the documentation of objectives and processes. Positive feedback from users has shown evidence of these initiatives bringing about an improvement in user confidence in the Student Information System.

References

Boddy, D. (2000) “Implementing interorganizational IT systems: lessons from a call center project”, Journal of Information Technology, Vol 15, pp 29-37, The Association for Information Technology Trust.

Cicmil, S. (1999) “An insight into management of organizational change projects”, Journal of Workplace Learning, Vol 11, No. 1, pp 5-15, MCB University Press.

Clegg, C., Carey, N., Dean, G., Hornby, P. & Bolden, R. (1997) “Users’ Interaction to Information Technology: some multivariate models and their implications”, Journal of Information Technology, Vol 12, pp 15-32, MCB University Press.

Collyer, M. (2000) “Communication – the route to successful change management: lessons from the Guinness Integrated Business Program”, Supply Chain Management: an International Journal, Vol 5, No. 5, pp 222-225, MCB University Press.

Glaser, G. & Strauss, L. (1967) The Discovery of Grounded Theory: Strategies for Qualitative Research, Aldine de Gruyter, New York.

Kebede, G. (2002) “The changing information needs of users in electronic information environments”, The Electronic Library, Vol 20, No. 1, pp 14-21, MCB University Press.

Lim, P. & Tang, N. (2000) “A study of patients’ expectations and satisfaction in Singapore hospitals”, International Journal of Health Care Quality Assurance, Vol 13, No. 7, pp 290-299, MCB University Press.

Marcella, R. & Middleton, I. (1996) “The role of the Help Desk in the Strategic Management of Information Systems”, OCLC Systems & Services, Vol 12, No. 4, pp 4-19, MCB University Press.

Middleton, P. (2000) “Barriers to the efficient and effective use of information technology”, The International Journal of Public Sector Management, Vol 13, No. 1, pp 85-99, MCB University Press.

Miller, J. & Doyle, B. (1984) “Measuring the Effectiveness of Computer-Based Information Systems”, MIS Quarterly, March, pp 17-25, MCB University Press.

Nahl, D. (1999) “Creating user-centred instructions for novice end-users”, Reference Services Review, Vol 27, No. 3, pp 280-286, MCB University Press.

Oulanov, A. & Pajarillo, E. (2001) “Usability Evaluation of the City University of New York CUNY + database”, The Electronic Library, Vol 19, No. 2, pp 84-91, MCB University Press.

Pitt, L., Berthon, P. & Lane, N. (1998) “Gaps within the IS department: barriers to service quality”, Journal of Information Technology, Vol 13, pp 191-200, MCB University Press.

Remenyi, D., Money, A., Sherwood-Smith, M. & Irani, Z. (2003) The effective measurement and management of IT costs and benefits, Butterworth-Heinemann, Oxford.

Riley, L. & Smith, G. (1997) “Developing and implementing IS: a case study analysis in social services”, Journal of Information Technology, Vol 12, pp 305-321, MCB University Press.

Whittaker, B. (1999) “What went wrong? Unsuccessful Information Technology Projects”, Information Management and Computer Security, Vol 7, Issue 1, pp 23-29, MCB University Press.

Whyte, G. & Bytheway, A. (1996) “Factors affecting information systems success”, International Journal of Service Industry Management, Vol 7, Issue 1, pp 74-93, MCB University Press.

Wisniewski, M. (2001) “Using SERVQUAL to assess customer satisfaction with public sector services”, Managing Service Quality, Vol 11, No. 6, pp 380-388, MCB University Press.
