
Applied Mathematics in Electrical and Computer Engineering

Comparing Image Classification Methods:

K-Nearest-Neighbor and Support-Vector-Machines

JINHO KIM¹, BYUNG-SOO KIM², SILVIO SAVARESE³

Okemos High School, 2800 Jolly Road, Okemos, MI 48864

Department of Electrical Engineering and Computer Science, University of Michigan, Ann Arbor, MI 48109-2122

¹jcykim2008@gmail.com, ²bsookim@umich.edu, ³silvio@eecs.umich.edu

Abstract: - In order for a robot or a computer to perform tasks, it must recognize what it is looking at. Given an image, a computer must be able to classify what the image represents. While this is a fairly simple task for humans, it is not an easy task for computers, which must go through a series of steps in order to classify a single image. In this paper, we use a general Bag of Words model to compare two classification methods, K-Nearest-Neighbor (KNN) and Support-Vector-Machine (SVM) classification, both of which are well known and widely used. We observed that the SVM classifier outperformed the KNN classifier. For future work, we hope to use more categories for the objects and to use more sophisticated classifiers.

Key-Words: - Bag of Words Model, SIFT (Scale Invariant Feature Transform), k-means clustering, KNN (k-nearest-neighbor), SVM (Support Vector Machine)

1 Introduction

The human ability to analyze and classify objects and scenes rapidly and accurately is something that everybody finds highly useful in everyday life. Thorpe and his colleagues found that humans are able to categorize complex natural scenes very quickly [1]. In order to understand a complex scene, the first step is to recognize the objects and then recognize the category of the scene [2]. In order to do this in computer vision, we use various classifiers that all have different characteristics and features.

In the past, many classifiers have been developed by various researchers. These methods include the naïve Bayes classifier, support vector machines, k-nearest neighbors, Gaussian mixture models, decision trees and radial basis function (RBF) classifiers [3,4]. These classifiers are used in algorithms that involve object recognition. However, object recognition is challenging for several reasons. The first and most obvious reason is that there are about 10,000 to 30,000 different object categories. The second reason is viewpoint variation, where many objects can look different from different angles. The third reason is illumination, in which lighting makes the same objects look like different objects. The fourth reason is background clutter, in which the classifier cannot distinguish the object from its background. Other challenges include scale, deformation, occlusion, and intra-class variation. Applications for classification in computer vision include computational photography, security, surveillance, and assistive driving.

Fig. 1 A conceptual illustration of the process of image classification.

A typical classification method using the bag of words model consists of four steps, as shown in Fig. 1. In short, the bag of words model creates histograms of images, which are used for classification.

ISBN: 978-1-61804-064-0 133


In this paper, we compare two classification methods. Experimental evaluation is conducted on the Caltech-4-Cropped dataset [5] to see the difference between the two methods. In Section 2, we outline our bag of words model. In Section 3, we explain the two classification methods we used: KNN and SVM.

2 Image Representation - Bag of Words Model

One of the most general and frequently used algorithms for category recognition is the bag of words (BoW) model, also known as the bag of features or bag of keypoints model [6, 7]. This algorithm generates a histogram, which is the distribution of visual words found in the test image, and then classifiers classify the image based on each classifier's characteristics. The KNN classifier compares this histogram to those already generated from the training images. In contrast, the SVM classifier uses the histogram from a test image and a learned model from the training set to predict a class for the test image. The purpose of the BoW model is representation, which covers feature detection and image representation. Features must be extracted from images in order to represent the images as histograms.

Section 2.1 deals with the feature extraction process of the BoW model. We extracted features using SIFT [8]. Section 2.2 deals with clustering the features extracted in Section 2.1 by k-means clustering. Section 2.3 deals with the histogram computation process.

2.1 Scale-Invariant Feature Transform

The first step for our two classification methods is to extract various features the computer can see in an image. For any object in an image, there are certain features or characteristics that can be extracted and that define what the image is. Features are detected and each image is represented as a set of patches. In order to represent these patches as numerical vectors, we used SIFT descriptors to convert each patch into a 128-dimensional vector. To perform reliable recognition, it is important that the features extracted from the training image be detectable even under changes in image scale, noise and illumination, which is why we used SIFT descriptors. After converting each patch into a numerical vector, each image is a collection of 128-dimensional vectors. Fig. 2 shows SIFT descriptors at work on one of our images of airplanes. The SIFT algorithm we used was from the VLFEAT library [9], which is an open source library for popular computer vision algorithms.

Fig. 2 Features extracted from an image of airplanes by using SIFT. The circles represent the various features detected by the SIFT descriptor.

2.2 k-means Clustering

After extracting features from both testing and training images, we converted the vector-represented patches into codewords. To do this we performed k-means clustering over all the vectors. k-means clustering is a method to cluster or divide n observations or, in our case, features into k clusters in which each feature belongs to the cluster of its nearest mean [10]. We cluster our features and prepare the data for histogram generation. Because the number of clusters k is an input, an inappropriate choice of k may yield poor results. Therefore, to prevent this problem, we tested classification with 5 different k values, that is, numbers of codewords: 50, 100, 250, 500, and 1000. The codewords are defined as the centers of the clusters. The number of codewords is the size of the codebook.

2.3 Histogram Generation

Each patch in an image is mapped to a certain codeword through the k-means clustering process, and thus each image can be represented by a histogram of the codewords. This is the final step before the actual classification: generating a histogram of the features extracted in each image [11]. The features are stacked according to the cluster to which they were assigned by k-means clustering.
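The clustering step of Section 2.2 can be illustrated with a minimal sketch of Lloyd's algorithm, the standard iterative procedure for k-means. It is not meant to reproduce the MATLAB code used for the experiments: the function names (`kmeans`, `nearest_center`), the fixed iteration count, the random initialization, and the toy 2-dimensional descriptors (standing in for 128-dimensional SIFT vectors) are all assumptions made for illustration.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def nearest_center(point, centers):
    """Index of the center (codeword) closest to the given point."""
    return min(range(len(centers)), key=lambda j: squared_distance(point, centers[j]))


def kmeans(points, k, iterations=20, seed=0):
    """Return k cluster centers found by Lloyd's algorithm (illustrative only)."""
    rng = random.Random(seed)
    # Assumption: initialize the centers with k distinct, randomly chosen points.
    centers = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        # Assignment step: each feature joins the cluster of its nearest mean.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest_center(p, centers)].append(p)
        # Update step: move each center to the mean of its members.
        for j, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                dim = len(members[0])
                centers[j] = [sum(m[d] for m in members) / len(members) for d in range(dim)]
    return centers


# Toy 2-D "descriptors" forming two well-separated groups.
descriptors = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
codebook = sorted(kmeans(descriptors, k=2))
print(codebook)
```

In this sketch the returned centers play the role of the codewords, and `k` corresponds to the codebook size (50 to 1000 in our experiments).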

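The histogram generation of Section 2.3 amounts to assigning every descriptor of an image to its nearest codeword and counting the assignments. The following sketch shows this under stated assumptions: the helper name `bow_histogram`, the three hand-picked 2-dimensional codewords, and the four toy descriptors are hypothetical, and raw counts are used because the text does not say whether the histograms were normalized.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def bow_histogram(descriptors, codebook):
    """Count how many descriptors fall nearest to each codeword."""
    counts = [0] * len(codebook)
    for d in descriptors:
        nearest = min(range(len(codebook)), key=lambda j: squared_distance(d, codebook[j]))
        counts[nearest] += 1
    return counts


# Hypothetical codebook with three codewords and one image with four patches.
codebook = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
image_descriptors = [(0.1, 0.2), (4.8, 5.1), (5.3, 4.9), (9.7, 0.4)]
histogram = bow_histogram(image_descriptors, codebook)
print(histogram)  # [1, 2, 1]
```

The resulting list has one bin per codeword, so every image is described by a vector of the same fixed length regardless of how many patches it contains.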


Fig. 3 Histogram generated from the image of airplanes in Fig. 2.

3 Methods/Results

Once the BoW model represents the images as certain features, the next step towards classification is learning. Learning is what is referred to as the “training process”, where the classifier learns different features for different categories and forms a codeword dictionary. It is essential to have a solid training process, which is why the train ratio is greater than the test ratio. The final step is classification or recognition, during which the classifier classifies an image based on the similarities between the features extracted and the codeword dictionary produced through the training process of the algorithm.

Section 3.1 deals with the two classification methods used in this research: KNN and SVM. Section 3.2 shows the classification results of the two classifiers.

3.1 Classification Methods

The problem of object classification can be specified as the problem of identifying the category or class that new observations belong to, based on a training dataset containing observations whose category or class is known.

The purpose of the BoW model was image representation. As shown in Sections 2.1 and 2.2, the features detected by SIFT descriptors were put into a codebook by k-means clustering. Classification can now be performed by comparing histograms representing the codewords.

Generally, classification works by first plotting training data into multidimensional space. Then each classifier plots testing data into the same multidimensional space as the training data and compares the data points between testing and training to determine the correct class for each individual query point. In Section 3.1.1, we discuss KNN classification, while in Section 3.1.2, we discuss SVM classification.

3.1.1 K-Nearest-Neighbor Classification

The k-nearest neighbor algorithm [12,13] is a method for classifying objects based on the closest training examples in the feature space. It is among the simplest of all machine learning algorithms. The training process for this algorithm consists only of storing the feature vectors and labels of the training images. In the classification process, the unlabelled query point is simply assigned the label of its k nearest neighbors.

Typically the object is classified based on the labels of its k nearest neighbors by majority vote. If k=1, the object is simply classified as the class of the object nearest to it. When there are only two classes, k should be an odd integer so that the vote cannot tie. However, ties can still occur with an odd k when performing multiclass classification. After converting each image to a fixed-length vector of real numbers, we used the most common distance function for KNN, which is Euclidean distance:

$$D(x, y) = \lVert x - y \rVert = \sqrt{(x - y)\cdot(x - y)} = \Big(\sum_{i=1}^{m} (x_i - y_i)^2\Big)^{1/2} \qquad (1)$$

where x and y are histograms in $X = \mathbb{R}^m$, with m the number of codewords. Fig. 4 visualizes the process of KNN classification.

Fig. 4 KNN Classification. Depending on the k value of 1, 5, or 10, the query point (the circle) can be classified as a rectangle at (a), a diamond at (b), and a triangle at (c).
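The KNN procedure of Section 3.1.1, with the Euclidean distance of Eq. (1) and a majority vote, can be sketched as follows. This is an illustrative sketch only: the function names, the toy 3-bin histograms, and in particular the tie-breaking rule (prefer the tied label whose member is closest to the query) are assumptions, since the text does not state how ties were resolved.

```python
import math
from collections import Counter


def euclidean(x, y):
    """Euclidean distance between two equal-length histograms, as in Eq. (1)."""
    return math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)))


def knn_predict(train_histograms, train_labels, query, k=5):
    """Label the query by majority vote among its k nearest training histograms."""
    if not train_histograms:
        raise ValueError("training set is empty")
    # "Training" is just storage; all the work happens at query time.
    ranked = sorted(range(len(train_histograms)),
                    key=lambda i: euclidean(train_histograms[i], query))
    neighbors = [train_labels[i] for i in ranked[:k]]
    votes = Counter(neighbors)
    top = max(votes.values())
    # Assumed tie-break: among the most-voted labels, return the one that
    # appears first in the distance-ordered neighbor list.
    for label in neighbors:
        if votes[label] == top:
            return label


# Toy training set of 3-bin codeword histograms (hypothetical values).
train = [[5, 1, 0], [4, 2, 0], [0, 1, 5], [1, 0, 4], [0, 5, 1]]
labels = ["airplanes", "airplanes", "cars", "cars", "faces"]

print(knn_predict(train, labels, [4, 1, 1], k=3))  # airplanes
print(knn_predict(train, labels, [0, 1, 4], k=1))  # cars
```

With k=1 the function reduces to nearest-neighbor lookup, which matches the special case described above.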


A main advantage of the KNN algorithm is that it performs well with multi-modal² classes because its decision is based on a small neighborhood of similar objects. Therefore, even if the target class is multi-modal, the algorithm can still lead to good accuracy. However, a major disadvantage of the KNN algorithm is that it uses all the features equally in computing similarities. This can lead to classification errors, especially when only a small subset of the features is useful for classification.

² Multi-modal: consisting of objects whose independent variables have different characteristics for different subsets.

3.1.2 Support Vector Machine Classification

SVM classification [14] uses planes in space to divide data points. An SVM model is a representation of the examples as points in space, mapped so that the examples of the separate categories or classes are divided by a plane that maximizes the margin between the different classes. The reason is that if the separating plane has the largest distance to the nearest training data points of any class, it lowers the generalization error of the overall classifier. The test points or query points are then mapped into that same space and predicted to belong to a category based on which side of the gap they fall on, as shown in Fig. 5.

Since MATLAB's SVM classifier does not support multiclass classification and only supports binary classification, we used the lib-svm toolbox, which is a popular toolbox for SVM classification. Since the cost value, which is the balancing parameter between training errors and margins, can affect the overall performance of the classifier, we tested different cost parameter values in the range 0.01~10000 to see which gives the best performance on the validation set.

As mentioned before, the goal of SVM classification is to produce a model, based on the training data, which will be able to predict class labels of the test data accurately. Given a training set of instance-label pairs $(x_i, y_i)$, $i = 1, \ldots, l$, where each $x_i \in \mathbb{R}^n$ is a histogram from a training image and $y_i \in \{1, -1\}$ is its class label [15,16], the support vector machine can be found as the solution of the following optimization problem:

$$\min_{w, b, \xi} \; \frac{1}{2} w^T w + C \sum_{i=1}^{l} \xi_i \qquad (2)$$

$$\text{subject to} \quad y_i \left( w^T \phi(x_i) + b \right) \ge 1 - \xi_i, \quad \xi_i \ge 0.$$

The function $\phi$ maps training vectors $x_i$ into a higher dimensional space, while $C > 0$ is the penalty parameter for the error term [17]. We used two different basic kernels: linear and radial basis function (RBF). The linear kernel function and the radial basis function can be represented as

$$K(x_i, x_j) = x_i^T x_j \qquad (3)$$

and

$$K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right), \quad \gamma > 0 \qquad (4)$$

respectively, where $\gamma$ is a kernel parameter. Fig. 5 shows a visualization of the process of SVM classification.

Fig. 5 SVM Classification. In multidimensional space, support vector machines find the hyperplane that maximizes the margin between two different classes. Here the support vectors are the circled dots.

A main advantage of SVM classification is that SVM performs well on datasets that have many attributes, even when only a few cases are available for the training process. However, disadvantages of SVM classification include limitations in speed and size during both the training and testing phases of the algorithm, and the need to select the kernel function parameters.

3.2 Results

We performed classification with 5-fold validation, each fold with approximately 2800 training images and approximately 700 testing images. Each experiment had different images in training and testing compared to the other experiments, so as to prevent the overlapping of testing and training images in each experiment.

In order to compare the performance levels of KNN classification and SVM classification, we implemented the framework for the BoW model as discussed in Section 2.
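The fold sizes quoted above follow from simple arithmetic on the dataset size. The sketch below assumes the 3505 images of our dataset and a plain contiguous split into five folds; how the images were actually shuffled or stratified across folds is not specified here, and the helper name `k_fold_splits` is hypothetical.

```python
def k_fold_splits(n_items, n_folds=5):
    """Return a list of (train_indices, test_indices) pairs, one per fold."""
    indices = list(range(n_items))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_items // n_folds + (1 if f < n_items % n_folds else 0)
                  for f in range(n_folds)]
    splits = []
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        splits.append((train, test))
        start += size
    return splits


splits = k_fold_splits(3505, n_folds=5)
for train, test in splits:
    print(len(train), len(test))  # 2804 701 for every fold
```

Each image appears in exactly one test fold, so no experiment tests on an image it was trained on. In practice the indices would normally be shuffled before splitting; that step is omitted here to keep the arithmetic visible.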

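Returning to the SVM formulation of Section 3.1.2, the two kernels of Eqs. (3) and (4) and the objective of Eq. (2) can be written out directly. This is a hedged sketch and not the lib-svm implementation: the function names are hypothetical, the objective is evaluated only for the linear case (φ(x) = x), and each slack variable is set to its smallest feasible value, max(0, 1 − y_i(wᵀx_i + b)), for a given w and b rather than being optimized.

```python
import math


def linear_kernel(xi, xj):
    """Eq. (3): inner product of two vectors."""
    return sum(a * b for a, b in zip(xi, xj))


def rbf_kernel(xi, xj, gamma):
    """Eq. (4): exp(-gamma * ||xi - xj||^2) with gamma > 0."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    squared_norm = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return math.exp(-gamma * squared_norm)


def soft_margin_objective(w, b, xs, ys, cost):
    """Value of Eq. (2) for a given linear separator (w, b).

    Assumption: phi is the identity map and each slack is the smallest
    value satisfying the constraints for this (w, b).
    """
    slacks = [max(0.0, 1.0 - y * (linear_kernel(w, x) + b)) for x, y in zip(xs, ys)]
    return 0.5 * linear_kernel(w, w) + cost * sum(slacks)


print(linear_kernel([1, 2], [3, 4]))           # 11
print(rbf_kernel([0, 0], [1, 1], gamma=0.5))   # exp(-1), about 0.3679

# Two points outside the margin and one inside it (toy values).
xs = [[2.0, 0.0], [-2.0, 0.0], [0.5, 0.0]]
ys = [1, -1, 1]
print(soft_margin_objective([1.0, 0.0], 0.0, xs, ys, cost=10.0))  # 5.5
```

The last call shows the role of the cost parameter: only the third point violates the margin (slack 0.5), and a larger cost makes that violation weigh more heavily against the margin term.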


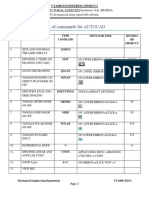

Fig. 6 Sample test images of the 4 classes. Starting on the upper right and in clockwise order: Cars, Motorbikes, Faces, Airplanes.

We mainly used MATLAB as our programming language, occasionally switching to the Windows command prompt in order to run multiclass classification with SVM. Our dataset, which contains 3505 images from 4 different classes (airplanes, cars, faces, motorbikes), was divided into testing images and training images by a 1:5 ratio in order to increase diversity within each experiment. The results are mainly shown in the form of confusion tables (or confusion matrices), which are useful for comparing classifier performance among the different classes. We discuss KNN classification results in Section 3.2.1 and SVM classification results in Section 3.2.2.

3.2.1 KNN Classification Results

The best number of codewords for KNN classification was 50. This was mainly due to the fact that our algorithm could not classify cars as well as the other classes. In general, once we moved to numbers of codewords other than 50, the accuracy of the overall classifier dropped dramatically due to the algorithm's inability to classify cars. For the other codebook sizes, the classification accuracy for the other classes rose, but not enough to compensate for the loss of accuracy for cars. We used Euclidean distance as the distance metric, and since the value of k can influence the accuracy of the overall classification, we tested 5 different k values: 1, 5, 10, 15, and 20. Overall the best value for k was 5. Table 1 shows the average performance of the classifier for each individual class, with the actual class represented on the left and the predicted class represented above. The diagonal boxes show the percentage of accurately classified images for each class, while the other boxes show the percentage of erroneously classified images. The average accuracy for the overall classifier with the best k value was 78.03%.

Table 1. The average performance of the KNN classifier

| | Airplanes | Cars | Faces | Motorbikes |
|---|---|---|---|---|
| Airplanes | 87.24% | 0.09% | 8.47% | 4.19% |
| Cars | 5.28% | 61.13% | 7.79% | 25.80% |
| Faces | 5.57% | 0% | 87.74% | 6.69% |
| Motorbikes | 5.56% | 0% | 10.05% | 84.39% |

3.2.2 SVM Classification Results

The number of codewords that produced the best classification results for SVM turned out to be 500. The overall best cost value for SVM was 10000. The overall accuracy rate for the SVM classifier was 92%. We found that, unlike KNN classification, SVM classification was much more adept at classifying cars, which is the main reason why the accuracy rate for SVM classification was much better than that of KNN classification. Table 2 shows the average performance of the SVM classifier for each individual class, with the actual class represented on the left and the predicted class represented above. As in Table 1, the diagonal boxes show the percentage of accurately classified images for each class, while the other boxes show the percentage of erroneously classified images. The average accuracy for the overall classifier with the best cost value of 10000 was 91.9%.

Table 2. The average performance of the SVM classifier

| | Airplanes | Cars | Faces | Motorbikes |
|---|---|---|---|---|
| Airplanes | 93.39% | 0.65% | 3.45% | 2.51% |
| Cars | 0.78% | 96.79% | 0.35% | 2.08% |
| Faces | 4.46% | 1.34% | 86.85% | 7.36% |
| Motorbikes | 3.03% | 4.36% | 2.06% | 90.56% |

4 Conclusion

In this work, we implemented two different frameworks for image classification. With a proper learning process, we could observe that SVM classification outperformed KNN classification. Although the performance of KNN was very low compared to SVM, this was due to the fact that the KNN classifier could not distinguish cars, whereas SVM was very effective in classifying cars. If we take the class of cars away from the overall experiment, we can see that SVM only outperforms KNN by 4%. If we add the cars back into the overall experiment, however, we can see that SVM outperforms KNN by 12%. To improve upon this work, we can extract individual features from the cars in our Caltech-4-Cropped dataset and investigate why SVM was so effective in classifying cars compared to KNN.

The performance of various classification methods still depends greatly on the general characteristics of the data to be classified. The exact relationship between the data to be classified and the performance of various classification methods still remains to be discovered. Thus far, there has been no classification method that works best on any given problem. The classification methods in use today all have various problems. To determine the best classification method for a certain dataset, we still use trial and error to find the best performance. For future work, we can use more varied categories that would be difficult for the computer to classify and compare more sophisticated classifiers. Another area for research would be to find certain characteristics in various image categories that make one classification method better than another.

Acknowledgements

We would like to thank Mr. Andrew Moore, Research Instructor of Okemos High School Research Seminar Program, for creating this opportunity for research.

References:

[1] S. Thorpe, D. Fize, and C. Marlot, Speed of processing in the human visual system, Nature, 381, 1996, pp. 520–522.

[2] A. Treisman and G. Gelade, A feature-integration theory of attention, Cognitive Psychology, 12, 1980, pp. 97–136.

[3] R. Szeliski, Computer Vision: Algorithms and Applications, Springer, 2011.

[4] D.A. Forsyth and J. Ponce, Computer Vision, A Modern Approach, Prentice Hall, 2003.

[5] L. Fei-Fei, R. Fergus and P. Perona, Learning generative visual models from few training examples: an incremental Bayesian approach tested on 101 object categories, IEEE Computer Vision Pattern Recognition 2004, Workshop on Generative-Model Based Vision, 2004.

[6] Lewis, David, Naive (Bayes) at Forty: The Independence Assumption in Information Retrieval, Proceedings of ECML-98, 10th European Conference on Machine Learning, Springer Verlag, 1998, pp. 4–15.

[7] S. Savarese, J. Winn, and A. Criminisi, Discriminative Object Class Models of Appearance and Shape by Correlatons, Proc. of IEEE Computer Vision and Pattern Recognition, 2006.

[8] Lowe, David G., Object recognition from local scale-invariant features, Proceedings of the International Conference on Computer Vision, Vol. 2, 1999, pp. 1150–1157.

[9] A. Vedaldi and B. Fulkerson, VLFeat: An Open and Portable Library of Computer Vision Algorithms, http://www.vlfeat.org/, 2008.

[10] Chris Ding and Xiaofeng He, K-means Clustering via Principal Component Analysis, Proceedings of the International Conference on Machine Learning, July 2004, pp. 225–232.

[11] Rafael C. Gonzalez and Richard E. Woods, Digital Image Processing, Addison Wesley, 1992.

[12] Bremner D, Demaine E, Erickson J, Iacono J, Langerman S, Morin P, Toussaint G, Output-sensitive algorithms for computing nearest-neighbor decision boundaries, Discrete and Computational Geometry, 2005, pp. 593–604.

[13] Cover, T., Hart, P., Nearest-neighbor pattern classification, Information Theory, IEEE Transactions on, Jan. 1967, pp. 21–27.

[14] Christopher J.C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Data Mining and Knowledge Discovery, 1998, pp. 121–167.

[15] B. E. Boser, I. Guyon, and V. Vapnik, A training algorithm for optimal margin classifiers, Proceedings of the Fifth Annual Workshop on Computational Learning Theory, ACM Press, 1992, pp. 144–152.

[16] C. Cortes and V. Vapnik, Support-vector network, Machine Learning, 20, 1995, pp. 273–297.

[17] Chih-Wei Hsu, Chih-Chung Chang, and Chih-Jen Lin, A Practical Guide to Support Vector Classification (Technical report), Department of Computer Science and Information Engineering, National Taiwan University.
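As a supplementary illustration of the confusion tables used in Section 3.2, the sketch below builds a row-normalized confusion table (actual class on the left, predicted class above) from lists of labels and averages its diagonal. The function names and the twelve toy labels are hypothetical and are not our experimental data. The unweighted mean of the diagonal is only one possible definition of average accuracy, used here as an assumption.

```python
def confusion_percentages(actual, predicted, classes):
    """Row-normalized confusion table: entry [a][p] is the percentage of
    images of actual class a that were predicted as class p."""
    index = {name: i for i, name in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        counts[index[a]][index[p]] += 1
    table = []
    for row in counts:
        total = sum(row)
        table.append([100.0 * c / total if total else 0.0 for c in row])
    return table


def mean_diagonal(table):
    """Unweighted average of the per-class accuracies on the diagonal."""
    return sum(table[i][i] for i in range(len(table))) / len(table)


classes = ["airplanes", "cars", "faces", "motorbikes"]
# Toy labels only (not results from the experiments).
actual = ["airplanes"] * 4 + ["cars"] * 4 + ["faces"] * 2 + ["motorbikes"] * 2
predicted = ["airplanes", "airplanes", "airplanes", "faces",
             "cars", "cars", "motorbikes", "motorbikes",
             "faces", "faces",
             "motorbikes", "airplanes"]

table = confusion_percentages(actual, predicted, classes)
for name, row in zip(classes, table):
    print(name, row)
print(mean_diagonal(table))  # 68.75
```

Each row sums to 100%, which is the same property that the rows of Tables 1 and 2 satisfy up to rounding.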
